Why Over Half of UK Companies Now Regret Replacing Humans with AI

The rapid proliferation of artificial intelligence (AI) in the business world has reshaped the operational landscape for organizations across the United Kingdom. Once heralded as the pinnacle of technological advancement and a key driver of efficiency, AI was swiftly adopted by UK enterprises in a bid to reduce costs, streamline processes, and remain competitive in an increasingly digital marketplace. From automating customer service roles with chatbots to implementing intelligent data analysis tools, the UK business sector embarked on an aggressive journey of AI integration, often replacing human workers in the process.

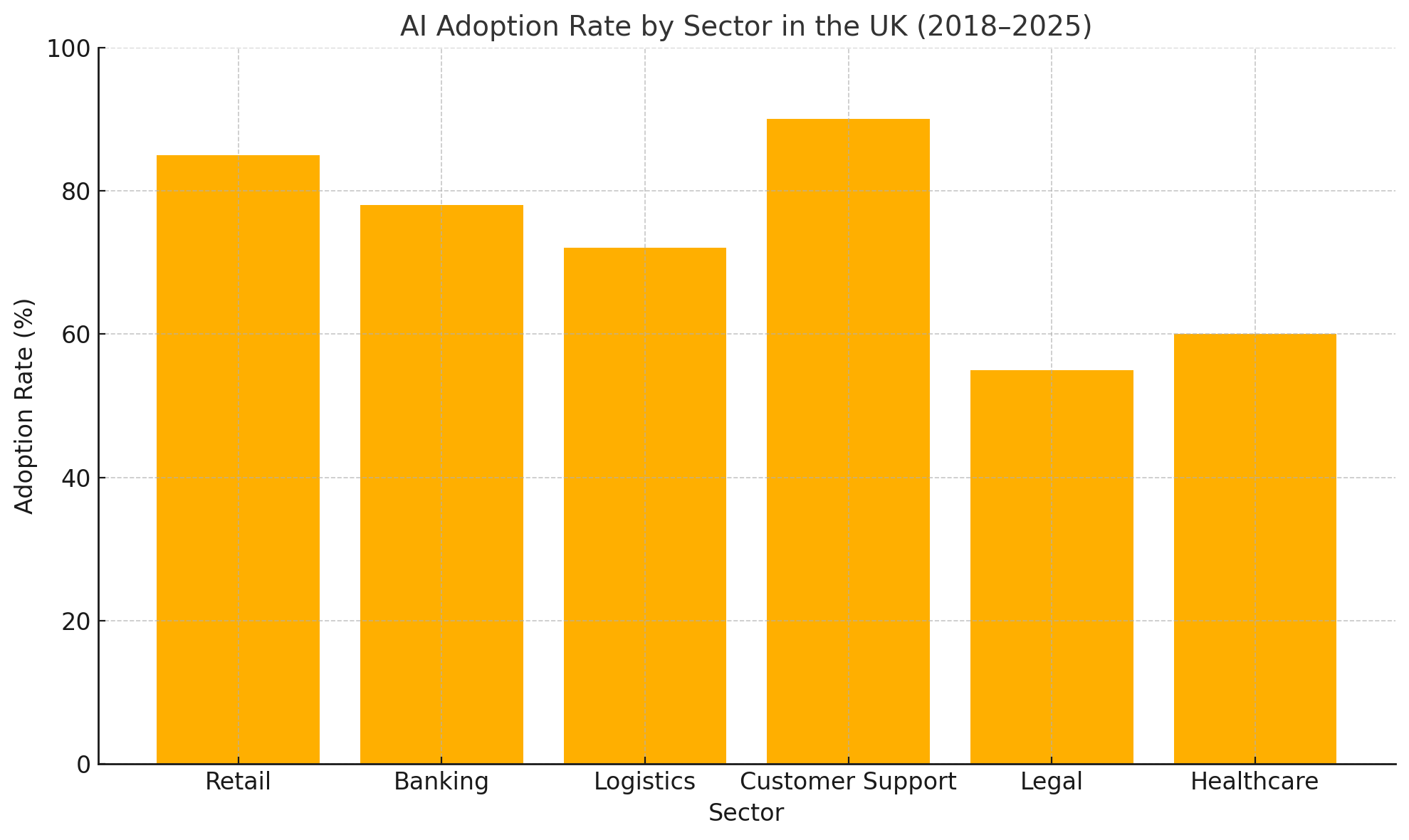

Initially, the enthusiasm surrounding AI was buoyed by compelling projections. Industry reports anticipated significant cost reductions, increased output, and faster decision-making cycles. AI was marketed not merely as a tool, but as a transformational force capable of surpassing human capabilities in precision and consistency. In a landscape defined by tight profit margins and high consumer expectations, many UK businesses saw AI as an indispensable asset. Consequently, the years between 2018 and 2023 witnessed a surge in AI deployments across various sectors, including retail, logistics, banking, and professional services.

However, as the dust settles and early adopters reflect on their experiences, a more complex picture has emerged—one that tempers the earlier optimism with a sober acknowledgment of AI’s limitations and unintended consequences. A recent nationwide survey conducted in early 2025 revealed that over 50% of UK businesses now regret replacing human workers with AI-driven systems. This shift in sentiment has sparked a wave of introspection across the corporate landscape. Business leaders, once firm proponents of full-scale automation, are beginning to reconsider the roles of humans and machines within their organizations.

The sources of this regret are multifaceted. Many companies underestimated the operational and cultural costs associated with large-scale automation. For instance, while AI systems offered consistency, they often lacked the adaptability, empathy, and contextual awareness that human employees brought to their roles. In customer-facing industries, businesses experienced a noticeable decline in service quality and client satisfaction. Furthermore, the anticipated cost savings were frequently offset by the high expenses of AI maintenance, integration failures, and the need for ongoing technical oversight.

In addition to operational inefficiencies, the human impact of AI-driven displacement has been profound. The erosion of workforce morale, public criticism over layoffs, and ethical concerns about over-automation have cast a shadow over AI’s promise. In sectors such as healthcare administration, education, and finance—where human judgment, trust, and discretion are paramount—the removal of experienced personnel in favor of algorithmic solutions has, in some cases, led to reputational damage and even regulatory scrutiny.

Against this backdrop, a growing number of UK businesses are reassessing their digital transformation strategies. Rather than continuing on a trajectory of pure automation, many are pivoting toward hybrid workforce models that seek to harness the strengths of both AI systems and human employees. This recalibration is not merely reactive but strategic—aimed at fostering long-term sustainability, enhancing organizational agility, and restoring trust among customers and workers alike.

This blog post examines the evolving narrative of AI in UK businesses, from initial adoption to the emerging wave of regret. Through a critical analysis of sectoral trends, empirical data, and real-world case studies, we aim to uncover why so many firms now question their decision to replace humans with machines. We will also explore the comparative strengths of AI and human labor, highlight efforts to reintegrate human expertise, and offer insights into how businesses can build resilient, adaptive, and ethically sound workforce strategies for the future.

As organizations globally look to the UK’s experience as a cautionary tale or a learning opportunity, one thing becomes increasingly clear: the future of work lies not in choosing between humans and AI, but in mastering the art of thoughtful integration.

The AI Adoption Boom in the UK

The trajectory of artificial intelligence (AI) adoption in the United Kingdom has been nothing short of transformative. Between 2018 and 2025, UK businesses—spanning traditional industries and emergent digital sectors—have aggressively implemented AI technologies across a broad range of functions. This surge was driven by a combination of technological optimism, mounting competitive pressures, and a belief that automation was the inevitable path toward sustainable growth.

The roots of this AI boom can be traced back to the convergence of three critical factors: advancements in machine learning and natural language processing, a supportive policy environment promoting digital innovation, and a labor market increasingly strained by talent shortages and rising costs. Early success stories in Silicon Valley and among global tech giants emboldened UK firms to emulate similar models, albeit at varying scales. As a result, AI rapidly shifted from a niche research interest to a boardroom priority.

Government Incentives and Policy Signals

The UK government played a significant role in encouraging AI adoption. The 2017 Industrial Strategy White Paper identified AI and data as one of the four “Grand Challenges” intended to drive productivity and economic transformation. This led to the creation of the Office for Artificial Intelligence and initiatives such as the AI Sector Deal, which channeled funding into AI research, industry partnerships, and workforce upskilling.

Public-private collaborations emerged swiftly. Universities partnered with firms to develop AI tools tailored to specific sectors. Government-backed accelerators nurtured AI startups, and generous R&D tax credits incentivized firms to invest in intelligent automation solutions. The message was clear: AI was not only the future of business—it was an area where the UK intended to lead.

Sectoral Breakdown of AI Integration

The early adopters of AI technologies were predominantly in sectors where cost efficiency and data processing were paramount. Retail, for instance, used AI for inventory optimization, personalized marketing, and customer service automation. Major retailers implemented chatbots and recommendation engines to mimic in-store service and track customer preferences across channels. AI-enabled forecasting helped reduce waste, a significant issue in the grocery sector.

Banking and financial services also embraced AI with considerable enthusiasm. Algorithmic trading, fraud detection, and robo-advisors became central to many firms’ digital strategies. UK-based institutions such as Barclays and HSBC led in deploying AI to enhance compliance, analyze credit risk, and streamline customer onboarding through intelligent document processing.

In logistics and supply chain management, AI was used to predict delivery timelines, manage fleet operations, and optimize warehouse layouts. Companies such as Ocado leveraged AI-powered robotics and computer vision systems to fulfill growing e-commerce demands with minimal human intervention.

Call centers and customer support operations, traditionally labor-intensive, experienced a particularly aggressive wave of AI adoption. Natural language processing (NLP) technologies were integrated into voice response systems, enabling round-the-clock service with reduced human involvement. AI-assisted sentiment analysis tools helped managers monitor conversations and escalate complex issues to human agents.

Smaller-scale adoption also took place in legal services (contract analysis and e-discovery), manufacturing (predictive maintenance), and real estate (property valuation algorithms), though these implementations were often less visible to the public.

AI Replacing Human Workers: Motivations and Justifications

The decision to replace human workers with AI systems was framed primarily through the lens of economic rationality. Many firms cited cost efficiency as the main motivation: AI systems do not demand salaries, benefits, or time off. Once deployed, they promised to operate continuously, improving throughput while reducing human error.

Executives also highlighted the benefits of scalability and standardization. Whereas human workers might vary in performance and availability, AI systems could uniformly execute processes across branches or departments. This consistency was especially attractive in customer service, where maintaining uniform service quality was both challenging and critical.

Another key driver was data exploitation. As UK firms began to accumulate more digital data, AI offered a mechanism to convert this data into actionable insights. Machine learning models could detect patterns that escaped human analysts, enabling faster decision-making in marketing, operations, and product development.

Finally, many companies justified their automation strategies by referencing the global competitiveness argument. As AI became mainstream in U.S. and Chinese enterprises, UK firms feared being left behind in a technology race that promised to redefine productivity and innovation benchmarks. In a post-Brexit economy looking to maintain global relevance, automation was seen as a vital strategic investment.

Hype and High Expectations

This widespread AI adoption was accompanied by an almost evangelical optimism. Influential business consultancies, technology vendors, and futurists painted AI as a panacea for nearly all operational inefficiencies. In some instances, projections suggested that AI could increase UK GDP by as much as 10% by 2030. These forecasts, often based on macroeconomic models and ambitious scenario planning, fueled a race to automate across both public and private sectors.

Workforce transformation narratives also abounded. AI, it was argued, would liberate employees from repetitive tasks and enable them to focus on more creative, strategic work. Upskilling and reskilling were often touted as realistic counterbalances to job displacement. In reality, however, many organizations failed to establish concrete pathways for displaced workers, leading to a mismatch between narrative and implementation.

Challenges Concealed by Momentum

While the initial wave of AI implementation generated excitement and media attention, it also masked early warning signs. Integration hurdles, high development and deployment costs, data quality issues, and a lack of in-house AI expertise often slowed or undermined intended outcomes. Moreover, many of the deployed systems lacked the maturity required for mission-critical decision-making and proved fragile in the face of edge cases or shifting conditions.

Cultural resistance within organizations also emerged. Employees viewed AI with suspicion—often as a harbinger of job loss rather than a partner in innovation. Meanwhile, middle managers tasked with supervising AI initiatives found themselves navigating new responsibilities without adequate support or guidance.

Despite these obstacles, the momentum behind AI adoption continued largely unchecked through 2023. It was only in the subsequent years—2024 and early 2025—that the cumulative effects of these shortcomings began to surface in public discourse and internal reviews. For the first time, business leaders began to question not only the costs and benefits of AI systems but the wisdom of having replaced human workers so rapidly and comprehensively.

In summary, the UK’s AI adoption boom was driven by a mix of economic imperatives, policy encouragement, and optimistic projections. However, the accelerated pace of deployment, often without sufficient foresight into operational and human consequences, laid the groundwork for the widespread regret many firms are now experiencing. The next section examines the specific causes of this regret and the lessons they hold for the future of work and technology integration.

The Regret Realized—Why Businesses Are Reconsidering

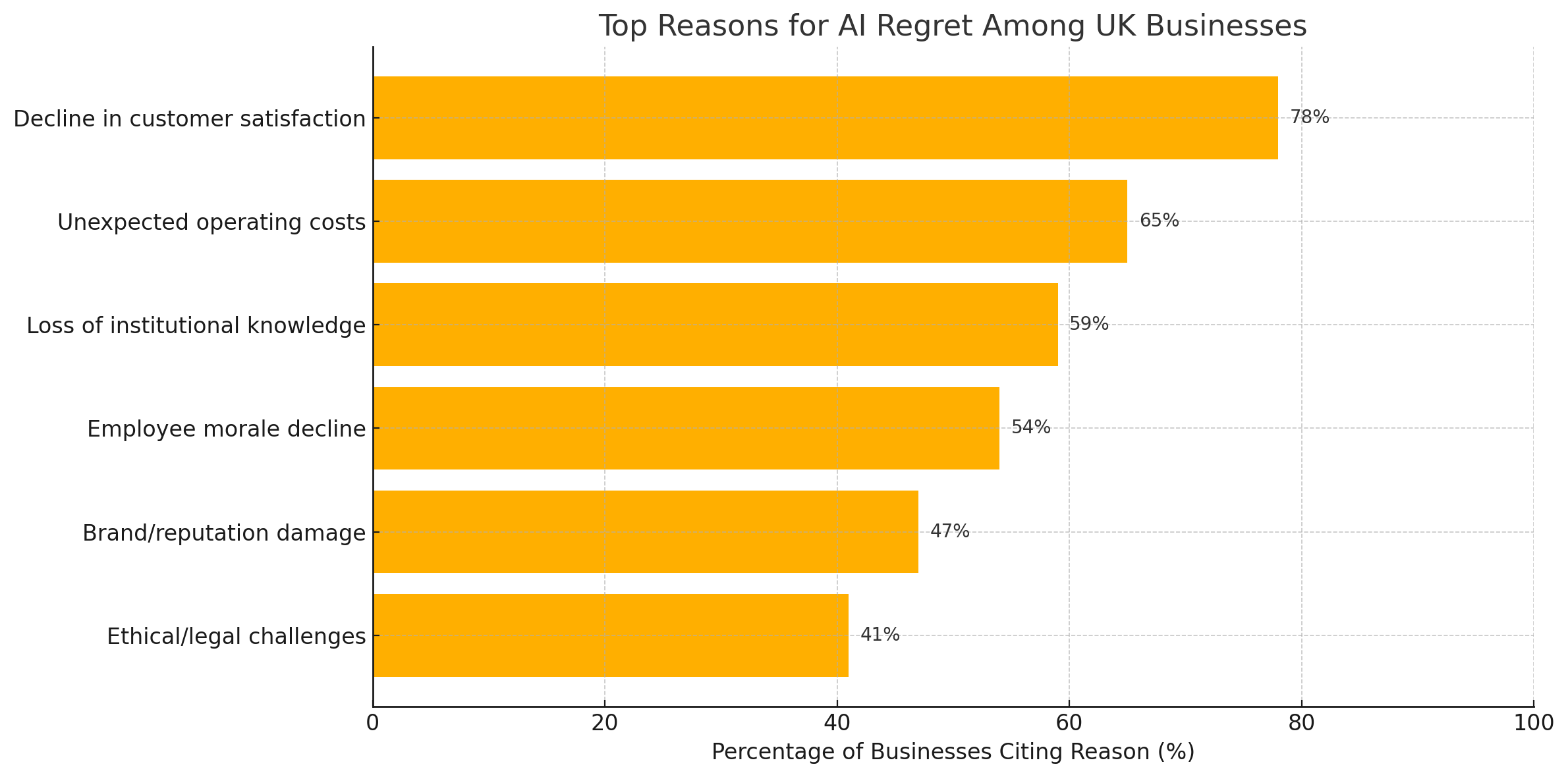

Despite the enthusiastic adoption of artificial intelligence (AI) systems by UK businesses over the past several years, a significant and growing number of these firms have begun to reassess the outcomes of their automation strategies. A 2025 industry survey revealed a striking statistic: over 50% of UK companies now express regret over decisions to replace human workers with AI. This widespread sentiment has prompted a wave of introspection and strategic reevaluation across sectors. The initial optimism surrounding AI’s potential is now tempered by a more nuanced understanding of its limitations, unintended consequences, and the human dimensions it often fails to capture.

Customer Experience and Satisfaction Decline

One of the most prominent and measurable outcomes of excessive reliance on AI has been the degradation of customer experience. While AI systems offer impressive capabilities in handling high volumes of interactions and providing standardized responses, they often fall short in delivering the nuanced, empathetic service that customers expect—especially in emotionally sensitive or complex scenarios.

For instance, in the banking and insurance sectors, AI-powered chatbots and virtual assistants were deployed to manage everything from account queries to claims processing. However, many users reported frustration with rigid scripts, difficulty escalating issues to human agents, and a sense of depersonalization. According to a 2024 study conducted by the Institute of Customer Service, companies that leaned heavily on AI for front-line support experienced a 14% decline in customer satisfaction scores compared to those that maintained a human-AI hybrid model.

This dissatisfaction translated into tangible financial losses as well as reputational risk. Companies reported higher churn rates, increased complaint volumes, and lower Net Promoter Scores (NPS), undermining the very efficiency gains AI was meant to provide. The failure to replicate human emotional intelligence has proven to be a persistent limitation for even the most advanced AI systems.

Unanticipated Operational Costs

Another major source of regret stems from the underestimation of AI's true cost. Contrary to the narrative that AI offers low-cost, high-return automation, many businesses encountered substantial ongoing expenses associated with system maintenance, retraining, and performance optimization.

AI systems are not static; they require continual monitoring, data input, model retraining, and technical oversight to remain effective. Firms without robust in-house expertise faced a steep learning curve and were often forced to rely on expensive external consultants. In several cases, AI deployments were rendered ineffective due to poor data hygiene, model drift, or incompatibility with legacy IT infrastructure.

Moreover, security and compliance risks introduced by AI have compounded operational burdens. For example, AI systems processing personal or financial data must comply with the UK GDPR and the Data Protection Act 2018. Compliance failures caused by algorithmic opacity or data misuse have already resulted in penalties and reputational damage for several firms.

In aggregate, many businesses found that the total cost of ownership for AI systems far exceeded initial projections, nullifying many of the short-term savings realized by workforce reductions.
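To make the arithmetic concrete, here is a minimal sketch of a total-cost-of-ownership comparison. Every figure and field name below is a hypothetical placeholder, not data from the survey or from any firm. The point is only that recurring costs can erase a headline payroll saving.

```python
from dataclasses import dataclass


@dataclass
class AnnualAICosts:
    """Recurring yearly costs of running an AI system (all values hypothetical)."""
    licensing: float
    maintenance: float
    retraining: float
    external_consultants: float
    compliance: float

    def total(self) -> float:
        """Sum of all recurring cost lines for one year."""
        return (self.licensing + self.maintenance + self.retraining
                + self.external_consultants + self.compliance)


def net_annual_saving(payroll_saved: float, costs: AnnualAICosts) -> float:
    """Payroll saved by the workforce reduction minus recurring AI costs."""
    return payroll_saved - costs.total()


# Illustrative numbers only (GBP per year).
costs = AnnualAICosts(
    licensing=120_000,
    maintenance=90_000,
    retraining=60_000,
    external_consultants=150_000,
    compliance=40_000,
)
saving = net_annual_saving(payroll_saved=400_000, costs=costs)
print(f"Net annual saving: GBP {saving:,.0f}")
```

With these assumed inputs the recurring costs exceed the payroll saved, so the net figure is negative. A one-off integration cost or a drop in revenue from customer churn would push it lower still.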

Loss of Institutional Knowledge and Expertise

A less tangible but equally significant consequence of AI-driven replacement strategies is the erosion of institutional knowledge. When experienced employees are displaced, organizations often lose more than just labor—they forfeit years of accumulated wisdom, procedural nuance, and contextual understanding that no machine can instantly replicate.

In industries such as healthcare administration, legal services, and education, AI tools were introduced to automate decision-making or content generation. However, these systems frequently lacked the ability to navigate exceptions, apply ethical considerations, or understand organizational culture. Human judgment, built through experience and refined over time, proved irreplaceable in many situations.

This pattern was particularly evident in public sector institutions, where automation initiatives led to reductions in administrative staff. Subsequent audits revealed gaps in case processing, misinterpretations of policy, and a decline in service delivery quality. In hindsight, human oversight was not merely supplementary but foundational to responsible and effective operations.

Negative Impacts on Workforce Morale and Culture

The psychological and cultural costs of AI adoption have also emerged as major contributors to business regret. Mass layoffs, especially those attributed to AI-driven restructuring, have generated distrust and disengagement among remaining employees. In environments where workers fear technological displacement, motivation and innovation tend to suffer.

Surveys conducted by the Chartered Institute of Personnel and Development (CIPD) in late 2024 indicate that over 60% of employees in firms that adopted AI at scale reported increased anxiety regarding job security. Moreover, a significant portion of middle managers found themselves caught in organizational limbo—tasked with overseeing AI implementations without a clear understanding of their own long-term roles.

This erosion of workplace morale has cascading effects. High turnover, lack of collaboration, and reduced loyalty are just a few of the challenges organizations now face as they attempt to repair fractured internal cultures. Ironically, while AI was supposed to free up human talent for higher-order tasks, the way it was deployed in many cases stifled creativity and discouraged engagement.

Brand and Ethical Repercussions

In an era where corporate ethics and social responsibility are under intense public scrutiny, the replacement of human workers with machines has invited backlash from consumers, investors, and advocacy groups. Firms that adopted automation without clear ethical frameworks or communication strategies found themselves accused of prioritizing profits over people.

Notable cases in the retail and transportation sectors drew media attention for mass layoffs tied to AI implementation, often juxtaposed with rising executive compensation and shareholder returns. These incidents damaged brand equity and contributed to a broader narrative that AI adoption is intrinsically anti-worker, a perception that many businesses are now working to reverse.

Moreover, ethical concerns related to algorithmic bias, transparency, and accountability continue to challenge the legitimacy of AI decision-making. Several public sector trials involving AI in judicial or policing contexts were halted following accusations of racial or socioeconomic bias embedded in training datasets. As a result, public trust in AI systems has become increasingly fragile, and companies that rely on them must work harder to demonstrate integrity and fairness.

Reversal and Retrenchment: A Growing Trend

Faced with these multi-dimensional challenges, a number of UK companies have begun to roll back some of their AI-driven changes. This reversal does not necessarily represent a wholesale abandonment of AI, but rather a strategic retrenchment aimed at restoring balance and reintroducing human oversight where it matters most.

High-profile examples include banks that reintroduced human customer service agents to handle complex inquiries, retailers that scaled back self-checkout systems in favor of personal interaction, and logistics firms that reinstated human dispatchers to complement algorithmic routing tools. These moves are not signs of technological failure per se, but indicators of overreach—where AI was deployed beyond its natural limits or without adequate consideration of contextual and human variables.

In each of these cases, the companies involved cited improved outcomes post-reintegration. Employees reported higher satisfaction, customers appreciated the return of personal service, and executives found that combining human intuition with machine precision yielded better decision quality.

In conclusion, the regret now expressed by over half of UK businesses is not an indictment of AI itself, but of the manner in which it was deployed—often hastily, uncritically, and without sufficient human foresight. The transition from enthusiasm to regret underscores a vital lesson: that technology, no matter how advanced, cannot substitute for human judgment, empathy, and cultural alignment. As we move forward, organizations must approach automation with greater care, ensuring that it complements rather than displaces the human foundation upon which all businesses ultimately rest.

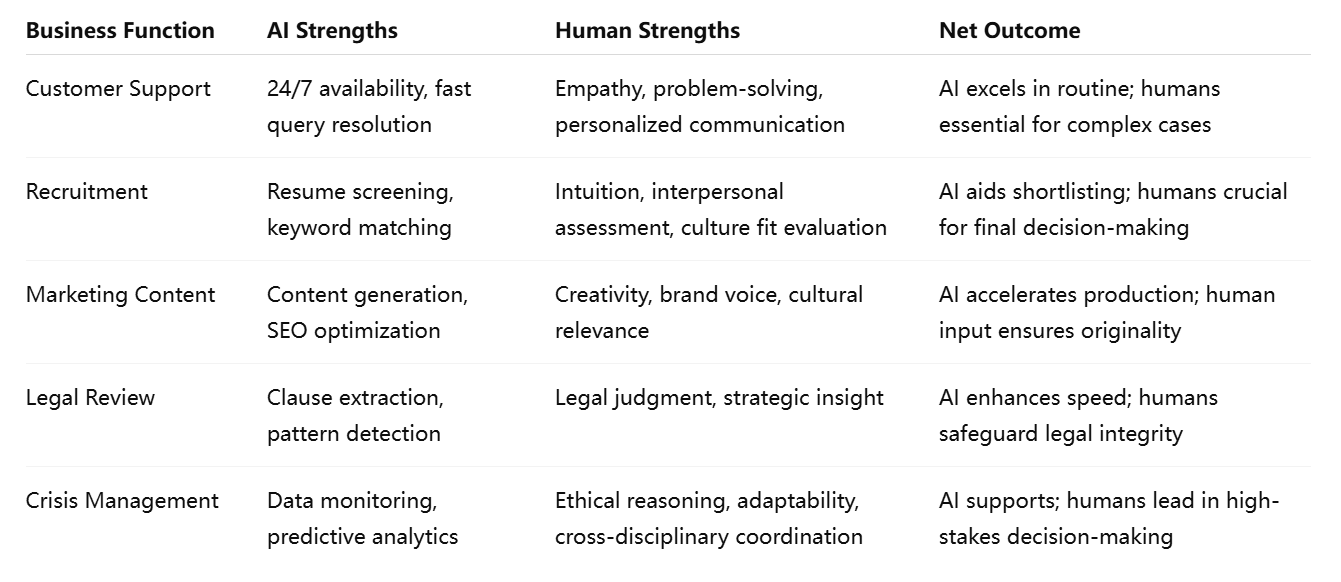

Human Skills vs AI Capabilities—What Was Lost

The replacement of human workers with artificial intelligence (AI) systems across UK businesses has illuminated a critical oversight in the automation movement: the underestimation of irreplaceable human qualities. While AI has proven highly effective in executing routine, data-driven, and repetitive tasks with remarkable efficiency, it still falls short in areas that require emotional intelligence, ethical judgment, and creative reasoning. The consequences of this imbalance are now becoming apparent, as companies grapple with diminished adaptability, impaired organizational memory, and weakened interpersonal dynamics.

This section explores the contrast between human competencies and AI capabilities, highlighting what has been lost in the push toward automation and why certain human attributes remain central to sustainable business operations.

Emotional Intelligence and Empathy

One of the most significant casualties of large-scale AI deployment is the loss of emotional intelligence in customer-facing roles. Human workers possess an innate ability to detect tone, mood, and non-verbal cues in conversations—an ability AI systems, regardless of their sophistication, struggle to replicate. While large language models can simulate empathy through trained responses, they do not "feel" in any true sense, nor can they adapt dynamically to complex emotional situations.

In industries such as healthcare, social services, and financial advising, empathy is not just a desirable trait—it is a functional necessity. Patients, clients, and customers often seek guidance during times of stress or vulnerability, and require reassurance, trust, and understanding. The inability of AI to exhibit genuine compassion has led to a marked decline in customer trust and relationship-building across sectors where these attributes are vital.

Additionally, many businesses have reported increased customer complaints related to perceived coldness or indifference in AI-driven interactions. While AI chatbots can provide instant answers, they lack the situational awareness to understand when a human touch is necessary—an oversight that has undermined customer retention in several service-oriented industries.

Judgment and Contextual Reasoning

Human workers also excel in applying judgment in ambiguous or context-dependent situations. Unlike AI, which relies heavily on training data and statistical patterns, humans can reason through unfamiliar problems by drawing on experience, common sense, and ethical considerations. This ability to navigate gray areas is especially important in sectors where decisions must account for cultural norms, legal interpretations, or evolving circumstances.

For instance, in legal services, AI-powered contract analysis tools can identify clauses and flag anomalies, but they often fail to understand the strategic implications of a contract’s structure or its alignment with broader business objectives. Similarly, in human resources, algorithms can shortlist candidates based on pattern recognition, but they may overlook the intangible qualities—such as leadership potential or team dynamics—that a seasoned recruiter can detect through interpersonal engagement.

The loss of contextual intelligence due to workforce displacement has led to a string of avoidable missteps. Companies have misinterpreted regulatory changes, mishandled sensitive employee issues, and made poor strategic decisions when relying too heavily on machine-generated insights. These incidents reinforce the idea that AI should support—not supplant—human decision-making in contexts that require discernment and nuance.

Creativity and Innovation

Creativity is another domain where humans maintain a distinct advantage. While AI can generate content, mimic styles, and even produce novel outputs based on training data, it lacks the intentionality and subjective insight that characterize human creativity. Innovation, particularly at the strategic or conceptual level, depends on the ability to question assumptions, imagine possibilities, and think abstractly—qualities deeply embedded in human cognition.

In design, marketing, product development, and content creation, organizations that automated creative processes often encountered a decline in originality and brand authenticity. AI-generated marketing materials, though grammatically sound and contextually relevant, frequently lacked the emotional resonance or wit that human copywriters could deliver. The result was content that felt generic, uninspired, or disconnected from the audience.

Moreover, innovation often arises from serendipitous collaboration, a dynamic that thrives in human teams but is absent in AI-only workflows. The cross-pollination of ideas, spontaneous brainstorming, and the iterative refinement of concepts require a level of spontaneity and interpersonal chemistry that cannot be coded. As such, firms that prioritized efficiency over creativity found themselves lagging in product differentiation and long-term strategic vision.

Trust and Relationship Building

Another overlooked loss is the human capacity to build and sustain trust-based relationships. Whether between employer and employee, service provider and customer, or brand and consumer, trust is a cornerstone of effective communication and loyalty. It is cultivated over time through consistent behavior, transparency, and shared values—all of which are difficult for AI to embody.

In B2B contexts, client relationships often hinge on personal rapport, historical understanding, and the ability to negotiate mutually beneficial terms. AI tools may assist in data gathering or communication logistics, but they cannot replace the trust that emerges from human interaction. Clients who once had account managers they could call on for nuanced advice now find themselves routed through impersonal digital interfaces, eroding confidence and satisfaction.

Internally, employee trust in leadership has also suffered in organizations that used AI as a justification for layoffs or role eliminations. Workers who feel disposable in the face of automation are less likely to engage deeply, take initiative, or remain loyal. This cultural deterioration poses long-term risks to organizational cohesion and resilience.

Resilience and Ethical Reflexivity

Humans are uniquely capable of demonstrating resilience and moral reflection under conditions of uncertainty and stress. When faced with unprecedented events—such as a global pandemic, political upheaval, or economic crisis—human teams can adapt creatively, revisit ethical principles, and redefine priorities. AI, by contrast, functions within the parameters of its training and requires human intervention to recalibrate or reinterpret its outputs in light of new developments.

During the COVID-19 pandemic, for example, many AI systems failed to adapt to shifts in consumer behavior, supply chain disruptions, or the emotional needs of stakeholders. It was human leaders, frontline workers, and cross-functional teams who devised innovative workarounds, made discretionary decisions, and sustained operations through empathy and perseverance.

This highlights the importance of moral and situational reflexivity—the ability to pause, reflect, and respond with compassion and ethical intent. As AI continues to evolve, it will undoubtedly become more flexible and adaptive, but it is unlikely to replicate the fundamentally human capacity to weigh competing values and act in ways that honor both efficiency and dignity.

In sum, while AI has indisputably enhanced certain operational capabilities, the wholesale replacement of human workers has resulted in the diminution of essential soft skills and strategic faculties that machines cannot yet emulate. Businesses are now coming to terms with the reality that automation, if deployed without regard for the human elements of work, can lead to diminished resilience, creativity, and long-term value creation. Going forward, the goal must be integration—not substitution—where AI complements human expertise in a balanced, ethically sound, and contextually aware framework.

Toward a Hybrid Model—Reintegrating Human Roles

As UK businesses reflect on the unintended consequences of replacing human workers with artificial intelligence (AI), a growing number are moving beyond regret to proactive recalibration. The path forward, for many, lies not in abandoning AI, but in pursuing a hybrid workforce model that synthesizes the efficiency of automation with the irreplaceable value of human skills. This shift marks a significant evolution in enterprise thinking—one that emphasizes coexistence, complementarity, and long-term resilience over short-term cost-cutting.

This section explores the rationale, architecture, and emerging best practices behind hybrid workforce models, and examines how UK firms are reintegrating human roles in strategic, operational, and cultural domains.

The Case for Hybrid Workforce Models

The limitations of full automation, as explored in prior sections, underscore the need for a more balanced and adaptive approach. Hybrid models aim to allocate tasks based on their inherent suitability: repetitive, data-intensive processes are assigned to AI systems, while functions requiring judgment, empathy, and creativity are retained or reclaimed by human professionals.

This dual approach not only addresses the deficits of pure automation but also enables a division of labor that optimizes strengths on both sides. AI becomes a tool that enhances human capabilities—rather than a substitute that marginalizes them. Crucially, hybrid models also mitigate reputational and ethical risks by demonstrating a commitment to both technological innovation and social responsibility.

From a strategic standpoint, hybrid models offer superior organizational agility. They allow businesses to scale rapidly using AI during peak demand periods while relying on human oversight to navigate exceptions, manage crises, and build customer relationships. This dynamic flexibility has become a competitive differentiator, particularly in sectors facing volatile market conditions or high customer expectations.

Reintegrating Human Roles: Key Domains

Several UK firms have begun reintegrating human roles into functions where AI proved inadequate or counterproductive. The following domains have seen particularly significant recalibration:

1. Customer Service and Experience

After a period of enthusiastic chatbot deployment, numerous companies have restored human agents to key points in the customer journey. For instance, telecom providers and financial institutions have adopted “AI-assist” models, where bots handle initial triage and routine queries, but seamlessly transfer customers to human representatives for complex issues.

This layered approach not only improves customer satisfaction but also empowers human agents with AI-generated insights—such as customer history, sentiment analysis, and recommended responses—thereby enhancing service quality and speed without sacrificing empathy or trust.
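The handoff logic behind such an "AI-assist" model can be sketched in a few lines. This is a minimal illustration, not any provider's actual system: the topic list, the thresholds, and the names `Query`, `Handoff`, and `route` are all hypothetical, and the confidence and sentiment scores are assumed to come from upstream models.

```python
from dataclasses import dataclass
from typing import List, Union

# Hypothetical values chosen only for illustration.
ROUTINE_TOPICS = {"balance", "opening_hours", "password_reset"}
MIN_BOT_CONFIDENCE = 0.8   # below this, the bot should not answer alone
MIN_SENTIMENT = -0.3       # below this, the customer is treated as upset


@dataclass
class Query:
    customer_id: str
    topic: str
    bot_confidence: float  # 0.0 to 1.0, assumed to come from the bot
    sentiment: float       # -1.0 (negative) to 1.0 (positive)


@dataclass
class Handoff:
    customer_id: str
    reason: str
    context: dict          # AI-generated insights passed to the human agent


def route(query: Query, history: List[str]) -> Union[str, Handoff]:
    """Return "bot" for routine queries, otherwise a Handoff for a human."""
    if query.topic not in ROUTINE_TOPICS:
        reason = "non-routine topic"
    elif query.bot_confidence < MIN_BOT_CONFIDENCE:
        reason = "low bot confidence"
    elif query.sentiment < MIN_SENTIMENT:
        reason = "negative sentiment"
    else:
        return "bot"
    # The human agent receives the context instead of starting from scratch.
    context = {
        "topic": query.topic,
        "sentiment": query.sentiment,
        "history": history,
    }
    return Handoff(query.customer_id, reason, context)
```

The design choice worth noting is that escalation carries the customer's context along with it, so the human agent starts from what the bot has already gathered instead of asking the customer to repeat it.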

2. Human Resources and Recruitment

In recruitment, many organizations initially used AI to automate resume screening and candidate ranking. However, concerns about algorithmic bias and cultural misalignment prompted a shift back toward human-led interviews, assessments, and onboarding.

Today, forward-thinking firms use AI as a decision-support tool, not a decision-maker. Algorithms help narrow applicant pools and identify potential fits based on skills and experience, but human recruiters make final determinations by considering interpersonal qualities, team compatibility, and organizational values—factors that algorithms cannot reliably evaluate.

3. Strategic Planning and Governance

At the executive level, some businesses had experimented with AI in forecasting, financial modeling, and even boardroom analytics. However, the COVID-19 pandemic, geopolitical instability, and inflationary shocks revealed the limitations of predictive models in non-linear, uncertain environments.

Executives are now placing greater emphasis on human judgment in interpreting data, making scenario-based decisions, and integrating qualitative variables such as employee well-being and ESG (Environmental, Social, and Governance) considerations. AI is still used, but as an input to a more holistic strategic process led by experienced leaders.

4. Marketing and Creative Production

Many brands have scaled back their use of AI in marketing, particularly for content creation and A/B testing, after realizing that authenticity and emotional resonance cannot be fully automated. UK fashion and lifestyle companies, for example, have reinvested in human copywriters, designers, and campaign strategists to regain creative control and brand voice.

Rather than abandoning AI altogether, these companies use it to generate ideas, identify trends, and streamline production, while relying on human professionals to fine-tune tone, storytelling, and audience alignment.

Emerging Roles in Hybrid Organizations

As companies implement hybrid models, they are not merely reinstating old roles—they are also creating new positions that bridge the gap between AI systems and human workflows. These include:

- AI Trainers: Employees responsible for curating training data, correcting model errors, and fine-tuning AI behavior to align with organizational standards.

- AI Ethics Officers: Professionals tasked with overseeing compliance, fairness, and ethical implications of AI usage.

- Human-AI Interaction Designers: Specialists who optimize user interfaces and workflows to ensure seamless collaboration between humans and machines.

- Algorithm Auditors: Independent or in-house experts who regularly test AI systems for bias, reliability, and regulatory compliance.

These roles highlight a broader trend: the future of work in AI-enabled enterprises will require not only technical skills but also interdisciplinary fluency, critical thinking, and cultural awareness.

Implementation Challenges and Considerations

Transitioning to a hybrid model is not without challenges. Organizational change of this magnitude requires:

- Cultural Readiness: Employees must be engaged early in the process and reassured that technology will augment, not threaten, their roles. Transparent communication is essential to foster trust and collaboration.

- Investment in Training: To realize the full benefits of a hybrid model, businesses must invest in upskilling both technical and non-technical staff. Digital literacy, data interpretation, and AI system management are fast becoming core competencies.

- Process Reengineering: Legacy workflows may need to be redesigned to accommodate joint human-AI participation. This involves clarifying handoffs, responsibilities, and performance metrics.

- Governance Structures: Hybrid organizations require robust oversight mechanisms to ensure that AI systems remain aligned with legal, ethical, and strategic goals. This includes clear escalation protocols, audit trails, and accountability frameworks.

Case Studies of Reintegrated Human-AI Models

A prominent UK supermarket chain recently revised its in-store operations by reintroducing human cashiers alongside self-checkout machines, citing both customer preference for personal interaction and lower theft rates. The move was widely applauded, boosting brand perception and employee morale.

Another case involves a mid-sized legal consultancy that had automated its document review process. After encountering a series of misclassifications that led to client dissatisfaction, the firm reinstated a team of junior associates to work alongside the AI, improving accuracy while offering entry-level talent valuable exposure.

These examples illustrate the viability and desirability of hybrid approaches that seek to restore the human dimension without abandoning technological progress.

In conclusion, the hybrid workforce model represents a pragmatic and forward-looking solution to the challenges posed by unchecked automation. It offers a path toward sustainable digital transformation that respects human dignity, maximizes productivity, and preserves organizational adaptability. As UK businesses continue to refine this model, they are not only correcting past missteps but also pioneering a new paradigm for the future of work, one in which AI and humanity coexist with, complement, and elevate one another.

Redefining Intelligent Work in the Age of AI

The initial promise of artificial intelligence (AI) in the corporate landscape was one of transformation—of efficiency gains, innovation acceleration, and operational precision. For many UK businesses, this vision prompted rapid and widespread adoption, often involving the replacement of human workers with intelligent systems. Yet, as the findings now show, over half of these organizations have come to regret the haste with which they pursued automation at the expense of human labor.

This widespread sentiment of regret marks a pivotal moment in the trajectory of enterprise AI. It challenges the simplistic narratives of technological determinism and compels decision-makers to reevaluate not only how AI is deployed, but why it is used and what kind of organizational future it is building. While AI excels in processing vast amounts of structured data, identifying patterns, and executing predefined tasks, it remains limited in key areas: emotional intelligence, contextual judgment, ethical discernment, and creative thinking.

These limitations became starkly visible when organizations began experiencing degraded customer satisfaction, unforeseen costs, diminished trust, and loss of institutional wisdom. The displacement of humans in roles where interpersonal dynamics, empathy, and discretion are crucial proved to be a miscalculation—one that affected not only performance metrics but also brand reputation and organizational morale.

What these outcomes underscore is that intelligent work is not solely about efficiency. It is equally about relational depth, ethical consideration, and the ability to adapt dynamically to evolving circumstances—domains in which humans retain a distinct advantage. This understanding is prompting a reorientation away from the false dichotomy of "human versus machine" toward a more sophisticated vision of collaboration between the two.

The emergence of hybrid workforce models is emblematic of this new direction. By integrating AI and human strengths, businesses are beginning to realize more balanced, resilient, and ethically sustainable frameworks for productivity. These models acknowledge the power of AI to optimize and support, while affirming the irreplaceable value of human insight, experience, and creativity.

Such a transformation requires not merely technical reconfiguration but a philosophical shift in leadership and organizational culture. It demands that business leaders move beyond cost-centric calculations to broader evaluations that include social responsibility, employee well-being, and long-term strategic alignment. It calls for continuous learning—not only in terms of digital skills, but also in fostering ethical literacy, emotional intelligence, and collaborative fluency.

Furthermore, the UK’s experience serves as a cautionary yet instructive case for the global business community. Nations and corporations looking to scale AI adoption would do well to study the lessons emerging from the UK’s early overreach. Technology, however powerful, cannot replace the social and cognitive fabric that holds institutions together. Disregarding this truth may deliver temporary efficiencies, but at the cost of enduring harm to both people and organizational integrity.

The future of AI in business is not a story of regret but one of recalibration and renewal. As firms refine their approaches, reinvest in human potential, and develop more thoughtful deployment strategies, they are laying the groundwork for a new era—one where artificial intelligence is not a replacement, but a resource; not an end, but a means to augment human capability and drive purposeful innovation.

In this recalibrated landscape, success will belong not to those who automate the most, but to those who automate wisely—guided by clarity of purpose, ethical rigor, and an unwavering commitment to human dignity.
