Why Open Source AI Models Are Critical for the Future of Artificial Intelligence

Artificial intelligence (AI) has rapidly evolved into one of the most transformative technologies of the 21st century, reshaping industries, redefining business processes, and reimagining the ways individuals interact with machines. As AI systems become more complex and influential, questions surrounding transparency, accessibility, and ethical governance have gained prominence. One of the most promising developments addressing these concerns is the rise of open source AI models.

Open source AI models refer to machine learning and deep learning systems whose architectures, codebases, or pretrained weights are freely available for public use, modification, and distribution. This openness stands in contrast to proprietary AI models developed by major technology companies, which are typically closed systems accessible only through restricted APIs or licensed software. The significance of open source in AI lies not only in the technical transparency it provides but also in its potential to democratize innovation, foster trust, and support a broader and more inclusive AI ecosystem.

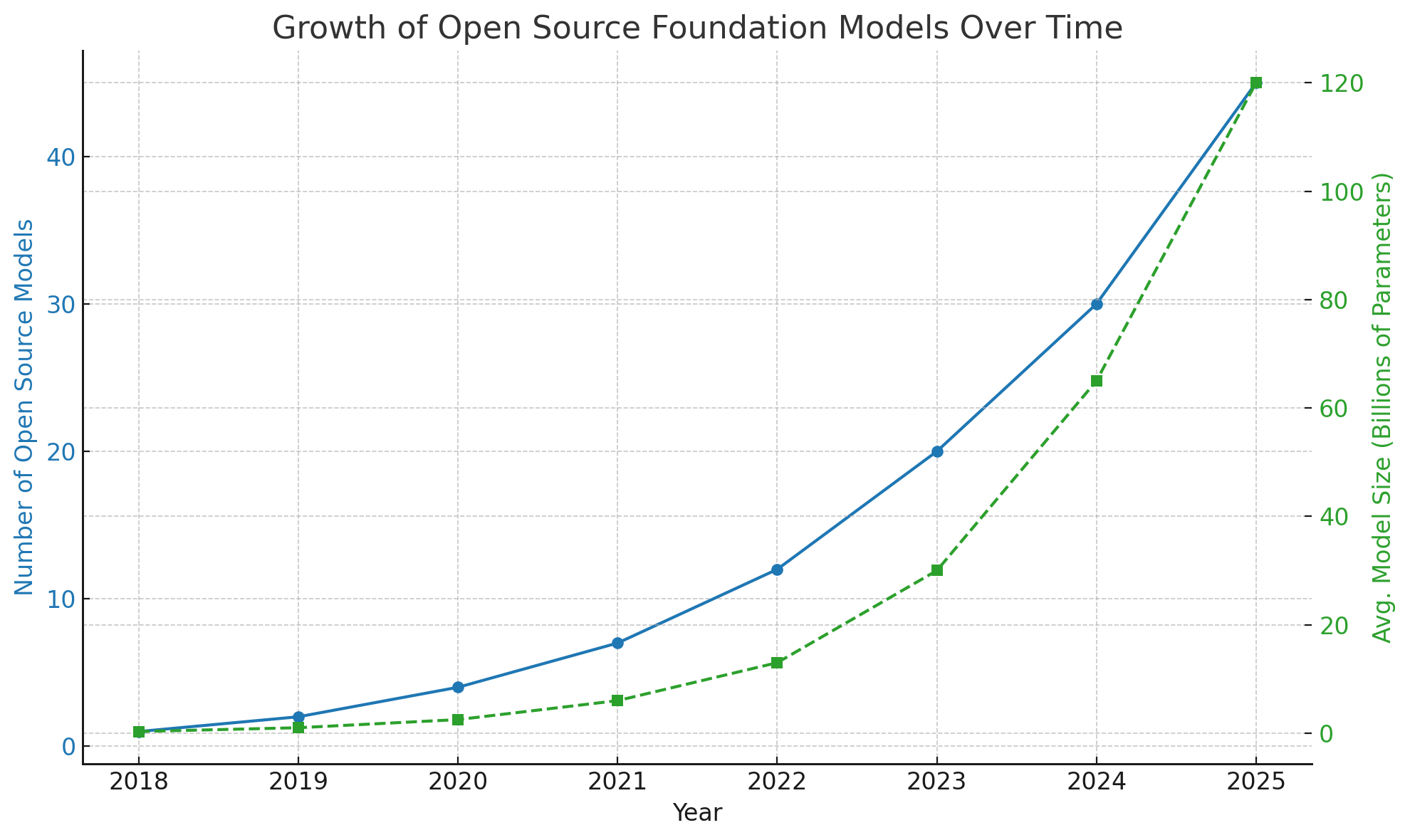

The momentum behind open source AI has been driven by both academic institutions and independent developer communities, often fueled by the desire to create more accessible and explainable AI systems. From early contributions like Theano and TensorFlow to more recent open large language models such as Meta's LLaMA series and the EleutherAI initiative, the ecosystem has expanded rapidly. This growth reflects a global recognition that the future of AI must be built on principles of openness, collaboration, and shared responsibility.

In an age where concerns over algorithmic bias, surveillance, and monopolistic control are increasingly prevalent, open source AI offers a pathway to more accountable and participatory technology development. It allows researchers, policymakers, and citizens alike to examine how these powerful models function, to audit their outcomes, and to contribute to their evolution in ways that align with collective societal goals.

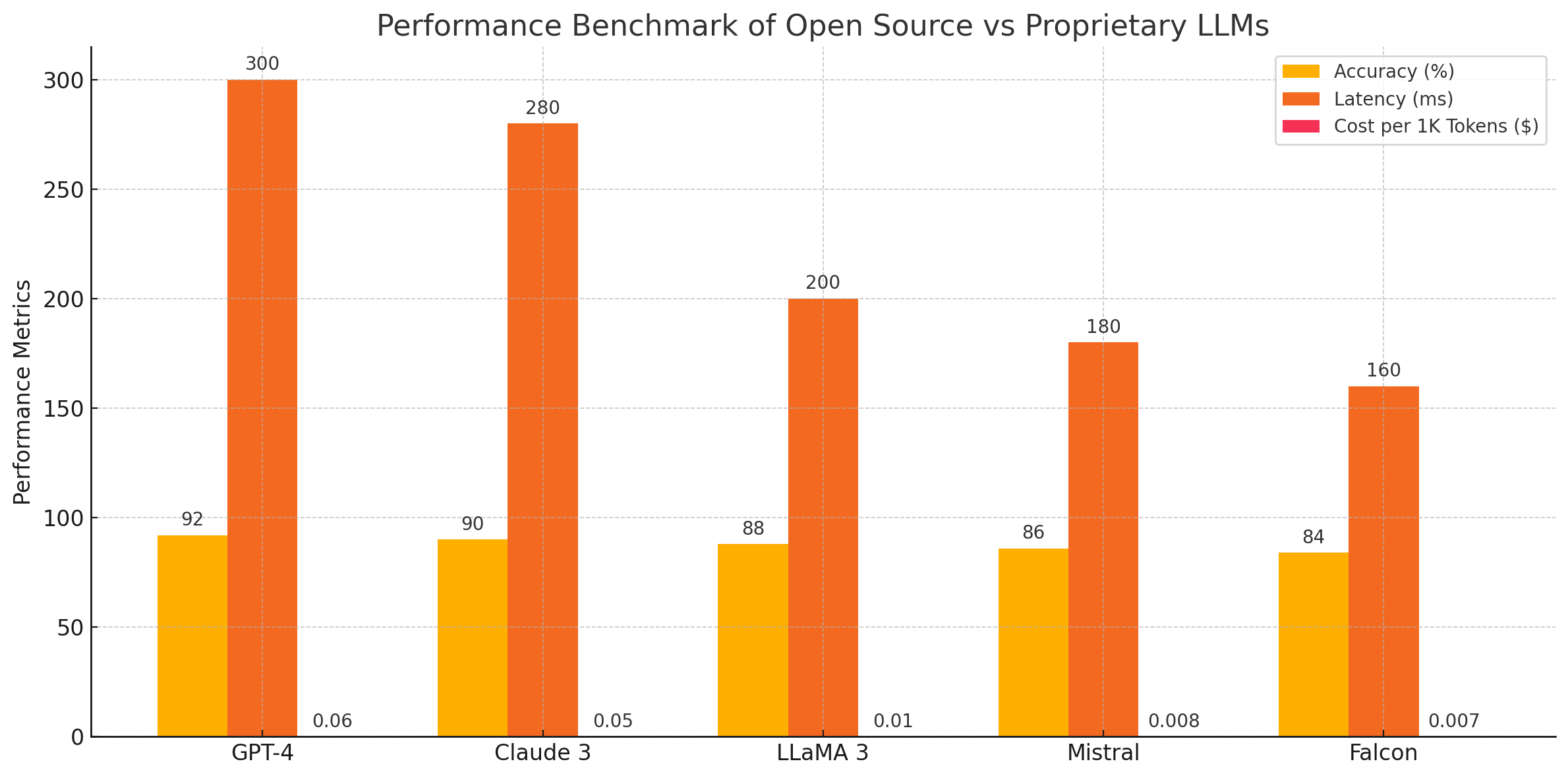

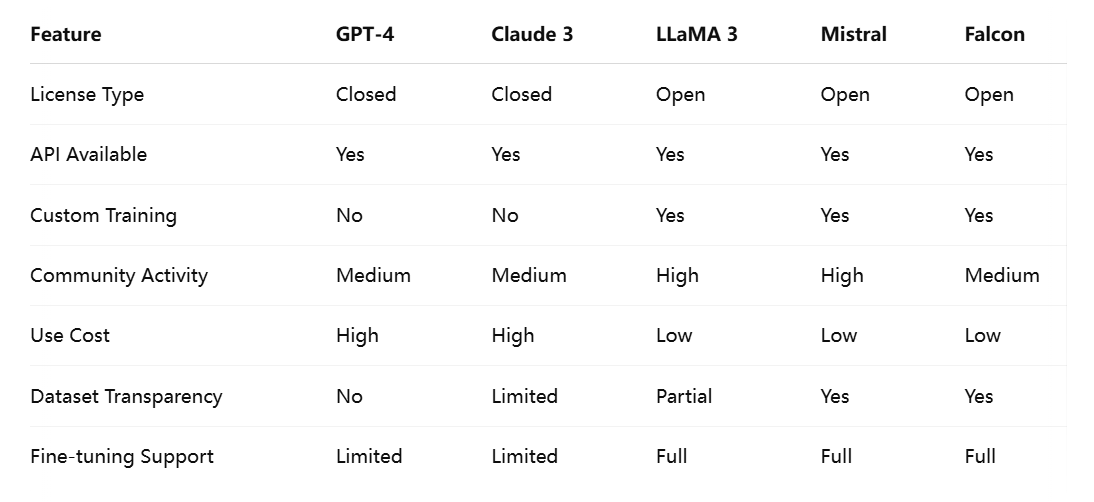

This blog post explores the multifaceted importance of open source AI models by delving into their historical development, practical benefits, comparisons with proprietary systems, and the challenges that lie ahead. It also includes two visualizations—a chart tracking the growth of open source foundation models and a benchmark performance comparison—and a table that contrasts key features of open and closed AI models.

Through this comprehensive analysis, we aim to highlight why open source AI is not merely a technical preference but a foundational pillar in shaping a responsible and equitable AI-driven future.

The Evolution of Open Source in AI

A Historical Look at Open Source in Software

The roots of open source trace back to the early days of software development when knowledge sharing was a fundamental tenet of computing culture. In the 1980s, Richard Stallman launched the GNU Project and the Free Software Foundation, promoting the idea that software should be freely available, modifiable, and shareable. This philosophy laid the groundwork for the open source movement, which gained considerable momentum with the release of the Linux operating system in the 1990s. Linux's rapid success illustrated how community-driven innovation could produce highly robust and scalable systems, even without corporate oversight or commercial incentives.

Open source software (OSS) subsequently permeated almost every layer of the digital infrastructure, from web servers like Apache to programming languages like Python. The defining characteristic of OSS—transparent codebases that invite collaboration—proved to be not only philosophically compelling but also practically advantageous. It allowed developers worldwide to inspect, improve, and customize software to suit diverse applications. The ethos of openness and collective improvement soon made its way into the machine learning (ML) domain, particularly as the complexity of algorithms and systems grew.

Rise of Open Source AI Frameworks

With the increasing sophistication of machine learning, the need for accessible and standardized tools became evident. In response, the open source community developed a suite of frameworks that would fundamentally alter how AI research and application were conducted. Google’s release of TensorFlow in 2015 marked a turning point, offering a comprehensive and flexible library for building and training neural networks. It was soon joined by Facebook’s PyTorch, which gained popularity for its dynamic computation graph and Pythonic design.

These tools dramatically lowered the barriers to entry for AI experimentation and innovation. Students, startups, and independent researchers could now access the same high-quality resources as established tech firms. Hugging Face further democratized natural language processing by offering an easy-to-use platform and repository of pretrained transformer models. Similarly, OpenCV (for computer vision), scikit-learn (for classical ML algorithms), and MLflow (for model management and deployment) empowered developers across various AI disciplines.

The rise of open source frameworks not only accelerated the pace of development but also created a global network of contributors. This network continuously refines tools, shares best practices, and ensures that critical innovations are not locked behind proprietary walls. The resulting culture of openness and reproducibility has become a cornerstone of modern AI development.

Transition from Toolkits to Foundation Models

In recent years, the open source movement has advanced beyond tools and libraries into the realm of foundation models—large-scale neural networks pretrained on massive datasets that can be adapted for a wide range of downstream tasks. Foundation models, especially large language models (LLMs), have redefined what AI systems can achieve. While proprietary models such as OpenAI's GPT-4 and Anthropic’s Claude dominate headlines, a robust parallel movement has emerged around open source alternatives.

Initiatives such as EleutherAI, BigScience, and LAION have proven that it is possible to build state-of-the-art models without the backing of trillion-dollar corporations. For instance, the GPT-J and GPT-NeoX models developed by EleutherAI were among the first open source attempts to replicate the architecture and performance of OpenAI's GPT-3. Meta’s LLaMA (Large Language Model Meta AI) series further demonstrated that open source models could rival or even exceed the performance of closed systems when fine-tuned and deployed effectively.

The open release of model weights, training datasets, and documentation has spurred a wave of derivative works and innovation. Models such as Mistral, Falcon, and BLOOM exemplify this trend, each contributing to the growing ecosystem of powerful, accessible LLMs. These models are often accompanied by permissive licenses that allow for modification, fine-tuning, and deployment in commercial settings—something typically restricted by proprietary vendors.

Notably, this transition has also fostered new models of collaboration, including multi-institutional research efforts and public-private partnerships. The BigScience project, for example, brought together over 1,000 researchers from academia, industry, and civil society to build BLOOM, a multilingual LLM trained on publicly available data. Such efforts underscore the viability and value of cooperative AI development at scale.

Catalysts of the Open Source AI Movement

Several factors have catalyzed the growth of open source AI. The first is the increasing accessibility of cloud computing and specialized hardware (e.g., GPUs and TPUs), which has made training and fine-tuning large models feasible for a wider audience. The second is the cultural shift in academia and industry toward reproducibility and openness. Researchers are now more likely than ever to share code, data, and pretrained models alongside their publications.

The third catalyst is community engagement. Open source AI thrives on decentralized collaboration through forums such as GitHub, Reddit, Hugging Face Hub, and Discord servers. These platforms facilitate knowledge exchange, community-driven testing, and rapid iteration. Importantly, they also serve as incubators for ethical debate, governance proposals, and technical safeguards.

Finally, regulatory developments in regions such as the European Union and the United States are beginning to emphasize transparency, auditability, and ethical accountability in AI systems. Open source models are uniquely well-suited to meet these requirements because their inner workings can be inspected and scrutinized by third parties.

Why Open Source AI Matters

As artificial intelligence increasingly permeates critical aspects of society—from healthcare diagnostics and financial forecasting to public services and educational platforms—the foundational values embedded in the design, deployment, and governance of these systems become paramount. Open source AI models, by virtue of their transparency, accessibility, and collaborative potential, stand at the forefront of this paradigm shift. This section explores the multidimensional significance of open source AI through the lenses of transparency, ethics, innovation, and economic strategy.

Transparency and Accountability

One of the most pressing challenges in modern AI development is the “black box” nature of proprietary models. These systems, while often achieving remarkable performance, operate without meaningful transparency into their decision-making processes. This opacity raises critical concerns, particularly when AI is deployed in high-stakes domains such as healthcare, law enforcement, and finance.

Open source models mitigate these concerns by offering visibility into their architectures, training data (where available), and operational mechanics. Researchers, policymakers, and civil society organizations can inspect these models to understand how predictions are made, identify potential biases, and ensure alignment with regulatory standards. For instance, when a model exhibits biased behavior or disproportionately impacts certain demographic groups, access to the source code and weights facilitates root-cause analysis and the implementation of corrective measures.

Moreover, open source fosters a culture of accountability in AI development. Developers are encouraged to document their work, provide training metadata, and articulate the intended use cases and limitations of their models. This level of rigor is often absent in proprietary systems, where performance claims cannot be independently verified.

Transparency is not merely a technical benefit—it is a social imperative. In democratic societies, systems that influence public life must be open to scrutiny. Open source AI fulfills this requirement by enabling public auditability, thereby strengthening trust and legitimacy.

Ethical and Safety Considerations

Ethical AI is a topic of global importance, and open source plays a critical role in enabling responsible practices. Because open models are publicly accessible, they allow a wide range of stakeholders to examine how they are constructed and deployed. This collective oversight is essential for addressing systemic risks such as algorithmic bias, model hallucination, adversarial vulnerability, and misuse for malicious purposes.

One of the primary ethical benefits of open source AI is bias detection. AI models are only as fair as the data they are trained on, and proprietary developers rarely disclose the datasets underlying their systems. Open source models, by contrast, often include documentation about training corpora or even the corpora themselves, allowing researchers to conduct fairness audits and implement mitigation strategies.

Safety is another domain where open source contributes meaningfully. When models are open, security researchers can test them for adversarial robustness and propose countermeasures. This collaborative testing process strengthens the resilience of models deployed in real-world environments. For example, models that are vulnerable to prompt injection or data poisoning attacks can be patched more rapidly when the broader community is involved in their analysis and refinement.

Additionally, open licensing mechanisms—such as the Responsible AI Licenses (RAIL)—have emerged to guide the ethical use of open source models. These licenses place restrictions on use cases deemed harmful (e.g., surveillance, autonomous weapons) while still preserving the spirit of openness. Although such licenses are not universally enforceable, they represent an important step toward embedding ethics into the fabric of AI development.

Accelerating Innovation and Research

Open source AI dramatically accelerates the pace of innovation by lowering barriers to entry and promoting knowledge transfer. Historically, AI research was limited to well-funded academic institutions or major corporations with access to proprietary tools and high-performance computing infrastructure. The advent of open source has changed this dynamic, allowing individuals, startups, and researchers in emerging economies to participate in cutting-edge AI work.

For example, a small startup can now fine-tune a large language model on a domain-specific dataset to create an industry-specific chatbot, without building a model from scratch. A university lab can replicate state-of-the-art results by using openly available model checkpoints and training scripts. Educators can introduce students to real-world AI systems without the need for expensive commercial licenses.

The compounding effect of shared knowledge and collective development also yields significant gains. When one group publishes an innovation—such as a new attention mechanism or an optimization strategy—it can be adopted, modified, and improved by others. This iterative process fosters rapid evolution in model architecture, training efficiency, and deployment techniques.

Open source also supports cross-disciplinary research. Models like CLIP, SAM, and Segment Anything—released by Meta AI—are routinely repurposed by researchers in fields as diverse as computational biology, digital humanities, and environmental science. The ability to adapt models to novel contexts is a testament to the versatility and modularity that open source enables.

Economic and Strategic Benefits

From an economic standpoint, open source AI levels the playing field for organizations of all sizes. By reducing dependency on expensive commercial APIs and licensing fees, it empowers companies to build custom AI solutions that are cost-effective and strategically tailored to their needs. This democratization of access is especially valuable in sectors such as agriculture, education, and public health, where budgets may be limited but the need for intelligent systems is acute.

Governments, too, stand to benefit from open source AI. As nations increasingly view AI as a pillar of economic competitiveness and national security, there is growing interest in AI sovereignty—the ability to develop and control critical AI infrastructure domestically. Open source models facilitate this goal by providing a base upon which local institutions can build without external dependency. The French government’s investment in BLOOM and the European Union’s support for open AI ecosystems exemplify this strategic orientation.

Moreover, open source models often incur lower long-term operational costs. Organizations can deploy models on their own infrastructure, optimize them for specific tasks, and integrate them with proprietary workflows without facing vendor lock-in. This flexibility not only enhances efficiency but also stimulates economic activity by enabling innovation at the edge of the enterprise.

Lastly, the open source model encourages ecosystem growth through community-driven platforms, third-party toolchains, and startup incubation. Projects such as Hugging Face, LangChain, and OpenMMLab have created entire ecosystems around open models, providing job opportunities, consulting services, and commercial extensions that enrich the broader AI economy.

In summary, open source AI models serve not merely as technical tools but as catalysts for a more transparent, ethical, innovative, and equitable AI ecosystem. Their significance extends across research, governance, industry, and global development. By embracing open source, stakeholders can collectively shape the trajectory of artificial intelligence to better reflect the needs and values of diverse communities worldwide.

Comparing Open vs Proprietary Models

As artificial intelligence capabilities advance rapidly, the landscape of model development has bifurcated into two dominant paradigms: open source and proprietary. Each approach reflects distinct priorities, with open source favoring transparency, community engagement, and adaptability, while proprietary models emphasize product polish, enterprise integration, and commercial viability. This section presents a structured comparison of these paradigms across three key dimensions—licensing and access, performance and cost efficiency, and ecosystem support—followed by a tabular overview of leading models.

Licensing and Access

One of the most evident distinctions between open source and proprietary models lies in their licensing terms and the extent of user access they permit. Proprietary models such as GPT-4 (OpenAI) and Claude 3 (Anthropic) are released as black-box systems. Users interact with these models through restricted application programming interfaces (APIs), often at considerable cost and under strict terms of service. These limitations hinder experimentation, model interpretability, and full-scale customization, particularly for research institutions and small businesses.

Conversely, open source models are typically distributed under permissive licenses—such as Apache 2.0, MIT, or CreativeML Open RAIL—which allow users to download model weights, inspect source code, and deploy systems on their own infrastructure. Meta’s LLaMA 3, Mistral’s Mixtral, and Technology Innovation Institute’s Falcon are examples of open models that have embraced this philosophy. Although some licenses may include ethical use clauses or restrict certain commercial activities, the overarching intent remains accessibility and transparency.

The open source approach aligns more closely with academic and public sector values, where reproducibility and peer review are paramount. It also facilitates compliance with emerging AI governance frameworks that mandate explainability, fairness, and data provenance—qualities difficult to audit in closed systems.

Performance and Cost Efficiency

Performance remains a central concern in AI adoption, particularly for natural language processing (NLP), computer vision, and multimodal applications. Proprietary models have traditionally held a performance edge, largely due to access to massive proprietary datasets, extensive computational budgets, and closed-source optimization techniques. GPT-4, for example, is known for its advanced reasoning abilities and nuanced language generation across multiple domains.

However, open source models have significantly narrowed the gap. Recent benchmarks demonstrate that LLaMA 3 and Mistral achieve comparable scores to GPT-3.5 and Claude 2 on tasks such as summarization, question answering, and code generation. These advances are not coincidental but the result of collective intelligence: thousands of contributors iteratively refining architectures, loss functions, and tokenization methods.

From a cost-efficiency perspective, open source models offer considerable advantages. Organizations can fine-tune open models for domain-specific use cases, deploy them locally to reduce API latency, and avoid recurring usage fees. This flexibility is particularly appealing for enterprises with stringent data privacy requirements or limited IT budgets. By contrast, proprietary models incur operational expenditures that scale with usage volume, often making them cost-prohibitive for sustained or high-volume tasks.

Moreover, the open source community continues to innovate around optimization techniques—such as quantization, pruning, and low-rank adaptation (LoRA)—to enable efficient deployment on consumer-grade hardware. These advancements enhance the accessibility of AI technology in under-resourced settings, supporting digital inclusion and equitable AI adoption.

This table illustrates key differences in licensing, accessibility, cost structure, and community engagement. Notably, all open source models support local fine-tuning and self-hosting, giving developers full control over model behavior and system integration.

Community vs Corporate Support

Another critical dimension in this comparison is the ecosystem surrounding the models. Proprietary AI models benefit from robust corporate backing, offering well-documented APIs, enterprise support plans, and seamless integration into commercial platforms such as Microsoft Azure (OpenAI) and Amazon Bedrock (Anthropic). These services cater primarily to enterprise clients and provide stability, uptime guarantees, and customer service—features that appeal to risk-averse organizations with mission-critical AI applications.

Open source models, in contrast, are sustained by decentralized communities. While they may lack formal customer support, they benefit from a vibrant ecosystem of contributors, integrators, and toolmakers. Platforms like Hugging Face, GitHub, and Papers with Code serve as knowledge hubs for sharing models, datasets, evaluation tools, and reproducible code. This peer-driven approach encourages innovation at the edge and accelerates the development of auxiliary tools for model compression, benchmarking, visualization, and alignment.

Furthermore, open ecosystems foster interdisciplinary collaboration. Engineers, linguists, ethicists, and domain experts converge to examine model behavior, challenge assumptions, and co-create inclusive solutions. This pluralistic development process stands in contrast to proprietary systems, which are often shaped by narrow commercial imperatives and inaccessible to external scrutiny.

It is also worth noting that many open source models are now being integrated into commercial products. Companies such as Cohere, AI21, and Mistral have adopted hybrid strategies—offering open weights for community use while monetizing API services and enterprise customization. This convergence suggests that the boundary between open and closed is increasingly porous, and that sustainable AI business models can be built around openness.

The choice between open source and proprietary AI models is not merely a technical consideration—it reflects broader values about how AI should be built, governed, and deployed. Open source models provide greater autonomy, transparency, and adaptability, enabling organizations and individuals to engage meaningfully with AI systems. Proprietary models, while often more performant out-of-the-box, come with limitations on customizability, reproducibility, and long-term cost control.

As the AI field matures, a hybrid future appears most likely—one in which open source models serve as foundational building blocks, complemented by proprietary layers that offer differentiated services or enterprise-grade assurances. What remains clear, however, is that open source AI will continue to play an indispensable role in democratizing access, advancing scientific discovery, and anchoring ethical innovation in a rapidly evolving technological landscape.

Challenges and the Road Ahead

While open source AI models offer substantial benefits in terms of accessibility, transparency, and collaborative innovation, they are not without their challenges. The road to widespread adoption and sustainable development is marked by technical, ethical, and regulatory complexities. As open source AI continues to mature, it must navigate these challenges with thoughtful governance, robust infrastructure, and inclusive community leadership. This section examines the key hurdles currently facing open source AI and explores emerging strategies to address them while projecting the future trajectory of this transformative movement.

Technical Challenges in Open Source AI

Despite impressive progress, the development and deployment of open source AI models remain technically demanding. Training state-of-the-art models requires access to large-scale computational resources, including GPU clusters and high-speed networking infrastructure—resources that are typically concentrated in the hands of a few technology giants. This resource asymmetry creates a structural disadvantage for independent developers and research collectives seeking to train models from scratch.

Moreover, reproducibility is a persistent issue. Open source projects often struggle to maintain detailed documentation, training logs, and consistent benchmarking practices. Minor variations in preprocessing steps or hyperparameter tuning can lead to significant discrepancies in model performance, hindering replicability and trust in published results. While initiatives like MLCommons and Papers with Code promote standardized evaluation, achieving comprehensive reproducibility remains an ongoing challenge.

Another technical barrier lies in model alignment and safety. As models grow in size and complexity, ensuring that they behave as intended—especially in ambiguous or adversarial contexts—becomes increasingly difficult. Proprietary developers may implement post-training alignment techniques such as reinforcement learning from human feedback (RLHF), but these are often resource-intensive and difficult to replicate in open projects. This limitation could compromise the safety and reliability of open source systems deployed in sensitive domains.

Finally, the sustainability of open source AI development poses a serious concern. Many open projects rely on volunteer contributions, small academic grants, or nonprofit support. Without reliable funding models, these projects may struggle to maintain momentum, respond to security issues, or invest in long-term planning. The emergence of mission-driven companies and public-private partnerships offers a promising path forward, but more institutional support is needed to ensure stability and resilience.

Governance and Responsible Use

The open nature of these models—while a strength in many respects—also introduces risks of misuse. Open source AI can be exploited for harmful purposes, including the generation of disinformation, creation of deepfakes, spam automation, cyberattacks, and other malicious activities. Unlike proprietary models, which can implement usage safeguards at the API level, open source models—once downloaded—can be modified and deployed without oversight.

To mitigate these risks, the open source community must advance responsible governance practices. One approach involves adopting licensing schemes that restrict harmful use while preserving openness. Licenses such as the Responsible AI License (RAIL) and the OpenRAIL suite represent an evolving attempt to balance accessibility with accountability. Although enforcement remains a challenge, these licenses signal a normative shift and create a framework for ethical model distribution.

Additionally, open governance frameworks are emerging to guide the development and stewardship of large-scale models. For example, the BigScience project established a participatory governance model that included ethicists, legal scholars, and civil society representatives throughout the development process. These models of collective decision-making can help identify and preempt risks early in the development cycle.

Transparency reports, model cards, and data statements are other tools that promote responsible use. By documenting the provenance, training objectives, and known limitations of a model, developers can empower users to make informed decisions about its application. The continued adoption of these tools across open source projects is critical to fostering trust and social legitimacy.

Industry and Policy Trends

The future of open source AI will be shaped not only by technical and ethical considerations but also by regulatory and industry dynamics. Governments around the world are increasingly scrutinizing the deployment of advanced AI systems, with particular attention to transparency, bias mitigation, and explainability. The European Union’s AI Act, for instance, proposes a risk-based regulatory framework that may impose new obligations on both proprietary and open source developers.

While such regulations are designed to protect consumers and prevent misuse, they may inadvertently place a disproportionate burden on smaller open source projects that lack the compliance infrastructure of large corporations. It is essential that policymakers adopt a nuanced approach—one that protects public interests without stifling innovation in the open ecosystem. Provisions that support transparency and reproducibility without mandating unattainable audit standards could offer a viable middle ground.

In the private sector, a growing number of companies are incorporating open source models into their offerings. Platforms like Hugging Face, LangChain, and Weights & Biases have built thriving ecosystems around open tools and models, demonstrating that commercial success and openness are not mutually exclusive. Furthermore, some large technology companies, including Meta and Google DeepMind, are selectively open-sourcing their models or tools to encourage community collaboration.

This trend points toward the emergence of hybrid models that combine open source foundations with proprietary extensions or services. These hybrid approaches may help address some of the resource and governance challenges while preserving the benefits of openness. However, care must be taken to ensure that the open core remains genuinely accessible and that commercial overlays do not dilute the principles of transparency and community ownership.

Future Outlook

Looking ahead, the prospects for open source AI are both promising and contingent on strategic evolution. Technological innovations—such as federated learning, distributed training frameworks, and zero-cost inference—may help address some of the current resource constraints. Meanwhile, the maturation of alignment techniques, safety toolkits, and interpretability frameworks will further strengthen the reliability of open models.

Institutional support will also play a critical role. Universities, government agencies, and philanthropic foundations can provide the resources needed to support long-term open source development. National AI strategies could include mandates or funding streams for open research, ensuring that public investment aligns with the public good.

Furthermore, community norms and values must continue to evolve. Inclusivity, diversity, and global representation should be prioritized to ensure that open source AI reflects a multiplicity of perspectives and serves a broad spectrum of societal needs. International collaboration—modeled on successful efforts like CERN or the Human Genome Project—may be instrumental in realizing this vision.

Ultimately, the trajectory of open source AI will depend on the willingness of stakeholders to confront difficult trade-offs and to invest in a collective future. If nurtured responsibly, open source models can become the backbone of a global AI infrastructure that is trustworthy, inclusive, and resilient—an infrastructure that empowers humanity, rather than concentrating power in the hands of a few.

Conclusion

As artificial intelligence continues to shape the modern world, the choices made about how these technologies are developed, distributed, and governed will have lasting implications for society. Open source AI models have emerged as a vital alternative to closed, proprietary systems, offering a path toward greater transparency, inclusivity, and innovation. By making advanced models accessible to researchers, developers, and institutions of all sizes, the open source approach reduces dependence on a handful of tech conglomerates and fosters a more pluralistic AI ecosystem.

The journey of open source AI, from foundational toolkits to sophisticated large language models, has demonstrated the power of collaborative development. Communities across academia, industry, and civil society have come together to build systems that are not only performant but also auditable and adaptable. These efforts have made it possible to audit bias, improve safety, and accelerate scientific progress—goals that are increasingly vital in an era of rapidly expanding AI capabilities.

However, the promise of open source AI is accompanied by a complex set of challenges. Technical barriers, such as the high costs of training and alignment, remain significant. Ethical risks, including misuse and unintended consequences, demand proactive governance and responsible licensing. Moreover, the evolving regulatory landscape poses both opportunities and constraints, requiring thoughtful engagement from open source stakeholders.

To address these challenges and sustain momentum, strategic investments and policy support are essential. Governments, philanthropic organizations, and academic institutions must provide stable funding and infrastructure to ensure the long-term viability of open source projects. Meanwhile, developers and communities must continue to cultivate a culture of responsibility, inclusivity, and openness, recognizing that the strength of open source lies not only in code but in collective purpose.

Looking ahead, the future of open source AI is bright but not guaranteed. It will require deliberate effort to preserve its foundational principles while evolving to meet new demands. Hybrid models that blend open access with commercial sustainability may offer a viable path forward, provided they maintain the integrity of the open core. International collaboration, interdisciplinary engagement, and community-driven governance will be key to realizing the full potential of open source AI.

In a world increasingly shaped by algorithmic decision-making, open source AI is more than a technical movement—it is a societal imperative. It embodies the belief that the benefits of artificial intelligence should be broadly shared, its risks collectively managed, and its direction democratically shaped. The road ahead is complex, but with continued commitment, open source AI can help build a future where technology empowers humanity, rather than dividing or dominating it.

References

- Hugging Face – Transformers

https://huggingface.co/transformers/ - LLaMA Model Overview

https://ai.meta.com/llama/ - GPT-Neo and GPT-J Projects

https://www.eleuther.ai/ - Open-Weight Language Models

https://www.mistral.ai/ - BigScience Project – BLOOM Model

https://bigscience.huggingface.co/ - Falcon LLM by TII

https://falconllm.tii.ae/ - Responsible AI Licensing

https://www.licenses.ai/ - Model Benchmarks and Datasets

https://paperswithcode.com/ - AI Benchmarking and Reproducibility

https://mlcommons.org/ - The EU Artificial Intelligence Act (AI Act)

https://digital-strategy.ec.europa.eu/en/policies/european-approach-artificial-intelligence