When Smart Becomes Suspect: How AI Use at Work Can Damage Your Professional Reputation

Artificial Intelligence (AI) has rapidly transitioned from a peripheral innovation to a central force reshaping the modern workplace. From generative language models assisting with content creation to machine learning algorithms optimizing workflows and decision-making, AI is redefining what productivity looks like across sectors. For knowledge workers, particularly those in white-collar professions such as marketing, software engineering, finance, and consulting, AI tools like ChatGPT, GitHub Copilot, Grammarly, and Midjourney are becoming as familiar as email and spreadsheets. These tools promise efficiency, creativity, and a competitive edge. Yet beneath this surge in digital augmentation lies an underexplored dilemma: while AI can enhance performance and output, its use may simultaneously undermine professional credibility and damage reputations.

This paradox—the simultaneous enhancement and erosion of perceived value—has created a new layer of complexity in how employees are evaluated. In environments where performance metrics emphasize outcomes, AI tools provide undeniable benefits. Automated emails, pitch decks, reports, and even code snippets can now be produced at record speeds. However, as the line between human-generated and machine-generated content continues to blur, employees risk being viewed as overly dependent on machines, or worse, as dishonest or unskilled. The irony is stark: a well-written report or presentation produced with AI assistance may attract suspicion rather than praise, especially if it surpasses expectations without clear attribution.

In recent years, workplace culture has begun to grapple with the social implications of AI use. While employers eagerly adopt AI to boost productivity and lower costs, they often fail to establish clear guidelines for ethical and transparent usage. This vacuum has left employees navigating uncertain terrain, unsure whether disclosing AI assistance will be viewed as responsible transparency or a confession of inadequacy. Consequently, workers must walk a fine line between leveraging technology and preserving the perception of personal expertise. The situation becomes particularly precarious in collaborative settings, where credit attribution matters and peer evaluation can influence advancement.

Moreover, professional reputation is not built on results alone; it is cultivated through demonstrations of insight, originality, diligence, and interpersonal trust. When AI takes over portions of these functions—such as generating ideas, drafting reports, or even summarizing meetings—colleagues may question whether an individual still possesses the skills and knowledge traditionally associated with those tasks. As AI continues to displace not just tasks but the visible signs of effort and craftsmanship, professionals may find it harder to distinguish themselves, leading to diluted personal branding and stunted career growth.

This blog post will explore the nuanced ways in which AI use in the workplace can harm professional reputation. It will begin with a look at the growing adoption of AI-driven workflows and the promises they offer. It will then delve into specific reputation risks, including perceptions of dishonesty, loss of authenticity, and the erosion of skill-based value. The post will also examine how organizational dynamics, including managerial attitudes and role-based power structures, influence how AI use is interpreted. Finally, it will offer concrete strategies for mitigating these risks—ensuring that AI augments human performance without displacing the professional integrity that careers are built upon.

In doing so, this analysis aims to contribute to a more informed conversation about the ethical, social, and reputational dimensions of AI in the workplace. As the tools we use evolve, so too must the ways we evaluate contribution, trustworthiness, and merit. In the age of AI, safeguarding one’s professional image may no longer be just about working hard or delivering results—it may require a deeper understanding of how to balance innovation with integrity.

The Rise of AI-Driven Workflows: Efficiency with a Hidden Cost

The integration of artificial intelligence into workplace operations has been nothing short of transformative. Over the past five years, AI-driven workflows have moved from experimental tools into standard business practices across a wide range of industries. From corporate boardrooms to creative agencies and IT departments, AI systems are now routinely embedded in productivity suites, communication tools, customer service platforms, and software development environments. The appeal is obvious: AI offers unmatched speed, scalability, and convenience. However, while these benefits have catalyzed a rapid uptick in adoption, they also obscure a growing set of hidden costs—particularly in how AI usage may compromise the perceived value of human effort.

AI Tools Reshaping Workplace Practices

The types of AI tools now commonly used in workplaces can be broadly categorized into generative and predictive systems. Generative tools, such as OpenAI's ChatGPT, Anthropic's Claude, or Jasper, specialize in content creation, including emails, articles, presentations, and technical documentation. Visual design tools like Midjourney and DALL·E allow employees to generate high-quality images or marketing assets in seconds. Coding assistants such as GitHub Copilot or Tabnine can generate working code from brief prompts or partial snippets, though the output still requires human review. Predictive systems, on the other hand, include recommendation engines, forecasting models, and analytics dashboards that support business decision-making and operational planning.

These tools are often integrated into existing platforms such as Microsoft 365, Google Workspace, Notion, and Salesforce, enabling users to access AI features seamlessly within their familiar work environments. Many organizations report substantial efficiency gains: some tasks that once took hours or even days can now be completed in minutes, reducing labor costs and boosting productivity. For businesses focused on maximizing output and minimizing overhead, this development represents a strategic breakthrough.

Automation of Cognitive Labor

The unique aspect of this AI transformation is that it primarily targets cognitive labor rather than manual labor. Unlike previous waves of automation that impacted factory work and logistical operations, the current wave automates knowledge-intensive tasks: writing, researching, planning, designing, and coding. In doing so, it redefines what constitutes valuable work in the modern office. AI can generate reports, answer customer queries, summarize meetings, or write detailed analyses—all activities once considered to be exclusively within the human domain.

As AI begins to match or exceed average human output on a growing range of tasks, in both quality and speed, organizations are reconfiguring performance metrics. Instead of evaluating employees on the basis of time spent or process rigor, the focus is now on end results. Deliverables become decontextualized: produced more quickly and perhaps more elegantly than ever, but stripped of the visible labor that traditionally signals diligence, originality, and expertise. While managers may appreciate the faster turnaround, they may also begin to question the actual contribution of the individual, especially in environments where oversight of the AI’s role is limited.

Unintended Effects on Employee Identity

This shift poses a significant challenge to workers' professional identities. Many derive self-worth from the quality of their work, the effort it requires, and the recognition it garners. As AI tools assume more responsibility for output generation, employees may begin to feel like editors or orchestrators rather than creators. Their role changes from producing knowledge to managing its production, which can result in diminished job satisfaction, skill atrophy, and alienation from one's craft.

Moreover, there is a growing concern that reliance on AI tools may be perceived not as a strategic enhancement but as a crutch. Even in cases where AI use is encouraged or explicitly approved by an employer, there remains an underlying tension: to what extent is the work truly yours? This becomes particularly fraught in performance reviews, promotion cycles, or peer recognition events, where attribution plays a critical role. If colleagues or managers suspect that an individual’s impressive outputs are heavily AI-assisted, the perceived value of those contributions may decline, regardless of actual impact.

Employer Expectations and Normalized Dependence

The normalization of AI assistance also introduces a paradoxical expectation loop. As more employees use AI to meet rising productivity standards, those who do not may be penalized for slower or less polished results, while those who openly disclose their use may be judged for relying on it. In such settings, AI becomes not an optional enhancer but a de facto requirement. Workers must now compete not only with their peers but with their peers' augmented capacities. Ironically, the very tools designed to ease workloads often lead to heightened performance expectations and intensified workplace pressure.

This is compounded by the lack of transparency around AI usage. Most organizations have yet to implement formal policies governing disclosure, attribution, or ethical boundaries for AI-generated work. The absence of such policies leaves workers in a gray zone, unsure whether to disclose their reliance on AI tools or to present the work as entirely their own. Some may overuse AI out of fear of falling behind; others may avoid it to preserve authenticity, thereby placing themselves at a disadvantage.

Reputational Tensions in Collaborative Workflows

In collaborative teams, the reputational risks of AI use become even more pronounced. Joint projects often involve shared authorship and distributed responsibilities, and yet AI-generated contributions can blur the lines of who did what. If one team member relies heavily on AI to complete their tasks while others contribute manually, the group dynamic may shift. Resentment can arise, and perceptions of inequity may grow. In the long run, this undermines trust and cohesiveness within teams, especially if management fails to recognize and appropriately credit human contributions.

These reputational tensions are particularly acute in creative, strategic, and client-facing roles. A marketing strategist who consistently delivers perfectly phrased campaigns may arouse skepticism if peers suspect AI involvement. A business analyst who produces comprehensive forecasts without deep domain experience may be perceived as leveraging tools over knowledge. While the outputs remain valuable, the professionals behind them may struggle to maintain credibility if AI is seen as doing the intellectual heavy lifting.

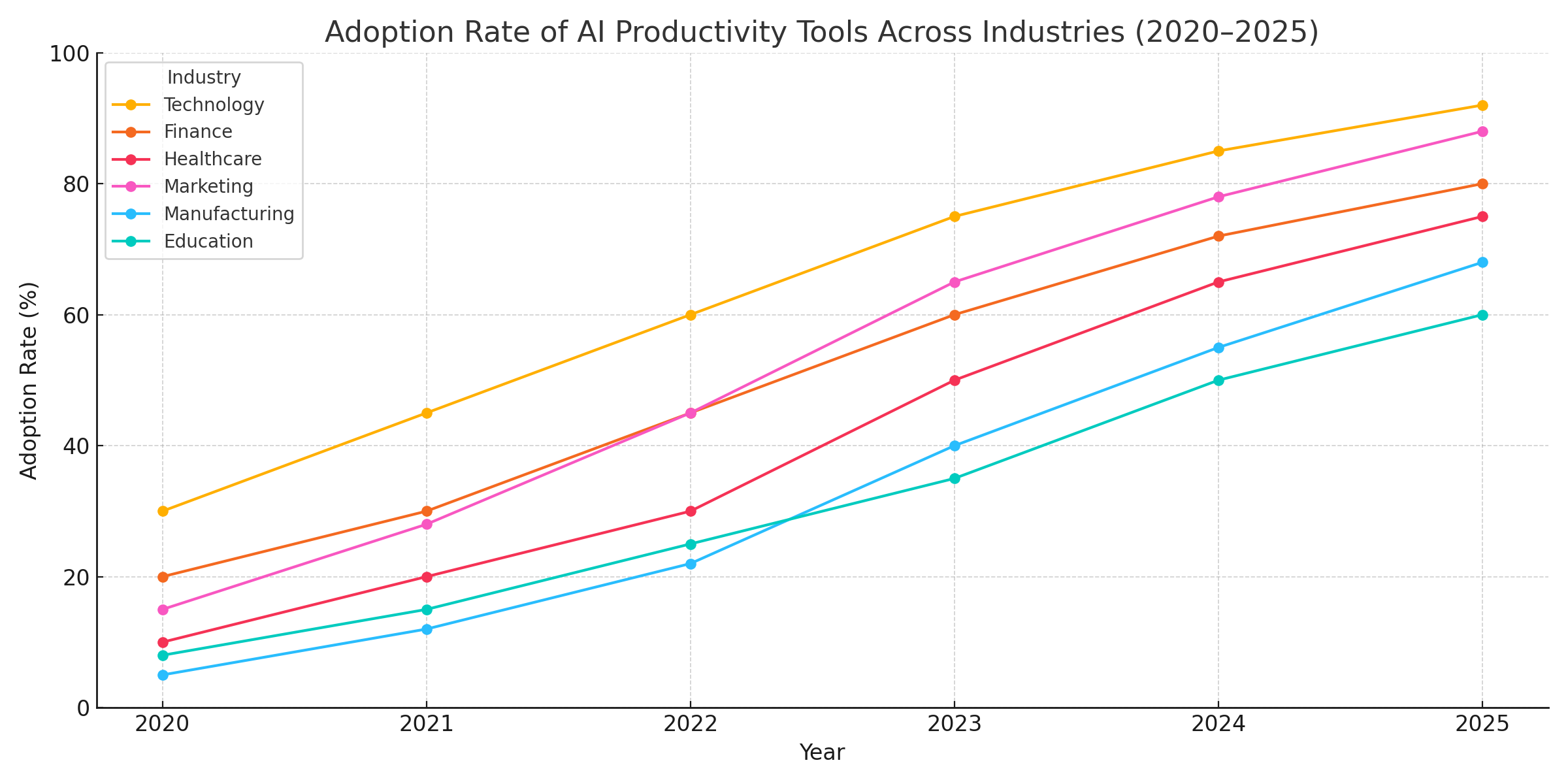

To illustrate the speed and breadth of AI integration, consider the chart above, which shows the percentage of companies across various sectors that have adopted AI productivity tools from 2020 to 2025. The rapid rise demonstrates not only AI’s growing ubiquity but also its normalization as part of routine workflows.

This data underscores how AI has transitioned from a competitive edge to a basic workplace utility. However, with this normalization comes increased scrutiny. The question is no longer whether you use AI—but how you use it, and how that usage reflects on your professional character.

A Complex Trade-Off

Ultimately, the rise of AI-driven workflows presents a complex trade-off between speed and substance, output and authorship, convenience and credibility. While these tools unlock new forms of efficiency, they also redefine how work is judged, who receives credit, and what it means to be a professional in the AI era. Workers must not only master these technologies but also navigate the nuanced reputational risks they carry. As the next section will show, these risks are not theoretical—they are already manifesting in real-world scenarios, reshaping the career trajectories of employees across the corporate landscape.

Reputation Risks: When AI Use Backfires on Employees

While artificial intelligence tools have been widely embraced for enhancing efficiency and output, they are increasingly becoming a double-edged sword for employees. What is often marketed as an asset to productivity and innovation can, in practice, damage professional reputation when used without careful consideration. Employees who rely on AI may find themselves scrutinized not only for the quality of their deliverables but also for the authenticity and originality behind them. The reputational risks associated with workplace AI use are multifaceted—ranging from being perceived as lazy or dishonest to being undervalued or even replaced.

The “Lazy Worker” Stigma

One of the most immediate and damaging perceptions associated with AI use in the workplace is the notion that it enables laziness. Employees who use AI to assist with writing reports, generating designs, or completing coding tasks may be viewed as shirking responsibility rather than working smart. This stigma is especially pronounced in traditional corporate cultures that value visible effort, long hours, and hands-on craftsmanship. In such environments, AI-generated work—even if high-quality—can be seen as taking shortcuts.

This perception can be particularly damaging when AI-generated content significantly exceeds expectations. While a polished proposal or a beautifully designed pitch deck may draw praise initially, questions often follow. Colleagues may wonder whether the output is the result of deep insight or simply the push of a button. This skepticism can undercut the employee's perceived competence and raise doubts about their underlying skills, especially if the individual cannot confidently explain or defend the work produced.

Attribution and Ownership Confusion

The issue of ownership is central to workplace trust and credibility. When employees present work that has been heavily supported by AI, the question arises: who should receive credit? AI tools are capable of producing complete paragraphs, strategies, and even diagrams with minimal human intervention. If such work is presented without clear attribution, it may lead to unintentional misrepresentation, causing reputational harm when discovered.

This is not merely a hypothetical concern. There have been instances where employees have lost credibility—or worse, faced disciplinary action—after it was discovered that their deliverables were AI-generated and passed off as original. While most companies lack clear policies on disclosure, the implicit expectation remains that employees should contribute their unique expertise and effort. Any deviation from this, particularly when hidden, risks being interpreted as deceitful.

Ethical Ambiguity and the Question of Integrity

The lack of consensus on ethical norms around AI use further complicates the reputational landscape. In the absence of formal guidelines, employees are left to make subjective decisions about when and how to disclose AI involvement. This ambiguity creates vulnerability, as judgments about what is "acceptable" AI usage often vary across departments, managers, and peer groups.

For example, a junior analyst who uses ChatGPT to draft a performance review summary may view it as a time-saving aid. However, if a senior manager expects original wording or deeper personal reflection, the use of AI may be seen as a lack of engagement or sincerity. Similarly, a marketing executive who employs Midjourney to design campaign visuals may impress clients initially but face criticism from internal design teams for bypassing human collaboration. In each case, the reputational fallout stems not from the quality of the work itself, but from the misalignment of expectations and values.

Undermining Peer Relationships

Reputational risks also extend to team dynamics. In collaborative environments, trust and accountability are essential for group cohesion. When AI is used unevenly within teams, it can lead to resentment and perceptions of unfairness. For example, if one team member uses AI to draft their portion of a project while others contribute manually, the disparity in effort can breed friction—even if the AI-generated section is technically sound.

These tensions can result in a breakdown of collaboration and even passive exclusion. Colleagues may become less likely to assign critical tasks or trust the judgment of the AI-using team member, fearing that future contributions may lack human depth or originality. In this way, reliance on AI tools can marginalize an employee within their own team, leading to subtle but impactful reputational damage.

Public Exposure and Viral Backlash

In certain cases, AI misuse or overuse has become a public affair, resulting in broader reputational harm. Social media platforms are rife with examples of professionals being called out for submitting AI-generated content without proper review or oversight. Whether it is an academic submitting an AI-written abstract, a journalist caught using ChatGPT without fact-checking, or a designer passing off AI-generated images as original work, such incidents can escalate quickly, resulting in embarrassment, retraction, or termination.

In highly competitive industries such as tech, academia, and media, public exposure of inappropriate AI use can tarnish a career. The reputational impact extends beyond the individual incident, casting long shadows over future job prospects, client relationships, and credibility as a subject matter expert.

Case Studies: Real-World Reputational Fallout

Several real-world examples illustrate how AI use has backfired professionally:

- A junior software engineer at a major tech firm was discovered to have submitted code largely written by GitHub Copilot without thorough testing. When bugs emerged during deployment, the engineer was reprimanded not for the error itself, but for failing to understand or validate the AI-generated logic—resulting in a reputational hit and delayed promotion.

- A financial analyst at a global consultancy used an AI tool to generate a client report. While the report appeared polished, it contained several outdated references and unsupported assertions. The client raised concerns, prompting an internal audit that revealed the use of generative AI. The analyst’s judgment was called into question, leading to reassignment and loss of trust from colleagues.

- A marketing executive published an entire blog post generated by an AI model, which inadvertently replicated a paragraph from an existing online source. Accusations of plagiarism followed, damaging the individual’s reputation and forcing the company to issue a public clarification.

These examples underscore the delicate nature of professional reputation in the age of AI. Even when intentions are benign, the consequences of careless or undisclosed AI use can be severe.

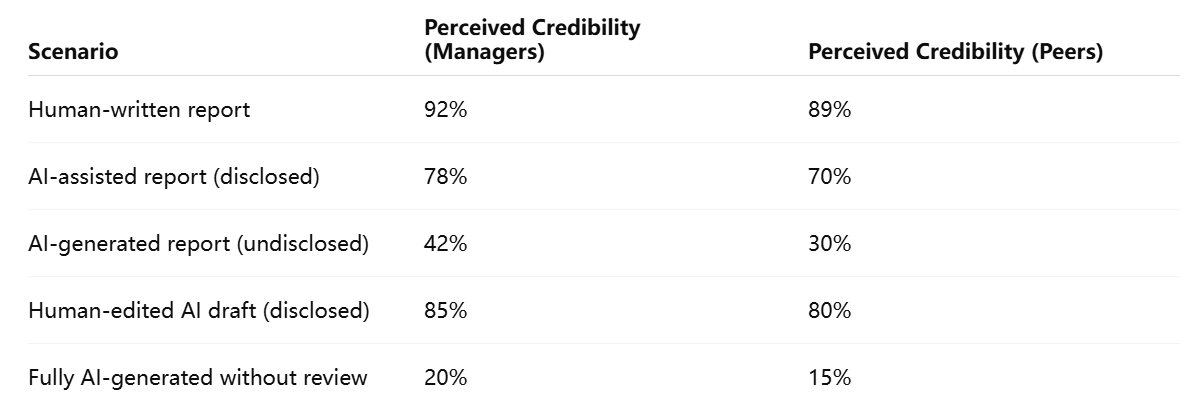

The table above summarizes survey results from a study of 500 employees and 200 managers across various industries, comparing perceptions of credibility based on disclosed AI use.

This data highlights a critical insight: transparency significantly mitigates reputational risk, while concealment or overreliance without verification dramatically erodes credibility.

Navigating the Risk Landscape

As AI tools become more embedded in everyday workflows, it is imperative that employees consider not only what these tools can do, but also how their use will be perceived. The workplace is a social ecosystem in which reputation is shaped by more than results—it is molded by authenticity, trust, consistency, and ethical conduct. The challenge is not merely to use AI effectively, but to do so in a manner that maintains professional integrity and aligns with evolving norms.

Organizational Dynamics: AI Use, Power Structures, and Accountability

The introduction of artificial intelligence into the workplace does not occur in a vacuum. Rather, its integration is deeply influenced by the organizational context in which it operates. Power structures, managerial expectations, corporate culture, and policy—or the lack thereof—all shape how AI usage is perceived and regulated. The implications of these dynamics are profound: depending on their position within the hierarchy, some employees may be rewarded for AI use, while others are penalized. This uneven terrain creates reputational risk asymmetries, wherein AI adoption can either elevate or diminish one’s professional standing based not on merit, but on role, visibility, and influence.

Hierarchical Divide in AI Privilege

One of the most salient features of organizational AI use is the hierarchical divide in how it is perceived and tolerated. Senior leaders and executives often enjoy greater freedom and discretion in leveraging AI tools. They are typically evaluated based on strategic outcomes, vision, and delegation, rather than the minutiae of their daily tasks. As such, an executive using AI to generate presentations, analyze data, or draft memos is often seen as forward-thinking or efficient.

Conversely, junior employees are more closely scrutinized for how they complete their tasks. Their work is typically more granular, execution-oriented, and subject to review. When junior staff rely on AI for routine deliverables, their use of automation may be interpreted as a lack of initiative or competence. This disparity is not always explicit, but it is reinforced through feedback mechanisms, performance reviews, and informal peer assessments.

Such uneven treatment fosters an environment where the acceptability of AI use rises with one’s position in the organizational hierarchy, while scrutiny falls. The result is reputational fragility among those least empowered to protect themselves, further entrenching workplace inequalities.

The Role of Managers: Enablers or Gatekeepers?

Managers play a critical role in shaping perceptions of AI use within teams. Their attitudes—ranging from enthusiastic adoption to cautious skepticism—can determine whether AI-assisted work is viewed as innovative or corner-cutting. Unfortunately, many managers lack formal training or policy guidance on how to evaluate AI-generated contributions. As a result, their judgments are often based on personal bias, prior experience, or anecdotal understanding.

Some managers embrace AI as a performance enhancer and actively encourage their teams to adopt relevant tools. These leaders often prioritize results over process and are more likely to reward efficiency, even if it is AI-enabled. Others remain suspicious, concerned that employees may over-rely on technology or produce content they do not fully understand. In these cases, AI use may be discouraged, and its disclosure treated with wariness.

This inconsistency creates a fragmented workplace landscape where employees must tailor their AI usage strategies not to organizational policy, but to the idiosyncrasies of their direct supervisors. Such unpredictability adds an additional layer of reputational risk: the same behavior that earns praise in one department may attract criticism in another.

Policy Gaps and the Risks of Informality

Despite the widespread adoption of AI in daily workflows, most organizations have yet to establish comprehensive policies governing its use. Where guidelines exist at all, they tend to focus on data privacy, intellectual property, or legal compliance, areas concerned more with risk mitigation than with professional conduct. Rarely do these policies address key questions that affect employee reputation:

- When is AI usage acceptable, and when is it not?

- What level of disclosure is required?

- How should attribution be handled in team settings?

- What are the expectations for review and validation of AI-generated content?

In the absence of clear standards, employees must rely on informal cues—such as managerial tone, team culture, or peer behavior—to navigate AI use. This ambiguity exposes them to reputational risk, especially if their judgment diverges from that of their evaluators. Moreover, it leaves room for accusations of inconsistency or favoritism, eroding trust in leadership and organizational fairness.

Power and Attribution in Team Environments

In collaborative environments, attribution becomes a critical issue. When multiple team members contribute to a shared deliverable, the use of AI by one individual can skew perceptions of effort and value. If a team leader presents a well-crafted proposal that includes AI-generated content, they are often credited with strategic insight. Subordinates who contributed manually may receive less recognition, even if their inputs were more time-intensive or technically rigorous.

This dynamic highlights how power structures influence the reputational outcomes of AI use. Those with greater visibility or authority are more likely to benefit from AI-assisted work, while others risk being overshadowed or undervalued. In extreme cases, AI-assisted work by subordinates may even be co-opted by superiors, with AI-generated insights presented as the personal thought leadership of those higher up the chain.

These scenarios underscore the importance of transparent attribution practices, especially in environments that rely heavily on AI for content generation and analysis. Without them, workplace politics can distort the value of AI use, compounding reputational inequities.

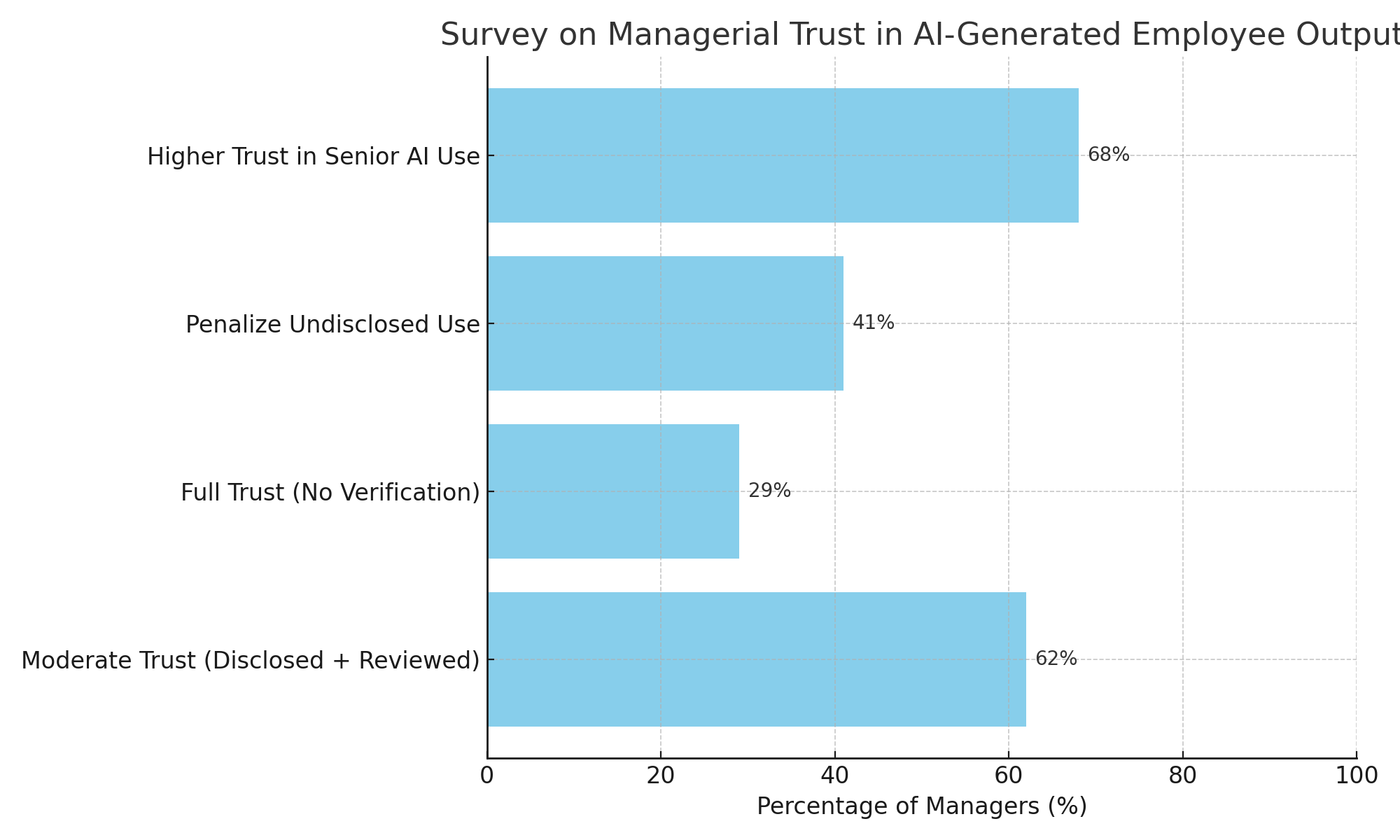

To better understand the organizational implications of AI use, a recent cross-industry survey asked 300 mid-level and senior managers how much trust they place in employee work that involves AI assistance.

The findings reveal significant variance:

- 62% of managers expressed moderate trust in AI-assisted work when disclosed and reviewed.

- Only 29% fully trusted such work without additional human verification.

- 41% admitted they would penalize undisclosed AI use if discovered post-submission.

- Trust levels were significantly higher for senior-level AI users than for junior staff.

These data points suggest that AI use is not evaluated solely on its merits, but is also filtered through the lens of trust, hierarchy, and role-based expectations.

Institutionalizing Ethical AI Use

To mitigate the reputational volatility introduced by these dynamics, organizations must move toward institutionalizing ethical AI practices. This includes developing and enforcing policies that clarify acceptable use, promote transparency, and support equitable attribution. Key components should include:

- Disclosure Requirements: Encouraging or mandating that employees specify when and how AI tools are used in deliverables.

- Review Standards: Establishing expectations for human oversight and validation of AI-generated content.

- Attribution Protocols: Clarifying how AI-assisted work is credited in individual and team contexts.

- Training and Awareness: Providing managers and employees with guidance on evaluating AI-enhanced outputs fairly.

Such measures not only protect reputations but also promote a culture of accountability and integrity. They help ensure that AI is used to elevate—not replace—human expertise and effort.

Navigating the Structural Terrain

As AI continues to reshape workflows, understanding its interaction with organizational dynamics is essential. Employees must not only be adept at using AI tools but also sensitive to the reputational implications of doing so within their specific organizational context. Likewise, leaders must recognize that unmanaged AI use can create hidden power imbalances, threaten fairness, and undermine team cohesion.

Mitigating Harm: Strategies for Responsible AI Use and Reputation Management

As artificial intelligence becomes an entrenched part of daily work, professionals must not only learn how to harness its capabilities but also develop safeguards to protect their reputations. The risks explored in previous sections—ranging from perceptions of dishonesty to imbalances in team attribution—underscore the importance of adopting a proactive and strategic approach to AI integration. This section outlines a set of actionable strategies that individuals and organizations can employ to ensure responsible AI use, protect credibility, and maintain a professional reputation grounded in transparency, competence, and trust.

Embracing Transparency in AI-Assisted Work

The foundation of any responsible AI use strategy lies in disclosure. Transparency fosters trust and signals ethical integrity. When employees openly communicate their use of AI tools in creating content, conducting analysis, or performing other job functions, they pre-empt suspicion and establish themselves as both honest and technologically adept.

This disclosure does not need to undermine the value of one’s contributions. On the contrary, articulating how AI tools were applied—along with how results were verified and refined—can enhance credibility. For instance, stating that an executive summary was initially drafted using ChatGPT but then edited for tone, accuracy, and clarity signals both efficiency and editorial judgment.

To support this, professionals can develop standard language for AI usage notes. Examples include:

- “This report was drafted with the assistance of generative AI and has been reviewed for accuracy and relevance.”

- “Visuals were generated using AI design tools and customized to meet brand guidelines.”

Such phrasing demystifies the process and helps normalize responsible AI integration across teams.

Demonstrating Human Oversight and Value

Disclosure alone is insufficient without clear indications of human input. One of the central fears among colleagues and supervisors is that AI will supplant, rather than support, critical thinking and expertise. To counter this perception, professionals must emphasize the uniquely human elements of their work—analysis, synthesis, decision-making, contextualization, and creativity.

When presenting work that involved AI assistance, employees should be prepared to explain their rationale, adjustments, and interpretation. For example, a data analyst using an AI model to forecast revenue should be able to discuss assumptions, anomalies, and limitations of the output. A marketer who used Midjourney to design visuals should explain how image prompts were crafted and how brand alignment was achieved.

This demonstration of oversight distinguishes responsible use from blind reliance. It reassures colleagues and supervisors that the employee is still the primary agent behind the work, thus preserving professional identity and reputation.

Setting Boundaries and Choosing Use Cases Wisely

Responsible AI use also involves knowing when not to use AI. Not all tasks benefit from automation, and some may carry reputational risks that outweigh the time saved. Professionals should avoid using AI in situations that require nuanced interpersonal judgment, high levels of confidentiality, or subject matter expertise they do not possess.

Tasks such as writing performance reviews, drafting legal documents, or communicating sensitive information are particularly risky. Even if AI can generate grammatically correct content, the subtleties involved often require human empathy, ethics, and experience. Missteps in these areas can damage internal relationships and trigger questions about an individual’s judgment and integrity.

Establishing personal criteria for AI use—based on task type, audience, and consequences—can help avoid unintentional harm. A simple rule of thumb might be: If the outcome reflects directly on your character, credibility, or competence, keep the human in the loop.

Building a Personal Brand that Includes AI Literacy

Rather than concealing AI use, professionals should consider making AI literacy part of their personal brand. As companies continue to integrate these tools, those who can use them effectively and ethically will become increasingly valuable. Demonstrating AI literacy can take many forms:

- Offering internal workshops on prompt engineering or AI ethics

- Publishing best practices on responsible AI use within teams

- Participating in AI governance or advisory committees

- Sharing lessons learned from using AI in client work or internal projects

By positioning oneself as a thought leader or responsible innovator, an employee shifts the narrative from dependence to expertise. This not only bolsters credibility but also makes the individual an asset in navigating the organization's technological evolution.

Advocating for Organizational Guidelines

Professionals can also protect their reputations by helping shape the policies that govern AI use. Many reputational risks stem from ambiguity: employees do not know what is expected of them or how their actions will be interpreted. Contributing to the development of internal AI policies can help clarify norms and reduce subjective judgments.

Key elements to advocate for include:

- Disclosure Protocols: Defining when and how AI use should be communicated.

- Attribution Guidelines: Establishing rules for crediting AI-generated content in collaborative settings.

- Review Standards: Outlining expectations for human oversight, especially in client-facing or high-stakes deliverables.

- Training Programs: Providing all employees, including managers, with the tools to understand and evaluate AI outputs appropriately.

By helping set the standards, professionals contribute to a more consistent, fair, and accountable workplace culture—ultimately benefiting their own and others’ reputations.

Navigating Reputational Recovery

In cases where reputational damage has already occurred—whether due to undisclosed AI use, errors in AI-generated content, or misaligned expectations—professionals can take steps to recover trust. The process requires accountability, learning, and communication.

First, acknowledge the misstep without deflecting blame onto the technology. Admitting an oversight openly signals maturity and accountability. Next, explain what has been learned and how processes will change going forward. For example, committing to review all AI-generated content before submission, or taking training in prompt writing, demonstrates a growth mindset.

Finally, reinforce your value by emphasizing areas of expertise that AI cannot replicate: strategic insight, ethical judgment, client rapport, and institutional knowledge. Over time, consistent delivery of high-quality, well-vetted work will help rebuild credibility and restore trust.

The Role of Leadership in Shaping Responsible AI Cultures

While individual strategies are essential, organizations bear the ultimate responsibility for fostering an environment where AI use enhances rather than undermines reputation. Leadership must set the tone by modeling responsible behavior, rewarding ethical innovation, and discouraging both reckless dependence and punitive scrutiny.

Organizations should also invest in AI competency frameworks that delineate expected skills, risks, and evaluation criteria at different levels of the organization. These frameworks help ensure that employees are trained not only to use AI but also to navigate its professional implications with care and confidence.

A New Standard of Professionalism

The rise of AI marks a paradigm shift in how work is performed, but it also demands a new standard of professionalism—one rooted in integrity, adaptability, and accountability. As AI becomes increasingly integral to the workplace, professionals must evolve from mere users of technology to stewards of its impact on their work, their teams, and their reputations.

By embracing transparency, exercising discernment, investing in personal development, and shaping institutional norms, individuals can ensure that AI remains a tool for empowerment—not a risk to their credibility.

Balancing Innovation with Integrity

The integration of artificial intelligence into the modern workplace has irrevocably altered how work is conceptualized, executed, and evaluated. From streamlining complex tasks to enhancing creative processes, AI tools have become vital instruments of efficiency and innovation. However, this transformation has brought with it a new and complex dimension: the reputational risks associated with AI use. As employees increasingly rely on generative and predictive systems to augment their capabilities, questions surrounding authenticity, ownership, and professional integrity have come to the fore.

The reputational landscape in this AI-augmented era is riddled with asymmetries. Not all employees are judged equally for similar behaviors; hierarchical roles, organizational cultures, and the presence or absence of managerial trust significantly shape perceptions. Junior professionals may be penalized for the same practices that senior leaders are praised for. Teams may become fractured by inconsistent attribution practices. In the absence of clear policies, reputational harm becomes an ambient risk—one that employees must constantly navigate, often without guidance.

Yet, this challenge also presents an opportunity. By embracing transparency, applying discernment in AI use, and advocating for ethical standards, professionals can take control of the narrative. Responsible AI use is not just about protecting one’s image; it is about reaffirming one’s value in a workplace where technology accelerates output but cannot replicate judgment, empathy, or strategic insight. When properly integrated, AI has the potential to enhance—not erode—reputation, provided it is paired with a commitment to accountability and contextual awareness.

Organizations, too, must recognize their role in mitigating reputational risks. Developing formal policies, offering training, and fostering a culture of ethical innovation are not optional; they are essential to safeguarding fairness and trust. Without such structures, the burden falls unfairly on individuals, creating disparities and damaging morale.

As the workplace continues to evolve, the most respected professionals will not be those who avoid AI, nor those who blindly embrace it. Instead, they will be those who wield it with discernment, transparency, and an unwavering commitment to ethical standards. In this balancing act between innovation and integrity lies the future of credible, responsible, and resilient professional reputation.
