Spotify’s AI DJ Gets a Voice: How Voice Commands Are Revolutionizing Music Personalization

In the rapidly evolving landscape of digital entertainment, personalization has become the linchpin of user satisfaction and retention. Nowhere is this more apparent than in the domain of music streaming, where listeners increasingly demand experiences tailored not only to their tastes but also to their moods, contexts, and even spoken requests. Among the industry leaders, Spotify has long positioned itself at the forefront of this trend, consistently innovating through the deployment of advanced recommendation algorithms and dynamic user interfaces. The company’s latest offering, a powerful extension of its AI DJ feature with real-time voice command capabilities, marks a significant leap toward an even more immersive and intuitive listening experience.

Launched initially as a beta feature, Spotify’s AI DJ was introduced to curate personalized listening sessions using artificial intelligence and generative voice technology. By analyzing user behavior—such as skip rates, replay patterns, time of day, and genre preferences—the AI DJ constructs seamless playlists infused with commentary delivered in a synthetic yet natural voice. This move represented a meaningful fusion of machine learning and digital performance, aimed at mimicking the conversational tone of human radio hosts while leveraging data-driven curation on a granular level. However, the initial iteration of the AI DJ was largely a one-way interaction, functioning more like a smart narrator than a truly interactive system.

That paradigm is now shifting. Spotify’s announcement of voice command integration with the AI DJ function fundamentally transforms the user’s relationship with the music curation process. No longer confined to passive consumption or manual interaction through taps and swipes, users can now vocally instruct their AI DJ to modify playlists, adjust moods, explore different genres, or skip tracks entirely—all in real time. The result is a significant reduction in friction between user intent and musical output, and a deeper sense of control over the auditory experience.

This development is not only an enhancement in terms of usability but also a strategic alignment with broader trends in technology. As voice interfaces become more prevalent—from smart home devices to in-car infotainment systems—Spotify’s move to embrace voice interaction signals its readiness to embed more deeply into users’ daily routines. Moreover, it opens new frontiers for inclusive design, enabling users with visual impairments, mobility issues, or screen fatigue to engage with their music libraries in a more accessible manner.

From a technical perspective, the addition of voice commands introduces complex challenges in natural language processing (NLP), contextual interpretation, and latency optimization. Spotify must ensure that its systems can comprehend a diverse array of speech patterns, accents, and colloquialisms, all while maintaining a seamless playback experience. This calls for not only robust NLP models but also adaptive learning mechanisms that evolve with each user interaction. It also raises important questions about privacy and data governance, particularly regarding how voice data is stored, analyzed, and used to improve personalization.

From a business standpoint, the voice-enabled AI DJ positions Spotify to better compete against other streaming platforms that have integrated voice features, such as Apple Music with Siri, Amazon Music with Alexa, and YouTube Music with Google Assistant. However, the uniqueness of Spotify’s implementation lies in its combination of proactive commentary, dynamic playlisting, and now reactive voice input—all synthesized into a cohesive user experience. This positions the company not only as a streaming service but as a personalized, interactive media companion.

This post explores the full scope of Spotify’s AI DJ voice command functionality, from its technical underpinnings and real-world applications to its implications for the broader music and AI ecosystems. We will delve into how the system works, the impact it is already having on user engagement, and the potential it holds for reshaping the future of music consumption. Through detailed analysis supported by charts and comparative tables, it aims to offer a comprehensive view of one of the most significant updates in the music streaming world to date.

Let us begin by examining how Spotify’s AI DJ operates behind the scenes, and how it uses algorithmic intelligence to tailor music experiences with impressive precision.

How Spotify’s AI DJ Works – Behind the Scenes of Algorithmic Curation

Spotify's AI DJ is more than a mere playlist generator—it is a sophisticated, multi-layered system that exemplifies the intersection of artificial intelligence, behavioral analytics, and natural language processing. At its core, the AI DJ seeks to replicate and surpass the human DJ experience by providing personalized music streams that are contextually relevant, dynamically adaptive, and infused with an engaging voice-based narrative. Understanding how this digital entity functions requires an exploration of the underlying technologies that drive it and the principles guiding its design.

The Building Blocks: Data-Driven Personalization

Spotify’s recommendation engine has long been renowned for its ability to deliver tailored listening experiences. With the AI DJ, the company expands on this capability by introducing a real-time, voice-driven layer on top of its core personalization architecture. This architecture primarily relies on three building blocks:

- User Behavioral Data: This includes skip rates, track replays, listening duration, search queries, playlist creations, and device types. These signals help the system understand user preferences in granular detail.

- Contextual Metadata: Spotify tracks variables such as the time of day, geographic location, user activity (e.g., exercising, commuting), and even weather conditions to refine the mood and theme of the curated content.

- Collaborative Filtering and Content-Based Filtering: The AI DJ incorporates machine learning models that use both collaborative filtering (suggestions based on user similarity) and content-based filtering (recommendations based on track features like tempo, key, and instrumentation).

These data streams feed into Spotify’s recommendation algorithm, which continuously evaluates a user’s evolving preferences and adapts playback queues accordingly.
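To make the blend of collaborative and content-based filtering concrete, here is a minimal Python sketch. It is not Spotify’s implementation: the function names, the `peer_play_rate` field, and the weighting parameter `alpha` are all assumptions chosen for illustration. The content-based score is the cosine similarity between a user’s taste vector and a track’s feature vector, and the collaborative score is stood in for by how often similar users played the track.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = sqrt(sum(x * x for x in a)) * sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def hybrid_score(user_taste, track_features, peer_play_rate, alpha=0.5):
    """Blend a collaborative signal with a content-based signal.

    alpha (hypothetical) weights the collaborative part; 1 - alpha
    weights the content-based part.
    """
    content = cosine(user_taste, track_features)
    return alpha * peer_play_rate + (1 - alpha) * content


def rank_tracks(user_taste, candidates, alpha=0.5):
    """Return candidate titles ordered from best to worst hybrid score."""
    scored = [
        (hybrid_score(user_taste, c["features"], c["peer_play_rate"], alpha), c["title"])
        for c in candidates
    ]
    return [title for _, title in sorted(scored, reverse=True)]
```

Raising `alpha` toward 1 makes the ranking follow what similar listeners play, while lowering it toward 0 favors tracks whose audio features match the user’s own history.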

AI Narration: The Synthetic DJ Voice

What sets the AI DJ apart from traditional recommendation engines is its voice-based interface. The synthetic voice, built on generative voice technology that came to Spotify through its acquisition of Sonantic and combined with generative AI from OpenAI, serves as a virtual host that introduces songs, provides commentary, and responds to user preferences. This feature humanizes the experience, offering spoken transitions and mini-narratives that evoke the feeling of listening to a live radio DJ.

To achieve this, Spotify employs a Text-to-Speech (TTS) system built on deep learning frameworks, capable of generating realistic speech with nuanced intonations and expressions. The AI DJ synthesizes commentary in real time using contextual cues. For example, if a user has been listening to melancholic tracks on a rainy evening, the AI DJ might say, “We’re keeping it mellow tonight—perfect vibes for a rainy Tuesday,” before introducing a track by Bon Iver or Phoebe Bridgers. This personalized scripting is generated dynamically through natural language generation (NLG) techniques and then rendered via the TTS engine.

The Role of Natural Language Understanding and Real-Time Learning

Although the initial versions of the AI DJ were non-interactive, Spotify engineered the system with adaptability in mind. Through Natural Language Understanding (NLU) modules, the AI DJ parses data not just from behavior but increasingly from explicit feedback and spoken commands (a feature we will expand upon in the next section). This ability allows the DJ to learn from user corrections, skips, and pauses, adjusting its tone, genre mix, or commentary style in response.

This real-time learning loop is essential to the DJ’s success. Spotify’s underlying architecture uses reinforcement learning models that reward successful predictions (e.g., songs that are played in full or added to playlists) and penalize unsuccessful ones (e.g., tracks skipped within the first few seconds). Over time, the system becomes more attuned to subtle shifts in user taste, from seasonal genre changes to sudden shifts in listening habits.
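The reward-and-penalty idea can be illustrated with a much simpler stand-in for full reinforcement learning: a bandit-style running estimate of how much a listener likes each genre. The reward values, the 10-second skip threshold, the event fields, and the `GenrePreferences` class below are assumptions made for this sketch, not details disclosed by Spotify.

```python
def reward(event):
    """Map one playback event to a scalar reward (illustrative values)."""
    if event["added_to_playlist"]:
        return 1.0
    if event["seconds_played"] >= event["track_seconds"]:
        return 0.5   # played in full
    if event["seconds_played"] < 10:
        return -1.0  # skipped within the first few seconds
    return 0.0


class GenrePreferences:
    """Keeps an exponentially weighted preference estimate per genre."""

    def __init__(self, learning_rate=0.2):
        self.learning_rate = learning_rate
        self.values = {}

    def update(self, genre, event):
        """Move the genre's estimate a step toward the observed reward."""
        old = self.values.get(genre, 0.0)
        self.values[genre] = old + self.learning_rate * (reward(event) - old)
        return self.values[genre]

    def best(self):
        """Genre with the highest current estimate."""
        return max(self.values, key=self.values.get)
```

Because each update only moves the estimate part of the way toward the latest reward, recent behavior gradually outweighs older behavior, which is one simple way to track the shifts in taste described above.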

Moreover, Spotify likely leverages transformer-based models for contextual understanding. Models of this kind, similar in design to GPT or BERT, can be trained to capture musical relationships, user language, and sequencing patterns. This would allow the DJ to maintain narrative cohesion while introducing new content, for instance by explaining why a certain song follows another or by framing a playlist around a theme like “nostalgic summer nights” or “energetic work sessions.”

The Architecture in Action

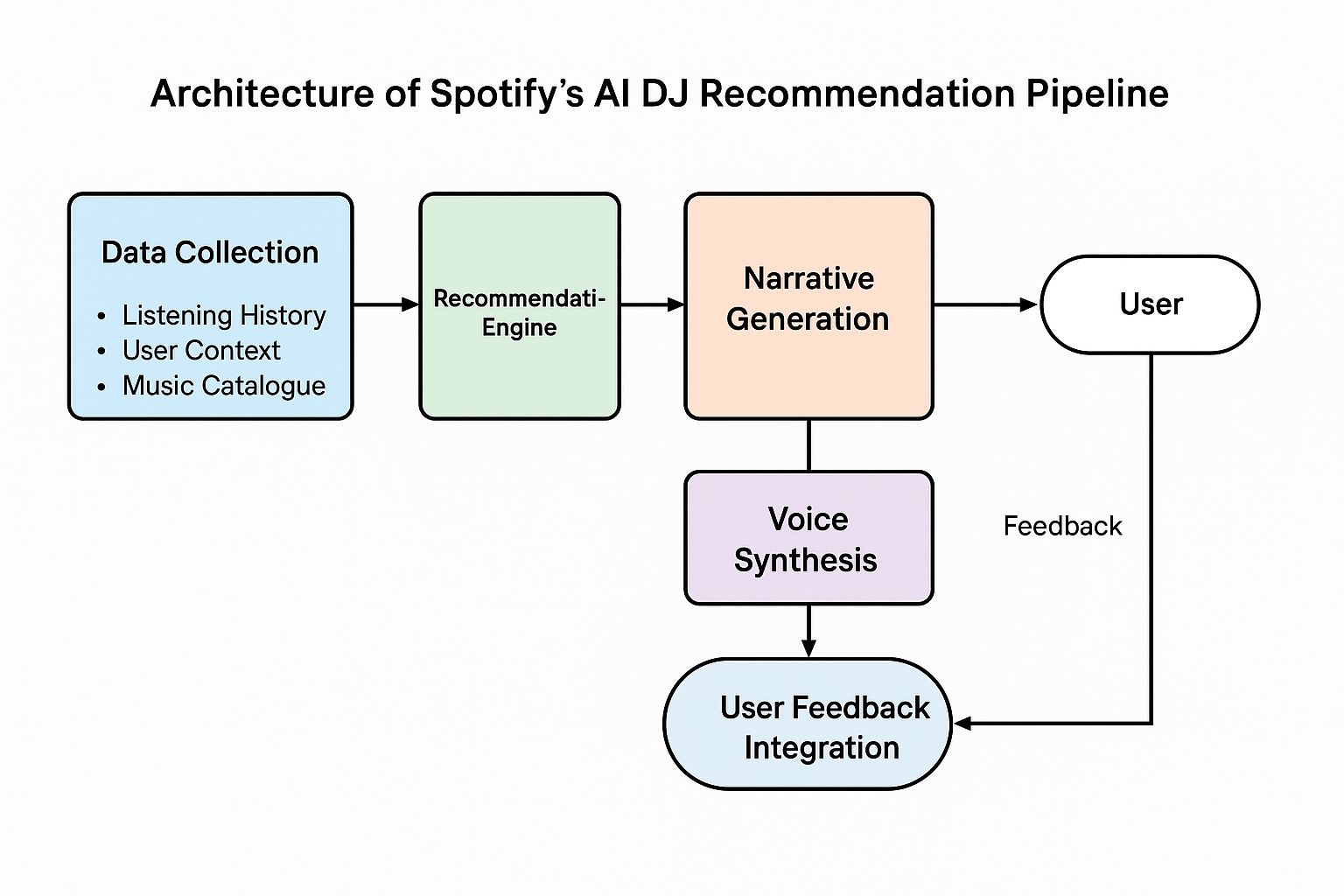

To orchestrate this level of personalization and interactivity, Spotify’s AI DJ operates through a modular pipeline. The key components of this pipeline include:

- User State Estimation Module – Continuously evaluates the listener’s emotional, behavioral, and contextual profile.

- Content Selection Engine – Filters tracks using a blend of collaborative and content-based filtering.

- Narrative Generator – Produces dynamic commentary using NLG, conditioned on track transitions and user state.

- Voice Synthesis Layer – Converts generated text into audio using high-fidelity TTS models.

- Feedback Loop – Monitors real-time user interactions to refine subsequent outputs.
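The five components listed above can be wired together roughly as follows. This is a toy sketch under stated assumptions: every function name and data field is hypothetical, the "NLG" step is a fixed template, and the "voice synthesis" step simply returns bytes where a real system would return audio.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class UserState:
    mood: str = "neutral"
    skipped: set = field(default_factory=set)


def estimate_state(events):
    """User state estimation: most common mood among tracks not skipped."""
    state = UserState()
    moods = Counter(e["mood"] for e in events if not e["skipped"])
    if moods:
        state.mood = moods.most_common(1)[0][0]
    state.skipped = {e["title"] for e in events if e["skipped"]}
    return state


def select_track(state, catalog):
    """Content selection: first track matching the mood that was not skipped."""
    for track in catalog:
        if track["mood"] == state.mood and track["title"] not in state.skipped:
            return track
    return catalog[0]  # fallback when nothing matches


def generate_commentary(state, track):
    """Narrative generation: a template standing in for an NLG model."""
    return f"Keeping it {state.mood}. Up next: {track['title']}."


def synthesize(text):
    """Voice synthesis placeholder: a real TTS layer would return audio."""
    return text.encode("utf-8")


def dj_step(events, catalog):
    """One pass through the pipeline; `events` carries the feedback loop."""
    state = estimate_state(events)
    track = select_track(state, catalog)
    audio = synthesize(generate_commentary(state, track))
    return track, audio
```

The feedback loop here is simply the `events` list: each play or skip is appended to it, and the next call to `dj_step` sees the updated history.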

The diagram at the start of this section gives a visual representation of this AI DJ architecture.

From Passive Listening to Interactive Engagement

The introduction of the AI DJ reflects a strategic departure from passive content delivery to active, dynamic engagement. This transformation aligns with broader trends in digital media where interactivity, customization, and conversational AI interfaces are becoming foundational components of user experience. By simulating the presence of a knowledgeable and personable DJ, Spotify not only creates a richer listening experience but also establishes a deeper emotional connection with its users.

This is particularly relevant in an era where attention is fragmented and user expectations are shaped by hyper-personalized platforms such as TikTok, YouTube, and Instagram. The AI DJ serves as Spotify’s answer to these trends, delivering music in a way that feels intimate, adaptive, and refreshingly human-like.

In summary, Spotify’s AI DJ is not a monolithic feature but rather a composite system built on cutting-edge machine learning, real-time feedback loops, and humanized voice synthesis. It reflects the company’s broader vision of turning music streaming into a two-way dialogue between user and platform, mediated by AI. While the current focus is on delivering curated playlists and commentary, the integration of voice commands (explored in the next section) will further elevate this experience by enabling true conversational music personalization.

Introducing Voice Commands – Personalizing Music Like Never Before

The advent of voice commands within Spotify’s AI DJ marks a transformative step toward a more fluid, human-centric interface for music interaction. Moving beyond traditional recommendation systems that rely solely on passive data collection and predictive modeling, the integration of voice technology introduces an active feedback loop wherein the user can directly influence playback through spoken language. This innovation not only enhances the personalization of music streaming but also elevates the overall user experience by enabling real-time, context-sensitive control over content.

From Manual to Vocal Control: The Next Evolution of UX

Until recently, Spotify users curated their listening experiences via manual inputs—taps, swipes, and searches. While efficient, this interaction model imposes cognitive load and attention demands, particularly in scenarios where hands-free functionality is desirable, such as driving, exercising, or cooking. By embedding voice command capability into the AI DJ, Spotify reduces friction in music navigation and enables seamless interaction that mirrors natural conversation.

Voice commands allow users to make highly specific and situational requests. Whether instructing the AI DJ to “play something more upbeat,” “skip this track,” or “give me chill electronic,” the user’s voice becomes an expressive tool that communicates intention more richly than predefined button presses or search filters. This responsiveness not only empowers users to tailor their listening experience with greater precision but also supports a more engaging and interactive environment.

Natural Language Processing at the Core

The successful implementation of voice commands relies heavily on the robustness of Spotify’s Natural Language Processing (NLP) and Natural Language Understanding (NLU) infrastructure. These systems are designed to comprehend a wide range of user utterances, accounting for syntax variability, colloquialisms, tonal shifts, and contextual ambiguity. For example, the system must be able to distinguish between “play something softer,” which asks for lower energy, and “I’m in the mood for something soft rock,” which names a genre.

To achieve this level of sophistication, Spotify likely leverages transformer-based language models, trained on diverse datasets that include music-related queries, commands, and conversational patterns. These models segment and interpret speech into actionable components that trigger changes within the AI DJ’s recommendation algorithm. A semantic matching engine then evaluates the user’s intent and modifies the music queue accordingly by adjusting genre, tempo, artist type, or even mood.

Further, Spotify’s NLP stack likely includes intent recognition modules that map spoken phrases to a discrete set of possible actions. Once intent is established, the system queries its personalization engine to select tracks that match the new criteria, seamlessly updating the user’s playback experience in real time.
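As a rough illustration of mapping phrases to a discrete set of actions, the sketch below uses ordered regular expressions in place of a trained model. The intent names, the keyword lists, and the `parse_command` function are assumptions for this example; a production system would rely on a learned classifier rather than hand-written patterns.

```python
import re

# Ordered from most to least specific: the first pattern that matches wins.
INTENT_PATTERNS = [
    ("skip", re.compile(r"\b(skip|next)\b")),
    ("dislike", re.compile(r"\b(don'?t like|hate)\b")),
    ("set_genre", re.compile(r"\b(?P<genre>soft rock|hip-hop|pop|jazz|electronic)\b")),
    ("less_energy", re.compile(r"\b(softer|mellow|calmer|chill)\b")),
    ("more_energy", re.compile(r"\b(upbeat|energetic|faster)\b")),
]


def parse_command(utterance):
    """Return the first matching intent plus any named slots (e.g. genre)."""
    # Lowercase and normalize typographic apostrophes before matching.
    text = utterance.lower().replace("\u2019", "'")
    for intent, pattern in INTENT_PATTERNS:
        match = pattern.search(text)
        if match:
            return {"intent": intent, **match.groupdict()}
    return {"intent": "unknown"}
```

Placing the genre pattern ahead of the energy patterns is what lets “soft rock” resolve to a genre while “softer” resolves to an energy change. Because only the first match is returned, a compound request such as “give me chill electronic” keeps the genre and drops the energy cue, which is one reason real systems extract several slots at once.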

Voice Command Use Cases in Practice

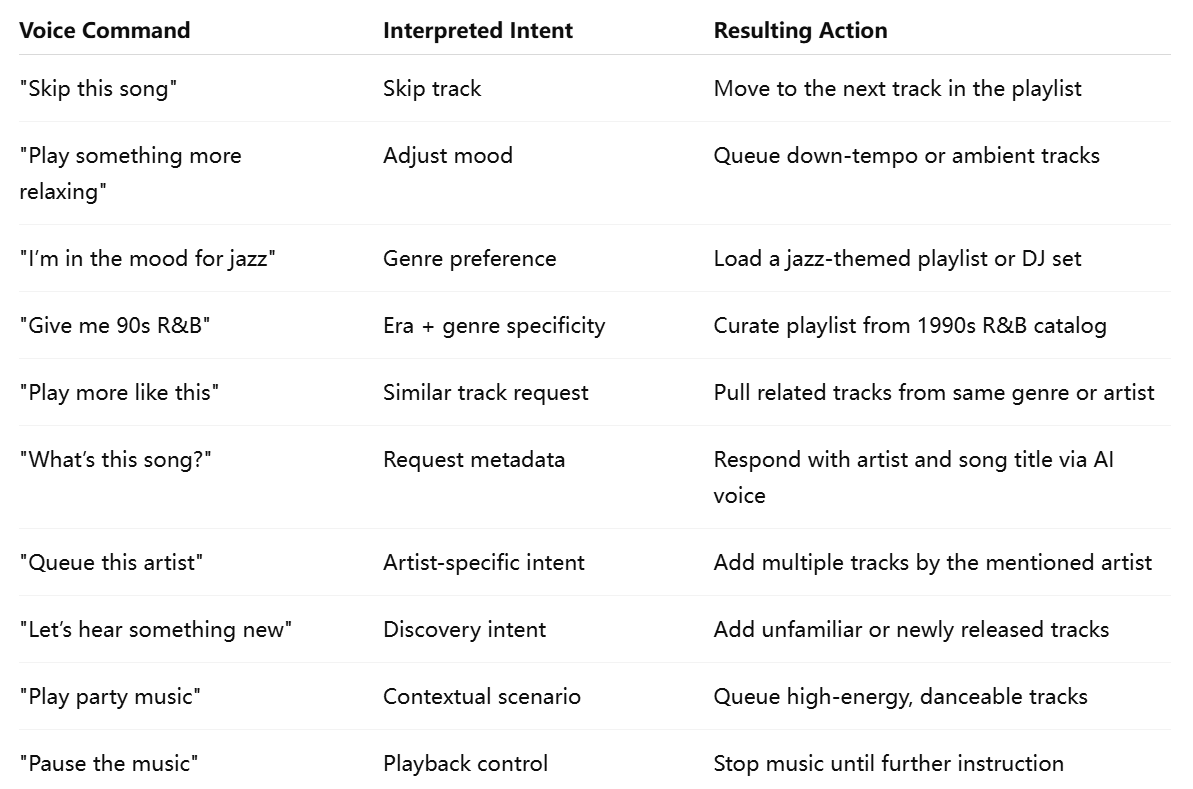

The integration of voice commands offers utility across a wide range of scenarios. In real-world use, users can employ voice instructions to accomplish the following tasks:

- Skip and Dislike: “Skip this one,” or “I don’t like this song.”

- Energy Adjustment: “Play something more energetic,” or “Give me something mellow.”

- Genre and Era Exploration: “Play 90s alternative,” or “Give me 2000s hip-hop.”

- Mood Specification: “Something romantic,” or “Play sad songs.”

- Artist or Song Requests: “Play more like The Weeknd,” or “Queue up ‘Blinding Lights.’”

Each of these commands results in a customized curation process that re-routes the recommendation pipeline based on updated parameters. The AI DJ acknowledges the command using its synthetic voice, often contextualizing the change with a brief commentary such as, “Turning up the energy—here’s a high-tempo track to keep you moving.”

This type of responsive dialog fosters a quasi-conversational experience, where users feel as though they are engaging with a sentient, adaptive music companion rather than a static algorithm.

Technical Challenges and Design Considerations

Implementing voice command functionality introduces a series of technical and design challenges. Chief among them is speech recognition accuracy, which must be robust across varying accents, background noise conditions, and languages. Spotify must rely on state-of-the-art Automatic Speech Recognition (ASR) systems that are trained on diverse linguistic datasets and capable of operating in real time with minimal latency.

Equally critical is dialog state management, which involves preserving conversational context between commands. For instance, if a user says, “Play some pop,” followed by “Make it more upbeat,” the system must understand that the modifier applies to the previously requested genre. This type of multi-turn interaction capability enhances the intelligence of the DJ and contributes to a more natural flow of communication.
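The “Play some pop” then “Make it more upbeat” example can be sketched as a small state object that each turn updates rather than replaces. The `DialogState` fields, the intent names, and the 0.25 energy step are hypothetical choices for illustration only.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class DialogState:
    genre: Optional[str] = None
    energy: float = 0.5  # 0.0 = calm, 1.0 = intense


def apply_turn(state, intent, genre=None):
    """Return the state after one parsed command, keeping earlier context."""
    if intent == "set_genre":
        return replace(state, genre=genre)
    if intent == "more_energy":
        return replace(state, energy=min(1.0, state.energy + 0.25))
    if intent == "less_energy":
        return replace(state, energy=max(0.0, state.energy - 0.25))
    return state  # unknown intents leave the context untouched


# "Play some pop" followed by "Make it more upbeat"
state = apply_turn(DialogState(), "set_genre", genre="pop")
state = apply_turn(state, "more_energy")
```

After the second turn the state still holds `genre="pop"`, so the energy modifier applies to the previously requested genre instead of starting a fresh query.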

There are also important privacy and data governance considerations. Processing spoken commands requires audio input to be captured and interpreted, raising concerns around data storage, usage, and user consent. Spotify has likely implemented edge-processing techniques, voice anonymization, and encryption protocols to mitigate privacy risks and ensure compliance with data protection regulations.

Voice Interaction vs. Traditional Interfaces

Voice commands introduce a paradigm shift in user engagement. Unlike traditional interfaces that require users to adapt to fixed UI options, voice interaction allows users to express desires using free-form language. This reduces cognitive load and expands the range of personalization possibilities. For Spotify, this means users can achieve more granular control over their music with less effort and more enjoyment.

Additionally, voice-first interaction supports accessibility goals, offering users with motor disabilities, visual impairments, or literacy challenges an inclusive way to enjoy Spotify. It also aligns with emerging consumer behaviors around smart speakers, in-car assistants, and wearable devices, positioning Spotify as an integrated part of the broader voice AI ecosystem.

In summary, the introduction of voice commands in Spotify’s AI DJ feature represents a meaningful leap toward a more immersive, intuitive, and empowering music streaming experience. By enabling natural language interaction, Spotify bridges the gap between human expression and algorithmic precision, creating a fluid system where users can guide their musical journeys in real time.

This functionality positions Spotify at the vanguard of voice-first interface design in digital media, with implications that go far beyond streaming. In the next section, we will examine how this innovation is influencing user behavior, increasing engagement, and reshaping Spotify’s value proposition in an increasingly competitive landscape.

The Impact on User Experience and Engagement

The integration of voice commands into Spotify’s AI DJ feature introduces a paradigm shift in how users interact with their music libraries. By moving from passive consumption to active, voice-driven engagement, Spotify significantly enhances the overall user experience. The implications are profound—not only for usability and accessibility but also for listener retention, emotional resonance, and brand loyalty. This section examines the multifaceted impact of the voice-enabled AI DJ on user experience (UX) and platform engagement, with insights supported by emerging behavioral data and strategic design considerations.

Redefining Personalization Through Interaction

Spotify’s reputation has long been associated with its ability to deliver personalized music experiences. Features such as Discover Weekly, Daily Mixes, and Release Radar have set the benchmark for algorithm-driven personalization. However, these features have traditionally been one-directional—relying heavily on inference from passive listening behavior. The AI DJ with voice command support represents a bidirectional system in which users can directly and instantly influence playback.

This shift from prediction to interaction introduces a richer form of personalization, one that is more aligned with individual intent at any given moment. The real-time nature of voice commands enables spontaneous music adjustments, empowering users to co-curate their listening sessions alongside the AI DJ. Whether a user wants to hear more of a certain genre, shift the tempo, or explore unfamiliar artists, their voice becomes the control mechanism for a dynamic, adaptive experience.

From a UX design perspective, this responsiveness reduces cognitive friction. Instead of navigating menus or typing queries, users simply articulate their desires, creating a seamless interface that responds with contextual intelligence. This degree of personalization, rooted in both intent and immediacy, significantly elevates the user’s sense of agency and satisfaction.

Emotional Resonance and Connection

One of the most impactful dimensions of Spotify’s AI DJ is its ability to establish a sense of companionship through human-like voice interactions. The synthesized DJ voice provides commentary, contextual insights, and mood-based transitions that mimic the presence of a real human host. When this voice begins responding to the user’s spoken commands, the system transcends its role as a recommendation engine and becomes a digital persona with which users interact.

This anthropomorphic element enhances emotional resonance. Users are no longer merely selecting music; they are conversing with a system that appears to understand their moods and preferences. This emotional engagement deepens user connection and fosters habitual use, particularly among demographics that value personalization and interactive technology, such as Millennials and Gen Z.

Spotify’s approach aligns with the broader trend of humanizing AI-driven platforms. Similar to the way consumers build rapport with voice assistants like Siri and Alexa, users of Spotify’s AI DJ are beginning to associate its voice with a trusted guide in their musical journeys. This emotional layer, though synthetic, contributes meaningfully to long-term platform loyalty.

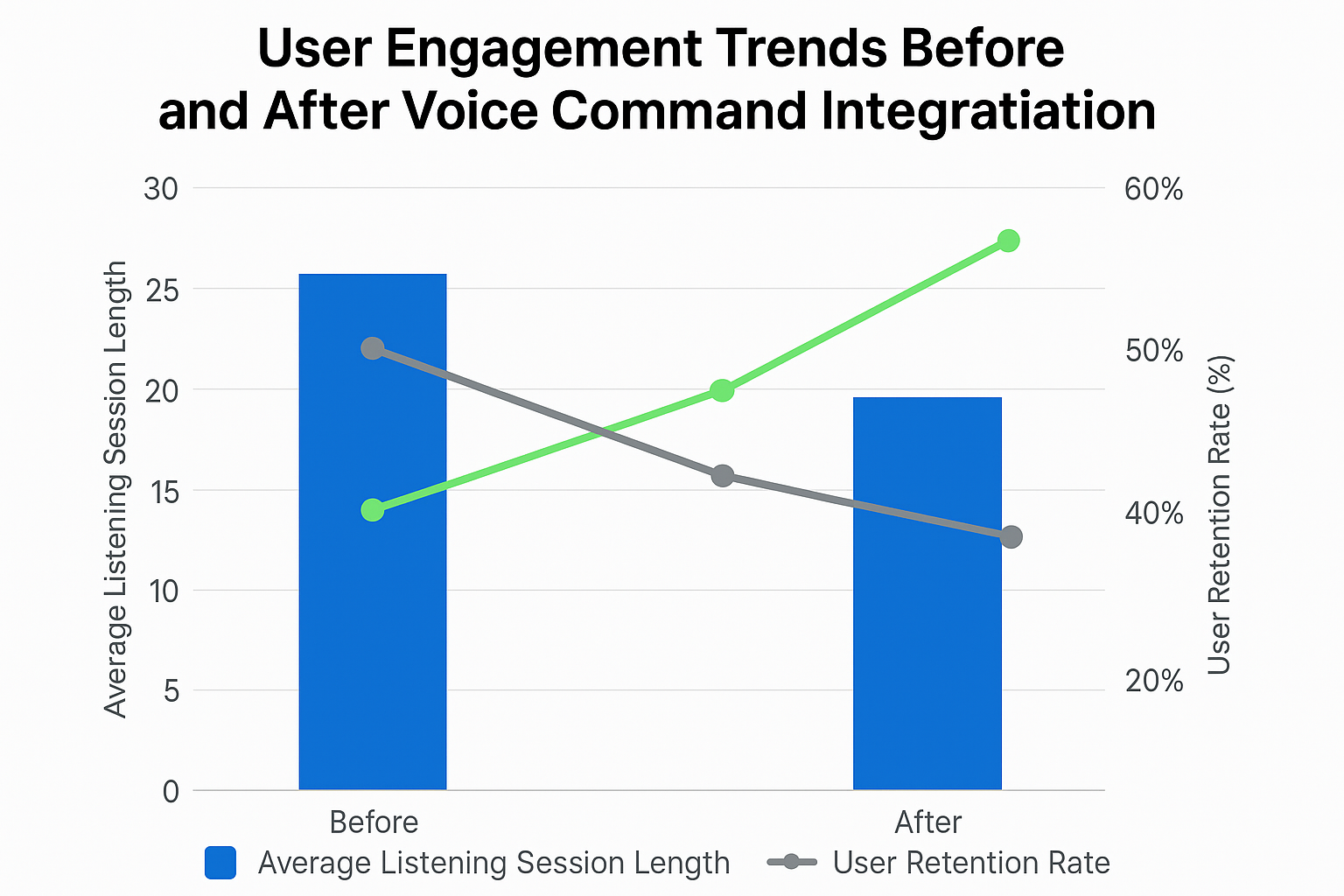

Engagement Metrics: A Quantitative Perspective

Preliminary insights suggest that Spotify’s voice-integrated AI DJ feature is driving substantial increases in key engagement metrics. Internal usage data—though not publicly disclosed in detail—likely reflects improvements in the following areas:

- Time Spent Listening: Users engaged in dialogue with the AI DJ are spending longer periods on the app, as they explore more tracks curated based on iterative voice interactions.

- Session Frequency: Voice interactions reduce the barriers to starting a session, leading to increased daily and weekly usage frequency.

- Playlist Additions and Saves: When users request content through specific commands, they are more likely to find tracks that resonate, resulting in higher rates of playlist creation and song saving.

- Skip Rates: Skip frequency declines when users are able to refine their listening in real time, indicating improved match quality between intent and playback.

- Voice Interaction Frequency: A growing number of users are issuing multiple voice commands within a single session, suggesting sustained engagement with the feature.
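Two of the metrics above reduce to simple ratios over playback logs. The sketch below shows one way they might be computed; the log fields (`skipped`, `voice_commands`) are hypothetical, and no real Spotify figures are implied.

```python
def skip_rate(plays):
    """Fraction of plays that ended in a skip (0.0 for an empty log)."""
    if not plays:
        return 0.0
    return sum(1 for p in plays if p["skipped"]) / len(plays)


def mean_commands_per_session(sessions):
    """Average number of voice commands issued per listening session."""
    if not sessions:
        return 0.0
    return sum(s["voice_commands"] for s in sessions) / len(sessions)


def skip_rate_reduction(plays_without_voice, plays_with_voice):
    """Positive when sessions with voice control show fewer skips."""
    return skip_rate(plays_without_voice) - skip_rate(plays_with_voice)
```

Comparing skip rates between sessions with and without voice interaction, as `skip_rate_reduction` does, is the kind of measurement that would be needed to confirm the claimed improvement in match quality.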

If they hold up, these trends would indicate not only technical success but also product-market fit, particularly in environments where hands-free control is desirable, such as during workouts, driving, or multitasking at home.

Enhancing Accessibility and Inclusion

The introduction of voice commands also addresses critical gaps in accessibility. For users with visual impairments, motor disabilities, or cognitive limitations, traditional app navigation poses significant challenges. Voice interfaces offer an inclusive alternative, granting these users the ability to access and control Spotify with minimal physical or cognitive effort.

In doing so, Spotify aligns with global accessibility standards and fulfills part of its broader mission to democratize access to music. The AI DJ, once perceived as a convenience feature, is now also a powerful assistive tool—capable of making the platform more equitable for diverse user populations.

This accessibility extension has implications for Spotify’s global user base, particularly in emerging markets where mobile-first and voice-first interactions are more common than desktop or keyboard-based navigation. In these regions, the voice-enabled AI DJ can serve as an onboarding mechanism for first-time users with limited technical proficiency.

Appeal to Multitasking and Contextual Use

Spotify’s voice DJ feature also capitalizes on the rise of contextual computing—the idea that software should adapt to the user’s current environment, task, and attention span. Modern consumers often engage with digital services while multitasking. Whether cooking, cleaning, commuting, or exercising, users benefit from hands-free control and contextual responsiveness.

Voice commands offer an elegant solution to these scenarios. Users no longer need to stop what they are doing to adjust their music experience. A simple spoken command modifies the playlist or mood, all while preserving flow and minimizing disruption. This enhances usability in day-to-day life and reinforces Spotify’s role as a background yet intelligent presence in users’ routines.

Moreover, Spotify’s ability to recognize context-specific commands—such as “play driving music,” “set the mood for dinner,” or “something to help me focus”—demonstrates a deeper understanding of real-world user behavior. By mapping such requests to curated thematic playlists or dynamically generated DJ sessions, the system anticipates user needs and strengthens platform engagement.

Gamification and Iterative Feedback

Voice interactions also lend themselves to elements of gamification. Users often test the limits of the AI DJ’s capabilities by issuing novel or creative commands. This exploratory behavior generates a loop of engagement, where the system’s ability to surprise and adapt becomes a form of entertainment in itself.

In turn, Spotify gathers valuable data from these interactions, which can be used to refine NLP models, train content classifiers, and improve future responses. The more users experiment, the more intelligent and responsive the system becomes—creating a virtuous cycle of mutual adaptation.

Spotify may also consider adding visual feedback or metrics around voice usage—such as weekly summaries of top commands, voice-command badges, or “DJ tips” based on user habits—to further gamify the experience and reinforce interaction.

In conclusion, the integration of voice commands within Spotify’s AI DJ feature significantly elevates user experience and engagement. By enabling natural, real-time interactions, Spotify not only enhances personalization but also fosters emotional connection, accessibility, and context-aware convenience. As voice-first technology becomes increasingly central to digital interaction, Spotify’s innovation positions it at the forefront of user-centric, AI-enhanced music streaming.

Broader Implications for the Music and AI Industry

The integration of voice commands into Spotify’s AI DJ feature not only transforms the user experience but also has significant ramifications for the broader music streaming ecosystem and the trajectory of artificial intelligence in consumer technology. This section explores the strategic, competitive, technological, and ethical dimensions of Spotify’s innovation, shedding light on how this advancement may influence the global music industry and the next wave of AI integration in digital media.

Strategic Market Positioning and Competitive Dynamics

Spotify’s deployment of a voice-interactive AI DJ gives it a competitive advantage over other major players in the streaming industry. While platforms like Apple Music, Amazon Music, and YouTube Music also support voice-based interactions through Siri, Alexa, and Google Assistant respectively, none currently offer a dedicated AI DJ that blends real-time commentary, dynamic playlisting, and user-commanded responsiveness in a unified interface.

Spotify’s strategy represents an evolution beyond voice-assisted navigation toward voice-led personalization. Unlike traditional virtual assistants, which execute predefined tasks in response to narrow commands, Spotify’s AI DJ responds to natural language inputs with curated experiences, contextual responses, and synthesized voice narratives. This level of sophistication strengthens Spotify’s brand identity as a pioneer in AI-powered audio personalization, potentially attracting users who value not just convenience but also innovation and emotional engagement.

Moreover, Spotify’s feature may catalyze a race toward more immersive and conversational streaming platforms, forcing competitors to reassess their personalization frameworks and invest in similarly dynamic, voice-driven AI systems. This could accelerate the pace of R&D investments in audio-based machine learning and real-time recommendation engines across the industry.

The Future of Human-AI Collaboration in Curation

Spotify’s voice-enabled DJ is part of a broader movement toward human-AI collaboration in creative domains. Traditionally, the role of music curation has been the domain of radio DJs, music journalists, or playlist editors. With the introduction of generative AI systems capable of emulating and enhancing these human functions, the boundary between machine-generated and human-guided experiences becomes increasingly blurred.

However, rather than replacing human curation, Spotify’s AI DJ appears to augment the user’s ability to co-create. By allowing individuals to issue voice commands and receive commentary or musical transitions tailored to their mood and input, the system encourages interactive storytelling and shared decision-making between user and machine. This marks a significant philosophical shift from the passivity of algorithmic suggestions toward an era of conversational curation, where users are empowered as co-authors of their listening journeys.

This model of interaction could extend beyond music. Podcasts, audiobooks, and educational content could be curated similarly, with AI DJs functioning as dynamic audio companions that guide users across genres and formats based on voice-directed exploration. The infrastructure Spotify is building today could eventually support an AI-native audio ecosystem that redefines media consumption across contexts.

New Frontiers for Artists and the Creator Economy

The evolution of AI DJs with voice command functionality also introduces opportunities and challenges for artists, producers, and independent creators. On the one hand, more intelligent personalization could help surface underrepresented or niche content to users whose voice commands suggest openness to exploration. For example, a user asking for “emerging indie pop with female vocals” could be directed to lesser-known creators, effectively democratizing exposure.

On the other hand, the opacity of AI recommendation systems raises concerns about algorithmic bias and playlist monopolization. Artists may wonder whether their content is being surfaced fairly in response to voice commands, or whether the system reinforces the dominance of already-popular tracks. Spotify must ensure transparency and fairness in how it maps user queries to results, especially as voice input becomes a primary discovery tool.

Additionally, new creative opportunities could arise as artists and labels begin crafting voice-reactive content experiences. For instance, artists might collaborate with Spotify to pre-record dynamic DJ segments, or enable fans to unlock exclusive tracks through specific voice interactions. These developments could lead to a new genre of interactive musical storytelling, blending AI technology with creative authorship.

Ethical Considerations: Privacy, Consent, and Algorithmic Influence

While voice command functionality enhances convenience and personalization, it also raises important ethical and regulatory questions—particularly concerning privacy and data governance. Capturing, interpreting, and responding to voice input requires continuous audio processing, which introduces potential risks related to surveillance, consent, and data misuse.

Spotify must maintain rigorous transparency standards around how voice data is stored, processed, and anonymized. Users should have clear options to opt in or out of data retention, and should be able to understand how their inputs influence recommendation models. Regulatory frameworks such as the General Data Protection Regulation (GDPR) in Europe and the California Consumer Privacy Act (CCPA) in the U.S. impose requirements around consent, disclosure, and users’ rights over their data, which Spotify must navigate carefully.

Furthermore, the potential for algorithmic manipulation—whether intentional or incidental—must be addressed. AI systems that control playback based on inferred or expressed preferences can shape user behavior in subtle ways. Spotify has a responsibility to ensure that the AI DJ does not exploit user moods for engagement maximization, or introduce biases that distort musical diversity. Transparent algorithmic audits and ethical AI guidelines should be integral to the development process.
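
One way to make such an audit concrete is to measure how evenly recommendation slots are spread across artists. The following is a minimal sketch only: the function names, the input shapes, and the choice of metrics are assumptions made for illustration, and nothing here describes how Spotify actually audits its systems.

```python
def exposure_share(recommended_artists, popular_artists):
    """Fraction of recommendation slots that went to already-popular artists.

    Both arguments are hypothetical inputs: a list of artist IDs as served
    in response to voice commands, and a set of IDs deemed "popular".
    """
    if not recommended_artists:
        return 0.0
    hits = sum(1 for artist in recommended_artists if artist in popular_artists)
    return hits / len(recommended_artists)


def gini(counts):
    """Gini coefficient of per-artist exposure counts.

    0 means exposure is spread evenly; values approaching 1 mean a few
    artists receive almost all of it.
    """
    values = sorted(counts)
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0
    # Standard closed form over values sorted in ascending order.
    weighted = sum((i + 1) * v for i, v in enumerate(values))
    return (2 * weighted) / (n * total) - (n + 1) / n
```

Tracked over time and across query types, figures like these could indicate whether voice-driven discovery is broadening exposure or concentrating it, although a real audit would need far richer data and context than this sketch assumes.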

Expanding Voice Interfaces Across Industries

The implications of Spotify’s innovation also reverberate beyond the music industry. As consumers grow accustomed to interacting with content via natural language interfaces, other sectors may begin to emulate the model. Fitness apps, news aggregators, language learning platforms, and even financial tools could adopt comparable voice companions to personalize content delivery through two-way conversation.

This trend underscores a broader shift toward voice-first computing, wherein interaction with digital systems becomes less screen-bound and more dialogue-driven. The user interface (UI) of the future may not be visual at all, but vocal and contextual. Spotify’s AI DJ can thus be seen as a proof of concept for a larger transformation in how people interact with content, services, and technology.

Companies operating in adjacent verticals will be watching closely to assess the effectiveness of Spotify’s voice integration—not just in driving engagement, but also in building trust, usability, and long-term brand equity.

Spotify’s voice-enabled AI DJ represents far more than a feature upgrade; it symbolizes a foundational rethinking of how content is curated, consumed, and controlled. The broader implications span multiple dimensions—from platform competition and user empowerment to artist visibility and ethical AI design. As voice interaction becomes a defining characteristic of digital experiences, Spotify is positioning itself at the vanguard of a shift toward conversational, user-coauthored media.

A Voice-Driven Future for Streaming

As digital consumption patterns evolve, personalization and interactivity have become central to user expectations. Spotify’s AI DJ, now enhanced with voice command functionality, exemplifies the convergence of these two imperatives. What began as a recommendation engine has matured into a responsive, conversational system—one that empowers users to shape their musical journey with the most natural interface of all: the human voice.

This innovation signifies more than a user experience enhancement; it marks a pivotal moment in the evolution of music streaming and digital media interfaces. By integrating real-time voice commands with dynamic music curation, Spotify has effectively bridged the gap between predictive algorithms and user-driven discovery. The AI DJ now serves not just as a content distributor, but as an adaptive companion—curating, narrating, and responding in ways that feel intuitive and personalized.

From a technical standpoint, this development showcases Spotify’s growing command of artificial intelligence, particularly in natural language processing, real-time personalization, and generative voice synthesis. These capabilities allow the platform to respond not only to what users play, but also to how they speak, when they engage, and what moods they convey. This level of nuance transforms passive consumption into collaborative content design, where user agency is amplified and listening becomes a dialogue.

The voice-enabled AI DJ also represents a substantial step forward in accessibility. For users with disabilities or limited technical proficiency, voice commands open a path to deeper engagement. In the broader societal context, this aligns with inclusive design principles and the goal of universal usability.

From an industry perspective, Spotify’s move sets a new benchmark. Its approach establishes a precedent for streaming competitors and digital platforms that must now contend with rising consumer expectations for voice-first interaction and contextual intelligence. Whether through better NLP, more creative use of AI, or richer human-AI dialogue, the future of streaming will likely hinge on how well services can personalize content not just through data, but through conversation.

The implications for the music industry are equally profound. With users directing their AI DJs to explore genres, moods, and artists via voice, the discovery process becomes more organic, immediate, and democratized. This could benefit independent musicians and niche creators who are surfaced based on specific, user-generated input rather than playlist algorithms alone. Yet it also demands increased transparency around how voice data influences recommendation logic, and how the ecosystem ensures fairness across content exposure.

Crucially, as voice-command technology becomes more widespread, ethical considerations around privacy, consent, and algorithmic influence must remain at the forefront. Spotify’s success will not be measured solely by engagement metrics but by its ability to steward trust and accountability in its AI systems.

In sum, Spotify’s AI DJ with voice command support represents a reimagining of music curation—one that listens as much as it speaks. It invites users into a participatory relationship with their playlists, enabling them to influence not just what is played, but how it is introduced and contextualized. As digital media continues its migration toward hands-free, conversational, and ambient interfaces, Spotify stands at the leading edge of this transformation.

The voice-enabled future of streaming is not only conceivable—it is already here. And in this new paradigm, the DJ doesn’t just spin the tracks—it listens, learns, and converses with you.
