Rewriting the Future: How LLM Agents Are Transforming Code Migration

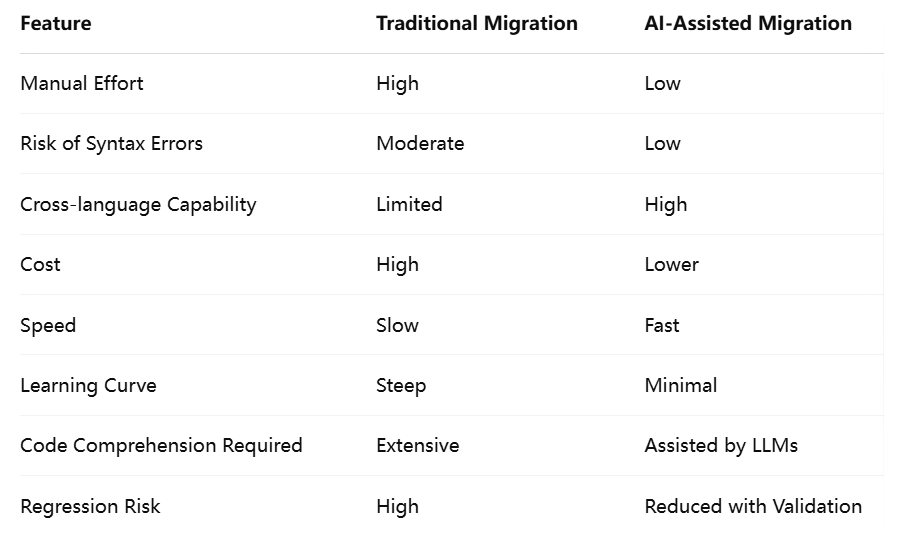

In the fast-evolving world of software development, the ability to modernize legacy systems efficiently and accurately has become a critical imperative for organizations across industries. Code migration—the process of transferring codebases from outdated or unsupported platforms, programming languages, or frameworks to more modern environments—is often necessary to reduce technical debt, improve scalability, meet security standards, and align with evolving business needs. Yet, despite its importance, code migration remains a resource-intensive and error-prone task when performed manually.

Traditional approaches to code migration typically involve meticulous planning, extensive manual rewriting, and rigorous validation by experienced developers. These methods are not only time-consuming and costly but also pose significant risks. For example, subtle semantic mismatches between the source and target languages can lead to functional discrepancies. Moreover, the documentation accompanying legacy code is frequently outdated or missing, making it even more challenging to preserve business logic accurately during the migration process.

Recent advances in artificial intelligence (AI), particularly in large language models (LLMs), have opened new avenues for automating complex programming tasks, including code migration. LLMs such as OpenAI’s Codex, Meta’s Code Llama, and the BigCode project’s StarCoder (a collaboration led by Hugging Face and ServiceNow) have demonstrated strong proficiency in understanding and generating human-readable code across multiple languages. These models are trained on vast corpora of open-source code and natural language documentation, which lets them model both the syntax of a language and much of the intent behind a piece of code.

The emergence of AI-powered agents built on top of LLMs has further accelerated this transformation. These agents are capable of performing contextual analysis, inferring intent, and producing structurally sound and semantically meaningful code transformations with minimal human input. Whether converting an application from Java to Python or migrating a monolithic codebase to a microservices architecture, LLM agents can now serve as reliable co-pilots that dramatically enhance both the speed and quality of code migration efforts.

The objective of this blog post is to explore how AI-assisted code migration—enabled by sophisticated LLM agents—is redefining software modernization. We will examine the fundamentals of code migration and its traditional challenges, delve into the technical capabilities of LLMs in code translation and transformation, analyze the architecture of AI-assisted migration systems, and assess real-world applications in enterprise settings. We will also consider the limitations, risks, and future trajectories of this emerging paradigm.

As enterprises seek to remain competitive and agile in a digital-first landscape, leveraging LLM agents for code migration offers a compelling path forward. Not only do these tools reduce the manual burden on developers, but they also enable organizations to modernize legacy systems at scale—faster, safer, and more cost-effectively than ever before.

Understanding Code Migration

Code migration is a critical process in modern software engineering that involves transferring an application’s codebase from one environment to another. This could mean transitioning between programming languages, operating systems, frameworks, or platforms. The primary objective is to enhance maintainability, performance, security, and compliance while aligning software systems with current technological standards.

In an era where digital transformation drives competitive advantage, organizations cannot afford to let outdated systems hinder innovation. Enterprises are increasingly compelled to modernize legacy software—often written in languages such as COBOL, FORTRAN, or Visual Basic—that runs on mainframes or on-premise infrastructure. These systems, although functionally stable, pose growing challenges in terms of maintainability, integration with cloud-native tools, and availability of skilled developers.

Common Code Migration Scenarios

Understanding the types of migration projects commonly undertaken can illuminate the broader scope of challenges and opportunities. The most prevalent scenarios include:

- Language Migration: Shifting from legacy languages like COBOL or Perl to modern ones like Java, Python, or Rust. This is often pursued to enhance developer productivity and future-proof applications.

- Platform Migration: Moving code from one operating system or execution environment to another—for example, transitioning from Windows to Linux-based systems or from proprietary servers to open-source platforms.

- Framework or Architecture Migration: Rewriting code to accommodate new architectural paradigms, such as converting a monolithic application into a microservices-based solution or updating from an outdated web framework to a more modern one like React or Angular.

- Cloud Migration: Transferring on-premise applications to cloud environments such as AWS, Azure, or Google Cloud Platform. This often involves refactoring code to leverage cloud-native services and APIs.

Each scenario introduces unique technical, organizational, and operational complexities that must be meticulously managed.

Traditional Code Migration Challenges

Code migration, when done manually, is a painstaking and error-prone process. Even with a highly experienced team, the following challenges frequently arise:

- Lack of Up-to-Date Documentation: Legacy codebases often lack comprehensive documentation. Developers must reverse-engineer business logic, which slows down migration and increases the risk of introducing subtle bugs.

- Semantic Gaps Between Languages: Different programming languages possess distinct idioms, features, and runtime behaviors. Accurately translating logic from one language to another requires deep contextual understanding beyond mere syntax.

- Technical Debt and Code Smells: Legacy systems may contain outdated libraries, deprecated functions, or convoluted code paths. Migrating such code without addressing these issues may only transfer the problems to a new platform.

- Regression Risk: Migrated applications can exhibit functional discrepancies that are difficult to trace, leading to unexpected behavior in production environments.

- High Costs and Time Requirements: Manual migration efforts often involve months of planning and implementation, requiring significant financial investment and developer resources.

- Security and Compliance Risks: Failure to preserve or adapt security controls during migration can introduce vulnerabilities or lead to regulatory non-compliance.

These challenges have historically limited the pace at which organizations can modernize their technology stacks. This is where AI-assisted approaches, particularly those leveraging LLM agents, begin to show transformative potential.
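To make the semantic-gap challenge above concrete, here is a minimal sketch of a port that is correct line by line yet wrong in behavior. It assumes ASCII input and uses Java's `String.hashCode` loop as the legacy logic; the function names `naive_hash` and `java_style_hash` are hypothetical and chosen only for illustration.

```python
def naive_hash(text: str) -> int:
    """Literal port of Java's String.hashCode loop.

    Python integers never overflow, so this silently diverges from Java
    once the running value exceeds 32 bits.
    """
    h = 0
    for ch in text:
        h = 31 * h + ord(ch)
    return h


def java_style_hash(text: str) -> int:
    """Same loop, emulating Java's signed 32-bit int wraparound."""
    h = 0
    for ch in text:
        h = (31 * h + ord(ch)) & 0xFFFFFFFF  # keep only the low 32 bits
    # Reinterpret the unsigned 32-bit value as a signed int.
    return h - 0x1_0000_0000 if h >= 0x8000_0000 else h


if __name__ == "__main__":
    for sample in ("hello", "code migration"):
        print(sample, naive_hash(sample), java_style_hash(sample))
```

Short strings give identical results in both versions, which is exactly why this class of bug tends to survive a casual review and only surfaces on longer inputs.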

The Shift Toward Automation in Code Migration

The rising complexity of modern IT environments and the demand for agility have catalyzed a shift from manual to automated solutions. Automation tools are not new to the software industry, but they have traditionally been limited to linters, static analyzers, rule-based transpilers, and template-based code generators. The advent of LLMs marks a new frontier in intelligent automation, one in which machines can comprehend, generate, and transform code at a level that, on many routine tasks, approaches human expertise.

Instead of relying solely on pattern matching or rule-based transformations, LLMs use probabilistic language modeling to infer meaning from code context. This allows them to understand high-level business logic, variable naming conventions, and even architectural patterns across entire repositories. When integrated into specialized agents, these models can autonomously perform end-to-end code migration tasks—from reading the original codebase to producing the equivalent code in a target language or platform.

The potential efficiency gains are substantial. Projects that previously took several quarters may now be completed in weeks or even days, depending on the complexity and scale of the migration. Furthermore, these tools enable a “human-in-the-loop” model, where developers oversee and validate AI-generated code, ensuring quality and correctness while benefiting from automation's speed and scale.

As the limitations of traditional approaches become more pronounced, particularly in large enterprises with legacy dependencies, the case for AI-assisted migration is growing stronger. In the sections that follow, we will examine how LLM agents are being harnessed to address these exact challenges—delivering accurate, efficient, and scalable code migration solutions.

Large Language Models as Code Migration Agents

Large Language Models (LLMs) have ushered in a paradigm shift in the field of artificial intelligence by demonstrating an exceptional ability to understand and generate natural language. More recently, these models have been adapted and fine-tuned to comprehend and produce programming languages, leading to the emergence of specialized AI agents capable of automating tasks across the software development lifecycle. Among the most promising applications is the automation of code migration, an area traditionally fraught with complexity, cost, and risk.

In this section, we explore how LLMs function as code migration agents. We will unpack the core capabilities of these models, analyze their architecture, and evaluate their application in real-world code transformation scenarios. We also examine how context, prompt engineering, and surrounding tools enable LLMs to not only translate code but also preserve business logic and intent across languages and platforms.

Core Competencies of LLMs in Code Tasks

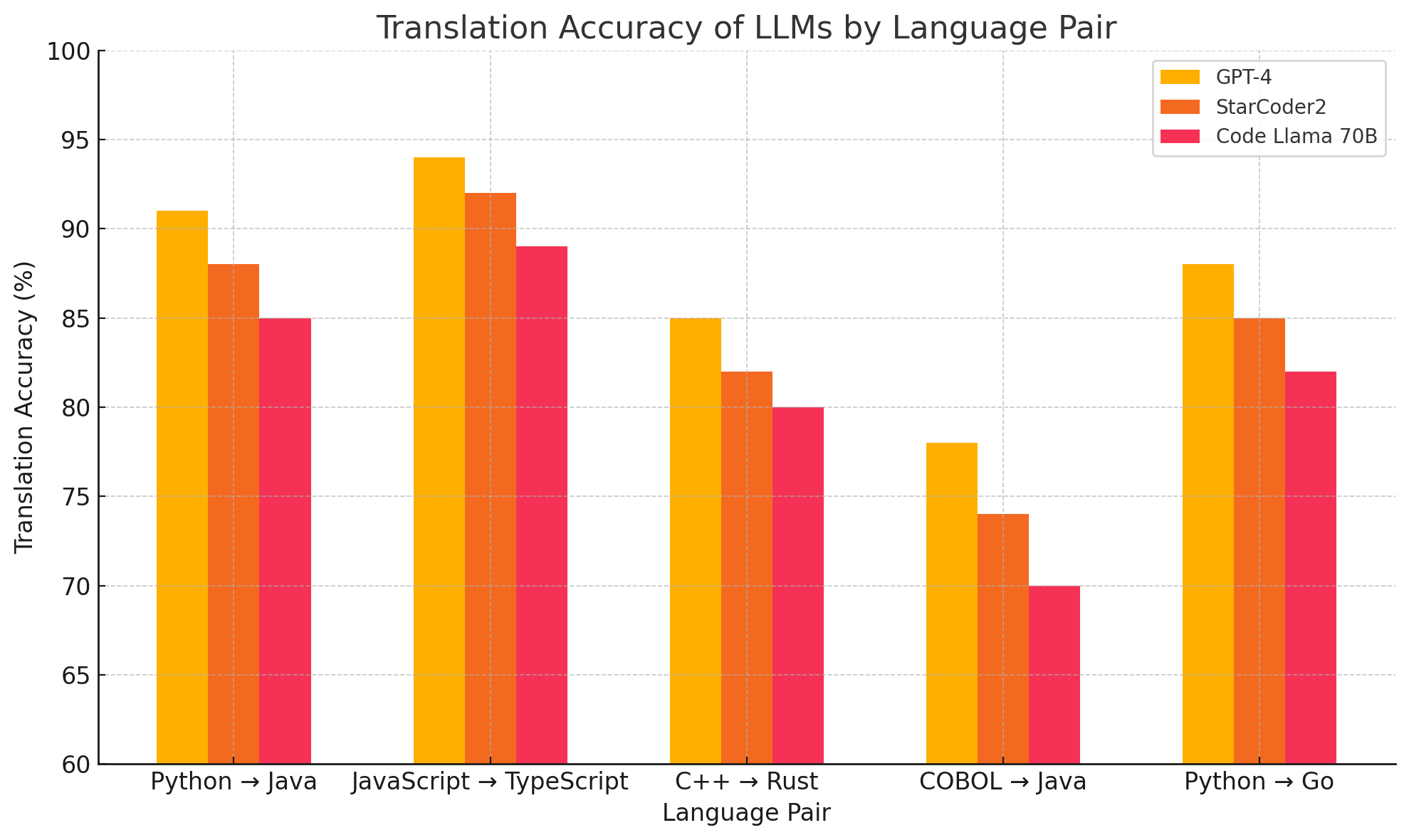

At the foundation of LLM-based code migration lies the concept of language modeling. LLMs such as GPT-4, StarCoder2, and Code Llama 70B are trained on massive corpora of source code, documentation, and technical discussions. This training enables them to learn the syntax, idiomatic structures, control flow patterns, and architectural paradigms of numerous programming languages.

Key competencies that make LLMs suitable for code migration include:

- Syntax and Semantic Understanding: LLMs do not merely operate at a lexical level. They can infer semantic relationships between variables, functions, and classes—critical for preserving intent during migration.

- Multi-language Proficiency: Unlike traditional transpilers that require hardcoded rules for specific language pairs, LLMs can generalize their understanding across a wide range of programming languages.

- Structural Awareness: Trained on multi-file projects and open-source repositories, LLMs can reason about file hierarchies, module imports, and architectural patterns.

- Natural Language Integration: LLMs can parse human-written comments, documentation, and code annotations, using this information to guide transformations and enhance output clarity.

- Autonomous Reasoning: Through techniques like chain-of-thought prompting, LLMs can decompose complex migration tasks into sequential steps, improving the robustness of their output.

LLM Agents vs General-Purpose Models

While general-purpose LLMs like GPT-4 and Claude possess considerable programming capabilities, domain-specific variants have been optimized for software engineering. These specialized models are trained on curated code datasets and fine-tuned using techniques like reinforcement learning from human feedback (RLHF), supervised fine-tuning, and contrastive learning.

Some notable LLMs for code-related tasks include:

- OpenAI Codex: Fine-tuned on both code and natural language, Codex originally powered GitHub Copilot and supports multi-language code generation and transformation.

- Code Llama 70B: Meta’s open-weight model trained specifically for coding tasks, supporting multi-file understanding and high performance across popular languages.

- StarCoder2: Developed by the BigCode project, a collaboration led by Hugging Face and ServiceNow, StarCoder2 supports 40+ programming languages and includes architecture-aware training to improve software project comprehension.

- DeepCoder-14B: A model optimized for learning algorithms and translating between problem descriptions and executable code using few-shot examples.

LLM agents combine these models with task-specific logic and tool integrations to automate migration flows. They may accept high-level instructions (e.g., “Convert this Python script to Java”) and autonomously orchestrate the migration, leveraging internal memory, search tools, and feedback mechanisms.

From Code Translation to Code Transformation

One of the most common forms of AI-assisted migration is code translation, where code written in one language is re-expressed in another. This requires syntactic and idiomatic conversion, along with the preservation of logic, error handling, and performance characteristics. For example, migrating a file from JavaScript to TypeScript is not a mere matter of syntax: it involves inferring and annotating types, typing the results of asynchronous calls, and satisfying the compiler's strict-mode checks.

More advanced LLM agents go beyond surface-level translation to perform code transformation, which may include:

- Refactoring and Optimization: Cleaning up legacy constructs and applying modern best practices during migration.

- API Mapping: Replacing obsolete or platform-specific APIs with their modern equivalents.

- Security Enhancement: Adding missing validation checks or updating encryption methods in compliance with modern standards.

- Architecture-Aware Rewriting: Transforming monolithic code into modular or service-oriented structures.

These tasks require deep contextual awareness, which LLM agents achieve by ingesting large amounts of related code, documentation, and test data before initiating migration.

This chart demonstrates that while LLMs excel at translating between modern languages, they also perform reasonably well in legacy-to-modern transformations—an area of high value in enterprise modernization efforts.
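API mapping, one of the transformations listed above, is often backed by an explicit rule table even when an LLM does the surrounding rewriting. The sketch below uses a handful of real Python 2 to Python 3 standard-library renames; the `map_imports` helper is hypothetical and only handles plain `import x` lines, so treat it as an illustration rather than a complete tool.

```python
import re

# Real Python 2 -> Python 3 standard-library module renames.
MODULE_RENAMES = {
    "urllib2": "urllib.request",
    "ConfigParser": "configparser",
    "Queue": "queue",
    "cPickle": "pickle",
}

# Matches a bare "import module" line, preserving leading indentation.
_IMPORT_RE = re.compile(r"^(\s*import\s+)([A-Za-z_][\w.]*)\s*$")


def map_imports(source: str) -> str:
    """Rewrite renamed imports, aliasing to the old name so call sites still resolve."""
    rewritten = []
    for line in source.splitlines():
        match = _IMPORT_RE.match(line)
        if match and match.group(2) in MODULE_RENAMES:
            old_name = match.group(2)
            new_name = MODULE_RENAMES[old_name]
            line = f"{match.group(1)}{new_name} as {old_name}"
        rewritten.append(line)
    return "\n".join(rewritten)


if __name__ == "__main__":
    print(map_imports("import urllib2\nimport os\n    import Queue"))
```

Aliasing to the old module name keeps the change small and reviewable; a later pass (human or model) can then update call sites where the new module's API actually differs.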

Prompt Engineering and Retrieval-Augmented Generation

The effectiveness of LLMs in code migration is highly dependent on the prompts they receive. Prompt engineering—the practice of crafting inputs that guide model behavior—is especially critical in multi-step migration tasks. Prompts may include:

- Code examples and templates.

- Natural language instructions (e.g., “Refactor this function to use async/await”).

- Inline comments specifying constraints or goals.

- Test cases or expected outputs.

More advanced setups use Retrieval-Augmented Generation (RAG) to provide LLMs with supplementary knowledge at inference time. For example, an LLM tasked with migrating a proprietary codebase might query internal documentation, API references, or change logs to better understand the intent and context of a given code snippet. This integration enhances reliability and reduces hallucination risk.
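The retrieval step described above can be sketched without any particular vector database. The example below assumes a tiny in-memory set of documents and ranks them by identifier overlap with the code snippet, a deliberately simple stand-in for embedding-based search. The names `retrieve` and `build_prompt` and the prompt layout are illustrative assumptions.

```python
import re


def tokens(text: str) -> set[str]:
    """Lower-cased identifier-like tokens found in the text."""
    return set(re.findall(r"[a-z_][a-z0-9_]*", text.lower()))


def retrieve(snippet: str, documents: dict[str, str], k: int = 2) -> list[str]:
    """Return the names of up to k documents sharing the most tokens with the snippet."""
    query = tokens(snippet)
    scored = [(len(query & tokens(body)), name) for name, body in documents.items()]
    relevant = [pair for pair in scored if pair[0] > 0]
    # Highest overlap first; ties broken by name for deterministic output.
    ranked = sorted(relevant, key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in ranked[:k]]


def build_prompt(instruction: str, snippet: str, documents: dict[str, str], k: int = 2) -> str:
    """Assemble instruction, retrieved reference material, and the code to migrate."""
    names = retrieve(snippet, documents, k)
    context = "\n\n".join(f"[{name}]\n{documents[name]}" for name in names)
    return f"{instruction}\n\nReference material:\n{context}\n\nCode:\n{snippet}"


if __name__ == "__main__":
    docs = {
        "billing.md": "calc_invoice applies tax_rate after discount",
        "auth.md": "login tokens expire hourly",
    }
    print(build_prompt("Convert this function to Java.",
                       "def calc_invoice(total, tax_rate): ...", docs, k=1))
```

Only documents with some overlap are included, so unrelated material does not crowd the context window.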

Human-in-the-Loop: Collaborative Code Migration

Despite their impressive capabilities, LLMs are not infallible. In complex migration tasks, human oversight remains essential. The best-performing systems adopt a human-in-the-loop (HITL) model, where the LLM suggests transformations, and developers review, validate, and revise the output as needed.

This collaborative approach combines the creative reasoning and experience of human engineers with the scale and efficiency of machine intelligence. Developers focus on high-value tasks—such as interpreting undocumented edge cases or defining system architecture—while LLMs handle mechanical translation and refactoring.

LLM agents represent a transformative evolution in how software engineers approach code migration. By combining deep language understanding with domain-specific expertise, these systems can automate large portions of the migration workflow—accelerating delivery timelines, reducing costs, and improving code quality.

As enterprises increasingly adopt these tools, the role of developers will shift from manual transformers to intelligent supervisors, orchestrating AI agents that perform complex transformations with remarkable fluency. In the following section, we will examine the architectural components of these AI-assisted systems, exploring how they integrate into development pipelines and enable scalable modernization strategies.

Architecture of AI-Assisted Code Migration Systems

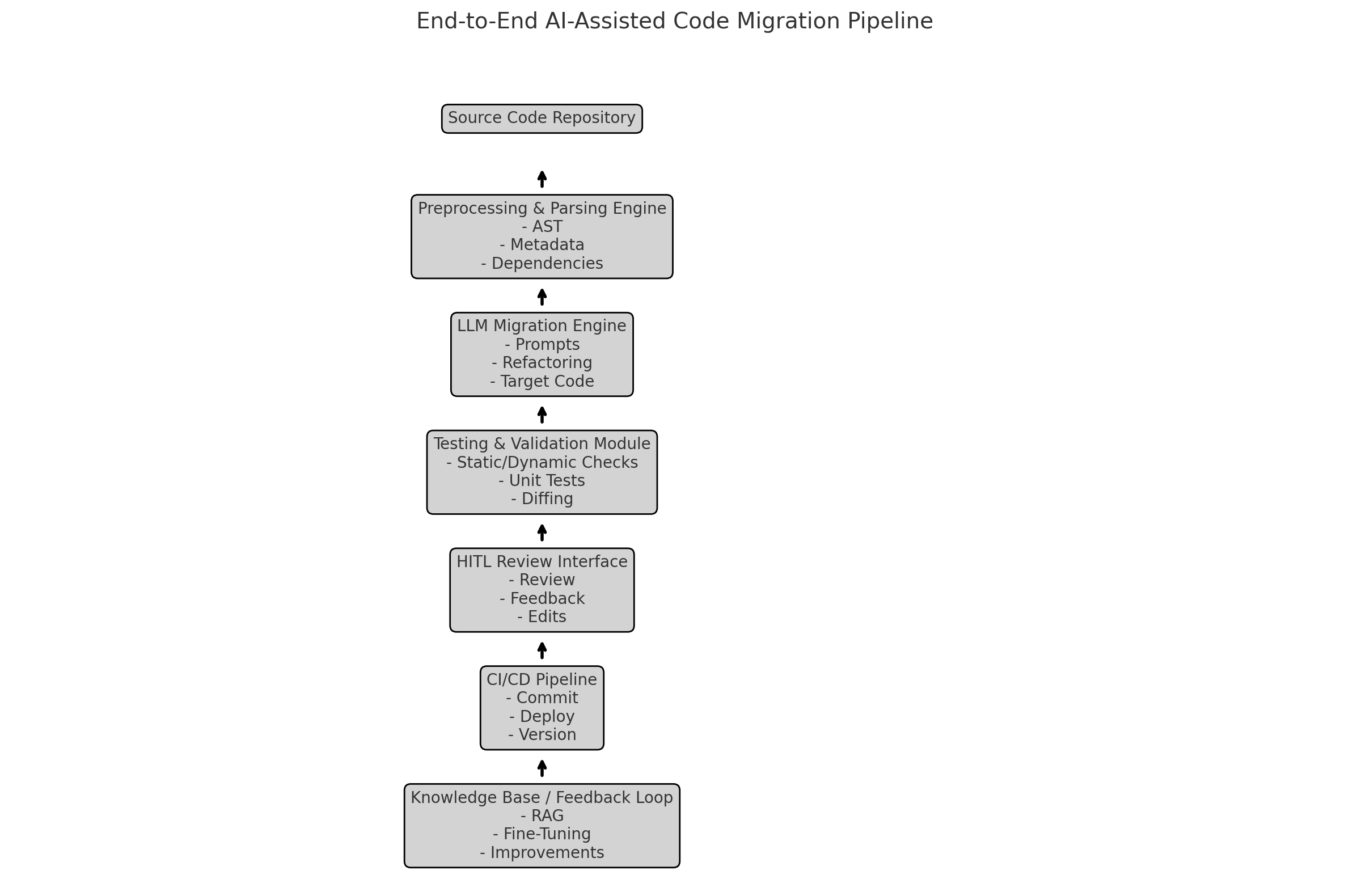

The success of AI-assisted code migration does not hinge on language models alone. Instead, it relies on a carefully orchestrated system architecture that combines multiple components into a cohesive, intelligent workflow. These systems are designed not only to translate and refactor code but also to validate, test, and iteratively improve it. This section presents a deep dive into the typical architecture of an AI-assisted code migration system and explores how these components interact to deliver high-accuracy, production-ready results.

Core Architectural Components

AI-assisted code migration systems typically comprise the following core modules:

1. Code Ingestion and Preprocessing Engine

Before migration can occur, the system must first ingest and analyze the source code. This process involves:

- Parsing: The code is parsed into Abstract Syntax Trees (ASTs) or intermediate representations that capture syntactic and semantic information.

- Dependency Analysis: The system maps out interdependencies across files, libraries, and modules.

- Metadata Extraction: Comments, documentation blocks, annotations, and configuration files are extracted to inform migration decisions.

This foundational step is critical for providing the LLM with sufficient context, ensuring that code is not translated in isolation but with awareness of the broader software ecosystem.
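As a rough illustration of the three ingestion steps above, the following sketch uses Python's standard `ast` module on Python source. A real system would need a parser for each legacy language; the `analyze_module` function and the shape of its return value are assumptions made for this example.

```python
import ast


def analyze_module(source: str) -> dict:
    """Parse a module and collect its imports and documentation metadata."""
    tree = ast.parse(source)  # parsing: source text -> abstract syntax tree
    imports: set[str] = set()
    functions: dict[str, str | None] = {}

    for node in ast.walk(tree):
        # Dependency analysis: record every imported module name.
        if isinstance(node, ast.Import):
            imports.update(alias.name for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module:
            imports.add(node.module)
        # Metadata extraction: keep each function's docstring, if any.
        elif isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            functions[node.name] = ast.get_docstring(node)

    return {
        "module_doc": ast.get_docstring(tree),
        "imports": sorted(imports),
        "functions": functions,
    }


if __name__ == "__main__":
    sample = '''"""Billing helpers."""
import decimal
from datetime import date

def total(items):
    """Sum item prices."""
    return sum(items)
'''
    print(analyze_module(sample))
```

The resulting dictionary is the kind of compact context that can be attached to a prompt instead of the full file.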

2. LLM-Powered Migration Engine

At the core of the system lies the large language model agent—responsible for transforming source code into its target form. This engine is tightly integrated with the preprocessing module to receive all relevant context.

Functions include:

- Code Translation: Rewriting code from the source language to the target language while maintaining logic fidelity.

- Transformation Rules: Applying domain-specific rules (e.g., replacing deprecated APIs with modern equivalents).

- Comment and Documentation Generation: Enhancing the translated code with inline explanations and updated comments.

- Prompt Templating: Dynamically generating prompts to steer the LLM toward accurate, style-consistent outputs.

In many architectures, this engine is not a monolithic model but an orchestrator that may call multiple LLMs or tools depending on the task complexity.
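A minimal sketch of prompt templating and orchestration might look like the following. The model call is injected as a plain callable (`call_model`) so that no specific vendor API is assumed; the template wording, task names, and the two-step `migrate` flow are all illustrative.

```python
from string import Template
from typing import Callable

# One template per task; fields are filled in at call time.
PROMPTS = {
    "translate": Template(
        "Translate the following $source_lang code to $target_lang. "
        "Preserve behavior exactly.\n\n$code"
    ),
    "document": Template(
        "Add concise comments to this $target_lang code "
        "without changing its behavior.\n\n$code"
    ),
}


def render_prompt(task: str, **fields: str) -> str:
    """Fill in the template for a task; raises KeyError if a field is missing."""
    if task not in PROMPTS:
        raise ValueError(f"unknown task: {task}")
    return PROMPTS[task].substitute(**fields)


def migrate(code: str, source_lang: str, target_lang: str,
            call_model: Callable[[str], str]) -> str:
    """Orchestrate two model calls: translate first, then document the result."""
    translated = call_model(
        render_prompt("translate", source_lang=source_lang,
                      target_lang=target_lang, code=code)
    )
    return call_model(
        render_prompt("document", target_lang=target_lang, code=translated)
    )
```

Passing the model in as a function keeps the orchestration testable with a stub and makes it straightforward to route different tasks to different models later.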

3. Testing and Validation Module

Once the code has been migrated, it must be validated through automated and manual processes. The validation module performs several critical roles:

- Static Analysis: The code is examined for syntactic correctness, style conformity, and possible security vulnerabilities.

- Automated Unit Testing: Tests are generated or reused to confirm that the migrated code produces correct outputs.

- Semantic Diffing: Comparing the behavior of original and migrated code by simulating execution paths or using symbolic execution tools.

- Benchmarking: Measuring performance differences between the original and translated code.

This module is instrumental in catching hallucinations or regressions introduced by the LLM during migration.
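One lightweight way to approximate semantic diffing is differential testing: run the original and migrated implementations on the same inputs and report any divergence. This is a simpler technique than symbolic execution and only covers the inputs supplied. The sketch below assumes both versions are callable from Python, and the `differential_test` helper is hypothetical.

```python
def _outcome(fn, args):
    """Run fn and capture either its result or the type of exception raised."""
    try:
        return ("ok", fn(*args))
    except Exception as exc:
        return ("raised", type(exc).__name__)


def differential_test(original, migrated, inputs):
    """Return (args, original_outcome, migrated_outcome) for each disagreement."""
    mismatches = []
    for args in inputs:
        expected = _outcome(original, args)
        actual = _outcome(migrated, args)
        if expected != actual:
            mismatches.append((args, expected, actual))
    return mismatches


if __name__ == "__main__":
    # Legacy behavior: integer (floor) division, as in Python 2's "/" on ints.
    legacy_divide = lambda a, b: a // b
    # A naive migration that keeps "/" now performs true division.
    migrated_divide = lambda a, b: a / b

    for mismatch in differential_test(legacy_divide, migrated_divide,
                                      [(6, 3), (7, 2), (1, 0)]):
        print(mismatch)
```

Exceptions are compared by type, so a migration that turns a `ZeroDivisionError` into a silent result would also be flagged.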

4. Human-in-the-Loop (HITL) Review Interface

Despite automation, human oversight remains indispensable. The HITL interface enables developers to:

- Review AI-generated diffs and transformation rationales.

- Accept, reject, or edit proposed changes.

- Provide corrective feedback to refine model behavior over time.

- Annotate business logic that may not be inferable from code alone.

This interface serves as a quality control checkpoint and contributes to continual improvement via fine-tuning loops.
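The review loop above can be sketched with the standard library alone: `difflib.unified_diff` produces the diff a reviewer sees, and a small record captures the decision. The `Review` dataclass, its field names, and the allowed decision values are assumptions for illustration.

```python
import difflib
from dataclasses import dataclass

ALLOWED_DECISIONS = {"accept", "reject", "edit"}


@dataclass
class Review:
    path: str
    diff: str
    decision: str = "pending"
    note: str = ""


def propose(path: str, before: str, after: str) -> Review:
    """Create a pending review containing a unified diff of the proposed change."""
    diff_lines = difflib.unified_diff(
        before.splitlines(keepends=True),
        after.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    )
    return Review(path=path, diff="".join(diff_lines))


def decide(review: Review, decision: str, note: str = "") -> Review:
    """Record the reviewer's decision and optional corrective feedback."""
    if decision not in ALLOWED_DECISIONS:
        raise ValueError(f"decision must be one of {sorted(ALLOWED_DECISIONS)}")
    review.decision = decision
    review.note = note
    return review


if __name__ == "__main__":
    review = propose("calc.py", "rate = 0.2\n", "rate = 0.25\n")
    print(review.diff)
    print(decide(review, "reject", "Tax rate must stay at 0.2."))
```

Stored decisions and notes are the raw material for the feedback and fine-tuning loops discussed in this section.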

5. CI/CD Integration and Deployment

To facilitate seamless delivery, AI-assisted migration systems are often integrated into Continuous Integration/Continuous Deployment (CI/CD) pipelines. Once validated, code can be automatically:

- Committed to version control systems like Git.

- Deployed to test environments for further evaluation.

- Tagged and versioned for rollback and auditing.

This automation layer is vital for maintaining software agility and governance in large-scale enterprises.

6. Knowledge Base and Feedback Loop

The most advanced systems leverage feedback loops and domain-specific knowledge bases to increase reliability over time. Features include:

- Retrieval-Augmented Generation (RAG): Dynamically pulling contextual documents, internal wikis, and API references during migration.

- Model Fine-Tuning: Continuously training LLMs on corrected outputs or user feedback to reduce repeated errors.

- Error Pattern Recognition: Detecting common migration pitfalls and applying preemptive fixes.

These feedback mechanisms transform the migration system into a self-improving tool, growing more accurate and efficient with each use.
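Error pattern recognition can start as something very simple: counting which kinds of problems reviewers reject most often. The sketch below assumes each feedback record carries a `decision` and a list of reviewer-assigned `tags`; both field names and the tag vocabulary are hypothetical.

```python
from collections import Counter


def recurring_errors(feedback: list[dict], min_count: int = 2) -> list[tuple[str, int]]:
    """Return (tag, count) pairs for rejection tags seen at least min_count times."""
    counts = Counter(
        tag
        for item in feedback
        if item["decision"] == "reject"
        for tag in item["tags"]
    )
    return [(tag, n) for tag, n in counts.most_common() if n >= min_count]


if __name__ == "__main__":
    history = [
        {"decision": "reject", "tags": ["integer-division", "missing-null-check"]},
        {"decision": "accept", "tags": []},
        {"decision": "reject", "tags": ["integer-division"]},
        {"decision": "reject", "tags": ["date-format"]},
    ]
    print(recurring_errors(history))
```

Tags that recur can then be turned into prompt constraints or automated checks, so the same mistake is caught before it reaches a reviewer again.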

Real-World Use Cases and Industry Applications

While the theoretical benefits of AI-assisted code migration are significant, their full value is most apparent in real-world applications. Enterprises, startups, government agencies, and open-source communities have begun to deploy large language model (LLM) agents to accelerate software modernization, reduce costs, and overcome skill shortages. This section examines practical implementations across various domains, illustrating how AI-powered systems are reshaping software development and maintenance at scale.

1. Enterprise Legacy Modernization

Many large enterprises—particularly those in finance, telecommunications, and insurance—operate extensive legacy systems written in COBOL, PL/1, or assembly-level languages. These systems, while stable and deeply integrated, are increasingly difficult to maintain due to the dwindling number of skilled legacy developers and rising infrastructure costs.

Use Case: Banking Sector

A multinational bank initiated a major digital transformation project to migrate its core banking platform from COBOL to Java. The bank employed an AI-assisted migration platform powered by a fine-tuned LLM trained on banking-specific logic patterns. The system was able to:

- Parse and segment decades-old COBOL programs into manageable functions.

- Translate logic into modern Java equivalents while maintaining financial accuracy.

- Suggest and auto-generate unit tests for each migrated module.

The human-in-the-loop framework allowed domain experts to validate critical business logic before deployment. This approach accelerated the migration timeline by over 60% and reduced manual review costs by nearly 40%.

2. Startups and Agile Teams: Accelerated Multilingual Deployment

Startups often operate under tight resource constraints but require agility to deliver features across multiple platforms and languages. LLM agents allow them to reuse logic written in one language and repurpose it for others with minimal developer intervention.

Use Case: SaaS Startup Building Cross-Platform Integrations

A mid-sized SaaS provider developing a productivity platform needed to support both Python-based APIs and a TypeScript front end. Traditionally, this would require separate teams or extensive rewrites. Instead, they utilized an LLM agent trained on both language patterns to:

- Automatically convert backend Python modules into corresponding TypeScript interfaces.

- Identify type mismatches and suggest appropriate casting or transformation strategies.

- Generate OpenAPI specs and documentation in parallel.

This allowed the team to reduce inter-team dependency and ship new integrations 2.5x faster than previously projected.
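The kind of Python-to-TypeScript interface generation described in this use case can be illustrated deterministically for simple cases. The sketch below maps a Python dataclass with primitive fields to a TypeScript interface; it is not the startup's actual tooling, and the `to_ts_interface` helper, the type table, and the `Invoice` example are assumptions.

```python
from dataclasses import dataclass, fields
from typing import get_type_hints

# Minimal mapping from Python primitives to TypeScript types.
TS_TYPES = {int: "number", float: "number", str: "string", bool: "boolean"}


def to_ts_interface(cls) -> str:
    """Emit a TypeScript interface for a dataclass with primitive fields."""
    hints = get_type_hints(cls)
    lines = [f"export interface {cls.__name__} {{"]
    for field in fields(cls):
        # Unmapped types become "unknown" so a reviewer can resolve them by hand.
        ts_type = TS_TYPES.get(hints[field.name], "unknown")
        lines.append(f"  {field.name}: {ts_type};")
    lines.append("}")
    return "\n".join(lines)


@dataclass
class Invoice:
    id: int
    customer: str
    total: float
    paid: bool


if __name__ == "__main__":
    print(to_ts_interface(Invoice))
```

Emitting `unknown` for anything outside the table is a conservative choice: it surfaces the type mismatches mentioned above instead of guessing at them.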

3. Government and Public Sector Initiatives

Government institutions worldwide are burdened with aging systems that manage critical operations like taxation, healthcare, and social security. These systems are often brittle and incompatible with modern cloud-first architectures.

Use Case: Government Cloud Migration Initiative

A North American government agency leveraged AI-assisted code migration tools to refactor on-prem applications written in .NET Framework into .NET Core for cloud deployment. Using an LLM agent:

- The migration engine identified obsolete Windows-specific APIs.

- It provided alternatives suitable for Linux-based containers.

- Semantic diff tools validated that workflows and UI logic were preserved.

By embedding security policies and compliance rules into the prompt templates, the system ensured that migrated code met stringent regulatory standards. This significantly reduced the risk of introducing vulnerabilities during migration.

4. Open-Source Communities and Ecosystem Maintenance

Open-source libraries and frameworks often suffer from outdated dependencies and require rework to remain relevant across evolving ecosystems. LLM agents are increasingly being used to automate these maintenance tasks.

Use Case: Community-Led Framework Modernization

A popular open-source web framework was originally built on Python 2.7, which reached end-of-life in 2020. A group of contributors used an open-weight LLM to:

- Convert thousands of lines of Python 2 code to Python 3 with minimal breakage.

- Generate compatibility patches and validate them against legacy test cases.

- Flag outdated third-party libraries and suggest modern equivalents.

The use of an LLM agent dramatically sped up the modernization effort and helped retain the project's community support.

5. Cybersecurity and Risk Mitigation

Migrating older code often introduces security risks—especially when outdated encryption libraries, hardcoded credentials, or unsafe I/O operations are carried forward. LLM agents, when paired with security-aware training data, can detect and correct these vulnerabilities during the migration process.

Use Case: Secure Refactoring in Healthcare

A healthcare software company used an LLM-assisted system to migrate an electronic medical record (EMR) application to a cloud-native architecture. The model:

- Detected hardcoded authentication tokens in legacy JavaScript files.

- Suggested secure replacements using OAuth2 and environment variables.

- Annotated and encrypted sensitive data fields in compliance with HIPAA regulations.

This proactive security hardening saved the company from a costly manual audit and ensured regulatory readiness for the new platform.
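Detection of hardcoded credentials, the first step in this use case, is often done with pattern matching before any model is involved. The sketch below is a simplified heuristic written for illustration: the regular expression, the 8-character threshold, and the `find_hardcoded_secrets` helper are assumptions, and real scanners use far richer rule sets.

```python
import re

SECRET_RE = re.compile(
    r"""
    \b(\w*(?:token|secret|password|api_?key)\w*)  # suspicious identifier
    \s*[:=]\s*                                    # assignment or object key
    (["'])([^"']{8,})\2                           # quoted literal, 8+ chars
    """,
    re.IGNORECASE | re.VERBOSE,
)


def find_hardcoded_secrets(source: str) -> list[tuple[int, str]]:
    """Return (line_number, identifier) for each suspected hardcoded secret.

    The secret value itself is deliberately not returned, so findings can be
    logged without leaking credentials.
    """
    findings = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        for match in SECRET_RE.finditer(line):
            findings.append((line_no, match.group(1)))
    return findings


if __name__ == "__main__":
    legacy_js = (
        'const authToken = "abc123def456";\n'
        "const token = process.env.API_TOKEN;\n"
    )
    print(find_hardcoded_secrets(legacy_js))
```

The second line of the sample reads the token from an environment variable, which is the replacement pattern suggested above, and is therefore not flagged.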

Impact on Developer Productivity and Team Dynamics

One of the most profound effects of LLM-assisted code migration is on developer productivity and team dynamics. Developers are increasingly able to focus on architectural decisions, business logic, and user experience rather than repetitive syntactic conversions. Furthermore:

- Junior developers can contribute more confidently with AI-powered suggestions guiding their work.

- Senior engineers can delegate routine tasks, freeing time for design reviews and long-term planning.

- Cross-functional teams benefit from better code visibility, as LLMs often generate readable documentation and summaries during migration.

Enterprises report improved onboarding experiences, faster iteration cycles, and higher overall satisfaction among development teams adopting these tools.

Challenges in Real-World Implementation

Despite the success stories, deploying LLM agents in production code migration initiatives is not without challenges:

- Hallucinations and Logical Errors: LLMs may generate syntactically correct but logically flawed code. Human validation remains essential.

- Training Biases: Models fine-tuned on open-source data may not account for proprietary APIs or industry-specific standards.

- Tooling and Infrastructure Gaps: Enterprises must invest in integrating LLM agents into their CI/CD pipelines, developer environments, and security audit tools.

These challenges are surmountable but require planning, stakeholder alignment, and iterative testing to ensure consistent outcomes.

Real-world use cases across industries demonstrate the tangible value of LLM-assisted code migration. Whether accelerating legacy modernization in banking, enabling multi-language agility in startups, or securing cloud migration in healthcare, these tools are changing how software evolves. By enhancing speed, precision, and scalability, AI agents are not only reducing technical debt; they are also redefining the role of software engineers in the modernization journey.

Limitations, Risks, and the Future

Despite the significant promise and tangible benefits of AI-assisted code migration powered by large language model (LLM) agents, this technological paradigm is not without its limitations and risks. Organizations considering adoption must weigh these factors carefully and implement robust governance frameworks to ensure successful and sustainable outcomes. In this final section, we critically assess the current challenges, explore mitigation strategies, and offer a forward-looking perspective on how this space is expected to evolve.

Current Limitations of AI-Assisted Code Migration

1. Semantic Ambiguity and Hallucinations

One of the most persistent issues with LLMs is their tendency to "hallucinate"—that is, to generate code that appears correct syntactically but fails semantically. During migration, this can manifest in subtle ways:

- Logical inconsistencies in conditional statements or loops.

- Misinterpretation of domain-specific variables and abbreviations.

- Inappropriate use of APIs that are deprecated or misaligned with platform constraints.

Even when such hallucinations are infrequent, they pose serious risks in safety-critical industries such as finance, aviation, and healthcare, where a single misstep can lead to cascading failures.

2. Context Limitations and Token Constraints

Even with advancements in context window sizes, most LLMs are still bounded by input token limitations. This makes it challenging to ingest and reason over large-scale codebases comprising hundreds of files, nested dependencies, and multi-layered logic. In practice, this often requires chunking strategies or hierarchical prompting—approaches that introduce additional complexity and may fragment logical continuity.
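A chunking strategy of the kind mentioned above can split on syntactic boundaries rather than arbitrary offsets, so that no function is cut in half. The sketch below does this for Python source with the standard `ast` module and uses a character budget as a crude stand-in for a token budget; the `chunk_source` helper and that approximation are assumptions.

```python
import ast


def _start_line(node: ast.stmt) -> int:
    """First source line of a statement, including any decorators."""
    decorators = getattr(node, "decorator_list", [])
    return min([node.lineno] + [d.lineno for d in decorators])


def chunk_source(source: str, max_chars: int) -> list[str]:
    """Group top-level statements into chunks of at most max_chars where possible.

    A single statement larger than max_chars is emitted as its own chunk.
    Comments that sit between top-level statements are not preserved.
    """
    tree = ast.parse(source)
    lines = source.splitlines()
    units = [
        "\n".join(lines[_start_line(node) - 1 : node.end_lineno])
        for node in tree.body
    ]

    chunks: list[str] = []
    current = ""
    for unit in units:
        candidate = f"{current}\n\n{unit}" if current else unit
        if current and len(candidate) > max_chars:
            chunks.append(current)  # budget exceeded: close the current chunk
            current = unit
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    sample = "import os\n\ndef a():\n    return 1\n\ndef b():\n    return 2\n"
    for index, chunk in enumerate(chunk_source(sample, max_chars=35)):
        print(f"--- chunk {index} ---\n{chunk}")
```

Each chunk can then be migrated in its own prompt, typically alongside a shared summary of imports and signatures to limit the loss of continuity noted above.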

3. Incomplete Migration Output

LLM agents often excel at translating core logic but may fall short when handling:

- Build configuration files (e.g., Makefile, Dockerfile, pom.xml).

- Environment-specific settings or system-level integrations.

- Database migrations involving schema transformations and data integrity checks.

This partial automation means developers must still invest time in stitching together the migrated artifacts into a working whole, potentially offsetting some of the efficiency gains.

4. Lack of Interpretability and Explainability

Most LLMs operate as black boxes, generating output without offering detailed justifications or explanations. For engineers evaluating transformed code, this opacity can hinder debugging, trust, and adoption. Although some systems provide “explanation layers,” these are often rudimentary and not contextually rich.

Operational Risks and Governance Challenges

1. Data Privacy and IP Leakage

Using commercial LLM APIs (e.g., OpenAI’s Codex or Anthropic’s Claude) can raise data privacy and intellectual property concerns, especially when dealing with sensitive or proprietary code. Without strict governance, there is a risk of:

- Unintended data exposure during API calls.

- Reverse engineering of proprietary logic by model providers or malicious actors.

- Licensing issues when models trained on open-source data generate copyrighted patterns.

Organizations must evaluate self-hosted, open-weight alternatives or deploy enterprise-grade access controls to mitigate these risks.
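One simple control that can sit in front of any external API call is a redaction pass over the source text. The sketch below is an assumption-laden illustration rather than a complete safeguard: the pattern list and the `redact_secrets` helper are hypothetical, and they only catch quoted literals assigned to a few obviously named variables.

```python
import re

# Hypothetical pattern: a secret-looking name, an assignment operator,
# and a quoted literal. Real projects would need a broader rule set.
SECRET_ASSIGNMENT = re.compile(
    r"(password|passwd|secret|api_key|token)(\s*[:=]\s*)(['\"])(.*?)\3",
    re.IGNORECASE,
)


def redact_secrets(source: str) -> str:
    """Replace quoted secret values with a placeholder before the text leaves the network."""
    return SECRET_ASSIGNMENT.sub(
        lambda m: f"{m.group(1)}{m.group(2)}{m.group(3)}<REDACTED>{m.group(3)}",
        source,
    )


snippet = 'API_KEY = "abc123"\ntimeout = 30'
print(redact_secrets(snippet))
```

A pass like this reduces accidental exposure of credentials, but it does nothing for proprietary logic itself, which is why self-hosted models or contractual controls remain relevant.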

2. Security and Compliance Drift

Automated migration systems may inadvertently weaken security postures by:

- Omitting existing validation and sanitization steps.

- Misapplying encryption or authentication mechanisms.

- Failing to propagate security policies into the new codebase.

Compliance frameworks such as GDPR, HIPAA, or PCI-DSS often require meticulous documentation and evidence of security best practices—something AI-generated code may not provide without explicit integration of compliance-aware modules.

Mitigation Strategies

While these limitations are non-trivial, they can be effectively managed through a set of best practices:

- Human-in-the-Loop Review: Mandatory developer validation of all migrated outputs helps catch functional and semantic errors before they reach production.

- Layered Validation Pipelines: Static analysis, dynamic testing, semantic diffing, and security scanning should be applied post-migration.

- Custom Prompt Engineering: Tailoring prompts to incorporate organizational coding standards, architectural constraints, and migration rules reduces variance in output quality.

- Model Fine-Tuning: Fine-tuning LLMs on internal codebases can improve alignment with enterprise-specific logic and terminology.

- Adoption of Explainability Tools: Emerging LLM wrappers and interpreters can generate rationales, comparisons, and confidence scores to aid developer decision-making.
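As a rough illustration of a layered validation pipeline, the sketch below chains two checks: a syntax check, then a behavioural comparison between the legacy and migrated implementations on sample inputs. It assumes the migration target is Python, and every name in it (`check_syntax`, `check_equivalence`, `validate_migration`, the two divide functions) is hypothetical. Real pipelines would add static analysis and security scanning as further layers.

```python
import ast
from typing import Callable, Iterable


def check_syntax(source: str) -> list[str]:
    """Layer 1: does the migrated source parse at all?"""
    try:
        ast.parse(source)
        return []
    except SyntaxError as exc:
        return [f"syntax error at line {exc.lineno}: {exc.msg}"]


def check_equivalence(legacy: Callable, migrated: Callable, cases: Iterable[tuple]) -> list[str]:
    """Layer 2: do both implementations agree on the sample inputs?"""
    failures = []
    for args in cases:
        expected, actual = legacy(*args), migrated(*args)
        if expected != actual:
            failures.append(f"mismatch for {args!r}: expected {expected!r}, got {actual!r}")
    return failures


def validate_migration(source: str, legacy: Callable, migrated: Callable, cases: Iterable[tuple]) -> dict[str, list[str]]:
    """Run the layers in order and stop at the first layer that reports problems."""
    report = {"syntax": check_syntax(source)}
    if report["syntax"]:
        return report
    report["equivalence"] = check_equivalence(legacy, migrated, cases)
    return report


def legacy_divide(a: int, b: int) -> int:
    # Stand-in for legacy behaviour: truncates toward zero, as Java integer division does.
    return int(a / b)


def migrated_divide(a: int, b: int) -> int:
    # Naive translation: Python's // rounds toward negative infinity.
    return a // b


report = validate_migration(
    "def divide(a, b):\n    return a // b\n",
    legacy_divide,
    migrated_divide,
    cases=[(7, 2), (-7, 2)],
)
print(report)
```

Here the migrated code parses cleanly and agrees on positive inputs, yet the negative case exposes a rounding difference. This is the kind of subtle semantic mismatch that a syntax check alone would miss.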

The Future of AI-Assisted Code Migration

Despite its infancy, the field of AI-assisted code migration is advancing rapidly. Several trends are shaping its future trajectory:

1. Domain-Specific LLMs

The proliferation of verticalized LLMs, fine-tuned for finance, healthcare, manufacturing, or telecom, should increase the reliability of migrations involving domain-specific systems. Such models would understand not only the programming languages but also the context, regulations, and business rules underlying the code.

2. Autonomous Multi-Agent Systems

Instead of a single LLM handling all tasks, future systems may comprise multi-agent frameworks, where specialized agents handle distinct responsibilities:

- One agent for parsing and analysis.

- Another for transformation and rewriting.

- A third for validation and testing.

- A meta-agent for orchestration and decision-making.

These agent-based architectures promise greater modularity, traceability, and fault isolation.
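The division of labour described above can be sketched with plain functions standing in for the agents. Everything here is a hypothetical placeholder: in a real system each agent would wrap an LLM call or an analysis tool, and the toy `var` to `let` rewrite exists only to give the pipeline something to do.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class MigrationState:
    source: str
    analysis: str = ""
    migrated: str = ""
    issues: list[str] = field(default_factory=list)
    log: list[str] = field(default_factory=list)


Agent = Callable[[MigrationState], MigrationState]


def analysis_agent(state: MigrationState) -> MigrationState:
    # Placeholder analysis: a real agent would parse the code and map dependencies.
    state.analysis = f"{len(state.source.splitlines())} line(s) to migrate"
    return state


def transform_agent(state: MigrationState) -> MigrationState:
    # Placeholder rewrite standing in for an LLM-driven transformation.
    state.migrated = state.source.replace("var ", "let ")
    return state


def validation_agent(state: MigrationState) -> MigrationState:
    # Placeholder check: flag any legacy construct that survived the rewrite.
    if "var " in state.migrated:
        state.issues.append("legacy 'var' declaration still present")
    return state


PIPELINE: list[tuple[str, Agent]] = [
    ("analysis", analysis_agent),
    ("transformation", transform_agent),
    ("validation", validation_agent),
]


def orchestrate(source: str, agents: list[tuple[str, Agent]]) -> MigrationState:
    """Meta-agent: run each specialist in order and stop as soon as one reports issues."""
    state = MigrationState(source=source)
    for name, agent in agents:
        state = agent(state)
        state.log.append(name)
        if state.issues:
            break
    return state


result = orchestrate("var x = 1;", PIPELINE)
print(result.migrated, result.log)
```

Because each agent only reads and writes the shared state, the `log` field records which stage ran last, which is where the traceability and fault isolation mentioned above come from.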

3. Interactive Migration Assistants

Next-generation LLM tools will likely include interactive interfaces, where developers can ask questions about the transformation process, inspect decision trees, and suggest corrections in real time. This fusion of explainability and collaboration will foster greater trust and usability.

4. Self-Healing Migration Pipelines

Future systems may include self-healing capabilities—automatically retrying failed migrations, learning from previous errors, and adapting transformation logic over time using reinforcement learning techniques.
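A much simpler precursor to this idea is a retry loop that feeds validation errors back into the next attempt. The sketch below shows only that feedback loop, not reinforcement learning. The `transform` and `validate` callables are assumed interfaces, and the two `fake_` functions are stubs included purely so the example runs.

```python
from typing import Callable, Optional


def migrate_with_retries(
    source: str,
    transform: Callable[[str, list[str]], str],
    validate: Callable[[str], list[str]],
    max_attempts: int = 3,
) -> tuple[Optional[str], int]:
    """Retry a migration, passing accumulated validation errors to each new attempt.

    Returns the first candidate that validates cleanly together with the
    attempt number, or (None, max_attempts) if every attempt fails.
    """
    feedback: list[str] = []
    for attempt in range(1, max_attempts + 1):
        candidate = transform(source, feedback)
        errors = validate(candidate)
        if not errors:
            return candidate, attempt
        feedback.extend(errors)
    return None, max_attempts


def fake_transform(source: str, feedback: list[str]) -> str:
    # Stub: only "fixes" the code once it has seen some feedback.
    return source.replace("TODO", "done") if feedback else source


def fake_validate(candidate: str) -> list[str]:
    # Stub: reject any candidate that still contains a TODO marker.
    return ["unresolved TODO"] if "TODO" in candidate else []


migrated, attempts = migrate_with_retries("x = TODO", fake_transform, fake_validate)
print(migrated, attempts)
```

Capping the number of attempts matters in practice: a pipeline that retries without limit can hide systematic failures and run up inference costs.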

5. Integration with AI Observability Platforms

As organizations expand AI usage in code migration and beyond, AI observability—monitoring, alerting, and logging AI-generated outputs—will become essential. Integrated dashboards will help teams track model accuracy, output drift, and user feedback at scale.

AI-assisted code migration with LLM agents represents a significant shift in how software systems evolve and modernize. While not without limitations and risks, the potential gains in speed, scalability, and cost-effectiveness are substantial. With proper validation frameworks, domain-specific fine-tuning, and interactive oversight, these systems can enable enterprises to safely transition away from legacy constraints and embrace modern architectures with confidence.

The future holds immense promise: smarter agents, deeper domain alignment, interactive tooling, and autonomous feedback loops. As with all powerful tools, the key lies in judicious adoption, continuous improvement, and a collaborative approach that combines the strengths of humans and machines.

Conclusion

As organizations confront mounting pressure to modernize legacy software systems, reduce technical debt, and enhance agility, the limitations of manual code migration have become increasingly apparent. Labor-intensive, time-consuming, and error-prone, traditional migration methods are no longer scalable in today’s fast-paced digital landscape. In response, the emergence of large language model (LLM) agents offers a compelling new paradigm—one in which code migration is not merely automated, but intelligently orchestrated.

Throughout this post, we have examined the foundational challenges of code migration, from the semantic complexity of transforming legacy logic to the infrastructural demands of integrating modern architectures. We explored how LLMs—trained on vast corpora of multilingual code—are capable of not only translating syntax, but also preserving and adapting logic across contexts. Through specialized system architectures combining parsing engines, validation modules, and human-in-the-loop interfaces, these agents are now capable of executing complex migration workflows at scale.

Real-world use cases across industries—from banking to healthcare, startups to government agencies—underscore the transformative potential of LLM-assisted migration. These implementations demonstrate significant improvements in delivery timelines, code quality, and operational resilience. Yet, this transformation is not without its caveats. Risks such as hallucinated outputs, security regressions, and a lack of interpretability must be actively mitigated through robust validation, feedback loops, and governance frameworks.

Looking ahead, the trajectory of AI-assisted code migration points toward even more intelligent, explainable, and autonomous systems. With the advent of domain-specific models, multi-agent architectures, and interactive tooling, the industry is poised to enter an era where code modernization becomes not only faster and more accurate but also more accessible and reliable.

Ultimately, LLM agents will not replace developers; they will empower them. By automating repetitive and mechanical aspects of code migration, these tools enable engineers to focus on higher-order tasks—such as architecture, design strategy, and user experience—that drive business innovation. Organizations that embrace this co-pilot approach today will be best positioned to lead tomorrow’s software ecosystems with confidence, clarity, and technological foresight.

References

- OpenAI Codex – https://openai.com/blog/openai-codex

- Meta Code Llama – https://ai.meta.com/blog/code-llama-large-language-models-for-code

- Hugging Face StarCoder2 – https://huggingface.co/blog/starcoder2

- GitHub Copilot – https://github.com/features/copilot

- DeepCoder Overview (Microsoft Research) – https://www.microsoft.com/en-us/research/project/deepcoder

- Google AI on Code Understanding – https://ai.googleblog.com/2023/03/improving-code-understanding-with-ai.html

- ServiceNow and Hugging Face Collaboration – https://huggingface.co/blog/servicenow-starcoder

- Anthropic Claude for Code – https://www.anthropic.com/index/claude-for-developers

- Papers with Code: Code Translation Benchmarks – https://paperswithcode.com/task/code-translation

- Retool: Migrating Legacy Systems to Modern Infrastructure – https://retool.com/blog/migrating-legacy-systems