OpenAI Elevates ChatGPT with Memory: A Leap Toward Personalized AI Interactions

The Evolution of Conversational AI

The trajectory of artificial intelligence, particularly in the domain of large language models (LLMs), has been marked by rapid innovation, relentless ambition, and continuous refinement. Since the public debut of ChatGPT in late 2022, powered initially by OpenAI's GPT-3.5 and subsequently enhanced through GPT-4, the technology has evolved to become one of the most widely used AI systems globally. It has found applications in writing assistance, education, customer service, software development, and personal productivity, transforming how people interact with computers on a daily basis.

Despite these advancements, one major limitation has persisted throughout the evolution of conversational AI: the absence of memory. Traditional LLMs have been reactive tools, responding intelligently within the confines of a single conversation session but lacking continuity across interactions. This stateless nature meant that users would have to reintroduce themselves, their needs, and their context every time they initiated a new chat. While LLMs have excelled at natural language generation, summarization, reasoning, and pattern recognition, their inability to retain personalized information across sessions rendered them less effective as persistent digital companions.

OpenAI has now taken a significant leap forward by introducing enhanced memory capabilities in ChatGPT. This upgrade fundamentally redefines the way users interact with the system, as it enables the model to remember facts about the user, including names, preferences, past conversations, and ongoing goals. These capabilities are not merely technical enhancements; they represent a shift in how artificial intelligence understands, adapts to, and serves human beings. In essence, ChatGPT is beginning to function not just as a language model but as a context-aware assistant with the potential to grow more intelligent, personalized, and helpful over time.

This memory upgrade is particularly noteworthy in the broader context of AI development for two reasons. First, it marks a transition from purely reactive systems to more proactive and contextually adaptive agents. Second, it bridges a crucial gap between the human expectation of continuity and the machine's former inability to fulfill it. In real-world human communication, memory is indispensable. It allows for deepening relationships, evolving conversations, and cumulative learning. By embedding a form of memory into ChatGPT, OpenAI is aligning artificial interaction more closely with human communication norms.

The implications of this advancement are multifaceted. For individual users, the memory feature enhances convenience and streamlines engagement. For enterprises, it opens new avenues for customer service, employee training, and client-specific personalization. For educators, it offers the possibility of intelligent tutoring systems that adapt to each learner's pace and knowledge gaps. For developers, it means coding assistants that understand the architecture and progress of ongoing projects. All these examples underscore one fundamental truth: memory elevates AI from a mere tool to a more intelligent agent.

However, with this powerful new capability come critical responsibilities. Memory in AI raises inevitable questions around privacy, data ownership, user control, and ethical boundaries. How does the system decide what to remember? How can users see what the model recalls about them? Can memory be modified or erased entirely? OpenAI has addressed these concerns by implementing a transparent and user-controllable memory framework, but public scrutiny and academic discourse on these matters are bound to intensify as usage scales.

This blog post will explore the memory upgrade in ChatGPT in depth. The next section will dissect the core memory features, explain how they function technically, and clarify what users can expect in terms of experience and control. Following that, we will delve into real-world use cases to showcase how memory transforms practical applications across industries. We will also examine the ethical and privacy dimensions associated with persistent memory in AI systems, highlighting the safeguards OpenAI has implemented and the open questions that remain. Finally, we will look ahead to what this development means for the future of AI — both for OpenAI's strategic direction and for the broader trajectory of conversational and autonomous systems.

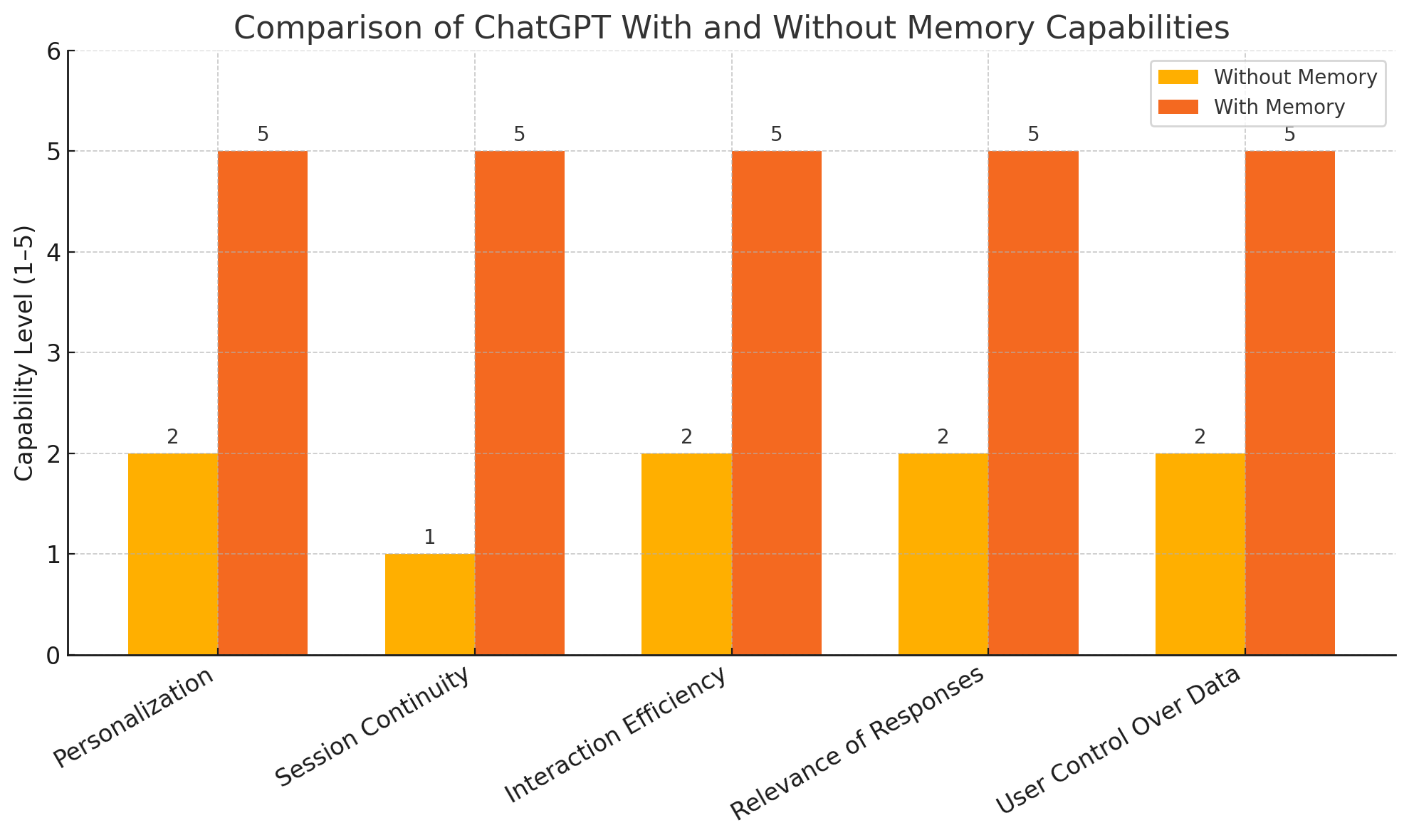

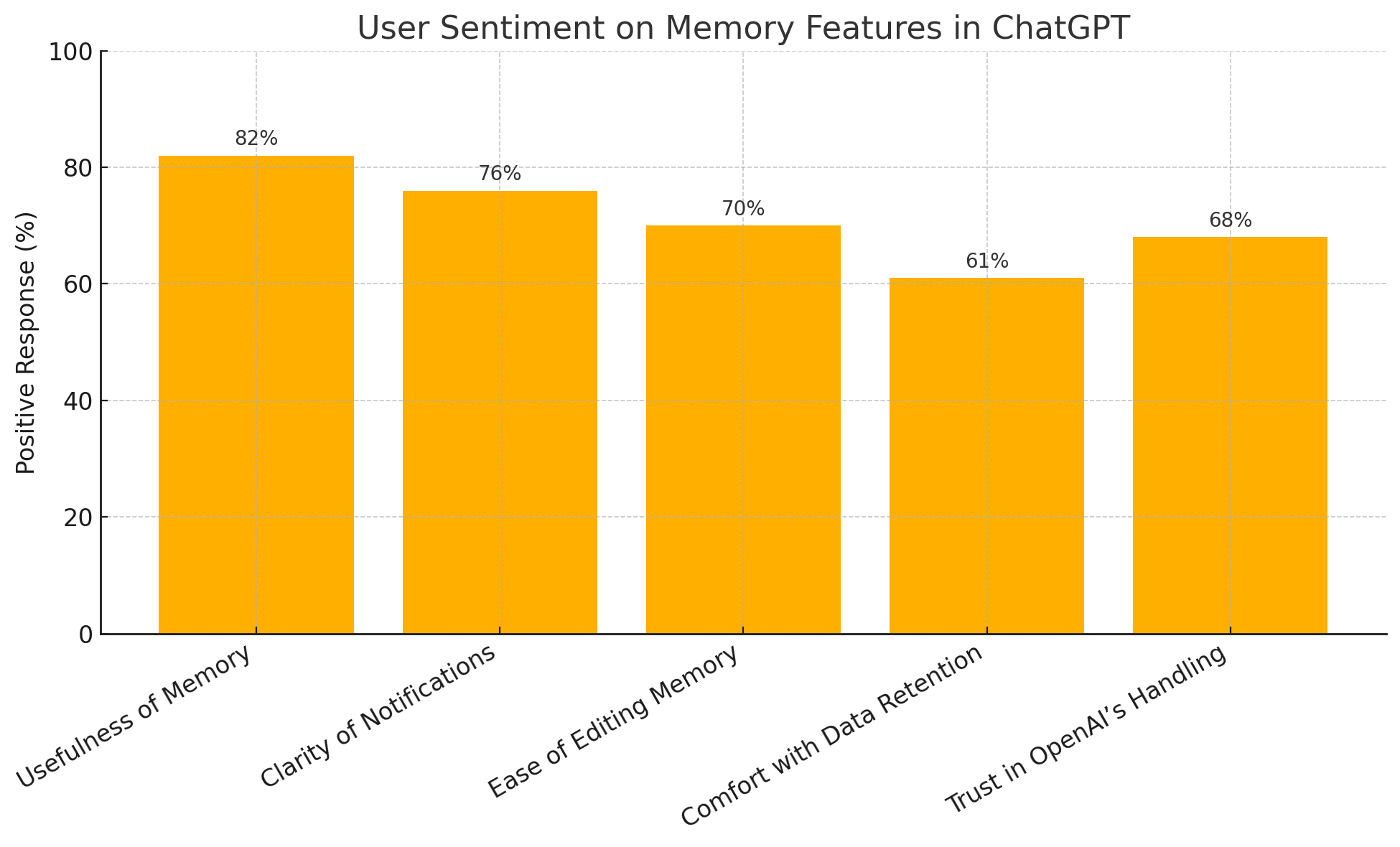

To accompany this discussion, we will include visualizations that provide a comparative analysis of user experience with and without memory functionality, alongside a detailed table that outlines use cases and how specific memory features enhance them.

In sum, OpenAI's decision to endow ChatGPT with memory represents more than a product update — it is a fundamental reimagining of the human-AI interface. It is a deliberate step toward long-term utility, personalization, and interaction fidelity. As we unpack this upgrade, the importance of memory in making AI more natural, intelligent, and human-aligned will become abundantly clear.

Understanding the New Memory Features

The incorporation of memory into ChatGPT represents a pivotal shift in how conversational AI interacts with users over time. While previous versions of ChatGPT demonstrated remarkable capabilities in language understanding and generation, each session remained isolated and ephemeral. The system would forget everything about the user once the conversation ended, regardless of how many times the user had engaged with the tool. With the new memory feature, this paradigm has changed dramatically. ChatGPT can now remember factual details about users across interactions, enabling it to deliver a far more personalized and coherent experience.

What Is Memory in ChatGPT?

In human cognition, memory allows individuals to store, retrieve, and integrate information across time, creating continuity in thought, behavior, and relationships. In the context of ChatGPT, memory refers to the model’s ability to store factual or contextually significant information about a user and retrieve it in future conversations. Unlike temporary context windows — where information from earlier turns in the same session can be used to generate appropriate responses — memory in ChatGPT spans sessions and persists even after the user has logged out or started a new chat thread.

This memory can include:

- The user’s name or preferred form of address

- Long-term goals and interests

- Communication preferences (e.g., tone or complexity)

- Past topics of conversation

- Project details or user-defined priorities

The model does not “remember” in the biological or psychological sense. Rather, it stores specific, structured data points derived from prior interactions in a secure memory store. These memory items are created automatically, based on the model’s judgment of relevance and usefulness. Users can switch the feature off or delete individual items at any time.
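
To make the idea of "structured data points" concrete, here is a minimal Python sketch of what a memory item and its store might look like. The class names, fields, and the `enabled` switch are all hypothetical illustrations, not OpenAI's actual schema, which has not been published in this form.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from itertools import count
from typing import Dict, List, Optional

_ids = count(1)  # simple id generator for this sketch


@dataclass
class MemoryItem:
    """One discrete fact remembered about a user (hypothetical shape)."""
    text: str       # e.g. "Prefers concise explanations"
    category: str   # e.g. "preference", "goal", "project"
    item_id: int = field(default_factory=lambda: next(_ids))
    created_at: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class MemoryStore:
    """Per-user store with a global on/off switch and per-item deletion."""

    def __init__(self) -> None:
        self.enabled = True
        self._items: Dict[int, MemoryItem] = {}

    def remember(self, text: str, category: str) -> Optional[MemoryItem]:
        # When memory is switched off, nothing new is stored.
        if not self.enabled:
            return None
        item = MemoryItem(text=text, category=category)
        self._items[item.item_id] = item
        return item

    def forget(self, item_id: int) -> bool:
        # Returns True only if an item was actually deleted.
        return self._items.pop(item_id, None) is not None

    def items(self) -> List[MemoryItem]:
        return list(self._items.values())
```

Keeping each fact as its own record, rather than as one free-form profile, is what makes per-item viewing and deletion straightforward in a design like this.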

Short-Term Context vs. Long-Term Memory

To fully appreciate the significance of this enhancement, it is helpful to distinguish between short-term context and long-term memory in LLM systems:

- Short-term context refers to the window of text the model processes at once. In GPT-4 Turbo and GPT-4o, this context can span up to 128,000 tokens (the original GPT-4 release was limited to 8,192 or 32,768 tokens), enabling the model to understand and respond coherently to large documents, long conversations, or complex instructions within a single session.

- Long-term memory, as now implemented in ChatGPT, is separate from the immediate context window. It persists across sessions and is structured to store discrete items that have been inferred to be important for future interactions. For example, if a user mentions they are a high school teacher looking for lesson plan ideas, the system may store this profession-related information in memory to personalize future suggestions.

This separation is key to preserving both performance and privacy. Short-term context is ephemeral and session-bound, whereas long-term memory is durable but editable, empowering users to decide what the model should remember and for how long.
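
The distinction can be illustrated with a small Python sketch. It assumes a hypothetical `build_context` helper and a crude whitespace token count (real systems use a model-specific tokenizer). Persistent memory lines are always included, while conversation turns are kept only as far back as the token budget allows.

```python
from typing import List


def count_tokens(text: str) -> int:
    # Crude stand-in: real systems use a model-specific tokenizer.
    return len(text.split())


def build_context(memories: List[str], turns: List[str], budget: int) -> List[str]:
    """Return memory lines plus the newest turns that fit in `budget` tokens."""
    context = [f"[memory] {m}" for m in memories]
    used = sum(count_tokens(line) for line in context)

    kept: List[str] = []
    # Walk backwards so the most recent turns are kept first.
    for turn in reversed(turns):
        cost = count_tokens(turn)
        if used + cost > budget:
            break  # older turns fall out of the window
        kept.append(turn)
        used += cost

    return context + list(reversed(kept))
```

In this sketch the oldest turns are the first to be dropped, but the remembered fact about the user survives regardless of how long the conversation grows. The sketch does not handle the case where the memory lines alone exceed the budget.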

How Memory Works in Practice

OpenAI has designed the memory system to be transparent, non-intrusive, and user-centric. When the model decides to remember a piece of information, it informs the user through a visible notification. Users can then view, modify, or delete individual memory items at any time through the settings interface.

For instance, if the user says, “I’m working on a startup that builds solar-powered drones,” the model may respond:

"Got it. I’ll remember that you’re working on a solar-powered drone startup so I can better assist you in the future."

Users can also prompt the model directly with memory-related commands such as:

- “What do you remember about me?”

- “Forget that I’m working on a startup.”

- “Update my preferred tone to formal.”

This level of interaction gives users granular control over their data while enabling the model to build a knowledge base that supports more meaningful engagement over time.

Technical Overview: How It Works

The implementation of memory in ChatGPT draws on foundational techniques in modern AI systems, particularly embedding-based retrieval, vector stores, and metadata indexing. While the specifics are abstracted away from the end user, the general architecture can be explained as follows:

- Memory Extraction: During a conversation, the model identifies salient facts that could enhance future interactions. These facts are transformed into structured data — often in the form of key-value pairs or semantic tags.

- Embedding and Storage: Each memory item is encoded into a vector — a mathematical representation of its semantic content — and stored in a secure vector database. This allows for efficient retrieval based on similarity and contextual relevance.

- Memory Recall: When a new conversation begins, the system checks the user’s memory store and retrieves relevant items to incorporate into the initial context. This process ensures the model has access to personalized information from the outset.

- Dynamic Updates: Memory is not static. It evolves as users provide new inputs. If a user’s goals or preferences change, the model can replace or augment prior memory entries accordingly.

- Auditability and Control: Every memory item is accessible through a user dashboard. OpenAI has prioritized transparency by allowing users to view what has been remembered, receive notifications about memory updates, and manage these entries directly.

This architecture balances personalization with security, and it ensures that memory-enhanced capabilities do not compromise the performance or neutrality of the underlying model.
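
The steps above can be sketched in a few dozen lines of Python. This is a toy illustration under stated assumptions, not OpenAI's implementation: the `embed` function is a bag-of-words stand-in for a learned embedding model, and `VectorMemory`, `upsert`, `recall`, and `audit` are hypothetical names chosen to mirror the storage, recall, update, and auditability steps.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding"; a real system would call a learned
    # embedding model and get a dense vector instead.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class VectorMemory:
    def __init__(self) -> None:
        # key -> (fact text, embedding of that text)
        self._items: Dict[str, Tuple[str, Counter]] = {}

    def upsert(self, key: str, fact: str) -> None:
        # Dynamic update: writing to an existing key replaces the old entry.
        self._items[key] = (fact, embed(fact))

    def recall(self, query: str, k: int = 2) -> List[str]:
        # Rank stored facts by similarity to the query; drop non-matches.
        q = embed(query)
        scored = [(cosine(q, vec), fact) for fact, vec in self._items.values()]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [fact for score, fact in scored[:k] if score > 0]

    def audit(self) -> Dict[str, str]:
        # Everything stored is visible to the user in plain text.
        return {key: fact for key, (fact, _) in self._items.items()}
```

A production system would swap the word-count vectors for dense embeddings and the dictionary for a vector database, but the retrieve-by-similarity logic would keep the same overall shape.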

Who Has Access to Memory and How It Rolls Out

As of April 2025, OpenAI has begun rolling out memory capabilities to a broad segment of ChatGPT users, including those on the free tier as well as subscribers to ChatGPT Plus. The feature is being deployed incrementally, and users will receive a notification when memory becomes available on their account.

Enterprise customers and developers building on OpenAI's API may also see tailored memory capabilities, depending on their use case. For instance, an enterprise deployment may include memory domains restricted to professional tasks or project-specific data rather than personal user traits.

Notably, memory is optional. While memory can enhance usability significantly, OpenAI has emphasized the importance of user consent. Users are not required to use memory, and they can turn it off entirely through their settings if they prefer a stateless interaction model.

The comparison chart accompanying this post offers a side-by-side view of the experience with and without memory, underscoring the utility and personalization benefits that memory introduces.

Real-World Use Cases and Benefits

The integration of memory capabilities into ChatGPT marks a significant evolution in the practical applicability of conversational AI. While prior versions of ChatGPT already offered valuable assistance in a range of domains—from education to professional services—the absence of persistent memory limited its effectiveness in scenarios requiring continuity, personalization, or long-term contextual understanding. The introduction of memory now enables ChatGPT to operate more like a human assistant, capable of adapting to the user over time and delivering tailored value across diverse settings.

This section explores how memory-enhanced ChatGPT functions in real-world contexts, emphasizing specific domains where the benefits of memory are most pronounced. Through these examples, it becomes evident that memory does not merely optimize existing interactions; it fundamentally transforms the role of AI in daily tasks, professional environments, and long-term engagements.

Personal Productivity and Lifestyle Management

One of the most immediate and intuitive applications of ChatGPT’s memory is in the domain of personal productivity. Users frequently rely on ChatGPT for goal tracking, brainstorming, task planning, and content generation. However, prior to the memory upgrade, the absence of continuity required users to restate their objectives in each session.

With memory, ChatGPT can now recall previously mentioned goals and preferences, enabling more coherent and proactive support. For example, if a user tells ChatGPT that they are preparing for a graduate school application, the model can remember details such as application deadlines, preferred programs, writing style preferences for the personal statement, and standardized test plans. This allows ChatGPT to function as a pseudo-project manager, capable of checking in on progress, reminding users of milestones, and offering relevant content suggestions as the application cycle progresses.

Similarly, users who engage ChatGPT for wellness, fitness, or dietary advice can benefit from longitudinal tracking. The model can remember the user’s dietary restrictions, workout goals, and sleep targets, thereby enabling cumulative, context-aware interactions that mimic the consistency of a human coach.

Education and Personalized Tutoring

Educational applications of ChatGPT have surged, particularly as users increasingly adopt the tool for tutoring, test preparation, language learning, and content review. However, the effectiveness of these use cases was previously hindered by the model’s inability to recall what a learner had already studied or struggled with.

With memory, ChatGPT is now capable of tracking a learner’s progress over time. For instance, a student preparing for the SAT can receive personalized quizzes that reflect areas of past difficulty. The model can also recall vocabulary words the student previously struggled with or explain recurring mathematical errors based on prior sessions. Over time, this leads to more adaptive learning strategies, akin to the functionality provided by intelligent tutoring systems.

In language learning, memory allows ChatGPT to build personalized lesson plans. A user studying Spanish may find the model tracking their vocabulary usage, correcting recurring grammatical mistakes, and gradually increasing the complexity of exercises in response to demonstrated proficiency.
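
As a rough illustration of how remembered difficulties could drive what gets practiced next, the following Python sketch tracks per-topic results and surfaces the weakest areas. The `LearnerProfile` class and its ranking rule are assumptions made for this example; they do not describe how ChatGPT selects material.

```python
from collections import defaultdict
from typing import Dict, List


class LearnerProfile:
    """Hypothetical per-learner record of attempts and errors by topic."""

    def __init__(self) -> None:
        # topic -> [attempts, errors]
        self._stats: Dict[str, List[int]] = defaultdict(lambda: [0, 0])

    def record(self, topic: str, correct: bool) -> None:
        stats = self._stats[topic]
        stats[0] += 1
        if not correct:
            stats[1] += 1

    def weakest_topics(self, n: int = 2) -> List[str]:
        # Rank by error rate, highest first; ties break alphabetically.
        def error_rate(topic: str) -> float:
            attempts, errors = self._stats[topic]
            return errors / attempts

        return sorted(self._stats, key=lambda t: (-error_rate(t), t))[:n]
```

A tutor built on such a profile could draw the next quiz mostly from `weakest_topics()` while still mixing in mastered material for review.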

The broader implication here is a democratization of adaptive learning, whereby users receive tailored instruction regardless of socioeconomic or geographic barriers. This positions memory-enhanced LLMs as powerful tools in the effort to close educational gaps.

Business and Customer Engagement

In professional environments, memory allows ChatGPT to move beyond generic task assistance into the realm of customized service delivery. This is particularly transformative in customer service and enterprise support scenarios.

For customer-facing applications, such as AI-powered chatbots on websites or internal support desks, memory enables the system to remember user history, preferences, and past issues. A returning customer engaging with a ChatGPT-powered agent no longer needs to repeat previous problems or order details. The assistant can recall prior conversations, recommended solutions, and even sentiment history to adjust tone and content accordingly.

Internally, teams can leverage ChatGPT as a collaborative knowledge assistant. If a product manager uses ChatGPT to help draft specifications and track feature priorities across multiple sessions, memory allows the model to maintain continuity in terminology, project phases, and stakeholder preferences. Developers, likewise, benefit from an assistant that remembers architecture decisions, ongoing bugs, or code style conventions. This leads to improved productivity and reduced cognitive load across iterative projects.

Furthermore, in client-facing professions such as consulting, legal advisory, or account management, professionals can use memory-enhanced ChatGPT to remember details about clients, contracts, timelines, and deliverables. This enables consistent and accurate support that feels personalized and informed.

Creative Work and Content Development

Writers, marketers, and designers often engage with ChatGPT to brainstorm ideas, draft content, and refine messaging. However, creative workflows typically span multiple sessions, involving feedback, revision, and stylistic iteration. Prior to memory, users would need to manually reintroduce context and preferences with each session—an inefficient and frustrating experience.

With memory, ChatGPT can now remember a user’s preferred tone (e.g., formal, conversational), formatting choices (e.g., AP style, Markdown), and thematic constraints (e.g., avoid industry jargon). For instance, a novelist using ChatGPT to develop a fictional world can have the model remember characters, plot points, and stylistic choices across sessions, thereby streamlining world-building efforts. Similarly, a content marketer can rely on ChatGPT to maintain brand voice consistency across blog posts, social media captions, and ad copy.

This continuity not only enhances productivity but also improves creative coherence, ensuring that iterative drafts maintain a consistent narrative and structure.

Technical Workflows and Software Development

For developers and data scientists, memory unlocks new dimensions of interaction with ChatGPT. While the model has proven useful in explaining code, debugging, and generating scripts, the statelessness of prior sessions imposed limits. Each session would begin without context, making it difficult for the model to reason across multi-file architectures or long-term development cycles.

With memory, ChatGPT can retain awareness of:

- Project architecture and goals

- Libraries and frameworks in use

- Known bugs and prior solutions

- Preferred coding conventions

This allows the model to act more like a software pair-programmer with continuity, offering suggestions that respect previously established structures and coding patterns. As a result, development teams can use ChatGPT not only as a support tool but as a long-term collaborator that evolves alongside the codebase.

In sum, the memory feature enhances ChatGPT’s utility across a wide range of applications. It allows the model to act not merely as a reactive assistant, but as a proactive and adaptive partner. The transformation is especially pronounced in tasks that require continuity, historical context, or user-specific preferences—traits previously absent in language models. As user expectations evolve, memory ensures that ChatGPT remains responsive, intelligent, and aligned with long-term objectives.

Privacy, Control, and Ethical Implications

The integration of memory into ChatGPT presents a major leap forward in functionality, but it also surfaces critical concerns around privacy, autonomy, and the responsible use of artificial intelligence. As conversational agents evolve into systems that retain long-term knowledge of their users, the dynamics of trust and control become central to the user experience. Unlike ephemeral interactions where no data persists beyond a single session, memory-enhanced systems accumulate user information over time—raising profound questions about transparency, consent, governance, and ethical accountability.

This section examines the frameworks OpenAI has established to manage these challenges, while also reflecting on broader societal and philosophical implications. Memory in AI is not merely a technical feature; it is an ethical interface that must be navigated with deliberate care and clarity.

Transparency and User Consent

OpenAI has made transparency a cornerstone of its memory architecture, aiming to ensure that users are never unaware of what the system remembers and why. When ChatGPT identifies a fact or user preference that could enhance future interactions, it explicitly informs the user that this information will be remembered. This occurs through visible notifications in the chat interface, such as:

"Got it. I’ll remember that you prefer concise explanations."

This proactive disclosure mechanism serves multiple purposes:

- It prevents inadvertent data capture without user awareness.

- It allows users to assess whether the remembered information is accurate or appropriate.

- It establishes a precedent of consent before any persistent data retention occurs.

Moreover, users are empowered to view, edit, or delete their memory entries at any time. A dedicated memory management dashboard provides access to all stored information, offering a degree of auditability uncommon in many digital platforms. Users can prompt the model to recall what it knows about them, correct inaccuracies, or delete specific facts individually. More importantly, memory can be entirely disabled through the settings menu, allowing users to opt out of persistent interactions if they so choose.

This level of user control aligns with contemporary data privacy best practices and reflects a broader shift toward ethical AI design—where functionality is balanced with transparency, user agency, and accountability.

Data Security and Confidentiality

Beyond transparency, the question of data security is paramount. Persistent memory introduces the possibility of sensitive or personally identifiable information being stored in ways that, if not appropriately safeguarded, could be vulnerable to misuse or unauthorized access.

OpenAI has stated that memory is designed to be user-specific, encrypted, and isolated from other accounts. Unlike general training data, memory items are not used to retrain the base model and are stored in a secure memory layer that is logically separated from core system functions. This means that:

- Memory content does not inform future training runs.

- User memory is not accessible to other users or instances.

- System administrators and engineers cannot access memory contents without explicit legal or support-related necessity.

Nevertheless, the existence of persistent memory introduces new vectors for privacy concerns. For example:

- Could memory be subpoenaed in a legal proceeding?

- How long is memory retained by default?

- What mechanisms exist for automatic expiration or purging of stale data?

These questions underscore the need for transparent policies and external audits. As AI systems become more integrated into personal and professional life, users must be confident not only in the functionality of memory but in its compliance with data protection standards such as GDPR, CCPA, and other emerging regulations.
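
One possible answer to the expiration question is a time-based retention rule. The Python sketch below is purely illustrative: the `purge_stale` function and the one-year window in the usage example are hypothetical, and nothing here reflects a published OpenAI retention policy.

```python
from datetime import datetime, timedelta
from typing import Dict


def purge_stale(
    items: Dict[str, datetime], max_age: timedelta, now: datetime
) -> Dict[str, datetime]:
    """Return only the entries last updated within `max_age` of `now`.

    `items` maps each remembered fact to the time it was last updated.
    """
    cutoff = now - max_age
    return {fact: ts for fact, ts in items.items() if ts >= cutoff}
```

For example, calling `purge_stale(items, timedelta(days=365), now)` would drop any fact that has not been refreshed in the past year. Passing `now` explicitly keeps the rule easy to test and audit.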

Ethical Considerations of Persistent AI Memory

From an ethical standpoint, memory transforms ChatGPT from a purely transactional tool into a form of digital persona—a system capable of knowing users in nuanced and enduring ways. This changes the moral calculus of AI use in several key areas:

a. Consent and Manipulation

Persistent memory opens the door to more sophisticated personalization, but also to potential manipulation. If a model remembers a user’s emotional vulnerabilities or belief systems, could that be used to influence decisions subtly? For example, a persuasive AI could tailor responses to nudge a user toward specific political views, financial decisions, or purchasing behaviors.

OpenAI mitigates these risks through alignment research and safety reviews, but the potential for exploitation—particularly by third-party deployments or modified versions of the system—remains a live concern. Transparent memory logs and the ability to audit past interactions are essential safeguards against such outcomes.

b. Bias and Representation

Memory may inadvertently reinforce user biases. For instance, if a user consistently frames information with political or cultural slants, a memory-enabled system might begin to mirror those biases in its responses. Over time, this could create echo chambers or skew the diversity of perspectives the model offers.

To combat this, OpenAI employs moderation layers and default neutrality constraints. Nevertheless, the interplay between personalization and impartiality remains an ethical challenge—particularly in sensitive areas such as healthcare, law, or education.

c. Dependency and Anthropomorphism

As ChatGPT becomes more consistent and personalized, users may begin to attribute human-like qualities to the model. Memory amplifies this effect by creating a sense of relationship continuity. While beneficial in terms of engagement and user satisfaction, this anthropomorphic perception risks creating unhealthy dependencies, especially among vulnerable users.

Educational efforts to reinforce the artificial nature of the system, along with clear design boundaries (e.g., disclaimers about being a non-human entity), are necessary to maintain responsible engagement.

Governance and the Role of User Control

In the long term, governance of AI memory systems must go beyond technical features and include broader frameworks for ethical AI oversight. This may involve:

- Independent review boards to audit memory management systems

- Publicly available documentation of how memory decisions are made

- User feedback loops to refine memory relevance and retention policies

OpenAI’s current implementation reflects a strong foundation for such governance. However, as memory expands in scope and sophistication, governance models will need to evolve in tandem. The questions of who decides what is remembered, under what conditions, and with what consequences must be addressed not only by engineers but also by ethicists, legal experts, and civil society stakeholders.

In conclusion, the introduction of memory into ChatGPT brings immense promise—but not without significant ethical and operational complexity. As users entrust AI with more information and expectations, the responsibilities of system designers grow accordingly. Privacy, control, and transparency are not peripheral concerns; they are the very foundations upon which sustained, responsible AI usage must rest.

The final section will explore the strategic implications of memory for OpenAI and the broader AI landscape—offering a forward-looking perspective on how memory sets the stage for more autonomous and agentic AI systems.

Future Directions and Strategic Impact

The rollout of memory in ChatGPT is not merely a product enhancement; it is a signal of deeper strategic intent and long-term vision. OpenAI’s decision to equip ChatGPT with memory capabilities ushers in a new era of AI evolution—one where systems move beyond task-based assistance toward persistent, adaptive, and semi-autonomous agents. As this transition unfolds, its impact will extend far beyond the boundaries of individual user experiences. It will influence platform dynamics, developer ecosystems, competitive positioning, and the trajectory toward artificial general intelligence (AGI).

This section explores how memory-enabled ChatGPT may shape the future of AI, both for OpenAI and the broader technology landscape. It examines strategic implications, platform integrations, emerging use cases, and the growing convergence of AI memory with autonomy and agency.

From Tool to Companion: The Rise of Contextual Agents

The evolution of ChatGPT from a stateless interface into a memory-enhanced system suggests a paradigm shift from reactive tools to contextual agents. Memory grants the model the capability to adapt to long-term objectives, revisit prior exchanges, and make informed decisions based on historical interactions. This positions ChatGPT not just as an answer generator, but as a digital companion capable of building a persistent relationship with the user.

In this new paradigm, ChatGPT becomes better equipped to:

- Track ongoing workflows across days, weeks, or months

- Coordinate multi-step tasks across changing priorities

- Provide context-sensitive recommendations based on accumulated preferences

These characteristics are the building blocks of an AI agent—one that is not merely intelligent but cognitively persistent. In essence, memory turns ChatGPT into the foundation for more advanced autonomous systems, capable of operating over time with increasing fidelity to human goals.

OpenAI’s Strategic Positioning and Product Roadmap

OpenAI’s decision to embed memory into ChatGPT also speaks to its broader ambitions. As competition intensifies in the generative AI space—with companies like Google (Gemini), Anthropic (Claude), Meta (LLaMA), and Mistral developing rival models—differentiation becomes critical. Memory is a powerful differentiator that enables OpenAI to:

- Deepen user engagement through personalization

- Foster lock-in through persistent user-specific data

- Support premium features for enterprise and professional customers

OpenAI’s roadmap appears increasingly geared toward building a platform ecosystem rather than a standalone product. Memory allows for seamless integration with third-party applications, APIs, and enterprise workflows. It also supports more intelligent AI assistants across modalities—text, code, audio, and vision—further extending the model’s relevance.

This aligns with OpenAI’s investment in tools such as:

- Custom GPTs: Specialized agents that can now incorporate persistent memory

- ChatGPT Enterprise: A robust platform for business use cases, where memory is indispensable for productivity and compliance

- Multi-modal capabilities: Image and voice-based interactions benefit substantially from contextual memory, enabling real-time continuity across modalities

Thus, memory is not a peripheral feature but a core enabler of OpenAI’s next generation of AI-native services.

Implications for the Developer Ecosystem

The introduction of memory transforms how developers interact with and build on top of ChatGPT. Previously, stateless interactions imposed limitations on app design. Developers had to either simulate memory through external context management or accept discontinuities in user experience. With native memory support, developers can now build:

- Virtual assistants that improve over time

- Domain-specific agents that remember internal company workflows

- Educational tools that adapt to individual learners

- Conversational analytics systems with persistent behavioral insights
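
For contrast, the "external context management" workaround mentioned above can be sketched as follows. This is a minimal Python example under stated assumptions: the file layout, function names, and note format are hypothetical, and the role/content message dictionaries follow the common chat-message convention rather than any specific SDK call. No API request is made.

```python
import json
from pathlib import Path
from typing import Dict, List


def load_notes(path: Path) -> Dict[str, List[str]]:
    # Notes are keyed by user id; a missing file means no history yet.
    return json.loads(path.read_text()) if path.exists() else {}


def save_note(path: Path, user_id: str, note: str) -> None:
    notes = load_notes(path)
    notes.setdefault(user_id, []).append(note)
    path.write_text(json.dumps(notes, indent=2))


def build_messages(path: Path, user_id: str, user_input: str) -> List[Dict[str, str]]:
    """Prepend stored notes as a system message before the user's turn."""
    notes = load_notes(path).get(user_id, [])
    messages: List[Dict[str, str]] = []
    if notes:
        summary = "Known about this user:\n" + "\n".join(f"- {n}" for n in notes)
        messages.append({"role": "system", "content": summary})
    messages.append({"role": "user", "content": user_input})
    return messages
```

The application, not the model, owns the notes in this pattern, which means the developer is also responsible for the consent, editing, and deletion controls discussed earlier.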

Furthermore, OpenAI’s emphasis on memory transparency and control creates trust mechanisms that developers can adopt in their own applications. This sets a new bar for responsible AI integration in third-party platforms and encourages best practices around user data consent and control.

OpenAI may eventually open access to the memory infrastructure itself—such as APIs for querying, modifying, or exporting memory. Such capabilities would vastly expand the possibilities for dynamic, stateful AI experiences within broader software ecosystems.

Competitive Implications and Industry Trends

The move toward persistent AI memory is already beginning to influence competitors. Anthropic’s Claude, Google’s Gemini, and Meta’s Llama-based tools are also exploring various forms of contextual and persistent learning. However, OpenAI’s implementation appears to be the most user-facing and mature as of early 2025.

In the coming months and years, we can expect:

- More platforms to introduce opt-in memory systems

- New AI assistants that span not just sessions but devices and platforms

- Expansion of memory to support team-based collaboration, where AI remembers shared context across multiple users in an organization

This trend is also influencing the design of AI governance. As memory becomes more common, standards will likely emerge around:

- Memory lifecycle policies (how long data is retained)

- Consent verification practices

- Transparency reporting on memory usage
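To make these three governance ideas concrete, here is a small sketch of how a retention window, a consent check, and a transparency summary might fit together. It assumes a simple in-memory list of records; the `MemoryRecord` fields, the `purge` and `transparency_report` functions, and the 90-day window are illustrative assumptions, not an existing standard or any vendor's policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class MemoryRecord:
    """Hypothetical stored memory item with the metadata a policy needs."""
    user_id: str
    content: str
    created_at: datetime
    consent_given: bool  # whether the user opted in to storing this item


def purge(records, max_age, now):
    """Keep only records the user consented to and that are within max_age."""
    return [
        record
        for record in records
        if record.consent_given and now - record.created_at <= max_age
    ]


def transparency_report(before, after):
    """Summarize how many records were stored, retained, and removed."""
    return {
        "stored": len(before),
        "retained": len(after),
        "removed": len(before) - len(after),
    }


if __name__ == "__main__":
    now = datetime(2025, 4, 1, tzinfo=timezone.utc)
    records = [
        MemoryRecord("u1", "Prefers metric units", now - timedelta(days=10), True),
        MemoryRecord("u1", "Old project notes", now - timedelta(days=400), True),
        MemoryRecord("u2", "Home city", now - timedelta(days=1), False),
    ]

    kept = purge(records, max_age=timedelta(days=90), now=now)
    print(transparency_report(records, kept))
```

In this example only the first record survives: the second exceeds the assumed 90-day window and the third lacks consent. Passing `now` in explicitly, rather than reading the clock inside `purge`, keeps the policy easy to audit and test.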

Persistent memory is likely to become a baseline expectation in both consumer and enterprise AI solutions. Those who adopt it early and responsibly stand to gain a first-mover advantage in shaping the norms and expectations of the AI era.

Toward Autonomy and AGI

From a technological perspective, memory is one of the key enablers for greater autonomy in AI systems. Stateless models, while powerful, are inherently limited to reactive behavior. They require constant human input and context to remain useful. By contrast, memory allows models to:

- Maintain long-term objectives

- Reflect on past actions and adjust behavior

- Anticipate user needs based on behavioral patterns

These characteristics align with the capabilities needed for artificial general intelligence (AGI), meaning systems that can perform a wide range of cognitive tasks with general competence. While memory alone does not constitute AGI, it is arguably a necessary precondition for agency, learning, and goal-directed behavior over time.

In this light, OpenAI’s memory rollout can be seen as part of its broader AGI research trajectory. It creates an infrastructure through which models can accumulate knowledge not just of the world, but of individuals, tasks, and processes. Future developments—such as self-reflection, goal prioritization, and adaptive planning—will almost certainly build on this memory substrate.

This raises critical philosophical and governance questions:

- Should users be able to “train” their own AI agents based on personal memory?

- What rights do users have over AI-generated inferences based on long-term data?

- How do we ensure fairness and transparency in memory-driven decision-making?

These are the debates that will shape the AI landscape in the coming decade, and memory will be central to each one.

A Vision of the Future

Looking ahead, the memory upgrade to ChatGPT is a harbinger of a more intelligent, personalized, and proactive AI future. It suggests a world where AI is not just a tool for solving immediate problems, but a trusted partner that evolves with the user. It redefines expectations around digital interaction, blurring the line between application and companion, assistant and agent.

In practical terms, we may soon see:

- Personalized AI agents managing projects, finances, and daily life

- Persistent AI collaborators embedded in enterprise software

- Memory-enabled tutors guiding students from kindergarten to college

- AI health coaches that monitor, motivate, and mentor over years

For all these possibilities, responsible design remains critical. As ChatGPT’s memory grows more capable, so must its safeguards. The future of memory-enhanced AI will depend not only on technical innovation, but on ethical foresight, user empowerment, and an unwavering commitment to trust.
