Model Context Protocol (MCP): The Architectural Backbone for Scalable, Context-Aware AI Systems

The last few years have seen a tremendous leap in the capabilities of artificial intelligence, particularly large language models (LLMs) and other foundation models. These systems can now write code, interpret data, create images, simulate human conversation, and even autonomously reason about complex tasks. But as these models have grown more powerful, so too have the demands placed upon them—especially the need to maintain consistent, meaningful, and flexible context throughout an interaction. This is where traditional architectures begin to break down, and where the Model Context Protocol (MCP) enters the picture.

In the early days of LLMs, managing context was relatively simple. A prompt would be constructed—sometimes a few paragraphs long—given to a model, and the model would return a response. But as applications evolved into sophisticated workflows, chaining multiple interactions, coordinating between models, or even orchestrating full agents working across tasks and time, the limitations of that simplistic context model became clear. Prompt engineering alone wasn’t enough. Memory systems bolted on top of LLMs were brittle. Agent frameworks began to resemble chaotic systems with little cohesion or state continuity.

This fragmentation has led to growing frustration among developers and organizations trying to scale AI across teams, products, or services. How do you share context between models? How do you define and track memory across interactions? How can a system understand when to recall something, when to forget, and when to adapt? These are not just philosophical questions—they’re architectural ones.

The Model Context Protocol (MCP) is an emerging answer. It represents a fundamental rethinking of how context should be structured, stored, and shared across models, agents, and tasks. Rather than treating context as an afterthought or a blob of text passed through prompts, MCP treats it as a first-class citizen—a structured protocol layer that sits above the model and governs how it interacts with the world. It introduces a standardized way to define, propagate, and manage context across multiple steps, tools, and actors.

At its core, MCP isn’t a single product or API—it’s a design pattern and architecture for building scalable, stateful AI systems. Think of it as TCP/IP for intelligent agents: not concerned with what the models say, but how they coordinate, how they remember, and how they maintain integrity across time.

This blog post will explore the MCP concept in depth—from its roots in the limitations of current AI architectures to its proposed solutions, real-world implementations, and the future it promises. Along the way, we’ll examine how MCP compares to other context-handling techniques, where it excels, where it still needs work, and how it could form the backbone of the next-generation AI operating stack.

Let’s begin by understanding why context is so hard to get right—and why solving it could unlock the next big leap in AI performance and reliability.

Background: The Context Problem in AI Systems

As artificial intelligence continues to evolve, context has become one of the most critical—and most difficult—challenges to address effectively. While today's large language models (LLMs) are remarkably capable in narrow, bounded tasks, they still struggle with context retention, multi-turn understanding, and managing long-running or multi-agent tasks. This limitation becomes especially clear when AI is deployed at scale across complex workflows, business logic, or evolving user intent.

The Fragility of Prompt Engineering

For many developers, the first introduction to context management is through prompt engineering—manually crafting inputs that "remind" the model of what's important. Prompts are often overloaded with instructions, examples, history, and edge-case handling in an attempt to simulate memory or understanding. While this approach can yield surprisingly strong results in isolated cases, it quickly becomes unsustainable.

Prompt length is limited. LLMs like GPT-4 or Claude can only accept a fixed number of tokens in their context window; once a conversation outgrows that limit, the application must truncate, summarize, or drop earlier content. Moreover, model calls are inherently stateless: nothing carries over between interactions unless the application reconstructs it. Each request starts from a blank slate, so any “memory” has to be re-sent in the prompt or supplied by additional systems layered on top.
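To make the truncation problem concrete, here is a minimal Python sketch of one common workaround: keep the system prompt plus as many of the most recent turns as fit in a token budget, dropping the oldest first. The `fit_to_budget` and `count_tokens` helpers are hypothetical, and counting whitespace-separated words is only a stand-in for a real tokenizer.

```python
from typing import List


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def fit_to_budget(system: str, history: List[str], budget: int) -> List[str]:
    """Keep the system prompt plus the newest turns that fit within `budget` tokens."""
    used = count_tokens(system)
    kept: List[str] = []
    # Walk backwards from the newest turn and stop at the first one that would overflow.
    for turn in reversed(history):
        cost = count_tokens(turn)
        if used + cost > budget:
            break
        kept.append(turn)
        used += cost
    # Restore chronological order; every turn older than the cut-off is silently lost.
    return [system] + kept[::-1]


history = [
    "user: my budget is 500 dollars",
    "assistant: noted",
    "user: suggest a laptop",
]
print(fit_to_budget("system: be concise", history, budget=10))
# → ['system: be concise', 'assistant: noted', 'user: suggest a laptop']
```

With a 10-token budget, the user’s stated budget is the first thing discarded, even though it is arguably the most important fact in the conversation. Nothing in the truncation logic knows that, which is exactly the kind of brittleness described here.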

This leads to brittle systems where a small change in prompt wording can radically shift performance. Prompt chaining—connecting multiple prompts together to simulate reasoning—further compounds this fragility. Without a shared context layer, each step must redundantly include key information, wasting compute and increasing latency.

The Limits of Vector Memory and RAG

To address prompt limitations, developers often turn to vector memory and retrieval-augmented generation (RAG). These techniques involve encoding documents or conversational history as embeddings, storing them in a vector database, and retrieving the most relevant entries at query time.

While powerful, these methods come with trade-offs. First, retrieval is similarity-based rather than exact: it returns whatever lies nearest to the query in embedding space, so it may miss essential context or pull in irrelevant data. Second, these approaches lack an understanding of narrative structure, intent continuity, or temporal context. For example, a retrieved chunk of text may reference “the issue we discussed earlier” without any anchoring unless the retrieval system also reconstructs that chain of logic.
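The retrieval step can be sketched in a few lines. This is a toy under stated assumptions: the three-dimensional vectors are hand-written rather than produced by an embedding model, the `top_k` helper is hypothetical, and the “vector database” is a plain dictionary searched exhaustively.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: List[float], store: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Rank every stored chunk by similarity to the query and return the k best."""
    scored = [(chunk, cosine(query, vec)) for chunk, vec in store.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hand-written 3-d "embeddings"; a real system would call an embedding model.
store = {
    "refund policy: 30 days": [0.9, 0.1, 0.0],
    "shipping takes 5 days": [0.1, 0.9, 0.0],
    "the issue we discussed earlier": [0.6, 0.2, 0.7],
}
for chunk, sim in top_k([1.0, 0.0, 0.1], store, k=2):
    print(f"{sim:.2f}  {chunk}")
```

Here the ambiguous chunk “the issue we discussed earlier” ranks second simply because its vector happens to lie near the query, and it arrives with no record of which issue it refers to.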

Moreover, vector stores don’t represent state—they are passive. They don’t know what the model is currently doing or what task it's about to perform. They're not coordinated across tools, steps, or agents. As such, while helpful for knowledge recall, RAG doesn’t solve the broader problem of dynamic context management across time and roles.

State Management in Agent Architectures

As the complexity of AI systems increased, agent-based frameworks such as LangChain, CrewAI, and Microsoft’s AutoGen began gaining traction. These systems simulate independent agents that work toward goals by calling models, using tools, reading memory, and coordinating with each other.

Here, the need for context becomes even more pronounced. Agents must maintain internal state, share information, adapt to changes in environment, and act with continuity. However, in many frameworks, this state is either implicit (embedded in prompts), scattered across session variables, or loosely encoded in memory systems.

Without a unifying protocol, agents often talk past each other. One agent’s state may not be available or meaningful to another. Context is frequently duplicated, dropped, or misunderstood. This leads to redundant calls, miscommunications, and a lack of trust in system outputs. The result is a network of semi-autonomous models operating in partial silos rather than a truly collaborative, intelligent system.

The Problem of Intent Drift

Beyond technical implementation, context issues manifest in how AI systems interpret and maintain user intent. In many applications—from writing assistants to dev copilots—users expect the AI to understand their goals, preferences, and situational history over time.

However, as interactions span multiple steps or sessions, models often lose sight of the original intent. They default back to generic completions, forget user-specific nuances, or introduce contradictions. This phenomenon, known as intent drift, stems from the lack of structured memory and shared state between components.

Without a governing context model, there’s no mechanism to enforce coherence or ensure that future outputs align with prior guidance. This makes AI systems feel inconsistent or even unreliable over longer timeframes, which undermines trust and limits adoption in mission-critical settings.

Coordination Across Models and Tools

Modern AI systems rarely operate in isolation. Whether powering a chatbot, an automation pipeline, or a complex decision-support system, they typically rely on multiple models, tools, and services. Each of these components must operate with shared awareness of user data, task progression, and environmental state.

Currently, context sharing between components is handled in ad-hoc ways: passing JSON blobs, using environment variables, or embedding serialized instructions in prompts. This leads to fragile interfaces and tight coupling. If one component changes its input or output format, the whole chain can break. There is no standardized “language” for context transmission between components.

This lack of interoperability makes it hard to scale systems, swap out components, or run tasks in parallel. It also prevents intelligent coordination—for example, a planning model understanding what a perception model saw, or an evaluation module knowing the constraints defined by the user earlier in the process.

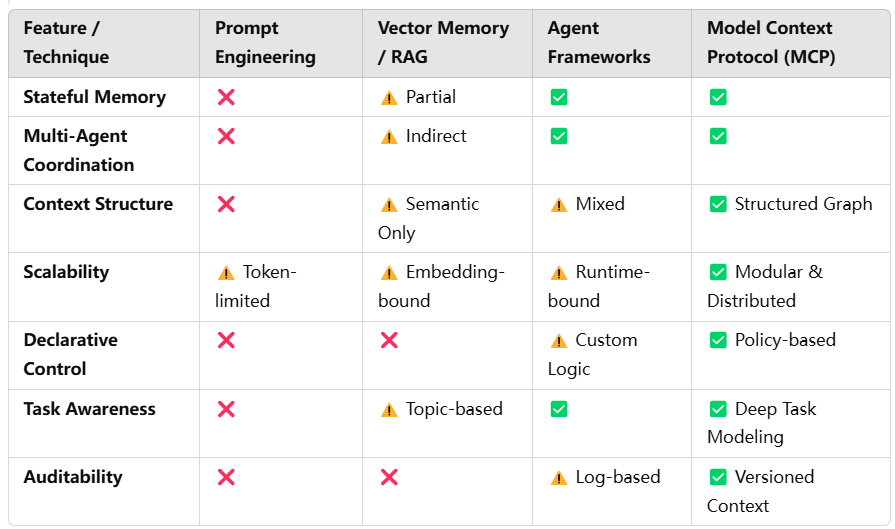

This chart compares the progression of major context-handling techniques used in AI systems—specifically across dimensions such as scalability, statefulness, coordination, and structure. MCP is positioned as the most comprehensive evolution.

- ✅ Fully Supported

- ⚠️ Partially Supported / Workaround

- ❌ Not Supported

Why We Need a Protocol, Not Just a Patch

The recurring theme across these limitations is that context is foundational, yet poorly represented in current architectures. While individual workarounds exist—prompting tricks, memory APIs, agent state containers—they don't solve the root problem: context lacks structure, control, and standards.

Just as the internet required protocols like TCP/IP to reliably transmit information across disparate systems, AI needs a protocol layer to manage and share context across models, agents, and tools. This is the role the Model Context Protocol (MCP) aims to fulfill.

MCP introduces a structured, programmable, and standardized way to encode, propagate, and govern context across AI systems. It decouples context from prompts, agents, and memory stores, instead treating it as a first-class data layer that can be queried, updated, audited, and shared. By doing so, it enables a new class of context-aware, composable, and modular AI applications.

Before we dive into how MCP works, let’s explore what it is, what it’s made of, and how it redefines the very notion of “context” in intelligent systems.

What Is the Model Context Protocol (MCP)

The Model Context Protocol (MCP) is an emerging design standard for managing context in complex AI systems. It introduces a structured, system-level approach to organizing and transmitting context across models, agents, and computational layers. Unlike traditional methods that rely on static prompts or ad-hoc memory solutions, MCP establishes a protocol-based layer—much like HTTP or TCP/IP in networked systems—that provides persistent, dynamic, and semantically rich context throughout the lifecycle of AI-driven workflows.

At its core, MCP is not a single product, API, or library. Rather, it’s a conceptual and architectural framework for treating context as a programmable, composable asset. It allows developers to build systems where context is explicitly defined, reliably updated, and automatically shared across components, rather than being an afterthought or hidden in model inputs.

Key Principles of MCP

To understand what makes MCP different from traditional context handling, it’s helpful to look at its foundational principles:

- Context as a First-Class Citizen

In an MCP-compliant system, context is not embedded passively inside prompts or scattered across memory stores. Instead, it is explicitly represented and maintained as structured data objects (context units) that can be queried, mutated, and governed programmatically.

- Separation of Concerns

MCP separates task logic, model behavior, and context management into distinct components. This decoupling makes systems more modular, extensible, and debuggable. Models don’t need to “understand” context; they simply consume it, while context is orchestrated by system-level controllers.

- Standardized Interfaces for Context Exchange

MCP introduces interoperable formats and schemas for passing context between agents, tools, models, and runtimes. These standardized interfaces remove the need for bespoke glue code and enable compatibility across heterogeneous systems.

- Dynamic Contextualization

Rather than static memory injection, MCP enables dynamic, situation-aware context assembly. Context can be modified in real time based on model outputs, external events, or human feedback. This makes it well suited to multi-turn, real-world workflows.

- Declarative Context Control

Developers can define policies that govern how context flows: what’s visible to each agent, what’s persistent across tasks, and what should be discarded. This gives rise to context governance, where state is not just stored, but controlled and audited.
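As a minimal sketch of declarative context control, assuming MCUs are plain dictionaries with a `type` field, the retention rules below live in data rather than in code. The `RETENTION` table and `end_of_task` function are hypothetical names for illustration, not part of any published MCP specification.

```python
from typing import Dict, List

# Hypothetical declarative retention policy: data, not code, decides what survives a task.
RETENTION: Dict[str, str] = {
    "user_preference": "persist",
    "task_result": "persist",
    "task_state": "discard",
    "scratchpad": "discard",
}


def end_of_task(mcus: List[Dict], policy: Dict[str, str], default: str = "discard") -> List[Dict]:
    """Keep MCUs whose type the policy marks 'persist'; unknown types fall back to `default`."""
    return [m for m in mcus if policy.get(m["type"], default) == "persist"]


mcus = [
    {"mcu_id": "p1", "type": "user_preference"},
    {"mcu_id": "s1", "type": "scratchpad"},
    {"mcu_id": "r1", "type": "task_result"},
    {"mcu_id": "x1", "type": "unclassified"},
]
print([m["mcu_id"] for m in end_of_task(mcus, RETENTION)])  # → ['p1', 'r1']
```

Defaulting unknown types to `discard` is a deliberately conservative choice; a system that values recall over privacy might default to `persist` instead.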

Core Components of MCP

While the protocol may be implemented in different ways depending on use case or platform, a typical MCP system involves the following key components:

1. Model Context Units (MCUs)

MCUs are the basic building blocks of context in MCP. Each unit encapsulates a self-contained piece of context—such as user intent, task state, memory reference, or environment condition. These are structured objects (often JSON-like) that are stored, updated, and shared across the system.

For example, an MCU might represent:

- A user’s long-term preference for formal writing tone

- The current progress status of a multistep task

- The results of a previous sub-agent’s operation

MCUs are modular and composable, allowing systems to construct context “views” tailored to each model’s or agent’s needs.
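One way to model an MCU, sketched here with a Python dataclass, is a typed record with an identifier, a payload, and a timestamp; a “view” is then just a selection of units by type. The `MCU` class, its field names, and the `build_view` function are illustrative assumptions rather than a defined schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Dict, Iterable, List, Set


@dataclass
class MCU:
    """Hypothetical Model Context Unit: one self-contained, typed piece of context."""
    mcu_id: str
    type: str  # e.g. "user_preference", "task_state", "agent_result"
    data: Dict[str, Any]
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


def build_view(units: Iterable[MCU], wanted: Set[str]) -> List[MCU]:
    """Compose a context view by keeping only the unit types a consumer needs."""
    return [u for u in units if u.type in wanted]


units = [
    MCU("pref_01", "user_preference", {"tone": "formal"}),  # long-term writing preference
    MCU("task_01", "task_state", {"step": 2, "of": 5}),  # progress of a multistep task
    MCU("sub_07", "agent_result", {"agent": "fetcher", "rows": 120}),  # a sub-agent's output
]
writer_view = build_view(units, {"user_preference", "task_state"})
print([u.mcu_id for u in writer_view])  # → ['pref_01', 'task_01']
```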

2. Context Graphs

A Context Graph defines the semantic relationships between MCUs. Think of it as a directed graph where nodes are context units and edges define how they depend on or interact with one another.

This allows for:

- Intelligent context pruning (only include what’s relevant)

- Dependency resolution (e.g., “this context depends on that one”)

- Scoping rules (e.g., “only share these nodes with this agent”)

The graph model is particularly powerful in multi-agent systems where roles, permissions, and task dependencies vary across participants.
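The dependency-resolution and scoping ideas above can be sketched as a depth-first traversal over an adjacency map. The graph contents and function names are hypothetical, and cycle detection is omitted for brevity (the `seen` set only prevents revisiting nodes).

```python
from typing import Dict, List, Set

# Hypothetical context graph: each MCU id maps to the ids it depends on (directed edges).
DEPENDS_ON: Dict[str, List[str]] = {
    "draft_email": ["campaign_themes", "tone_pref"],
    "campaign_themes": ["campaign_metrics"],
    "campaign_metrics": [],
    "tone_pref": [],
    "billing_info": [],  # unrelated to the draft, so it should be pruned
}


def resolve(root: str, graph: Dict[str, List[str]]) -> List[str]:
    """Return root plus its transitive dependencies, dependencies first (DFS post-order)."""
    order: List[str] = []
    seen: Set[str] = set()

    def visit(node: str) -> None:
        if node in seen:  # guards against revisits; true cycle detection is omitted
            return
        seen.add(node)
        for dep in graph.get(node, []):
            visit(dep)
        order.append(node)

    visit(root)
    return order


def scoped(nodes: List[str], allowed: Set[str]) -> List[str]:
    """Scoping rule: drop any node the requesting agent may not see."""
    return [n for n in nodes if n in allowed]


needed = resolve("draft_email", DEPENDS_ON)
print(needed)  # → ['campaign_metrics', 'campaign_themes', 'tone_pref', 'draft_email']
print(scoped(needed, {"tone_pref", "draft_email"}))  # → ['tone_pref', 'draft_email']
```

`billing_info` never appears because nothing the draft depends on references it, which is the pruning effect described above; `scoped` then layers a per-agent visibility rule on top.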

3. Context State Store

This is the persistent backend where context is stored and versioned over time. It functions like a database of context, offering APIs to read, write, merge, or diff MCUs. The store supports temporal queries, making it possible to reconstruct the state of a system at any previous point—essential for debugging, auditing, or reproducibility.
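A versioned store with temporal queries can be approximated by an append-only history per MCU. This sketch assumes integer timestamps, writes that arrive in time order, full snapshots on every write, and in-memory storage; `ContextStateStore` and its methods are hypothetical and stand in for whatever database a real deployment would use.

```python
import bisect
from typing import Any, Dict, List, Optional, Tuple


class ContextStateStore:
    """Hypothetical append-only context store: writes add versions instead of overwriting."""

    def __init__(self) -> None:
        # mcu_id -> [(timestamp, snapshot), ...] in ascending time order
        self._history: Dict[str, List[Tuple[int, Dict[str, Any]]]] = {}

    def write(self, mcu_id: str, data: Dict[str, Any], at: int) -> None:
        versions = self._history.setdefault(mcu_id, [])
        if versions and at < versions[-1][0]:
            raise ValueError("writes must arrive in time order")
        versions.append((at, dict(data)))

    def read(self, mcu_id: str, as_of: Optional[int] = None) -> Optional[Dict[str, Any]]:
        """Return the latest snapshot at or before `as_of` (the newest one if as_of is None)."""
        versions = self._history.get(mcu_id, [])
        if as_of is None:
            idx = len(versions)
        else:
            idx = bisect.bisect_right([t for t, _ in versions], as_of)
        return dict(versions[idx - 1][1]) if idx else None

    def diff(self, mcu_id: str, t1: int, t2: int) -> Dict[str, Tuple[Any, Any]]:
        """Fields that changed between the snapshots at t1 and t2, as (old, new) pairs."""
        old = self.read(mcu_id, t1) or {}
        new = self.read(mcu_id, t2) or {}
        changed = old.keys() | new.keys()
        return {k: (old.get(k), new.get(k)) for k in changed if old.get(k) != new.get(k)}


state = ContextStateStore()
state.write("task_001", {"status": "pending"}, at=100)
state.write("task_001", {"status": "validated", "validator_notes": "No anomalies found"}, at=200)
print(state.read("task_001", as_of=150))  # → {'status': 'pending'}
print(state.diff("task_001", 100, 200))
```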

4. Context Controllers

Context Controllers act as orchestrators. They determine what context each model sees, when it sees it, and how it should respond to changes. Controllers can inject context into prompts, mutate MCUs based on outputs, and enforce policy rules such as privacy constraints or memory decay.

They are the equivalent of routers and schedulers in traditional systems, but focused on semantic flow control rather than packets or processes.
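A minimal sketch of a controller’s injection step, assuming MCUs are dictionaries and a hypothetical field-level privacy rule (`PRIVATE_FIELDS`), shows how policy enforcement can happen before anything reaches the model. The `redact` and `render_prompt` names and the prompt layout are arbitrary choices for illustration.

```python
from typing import Any, Dict, List

# Hypothetical field-level privacy rule that the controller enforces before injection.
PRIVATE_FIELDS = {"email", "phone"}


def redact(data: Dict[str, Any]) -> Dict[str, Any]:
    """Mask private fields so they never reach the model's prompt."""
    return {k: ("[REDACTED]" if k in PRIVATE_FIELDS else v) for k, v in data.items()}


def render_prompt(task: str, mcus: List[Dict[str, Any]]) -> str:
    """Inject the selected, redacted MCUs into a prompt as a labeled context section."""
    lines = [f"- {m['mcu_id']} ({m['type']}): {redact(m['data'])}" for m in mcus]
    return "Context:\n" + "\n".join(lines) + f"\n\nTask: {task}"


mcus = [{
    "mcu_id": "user_987",
    "type": "user_profile",
    "data": {"name": "Ana", "email": "ana@example.com", "tone": "formal"},
}]
print(render_prompt("Draft the quarterly report summary.", mcus))
```

Redacting at render time, rather than trusting each model call to ignore sensitive fields, keeps the privacy rule in one place that can be audited.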

How MCP Differs from Existing Approaches

Traditional context mechanisms—like memory in LangChain or session stores in chatbots—are typically opaque, brittle, and one-size-fits-all. They either overload prompts with history or inject memories based on fuzzy matching. MCP, in contrast, introduces structured, context-aware computation with fine-grained control.

For instance:

- Rather than just retrieving a document that might be relevant, MCP can bind specific MCUs to the context a model receives, with full traceability of where each item came from.

- Instead of dumping chat history into a prompt, MCP allows the system to construct a narrative view based on task, user profile, and system state.

This enables a higher level of coordination, scalability, and robustness, especially in complex systems where multiple agents or tools must collaborate in real time.

Emerging Ecosystem Support

While MCP is still an emerging concept, its principles are already being reflected in newer tools and platforms. Features such as OpenAI’s system messages, along with open-source frameworks such as Microsoft’s AutoGen, CrewAI, and LangGraph, already implement elements of structured context management. These systems hint at a broader movement toward protocolized AI runtime layers, where orchestration is context-driven rather than prompt-driven.

As this movement grows, the MCP paradigm offers a powerful blueprint for building systems that are modular, context-aware, and ready for real-world scale.

Anatomy of an MCP Implementation

Implementing a Model Context Protocol (MCP) system involves far more than bolting on a memory store or tweaking an API wrapper. It requires a fundamental shift in how context is modeled, maintained, and exchanged throughout an AI system’s lifecycle. In this section, we’ll unpack the core mechanics of an MCP implementation, from initialization to runtime behavior, highlighting how its design enables modularity, reliability, and coordination across complex workflows.

Let’s walk through the full architecture of an MCP-based AI system and see how each piece works together in practice.

Step 1: Initialization and Context Injection

Every MCP-based system begins by defining the initial context environment, typically composed of one or more Model Context Units (MCUs). These are structured representations of relevant information such as user profile, task intent, tool availability, environmental conditions, and past interactions.

Here’s an example of an initial MCU:

```json
{
  "mcu_id": "task_001",
  "type": "task_metadata",
  "description": "User wants to generate a quarterly report from uploaded sales data",
  "owner": "user_987",
  "status": "pending",
  "timestamp": "2025-04-01T08:30:00Z"
}
```

Once initialized, these MCUs are registered with the Context State Store, a persistent backend (usually a database or in-memory store with versioning) that serves as the “single source of truth” for all context objects.

Next, a Context Controller selects a subset of MCUs to pass to the model or agent in its prompt input. This is not just memory injection—it’s a filtered, structured view of the larger context graph. For example, a summarization model might receive only the task description, user tone preferences, and a relevant document excerpt, while a validation agent might get a broader system state snapshot.

Step 2: Context Propagation and Control Signals

As the system evolves, models and agents produce outputs—completions, evaluations, tool calls—that often affect the surrounding context. Rather than hard-coding responses or updating memory manually, MCP introduces context propagation rules governed by the Context Controller.

Controllers operate using control signals, which are structured events triggered by system actions. These signals specify:

- What output was generated

- What context units should be updated

- Which agents should be notified

- Whether new MCUs should be created or expired

For example, if a model completes a data validation task, it might emit a control signal like:

```json
{
  "event_type": "task_update",
  "source": "agent_validator_1",
  "mcu_target": "task_001",
  "updates": {
    "status": "validated",
    "validator_notes": "No anomalies found"
  }
}
```

The Context Controller interprets this and updates the MCU accordingly, then determines which downstream agents (e.g., a report generator) should receive the updated context in their next prompt.
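The controller’s handling of that signal can be sketched as a merge plus a notification lookup. The `SUBSCRIBERS` map, the choice to skip notifying the signal’s own source, and the `apply_signal` function are assumptions made for illustration; the signal and MCU shapes match the JSON examples above.

```python
from typing import Any, Dict, List, Set

# Hypothetical wiring: which agents consume each MCU, so the controller knows whom to notify.
SUBSCRIBERS: Dict[str, Set[str]] = {"task_001": {"agent_validator_1", "report_generator"}}


def apply_signal(mcus: Dict[str, Dict[str, Any]], signal: Dict[str, Any]) -> List[str]:
    """Merge a task_update signal into its target MCU and return the agents to notify."""
    if signal.get("event_type") != "task_update":
        raise ValueError(f"unsupported event_type: {signal.get('event_type')!r}")
    mcus[signal["mcu_target"]].update(signal["updates"])
    # Skip the agent that produced the update; it already knows.
    return sorted(SUBSCRIBERS.get(signal["mcu_target"], set()) - {signal["source"]})


mcus = {"task_001": {"mcu_id": "task_001", "type": "task_metadata", "status": "pending"}}
signal = {
    "event_type": "task_update",
    "source": "agent_validator_1",
    "mcu_target": "task_001",
    "updates": {"status": "validated", "validator_notes": "No anomalies found"},
}
notify = apply_signal(mcus, signal)
print(notify, mcus["task_001"]["status"])  # → ['report_generator'] validated
```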

Step 3: Runtime Context Updates and Event Hooks

To maintain coherence in real time, MCP systems utilize event hooks that respond to specific triggers—new user input, model outputs, tool completions, or time-based conditions. These hooks can invoke context merging, pruning, prioritization, or snapshotting based on predefined policies.

Hooks might include:

- OnUserInput: Append the new message to the conversation-history MCU and recompute user intent.

- OnModelOutput: Parse response and inject semantic tags (e.g., sentiment, topic, confidence score) into the context graph.

- OnTimeout: Decay or archive MCUs older than a defined threshold.

These hooks help implement a dynamic context lifecycle, ensuring that the system evolves with the user while maintaining relevance and minimizing noise.
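Event hooks map naturally onto a small registry of callbacks keyed by trigger name. In this sketch the hook names mirror the list above, but the registry, the `on` decorator, the `emit` function, and the `updated`/`max_age` fields are hypothetical; intent recomputation and semantic tagging are left out.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Hook = Callable[[Dict[str, Any], Dict[str, Any]], None]
HOOKS: Dict[str, List[Hook]] = defaultdict(list)


def on(event: str) -> Callable[[Hook], Hook]:
    """Decorator that registers a hook for a named trigger."""
    def register(fn: Hook) -> Hook:
        HOOKS[event].append(fn)
        return fn
    return register


def emit(event: str, ctx: Dict[str, Any], payload: Dict[str, Any]) -> None:
    """Run every hook registered for `event`, in registration order."""
    for fn in HOOKS[event]:
        fn(ctx, payload)


@on("OnUserInput")
def append_message(ctx: Dict[str, Any], payload: Dict[str, Any]) -> None:
    # Append to the conversation-history MCU (intent recomputation omitted).
    ctx.setdefault("conversation_history", []).append(payload["text"])


@on("OnTimeout")
def archive_stale(ctx: Dict[str, Any], payload: Dict[str, Any]) -> None:
    # Move MCUs last updated before (now - max_age) from the live context into an archive.
    cutoff = payload["now"] - payload["max_age"]
    live = ctx.setdefault("mcus", {})
    for key in [k for k, m in live.items() if m["updated"] < cutoff]:
        ctx.setdefault("archive", {})[key] = live.pop(key)


ctx = {"mcus": {"old_note": {"updated": 10}, "fresh_note": {"updated": 90}}}
emit("OnUserInput", ctx, {"text": "make it shorter"})
emit("OnTimeout", ctx, {"now": 100, "max_age": 50})
print(sorted(ctx["mcus"]), sorted(ctx["archive"]))  # → ['fresh_note'] ['old_note']
```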

Step 4: Modular Task Decomposition Across Agents

A powerful feature of MCP is its support for agent modularity. Each agent in a system—whether it’s planning, coding, summarizing, evaluating, or interacting with users—can operate with its own filtered context view drawn from the shared graph. This promotes isolation, reusability, and fault tolerance.

Let’s say a user requests a multi-step task like “Draft a marketing email based on the latest campaign metrics and A/B test performance.” In an MCP-based system, this could be handled by the following agents:

- Data Fetcher Agent: Retrieves campaign metrics

- Insights Agent: Analyzes trends and suggests key themes

- Copywriter Agent: Writes the email based on themes and tone preferences

- Reviewer Agent: Checks for brand compliance and readability

Each of these agents operates on a customized context slice, governed by the Context Controller. For example:

- The Data Fetcher doesn’t need writing preferences

- The Reviewer doesn’t need metric-level data but does need copy style rules

Because each context slice is assembled dynamically using graph traversal and access policies, the system avoids overloading prompts and leaking unnecessary information between agents.
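The context slices for the marketing-email example can be sketched as a lookup from agent role to the context keys it needs. The role names, key names, and sample values are hypothetical, and a fuller implementation would combine this lookup with graph traversal and access policies as described above.

```python
from typing import Any, Dict, Set

# Hypothetical role -> context keys each agent in the marketing-email example needs.
ROLE_NEEDS: Dict[str, Set[str]] = {
    "data_fetcher": {"task_description", "data_sources"},
    "insights": {"task_description", "campaign_metrics", "ab_test_results"},
    "copywriter": {"task_description", "key_themes", "tone_preferences"},
    "reviewer": {"draft_email", "copy_style_rules"},
}


def context_slice(role: str, shared: Dict[str, Any]) -> Dict[str, Any]:
    """Return only the shared-context entries this role needs (nothing for unknown roles)."""
    needs = ROLE_NEEDS.get(role, set())
    return {key: value for key, value in shared.items() if key in needs}


shared = {
    "task_description": "Draft a marketing email from the latest campaign",
    "data_sources": ["crm", "ab_testing_tool"],
    "campaign_metrics": {"open_rate": 0.31},
    "tone_preferences": "friendly",
    "copy_style_rules": "no exclamation marks",
    "draft_email": "Hi there, ...",
}
print(sorted(context_slice("reviewer", shared)))  # → ['copy_style_rules', 'draft_email']
```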

Step 5: Output Interpretation and Context-Aware Policies

After agents complete their respective tasks, their outputs are stored as new or updated MCUs. These outputs are then interpreted by downstream systems or humans. MCP allows for context-aware output handling, where the system behavior changes based on current context state.

Example policies include:

- Escalation Policy: If the Reviewer Agent marks a draft as non-compliant and the urgency level in context is high, notify a human editor.

- Optimization Policy: If user sentiment in recent interactions is negative, modify tone generation to be more empathetic.

- Memory Policy: Automatically summarize task history into a compressed MCU and discard granular logs after 24 hours.

By encoding such policies declaratively, MCP systems become adaptive and self-regulating, reducing the need for manual orchestration.
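Declarative policies like the escalation and optimization rules above can be sketched as condition/action pairs evaluated against the current context. The context keys (`review_status`, `urgency`, `recent_sentiment`) and action identifiers are hypothetical; executing the actions, and the time-based memory policy, are left to the controller.

```python
from typing import Any, Callable, Dict, List, NamedTuple


class Policy(NamedTuple):
    name: str
    when: Callable[[Dict[str, Any]], bool]  # condition evaluated against current context
    action: str  # hypothetical action id the controller would run


POLICIES: List[Policy] = [
    Policy(
        "escalation",
        lambda c: c.get("review_status") == "non_compliant" and c.get("urgency") == "high",
        "notify_human_editor",
    ),
    Policy("optimization", lambda c: c.get("recent_sentiment", 0.0) < 0, "use_empathetic_tone"),
]


def triggered_actions(context: Dict[str, Any]) -> List[str]:
    """Evaluate every policy and return the actions whose conditions hold."""
    return [p.action for p in POLICIES if p.when(context)]


print(triggered_actions({"review_status": "non_compliant", "urgency": "high"}))
# → ['notify_human_editor']
```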

Benefits of MCP Implementation

Implementing MCP at the system level unlocks several advantages:

- Scalability: Components can be added, swapped, or run in parallel without breaking state consistency.

- Observability: Developers and operators can inspect the entire context graph at any point in time.

- Reproducibility: System behavior can be traced and re-executed using historical context snapshots.

- Security and Privacy: Fine-grained control over context visibility enables robust access controls and compliance management.

These benefits become increasingly important as AI systems move from demos and experiments to enterprise-grade deployments that demand reliability, transparency, and modularity.

Example: End-to-End Flow in a Copilot Application

To bring this to life, imagine an AI-powered financial copilot that helps an analyst summarize earnings reports, generate insights, and draft investor communications. In an MCP setup:

- User preferences, prior summaries, deadlines, and audience tone are stored as MCUs.

- A Context Controller filters context per task (e.g., summary generator gets different context than insight extractor).

- Each agent’s output is converted into new MCUs, updating the context graph.

- Event hooks ensure memory is compressed at the end of each day, outdated reports are purged, and daily briefings are regenerated.

All of this happens without rigid prompts, hardcoded logic, or shared global variables. Context becomes a programmable substrate rather than a matter of implicit guesswork.

Applications and Use Cases of MCP

The Model Context Protocol (MCP) isn’t just a theoretical construct—it’s a practical architecture that solves real-world challenges across a wide array of domains. As AI systems become more complex, distributed, and multi-agent in nature, MCP provides the scaffolding necessary to ensure they remain consistent, interpretable, and intelligent over time.

From enterprise workflows to autonomous agents, and from developer tools to compliance auditing, MCP is beginning to emerge as a foundational layer in next-generation AI deployments. This section explores some of the most compelling use cases and how MCP applies to each.

1. Enterprise AI Workflows

In large organizations, AI is increasingly integrated into multi-step, multi-stakeholder workflows that span departments and tools. A typical enterprise AI pipeline might involve:

- Data ingestion and transformation

- Model-based analysis

- Decision support generation

- Human-in-the-loop review

- Report drafting or communication

Each of these steps involves different agents or models, operating asynchronously or in tandem. Without a unifying context layer, vital information is lost in transition: what decisions were already made? What assumptions were used? What tasks are in progress?

MCP enables end-to-end orchestration by allowing each step to consume and update context in a standardized way. Context flows through the pipeline like a digital twin of the business process, reducing ambiguity, duplication, and latency.

Example:

- In a legal contract review workflow, different agents extract clauses, evaluate risks, flag anomalies, and suggest revisions.

- MCP allows all agents to operate with shared understanding of contract type, client risk tolerance, and prior decisions.

- This improves accuracy, traceability, and compliance.

2. Autonomous Multi-Agent Systems

MCP shines in systems involving autonomous agents working together to solve complex tasks. Whether it’s in customer support, scientific research, or software development, agents need to share state, assign roles, coordinate actions, and resolve dependencies.

Without MCP, multi-agent systems tend to suffer from:

- Redundant execution

- Conflicting decisions

- Memory corruption or loss

- Tight coupling and maintenance overhead

MCP solves this by allowing each agent to:

- Access only relevant context slices via scoped queries

- Publish updates in the form of new or modified MCUs

- Trigger events or request help through structured protocols

In effect, MCP acts like a shared mental model, enabling agents to interact intelligently rather than blindly passing text.

Example:

- In a product development use case, a planning agent outlines milestones, a design agent proposes UI layouts, and a testing agent creates QA cases.

- MCP coordinates context flow so each agent is aware of status, decisions, blockers, and priorities, without a hand-written orchestration script dictating every handoff.

3. Context-Aware Copilots and Personal Assistants

The rise of copilots—AI tools that assist users across tasks, apps, and workflows—has created a new demand for personalized, persistent context. Users expect these systems to remember their preferences, adapt to ongoing goals, and behave consistently across sessions.

Traditional copilots store some memory, but without structured context, they often:

- Repeat irrelevant suggestions

- Forget user preferences

- Misinterpret nuanced goals

With MCP, copilots can:

- Maintain a structured graph of user preferences, past actions, and current tasks

- Dynamically filter and apply this context to each action

- Adapt tone, scope, or modality based on user profile and feedback

Example:

- A sales copilot writing customer emails tailors its tone and messaging based on account size, stage in sales funnel, prior interactions, and company branding—all stored and queried via MCP.

4. Compliance, Auditing, and AI Safety

One of the most overlooked—but critical—use cases for MCP is compliance and auditability. In industries like finance, healthcare, or law, AI-generated outputs must be traceable: who generated what, based on what context, at what time?

MCP enables:

- Versioned snapshots of context at every step

- Audit trails that show how decisions evolved

- Policy enforcement via context access rules

This makes AI systems accountable and explainable, not just efficient. It also reduces regulatory risk, especially in sensitive environments.

Example:

- In a healthcare setting, a diagnosis assistant might consult patient history, lab results, and treatment guidelines.

- MCP ensures all sources are documented, timestamped, and accessible for audit if an error or dispute arises.

5. Developer Tools and IDE Integration

Developers are increasingly relying on AI tools integrated directly into their editors and workflows. These tools need to understand not just the codebase, but the developer’s intent, habits, and ongoing work context.

MCP can power developer agents that:

- Track active tickets, files, test coverage, and recent changes

- Adapt responses based on coding standards or project type

- Preserve context across refactors, branches, or handoffs

Example:

- A code review copilot can reference recent commits, previous reviewer notes, and team guidelines—each stored as MCUs—when suggesting improvements or flagging issues.

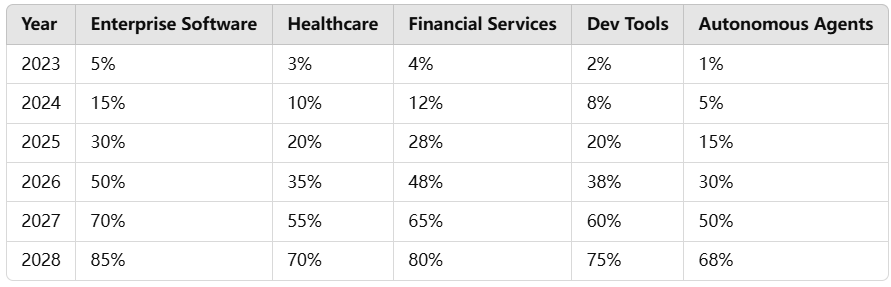

Here’s a projection of how MCP adoption is expected to spread across key industries over time:

Enterprise and financial applications are leading the charge, driven by compliance and complexity demands. Developer tools and autonomous agents follow closely as frameworks mature.

Challenges and Limitations

While the Model Context Protocol (MCP) promises a powerful shift in how context is managed within AI systems, it is far from a silver bullet. Like any architectural advancement, it brings with it a set of new complexities, implementation trade-offs, and design risks. As teams begin to adopt MCP at scale, they encounter both technical and organizational challenges that must be addressed to ensure long-term success.

This section outlines the key limitations and potential pain points associated with MCP, as well as open questions that remain as the protocol continues to evolve.

Complexity and System Overhead

Perhaps the most immediate challenge with MCP adoption is its architectural complexity. Implementing MCP requires a significant departure from traditional, stateless AI interactions. Developers must build or integrate:

- Context stores with version control and access rules

- Controllers capable of interpreting and routing structured context

- Mechanisms for context propagation, mutation, and pruning

- Policies for visibility, security, and memory decay

This means that teams must be proficient not just in LLM prompts and APIs, but also in distributed systems, data modeling, and orchestration. For smaller teams or startups, the overhead of maintaining an MCP runtime can be prohibitive, especially if tooling is immature or integration into existing infrastructure proves difficult.

Trade-off: The more powerful and precise the context management, the more complex the orchestration layer becomes. Without proper abstractions or frameworks, teams may spend more time wiring context plumbing than building intelligent agents.

Lack of Standardization and Tooling

As of now, MCP is still an emerging architecture, not a formal protocol with a governing standard. While the core ideas—structured context units, propagation rules, and controller logic—are becoming more widely adopted, different implementations often define their own schemas, data formats, and control mechanisms.

This leads to:

- Interoperability issues between agents built on different frameworks

- Fragmentation of context data models

- Challenges in portability, debugging, and observability

Efforts like LangGraph, AutoGen, and other agent ecosystems are starting to converge on similar patterns, but no single specification currently defines “MCP compliance.” Without standardization, vendor lock-in and duplicated engineering efforts are real risks.

Future direction: The community may benefit from an open MCP specification or reference implementation—similar to what Kubernetes did for container orchestration—to drive adoption and compatibility.

Context Bloat and Information Overload

As more context units accumulate over time, systems run the risk of context bloat—where agents are overwhelmed with too much information, even if technically relevant. While MCP allows for modular and filtered views of context, these filters must be implemented thoughtfully.

Problems that arise from bloat include:

- Increased token usage and model latency

- Semantic drift due to over-referenced past events

- Conflicting instructions or outdated data still lingering in memory

To combat this, MCP systems need smart pruning policies:

- Temporal decay: Remove or summarize old context

- Relevance scoring: Prioritize MCUs based on task-specific signals

- Role scoping: Restrict what each agent can see based on function
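The three pruning policies can be combined into a single selection step. The sketch below is a hypothetical illustration, not a reference algorithm. Keyword overlap stands in for a real relevance model (such as embedding similarity), exponential half-life decay stands in for temporal decay, and a word count approximates tokens. `MCU`, `select_context`, and all parameter names are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MCU:
    uid: str
    text: str
    created_at: float            # seconds on some shared clock
    visible_to: frozenset[str]   # roles allowed to see this unit


def relevance(unit: MCU, task_terms: set[str]) -> float:
    """Stand-in relevance score: fraction of task terms that appear in the unit."""
    words = set(unit.text.lower().split())
    return len(words & task_terms) / len(task_terms) if task_terms else 0.0


def decay(unit: MCU, now: float, half_life: float) -> float:
    """Temporal decay: the weight halves every `half_life` seconds."""
    age = max(0.0, now - unit.created_at)
    return 0.5 ** (age / half_life)


def select_context(units: list[MCU], role: str, task_terms: set[str], now: float,
                   half_life: float = 3600.0, token_budget: int = 50) -> list[MCU]:
    # 1. Role scoping: drop anything this role may not see.
    visible = [u for u in units if role in u.visible_to]
    # 2. Relevance scoring weighted by recency; zero-score units are discarded.
    scored = [(relevance(u, task_terms) * decay(u, now, half_life), u) for u in visible]
    scored = [(s, u) for s, u in scored if s > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # 3. Greedily fill the budget, skipping units that would overflow it.
    chosen, used = [], 0
    for _, unit in scored:
        cost = len(unit.text.split())  # word count as a rough token estimate
        if used + cost <= token_budget:
            chosen.append(unit)
            used += cost
    return chosen
```

Multiplying relevance by decay means an old but highly relevant unit can still outrank a fresh, marginal one, while units outside the agent's role never reach the scoring step at all.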

Lesson learned: More context isn't always better. Effective MCP design is about curated, purposeful context delivery, not just infinite memory.

Privacy, Security, and Access Control

Because MCP centralizes and structures all context interactions, it can become a high-value target for privacy violations or security exploits if improperly managed. For instance, an unauthorized agent with access to the full context graph might expose sensitive information, override policy boundaries, or leak user data.

Key challenges include:

- Defining fine-grained access control lists (ACLs) for MCUs

- Preventing context leakage between agents or tasks

- Implementing robust auditing and logging of all context interactions

- Managing user consent and data governance in compliance-heavy industries (e.g., healthcare, finance)

These security requirements add overhead and require careful design of context visibility layers, often enforced via the Context Controller. Unlike traditional prompt-based systems, where data exposure is limited to one-shot interactions, MCP requires ongoing protection of structured, persistent memory.
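One way a Context Controller might enforce per-MCU ACLs and auditing is sketched below. It is an illustration built on assumptions: `ContextController`, its fields, and the deny-by-default rule are hypothetical, and a real deployment would persist the audit log to tamper-resistant storage rather than an in-memory Python list.

```python
from dataclasses import dataclass, field


@dataclass
class ContextController:
    """Hypothetical controller that gates every MCU read and logs the outcome."""
    acl: dict[str, set[str]]   # MCU id -> agent ids allowed to read it
    data: dict[str, str]       # MCU id -> content
    audit_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def read(self, agent: str, mcu_id: str) -> str:
        # Deny by default: an MCU with no ACL entry is readable by no one.
        allowed = agent in self.acl.get(mcu_id, set())
        # Record the attempt before enforcing, so denials are audited too.
        self.audit_log.append((agent, mcu_id, allowed))
        if not allowed:
            raise PermissionError(f"{agent} may not read {mcu_id}")
        return self.data[mcu_id]
```

For example, with `acl={"patient.dx": {"clinician"}}`, a read by `"clinician"` succeeds while a read by `"billing_bot"` raises `PermissionError`, and both attempts appear in `audit_log`. Logging denied reads alongside granted ones lets auditors see attempted as well as actual access.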

Latency and Performance Bottlenecks

MCP introduces more moving parts into the inference pipeline—graph lookups, context merging, policy checks, mutation hooks—all of which can introduce latency into the system. While MCP was designed for scalability, real-world performance depends heavily on implementation details like:

- Query optimization in the context store

- In-memory caching strategies

- Asynchronous event handling for context updates

In high-throughput applications (e.g., customer support, trading systems), even small slowdowns can degrade user experience. This tension between control and speed is one of the major engineering challenges facing MCP adoption at scale.

Mitigation strategies:

- Use lightweight MCUs for runtime tasks, archive heavier context in cold storage

- Pre-assemble context views for known patterns or workflows

- Employ caching and lazy evaluation wherever feasible
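The caching and pre-assembly strategies can work together: build the context view for a known workflow and role once, reuse it until the underlying store changes, and rebuild it only when it is next requested. The `ViewCache` class below is a hypothetical sketch. It uses a single global version counter for invalidation, which is simple but coarse, since any write invalidates every cached view.

```python
from typing import Callable, Iterable


class ViewCache:
    """Caches assembled context views per (workflow, role), tied to a store version."""

    def __init__(self, assemble: Callable[[str, str], list[str]]):
        self._assemble = assemble  # the expensive step: graph lookups, policy checks, ...
        self._version = 0
        self._cache: dict[tuple[str, str], tuple[int, list[str]]] = {}
        self.builds = 0            # number of cache misses, exposed for illustration

    def notify_write(self) -> None:
        """Call on every context write; bumping the version marks all views stale."""
        self._version += 1

    def view(self, workflow: str, role: str) -> list[str]:
        key = (workflow, role)
        cached = self._cache.get(key)
        if cached is not None and cached[0] == self._version:
            return cached[1]                       # fresh hit: no assembly cost
        built = self._assemble(workflow, role)     # lazy: rebuilt only when requested
        self.builds += 1
        self._cache[key] = (self._version, built)
        return built

    def warm(self, pairs: Iterable[tuple[str, str]]) -> None:
        """Pre-assemble views for known (workflow, role) patterns ahead of traffic."""
        for workflow, role in pairs:
            self.view(workflow, role)
```

A finer-grained design would track which MCUs each view depends on and invalidate only the affected views, at the cost of more bookkeeping.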

Human Interpretability and Debugging

One of the strengths of MCP is that it brings structure and traceability to AI behavior. But paradoxically, the complexity of context graphs and propagation logic can make it harder for humans to debug when something goes wrong.

Common issues include:

- Agents failing due to unseen context dependencies

- Outputs changing unexpectedly after seemingly unrelated context updates

- Difficulty in tracing back what specific context caused a model response

To overcome this, teams need observability tools that let them inspect context snapshots, visualize graphs, simulate agent views, and replay historical workflows. These tools are still in their infancy compared to traditional application debuggers.
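A basic form of such tooling is simply recording the exact view each agent saw on every model call. The hypothetical `TraceRecorder` below snapshots the view, stores it with the output, and supports replaying a call and diffing two views. The `model` argument is any callable standing in for a real LLM client, and views are assumed to be flat dictionaries.

```python
import copy
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class TraceRecorder:
    """Records the context view behind each model call for inspection and replay."""
    calls: list[dict] = field(default_factory=list)

    def call(self, model: Callable[[dict], str], agent: str, view: dict) -> str:
        # Freeze the view so later context mutations cannot rewrite history.
        snapshot = copy.deepcopy(view)
        output = model(copy.deepcopy(snapshot))  # the model cannot alter the stored snapshot
        self.calls.append({"agent": agent, "view": snapshot, "output": output})
        return output

    def replay(self, index: int, model: Callable[[dict], str]) -> str:
        """Re-run a recorded call on its original snapshot (e.g. with a patched model)."""
        return model(copy.deepcopy(self.calls[index]["view"]))

    def diff(self, i: int, j: int) -> dict:
        """Keys whose values differ between two recorded views, as (old, new) pairs."""
        a, b = self.calls[i]["view"], self.calls[j]["view"]
        return {k: (a.get(k), b.get(k)) for k in a.keys() | b.keys() if a.get(k) != b.get(k)}
```

Diffing the views behind two calls directly answers the "what changed?" question raised when an output shifts after a seemingly unrelated context update.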

The goal: To make MCP systems as transparent to developers as APIs and databases are today.

Open Questions and Research Areas

As promising as MCP is, many questions remain:

- Can MCP be extended to multi-modal systems, coordinating vision, text, and code?

- How do we design context languages—structured schemas for domain-specific MCUs?

- Will LLMs evolve to become context-native, accepting MCUs directly rather than serialized prompts?

These are the next frontiers for research and tooling as the AI community continues to push toward modular, intelligent, and memory-aware systems.

The Future of AI with MCP

Standards, Ecosystems, and Beyond

The Model Context Protocol (MCP) represents a significant architectural step toward realizing scalable, modular, and intelligent AI systems. While the current adoption of MCP remains limited to advanced prototypes and early implementations, its foundational principles—structured context, agent coordination, and policy-driven memory—are gaining momentum across the AI ecosystem. Looking ahead, MCP is poised to evolve from a conceptual framework into a foundational standard that underpins the next generation of AI platforms.

This section explores the prospective trajectory of MCP in terms of formal standardization, ecosystem maturity, tooling advancement, and its broader impact on AI system design.

Toward a Formalized MCP Standard

At present, MCP remains an architectural philosophy rather than a codified specification. However, as more developers, researchers, and organizations converge on similar approaches to structured context management, the call for standardization grows louder. A formal MCP specification would likely include:

- A schema for Model Context Units (MCUs), including metadata, visibility rules, and data types.

- A Context Graph model that defines dependencies, relationships, and propagation logic.

- A well-defined API for Context Controllers, including access, mutation, and versioning operations.

- Guidelines for policy enforcement, including audit trails, lifecycle management, and security constraints.
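To illustrate what a Context Graph model with propagation logic might cover, the sketch below records which MCUs are derived from which and, when one unit changes, lists every downstream unit that needs recomputing, in dependency order. The `ContextGraph` class and its method names are hypothetical. The ordering uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+), which raises `CycleError` if the dependencies contain a cycle.

```python
from collections import defaultdict, deque
from graphlib import TopologicalSorter


class ContextGraph:
    """Hypothetical context graph: edges record which MCUs are derived from which."""

    def __init__(self) -> None:
        self.deps: dict[str, set[str]] = defaultdict(set)        # unit -> units it depends on
        self.dependents: dict[str, set[str]] = defaultdict(set)  # unit -> units derived from it

    def add(self, unit: str, depends_on: tuple[str, ...] = ()) -> None:
        self.deps[unit].update(depends_on)
        for upstream in depends_on:
            self.dependents[upstream].add(unit)
            self.deps.setdefault(upstream, set())

    def stale_after_change(self, changed: str) -> list[str]:
        """All units downstream of `changed`, ordered so each follows its inputs."""
        affected, queue = set(), deque([changed])
        while queue:  # breadth-first walk over "derived from" edges
            for child in self.dependents[queue.popleft()]:
                if child not in affected:
                    affected.add(child)
                    queue.append(child)
        # Restrict each unit's predecessors to the affected set, then sort.
        order = TopologicalSorter({u: self.deps[u] & affected for u in affected})
        return list(order.static_order())
```

For example, if `plan` depends on `user.profile`, `itinerary` on `plan`, and `summary` on both `itinerary` and `plan`, a change to `user.profile` yields `["plan", "itinerary", "summary"]`.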

The benefits of such a standard are several: interoperability between tooling vendors, lower integration costs, and broader adoption across industries. Cloud computing offers a parallel: container orchestration converged on Kubernetes and infrastructure provisioning on Terraform, and a formal MCP standard could act as a similar unifying force in AI infrastructure.

Open collaboration between AI labs, academic researchers, and industry consortia will be key to defining and ratifying this standard. The emergence of MCP-centric open-source frameworks may catalyze this process.

Emergence of MCP-Native Runtimes and Platforms

As MCP principles mature, they are likely to influence the design of entire AI runtime environments. Just as operating systems manage memory, processes, and scheduling, future AI runtimes will manage context flow, agent interactions, and semantic state. In this paradigm, MCP would serve as a foundational protocol layer.

We are already witnessing early signs of this evolution. Frameworks like LangGraph and Microsoft AutoGen, along with ReAct-style agent designs, are beginning to implement context-aware routing, agent-specific memory, and structured task decomposition. These approaches, while not yet full MCP implementations, demonstrate that runtime-level context control is not only feasible but desirable.

The likely outcome is the rise of MCP-native AI platforms, which offer:

- Declarative workflows where context is passed and mutated automatically.

- Built-in observability and replay functionality.

- Modular agent frameworks capable of dynamic role assignment and inter-agent context synchronization.

These systems will move beyond the static prompt–response loop and toward contextualized computation environments, where each agent or model operates within a shared and persistent context ecosystem.

Tooling and Abstraction Layers

For MCP to reach mainstream adoption, the development of user-friendly tooling will be critical. Most current implementations require significant custom engineering, including data modeling, controller design, and manual debugging of context flows. As with any complex system, usability is a primary driver of adoption.

Several key tooling areas are expected to emerge:

- Visual context editors, allowing developers to build and inspect context graphs through low-code interfaces.

- MCP debuggers, which enable step-by-step replay of agent workflows and context state mutations.

- Agent test harnesses, where scenarios can be simulated under various context configurations.

- Declarative policy languages, enabling context control logic to be defined using readable, high-level syntax (akin to SQL or YAML).

These abstractions will reduce implementation complexity and open the door for adoption by smaller teams and non-specialist developers. Over time, these tools may become integral components of integrated development environments (IDEs) for AI, much like build pipelines and debuggers in traditional software engineering.

Integration with AI Governance and Compliance Frameworks

As regulatory scrutiny of AI systems intensifies, MCP offers a uniquely strong alignment with emerging governance principles. Key areas where MCP can contribute include:

- Transparency: By maintaining structured, versioned records of all context inputs and mutations, MCP makes it possible to trace each model decision back to the exact context that informed it.

- Fairness and Bias Auditing: Access control layers within MCP can restrict exposure of sensitive attributes or enable targeted interventions.

- Reproducibility: Version-controlled context enables auditors or regulators to recreate historical AI outputs based on the exact state at the time of inference.

Governments and standards bodies seeking to mandate explainability, safety, and control in AI systems may find MCP-aligned architectures easier to audit and to bring into compliance. As such, we may see MCP principles embedded into AI assurance frameworks and compliance checklists in the near future.
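For the reproducibility point, an auditor first needs proof of which context state a historical output was produced from. One hedged approach, sketched below, is to store a content hash alongside every output. Because an LLM's output also depends on the model and its sampling settings, the sketch hashes those together with the view. The function name and payload layout are assumptions, not a prescribed format.

```python
import hashlib
import json


def context_fingerprint(view: dict, model_id: str, params: dict) -> str:
    """SHA-256 over a canonical JSON encoding of everything that shaped an output."""
    payload = {"view": view, "model": model_id, "params": params}
    # sort_keys and fixed separators make the encoding canonical, so key order
    # and whitespace cannot change the hash.
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```

Two dictionaries with the same contents in a different insertion order produce the same fingerprint, while any change to the view, model identifier, or parameters (for instance a different temperature) produces a different one.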

Distributed and Federated Context Models

Looking further ahead, the future of MCP may extend beyond centralized context management and into distributed and federated environments. This evolution will be essential for deploying intelligent systems in settings where data sovereignty, privacy, or autonomy are critical.

Consider a global supply chain scenario in which multiple companies operate their own AI agents, but must still coordinate decisions. A federated MCP architecture could allow:

- Shared context graphs with selective visibility, where each participant accesses only their permitted subset.

- Cryptographically signed MCUs, ensuring tamper-proof records of decisions and tasks.

- Cross-organization context propagation that respects privacy and compliance boundaries.
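Tamper evidence for MCUs can be illustrated with Python's standard `hmac` module. This is a simplification: an HMAC relies on a secret shared by signer and verifier, so any party able to verify could also forge tags. Across organizations, an asymmetric signature scheme such as Ed25519, where only the private-key holder can sign, would be the more appropriate choice. `sign_mcu` and `verify_mcu` are hypothetical helper names.

```python
import hashlib
import hmac
import json


def _canonical(mcu: dict) -> bytes:
    """Deterministic byte encoding, so signer and verifier hash identical input."""
    return json.dumps(mcu, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_mcu(mcu: dict, key: bytes) -> dict:
    tag = hmac.new(key, _canonical(mcu), hashlib.sha256).hexdigest()
    return {"mcu": dict(mcu), "sig": tag}  # copy so later edits to `mcu` don't leak in


def verify_mcu(signed: dict, key: bytes) -> bool:
    expected = hmac.new(key, _canonical(signed["mcu"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, signed["sig"])  # constant-time comparison
```

Changing any field of a signed MCU, such as an order quantity, causes verification to fail, which gives each participant a tamper-evident record of shared decisions.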

Such a model aligns closely with broader trends in edge AI, zero-trust architectures, and decentralized intelligence. It opens the possibility for AI ecosystems that are collaborative, modular, and sovereign—each system acting with contextual awareness while maintaining autonomy.

Philosophical Shift: From Prompting to Context Programming

Beyond the technical and operational advancements, MCP signals a deeper paradigm shift in how developers interact with AI systems. The current state of AI engineering remains largely rooted in prompt engineering—a brittle and imprecise method of steering model behavior. MCP, by contrast, introduces the concept of context programming: designing structured, evolving, and programmable environments in which models operate.

This shift brings AI system development closer to traditional software engineering, where:

- Behavior is shaped by state and configuration, not just ad-hoc inputs.

- Modules interact through well-defined protocols.

- Systems can be tested, audited, and evolved in a controlled manner.

As MCP adoption increases, developers will spend less time writing clever prompts and more time defining rules, policies, and structures that govern model behavior in a principled way. This, ultimately, is the foundation for AI systems that are not only more capable, but more reliable, explainable, and human-aligned.

Conclusion

The Model Context Protocol (MCP) represents a pivotal advancement in the architectural foundation of artificial intelligence systems. As AI becomes increasingly embedded in enterprise workflows, developer tools, autonomous agents, and decision-support platforms, the need for robust, persistent, and interpretable context management has become both urgent and unavoidable. MCP addresses this need by reframing context not as a transient byproduct of interaction, but as a structured, dynamic layer that is central to intelligent behavior.

Throughout this article, we have explored the limitations of traditional context-handling mechanisms—ranging from prompt engineering and vector memory to ad hoc agent memory. These methods, while effective in narrow scopes, fall short when AI systems are required to operate continuously, adaptively, and in coordination with other agents or tools. MCP overcomes these constraints by introducing a formalized approach to context propagation, versioning, access control, and semantic linking across multiple models and components.

By implementing structured units of context, governed by programmable controllers and organized within dynamic graphs, MCP enables AI systems to exhibit properties once thought to be exclusive to human cognition: memory retention, task decomposition, contextual awareness, and goal continuity. It paves the way for a new generation of AI platforms that are not only more powerful but also more transparent, controllable, and secure.

However, the implementation of MCP is not without its challenges. It introduces architectural complexity, requires thoughtful design of access and mutation policies, and demands a level of observability that few current AI tools provide. Nonetheless, as the ecosystem matures—with the development of open standards, visual tools, and reference runtimes—these challenges are increasingly surmountable.

In the coming years, MCP may well emerge as a foundational element of the AI software stack, much like APIs and protocols have done for traditional computing. It offers a vision of AI that is less reliant on opaque prompts and more grounded in structured, auditable, and modular systems. For developers, researchers, and organizations seeking to build intelligent, reliable, and scalable AI systems, MCP offers not just a framework, but a forward-looking blueprint for the future.

References

- System Message Basics: https://platform.openai.com/docs/guides/gpt/system-message

- Memory and Context Handling: https://docs.langchain.com/docs/components/memory/

- Multi-Agent Conversational Framework: https://microsoft.github.io/autogen/

- Stateful Multi-Agent Workflows with LLMs: https://langgraph.dev/

- Orchestrating Collaborative AI Agents: https://docs.crewai.io/

- Reasoning and Acting in Language Models: https://arxiv.org/abs/2210.03629

- Using Function Calling and Memory: https://github.com/openai/openai-cookbook/blob/main/examples/Function_calling_and_memory.ipynb

- Vector Database for Semantic Search and RAG: https://www.pinecone.io/

- Open-Source Vector Search Engine: https://weaviate.io/

- Composable Agent Framework: https://huggingface.co/blog/hugginggpt

- AI Orchestration Framework: https://github.com/microsoft/semantic-kernel

- Composable LLM Agents with Shared Memory: https://openagents.github.io/

- Logging, Observability, and Prompt Debugging: https://www.promptlayer.com/

- LLM Observability and Tracing: https://arize.com/llm-observability/

- Constitutional AI and Context Windows: https://www.anthropic.com/index/claude