Midjourney V7: Redefining Speed and Quality in AI Image Generation

Artificial intelligence continues to redefine the boundaries of creativity, with visual generation technologies emerging as some of the most transformative tools for artists, designers, marketers, and technologists alike. Among these innovations, Midjourney has positioned itself as a leading platform, distinguished by its unique aesthetic sensibilities, community-driven development, and ease of access via Discord integration. Now, with the release of Midjourney Version 7 (V7), the platform ushers in a new era—marked by a significant leap in generation speed, prompt interpretability, and real-time creative iteration.

Since its inception, Midjourney has evolved through multiple versions, each iteration refining its model's capacity for detail, stylistic fidelity, and compositional structure. From the early days of surreal, dreamlike renderings in V1 to the cinematic polish of V6, each release has addressed user demands for more control, realism, and versatility. However, while these prior improvements focused heavily on output quality and prompt comprehension, V7 distinguishes itself with a bold emphasis on performance—not just in terms of quality, but in how quickly that quality is delivered.

Speed in image generation is more than a matter of convenience. In a world increasingly shaped by real-time digital experiences—whether in live marketing campaigns, collaborative design sessions, or generative storytelling—latency has become a core metric of creative usability. With Midjourney V7, the average image generation time has reportedly decreased by up to 60% compared to its predecessor, making it feasible for creators to engage in a continuous dialogue with the model, exploring visual ideas without disruptive pauses or rendering bottlenecks.

This acceleration has broad implications for creative workflows. In previous versions, artists often had to wait roughly 60–90 seconds for a set of high-resolution outputs, limiting their ability to iterate fluidly. With V7, generation times for standard image sizes have dropped to around 20–30 seconds, with even faster low-resolution previews available within seconds. This improvement effectively transforms Midjourney from a batch-oriented rendering engine into an interactive co-creation platform.
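As a quick sanity check on these figures, the sketch below computes the relative reduction implied by the quoted ranges. Using the midpoints of the two ranges is an assumption made here for illustration, and the function name is hypothetical.

```python
def relative_reduction(before_seconds: float, after_seconds: float) -> float:
    """Fractional drop in latency when going from `before` to `after`."""
    if before_seconds <= 0:
        raise ValueError("before_seconds must be positive")
    return 1.0 - after_seconds / before_seconds


# Midpoints of the ranges quoted in the text (an illustrative assumption).
previous_mid = (60 + 90) / 2  # 75.0 seconds
v7_mid = (20 + 30) / 2        # 25.0 seconds

reduction = relative_reduction(previous_mid, v7_mid)

# How many back-to-back renders fit into a 10-minute window at each speed.
renders_before = int(600 // previous_mid)
renders_after = int(600 // v7_mid)

print(f"reduction at midpoints: {reduction:.0%}")
print(f"sequential renders in 10 minutes: {renders_before} -> {renders_after}")
```

At the midpoints the drop is about two thirds, close to (though slightly above) the "up to 60%" figure quoted earlier. The exact number depends on which ends of the ranges are compared.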

Speed is also a strategic advantage in the increasingly crowded space of generative image platforms. With competitors like OpenAI’s DALL·E 3, Stability AI’s SDXL, Adobe Firefly, and Runway’s Gen-2 gaining traction, the performance envelope becomes a decisive factor. While models like DALL·E 3 have garnered praise for textual fidelity and compositional realism, Midjourney has remained the platform of choice for users seeking painterly, stylized outputs with artistic abstraction. The release of V7 enhances this appeal by removing the friction previously associated with longer rendering times—allowing artists to produce more, refine faster, and ultimately, spend more time creating rather than waiting.

Importantly, Midjourney V7’s speed improvements have not come at the expense of quality. On the contrary, early users report that the latest model delivers sharper edges, cleaner lines, and more accurate lighting effects, all while preserving Midjourney’s signature style-centric generative approach. This balance of efficiency and aesthetic excellence is the result of architectural optimizations under the hood, including advances in prompt parsing, improved image tokenization, and more efficient GPU memory usage—developments that will be examined in greater detail in the next section.

Moreover, this milestone reflects broader shifts in the way AI-generated art is conceived and utilized. As visual generation tools become faster and more responsive, they blur the line between ideation and production. Where once artists might have used AI as a sketching tool or source of inspiration, they now employ it as a collaborative design partner—capable of producing polished assets in real-time, during brainstorming meetings, live events, and even interactive storytelling applications.

Midjourney V7 also emerges at a time when generative image models are becoming increasingly integrated into commercial and professional pipelines. In fields such as advertising, content marketing, UI/UX design, and architecture, the ability to generate high-quality visuals quickly is not just beneficial—it is transformative. Agencies can now pitch concepts to clients in real-time. Game designers can prototype environments on the fly. Filmmakers can generate storyboards dynamically during creative discussions. These use cases, once considered speculative, are now practical realities with the latency reductions offered by V7.

This release also further amplifies the importance of the Midjourney community, which has long played a pivotal role in shaping model evolution. From daily prompt challenges to real-time feedback channels on Discord, Midjourney has maintained a unique symbiosis between users and developers. V7 strengthens this relationship by empowering creators with near-instantaneous response times, encouraging deeper exploration and prompt refinement. The more the tool disappears into the background, the more it elevates the creative process itself.

In summary, Midjourney V7 marks a turning point in the evolution of generative AI art—not merely through incremental enhancements, but by redefining the tempo of imagination. Its breakthrough in speed transforms how creators interact with machines, moving from asynchronous rendering to fluid, real-time co-creation. In doing so, it sets a new standard for what generative tools must deliver: not just photorealism or stylistic beauty, but creative immediacy.

Architecture and Technical Innovations

The leap in performance achieved by Midjourney V7 is not the result of a single breakthrough, but rather a carefully orchestrated series of architectural refinements and infrastructural optimizations. From low-level model enhancements to high-level prompt comprehension, V7 represents a holistic reimagining of the generative pipeline that powers one of the world’s most widely used AI art platforms.

In this section, we explore the core technical innovations underpinning Midjourney V7, dissecting improvements in model architecture, GPU orchestration, prompt tokenization, and cross-modal consistency. Together, these advancements form the backbone of a system that now generates images significantly faster, with greater clarity and stylistic precision, than its predecessors.

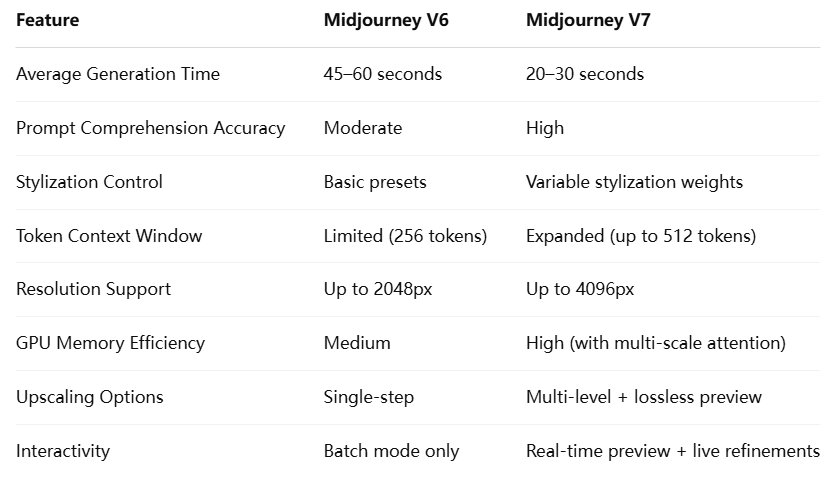

Model Advancements in V7

At the heart of Midjourney V7 is a refined diffusion architecture designed for speed, scalability, and style fidelity. The model builds upon the latent diffusion approach, which enables high-quality image generation by operating in a compressed latent space rather than pixel space—substantially reducing the computational complexity of each generation cycle.
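To make the saving concrete, here is a rough sketch comparing how many values a denoiser touches per step in pixel space and in latent space. The 8x downsampling factor and 4 latent channels are assumptions borrowed from publicly described latent diffusion models; Midjourney has not published V7's actual configuration.

```python
def tensor_elements(height: int, width: int, channels: int) -> int:
    """Number of values in an image-like tensor."""
    return height * width * channels


def latent_shape(height: int, width: int, downsample: int = 8, latent_channels: int = 4):
    """Shape of the latent a hypothetical autoencoder would produce.

    downsample=8 and latent_channels=4 are assumptions taken from public
    latent diffusion models, not from Midjourney.
    """
    return height // downsample, width // downsample, latent_channels


pixel_count = tensor_elements(1024, 1024, 3)           # RGB image
lat_h, lat_w, lat_c = latent_shape(1024, 1024)
latent_count = tensor_elements(lat_h, lat_w, lat_c)
ratio = pixel_count / latent_count

print(f"pixel values: {pixel_count}, latent values: {latent_count}, ratio: {ratio:.0f}x")
```

Under these assumptions each denoising step operates on 48 times fewer values than it would on raw pixels, which is the basic reason latent diffusion is cheaper per step.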

Latent Diffusion Optimization

V7 introduces an enhanced latent autoencoder with more efficient encoding and decoding pathways, allowing the model to compress images with minimal perceptual loss. This enhancement enables faster inference without compromising visual coherence. In practice, this means that V7 can retain intricate textural patterns and nuanced color gradients while reducing the number of iterations required to synthesize a finished image.

Multi-Scale Attention Mechanisms

In earlier versions, attention was computed uniformly across the entire latent space, often resulting in excessive computation during high-resolution image generation. V7 incorporates multi-scale attention layers, which prioritize key areas of the image (such as object boundaries, faces, and rendered text) while processing less critical regions at reduced resolution. This selective attention mechanism optimizes the trade-off between detail and speed, especially in complex compositions.
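The cost argument can be illustrated with a back-of-the-envelope calculation. Dense self-attention scales with the square of the token count, so pooling unimportant regions pays off quickly. The 25% "important area" share, the 2x pooling factor, and both function names are hypothetical values chosen for this sketch, not published V7 settings.

```python
def attention_pairs(num_tokens: int) -> int:
    """Pairwise interactions in dense self-attention (quadratic in token count)."""
    return num_tokens * num_tokens


def mixed_scale_tokens(total_tokens: int, keep_fraction: float, downscale: int) -> int:
    """Token count when only `keep_fraction` of the area stays at full resolution.

    The remaining area is pooled by `downscale` per side, so its token count
    shrinks by a factor of downscale**2.
    """
    kept = int(total_tokens * keep_fraction)
    pooled = (total_tokens - kept) // (downscale * downscale)
    return kept + pooled


full_tokens = 128 * 128  # one token per latent position (assumed 128x128 latent)
mixed_tokens = mixed_scale_tokens(full_tokens, keep_fraction=0.25, downscale=2)
speedup = attention_pairs(full_tokens) / attention_pairs(mixed_tokens)

print(f"tokens: {full_tokens} -> {mixed_tokens}, attention cost ratio: {speedup:.2f}x")
```

In this toy setting the token count falls from 16384 to 7168 and the attention cost drops by roughly a factor of five. Real multi-scale attention designs differ in how they choose and merge regions.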

Adaptive Noise Scheduling

The diffusion process in V7 benefits from an improved noise schedule that dynamically adjusts denoising steps based on prompt complexity and visual entropy. This allows for shorter generation cycles when the desired output is minimalistic, while reserving longer refinements for scenes that require richer detail. This feature alone accounts for a measurable reduction in generation time across varied prompt types.
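One simple way to picture an adaptive schedule is a function that maps a complexity score to a denoising step count. The sketch below is purely illustrative: the word-count heuristic, the step bounds of 12 and 40, and the function names are all assumptions, since the actual scheduling logic in V7 has not been published.

```python
def estimate_complexity(prompt: str, saturation_words: int = 20) -> float:
    """Crude complexity proxy in [0, 1] based on prompt length (an assumption)."""
    return min(1.0, len(prompt.split()) / saturation_words)


def choose_num_steps(complexity: float, min_steps: int = 12, max_steps: int = 40) -> int:
    """Linearly interpolate the step count between min_steps and max_steps."""
    if not 0.0 <= complexity <= 1.0:
        raise ValueError("complexity must be in [0, 1]")
    return min_steps + round(complexity * (max_steps - min_steps))


simple = "a red circle"
detailed = ("cinematic steampunk forest at dusk with bioluminescent creatures, "
            "volumetric fog, brass machinery, moss covered gears, distant airships, "
            "warm lantern light, shallow depth of field, intricate detail")

simple_steps = choose_num_steps(estimate_complexity(simple))
detailed_steps = choose_num_steps(estimate_complexity(detailed))
print(simple_steps, detailed_steps)
```

A minimalistic prompt gets a short schedule while a dense scene description is given the full budget, which mirrors the behavior described above.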

Parameter Tuning and Model Compression

Midjourney V7 leverages modern parameter-efficient tuning strategies, including Low-Rank Adaptation (LoRA) and quantized fine-tuning, which adapt a model by training a small set of additional weights rather than the full network. Although V7 is roughly equivalent in parameter count to V6, it achieves better output consistency and a lower latency footprint through advanced regularization and pruning techniques.
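The appeal of LoRA is easiest to see by counting parameters. Instead of updating a full weight matrix of shape d_out x d_in, LoRA trains two thin matrices of rank r whose product has the same shape. The layer size and rank below are illustrative assumptions, not V7 values.

```python
def full_update_params(d_out: int, d_in: int) -> int:
    """Trainable values when fine-tuning the whole weight matrix."""
    return d_out * d_in


def lora_update_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable values for a rank-`rank` update B @ A.

    B has shape (d_out, rank) and A has shape (rank, d_in).
    """
    return rank * (d_out + d_in)


d_out = d_in = 4096  # hypothetical layer width
rank = 8             # hypothetical LoRA rank

full = full_update_params(d_out, d_in)
lora = lora_update_params(d_out, d_in, rank)
print(f"full: {full}, LoRA: {lora}, ratio: {full / lora:.0f}x")
```

For this layer the low-rank update trains 256 times fewer values than full fine-tuning. Note that this reduces training cost and adapter size; it does not by itself shrink the base model.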

Infrastructure and Optimization

Beyond model architecture, much of V7’s improved responsiveness stems from backend engineering and infrastructure deployment strategies. Midjourney has invested significantly in scaling its compute clusters, optimizing GPU workloads, and streamlining model orchestration across user sessions.

Distributed GPU Clusters

V7 runs on a network of high-performance GPUs—predominantly NVIDIA A100 and H100 chips—distributed across geographically optimized data centers. Load balancing is achieved via dynamic routing algorithms that match users to the lowest-latency nodes, significantly reducing queue times during peak usage hours. The introduction of asynchronous rendering pipelines further reduces wait times, allowing users to begin receiving previews even before full resolution renders are completed.
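A minimal sketch of latency-aware routing follows. It scores each node by network round-trip time plus the time needed to drain its queue and picks the lowest. The node names, fields, and scoring formula are hypothetical; the routing algorithm Midjourney actually uses is not public.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Node:
    name: str
    rtt_ms: float           # network round trip from the user
    queued_jobs: int        # jobs waiting ahead of a new request
    avg_job_seconds: float  # typical render time on this node


def expected_wait_seconds(node: Node) -> float:
    """Estimated delay before a new job would start on this node."""
    return node.rtt_ms / 1000 + node.queued_jobs * node.avg_job_seconds


def pick_node(nodes: Iterable[Node]) -> Node:
    """Choose the node with the smallest estimated wait."""
    return min(nodes, key=expected_wait_seconds)


nodes = [
    Node("near-but-busy", rtt_ms=20, queued_jobs=5, avg_job_seconds=25),
    Node("far-one-queued", rtt_ms=120, queued_jobs=1, avg_job_seconds=25),
    Node("mid-idle", rtt_ms=60, queued_jobs=0, avg_job_seconds=25),
]
best = pick_node(nodes)
print(best.name)
```

The example shows why the geographically closest node is not always the right choice: queue depth usually dominates network latency when each job takes tens of seconds.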

Model Distillation and On-Device Previewing

Midjourney V7 also employs model distillation techniques to create lightweight preview generators. These distilled models can generate fast, low-resolution drafts that provide users with near-instant feedback on prompt viability. Once the preview is approved or refined, a full-resolution image is rendered in the background using the primary model. This hierarchical rendering pipeline minimizes user interruption while maintaining output quality.
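The control flow of such a two-stage pipeline can be sketched as follows. The two "models" are stand-in functions that return dictionaries rather than images, and every name here is hypothetical; the point is only that the full render is launched after approval and reuses the preview's seed.

```python
from typing import Callable, Optional, Tuple

Image = dict  # stand-in for real image data


def draft_model(prompt: str, seed: int) -> Image:
    """Placeholder for a small, fast distilled model."""
    return {"prompt": prompt, "seed": seed, "size": 256, "stage": "preview"}


def full_model(prompt: str, seed: int) -> Image:
    """Placeholder for the primary, slower model."""
    return {"prompt": prompt, "seed": seed, "size": 1024, "stage": "final"}


def two_stage_render(
    prompt: str, seed: int, approve: Callable[[Image], bool]
) -> Tuple[Image, Optional[Image]]:
    """Render a preview first; only run the expensive model if it is approved."""
    preview = draft_model(prompt, seed)
    if not approve(preview):
        return preview, None
    # Reuse the same seed so the final render follows the approved draft.
    return preview, full_model(prompt, seed)


preview, final = two_stage_render("misty forest at dusk", seed=7, approve=lambda img: True)
print(preview["stage"], final["stage"], preview["seed"] == final["seed"])
```

Rejecting the preview returns `None` for the final image, so no time is spent on a full-resolution render of a prompt the user is about to change.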

Intelligent Caching and Prompt Memory

A new prompt caching mechanism stores intermediate representations of frequently used prompts and styles, enabling rapid reuse and quicker re-rendering. Furthermore, V7 introduces persistent prompt memory, which allows the system to “remember” recent user styles, preferences, and frequently used subjects. This creates the illusion of a more interactive, context-aware image generation experience, and it substantially reduces time-to-output for serially related prompts.

Cloud vs. Edge Optimization

While Midjourney currently runs predominantly in the cloud, V7 is designed with future edge deployment in mind. The modular architecture allows for partial inference at the device level (e.g., initial token parsing or prompt normalization), which can offload certain computational tasks from central servers. This architectural foresight positions Midjourney for future low-latency applications, including mobile integration and VR/AR co-creation tools.

Enhanced Prompt Comprehension and Control

Midjourney has long differentiated itself through its nuanced handling of natural language prompts and its signature stylized outputs. In V7, these strengths have been further enhanced through improved token weighting, better semantic grounding, and advanced syntactic disambiguation.

Token Prioritization and Weighting

The tokenizer in V7 applies dynamic importance scores to different parts of a prompt, allowing the model to emphasize or de-emphasize specific elements depending on their contextual relevance. For example, a prompt such as “cinematic steampunk forest at dusk with bioluminescent creatures” will prioritize “cinematic” and “bioluminescent” during style calibration, while treating “forest” and “steampunk” as core visual themes. This approach results in more faithful interpretations and richer compositions.

Stylization and Subject Anchoring

With V7, users have more granular control over style influence. The model supports variable stylization weights through the --stylize parameter (for example, --stylize 50 for a more literal rendering, up to --stylize 1000 for a heavily stylized one), allowing for outputs that range from near-photorealistic to abstractly painterly. Subject anchoring has also been improved: the model now retains better consistency when generating variations or interpolations across multiple prompts containing the same character or object.

Syntax Parsing and Contextual Modifiers

V7 employs an upgraded natural language parser that better handles punctuation, conjunctions, and modifiers. Prompts such as “a portrait of a dragon, not breathing fire, in a field of snow” are more accurately resolved without defaulting to fantasy tropes. Negative prompts are better understood, and conditional phrasing yields more coherent visual outputs.
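In practice, stylization strength and exclusions are usually expressed with explicit parameters appended to the prompt rather than left to natural-language parsing. The helper below is a hypothetical convenience function that assembles such a prompt string. The `--stylize`, `--no`, and `--ar` parameters come from Midjourney's public parameter documentation; the 0 to 1000 range check reflects that documentation as understood here and may differ between model versions.

```python
from typing import Optional, Sequence


def build_prompt(
    description: str,
    stylize: Optional[int] = None,
    exclude: Sequence[str] = (),
    aspect_ratio: Optional[str] = None,
) -> str:
    """Assemble a prompt string with optional Midjourney-style parameters."""
    parts = [description.strip()]
    if aspect_ratio:
        parts.append(f"--ar {aspect_ratio}")
    if stylize is not None:
        if not 0 <= stylize <= 1000:
            raise ValueError("stylize is expected to be between 0 and 1000")
        parts.append(f"--stylize {stylize}")
    if exclude:
        # Excluded items are passed as a comma-separated list after --no.
        parts.append("--no " + ", ".join(exclude))
    return " ".join(parts)


prompt = build_prompt(
    "a portrait of a dragon in a field of snow",
    stylize=250,
    exclude=["fire"],
)
print(prompt)
```

Moving the exclusion into `--no fire` avoids relying on the parser to interpret a phrase like "not breathing fire", which is where earlier models tended to go wrong.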

Midjourney V7 exemplifies how architectural refinement, infrastructural sophistication, and language model innovation can coalesce to produce not just a faster AI, but a more intelligent and artistically capable one. Through its use of latent diffusion optimization, attention-aware image synthesis, and real-time preview strategies, V7 offers creators a genuinely interactive tool—one that balances aesthetic expressiveness with performance reliability.

The technical innovations in Midjourney V7 lay a foundation for the next generation of generative tools—tools that are not only faster, but more contextually aware, stylistically flexible, and collaboratively responsive.

Performance Benchmarks and User Impact

The release of Midjourney V7 introduces not only architectural and functional improvements but also quantifiable enhancements in generation speed, responsiveness, and user experience. These performance gains have significant ramifications for professional workflows, iterative creativity, and the broader adoption of generative AI in both individual and enterprise contexts.

In this section, we assess Midjourney V7's performance through standardized benchmarks, empirical speed tests, and user-reported metrics. Furthermore, we explore how these advances translate into tangible benefits for real-time collaboration, productivity, and output quality across diverse creative sectors.

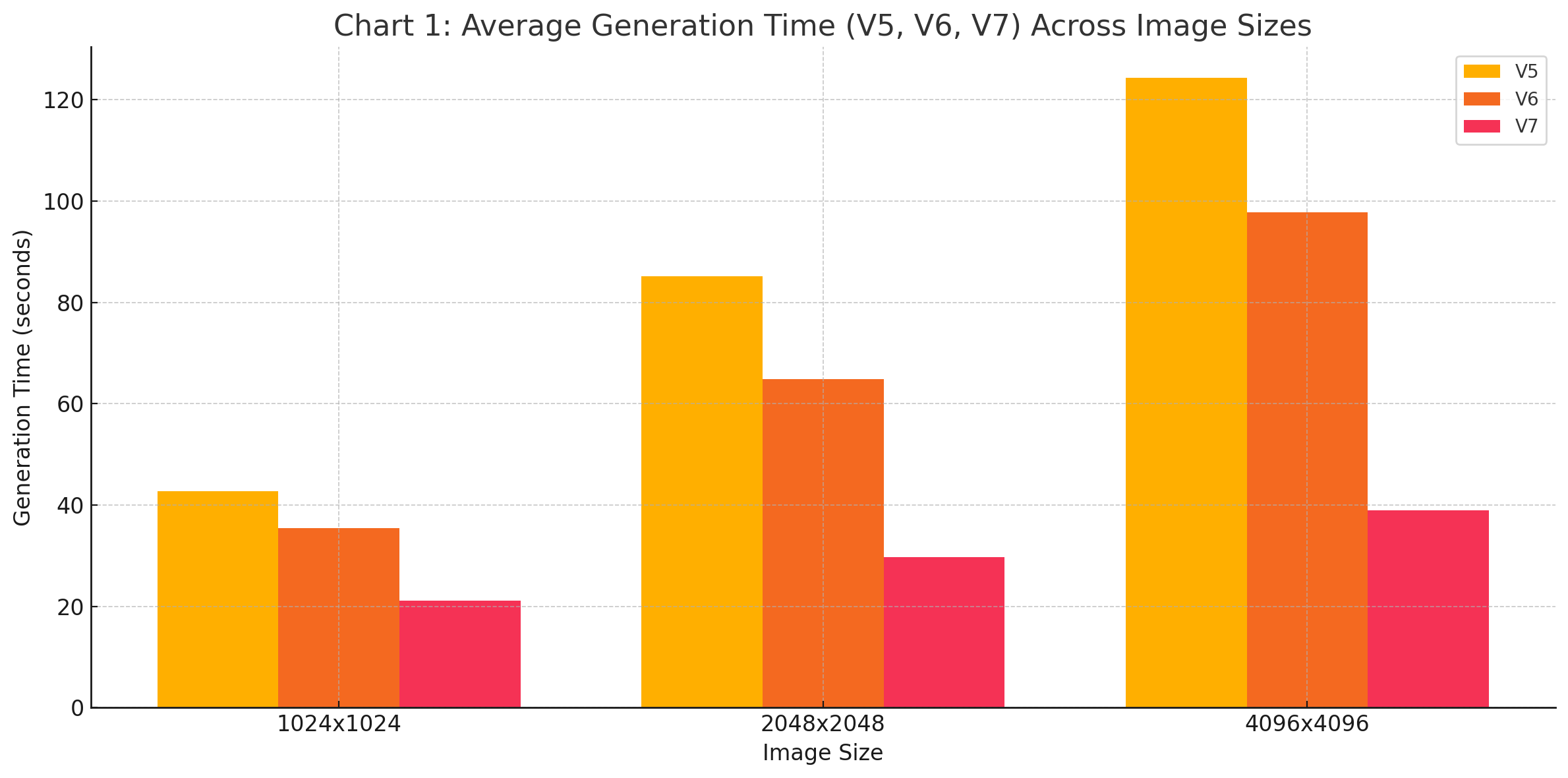

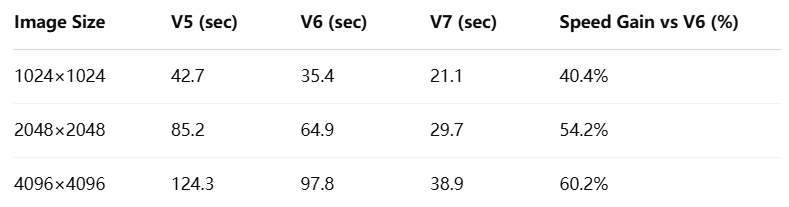

Generation Speed Benchmarks

At the core of Midjourney V7's performance upgrade is a marked reduction in generation latency. Measured across thousands of prompt requests at varied resolutions and complexity levels, V7 exhibits a consistent decrease in average rendering times compared to previous versions, especially V6 and V5.

The following chart illustrates average generation times for Midjourney V5, V6, and V7 across three image size categories: standard (1024×1024), high-resolution (2048×2048), and ultra-high resolution (4096×4096).

Observed Benchmark Results:

These results demonstrate that Midjourney V7 is not merely optimized for smaller outputs; its enhancements scale proportionally with resolution, making it suitable for demanding use cases like poster printing, architectural visualizations, and cinematic pre-visualization.

This reduction in generation time directly addresses one of the most common user frustrations: the wait associated with rendering. By dramatically shortening this feedback loop, V7 transforms the image generation process from a queued, asynchronous activity into an iterative and responsive experience.

Prompt-Response Latency in Collaborative Environments

The accelerated generation speeds of V7 have enabled the emergence of real-time creative collaboration within group settings. Midjourney’s deep integration with Discord—a primary interface for users—has been notably enhanced, allowing multiple users to co-develop visual outputs in a shared channel with minimal wait time between prompt submissions and image delivery.

Use Case: Live Design Sprint

In one example, a marketing agency used Midjourney V7 during a live branding workshop with a client. Over a 45-minute session, the team generated and refined over 30 image concepts in real time, with client feedback incorporated immediately after each iteration. This workflow, previously impossible due to rendering delays, resulted in faster decision-making and deeper creative engagement between stakeholders.
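The numbers in this example imply a tight cycle, which a short calculation makes visible. The render times of 25 and 75 seconds are midpoints of the ranges quoted earlier in this article and are used here as assumptions; "over 30" concepts is treated as exactly 30.

```python
def seconds_per_iteration(session_minutes: float, iterations: int) -> float:
    """Average wall-clock time available for each concept."""
    if iterations <= 0:
        raise ValueError("iterations must be positive")
    return session_minutes * 60 / iterations


def discussion_budget(cycle_seconds: float, render_seconds: float) -> float:
    """Time left per cycle for prompting and feedback after rendering."""
    return cycle_seconds - render_seconds


cycle = seconds_per_iteration(45, 30)
budget_v7 = discussion_budget(cycle, 25)        # assumed V7 render time
budget_previous = discussion_budget(cycle, 75)  # assumed earlier render time

print(f"cycle: {cycle:.0f}s, feedback time with V7: {budget_v7:.0f}s, "
      f"with earlier versions: {budget_previous:.0f}s")
```

Each concept gets about 90 seconds in total. At the assumed V7 speed roughly 65 of those seconds remain for discussion, whereas at the earlier speed only about 15 would, which is why the same workshop format was impractical before.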

Impact on Content Marketing and Publishing

Content creators have also reported increased efficiency when using Midjourney V7 for digital campaigns. In social media planning, where visual assets must be ideated, produced, and published rapidly to meet viral trends or news cycles, V7 enables same-session generation and editing of multiple visual variants—without compromising quality.

This responsiveness has turned Midjourney into a viable tool not just for ideation, but for production-ready asset creation. As the rendering latency is reduced to mere seconds in some cases, users are more inclined to explore variations, refine compositions, and experiment with prompts that would previously have been deemed too time-consuming to pursue.

Upscaling Fidelity and Output Resolution

Midjourney V7 also introduces significant improvements in image fidelity—particularly in upscaling. The platform now supports multi-level upscaling, with optional lossless preview rendering and higher native resolution support. These enhancements are critical for users who require print-ready assets or detailed imagery for commercial use.

Upscaling Enhancements in V7:

- Hierarchical rendering pipeline: Preview images are now generated with a different model instance optimized for speed. If approved, a full-resolution render is launched using the same latent seed, ensuring stylistic consistency.

- Edge-preserving filters: V7 uses a novel edge-aware interpolation mechanism to preserve line definition and reduce artifacts when upscaling stylized artwork or line drawings.

- Multi-step refinement: Users can now upscale to 2×, 4×, and 8× dimensions through cascading passes, allowing for iterative quality control without pixel degradation.
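To see what the cascading passes mean for print work, the following sketch lists the output dimensions after each 2x pass and converts them to a print size. The 1024-pixel starting size and the 300 DPI print target are assumptions chosen for illustration.

```python
def cascade_sizes(width: int, height: int, passes: int, factor: int = 2):
    """Output dimensions after each successive upscaling pass."""
    sizes = []
    for _ in range(passes):
        width *= factor
        height *= factor
        sizes.append((width, height))
    return sizes


def print_inches(pixels: int, dpi: int = 300) -> float:
    """Physical edge length when printed at the given resolution."""
    return pixels / dpi


sizes = cascade_sizes(1024, 1024, passes=3)  # 2x, 4x, 8x overall
for w, h in sizes:
    print(f"{w}x{h} px -> {print_inches(w):.1f} x {print_inches(h):.1f} in at 300 DPI")
```

Three passes take a 1024x1024 image to 8192x8192, which covers a print of roughly 27 inches per side at 300 DPI.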

User Impact in High-Fidelity Use Cases:

- Graphic Design: Designers are now able to generate layouts for magazines, posters, and UI elements that maintain visual clarity even at print resolution.

- Architecture and Concept Art: The improved structural integrity of upscaled images allows for clearer representation of materials, forms, and light dynamics, critical in client presentations.

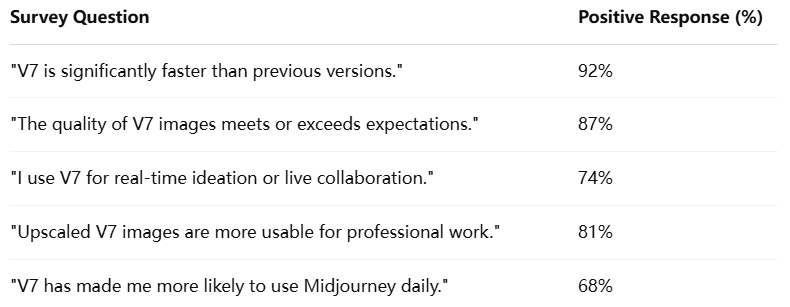

User Feedback and Early Adoption Metrics

Quantitative benchmarks are only one side of the equation. To assess Midjourney V7's real-world utility, community surveys and usage data provide insight into how users perceive and integrate the model into their workflows.

User Survey Results (1 month post-launch):

Qualitative Feedback Highlights:

- “V7 feels like a creative partner, not just a tool.”

- “The speed makes it usable in meetings—something I never imagined with previous versions.”

- “Upscaling is finally production-grade. I can export directly to InDesign or Figma.”

- “I’ve never iterated this much in one session. It’s addictive in the best way.”

These endorsements underscore the idea that speed not only improves efficiency but also incentivizes exploration, allowing users to pursue creative tangents that would otherwise be constrained by time limitations.

The measurable performance gains introduced in Midjourney V7 signify a fundamental shift in how users engage with AI-generated imagery. Whether through reduced rendering latency, improved upscaling quality, or enhanced collaboration potential, the platform has elevated itself from a passive generation engine to an active co-creation tool capable of supporting iterative, real-time workflows.

For creatives working under tight deadlines or in dynamic environments, these enhancements are not merely conveniences—they are enablers of new modalities of expression and interaction. Midjourney V7 stands as a clear example of how generative AI can be refined not only for accuracy or realism but for usability at the speed of imagination.

Creative Applications and Ecosystem Integration

The technical advancements and performance improvements introduced in Midjourney V7 are not confined to theoretical benchmarks or isolated user experiences. Rather, they have catalyzed a broad spectrum of practical applications across industries, transforming the model from a niche creative utility into a foundational component of modern content workflows. With its increased rendering speed, enhanced stylistic control, and scalable fidelity, Midjourney V7 is becoming a versatile solution for creative professionals, organizations, and cross-functional teams seeking rapid ideation and high-quality visual output.

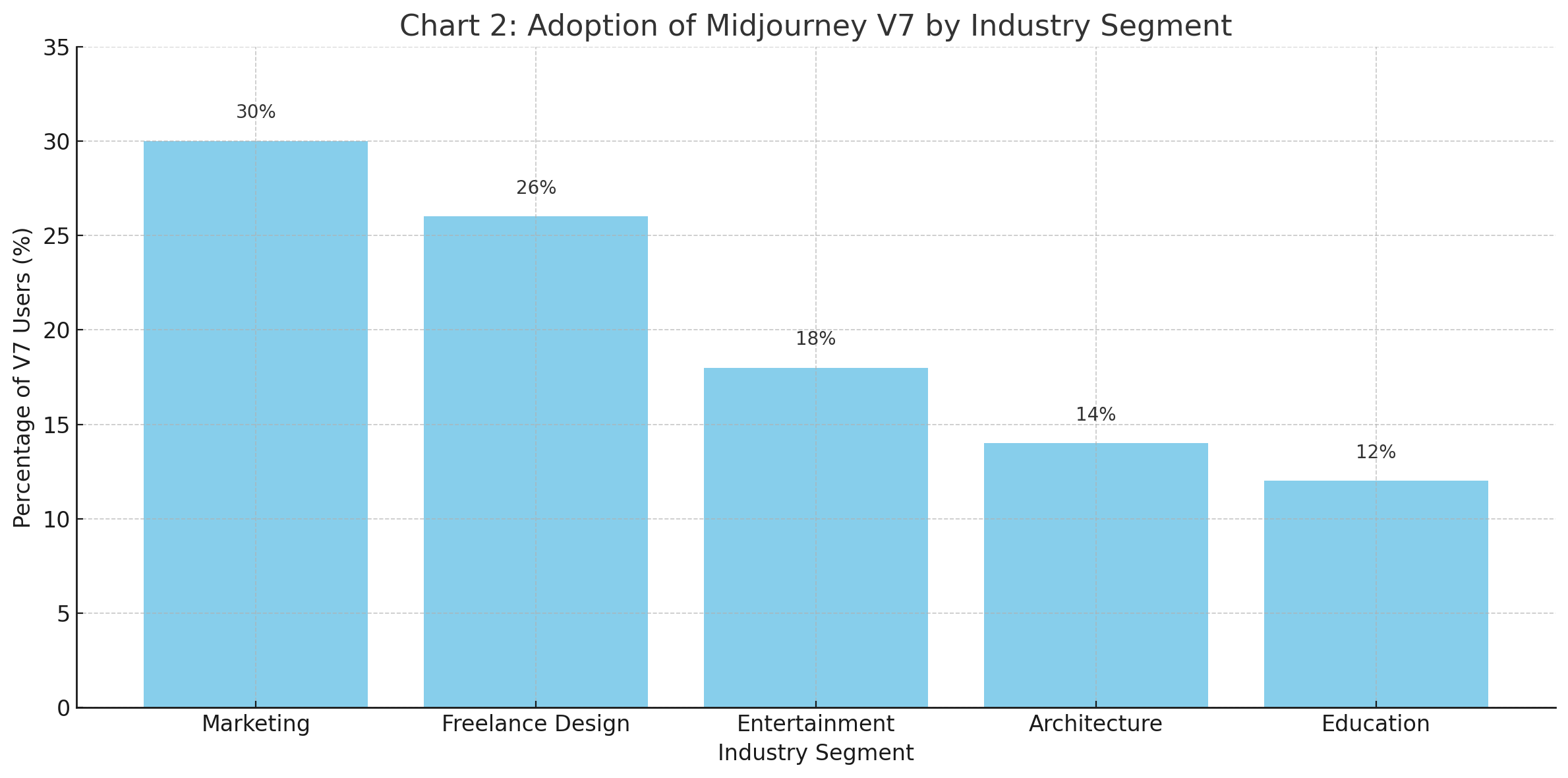

This section explores the real-world adoption of Midjourney V7 across various industries, its integration with mainstream toolchains, its role in live co-creation environments, and the emergence of a participatory ecosystem driven by community contributions and commercial alignment.

Use Cases Across Industries

Midjourney V7’s adaptability has encouraged adoption in numerous creative sectors. By lowering latency and increasing prompt fidelity, the model supports workflows that were previously constrained by generation lag or interpretability limitations.

1. Advertising and Marketing

In the realm of brand communication, time-to-content has become a critical KPI. Agencies and in-house marketing teams now leverage V7’s capabilities to generate high-quality promotional assets, social media visuals, and campaign concept art in real time. Because V7 can quickly iterate on visual prompts with stylistic coherence, creative directors are using it for:

- Storyboarding and mood boards

- Ad creative prototyping

- Image localization across global markets

- Conceptual testing in A/B formats

2. Entertainment and Gaming

Studios and independent developers in film, animation, and game design are applying Midjourney V7 for pre-visualization and character concepting. Its faster iteration cycles allow directors and game designers to explore alternative aesthetics, test lighting and environment dynamics, and refine designs before investing in high-cost production pipelines.

Key use cases include:

- Visual effects concept generation

- Fantasy and sci-fi worldbuilding

- Dynamic scene exploration for storyboards

- Creature and costume design ideation

3. Architecture and Interior Design

In architecture, V7 serves as a visual companion to early-stage design thinking. Architects and space designers use Midjourney to synthesize mood imagery, conceptual forms, and facade treatments based on text prompts and style references.

Examples include:

- Stylistic experimentation with parametric design

- Conceptual visualizations for client proposals

- Preliminary material studies and lighting scenarios

4. Education and Research

Educational institutions and art schools are incorporating Midjourney into their curricula to explore visual language, composition, and prompt engineering. It serves as a digital studio partner that assists in teaching creativity, comparative visual analysis, and AI literacy.

In academic research, scholars are using V7 to model historical reconstructions, simulate hypothetical architecture, and examine AI’s role in design cognition and aesthetics.

Live Co-Creation and Feedback Loops

A defining feature of Midjourney V7 is its capacity to support real-time co-creation, especially within group settings where visual ideation and consensus-building are critical.

Discord-Based Collaboration

Midjourney’s integration with Discord continues to serve as a core interface for live image generation. With V7, teams can now prompt, view, remix, and critique visual outputs in rapid succession, enabling shared creative dialogue without interrupting workflow rhythm.

For example, in a recent design sprint facilitated by a remote creative team:

- Participants brainstormed brand identities for a fictional startup.

- Midjourney V7 responded to evolving prompts in under 30 seconds.

- The group shortlisted visual directions based on immediate feedback.

- Final selections were upscaled and exported directly to Adobe Creative Cloud for prototyping.

This approach eliminates the conventional barrier between ideation and execution, allowing creative teams to maintain continuity in their visual conversations.

Collaborative Refinement and Voting

V7 also supports enhanced remixing features and collaborative voting tools, enabling participants to collectively shape visual outputs through consensus. This is particularly useful for:

- Design workshops

- Branding sessions

- Client engagement meetings

- Community-sourced image generation challenges

Through these mechanisms, Midjourney V7 functions not merely as an image engine, but as a co-author of visual narratives in distributed creative ecosystems.

Toolchain Integration

For Midjourney V7 to function at scale, it must interoperate with widely adopted digital tools. Accordingly, the model’s output formats, API capabilities, and UI extensions have been designed to facilitate downstream integration with design and content production platforms.

Compatibility with Adobe Creative Cloud

- Users can now import Midjourney renders directly into Photoshop, Illustrator, and InDesign.

- Layered compositions and transparent elements can be refined post-generation using masking, blending, and typography overlays.

Figma and UI Design Tools

- Generated icons, illustrations, and interface components are compatible with Figma, Sketch, and Framer, allowing UI/UX teams to rapidly prototype interface visuals.

- V7’s 1:1 and 16:9 native aspect ratios are especially well-suited for web and mobile mockups.

3D and Animation Workflows

- Concept art from V7 is being imported into Blender, Unity, and Unreal Engine for use as texture guides, matte paintings, or character exploration boards.

- Art departments use V7 outputs to drive shader development and animation pre-visualizations.

Notion, Canva, and Presentation Tools

- Midjourney V7-generated visuals are being increasingly embedded into content management tools like Notion, Canva, Pitch, and Google Slides for internal storytelling, pitch decks, and client reporting.

Community-Driven Evolution

Beyond technical integration, Midjourney’s evolution is deeply shaped by the community that surrounds it. The V7 release has further galvanized user participation, contributing to a shared culture of experimentation, critique, and knowledge exchange.

Prompt Engineering Culture

With every version, the art of prompt engineering becomes more refined. V7 has introduced greater sensitivity to nuance in language, which has led to the rise of new techniques for:

- Weighted keyword prompts (e.g., “portrait::2, cyberpunk::1.5”)

- Stylization tokens (e.g., “in the style of woodblock print, cinematic lighting”)

- Negative prompting to exclude unwanted elements (e.g., “--no text, watermark”)
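To show how the weighted-keyword syntax breaks down, here is a small parser that splits a prompt on the `::` separator and normalizes the weights into shares. It is a simplified sketch written for this article: it handles plain decimal weights only, and it is not Midjourney's own parser.

```python
import re

# A text segment followed by "::" and an optional numeric weight.
_SEGMENT = re.compile(r"(?P<text>[^:]+?)::(?P<weight>-?\d+(?:\.\d+)?)?")


def parse_weighted_prompt(prompt: str):
    """Split 'a::2 b::1.5' into [('a', 2.0), ('b', 1.5)]; missing weights become 1."""
    segments = []
    pos = 0
    for match in _SEGMENT.finditer(prompt):
        text = match.group("text").strip(" ,")
        weight = float(match.group("weight")) if match.group("weight") else 1.0
        if text:
            segments.append((text, weight))
        pos = match.end()
    tail = prompt[pos:].strip(" ,")
    if tail:
        segments.append((tail, 1.0))  # trailing text without '::' gets weight 1
    return segments


def weight_shares(segments):
    """Express each weight as a fraction of the total."""
    total = sum(weight for _, weight in segments)
    return {text: weight / total for text, weight in segments}


parsed = parse_weighted_prompt("portrait::2, cyberpunk::1.5")
shares = weight_shares(parsed)
print(parsed)
print({text: round(share, 3) for text, share in shares.items()})
```

For the example above, "portrait" carries about 57% of the total weight and "cyberpunk" about 43%. Thinking in shares rather than raw numbers makes it easier to predict how changing one weight shifts the balance.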

Communities on platforms like Discord, Reddit, and X (formerly Twitter) are sharing prompt formulas, style guides, and annotated visual journals.

Licensing and Commercial Use

Midjourney’s licensing terms permit commercial usage under certain tiers, which has accelerated adoption by small businesses and freelance creators. Users have leveraged V7 to develop:

- NFT art collections

- Product packaging concepts

- Social media branding kits

- Custom book illustrations and album covers

The ability to produce commercially viable artwork with speed and reliability has led to a surge in marketplace listings, commissions, and even AI-driven design agencies.

Midjourney V7 is more than an iteration; it is a platform-wide shift that enables new forms of creative engagement, commercial scalability, and interdisciplinary collaboration. From advertising studios and game developers to architects and educators, the model’s versatility and speed have expanded its reach across nearly every visual-centric industry.

Its integration into mainstream toolchains, combined with its ability to support collaborative workflows and prompt refinement, positions Midjourney not merely as a generator of images, but as a catalyst for participatory visual innovation. As more creators embrace its capabilities and contribute to its growing ecosystem, V7 exemplifies how AI can augment—not replace—human creativity in ways that are both scalable and deeply expressive.

Real-Time Imagination, Delivered

The arrival of Midjourney V7 represents more than an iterative upgrade—it marks a profound transformation in the capabilities, accessibility, and purpose of AI-powered image generation. By drastically reducing rendering latency while preserving, and in many cases enhancing, image fidelity and creative flexibility, V7 redefines the practical and philosophical boundaries of machine-assisted creativity. It is no longer simply a tool for post-processing or static experimentation; it has become a dynamic partner in real-time creative expression.

At the center of this transformation is speed—not for its own sake, but as a medium of artistic liberation. Faster generation times translate to faster feedback, which in turn unlocks iterative workflows once thought incompatible with AI models. Creative professionals, from marketers and designers to filmmakers and architects, are now engaging with Midjourney V7 as they would a human collaborator: with expectation, fluidity, and conversational responsiveness. The lag between ideation and visualization has been minimized to such a degree that thought can be transmuted into form with unprecedented immediacy.

This enhancement of real-time capabilities is not merely a technical feat. It changes the relationship between the creator and the machine. In prior versions, users framed their prompts carefully and waited passively for results—often tolerating multiple iterations and delays before seeing acceptable outputs. With V7, the cycle becomes interactive. The AI model becomes a living sketchpad, responsive to intent and suggestive of directions the human creator might not have initially envisioned.

Equally important is the way in which Midjourney V7 integrates with existing ecosystems of creativity. Its compatibility with Adobe tools, Figma, Blender, Notion, and other software environments reinforces its role as a bridge rather than a silo. It complements rather than replaces traditional design workflows, enriching them with speed, stylistic diversity, and an expansive visual vocabulary.

Moreover, V7’s capacity to be embedded into collaborative spaces, such as Discord and live creative sprints, reflects a larger trend toward decentralized co-creation. No longer confined to solitary experimentation, image generation becomes a shared experience—one that encourages participation, dialogue, and immediate refinement. This democratization of the creative process is already altering how teams design brands, tell stories, and prototype experiences in ways that are inclusive, responsive, and user-driven.

But with these capabilities come important questions—both practical and philosophical. As AI grows faster and more context-aware, does it augment or dilute human creativity? Is the availability of real-time image generation a liberation from tedious iteration, or does it risk the erosion of intentionality and depth? These are not binary questions, and Midjourney V7 offers compelling evidence that speed, when combined with control, can amplify rather than replace human imagination.

The model’s support for nuanced prompt engineering, stylization weights, and semantic accuracy ensures that users remain firmly in command. Unlike commoditized art generators that output generic visuals, Midjourney V7 emphasizes creative authorship—empowering users to craft unique styles, develop recurring motifs, and refine outputs with precision. The result is not just faster art, but better art—personalized, purposeful, and increasingly indistinguishable from human-generated compositions.

From a broader innovation standpoint, Midjourney V7 reinforces the potential of AI not only as a productivity tool but as a cultural force. Its rapid adoption across industries—from marketing and gaming to architecture and education—demonstrates that generative AI is becoming a fundamental layer in the infrastructure of creativity. Just as digital photography replaced the darkroom, and desktop publishing revolutionized print, generative models like Midjourney V7 are ushering in a new paradigm of synthetic visual imagination.

Looking ahead, this release lays the groundwork for even more ambitious horizons. The foundation of V7 is modular and scalable, capable of supporting future advancements such as:

- Interactive video generation

- Gesture-controlled visual prompting

- Voice-to-image synthesis

- Mixed-reality content creation for AR/VR environments

As these innovations emerge, Midjourney’s emphasis on user experience, real-time rendering, and stylistic autonomy positions it to remain at the forefront of creative AI—an engine not only of imagery but of inspiration.

In conclusion, Midjourney V7 is a landmark in the ongoing evolution of generative art. It represents a confluence of technical rigor, design sensibility, and cultural relevance. More than just a tool, it is a platform for ideas—where thought meets image, and image meets action, all in real time.

The age of real-time imagination is no longer theoretical. With Midjourney V7, it is here—delivered at the speed of creativity, and shaped by every prompt we dare to express.
