Midjourney Launches V1: How Its First AI Video Model Disrupts the Generative Video Landscape

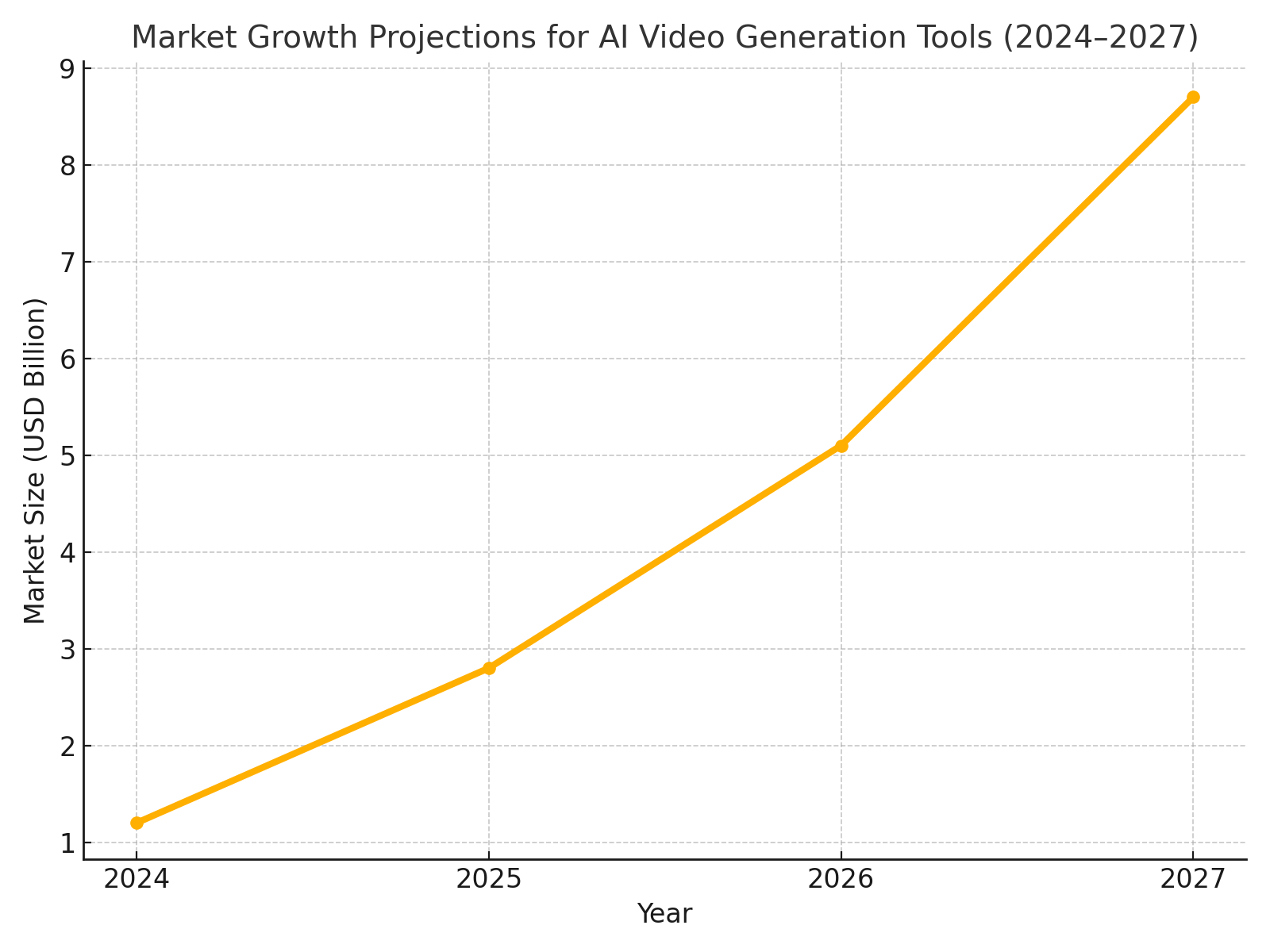

Artificial intelligence (AI) has rapidly evolved from generating static text and images to crafting dynamic, visually rich content. Among the most closely watched frontiers in this domain is AI-powered video generation—a field poised to reshape industries ranging from entertainment and advertising to education and corporate communications. With several pioneering models already demonstrating the potential of text-to-video synthesis, the global community of AI enthusiasts, creators, and technologists has been eagerly anticipating Midjourney’s entrance into this fast-growing arena.

After opening its image generation platform to the public in a July 2022 beta, Midjourney captured the imagination of millions by balancing photorealism with artistic flair. The platform quickly became a favorite among artists, designers, and marketers seeking to produce striking visuals with minimal friction. Building on this foundation of innovation and community-driven engagement, Midjourney has now officially launched its first AI video generation model, Midjourney V1. This release marks a significant milestone not only for the company but also for the broader AI video ecosystem.

The debut of Midjourney V1 comes at a pivotal moment. The surge of interest in video-based content—fueled by platforms like TikTok, YouTube, and Instagram—has created unprecedented demand for tools that can generate video at scale. Simultaneously, generative AI technologies have matured rapidly, enabling more coherent, high-resolution, and stylistically consistent video outputs than were thought possible just a year ago. By moving decisively into video generation, Midjourney is signaling its ambition to compete in a space currently led by players such as OpenAI’s Sora, Runway Gen-3, Pika Labs, and Stability AI’s Stable Video Diffusion.

This blog post will provide an in-depth analysis of the launch of Midjourney V1, exploring its technical innovations, user experience, competitive positioning, and the broader implications for content creation industries. It will also compare Midjourney V1 with existing alternatives, highlight key market trends, and offer a forward-looking perspective on how this technology is likely to evolve. Through charts, tables, and structured insights, readers will gain a comprehensive understanding of how Midjourney’s venture into video AI could reshape the creative landscape in the months and years ahead.

The Evolution of Midjourney — From Images to Videos

Midjourney’s trajectory from a niche AI art tool to a key player in the generative AI ecosystem has been both rapid and transformative. Originally launched as a research lab and platform focused on AI-driven image synthesis, Midjourney distinguished itself through a singular combination of aesthetic sensibility and cutting-edge technical development. While many early image generation models prioritized photorealism or accuracy, Midjourney embraced a vision of creative exploration—delivering images that felt both artistically inspired and visually arresting. This focus resonated with a growing community of designers, marketers, and creative professionals seeking to unlock new visual possibilities.

From its inception, Midjourney capitalized on the growing appetite for generative art, building a strong user base through an accessible Discord-based interface and an iterative model release cycle. The platform’s early success with its image generation models—spanning versions V1 through V6—demonstrated an impressive capability to translate textual prompts into high-quality images. Each successive model improved upon the last in terms of coherence, style diversity, resolution, and prompt responsiveness. By the time Midjourney V6 launched in late 2023, the platform had firmly established itself as a leader in AI image generation, competing effectively with other major players such as OpenAI’s DALL·E, Stability AI’s Stable Diffusion, and Adobe Firefly.

As Midjourney’s community and reputation expanded, so too did user expectations. Increasingly, creators sought the ability to move beyond still images and into the dynamic realm of video—a medium that offers far greater expressive power and market value. The broader industry context reinforced this demand: video-based platforms such as TikTok, YouTube, and Instagram Reels had already transformed global media consumption patterns, while brands and marketers increasingly relied on video content to drive engagement and conversion. Simultaneously, advances in large language models (LLMs), diffusion models, and transformer architectures were making generative video not only feasible but commercially viable.

Recognizing this convergence of user demand and technical opportunity, Midjourney began investing in AI video research in mid-2023. The company’s leadership articulated a clear vision: to extend Midjourney’s signature blend of aesthetic quality, ease of use, and community-driven development into the domain of video generation. Unlike some of its competitors—who pursued enterprise partnerships or high-end production tools—Midjourney remained committed to democratizing creative AI, aiming to empower a broad spectrum of users, from hobbyists to professional creators.

The launch of Midjourney V1 in June 2025 marks the fruition of this strategic evolution. The name refers to the first version of the video model and is unrelated to the V1 image model mentioned above. As the company’s first video generation model, V1 represents both a technical milestone and a bold new chapter in Midjourney’s growth story. It signifies a deliberate expansion beyond the constraints of still imagery, enabling users to craft short video sequences that maintain the visual coherence, stylistic richness, and imaginative spark for which the platform is known.

Importantly, Midjourney’s entry into video AI also reflects a broader trend in the industry: the convergence of multimodal generative AI. As models increasingly integrate text, image, audio, and video capabilities, the boundaries between creative disciplines are dissolving. In this context, Midjourney V1 serves not only as a tool for video generation but as a stepping stone toward more comprehensive creative ecosystems—where storytelling, branding, and visual expression can unfold seamlessly across multiple media formats.

In the following sections, this blog will explore in greater depth the capabilities of Midjourney V1, the user experience it offers, and its positioning within a competitive and rapidly evolving market landscape. The analysis will also consider the broader implications of Midjourney’s evolution for the future of AI-powered content creation.

Technical Features and Capabilities of Midjourney V1

The release of Midjourney V1 marks a significant leap forward in the company’s generative AI capabilities, extending its creative prowess from static images to dynamic video content. As with its earlier image-generation models, Midjourney V1 emphasizes a synthesis of aesthetic quality, user-friendliness, and technical sophistication. This section offers a comprehensive analysis of the model’s architecture, input/output modalities, performance benchmarks, stylistic attributes, and current limitations—providing a clear picture of how Midjourney V1 fits into the rapidly evolving landscape of AI video generation.

Model Architecture and Training Paradigm

Midjourney V1 builds upon the foundational principles that underpinned the success of its image-generation models, but adapts them for the temporal complexity of video synthesis. The core architecture employs a hybrid framework that combines elements of diffusion models with transformer-based temporal modules. Specifically, the model uses a spatiotemporal diffusion process, which generates individual frames while ensuring consistency across time—a challenge that has historically limited the realism and coherence of AI-generated videos.

To achieve this, Midjourney’s engineers have integrated a temporal attention mechanism into the diffusion pipeline. This allows the model to “remember” the visual state of preceding frames, ensuring that motion appears fluid and consistent. Additionally, the model has been trained on a diverse and carefully curated video dataset, encompassing a wide range of subjects, scenes, lighting conditions, and motion types. The emphasis on diversity during training has enabled V1 to generalize effectively across various video genres, from abstract art and cinematic sequences to natural scenes and synthetic environments.
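To make the idea of temporal attention concrete, here is a minimal sketch in plain Python. It is not Midjourney's implementation, which has not been published; the function names and the causal (look-back only) masking are assumptions chosen to mirror the "remember preceding frames" description above. Production video diffusion models would typically add learned query, key, and value projections and operate on large latent tensors rather than short lists.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_temporal_attention(frames):
    """Blend each frame's feature vector with those of earlier frames.

    frames: list of equal-length feature vectors, one per video frame.
    Returns a list of the same shape in which frame t is a weighted
    average of frames 0..t (scaled dot-product attention without
    learned weights, purely for illustration).
    """
    dim = len(frames[0])
    scale = math.sqrt(dim)
    mixed = []
    for t, query in enumerate(frames):
        history = frames[: t + 1]  # causal: current and earlier frames only
        scores = [
            sum(q * k for q, k in zip(query, key)) / scale for key in history
        ]
        weights = softmax(scores)
        mixed.append(
            [sum(w * key[i] for w, key in zip(weights, history)) for i in range(dim)]
        )
    return mixed


if __name__ == "__main__":
    toy_frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    for t, vector in enumerate(causal_temporal_attention(toy_frames)):
        print(t, [round(v, 3) for v in vector])
```

Because every output frame is a weighted average of itself and its predecessors, abrupt frame-to-frame changes are smoothed, which is the intuition behind using attention along the time axis to reduce flicker.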

Midjourney has also opted for a relatively compact model size—balancing performance with accessibility. Unlike some competitors whose models require extensive cloud resources or proprietary hardware, V1 has been optimized for deployment within Midjourney’s existing platform architecture, making it readily accessible to the company’s global user base.

Input Methods and Prompt Engineering

A key strength of Midjourney V1 lies in its flexible input modalities, which allow creators to tailor video outputs to their specific needs. The model currently supports three primary forms of input:

- Text Prompts

Users can generate videos purely from natural language descriptions. As with Midjourney’s image models, the text-to-video interface encourages expressive prompt engineering. Users can specify subjects, environments, camera angles, lighting, mood, and motion dynamics, all of which are interpreted by the model to craft visually compelling video sequences.

- Image Conditioning

V1 supports image-to-video workflows in which users upload a still image to guide video generation. This input helps establish a visual baseline for the video, such as character appearance, color palette, or scene composition. The model then extrapolates motion and temporal progression from the static frame, enabling more targeted creative outputs.

- Combined Text + Image Prompts

For maximum control, users can combine text descriptions with image conditioning. This hybrid method allows for nuanced storytelling, where the static image anchors the visual style while the text guides the narrative and motion.

These input modalities empower creators with varying levels of expertise to achieve desirable outcomes. Early community feedback indicates that combined prompts yield the most sophisticated results, particularly when users engage in iterative refinement—an approach consistent with how Midjourney’s community has historically leveraged image models.

Output Quality: Resolution, Frame Rate, and Duration

Midjourney V1’s output parameters reflect a deliberate balancing act between model efficiency and creative potential. The model currently generates video clips with the following default specifications:

- Resolution: 1280×720 pixels (HD), with plans to offer 1920×1080 (Full HD) in future updates.

- Frame Rate: 24 frames per second (fps), providing a cinematic feel suitable for storytelling and creative content.

- Duration: 2 to 4 seconds per clip, extendable through prompt chaining or future roadmap features.

While these specifications may appear modest compared to full-length video production standards, they are well aligned with contemporary content consumption trends—particularly on social platforms where short-form video dominates. Moreover, the model’s aesthetic quality at these settings is notably high, delivering videos with rich textures, consistent lighting, and minimal temporal artifacts.
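The default specifications listed above translate into concrete frame and data budgets. The short calculation below is only a back-of-the-envelope sketch: the constants come from the figures quoted in this section, while the function names and the choice of uncompressed 8-bit RGB as a yardstick are assumptions for illustration. Delivered MP4 files are compressed and far smaller.

```python
FPS = 24                    # frames per second, as quoted above
WIDTH, HEIGHT = 1280, 720   # default resolution, as quoted above
BYTES_PER_PIXEL = 3         # assumption: 8-bit RGB, no alpha channel


def frame_count(seconds, fps=FPS):
    """Number of frames the model has to keep coherent for one clip."""
    return round(seconds * fps)


def raw_rgb_megabytes(seconds, width=WIDTH, height=HEIGHT, fps=FPS):
    """Uncompressed clip size in megabytes (1 MB = 10**6 bytes)."""
    total_bytes = frame_count(seconds, fps) * width * height * BYTES_PER_PIXEL
    return total_bytes / 1e6


if __name__ == "__main__":
    for seconds in (2, 4):
        print(
            f"{seconds} s clip: {frame_count(seconds)} frames, "
            f"about {raw_rgb_megabytes(seconds):.1f} MB uncompressed"
        )
```

A 2-second clip therefore spans 48 frames and a 4-second clip 96, which gives a sense of how many images must stay visually consistent even in a very short sequence.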

Midjourney’s engineers have prioritized stylistic coherence and motion fluidity over sheer length or resolution. This focus distinguishes V1 from earlier-generation AI video tools, which often suffered from jittery motion, flickering artifacts, or abrupt scene transitions. The result is a model that excels at generating short, visually polished video loops—ideal for applications such as marketing teasers, visual storytelling, animated art, and experimental film.

Stylistic Attributes and Creative Potential

One of Midjourney’s defining characteristics has always been its aesthetic sensibility. Rather than aiming for strict photorealism, the platform encourages visual experimentation and stylistic diversity. This philosophy carries through to V1, which offers a broad range of artistic outputs depending on user intent.

In comparative benchmarks with competing models, Midjourney V1 demonstrates several notable strengths:

- Color Harmony: Videos generated by V1 exhibit a cohesive color palette and lighting scheme, contributing to visual appeal.

- Texture Fidelity: The model maintains high levels of texture detail across frames, avoiding the “blurry” or “washed out” look common in early video AI efforts.

- Motion Consistency: Thanks to the integrated temporal attention modules, V1 handles movement with greater coherence—particularly for natural phenomena (e.g., flowing water, swaying foliage) and abstract animations.

- Stylistic Range: Users can generate outputs ranging from painterly and surreal to near-photorealistic, depending on prompt style and training data domain.

These strengths align with Midjourney’s brand identity and cater to its core user base of creatives. Unlike enterprise-oriented video generators that emphasize documentary realism or corporate use cases, V1 invites playful, artistic exploration—continuing Midjourney’s tradition of democratizing creative AI.

Current Limitations and Known Challenges

While Midjourney V1 represents a major advancement, it remains an early-stage video model with several acknowledged limitations:

- Clip Length: At present, video clips are limited to 2–4 seconds. While this aligns with certain creative applications, users seeking longer sequences must employ manual stitching or await future updates.

- Audio Support: V1 does not yet support synchronized audio generation. Users must add soundtracks externally using video editing tools.

- Character Consistency: Like most contemporary video models, V1 can struggle with maintaining character continuity across frames, especially in complex scenes with multiple subjects or fine-grained details (e.g., facial expressions, hand gestures).

- Fine Motion Dynamics: Certain fast or intricate motions may introduce artifacts such as motion blur or stutter.

- Temporal Narrative: While the model excels at generating aesthetically rich loops, it is less adept at crafting videos with clear narrative arcs or scene transitions—an area of active research for future versions.

Midjourney has been transparent about these constraints, positioning V1 as a creative tool rather than a turnkey solution for full production workflows. Notably, the company has outlined an ambitious roadmap that targets improvements in clip length, motion realism, character modeling, and multimodal integration—including audio and interactive elements.
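Two of the limitations above, short clips and missing audio, are commonly worked around with external tooling. The sketch below assumes the free FFmpeg command-line tool is installed and only builds the commands rather than running them; the helper names and file names are hypothetical. Stream copying (`-c copy`) works only when all clips share the same codec, resolution, and frame rate, which is an assumption about how the clips were exported.

```python
from pathlib import Path


def concat_list_text(clips):
    """Build the text of an FFmpeg concat-demuxer list file.

    Each clip becomes one "file '<path>'" line. Relative paths are
    resolved by FFmpeg relative to the location of the list file.
    """
    lines = []
    for clip in clips:
        name = Path(clip).as_posix()
        if "'" in name:
            raise ValueError(f"single quotes are not handled in this sketch: {name}")
        lines.append(f"file '{name}'")
    return "\n".join(lines) + "\n"


def stitch_command(list_path, output):
    """FFmpeg command that joins the listed clips without re-encoding."""
    return ["ffmpeg", "-f", "concat", "-safe", "0",
            "-i", str(list_path), "-c", "copy", str(output)]


def add_audio_command(video, audio, output):
    """FFmpeg command that adds a soundtrack and stops at the shorter input."""
    return ["ffmpeg", "-i", str(video), "-i", str(audio),
            "-map", "0:v:0", "-map", "1:a:0",
            "-c:v", "copy", "-c:a", "aac", "-shortest", str(output)]


if __name__ == "__main__":
    print(concat_list_text(["clip_01.mp4", "clip_02.mp4"]), end="")
    print(" ".join(stitch_command("clips.txt", "stitched.mp4")))
    print(" ".join(add_audio_command("stitched.mp4", "music.mp3", "final.mp4")))
```

To run the workflow, write the list text to `clips.txt` and pass each command list to `subprocess.run(cmd, check=True)`.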

Summary

In technical terms, Midjourney V1 reflects a sophisticated and thoughtful approach to AI video generation. It combines advanced diffusion and transformer techniques with accessible input methods, delivering video outputs that excel in visual quality and creative flexibility. While current limitations remain, they are consistent with the state of the art in this nascent field—and Midjourney’s track record suggests that rapid iteration and improvement are likely.

As we will explore in the next section, the user experience of creating videos with V1 is equally notable—underscoring Midjourney’s commitment to democratizing AI-powered creativity.

User Experience — Creating Videos with Midjourney V1

One of the defining factors in the adoption and success of AI creative tools is the quality of the user experience they deliver. From its earliest versions, Midjourney distinguished itself by prioritizing simplicity, accessibility, and community-driven innovation. These values are clearly reflected in the launch of Midjourney V1, which extends the platform’s intuitive approach into the realm of video generation. This section explores the user experience of working with V1—covering the interface, workflow, community feedback, licensing considerations, and emerging use cases.

Interface and Workflow

True to Midjourney’s established model, V1 operates within the familiar Discord-based interface. This approach maintains continuity for existing users while lowering the barrier to entry for new adopters. The process of generating video with V1 begins in a dedicated #video-beta or #video-v1 channel, where users interact with the model via slash commands (e.g., /video or /v1).

The workflow is straightforward:

- Prompt Input

Users compose a prompt that can consist of text alone, an image upload, or a combination of both. The platform supports rich descriptive language, allowing users to specify not only subjects but also style, color palette, motion dynamics, mood, and cinematic techniques.

- Render Settings

Optional parameters enable users to adjust output characteristics such as video duration (within current model limits), aspect ratio, or creative weighting. Advanced users may also experiment with seed values to encourage variety or reproducibility.

- Generation and Preview

Upon submission, V1 typically requires 1–2 minutes to render a video clip. The output appears inline as an embedded video (MP4), with download links provided for high-quality versions. The relatively fast rendering cycle promotes experimentation and iteration, mirroring the iterative image-generation workflows that Midjourney users are accustomed to.

- Iteration and Refinement

Users can easily request variations on a generated video by invoking /variation or reusing and modifying previous prompts. This encourages a cyclical process of refinement, enabling creators to home in on desired visual effects or narrative flow.

Overall, the process is designed to be highly interactive and accessible even to users with no formal video production experience. By abstracting away the technical complexity of diffusion and transformer-based video generation, Midjourney empowers a broad user base to engage creatively with the technology.
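The workflow above can be sketched as a small helper that assembles a prompt string from the three input modes and the optional render settings. Everything here is hypothetical: the `VideoRequest` class is invented for illustration, and the `--ar` and `--seed` flags follow the parameter style of Midjourney's image prompts. Whether V1 accepts them for video in this exact form is an assumption, not something this article confirms.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VideoRequest:
    """Hypothetical container for one video generation request."""

    text: Optional[str] = None          # natural-language description
    image_url: Optional[str] = None     # still image used for conditioning
    aspect_ratio: Optional[str] = None  # e.g. "16:9" (assumed flag: --ar)
    seed: Optional[int] = None          # fixed seed for reproducibility

    def input_mode(self) -> str:
        """Classify the request as text, image, or combined input."""
        if self.text and self.image_url:
            return "text+image"
        if self.image_url:
            return "image"
        if self.text:
            return "text"
        raise ValueError("a request needs text, an image, or both")

    def to_prompt(self) -> str:
        """Assemble the prompt: image first, then text, then parameters."""
        self.input_mode()  # validates that some input is present
        parts = []
        if self.image_url:
            parts.append(self.image_url)
        if self.text:
            parts.append(self.text.strip())
        if self.aspect_ratio:
            parts.append(f"--ar {self.aspect_ratio}")
        if self.seed is not None:
            parts.append(f"--seed {self.seed}")
        return " ".join(parts)


if __name__ == "__main__":
    request = VideoRequest(
        text="misty forest at dawn, slow dolly shot",
        aspect_ratio="16:9",
        seed=42,
    )
    print(request.input_mode(), "->", request.to_prompt())
```

Keeping the seed in the request makes iteration easier to reason about: reusing the same seed with a slightly edited text is one way to compare prompt changes while holding other sources of variation fixed.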

Learning Curve and Community Resources

One of Midjourney’s enduring strengths is its vibrant and supportive community, which plays a crucial role in onboarding new users and accelerating creative skill development. This dynamic continues with the introduction of V1, where early adopters have quickly begun sharing techniques, prompt templates, and best practices for video creation.

Key community resources include:

- Prompt libraries: Collections of well-crafted text prompts and image-prompt pairings that produce strong video results.

- Video showcases: Channels where users post their best outputs, providing inspiration and examples of the model’s potential.

- Tutorial threads: Step-by-step guides created by experienced users, often accompanied by annotated examples and settings explanations.

- Peer feedback loops: Interactive discussions in which users critique each other’s videos and suggest improvements—fostering a collaborative learning environment.

These resources significantly shorten the learning curve for new users. Even those with no prior background in prompt engineering or video editing can begin producing visually compelling results within hours of trying the tool.

Early Community Feedback

Since its launch, Midjourney V1 has garnered largely positive feedback from the community. Several themes have emerged from early user reports:

- Visual Quality: Many users praise the richness of color, texture, and motion in V1 outputs. The platform’s heritage in artistic image generation is evident, with videos often described as “dreamlike,” “painterly,” or “cinematic.”

- Ease of Use: The intuitive Discord interface and rapid rendering times are frequently cited as key advantages. Users appreciate the ability to experiment freely without the need for specialized hardware or software.

- Creative Freedom: V1’s support for abstract, stylized, and experimental videos resonates strongly with the artistic community. Many users view the tool not merely as a utility, but as a medium for creative exploration.

- Limitations: Some users note predictable constraints, such as short clip duration, lack of audio, and occasional character inconsistencies. However, these are generally framed as expected trade-offs for an early video model.

Overall, community sentiment suggests that Midjourney V1 has succeeded in capturing the imagination of its user base—opening new avenues for creative expression while maintaining the platform’s hallmark accessibility.

Content Licensing and Usage Rights

An important consideration for any AI content generation tool is the question of licensing and permissible usage. Midjourney has established clear terms of service that apply to V1-generated videos:

- Commercial Use: Paid subscribers retain broad rights to use generated videos commercially—including in marketing materials, social media content, branded videos, and personal projects.

- Attribution: While not legally required, attribution to Midjourney is encouraged in contexts where AI-generated content is a material part of the creative output.

- Content Guidelines: Users must adhere to Midjourney’s community guidelines, which prohibit the generation of harmful, illegal, or abusive content. Videos that violate these guidelines are subject to moderation or removal.

These policies mirror those of Midjourney’s image models and align with broader industry practices. By offering permissive commercial usage rights, the platform ensures that V1 serves as a viable tool for creators, marketers, and businesses—not merely as an experimental novelty.

Emerging Applications and Use Cases

Even at this early stage, a wide range of applications is emerging for videos generated with Midjourney V1. Notable examples include:

- Marketing and Advertising: Short AI-generated video loops are being used in promotional campaigns, branded social media posts, and product teasers. The ability to produce distinctive visuals at scale offers clear value for marketing teams.

- Entertainment and Art: Artists and experimental filmmakers are using V1 to create mood pieces, visual poetry, and abstract narratives—pushing the boundaries of AI-assisted video art.

- Education and E-learning: Instructors are incorporating AI-generated video snippets into presentations, training materials, and explainer videos to enhance engagement.

- Social Media Content Creation: Influencers and content creators are leveraging V1’s outputs to diversify their video portfolios—particularly on platforms like Instagram Reels, TikTok, and YouTube Shorts.

- Moodboards and Concept Development: Designers and creative directors are using V1 to prototype visual concepts and develop moodboards for client presentations and storyboarding.

These early use cases highlight the model’s versatility. While V1 is not yet positioned to replace traditional video production workflows, it is rapidly becoming an invaluable tool for ideation, experimentation, and supplementary content generation.

Subscription Model and Access

Access to Midjourney V1 is provided as part of the platform’s standard subscription tiers. Users on the Pro or Mega plans gain priority access to video generation features, with flexible quotas that encourage experimentation. Entry-level subscribers can also access V1 but may experience longer render queues during peak usage periods.

By bundling video generation with existing subscriptions, Midjourney ensures that its user community can easily explore this new capability without additional friction or cost barriers. This approach supports organic adoption and facilitates a virtuous cycle of user feedback and model improvement.

Summary

Midjourney V1 offers a highly accessible and creatively empowering user experience. Its intuitive interface, rapid iteration cycles, and vibrant community ecosystem make AI video generation approachable even for non-experts. Early feedback from creators suggests strong potential for a wide range of applications—particularly in marketing, art, education, and social media.

As the platform evolves, enhancements to clip length, resolution, and multimodal integration are likely to further expand its creative possibilities. In the meantime, Midjourney’s signature emphasis on aesthetic quality and community-driven innovation ensures that V1 is already making a meaningful contribution to the generative video landscape.

Competitive Landscape and Industry Implications

The launch of Midjourney V1 comes at a critical juncture in the evolution of AI-driven video generation, a field characterized by rapid technological progress, intense competition, and growing commercial interest. While Midjourney made its name as a leader in artistic image generation, its entry into video AI now positions it within a much broader ecosystem of players, each vying for dominance in this high-stakes market. To fully appreciate the significance of V1’s release, it is essential to examine the competitive landscape in which it operates, the strategic moves of rival companies, and the broader implications for industries such as media, entertainment, marketing, and creative production.

Current State of AI Video Generation Market

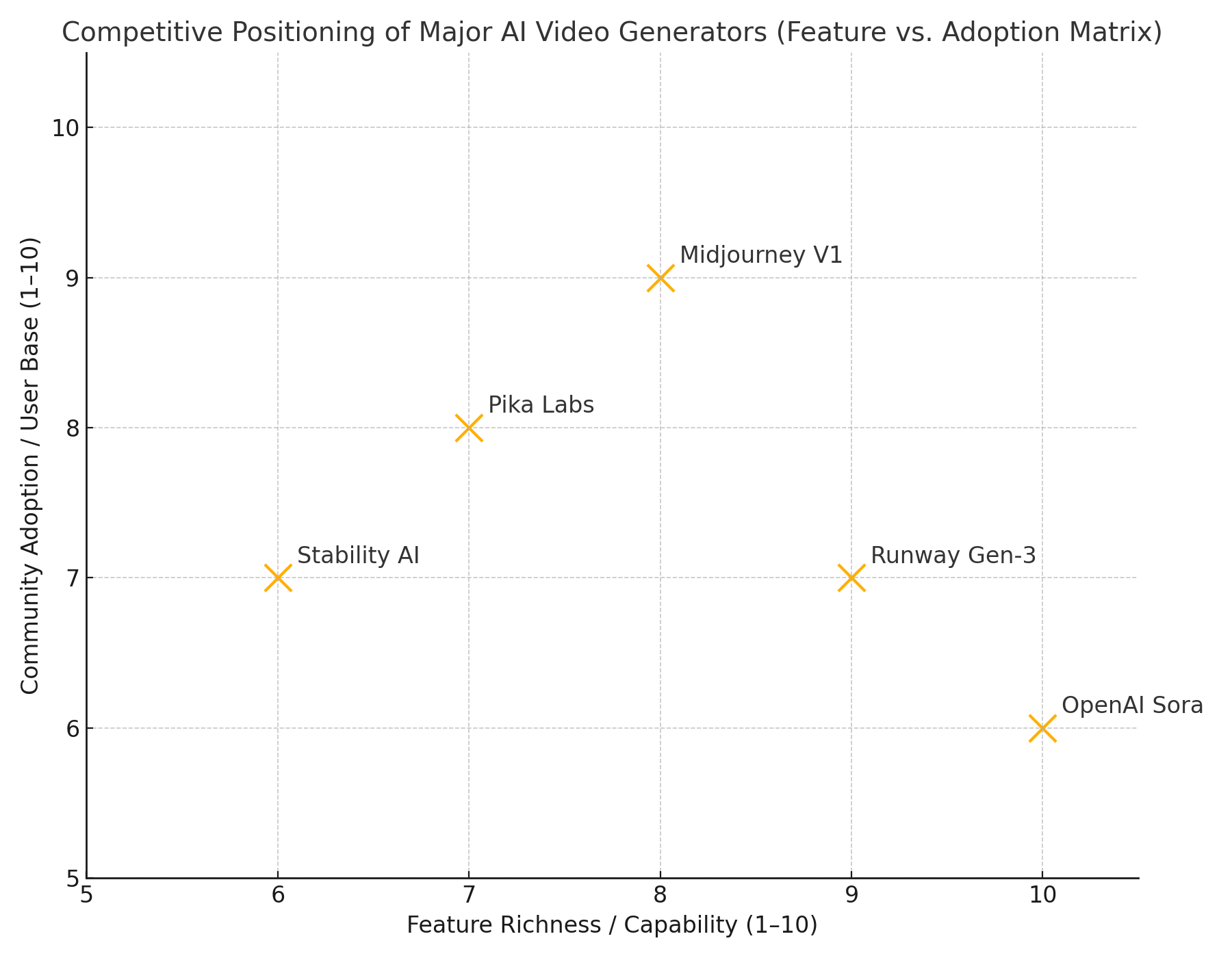

In recent years, AI video generation has progressed from experimental prototypes to commercially viable products. Early text-to-video models struggled with basic coherence, low resolution, and flickering motion. However, as of 2025, a new generation of diffusion-based and transformer-enhanced video models is reshaping the possibilities of this medium. The following key players currently dominate the AI video generation market:

- Runway Gen-3

Among the most mature offerings, Runway’s Gen-3 model is known for its emphasis on realism and cinematic quality. The platform targets professional creators, offering tools for film previsualization, marketing content, and digital production. Runway’s integration with existing editing workflows gives it strong appeal among creative professionals.

- OpenAI Sora

Sora remains one of the most technically advanced models, capable of generating high-fidelity, long-duration videos with complex narratives and consistent characters. Sora is also highly scalable, with enterprise partnerships in advertising and entertainment. After an initial period of restricted preview access, OpenAI opened Sora to paying ChatGPT subscribers in December 2024.

- Pika Labs

Pika has carved out a niche by focusing on rapid, lightweight video generation with user-friendly interfaces. Its outputs are optimized for social media, making it popular among content creators and influencers.

- Stability AI (Stable Video Diffusion)

Stability AI brings an open-source philosophy to video generation. Its models are widely accessible to developers and researchers, fostering innovation and community-driven improvements. However, outputs remain somewhat less polished than those of closed, highly curated platforms.

- Meta and Google

Both Meta and Google are actively developing proprietary video models, with a focus on integration into their respective ecosystems (social media, advertising platforms, AR/VR experiences). Public availability is still limited but expected to expand.

Midjourney V1’s Market Position

Against this backdrop, Midjourney V1 enters the competitive field with a distinctive positioning:

- Artistic Focus

Where competitors such as Runway and Sora emphasize realism and long-form narrative, Midjourney remains committed to its artistic roots. V1 excels at producing visually striking, stylized, and abstract videos that appeal to artists, designers, and experimental creators.

- Community Engagement

Unlike enterprise-focused competitors, Midjourney has cultivated a large, passionate community. This enables rapid feedback cycles and viral content sharing, which can drive organic growth and platform loyalty.

- Accessibility

With an intuitive interface and subscription model that integrates video generation into existing tiers, V1 lowers barriers to entry for casual users and hobbyists, in contrast to the enterprise-heavy focus of some rivals.

- Short-Form Emphasis

Current output lengths (2–4 seconds) align with the booming market for short-form video on platforms such as TikTok, Instagram, and YouTube Shorts. While some may view this as a limitation, it is a strategic fit for today’s most dynamic content channels.

In sum, Midjourney V1 does not seek to directly compete with the narrative or professional production strengths of models like Sora or Runway Gen-3. Instead, it carves out a complementary niche, leveraging the company’s brand, community, and aesthetic strengths to target the burgeoning creator economy.

Responses from Competitors

The release of V1 has already spurred responses across the AI video ecosystem:

- Feature Acceleration: Competing platforms are fast-tracking updates to style and artistic controls, seeking to match Midjourney’s visual creativity.

- Community Building: Several rivals are investing more in creator engagement, mirroring Midjourney’s community-first approach.

- Short-Form Tools: Some enterprise tools, originally built for longer video formats, are now adding support for short clips and social-first outputs.

These dynamics suggest that Midjourney’s market entry is helping to shape not only feature priorities but also business strategies across the sector.

Opportunities for Monetization and Disruption

AI video generation promises significant disruption—and monetization potential—across multiple industries:

- Marketing and Advertising

AI-generated video enables brands to scale creative production, experiment rapidly, and personalize content for segmented audiences. Agencies already report strong ROI on AI-driven campaigns, particularly in digital and social channels.

- Entertainment and Media

Independent creators, music labels, and streaming platforms are exploring AI-generated video as a means of enhancing content libraries, producing visuals for music tracks, and prototyping narrative content.

- E-commerce

Retailers can use AI video to generate dynamic product showcases, explainer videos, and augmented reality assets, driving higher engagement and conversion rates.

- Education and Training

AI-generated videos can augment e-learning platforms, providing rich visual explanations and immersive scenarios at a fraction of traditional production costs.

- Enterprise Communication

Corporate training, internal communications, and executive messaging can all benefit from scalable AI-generated video, improving both efficiency and engagement.

Midjourney’s artistic emphasis positions it particularly well for creative applications—offering tools for visual storytelling, mood creation, and brand expression in ways that resonate with modern audiences.

Challenges and Risks

Despite the excitement surrounding AI video generation, several challenges loom:

- Content Authenticity

As AI video tools improve, distinguishing between human-generated and AI-generated content will become increasingly difficult, raising issues around misinformation, media manipulation, and trust.

- Intellectual Property

The legal landscape surrounding AI-generated video remains unsettled. Questions about data provenance, derivative works, and content ownership continue to evolve.

- Ethical Concerns

As with AI image tools, video generation faces risks of misuse, ranging from deepfakes to non-consensual content. Robust community guidelines and enforcement mechanisms will be critical.

- Saturation and Quality

The ease of generating video content may lead to oversaturation, making quality and creativity more important than ever for standing out in crowded content markets.

Midjourney’s transparent community guidelines and emphasis on creative rather than deceptive applications may mitigate some of these risks. However, ongoing vigilance will be required as V1 matures and adoption scales.

Broader Industry Implications

The arrival of accessible, high-quality AI video tools such as Midjourney V1 signals broader shifts in the creative economy:

- Redefining Creativity: AI-assisted tools are expanding what individual creators can achieve—democratizing capabilities once reserved for studios and professionals.

- Shifting Production Models: Brands and agencies will increasingly adopt hybrid models, blending traditional production with AI-driven content pipelines.

- New Career Pathways: AI prompt engineering, video iteration, and curation will emerge as valued creative skills—creating opportunities for new types of creative professionals.

- Platform Convergence: As tools like Midjourney V1 add audio, interactivity, and longer video capabilities, boundaries between static images, video, and interactive experiences will blur—reshaping the digital content landscape.

In this context, Midjourney’s evolution into video generation is not merely a product expansion—it is a strategic move toward participating in the next generation of creative media.

Summary

The competitive landscape of AI video generation is dynamic, fast-evolving, and rich with opportunity. Midjourney V1 enters this space with a distinctive blend of artistic focus, community engagement, and accessibility. While it faces formidable competitors in both the enterprise and open-source segments, its niche alignment with the creator economy and short-form video market positions it for rapid growth.

As the industry continues to mature, AI video generation will likely move from experimental novelty to mainstream creative tool—transforming how media is conceived, produced, and consumed. In this unfolding narrative, Midjourney V1 represents both an important milestone and a signal of even greater shifts to come.

What’s Next for Midjourney and AI Video Generation

The debut of Midjourney V1 marks a pivotal moment not only for the company but also for the broader creative AI landscape. As AI-powered video generation accelerates toward mainstream adoption, Midjourney’s entry brings new creative possibilities to a growing global community of artists, designers, marketers, and technologists. V1’s launch underscores several key themes that are likely to define the next phase of this dynamic sector.

Continued Model Evolution

Midjourney’s development roadmap suggests that V1 is merely the starting point for a deeper investment in video AI. Based on the platform’s historical cadence of rapid iteration in image models, it is reasonable to anticipate significant enhancements in video capabilities over the coming months. Priority areas likely include:

- Extended Clip Duration

Moving beyond 2–4 second loops toward 10-second and even 30-second segments will open new storytelling possibilities for creators.

- Higher Resolution and Frame Rate

Improvements in visual fidelity, such as support for Full HD (1920×1080) and 4K outputs, will increase the professional utility of AI-generated videos.

- Audio Integration

The addition of synchronized audio (whether generative or user-provided) will further expand the model’s applicability across entertainment and marketing domains.

- Character and Scene Consistency

Advances in temporal coherence will improve the model’s ability to sustain character identity and scene logic across longer narratives.

- Interactive Features

The fusion of video generation with interactive elements could enable new forms of dynamic media experiences, such as personalized video content or responsive storytelling.

These enhancements will help Midjourney remain competitive as the broader field of AI video generation matures and diversifies.

Integration into Multimodal AI Ecosystems

More broadly, the launch of V1 reflects an industry-wide shift toward multimodal AI—systems capable of understanding and generating content across text, image, audio, and video modalities. In this context, video generation is not an isolated feature but part of a broader convergence.

For Midjourney, the challenge and opportunity lie in building integrated creative workflows that seamlessly combine its strengths in image and video generation. Future platform updates may offer unified interfaces for developing comprehensive multimedia content, spanning still imagery, animations, and interactive video narratives.

Such multimodal integration will position Midjourney to meet the needs of a new generation of digital storytellers—creators who fluidly navigate between formats and seek tools that support their cross-disciplinary practices.

Implications for the Creative Industry

The broader creative industry faces profound implications from the rise of accessible AI video tools:

- Democratization of Production

Video production, historically gated by high costs and specialized skills, is becoming accessible to a far wider range of creators. Individuals and small teams can now prototype and iterate visual concepts at unprecedented speed and scale.

- Shifts in Creative Labor

While AI video tools will not replace traditional filmmakers or animators, they are reshaping roles within creative workflows. Prompt engineering, AI curation, and hybrid editing are emerging as new creative disciplines.

- Platform Transformation

Social media platforms, e-learning systems, and marketing agencies are rapidly adopting AI-driven video generation to diversify content offerings and personalize user experiences.

- Cultural Shifts

The growing prevalence of AI-generated video content will inevitably shape audience expectations and aesthetics. The ability to produce infinite variations of visual narratives may usher in new forms of digital art, remix culture, and participatory storytelling.

Midjourney’s community-driven ethos makes it less likely that these transformations will be confined to corporate or institutional actors. By empowering everyday creators, the platform can foster a more pluralistic and experimental creative ecosystem.

Ethical and Regulatory Considerations

As the field advances, ethical questions and regulatory challenges will also intensify. Key areas of concern include:

- Authenticity and Disclosure

The indistinguishability of AI-generated video from human-created content necessitates new norms for disclosure and transparency.

- Content Moderation

Platforms must develop robust guidelines and enforcement mechanisms to prevent misuse, such as the creation of misleading or harmful videos.

- Intellectual Property

Ongoing legal debates around data provenance, derivative works, and AI authorship will influence both creative practices and platform governance.

Midjourney’s commitment to responsible innovation and community standards provides a solid foundation for navigating these complexities. However, collaboration with broader industry and policy stakeholders will be essential as the technology scales.

A Transformative Road Ahead

In sum, the release of Midjourney V1 is more than a product update—it is a strategic move that signals the company’s intent to shape the future of AI-driven video creation. V1’s distinctive strengths—artistic quality, community engagement, and accessibility—position Midjourney to thrive in a competitive yet expanding market.

As video generation technology continues to evolve, it will fundamentally transform how media is conceived, produced, and experienced. Whether in marketing, entertainment, education, or art, AI-powered video tools will empower new voices, accelerate creative cycles, and democratize storytelling.

For creators and audiences alike, this is only the beginning. Midjourney’s V1 launch offers a glimpse of a future where imagination and AI converge to produce experiences that are dynamic, diverse, and deeply human.
