How Teachers Can Use AI to Save Time on Marking: Strategies, Tools, and Impact

In recent years, educators around the world have been grappling with an ever-growing list of responsibilities. While teaching remains at the core of their profession, the administrative burden on teachers has intensified, stretching their capacity and often encroaching on time meant for lesson planning and student engagement. Among the most time-consuming of these tasks is marking, which demands not only careful attention to detail but also a significant emotional and cognitive investment. For many teachers, grading student work after hours and over weekends has become an entrenched reality, contributing to rising stress levels and burnout across the profession.

As education systems globally strive to adapt to digital transformation, artificial intelligence (AI) has emerged as a promising solution to this long-standing challenge. No longer confined to the realm of science fiction or high-level research labs, AI technologies are becoming increasingly accessible and user-friendly for educators at all levels. Today’s AI-driven tools are capable of evaluating written assignments, scoring objective questions, and even generating personalized feedback—tasks that previously required hours of human effort. This shift presents a unique opportunity to reimagine how assessment is managed in classrooms, freeing up educators to focus more on instruction and less on paperwork.

Importantly, the rise of AI in education is not merely about automation for efficiency’s sake. At its best, AI can complement the teacher's role by enhancing the quality and consistency of feedback, supporting differentiated instruction, and making assessments more timely and actionable for students. By leveraging AI, educators can transform grading into a more agile and dynamic component of the learning process, where real-time insights and scaffolded support are the norm rather than the exception.

However, the integration of AI into marking also raises critical questions about data privacy, accuracy, teacher agency, and the potential for unintended consequences. It is therefore essential to explore not only the potential benefits of this technological shift but also the practical considerations and limitations involved in its implementation.

This blog post delves into how AI can alleviate the marking burden for teachers, outlining the types of tools currently available, the measurable benefits they offer, and the strategic approaches required to implement them effectively. It also examines the broader implications of AI-assisted assessment on pedagogical practice, educational equity, and institutional policy. Through a detailed and data-driven analysis, we aim to provide educators, administrators, and policymakers with a clear understanding of how to harness AI as a valuable ally in the pursuit of more efficient and meaningful education.

The Marking Burden in Modern Education

The task of marking remains one of the most time-intensive responsibilities in a teacher’s professional life. Across different educational systems and grade levels, teachers are expected to evaluate a wide variety of student work—ranging from multiple-choice assessments and short answers to essays, portfolios, projects, and presentations. While assessment is an integral component of effective pedagogy, ensuring that students receive timely and constructive feedback, the manual process of grading can often overwhelm even the most experienced educators. As expectations around personalization, accountability, and performance metrics have grown in recent decades, so too has the demand for thorough, consistent, and often repetitive marking. This has led to what many educators now describe as a “grading crisis.”

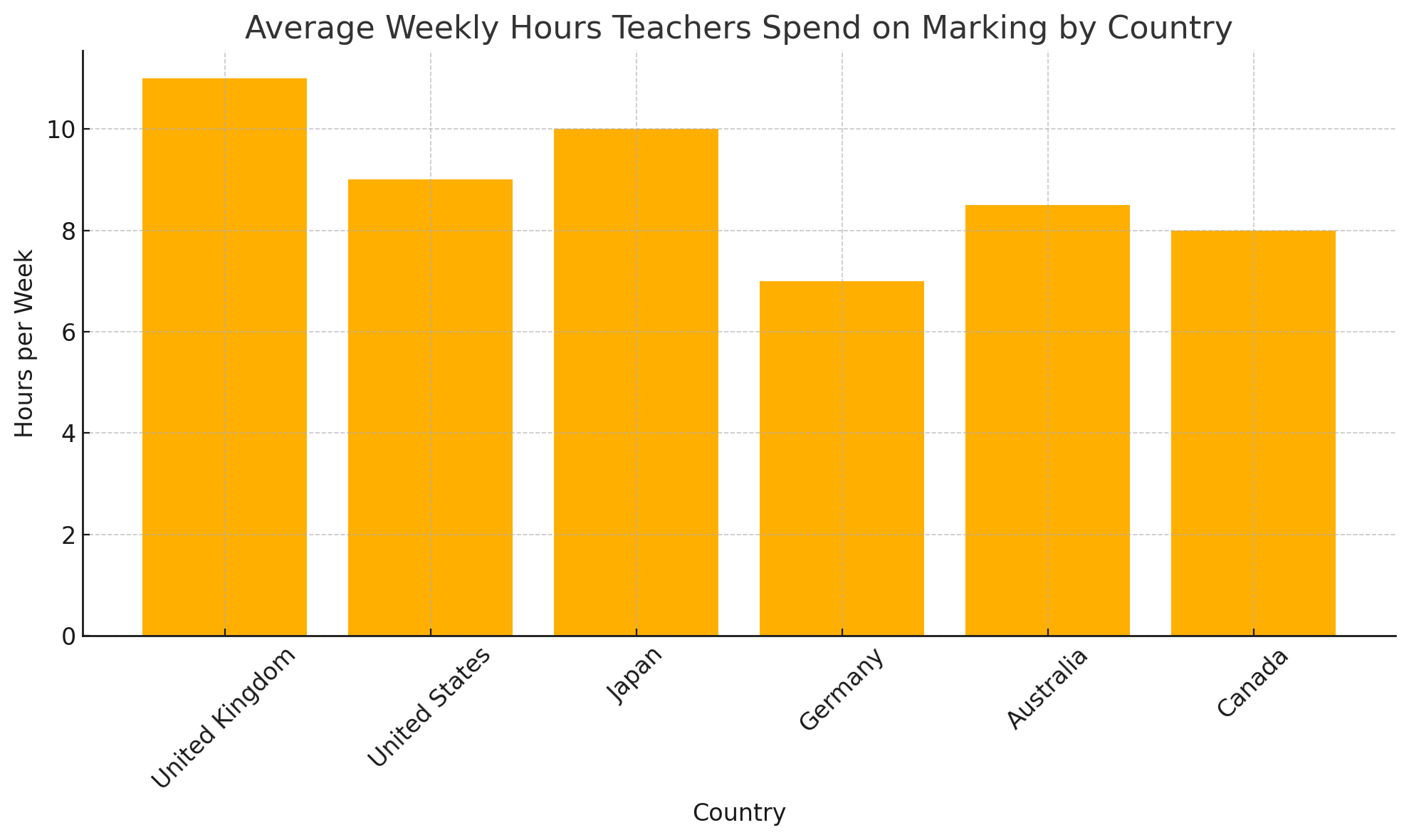

Surveys conducted across multiple countries consistently reveal that teachers dedicate a substantial proportion of their working hours to marking. In the United Kingdom, for instance, a report by the Education Policy Institute found that teachers spend an average of 11 hours per week on tasks related to marking and feedback. Similarly, a study by the U.S. Department of Education indicates that American teachers typically spend 8 to 10 hours per week outside of classroom time grading assignments, particularly in subjects like English, Social Studies, and History. These figures are further compounded when accounting for teachers who manage large class sizes, teach multiple subjects, or work in under-resourced schools with limited administrative support.

The nature of the assignments being assessed plays a critical role in the time demands placed on teachers. Objective assessments such as multiple-choice tests or fill-in-the-blank quizzes can often be graded relatively quickly, especially with the aid of standardized templates or scanning devices. However, these types of assessments are limited in their ability to measure complex learning outcomes, critical thinking, or creativity. In contrast, open-ended responses, essays, and long-form projects provide richer insights into student understanding but also require significantly more time to evaluate. Teachers must consider not only the accuracy of the response but also coherence, originality, structure, grammar, and alignment with rubrics—each of which adds layers of complexity to the marking process.

Moreover, the increased emphasis on formative assessment has placed further strain on educators. Unlike summative assessments that are typically administered at the end of a unit or term, formative assessments are continuous and designed to inform instructional decisions in real-time. This requires teachers to provide feedback that is not only accurate but also actionable and timely, necessitating frequent updates to students on their progress and suggestions for improvement. While this approach is undoubtedly pedagogically sound, it dramatically increases the frequency and volume of grading tasks, especially when implemented in classrooms with high student-to-teacher ratios.

One of the less frequently acknowledged aspects of the marking burden is its emotional toll. Marking is not simply a mechanical process of checking for correctness; it often involves making subjective judgments, balancing fairness with encouragement, and navigating the psychological impact of assigning low scores or negative feedback. Many teachers report feeling emotionally drained after extended grading sessions, especially when faced with underperformance, plagiarism, or poorly constructed arguments that require extensive commentary. The emotional labor inherent in providing sensitive, constructive feedback is rarely accounted for in discussions of teacher workload, yet it plays a significant role in burnout and job dissatisfaction.

Additionally, the demands of marking are not distributed equally across all subjects or educational levels. Humanities teachers—particularly those in English, History, and Philosophy—report significantly higher marking loads due to the qualitative nature of their assessments. Similarly, teachers at the secondary and tertiary levels often encounter a greater volume of student submissions, longer assignments, and more complex evaluation criteria. In vocational and project-based learning environments, instructors must assess portfolios, peer assessments, and group work, all of which involve nuanced, multidimensional feedback processes. These differences underscore the importance of considering both subject and context when analyzing the impact of marking on teacher workload.

Institutional policies and cultural expectations further shape how marking is approached. In high-stakes education systems—such as those found in East Asia, the United Kingdom, and parts of Europe—teachers face intense pressure to ensure grading accuracy and accountability. Schools in these regions often mandate frequent assessments, double marking, and detailed performance tracking, which, while aimed at improving educational outcomes, inadvertently contribute to teacher fatigue. Furthermore, parental expectations and administrative oversight can increase scrutiny of grading practices, leading educators to invest additional time in justifying their assessment decisions and refining feedback language to avoid misinterpretation.

Attempts to reduce the marking burden through traditional means have met with limited success. Delegating marking to teaching assistants, implementing peer assessment, and using standardized rubrics can help distribute the workload, but these strategies come with trade-offs in terms of quality control and consistency. Technological interventions, such as optical mark recognition (OMR) and online grading platforms, have improved efficiency for certain types of assessments but remain inadequate for handling subjective, high-level tasks like essay evaluation or creative project assessment. As such, the education sector has long awaited a solution that can balance the need for depth and quality with the demand for speed and scalability.

It is in this context that artificial intelligence presents itself as a potentially transformative force. The confluence of natural language processing (NLP), machine learning, and large language models (LLMs) now makes it feasible to automate not only scoring but also the generation of meaningful feedback. Unlike past technologies that focused solely on binary correctness, modern AI tools are increasingly capable of understanding nuance, recognizing tone, identifying logical coherence, and suggesting areas for improvement—all in a fraction of the time it would take a human. While not without limitations, these advancements signal a new chapter in the evolution of assessment practices.

To illustrate the scope of the issue, the chart below presents comparative data on the number of hours teachers spend weekly on grading across selected OECD countries.

In summary, the marking burden in modern education is multifaceted, rooted not only in time and volume but also in emotional, cognitive, and institutional factors. While educators have demonstrated resilience and commitment in managing this responsibility, the toll is increasingly unsustainable. With the advent of more advanced AI tools, a rare opportunity has emerged to alleviate this burden meaningfully, enabling teachers to redirect their energies toward pedagogical enrichment and student engagement.

AI-Powered Tools That Support Marking

The proliferation of artificial intelligence in education has brought with it a new generation of tools designed to alleviate the marking burden for teachers. These tools harness the power of machine learning, natural language processing (NLP), and large language models (LLMs) to evaluate student work with a degree of accuracy and sophistication that was previously unattainable through traditional educational technologies. By automating repetitive grading tasks and enhancing the quality of feedback, AI-powered tools are enabling educators to spend less time on administrative duties and more time engaging directly with students and curriculum development.

At the most basic level, automated assessment began with simple auto-marking for multiple-choice and short-answer questions. Platforms such as Google Forms and Moodle were among the early adopters of rule-based marking, instantly grading quizzes against predetermined answer keys. These systems significantly reduced grading time for objective tests but offered little in terms of qualitative feedback or the ability to handle open-ended responses. Recent advances in AI, however, have extended automated marking well beyond the constraints of binary evaluation.
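To make the rule-based approach concrete, here is a minimal Python sketch of marking a quiz against a fixed answer key. It is an illustration of the general idea only, not how Google Forms or Moodle implement it, and the function name `mark_quiz` is hypothetical.

```python
def mark_quiz(answer_key: dict[str, str], responses: dict[str, str]) -> tuple[int, list[str]]:
    """Score responses against a fixed answer key; return (score, ids of incorrect items)."""
    score = 0
    incorrect = []
    for question_id, correct in answer_key.items():
        given = responses.get(question_id, "")  # a missing answer counts as wrong
        # Normalise case and surrounding whitespace so "b " matches "B".
        if given.strip().lower() == correct.strip().lower():
            score += 1
        else:
            incorrect.append(question_id)
    return score, incorrect


key = {"q1": "B", "q2": "D", "q3": "photosynthesis"}
student = {"q1": "b", "q2": "A", "q3": "Photosynthesis "}
print(mark_quiz(key, student))  # (2, ['q2'])
```

Everything here is exact matching, which is why such systems are fast and reliable for objective items but cannot judge an answer that is phrased differently from the key.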

One of the most notable developments in this domain is the rise of tools that apply NLP to assess student writing. For instance, platforms like Gradescope utilize AI to assist teachers in grading both handwritten and digital responses across a wide variety of disciplines. Originally developed at the University of California, Berkeley, Gradescope uses AI to recognize patterns in student answers, identify common errors, and even suggest rubric-based grades. It is particularly useful in STEM fields, where instructors can batch-grade similar responses to streamline the evaluation process. The tool also facilitates re-grading and peer review workflows, providing transparency and consistency.
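Batch grading rests on a simple idea: if many students give the same answer, the teacher only needs to mark it once. The Python sketch below groups answers by exact match after normalising case and spacing. This is a simplified stand-in, assuming typed answers; Gradescope's own answer grouping uses its own (more capable) methods, and `group_answers` is a hypothetical name.

```python
from collections import defaultdict


def group_answers(responses: dict[str, str]) -> dict[str, list[str]]:
    """Group student IDs by their normalised answer so each group can be marked once."""
    groups: dict[str, list[str]] = defaultdict(list)
    for student_id, answer in responses.items():
        # Collapse case and all whitespace: "x = 4" and "X=4" land in the same group.
        normalised = "".join(answer.lower().split())
        groups[normalised].append(student_id)
    return dict(groups)


answers = {"ana": "x = 4", "ben": "X=4", "cai": "x = -4", "dee": "x=4 "}
for answer, students in group_answers(answers).items():
    print(answer, students)
# x=4 ['ana', 'ben', 'dee']
# x=-4 ['cai']
```

A mark and comment applied to the `x=4` group would then flow to all three students at once.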

Similarly, Turnitin Feedback Studio, from the company that acquired Gradescope in 2018, offers robust solutions for evaluating written content. While Turnitin is widely known for its plagiarism detection capabilities, its integrated grading tools now allow educators to use AI-powered rubrics and automated comment banks. These features help ensure that feedback is not only consistent across assignments but also tailored to student needs. Instructors can customize comments for recurring issues such as grammar, argument structure, citation style, and clarity, allowing for faster yet still individualized evaluations.
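The comment-bank idea can be sketched in a few lines of Python: reusable comments are stored once under short issue codes, and feedback for each student is assembled from the codes a marker (human or AI) selects. The codes, wording, and `build_feedback` function are hypothetical and do not reflect Turnitin's actual API.

```python
# Hypothetical bank of reusable comments keyed by short issue codes.
COMMENT_BANK = {
    "thesis": "Your thesis is unclear; state your main argument in the opening paragraph.",
    "citation": "Check your citations against the required referencing style.",
    "evidence": "Support this claim with a specific quotation or data point.",
}


def build_feedback(name: str, issue_codes: list[str], bank: dict[str, str] = COMMENT_BANK) -> str:
    """Assemble personalised feedback from reusable comments, skipping unknown codes."""
    lines = [f"{name}, here is your feedback:"]
    for code in issue_codes:
        if code in bank:
            lines.append(f"- {bank[code]}")
    return "\n".join(lines)


print(build_feedback("Priya", ["thesis", "evidence"]))
```

Because each comment is written once, a teacher can refine its wording centrally and every future use benefits.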

Another transformative tool is Socratic by Google, a mobile AI-powered homework assistant that not only aids students in understanding their assignments but also provides teachers with analytics on areas where students struggle most. While Socratic is student-facing, teachers benefit from its diagnostic capabilities, which inform targeted instruction and assessment design. When integrated with platforms like Google Classroom, educators can leverage this data to create auto-graded quizzes and assignments tailored to student learning gaps.

A more advanced category of AI tools involves the use of large language models (LLMs) such as OpenAI’s GPT-4 and Anthropic’s Claude. These models can evaluate open-ended responses, essays, and even project reflections with remarkable fluency, and tools built on top of them can generate feedback that mimics the tone, language, and depth of a human grader. Related automated writing-evaluation tools, such as ScribeSense and Cambridge English’s Write & Improve, use AI to offer grammar correction, structure evaluation, and content-level insights in real time. Because such systems are trained on large datasets of previously assessed work, they can approximate human marking, which is particularly valuable in language learning and humanities education.

Moreover, AI-based rubric aligners are being introduced to match teacher-created rubrics with student work automatically. Tools like Criterion from ETS and Ecree enable educators to set evaluation criteria and allow the AI to score assignments accordingly. These platforms not only save time but also support pedagogical consistency by applying the same criteria to every submission. For higher education and large-scale assessment programs, such alignment tools reduce variability in marking across different instructors and institutions.
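Whatever assigns the per-criterion levels, the final mark usually comes from combining them with rubric weights. The Python sketch below shows only that weighting step, assuming a rubric in which each criterion has a weight and a maximum level; it is not how Criterion or Ecree score work, and the `Criterion` class and `weighted_score` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Criterion:
    name: str
    weight: float    # share of the final mark; all weights should sum to 1.0
    max_level: int   # highest achievable level on this criterion


def weighted_score(criteria: list[Criterion], levels: dict[str, int]) -> float:
    """Convert per-criterion levels into a percentage using rubric weights."""
    total_weight = sum(c.weight for c in criteria)
    if abs(total_weight - 1.0) > 1e-9:
        raise ValueError("rubric weights must sum to 1.0")
    score = 0.0
    for c in criteria:
        level = levels[c.name]
        if not 0 <= level <= c.max_level:
            raise ValueError(f"level for {c.name} is out of range")
        score += c.weight * level / c.max_level
    return round(100 * score, 1)


rubric = [Criterion("argument", 0.5, 4), Criterion("evidence", 0.3, 4), Criterion("style", 0.2, 4)]
print(weighted_score(rubric, {"argument": 3, "evidence": 4, "style": 2}))  # 77.5
```

Making the weights explicit in this way is also what allows every submission to be scored against identical criteria.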

In subjects involving diagrams, graphs, and handwritten submissions, computer vision complements NLP to extend AI’s capabilities. Gradescope’s handwriting recognition is a prime example, enabling the automated collection and organization of handwritten answers. The tool segments scanned submissions into individual responses, allowing for efficient batch grading and reducing the logistical complexity of grading paper-based tests. Emerging research in AI for visual content assessment suggests that the future will include even more sophisticated tools capable of grading charts, lab notebooks, and other non-textual outputs.

Despite the benefits, AI-based grading tools are not one-size-fits-all. Their effectiveness often depends on subject matter, assessment type, and integration with existing learning management systems (LMS). For example, while AI generally performs well in evaluating grammar and coherence, and in checking factual correctness against a clear answer key, it is less reliable when assessing creativity, emotional tone, or ethical reasoning, domains where human judgment remains essential. As such, educators must be discerning in selecting and deploying these tools, ensuring that they supplement rather than supplant human evaluative insight.

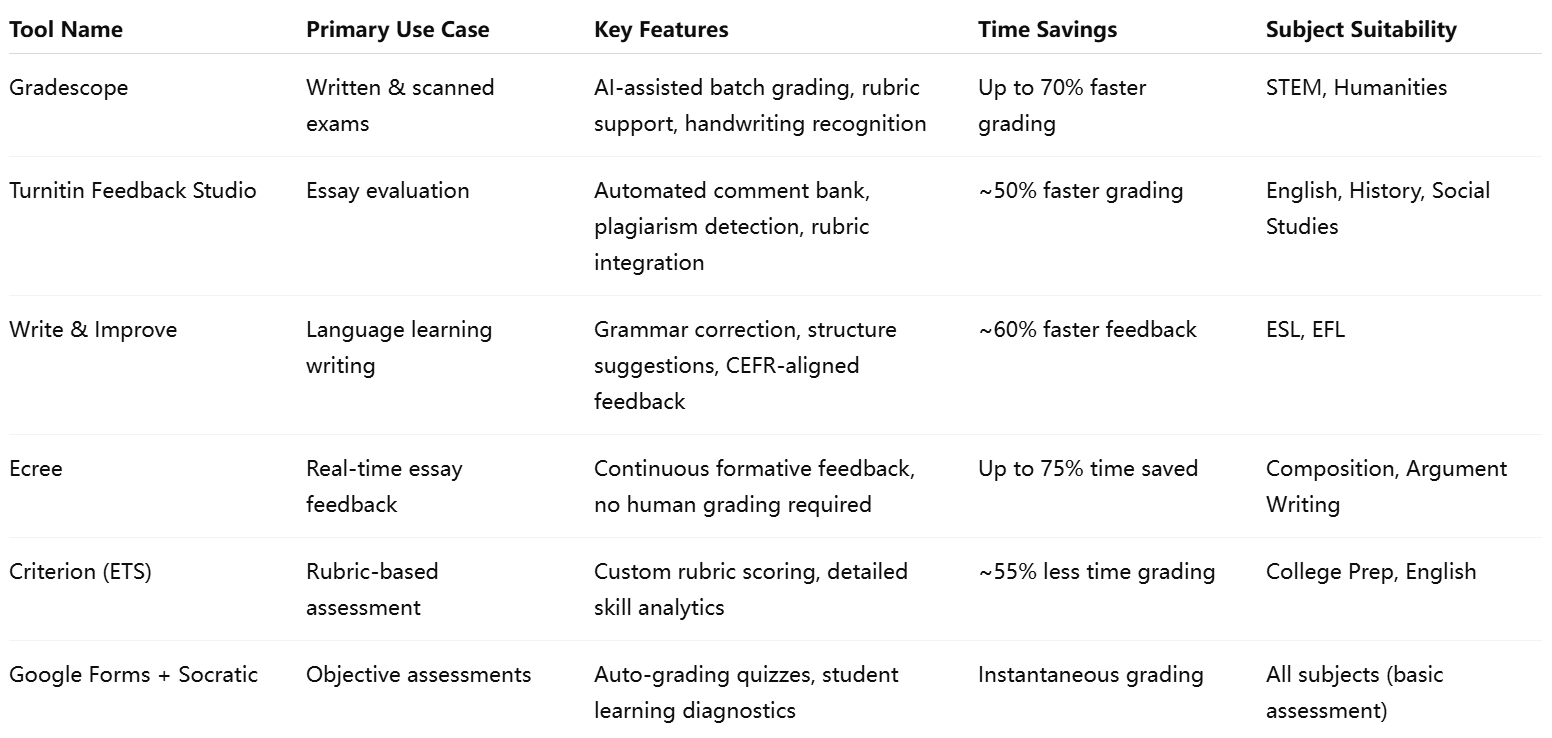

To support informed decision-making, the table below presents a comparison of leading AI-powered marking tools currently used in schools and universities.

The expanding ecosystem of AI-powered tools reflects a clear trend: educational technology is increasingly moving from passive content delivery to active instructional support. Where earlier tools simply digitized existing paper workflows, current AI systems understand, interpret, and act on student submissions in dynamic ways. This not only accelerates the grading process but also enhances the feedback loop, allowing students to receive more timely and targeted guidance.

Furthermore, many of these tools offer integrations with LMS platforms such as Canvas, Blackboard, Microsoft Teams for Education, and Google Classroom. Seamless integration ensures that grading data is automatically synced with gradebooks, assignment trackers, and progress dashboards. This eliminates duplicate work for teachers and improves transparency for students and parents alike. The ability to generate reports on class-wide performance trends, commonly missed concepts, and individual progress further amplifies the pedagogical value of these AI systems.

The increasing adoption of AI grading tools has been accompanied by a growing body of empirical evidence supporting their efficacy. A study conducted by the University of Michigan showed that instructors who used AI-assisted grading systems reduced their marking time by 60% on average, while maintaining high levels of student satisfaction with feedback quality. Similarly, research from EdTech Impact in the UK found that 74% of secondary school teachers using AI tools reported feeling “less overwhelmed” during exam periods. These findings suggest that AI is not only a productivity booster but also a contributor to educator well-being.

Nevertheless, challenges remain. The risk of over-reliance on AI-generated evaluations, potential biases embedded in algorithms, and concerns about data security must all be addressed. Developers and educational institutions must collaborate to ensure transparency in AI decision-making, provide opt-out mechanisms, and implement robust training for teachers to use these tools responsibly. As AI becomes more embedded in the grading process, its ethical deployment will be just as important as its technical performance.

In conclusion, AI-powered marking tools represent a significant advancement in the quest to reduce teacher workload while maintaining educational quality. By intelligently automating repetitive grading tasks and enhancing the feedback process, these tools empower educators to redirect their time and energy toward instruction, mentorship, and innovation.

Benefits and Limitations of Using AI in Marking

The adoption of artificial intelligence (AI) in educational assessment has introduced a paradigm shift in how marking is approached, executed, and interpreted. While the core function of grading remains centered on evaluating student performance, AI tools now enable educators to carry out this task with enhanced speed, consistency, and insight. The benefits are numerous and significant, but the implementation of AI-assisted marking is not without its caveats. As with any transformative technology, the integration of AI into pedagogical processes must be critically assessed to balance its advantages with its inherent limitations and ethical implications.

Benefits of AI in Marking

Time Efficiency and Teacher Workload Reduction

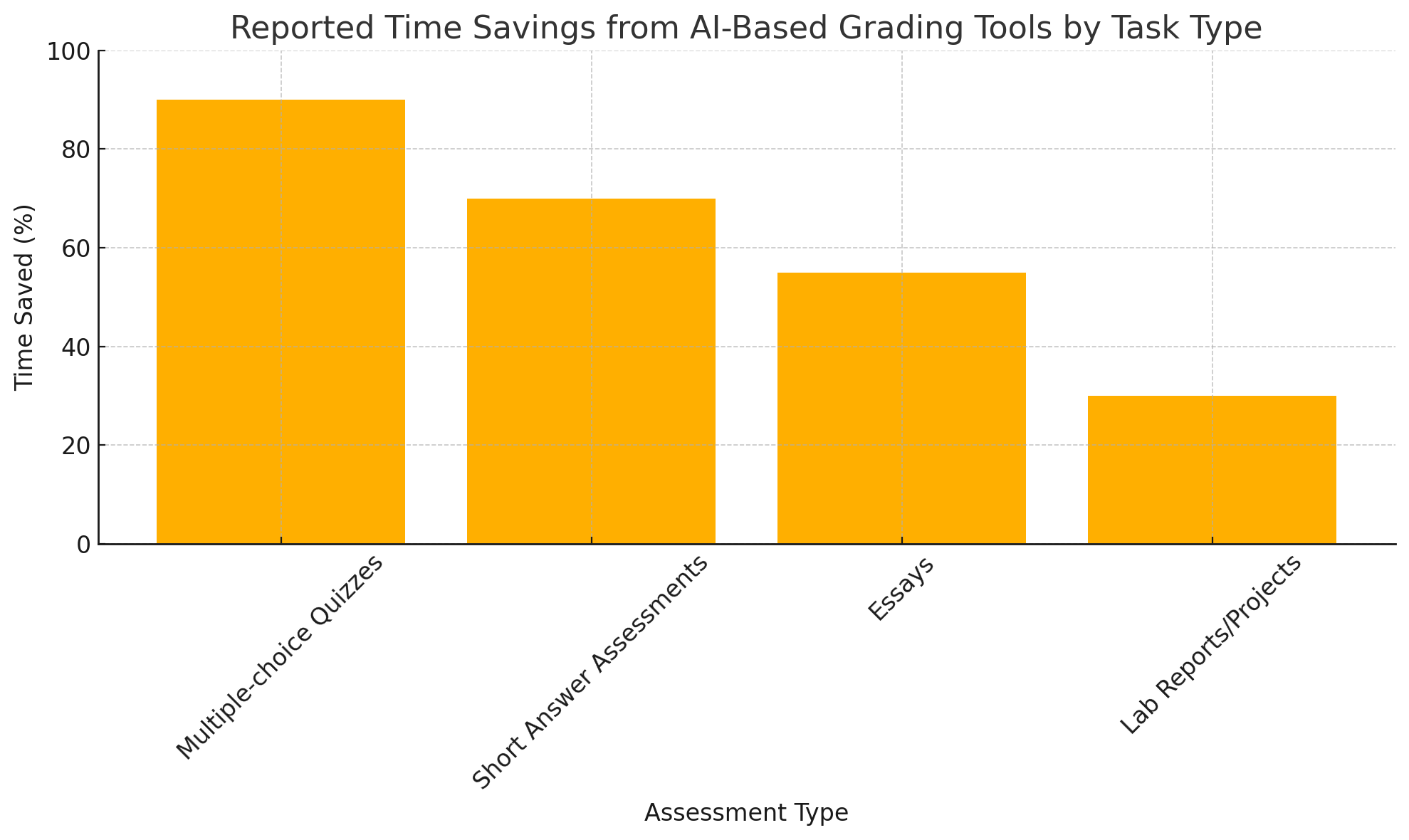

Perhaps the most immediate and measurable benefit of AI in marking is the dramatic reduction in time required to assess student work. By automating repetitive tasks such as scoring multiple-choice questions, checking grammar, or identifying rubric-aligned errors, AI tools allow educators to reclaim hours otherwise spent grading. Tools like Gradescope, Turnitin Feedback Studio, and GPT-based platforms can process batches of assignments in a fraction of the time it would take human teachers. According to a survey conducted by EdTech Digest, educators using AI grading tools report saving between 40% and 75% of their time, depending on the complexity and volume of assessments. This efficiency not only reduces stress and fatigue but also allows teachers to allocate more energy to lesson planning, student support, and professional development.
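To put those percentages in perspective, the short Python calculation below applies the reported 40% to 75% range to the 11-hour weekly marking figure cited earlier for UK teachers. Combining numbers from two different sources in this way is purely illustrative, not a finding of either study.

```python
def hours_saved(weekly_marking_hours: float, saving_fraction: float) -> float:
    """Hours freed per week if the given fraction of marking time is saved."""
    return weekly_marking_hours * saving_fraction


baseline = 11  # weekly marking hours (UK figure cited earlier in this post)
low, high = hours_saved(baseline, 0.40), hours_saved(baseline, 0.75)
print(f"{low:.1f} to {high:.2f} hours per week")  # 4.4 to 8.25 hours per week
```

On those assumptions, a teacher could recover roughly half a working day to a full working day each week.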

Consistency and Objectivity in Evaluation

Human grading is subject to variability, both inter-rater and intra-rater. Factors such as fatigue, implicit bias, mood, or contextual distractions can influence how a teacher scores student work. AI tools, by contrast, apply the same algorithmic logic to every submission, ensuring a standardized evaluation process. When well-calibrated and aligned with rubrics, these tools can deliver consistent outcomes across large student cohorts. This is particularly valuable in institutions seeking fairness and transparency, especially for high-stakes assessments. Additionally, automated systems are not swayed by favoritism or by the unconscious cues linked to gender, ethnicity, or handwriting that can affect manual grading, although they can carry biases learned from their training data, as discussed below.
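Consistency is something schools can measure rather than assume. A standard statistic is Cohen's kappa, which compares how often two markers agree with how often they would agree by chance; 1.0 means perfect agreement and 0 means no better than chance. The Python sketch below computes it for a teacher and an AI tool grading the same scripts; the grades shown are made-up sample data.

```python
from collections import Counter


def cohens_kappa(rater_a: list[str], rater_b: list[str]) -> float:
    """Agreement between two markers, corrected for agreement expected by chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("need two equal-length, non-empty lists of grades")
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    # Chance agreement: probability both raters pick the same grade independently.
    expected = sum(counts_a[g] * counts_b[g] for g in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical grade throughout
    return (observed - expected) / (1 - expected)


teacher = ["A", "B", "B", "C", "A", "B"]
ai = ["A", "B", "C", "C", "A", "B"]
print(round(cohens_kappa(teacher, ai), 2))  # 0.75
```

Running the same check between two human markers gives a useful baseline for judging whether the AI is more or less consistent than current practice.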

Scalable Feedback Generation

AI tools not only score assignments but also provide explanatory feedback. Advanced models such as OpenAI’s GPT-4 and Google's Gemini can generate personalized feedback comments based on specific student responses. This feature is invaluable in formative assessment contexts where actionable feedback is a key component of the learning cycle. Educators can use AI to provide suggestions on grammar, coherence, argument structure, and content gaps—feedback that students can use to improve their work in real time. The scalability of such feedback systems is especially beneficial in large classrooms or online courses, where personalized attention is often limited.

Enhanced Data Insights and Progress Tracking

AI marking systems often come with built-in analytics tools that provide educators with actionable insights. These may include performance trends, concept mastery levels, common misconceptions, and progress over time. Dashboards offered by platforms like Turnitin, Gradescope, and Criterion by ETS help teachers make data-driven decisions about instruction, interventions, and curriculum alignment. In this way, marking evolves from a terminal task to a continuous feedback loop that informs pedagogy and improves learning outcomes. Furthermore, such analytics can be shared with students and parents, promoting transparency and collaborative goal-setting.
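One simple form of such analytics is a "common misconceptions" report: for each question, which wrong answers come up most often across the class. The Python sketch below assumes quiz responses stored as dictionaries; the function `common_wrong_answers` and the sample data are hypothetical, and commercial dashboards will compute richer measures.

```python
from collections import Counter


def common_wrong_answers(
    answer_key: dict[str, str],
    class_responses: list[dict[str, str]],
    top_n: int = 2,
) -> dict[str, list[tuple[str, int]]]:
    """For each question, list the most frequent incorrect answers across the class."""
    report = {}
    for qid, correct in answer_key.items():
        wrong = Counter(
            resp[qid] for resp in class_responses
            if qid in resp and resp[qid] != correct
        )
        report[qid] = wrong.most_common(top_n)
    return report


key = {"q1": "B", "q2": "D"}
responses = [
    {"q1": "A", "q2": "D"},
    {"q1": "A", "q2": "C"},
    {"q1": "B", "q2": "C"},
    {"q1": "C", "q2": "D"},
]
print(common_wrong_answers(key, responses))
# {'q1': [('A', 2), ('C', 1)], 'q2': [('C', 2)]}
```

A cluster of identical wrong answers, such as two students choosing "A" on q1, often points to a shared misconception worth reteaching.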

Support for Differentiated Instruction

The granular data generated by AI tools allows educators to identify students who need additional support or enrichment. Rather than applying a one-size-fits-all approach, teachers can tailor instruction based on individual or group needs revealed through AI-generated assessment reports. This capability is particularly advantageous in inclusive classrooms, where learners present a wide spectrum of abilities, languages, and learning styles. For example, language learners may receive feedback focused on syntax and vocabulary, while native speakers are guided toward higher-order thinking and argumentation.
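As a minimal sketch of how assessment data might feed grouping decisions, the Python function below places students into support, core, and extension groups by percentage score. The thresholds (50 and 80) and the group names are hypothetical; in practice a teacher would set them and review every placement.

```python
def band_students(
    scores: dict[str, float], support_below: float = 50, extend_from: float = 80
) -> dict[str, list[str]]:
    """Split students into support, core and extension groups by percentage score."""
    bands: dict[str, list[str]] = {"support": [], "core": [], "extension": []}
    for student, score in sorted(scores.items()):  # alphabetical for stable output
        if score < support_below:
            bands["support"].append(student)
        elif score >= extend_from:
            bands["extension"].append(student)
        else:
            bands["core"].append(student)
    return bands


print(band_students({"ana": 42, "ben": 67, "cai": 88, "dee": 79.5}))
# {'support': ['ana'], 'core': ['ben', 'dee'], 'extension': ['cai']}
```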

Limitations and Challenges of AI in Marking

Despite the compelling advantages, the use of AI in marking is accompanied by notable limitations. These challenges must be acknowledged and addressed to ensure ethical, effective, and sustainable implementation.

Inability to Fully Grasp Subjective or Creative Responses

While AI has made remarkable strides in processing natural language and recognizing logical structures, it still struggles with evaluating subjective, creative, or emotionally nuanced work. Assignments in literature, art, philosophy, or reflective writing often require human judgment to interpret tone, style, originality, or moral complexity. For example, an essay exploring the philosophical dimensions of a literary character may receive a technically sound but contextually shallow evaluation from an AI. In such instances, AI can complement human grading, but it cannot substitute for the nuanced discernment of an experienced educator.

Risk of Algorithmic Bias and Model Limitations

AI models are trained on large datasets, and these datasets may contain biases that are inadvertently learned and perpetuated. If not properly mitigated, AI tools can reflect and even amplify systemic inequities present in their training data. For example, if a language model has been trained primarily on American English, it may unfairly penalize non-native expressions or regional variations in student writing. Furthermore, models trained on historical grading patterns may internalize past inconsistencies and propagate them forward. Transparency in model development, continuous auditing, and inclusive data sourcing are essential to minimize such risks.

Limited Contextual Awareness

AI systems evaluate responses based on patterns and probabilities but lack genuine contextual understanding. This means they may misinterpret references, humor, irony, or rhetorical devices that a human reader would comprehend. Additionally, AI may struggle to evaluate interdisciplinary assignments that draw upon multiple frameworks or unconventional presentation formats. In such cases, teachers may need to override AI decisions or manually review specific assignments to ensure fairness and accuracy.

Dependence on Quality Input and Rubric Design

The effectiveness of AI in marking is highly dependent on the quality of the rubrics and prompts provided by the teacher. Poorly constructed or overly generic rubrics can lead to inconsistent or superficial evaluations. Likewise, assignments that are ambiguous or not AI-compatible (e.g., creative multimedia projects) may yield unsatisfactory results. Teachers must be adequately trained in prompt engineering and rubric development to leverage AI tools effectively. This represents a shift in professional skillsets that not all educators may be prepared or resourced to acquire.

Data Privacy and Ethical Considerations

The deployment of AI in education raises important questions about data security and student privacy. Many AI grading tools require access to sensitive academic records, essays, and personal identifiers. If stored or processed by third-party providers, this data may be vulnerable to misuse, breaches, or unauthorized surveillance. Educators and institutions must therefore conduct due diligence on AI vendors, ensure compliance with regulations such as GDPR and FERPA, and obtain informed consent from students and guardians when necessary.

Potential Over-Reliance and Deskilling

There is also concern that excessive dependence on AI for grading could lead to a deskilling of the teaching profession. As educators delegate more of their evaluative responsibilities to machines, they may gradually lose the practice and discernment necessary to provide rich, qualitative feedback. Moreover, the relational and pedagogical value of teacher-student interactions during the assessment process may be diminished. It is essential that AI be positioned as a support tool—not a replacement—for professional judgment and instructional engagement.

Reported Time Savings from AI-Based Grading Tools by Task Type

To quantify the operational impact of AI marking tools, the chart below illustrates average reported time savings across different assessment types.

Striking the Right Balance

The future of AI in marking depends not only on technological advancements but also on institutional policies, teacher training, and stakeholder trust. A hybrid model—where AI handles high-volume, low-subjectivity tasks while educators focus on more nuanced evaluations—appears to be the most promising approach. In this model, AI acts as a time-saving assistant, flagging areas of concern, suggesting feedback, and performing routine grading, while human teachers retain final authority and provide interpretive depth.
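The hybrid model can be expressed as a simple routing rule. The Python sketch below sends high-stakes work, subjective task types, and low-confidence AI marks to a teacher, and lets the AI mark stand (subject to spot checks) only for routine, low-subjectivity items. The task categories, the 0.85 confidence threshold, and the assumption that the marking tool reports a confidence value are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical task types treated as high-volume and low-subjectivity.
LOW_SUBJECTIVITY = {"multiple_choice", "short_answer", "spelling"}


@dataclass
class Submission:
    student: str
    task_type: str
    ai_confidence: float  # 0.0 to 1.0, assumed to be reported by the marking tool
    high_stakes: bool = False


def route(sub: Submission, min_confidence: float = 0.85) -> str:
    """Decide whether the AI mark stands (with spot checks) or a teacher marks the work."""
    if sub.high_stakes:
        return "human"  # teacher retains final authority on high-stakes work
    if sub.task_type not in LOW_SUBJECTIVITY:
        return "human"  # essays and creative work: AI may only suggest feedback
    if sub.ai_confidence < min_confidence:
        return "human"  # low-confidence marks are flagged for review
    return "ai_with_spot_check"


print(route(Submission("ana", "multiple_choice", 0.97)))  # ai_with_spot_check
print(route(Submission("ben", "essay", 0.99)))            # human
```

Keeping the rule this explicit makes it easy for a school to publish, audit, and adjust its thresholds.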

Professional development programs must evolve to equip educators with the competencies required to evaluate, select, and responsibly implement AI grading tools. Similarly, edtech companies must prioritize transparency, explainability, and user control in the design of their products. Only through this collaborative, human-centric approach can the benefits of AI in marking be fully realized without compromising educational values or equity.

Implementing AI Marking Tools Effectively

The integration of artificial intelligence (AI) into the educational assessment process is no longer a speculative proposition but a tangible and growing reality. While the benefits of AI-assisted marking, ranging from efficiency gains to enhanced feedback quality, are increasingly well documented, the actual implementation of such tools in educational environments requires strategic foresight, institutional support, and an emphasis on responsible usage. This section outlines best practices for deploying AI in marking, identifies critical success factors, and discusses the systemic considerations necessary for sustainable integration across schools, universities, and online learning platforms.

Professional Development and Training

The effective use of AI in marking begins with the educator. Even the most advanced AI tools require human oversight, contextual alignment, and informed calibration. Therefore, a foundational requirement for successful implementation is comprehensive professional development. Teachers must be trained not only on the technical operation of AI tools but also on their pedagogical implications, ethical boundaries, and customization capabilities.

Workshops and training modules should include:

- Rubric creation and alignment strategies tailored for AI interpretation

- Understanding AI-generated feedback and interpreting analytics

- Managing exceptions and refining machine-generated assessments

- Addressing algorithmic limitations and recognizing biased outputs

In particular, training must emphasize that AI is a support mechanism—not a replacement—for teacher judgment. The purpose is to augment human capability, not diminish it. A well-trained educator using AI tools is better positioned to identify student needs, provide targeted interventions, and make informed instructional decisions.

Institutional Readiness and Policy Alignment

Beyond individual preparedness, institutions must establish clear guidelines and frameworks that govern the adoption of AI technologies. School districts, university departments, and online education providers must assess their digital infrastructure to determine compatibility with AI-enabled marking systems. Integration with existing learning management systems (LMS)—such as Canvas, Google Classroom, Moodle, or Blackboard—is crucial for streamlined workflows.

Institutional policies should address:

- Data ownership, security, and storage protocols

- Student consent and transparency around AI involvement in grading

- Review mechanisms for contested grades or AI-generated feedback

- Minimum human oversight thresholds for high-stakes assessments

Moreover, institutions should develop AI implementation roadmaps that include pilot phases, stakeholder consultations, and feedback loops. Early trials in limited subjects or with specific assignment types allow educators to build familiarity and evaluate effectiveness before full-scale deployment.

Tool Selection and Customization

Not all AI tools are created equal, and selecting the right platform is a critical determinant of success. Administrators and educators should evaluate available options based on functionality, subject applicability, integration ease, and pricing models. Ideally, the chosen solution should support multiple assessment formats (e.g., multiple-choice, essays, coding assignments), provide actionable analytics, and allow rubric customization.

Key selection criteria include:

- Alignment with curricular goals and learning outcomes

- Language and regional adaptation capabilities

- Flexibility in setting feedback tone and depth

- Support for multilingual learners and accessibility standards

Customization is particularly important for educators seeking to retain pedagogical autonomy. Tools that allow users to define rubrics, create comment banks, and adjust grading thresholds provide a more personalized experience and better reflect institutional values.

Phased Implementation and Feedback Monitoring

A phased approach to implementation helps mitigate risk and promotes adaptation. Institutions should begin with low-stakes assessments—such as formative quizzes, homework exercises, or practice essays—to test the AI tool's performance and gather user feedback. Teachers can compare AI-generated evaluations with their own to identify discrepancies and refine rubric inputs.
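The comparison step can be made concrete. Below is a minimal Python sketch of how a pilot team might summarise how closely AI scores track teacher scores on the same submissions. It is not a feature of any particular marking product. The `ScoredSubmission` record, the `compare_scores` function, and the one-point `tolerance` are hypothetical choices. The sketch also assumes that both sets of scores use the same integer rubric scale.

```python
from dataclasses import dataclass


@dataclass
class ScoredSubmission:
    # Hypothetical record pairing a teacher's mark with the AI tool's mark
    submission_id: str
    teacher_score: int
    ai_score: int


def compare_scores(records: list[ScoredSubmission], tolerance: int = 1) -> dict:
    """Summarise how closely AI scores track teacher scores on a pilot batch."""
    if not records:
        raise ValueError("need at least one scored submission")
    diffs = [r.ai_score - r.teacher_score for r in records]
    n = len(records)
    return {
        # Share of submissions where AI and teacher gave the identical score
        "exact_agreement": sum(d == 0 for d in diffs) / n,
        # Share where the two scores differ by no more than `tolerance` points
        "adjacent_agreement": sum(abs(d) <= tolerance for d in diffs) / n,
        # Average size of the gap, ignoring direction
        "mean_abs_diff": sum(abs(d) for d in diffs) / n,
        # Positive means the AI marks more generously than the teacher on average
        "mean_bias": sum(diffs) / n,
        # Submissions whose gap exceeds the tolerance, for the teacher to re-check
        "flagged": [r.submission_id for r, d in zip(records, diffs) if abs(d) > tolerance],
    }


if __name__ == "__main__":
    pilot = [
        ScoredSubmission("essay-01", teacher_score=7, ai_score=7),
        ScoredSubmission("essay-02", teacher_score=5, ai_score=6),
        ScoredSubmission("essay-03", teacher_score=8, ai_score=5),
        ScoredSubmission("essay-04", teacher_score=6, ai_score=6),
    ]
    print(compare_scores(pilot))
```

Exact and adjacent agreement are simple measures that are easy to explain to colleagues. The flagged list shows teachers the submissions where rubric wording most likely needs refining. If the mean bias is consistently positive or negative across batches, the tool is probably marking more leniently or more strictly than the teacher.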

Throughout this phase, it is essential to monitor:

- Accuracy and reliability of grading outputs

- Student perception and acceptance of AI-generated feedback

- Teacher satisfaction and time saved

- Any observable changes in student performance or engagement

Qualitative feedback from both students and teachers should inform ongoing adjustments. Schools may consider forming implementation committees or focus groups to review AI performance and resolve emerging issues.

Ensuring Ethical and Transparent Use

Trust is a central pillar in any educational system, and the use of AI in grading must adhere to ethical standards that reinforce this trust. Students and parents should be informed when AI is used to assess work, and the scope of its application should be clearly communicated. Consent protocols may need to be established, especially in jurisdictions where educational data is tightly regulated.

Transparency involves:

- Disclosing which components of the grade were AI-assessed

- Providing students with access to AI-generated feedback and scoring logic

- Offering opportunities for human review upon request

- Ensuring AI decisions can be explained in comprehensible terms

Ethical implementation also requires awareness of equity concerns. Developers and educators must work together to ensure that AI tools do not inadvertently disadvantage certain student populations due to linguistic, cultural, or neurodiversity-related factors. Periodic audits of grading data should be conducted to check for algorithmic bias or systematic discrepancies.
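One simple form of periodic audit compares the gap between AI and teacher scores across student groups. The Python sketch below assumes a hypothetical export of `(group, teacher_score, ai_score)` rows. It treats teacher scores as the reference, which is itself an assumption, because human marking can also carry bias. The `audit_by_group` function is illustrative, and so is its `threshold` of half a rubric point. The group labels in the example are hypothetical as well: "EAL" stands for students learning English as an additional language. A flagged group should prompt closer human review. It is not proof of bias.

```python
from collections import defaultdict
from statistics import mean


def audit_by_group(rows, threshold=0.5):
    """
    rows: iterable of (group_label, teacher_score, ai_score) tuples.
    Returns {group: (count, mean_gap)} where gap = ai_score - teacher_score,
    plus a sorted list of groups whose mean gap exceeds `threshold` in either direction.
    """
    gaps = defaultdict(list)
    for group, teacher_score, ai_score in rows:
        gaps[group].append(ai_score - teacher_score)
    if not gaps:
        raise ValueError("no rows to audit")

    summary = {group: (len(values), mean(values)) for group, values in gaps.items()}
    # A consistently negative gap means the AI marks this group more harshly than teachers do
    flagged = sorted(group for group, (_, gap) in summary.items() if abs(gap) > threshold)
    return summary, flagged


if __name__ == "__main__":
    # Hypothetical labels; a real audit would use whatever categories local policy permits
    rows = [
        ("EAL", 6, 5), ("EAL", 7, 6), ("EAL", 5, 4),
        ("non-EAL", 6, 6), ("non-EAL", 7, 7), ("non-EAL", 5, 6),
    ]
    summary, flagged = audit_by_group(rows)
    print(summary)
    print("Groups to review:", flagged)
```

The sketch reports group sizes alongside the gaps for a reason. Averages over a handful of submissions are noisy, so a flag from a very small group deserves extra caution before anyone draws a conclusion.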

Teacher-AI Collaboration Models

An emerging best practice is the use of hybrid grading systems in which AI performs initial evaluations, followed by human review and final validation. This collaborative model leverages the speed and consistency of AI while preserving the nuance and critical thinking of human judgment.

For example:

- AI may draft preliminary scores and comments, which teachers can approve, edit, or expand

- Teachers may use AI feedback as a starting point for student conferences or one-on-one discussions

- AI can identify common errors across submissions, enabling targeted re-teaching efforts
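The hybrid workflow above can be sketched in a few functions. This is a hypothetical Python illustration, not the API of any real marking tool. It assumes the AI returns four things for each submission: a draft score, a comment, a self-reported confidence value, and a list of error tags. Every draft still passes through a teacher. The `review_queue` function only decides whether a draft gets a full review or a quick check. High-stakes work always goes to full review, and so does any draft below an assumed `confidence_floor` of 0.8.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class AIDraft:
    # Hypothetical shape of one AI-generated draft; real tools expose their own formats
    submission_id: str
    score: int
    comment: str
    confidence: float  # assumed 0.0-1.0 self-reported confidence
    error_tags: list[str] = field(default_factory=list)


@dataclass
class FinalMark:
    submission_id: str
    score: int
    comment: str
    edited_by_teacher: bool


def review_queue(drafts, high_stakes, confidence_floor=0.8):
    """Split drafts into full-review and quick-check piles; every draft still gets a human look."""
    full_review, quick_check = [], []
    for draft in drafts:
        if high_stakes or draft.confidence < confidence_floor:
            full_review.append(draft)
        else:
            quick_check.append(draft)
    return full_review, quick_check


def finalise(draft, teacher_score=None, teacher_comment=None):
    """Teacher approves the draft unchanged, or overrides the score and/or comment."""
    return FinalMark(
        submission_id=draft.submission_id,
        score=draft.score if teacher_score is None else teacher_score,
        comment=draft.comment if teacher_comment is None else teacher_comment,
        edited_by_teacher=teacher_score is not None or teacher_comment is not None,
    )


def common_errors(drafts, top_n=3):
    """Tally error tags across all submissions to suggest re-teaching targets."""
    counts = Counter(tag for draft in drafts for tag in draft.error_tags)
    return counts.most_common(top_n)


if __name__ == "__main__":
    drafts = [
        AIDraft("q1-01", 4, "Check the units in part (b).", 0.92, ["units"]),
        AIDraft("q1-02", 2, "Sign error in step 2.", 0.61, ["sign error", "units"]),
        AIDraft("q1-03", 5, "Fully correct working.", 0.97),
    ]
    full_review, quick_check = review_queue(drafts, high_stakes=False)
    print("Full review:", [d.submission_id for d in full_review])
    print("Quick check:", [d.submission_id for d in quick_check])
    # The teacher expands the comment on the low-confidence draft and keeps the score
    print(finalise(full_review[0], teacher_comment="Sign error in step 2. Revisit negative gradients."))
    print("Re-teach:", common_errors(drafts))
```

In this sketch, a `FinalMark` can only be produced by `finalise`, which records the teacher's decision. This reflects the principle that the AI drafts and the teacher decides. The `edited_by_teacher` flag also gives institutions a simple record of how often teachers change AI drafts. That record is useful when monitoring accuracy and teacher satisfaction during a pilot.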

Such collaborative workflows not only maintain grading integrity but also enhance the quality of interactions between teachers and students. Importantly, they allow educators to retain agency and intervene where necessary, which is especially valuable in high-impact assessments.

Case Studies and Institutional Examples

Several institutions have already demonstrated successful AI integration in marking. For instance, the University of Queensland piloted Turnitin’s Feedback Studio across large undergraduate cohorts and reported a 50% reduction in grading time without a drop in student satisfaction. In the United Kingdom, Ark Schools deployed Gradescope in mathematics and science departments, resulting in faster turnaround times and improved feedback consistency.

Similarly, KIPP Charter Schools in the United States adopted AI-supported formative assessment tools aligned with Common Core standards, which provided real-time diagnostic feedback to both teachers and students. The result was increased instructional responsiveness and a notable improvement in student writing performance over two academic terms.

These examples underscore the importance of institutional vision, teacher involvement, and continuous feedback in driving successful implementation.

Effectively implementing AI marking tools is not merely a technical upgrade but a transformative process that touches on pedagogy, policy, and ethics. When thoughtfully deployed, these tools can significantly enhance educational outcomes by reducing teacher workload, improving feedback quality, and supporting data-informed instruction. However, this transformation depends on adequate training, infrastructure readiness, stakeholder communication, and a commitment to ethical practices. As educational institutions continue to explore AI’s role in assessment, a strategic, human-centered approach will be essential to ensure that innovation leads to meaningful and equitable improvements in teaching and learning.

Conclusion

The integration of artificial intelligence into educational assessment represents a pivotal shift in how marking is conceptualized and carried out. For decades, teachers have borne the brunt of repetitive and time-consuming grading responsibilities, often at the expense of more impactful pedagogical work. AI-powered marking tools offer a compelling solution to this challenge. They enable educators to reclaim valuable time while maintaining, and in many cases enhancing, the quality of feedback provided to students.

As this blog has explored, AI can deliver significant benefits across the educational landscape. From improving grading efficiency and consistency to facilitating personalized feedback and generating actionable analytics, the applications of AI in assessment are broad and increasingly sophisticated. When implemented thoughtfully, these tools allow teachers to transition from administrative burden-bearers to learning facilitators and student mentors, thereby strengthening the core mission of education.

However, this transition is not without its caveats. The limitations of AI—such as its difficulty with subjective interpretation, potential for algorithmic bias, and sensitivity to rubric design—underscore the importance of preserving the teacher’s role in oversight and contextual judgment. Moreover, responsible deployment requires clear ethical frameworks, institutional readiness, and robust professional development to ensure that AI complements rather than supplants human expertise.

The future of AI in marking lies not in automation alone, but in collaboration. A hybrid model—where AI handles repetitive, large-scale assessment tasks while teachers focus on complex evaluation and relational pedagogy—offers the most promising path forward. In this model, technology becomes a trusted ally, enabling teachers to work smarter without compromising the depth or integrity of their instructional practices.

Ultimately, the responsible adoption of AI in marking can help build a more efficient, equitable, and responsive education system. As institutions embrace this evolution, the focus must remain firmly on empowering educators, supporting learners, and upholding the values of fairness, transparency, and academic integrity. With the right strategy, AI can become not just a tool for saving time, but a catalyst for elevating the entire teaching and learning experience.

References

- EdTech Digest – Time-Saving Benefits of AI for Teachers: https://www.edtechdigest.com/ai-saving-time-for-teachers

- Gradescope by Turnitin – Automate Grading with AI: https://www.gradescope.com

- OpenAI – GPT-4 and Education Applications: https://openai.com/gpt-4

- Turnitin – Feedback Studio Overview: https://www.turnitin.com/products/feedback-studio

- Cambridge English – Write & Improve Tool: https://writeandimprove.com

- Google for Education – Socratic Learning Assistant: https://edu.google.com/intl/ALL_us/products/socratic

- Ecree – Real-Time AI Essay Feedback: https://ecree.com

- ETS – Criterion Online Writing Evaluation: https://www.ets.org/criterion

- Education Policy Institute – UK Teacher Workload Insights: https://epi.org.uk

- UNESCO – AI in Education Policy Guidance: https://unesdoc.unesco.org/ark:/48223/pf0000376709