How Machine Learning Is Revolutionizing Cloud-Native Container Security

The rise of cloud-native technologies has ushered in a new era of software development and deployment, defined by agility, scalability, and modular architecture. At the heart of this transformation are containers—lightweight, portable units that encapsulate applications and their dependencies. Technologies such as Docker and Kubernetes have revolutionized how organizations build and manage applications, enabling microservices-based architectures and continuous delivery pipelines at unprecedented speed and scale. However, this innovation has also introduced new and complex security challenges that traditional approaches are ill-equipped to handle.

In cloud-native environments, security is no longer a static perimeter defense. Containers are ephemeral by design; they are spun up and down within seconds, often running across hybrid or multi-cloud infrastructures. These transient workloads interact with numerous APIs, services, and environments, increasing the attack surface and introducing a multitude of dynamic security risks. From misconfigured container images to unauthorized lateral movement and runtime privilege escalation, the spectrum of vulnerabilities has expanded significantly. This necessitates a shift from conventional, rule-based security mechanisms to more intelligent, adaptive solutions capable of operating in real time.

Machine learning (ML) has emerged as a critical enabler in this evolution. As a subset of artificial intelligence (AI), ML leverages algorithms and statistical models to learn patterns from data, detect anomalies, and make predictions without being explicitly programmed for every specific scenario. In the context of container security, ML offers the capability to analyze vast volumes of telemetry data generated by containerized workloads—including logs, network traffic, and system calls—to detect suspicious behaviors that might otherwise go unnoticed.

Unlike signature-based tools, which rely on pre-defined rules and known attack indicators, ML models can proactively identify unknown threats, adapt to new container behaviors, and evolve alongside the applications they are designed to protect. For example, by learning what constitutes “normal” container activity in a given environment, ML algorithms can flag deviations in behavior that suggest malicious intent—even in the absence of a known exploit signature.

The integration of ML into container security is not merely a technological trend but a strategic imperative. As attackers become more sophisticated and the cloud-native stack becomes more layered and interconnected, reactive security models fall short. What is needed is a predictive, context-aware, and continuous approach to threat detection and prevention—an approach that ML is uniquely suited to deliver.

Moreover, ML-driven security aligns well with the principles of DevSecOps, where security is embedded throughout the software development lifecycle (SDLC) rather than treated as an afterthought. With ML models capable of real-time monitoring and adaptive response, security teams can shift from being bottlenecks to becoming proactive enablers of innovation and resilience.

This blog post explores the pivotal role of machine learning in enhancing the security posture of cloud-native container environments. It will begin by examining the shifting nature of containerized application security, followed by an in-depth look at how ML is transforming container protection mechanisms. Real-world case studies and current industry applications will illustrate the impact of these technologies, and the final sections will address the ethical considerations, challenges, and future directions of ML in container security. Through this analysis, we aim to provide security practitioners, DevOps leaders, and enterprise architects with a comprehensive understanding of how ML is reshaping the future of cloud-native security.

Cloud-Native Container Security – A Shifting Attack Surface

The adoption of cloud-native architectures—particularly containers and Kubernetes-based orchestration—has redefined the paradigm of application development and deployment. This technological shift brings undeniable benefits in terms of scalability, efficiency, and speed. However, it also introduces a profoundly different and rapidly evolving attack surface. Understanding the nuances of this transformation is essential for grasping why traditional security mechanisms fall short and why adaptive, intelligent systems such as machine learning are increasingly indispensable.

Anatomy of a Cloud-Native Environment

At its core, a cloud-native environment is built around microservices deployed in containers, often orchestrated using tools like Kubernetes. These containers run on dynamic infrastructure that may span across public clouds, private clouds, or hybrid environments. Unlike monolithic applications, microservices are loosely coupled, independently deployable, and communicate with each other using APIs.

Each container encapsulates a specific function or service, bundled with its dependencies and libraries. Containers are managed by orchestrators like Kubernetes, which automate deployment, scaling, and management. While this setup enables rapid innovation, it also creates layers of abstraction and dynamic interactions that are difficult to monitor and secure with static policies or perimeter-based firewalls.

Evolving Threat Landscape in Containers

Traditional application security was built around a well-defined network perimeter and relatively stable system configurations. In contrast, cloud-native environments are dynamic, distributed, and decentralized. Containers can be created, destroyed, or rescheduled in seconds. Their short lifespans make them hard for conventional security solutions to track, leaving gaps unless continuous, intelligent monitoring is in place.

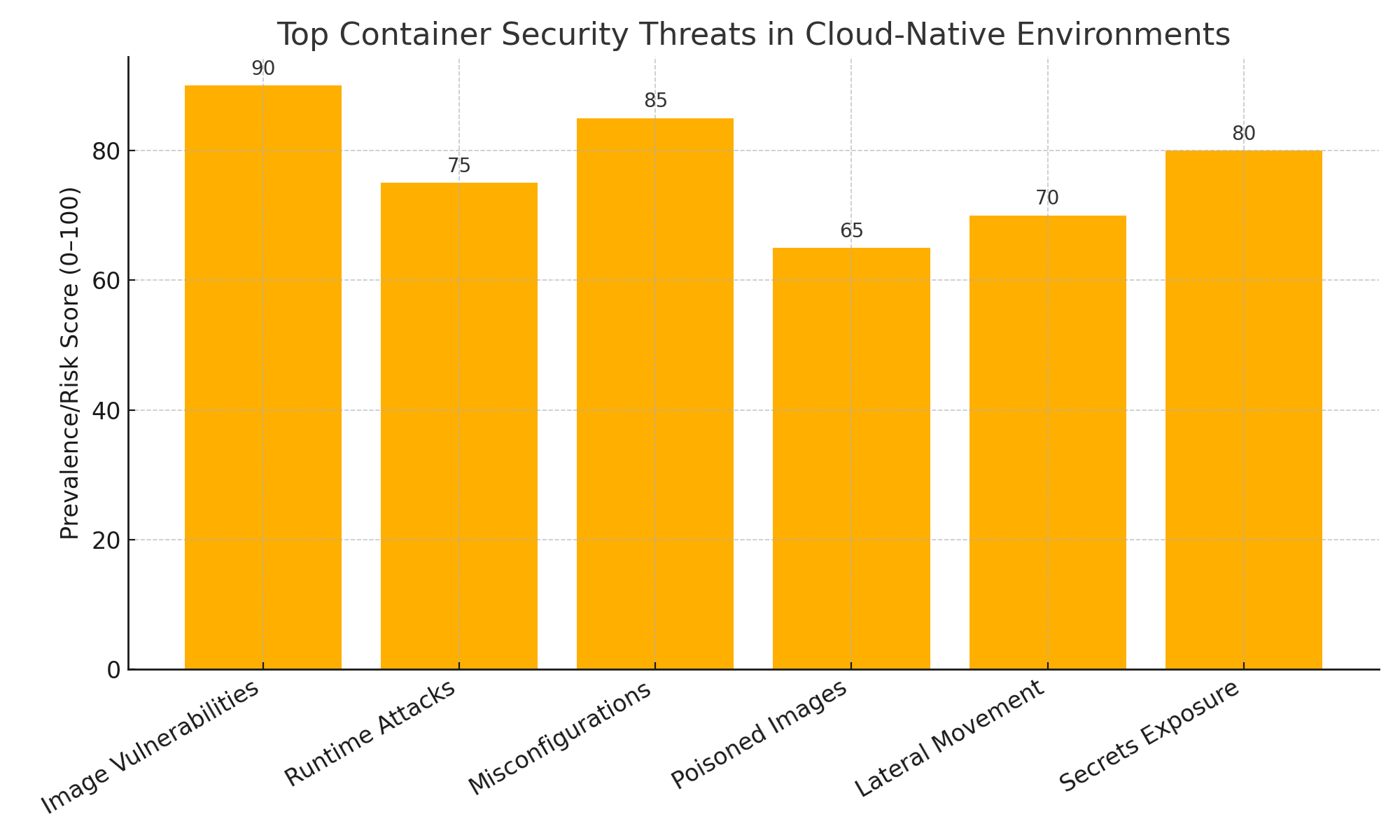

Several categories of threats have emerged as particularly relevant in cloud-native environments:

- Image Vulnerabilities: Containers are instantiated from images. If the base image includes outdated libraries or vulnerable components, every container derived from it inherits those vulnerabilities.

- Poisoned or Malicious Images: Attackers may upload compromised images to public registries. If such an image is pulled into a production environment, it can provide a direct backdoor.

- Configuration Drift: Manual changes, inconsistent policies, or drift between development and production environments may introduce security gaps over time.

- Misconfigured Secrets and Environment Variables: Containers often need access to API keys, tokens, or credentials. Improper handling of secrets can lead to unauthorized access and data leakage.

- Runtime Attacks: Even if a container is secure at deployment, it may be exploited at runtime. Threats include container escape (gaining access to the host system), privilege escalation, or injection of malicious processes.

- Lateral Movement: Once inside a container, attackers may attempt to move laterally across services by abusing internal APIs or exploiting vulnerabilities in adjacent containers.

These threats are compounded by the velocity of deployment. Continuous integration and continuous delivery (CI/CD) pipelines accelerate release cycles, often pushing code into production multiple times a day. Security, in such scenarios, must be able to keep pace without impeding development agility.

Limitations of Traditional Security Tools

Traditional security solutions, such as firewalls, intrusion detection systems (IDS), and antivirus software, were designed for static environments. They rely heavily on static rules and signature-based detection and operate at the network or operating system level. While effective against known threats in fixed infrastructure, these tools falter in cloud-native scenarios for several reasons:

- Lack of Context: Signature-based tools cannot understand the internal logic of containerized applications or the expected behavior of microservices.

- Static Rules: Predefined rules do not adapt well to dynamic, ephemeral workloads. As containers scale up or down, old rules may become irrelevant, or new attack vectors may emerge unnoticed.

- Blind Spots in East-West Traffic: Internal container-to-container communications (east-west traffic) often occur within the same node or cluster, bypassing perimeter security tools entirely.

- Insufficient Granularity: Traditional monitoring tools operate at coarse-grained levels and cannot inspect individual containers’ processes, system calls, or namespaces effectively.

In practice, this means that traditional tools may either generate excessive false positives—wasting security team resources—or fail to detect novel attacks that do not match known patterns.

Container Security Lifecycle Considerations

Securing cloud-native containers requires a lifecycle approach that addresses vulnerabilities at multiple stages:

- Build-Time Security: Scanning images for known vulnerabilities and ensuring base images are sourced from trusted registries.

- Deploy-Time Security: Applying policy-as-code frameworks (e.g., Open Policy Agent) to enforce security controls during deployment, such as mandatory RBAC (role-based access control) or network segmentation.

- Runtime Security: Monitoring container behavior during execution to detect anomalies such as unauthorized file access, network connections, or unexpected process spawns.
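Deploy-time policies are usually written for a policy engine such as Open Policy Agent, whose policy language is Rego. As a language-neutral illustration of the kind of rule such a policy encodes, the Python sketch below inspects a Kubernetes Pod manifest (already parsed into a dictionary) for a few common risky settings. The function name and the particular checks are illustrative assumptions, not OPA's API or a complete policy set.

```python
from typing import Any


def check_pod(pod: dict[str, Any]) -> list[str]:
    """Return policy violations found in a parsed Kubernetes Pod manifest."""
    violations: list[str] = []
    spec = pod.get("spec", {})
    if spec.get("hostNetwork", False):
        violations.append("pod uses hostNetwork")
    for container in spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext", {})
        if ctx.get("privileged", False):
            violations.append(f"{name}: privileged container")
        # Kubernetes allows privilege escalation unless it is explicitly disabled.
        if ctx.get("allowPrivilegeEscalation", True):
            violations.append(f"{name}: allowPrivilegeEscalation not disabled")
        if not ctx.get("runAsNonRoot", False):
            violations.append(f"{name}: runAsNonRoot not set")
    return violations


hardened = {"spec": {"containers": [{"name": "web", "securityContext": {
    "runAsNonRoot": True, "allowPrivilegeEscalation": False}}]}}
risky = {"spec": {"hostNetwork": True, "containers": [{"name": "debug", "securityContext": {
    "privileged": True}}]}}
print(check_pod(hardened))  # []
for violation in check_pod(risky):
    print(violation)
```

In an admission-control setup, a non-empty violation list would translate into rejecting the deployment request.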

Each stage presents opportunities—and challenges—for threat detection. However, as the number of containers and their interconnections scale, the volume of telemetry and event data generated becomes overwhelming for human analysts or rule-based engines. This is the inflection point where machine learning becomes essential.

A Complex Web of Interdependencies

One of the key reasons why securing containers is inherently more difficult is the web of interdependencies that exists in modern deployments. A single microservice may rely on multiple third-party libraries, APIs, and services. Furthermore, container orchestrators like Kubernetes introduce their own layers of abstraction and configuration, such as network policies, service meshes, and pod security standards.

Misconfigurations in these layers—such as over-privileged service accounts, exposed dashboards, or unvalidated ingress controllers—have been exploited in numerous high-profile attacks. Because containers operate in clusters, a compromise in one service may lead to a cascading failure or a full-cluster breach.

Growing Regulatory and Compliance Pressures

Finally, the security posture of containerized workloads is also being shaped by regulatory requirements. Organizations in finance, healthcare, and government services must comply with regulations and standards such as HIPAA, PCI DSS, GDPR, and FedRAMP, which require strong auditability, data protection, and incident response capabilities.

As organizations migrate to the cloud and adopt containers, they must ensure their security controls extend beyond infrastructure to the application layer. This further increases the demand for real-time analytics and automated response systems—an area where ML excels.

In conclusion, the attack surface in cloud-native container environments is not only broader but more dynamic and opaque than ever before. The limitations of traditional tools, the speed of modern DevOps practices, and the complexity of container orchestration collectively demand a new approach to security. As the next section will detail, machine learning offers a powerful paradigm shift—bringing intelligence, adaptability, and scale to container security in ways that were previously unattainable.

How Machine Learning Transforms Container Security

As organizations increasingly rely on cloud-native architectures to power their digital infrastructure, the complexity and dynamism of these environments demand an equally advanced approach to security. Traditional security solutions—though foundational—lack the speed, adaptability, and contextual intelligence required to defend containerized workloads at scale. In this context, machine learning (ML) emerges as a transformative force, enabling a shift from reactive to proactive security practices. This section explores how ML technologies are being applied to enhance every layer of container security, from anomaly detection to real-time behavioral analytics.

The Need for Intelligence in Container Security

Cloud-native applications, composed of containers and microservices, generate a massive volume of telemetry data including logs, metrics, traces, network traffic, system calls, and runtime behavior. Manually analyzing this deluge of information or relying on rule-based engines quickly becomes infeasible. Machine learning offers a scalable alternative—automating pattern recognition, behavior modeling, and anomaly detection to help security teams identify potential threats before they cause damage.

What makes ML uniquely suited to this task is its ability to adaptively learn from data. By training models on historical and real-time datasets, ML algorithms can understand what constitutes “normal” behavior within a containerized environment. Once this baseline is established, any deviation from the norm—such as unusual network connections, unexpected process executions, or abnormal resource utilization—can be flagged as suspicious, even if it does not match any known attack signature.
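To make the idea of a learned baseline concrete, here is a minimal sketch that records which events each workload produces during a learning window and flags anything outside that baseline. The event strings (for example `exec:nginx`) and the `BehaviorBaseline` class are hypothetical; production systems model far richer features and frequencies, but the learn-then-compare structure is the same.

```python
from collections import Counter, defaultdict


class BehaviorBaseline:
    """Learns which events each workload produces and flags events outside that baseline."""

    def __init__(self, min_count: int = 1) -> None:
        # An event seen fewer than min_count times during learning counts as anomalous.
        self.min_count = min_count
        self.counts: dict[str, Counter] = defaultdict(Counter)

    def learn(self, workload: str, event: str) -> None:
        """Record one observation from the learning window."""
        self.counts[workload][event] += 1

    def is_anomalous(self, workload: str, event: str) -> bool:
        """True if this workload rarely or never produced the event while learning."""
        return self.counts[workload][event] < self.min_count


baseline = BehaviorBaseline()
for event in ["exec:nginx", "connect:10.0.0.5:5432", "exec:nginx"]:
    baseline.learn("web", event)

print(baseline.is_anomalous("web", "exec:nginx"))  # False: part of the baseline
print(baseline.is_anomalous("web", "exec:xmrig"))  # True: never observed for "web"
```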

Core ML Techniques in Container Security

Several machine learning approaches have been adapted to serve container security needs. These include:

Supervised Learning

Supervised learning algorithms are trained on labeled datasets, where each data point is tagged with a known outcome (e.g., malicious or benign). In container security, this method can be used to detect known threats, classify vulnerabilities, or identify container images likely to be compromised based on previous attacks.
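As a minimal illustration of supervised classification, the sketch below trains a multinomial naive Bayes classifier, one of the simplest supervised methods, on hypothetical labeled sessions represented as process and system call tokens. The tokens, labels, and function names are assumptions made for illustration; real training sets would be far larger and use richer features.

```python
import math
from collections import Counter


def train_nb(samples: list[tuple[list[str], str]]) -> dict:
    """Fit a multinomial naive Bayes model on (tokens, label) training pairs."""
    label_counts = Counter(label for _, label in samples)
    token_counts = {label: Counter() for label in label_counts}
    for tokens, label in samples:
        token_counts[label].update(tokens)
    vocab = {t for tokens, _ in samples for t in tokens}
    return {"labels": label_counts, "tokens": token_counts,
            "vocab_size": len(vocab), "n": len(samples)}


def predict_nb(model: dict, tokens: list[str]) -> str:
    """Return the most probable label, using Laplace (add-one) smoothing."""
    best_label, best_score = "", -math.inf
    for label, count in model["labels"].items():
        counts = model["tokens"][label]
        total = sum(counts.values())
        score = math.log(count / model["n"])  # log prior of the label
        for t in tokens:
            score += math.log((counts[t] + 1) / (total + model["vocab_size"]))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


# Hypothetical labeled training data: tokens observed in container sessions.
training = [
    (["nginx", "accept", "read", "write"], "benign"),
    (["nginx", "read", "write", "close"], "benign"),
    (["sh", "curl", "chmod", "xmrig"], "malicious"),
    (["sh", "wget", "chmod", "nc"], "malicious"),
]
model = train_nb(training)
print(predict_nb(model, ["sh", "curl", "chmod"]))  # malicious
print(predict_nb(model, ["nginx", "read"]))        # benign
```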

Unsupervised Learning

Unsupervised learning is critical for detecting unknown or zero-day threats. These models identify outliers and anomalies without prior knowledge of what constitutes a threat. Clustering algorithms such as K-Means or DBSCAN group similar container behaviors together; behavior that lies far from every K-Means centroid, or that DBSCAN labels as noise, can be treated as anomalous, allowing new attack vectors to be detected.
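To show how a density-based method separates outliers, the sketch below implements DBSCAN's noise test directly: a point is noise if it is neither a core point nor within `eps` of one. The two features and the `eps` and `min_pts` values are hypothetical; in practice features should be scaled to comparable ranges first, and a library implementation (for example scikit-learn's `DBSCAN`, which labels noise as `-1`) would normally be used.

```python
import math


def dbscan_noise(points: list[tuple[float, ...]], eps: float, min_pts: int) -> list[int]:
    """Return the indices of points that DBSCAN would label as noise."""
    # Neighborhood of each point: every point (itself included) within distance eps.
    neighborhoods = [
        [j for j, q in enumerate(points) if math.dist(p, q) <= eps] for p in points
    ]
    # Core points have at least min_pts points in their neighborhood.
    core = {i for i, hood in enumerate(neighborhoods) if len(hood) >= min_pts}
    # Non-core points within eps of a core point are border points; the rest are noise.
    return [
        i for i, hood in enumerate(neighborhoods)
        if i not in core and not any(j in core for j in hood)
    ]


# Hypothetical per-container features: (CPU fraction, outbound connections per minute).
samples = [(0.20, 3), (0.22, 4), (0.19, 3), (0.21, 5), (0.20, 4), (0.95, 40)]
print(dbscan_noise(samples, eps=2.5, min_pts=3))  # [5]
```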

Reinforcement Learning

Although still emerging in this space, reinforcement learning allows systems to learn optimal defense strategies through trial and error. Applied to container security, it could dynamically adjust firewall rules or network segmentation based on evolving threat landscapes.

Deep Learning

Deep learning models, which use multi-layer neural networks, are adept at extracting complex patterns from unstructured or sequential data such as log files and container system call traces. Recurrent Neural Networks (RNNs), especially LSTMs, are commonly used to model temporal behavior such as sequences of system calls or microservice requests, and Convolutional Neural Networks (CNNs), typically one-dimensional variants, have also been applied to these sequences.

Applications Across the Container Lifecycle

Machine learning enhances security at every phase of the container lifecycle:

Build-Time

- Image Vulnerability Prediction: ML models can predict the risk score of a container image based on its software stack and dependencies.

- Malicious Code Detection: Static code analysis enhanced with ML can identify malicious code patterns during the image build phase, reducing risk before deployment.

Deploy-Time

- Policy Enforcement: ML can recommend or auto-generate deployment policies based on historical usage patterns, enabling tighter controls without manual configuration.

- Access Control Optimization: Behavioral modeling can identify overprivileged roles or service accounts based on access patterns and usage history.
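The access-pattern comparison behind overprivilege detection can be sketched without any learning at all: compare what each service account is allowed to do with what it was actually observed doing (for example, as reconstructed from Kubernetes audit logs). ML-based tools add weighting and context on top, but the gap between granted and exercised permissions is the core signal. The account names, the `verb:resource` encoding, and the function are hypothetical.

```python
from collections import defaultdict


def find_overprivileged(grants: dict[str, set[str]],
                        audit_events: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Map each service account to the permissions it holds but never used.

    grants: service account -> permissions it is bound to, as "verb:resource" strings
    audit_events: (service account, "verb:resource") pairs observed during the window
    """
    used: dict[str, set[str]] = defaultdict(set)
    for account, permission in audit_events:
        used[account].add(permission)
    unused = {account: perms - used[account] for account, perms in grants.items()}
    return {account: perms for account, perms in unused.items() if perms}


grants = {
    "billing-sa": {"get:secrets", "list:pods", "delete:pods"},
    "web-sa": {"get:configmaps"},
}
events = [("billing-sa", "get:secrets"), ("web-sa", "get:configmaps")]
# billing-sa never exercised list:pods or delete:pods, so both are candidates for removal.
print(find_overprivileged(grants, events))
```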

Runtime

- Anomaly Detection: Real-time analysis of system calls, network activity, and process behavior enables the detection of advanced persistent threats (APTs) and container escapes.

- Threat Hunting and Forensics: ML-driven clustering and sequence analysis help in reconstructing attack timelines, identifying indicators of compromise (IoCs), and accelerating incident response.
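Runtime anomaly detection over system calls is often illustrated with a simple sequence model: record every short window (n-gram) of system calls seen in known-good traces, then score a new trace by the fraction of its windows never seen before. The sketch below is that classic non-deep baseline, not the method of any particular product; the traces and the window size n = 3 are hypothetical.

```python
def windows(trace: list[str], n: int) -> list[tuple[str, ...]]:
    """All contiguous length-n windows of a system call trace."""
    return [tuple(trace[i:i + n]) for i in range(len(trace) - n + 1)]


def learn_normal(traces: list[list[str]], n: int = 3) -> set[tuple[str, ...]]:
    """Database of every window observed in known-good traces."""
    known: set[tuple[str, ...]] = set()
    for trace in traces:
        known.update(windows(trace, n))
    return known


def mismatch_rate(trace: list[str], known: set[tuple[str, ...]], n: int = 3) -> float:
    """Fraction of windows in a new trace that never appeared during training."""
    ws = windows(trace, n)
    if not ws:
        return 0.0
    return sum(w not in known for w in ws) / len(ws)


normal = [["openat", "read", "write", "close"], ["openat", "read", "read", "close"]]
known = learn_normal(normal)
print(mismatch_rate(["openat", "read", "write", "close"], known))     # 0.0
print(mismatch_rate(["openat", "read", "execve", "connect"], known))  # 1.0
```

A trace whose mismatch rate exceeds a tuned threshold would be raised for investigation, and the unmatched windows themselves point the analyst at where the behavior diverged.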

Case in Point: ML vs. Traditional Tools

To illustrate the difference in capabilities, consider a scenario where an attacker injects a cryptocurrency miner into a running container. Traditional tools might detect abnormal CPU usage but may not correlate this behavior to other signals like outbound connections to mining pools or unusual process execution patterns. An ML-based system, on the other hand, could correlate these indicators, recognize the behavioral deviation, and trigger alerts or initiate auto-remediation workflows.

The adaptive nature of ML also means that as container workloads evolve—with updates, configuration changes, or scaling events—the security model learns and adapts. This continuous learning cycle is crucial in fast-moving DevOps environments, where static policies become obsolete quickly.
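One simple way to get a baseline that keeps adapting as workloads change is an exponentially weighted moving average (EWMA) of a metric together with an exponentially weighted variance. The sketch below flags values more than `threshold` standard deviations from the running mean and then folds each observation into the baseline. The class name, default parameters, and the CPU metric in the usage lines are illustrative assumptions.

```python
import math


class EwmaDetector:
    """Adaptive baseline for one metric: exponentially weighted mean and variance."""

    def __init__(self, alpha: float = 0.1, threshold: float = 3.0, warmup: int = 10) -> None:
        self.alpha = alpha          # weight of each new observation in the baseline
        self.threshold = threshold  # z-score above which a value is flagged
        self.warmup = warmup        # observations absorbed before any flagging
        self.mean = 0.0
        self.var = 0.0
        self.n = 0

    def update(self, x: float) -> bool:
        """Score x against the current baseline, then fold x into the baseline."""
        self.n += 1
        if self.n == 1:
            self.mean = x
            return False
        std = math.sqrt(self.var)
        anomalous = self.n > self.warmup and std > 0 and abs(x - self.mean) / std > self.threshold
        # Incremental exponentially weighted mean and variance.
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return anomalous


detector = EwmaDetector()
for i in range(20):  # steady CPU usage alternating between 30% and 32%
    detector.update(0.30 if i % 2 == 0 else 0.32)
print(detector.update(0.95))  # True: a sudden spike, such as an injected cryptominer
```

Because every observation, including anomalous ones, updates the baseline, a patient attacker could slowly shift what counts as normal; a more careful design would exclude flagged values from updates or retrain under review.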

ML-Driven Risk Scoring and Prioritization

Given the volume of alerts generated by modern container security tools, prioritization becomes critical. ML helps address this challenge through context-aware risk scoring. Rather than treating all alerts equally, ML models assess multiple variables—such as severity of the vulnerability, exploitability, the container’s access privileges, and its proximity to sensitive data—and assign a risk score.

This enables security teams to focus on high-impact threats, improving incident response efficiency. For example, a low-severity misconfiguration in a development container may receive a lower risk score than a medium-severity vulnerability in a production-facing container with public access.
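A context-aware risk score can be sketched as a base severity adjusted by environmental multipliers. In the sketch below the weights are hand-picked assumptions standing in for what an ML model would learn from historical incident data; the field names are hypothetical, and `exploitability` is assumed to be a 0 to 1 likelihood estimate. The usage lines reproduce the example above: a low-severity issue in a development container ranks below a medium-severity one in a public production container.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    cvss: float                   # base severity, 0.0 to 10.0
    exploitability: float         # estimated likelihood of exploitation, 0.0 to 1.0
    production: bool
    internet_exposed: bool
    privileged: bool
    touches_sensitive_data: bool


def risk_score(f: Finding) -> float:
    """Context-adjusted risk score; every weight here is illustrative, not calibrated."""
    score = (f.cvss / 10.0) * (0.5 + 0.5 * f.exploitability)
    score *= 1.0 if f.production else 0.5
    if f.internet_exposed:
        score *= 1.5
    if f.privileged:
        score *= 1.3
    if f.touches_sensitive_data:
        score *= 1.2
    return round(score, 3)


dev_issue = Finding(cvss=3.1, exploitability=0.2, production=False,
                    internet_exposed=False, privileged=False, touches_sensitive_data=False)
prod_issue = Finding(cvss=5.5, exploitability=0.2, production=True,
                     internet_exposed=True, privileged=False, touches_sensitive_data=False)
print(risk_score(dev_issue), risk_score(prod_issue))  # 0.093 0.495
```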

Integration with DevSecOps Pipelines

A key advantage of ML-driven security is its alignment with DevSecOps principles. ML models can be embedded in CI/CD workflows to provide security insights without delaying releases. For instance:

- Automated scans of code commits for suspicious patterns.

- ML-informed approval gates for container image promotion.

- Feedback loops that retrain models based on newly discovered threats.

These integrations foster a culture of security as code, where protection mechanisms evolve alongside application logic and infrastructure changes.

Limitations and Interpretability

Despite its potential, machine learning is not without challenges. One significant issue is model interpretability—security analysts must be able to understand why a model flagged a particular container or behavior as suspicious. This is especially important in high-stakes environments like finance or healthcare, where decisions must be explainable for compliance and audit purposes.

Moreover, ML systems are not immune to manipulation. Adversarial techniques pose a growing threat: in data poisoning, attackers feed misleading data into training sets to bias outcomes, and in evasion, they shape malicious activity to resemble normal behavior. Ensuring data integrity and model robustness is an ongoing area of research in ML for security.

The Role of Open-Source and Commercial Tools

The adoption of ML in container security is being accelerated by both open-source projects and commercial solutions. Open-source tools such as Falco, a CNCF runtime security project, supply the detailed runtime event streams that ML-based behavioral detection can build on. On the commercial side, platforms such as Palo Alto Networks Prisma Cloud, Aqua Security, Sysdig Secure, and Lacework apply ML to real-time threat detection and compliance monitoring.

These solutions typically include features such as:

- Behavioral baselining of container activity.

- ML-powered risk assessments of container images.

- Automated policy recommendations.

- Alert correlation engines that reduce false positives.

The integration of these capabilities into centralized dashboards allows security teams to operate with greater confidence, scalability, and precision.

In summary, machine learning represents a powerful advancement in securing cloud-native container environments. Its ability to learn from and adapt to the vast, fast-moving telemetry generated by container workloads offers a clear advantage over legacy security systems. As containerized infrastructures continue to evolve, ML’s role will only grow in prominence, offering enterprises the intelligence, automation, and agility needed to stay ahead of increasingly sophisticated threats.

Real-World Applications and Industry Case Studies

The theoretical promise of machine learning in enhancing cloud-native container security is compelling, but it is in real-world deployments that its transformative potential is truly realized. Across sectors—from finance and healthcare to e-commerce and SaaS—organizations are deploying machine learning to safeguard containerized workloads from evolving threats. This section examines how leading security vendors, open-source platforms, and DevSecOps teams are applying ML technologies in practice. Through specific use cases and industry case studies, it becomes evident that ML is not only viable but essential in modern container security strategies.

Industry Adoption of ML-Driven Container Security Solutions

The security needs of modern enterprises vary widely depending on the industry, regulatory landscape, and the complexity of their software environments. However, a common thread among security-forward organizations is the embrace of behavioral analytics and machine learning-based detection engines as foundational elements of their cloud-native security architecture.

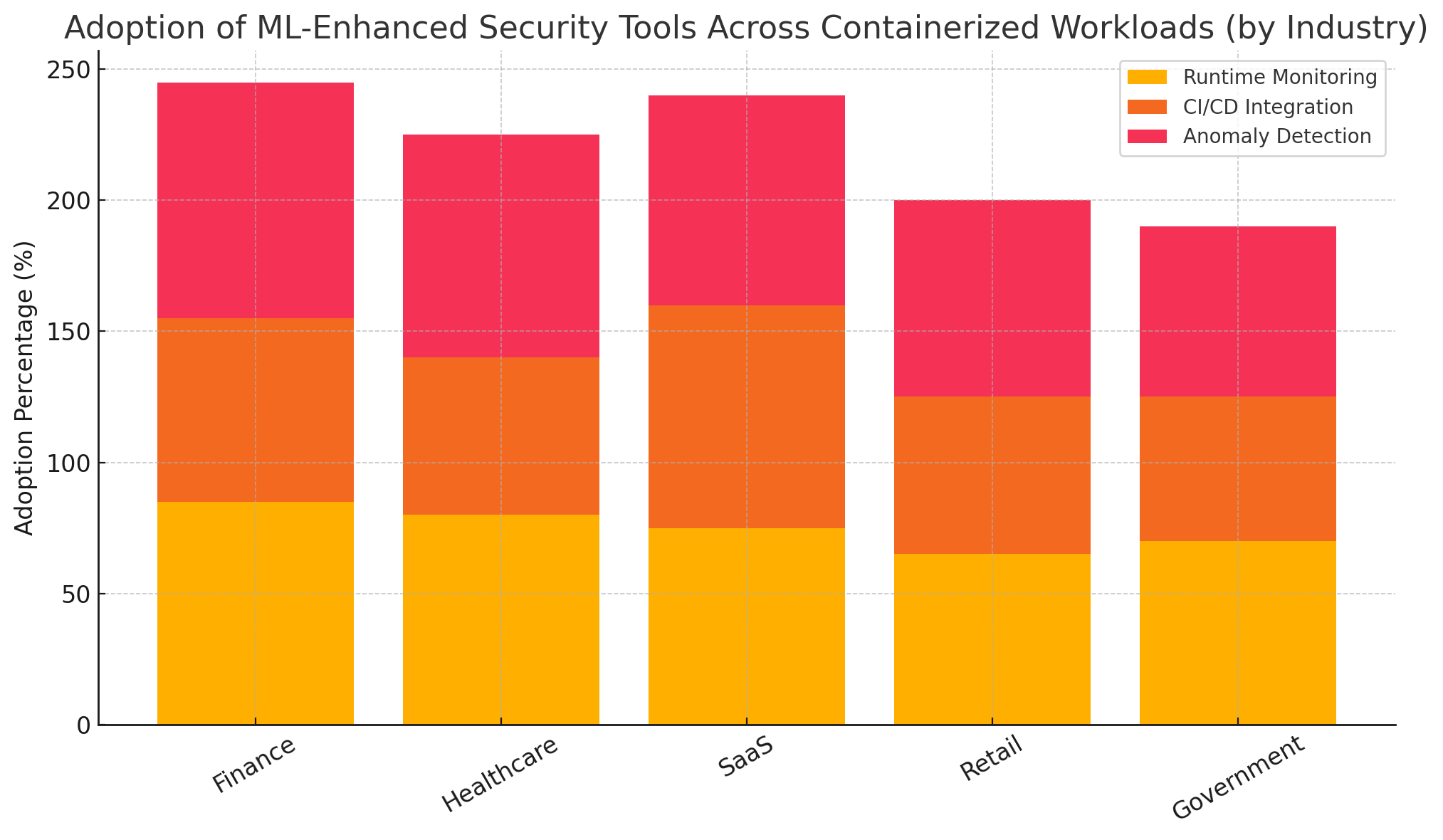

Financial Services

Financial institutions, which deal with sensitive customer data and are subject to stringent compliance standards such as PCI DSS and SOX, have been early adopters of ML-enhanced container security. Leading banks and fintech firms have integrated ML-driven threat detection systems into their Kubernetes clusters to monitor anomalous behavior, identify insider threats, and prevent lateral movement across containerized applications.

One prominent financial firm deployed Aqua Security’s Behavioral Engine, which uses unsupervised learning to analyze real-time container activity. The system detected an internal analytics service attempting to exfiltrate data to an unfamiliar IP address—activity that matched no known signature and would have gone unnoticed by traditional security tools. The ML system flagged the behavior, triggering an automated quarantine of the container, thereby averting a potential breach.

Healthcare and Life Sciences

Organizations in the healthcare sector face dual challenges: high-value data targets and rigorous compliance mandates (e.g., HIPAA). ML tools have proven effective in ensuring secure container orchestration of electronic health records (EHR) systems and genomic data pipelines.

A U.S.-based healthcare provider implemented Lacework’s Polygraph Data Platform, which constructs behavioral baselines of cloud workloads using ML. The platform detected an anomalous connection between a Kubernetes pod and an unverified data storage endpoint. Investigation revealed an outdated container image running a vulnerable SSH service—an entry point exploited by an attacker. The ML system provided full forensics, including timeline reconstruction and privilege escalation tracking, allowing the provider to close the vulnerability before data was accessed.

DevSecOps Use Cases in CI/CD Pipelines

Security automation is a key priority for DevSecOps teams managing rapid deployment cycles. Integrating ML into CI/CD pipelines enhances pre-production container security in several ways:

- Predictive Risk Scoring: ML models assess container images and rank them based on the likelihood of containing vulnerabilities, outdated packages, or insecure configurations. This enables teams to prioritize remediation in build pipelines without slowing down releases.

- Anomalous Code Commit Detection: ML models can be trained to analyze historical code commit patterns. When a developer commits suspicious changes—such as the addition of base64-encoded strings or obfuscated code—the system can flag the behavior and initiate peer review.

- Behavioral Modeling for Service Meshes: In environments using Istio or Linkerd for service mesh architectures, ML can model microservice interaction patterns and alert on deviations that might suggest lateral movement or command-and-control activity.
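The commit-screening idea in the list above can be approximated with a simple, non-ML heuristic that a model could later refine: Shannon entropy. Long tokens with high entropy per character, such as base64-encoded blobs or embedded keys, stand out from ordinary identifiers. The sketch below scans the added lines of a unified diff; the 24-character minimum and the 4.5 bits-per-character threshold are illustrative assumptions that would need tuning.

```python
import math
import re
from collections import Counter

# Long runs of base64- or identifier-like characters.
TOKEN = re.compile(r"[A-Za-z0-9+/=_-]{24,}")


def shannon_entropy(s: str) -> float:
    """Shannon entropy of a string, in bits per character."""
    n = len(s)
    return -sum(c / n * math.log2(c / n) for c in Counter(s).values())


def suspicious_tokens(diff: str, threshold: float = 4.5) -> list[str]:
    """Return high-entropy tokens from the lines a unified diff adds."""
    hits: list[str] = []
    for line in diff.splitlines():
        if line.startswith("+") and not line.startswith("+++"):
            hits.extend(t for t in TOKEN.findall(line) if shannon_entropy(t) >= threshold)
    return hits


# The PAYLOAD value is a stand-in for an encoded blob.
diff = """+++ b/app/settings.py
+TIMEOUT = "config_value_default_timeout_seconds"
+PAYLOAD = "ABCDEFGHIJKLMNOPabcdefghijklmnop"
-RETRIES = 3
"""
print(suspicious_tokens(diff))  # ['ABCDEFGHIJKLMNOPabcdefghijklmnop']
```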

For example, an e-commerce platform deploying multiple microservices across regions used Sysdig Secure with ML-enabled runtime profiling. By modeling baseline container behavior during staging, Sysdig identified a post-deployment divergence in production: an API gateway container making outbound requests to a cryptocurrency mining pool. The container was immediately isolated, and a patched version of the service was deployed within minutes.

Open-Source ML Integration: Falco and Beyond

The open-source community has also played a significant role in making ML-based container security accessible to a wider range of organizations. Falco, an open-source runtime security engine originally created by Sysdig and now a CNCF (Cloud Native Computing Foundation) project, offers a plugin framework and structured JSON output that make it practical to pair its detections with external ML models.

Falco's core engine is rule-based: it evaluates system calls and other events against a set of rules. Teams can, however, forward Falco telemetry to anomaly detection models trained on historical behavior, which can surface threats that evade static rule sets. For example:

- Detecting previously unseen container execution paths.

- Identifying rare but high-impact kernel module loads.

- Flagging containers communicating with IPs outside expected geographies.
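As a sketch of feeding Falco telemetry into a simple unsupervised detector, the code below reads Falco alerts emitted as JSON lines and keeps only those whose (rule, process) pair appears in very few containers across the fleet, a basic rarity score. It assumes `json_output` is enabled and that each alert carries `rule` and `output_fields`; which keys appear inside `output_fields` depends on each rule's output format, so `proc.name` and `container.id` are assumptions here, and the rule named "Unexpected outbound connection" is hypothetical.

```python
import json
from collections import defaultdict


def _key(alert: dict) -> tuple[str, str]:
    """Group alerts by rule name and the process that triggered them."""
    return alert.get("rule", ""), alert.get("output_fields", {}).get("proc.name", "")


def rare_alerts(json_lines: list[str], max_containers: int = 1) -> list[dict]:
    """Return alerts whose (rule, process) pair appears in at most max_containers containers."""
    alerts = [json.loads(line) for line in json_lines if line.strip()]
    containers: dict[tuple[str, str], set[str]] = defaultdict(set)
    for alert in alerts:
        containers[_key(alert)].add(alert.get("output_fields", {}).get("container.id", ""))
    return [a for a in alerts if len(containers[_key(a)]) <= max_containers]


stream = [
    '{"rule": "Terminal shell in container", "output_fields": {"proc.name": "sh", "container.id": "a1"}}',
    '{"rule": "Terminal shell in container", "output_fields": {"proc.name": "sh", "container.id": "b2"}}',
    '{"rule": "Unexpected outbound connection", "output_fields": {"proc.name": "xmrig", "container.id": "c3"}}',
]
for alert in rare_alerts(stream):
    print(alert["rule"], alert["output_fields"]["container.id"])  # Unexpected outbound connection c3
```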

Some teams are also experimenting with combining eBPF (extended Berkeley Packet Filter) kernel tracing with ML models, often exporting eBPF-derived metrics to Prometheus and visualizing them in Grafana. By analyzing patterns in eBPF traces, ML algorithms can surface early signs of kernel-level exploits or unexpected syscall chains, providing low-level visibility that perimeter and signature-based tools lack.

ML in Multi-Cloud and Hybrid Cloud Environments

In multi-cloud and hybrid environments, consistent visibility and enforcement are especially difficult to achieve. Here, ML adds value by abstracting the complexities of different cloud platforms and providing unified behavioral intelligence across environments.

One multinational enterprise with workloads spread across AWS, Azure, and on-prem Kubernetes clusters employed Palo Alto Networks Prisma Cloud to unify container and cloud workload protection. The ML engine monitored over 50,000 containers and learned normal runtime behavior across multiple cloud contexts. During a red team exercise, an attacker simulated a container escape and attempted lateral movement across regions. The ML engine detected unusual port scanning behavior and unauthorized cross-cluster authentication, issuing high-severity alerts to the SOC team, which acted within minutes.

This example underscores how ML can provide contextual correlation across cloud boundaries, ensuring container security policies scale as fluidly as the environments they protect.

Benefits Realized by Enterprises

Across these real-world implementations, several consistent benefits have been observed:

- Reduced Dwell Time: ML models can detect malicious activity in minutes rather than days or weeks, significantly reducing attacker dwell time.

- Lower False Positives: When models are well tuned to their environment, ML can improve precision over rigid signature-based tools by accounting for contextual nuances, reducing alert fatigue for security analysts.

- Faster Incident Response: Automated risk scoring and forensic modeling accelerate triage, enabling faster remediation and root cause analysis.

- Proactive Defense: By identifying unusual patterns early, ML tools often detect indicators of compromise before an exploit fully executes, preventing damage altogether.

These outcomes translate into improved security postures, lower operational costs, and enhanced regulatory compliance.

In conclusion, the deployment of machine learning in container security is not merely experimental—it is operational, proven, and growing rapidly in scope. From high-compliance sectors like finance and healthcare to fast-moving tech startups and global enterprises, ML is empowering organizations to secure their containerized workloads with intelligence, automation, and speed. These real-world applications demonstrate not just technical feasibility but also strategic necessity, as container-based infrastructures continue to scale in complexity and criticality.

Challenges, Ethics, and the Future of ML in Container Security

While machine learning has introduced a paradigm shift in how organizations approach container security, its implementation is not without significant challenges and ethical considerations. As the technology matures, enterprises must confront issues ranging from technical limitations and operational complexity to concerns about explainability, accountability, and the long-term sustainability of ML in dynamic cloud-native environments. This section critically evaluates these challenges and offers insights into the future trajectory of ML-powered container security solutions.

Technical Challenges and Limitations

Model Accuracy and False Positives

One of the most immediate challenges facing ML in container security is the trade-off between detection sensitivity and specificity. While ML models are capable of flagging anomalous behavior with impressive speed, they can also produce false positives—legitimate behavior misclassified as malicious—especially in environments with fluctuating usage patterns. In a containerized architecture, where services may auto-scale or evolve rapidly, even normal operational deviations can be misinterpreted, leading to alert fatigue and reduced trust in the system.
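The scale of the false-positive problem follows from simple arithmetic: when malicious events are rare, even a small false-positive rate swamps the true detections. The sketch below applies Bayes' rule to hypothetical numbers (one million events a day, ten of them malicious, a detector that catches 99% of attacks and misfires on 0.1% of benign events).

```python
def alert_precision(prevalence: float, tpr: float, fpr: float) -> float:
    """Share of alerts that are real attacks, given base rate, detection rate, and false-positive rate."""
    true_alerts = prevalence * tpr
    false_alerts = (1 - prevalence) * fpr
    return true_alerts / (true_alerts + false_alerts)


# Hypothetical workload and detector characteristics.
events_per_day = 1_000_000
prevalence = 10 / events_per_day
precision = alert_precision(prevalence, tpr=0.99, fpr=0.001)
alerts_per_day = events_per_day * (prevalence * 0.99 + (1 - prevalence) * 0.001)
print(f"{alerts_per_day:.0f} alerts/day, precision {precision:.1%}")  # 1010 alerts/day, precision 1.0%
```

Under these assumptions, roughly 99 of every 100 alerts would be false positives even though the detector looks excellent on paper, which is why context and prioritization matter as much as raw detection rates.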

Data Drift and Model Degradation

Cloud-native workloads are inherently dynamic. Containers are frequently rebuilt, configurations are updated, and services are deployed using different base images and dependencies. This introduces data drift, where the environment that the ML model was trained on no longer reflects the current operational state. Over time, this degrades model accuracy and increases the risk of missed detections or false alarms. Without a robust mechanism for continuous retraining and validation, the effectiveness of ML models can diminish significantly.
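Drift can be measured rather than guessed at. One common metric is the Population Stability Index (PSI), which compares the distribution of a feature at training time with its current distribution. The sketch below computes PSI over a categorical feature; the process-name samples are hypothetical, and the often-quoted reading of PSI (below 0.1 stable, above 0.25 significant drift) is a rule of thumb, not a standard.

```python
import math
from collections import Counter


def psi(expected: list[str], actual: list[str], floor: float = 1e-4) -> float:
    """Population Stability Index between a training sample and a current sample."""
    e_counts, a_counts = Counter(expected), Counter(actual)
    total = 0.0
    for category in set(e_counts) | set(a_counts):
        # Shares are floored so categories absent from one sample do not cause log(0).
        p = max(e_counts[category] / len(expected), floor)  # share during training
        q = max(a_counts[category] / len(actual), floor)    # share now
        total += (q - p) * math.log(q / p)
    return total


# Hypothetical process-name samples from one service, at training time and today.
trained = ["nginx"] * 90 + ["sh"] * 10
current = ["nginx"] * 50 + ["sh"] * 10 + ["python3"] * 40
print(round(psi(trained, current), 2))  # 3.55, far above the 0.25 rule-of-thumb threshold
```

A scheduled job computing such a score per feature could trigger retraining or human review when drift crosses an agreed threshold.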

Resource Constraints

While ML-based detection mechanisms offer enhanced accuracy, they often require significant computational resources for model training, real-time inference, and telemetry analysis. In resource-constrained environments—such as edge deployments or high-density Kubernetes clusters—these resource demands may affect performance or incur additional infrastructure costs. Balancing performance with security insight remains a critical design consideration.

Explainability and Accountability

The “Black Box” Problem

Many ML models, especially those using deep learning, function as black boxes, offering little transparency into their decision-making processes. In security operations, this lack of explainability is problematic. Analysts need to understand why a particular event was flagged as suspicious in order to assess its validity, respond appropriately, and maintain audit trails for compliance purposes.

A flagged container engaging in anomalous network activity may be the result of a legitimate update or a misconfigured service. Without contextual reasoning provided by the model, analysts are left to investigate from scratch, reducing the utility of the ML system and slowing response times. As regulations and guidance such as the GDPR and NIST's AI Risk Management Framework place growing emphasis on transparency and accountability in automated decision-making, explainability becomes not only a technical necessity but also a legal and governance concern.
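For a simple statistical detector, an explanation can be as direct as reporting which features deviated most from their baselines. The sketch below ranks features by z-score so an analyst sees why a container was flagged; the feature names and values are hypothetical, and complex models need dedicated attribution methods (SHAP is one widely used example) rather than this shortcut.

```python
import statistics


def explain(baseline: dict[str, list[float]], observation: dict[str, float],
            top: int = 3) -> list[tuple[str, float]]:
    """Rank features by how many standard deviations the observation sits from its baseline."""
    scores = []
    for feature, history in baseline.items():
        mu = statistics.fmean(history)
        sigma = statistics.pstdev(history) or 1e-9  # guard against constant features
        scores.append((feature, round(abs(observation[feature] - mu) / sigma, 1)))
    return sorted(scores, key=lambda s: s[1], reverse=True)[:top]


baseline = {
    "cpu": [0.20, 0.22, 0.18, 0.20],
    "egress_mb": [1.0, 1.2, 0.8, 1.0],
    "new_processes": [0, 1, 0, 1],
}
flagged = {"cpu": 0.21, "egress_mb": 25.0, "new_processes": 1}
for feature, z in explain(baseline, flagged):
    print(feature, z)  # egress_mb is listed first: outbound traffic drove the alert
```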

Bias in Training Data

Like all AI systems, ML-driven security models are only as good as the data they are trained on. If training data is incomplete, imbalanced, or biased—favoring certain environments or threat types over others—the model may fail to generalize or misidentify edge-case behaviors. In heterogeneous container environments that span multiple clouds, regions, and application architectures, the risk of bias must be carefully mitigated through diverse, representative training datasets.
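The real fix is to collect more diverse data. When one environment dominates the training set, though, reweighting can at least stop it from drowning out the others. The sketch below weights each training sample by the inverse frequency of the environment it came from. This is the same n_samples / (n_groups * count) heuristic that scikit-learn uses for its "balanced" class weights. It also reports environments that fall below a minimum share. The environment labels and the 10% floor are illustrative assumptions.

```python
from collections import Counter


def environment_weights(env_labels):
    """Inverse-frequency sample weights so each environment contributes equally in total."""
    counts = Counter(env_labels)
    n_envs, total = len(counts), len(env_labels)
    return [total / (n_envs * counts[env]) for env in env_labels]


def underrepresented(env_labels, min_share=0.10):
    """Environments whose share of the training data is below `min_share`."""
    counts = Counter(env_labels)
    total = len(env_labels)
    return sorted(env for env, c in counts.items() if c / total < min_share)


# Illustrative labels: where each training sample was collected.
labels = ["aws-us-east"] * 90 + ["gcp-eu-west"] * 8 + ["on-prem"] * 2
weights = environment_weights(labels)
print(underrepresented(labels))  # ['gcp-eu-west', 'on-prem']
```

Reweighting cannot invent behavior the data never captured. Two samples from on-premises clusters, however heavily weighted, are still only two samples, so the coverage report matters as much as the weights.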

Ethical Considerations in Autonomous Threat Response

As ML-powered security tools become more sophisticated, some platforms are introducing autonomous remediation capabilities. These include auto-quarantining containers, revoking credentials, or blocking network connections based solely on ML-detected threats. While this accelerates response times, it also raises ethical and operational concerns.

Risk of Overreach

An autonomous system that incorrectly quarantines a production container can cause service outages, financial loss, or reputational damage. When ML models act without human oversight, the line between proactive defense and overreach becomes blurred. Organizations must strike a balance between automated response and manual validation, particularly in high-stakes industries like healthcare and finance.

Human-in-the-Loop Design

To mitigate these risks, many experts advocate for a human-in-the-loop approach. Here, ML models assist by triaging alerts, generating insights, and recommending actions, while human analysts retain ultimate decision-making authority. This hybrid model not only preserves human accountability but also fosters continual feedback, improving the model’s performance over time.
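A human-in-the-loop policy can be made explicit in code. The sketch below routes each ML detection to one of three outcomes: log only, analyst review, or automatic quarantine. It never auto-acts on workloads in namespaces marked as critical. It also records analyst verdicts so they can later serve as labels for retraining. The thresholds, namespace names, and record format are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    LOG_ONLY = "log_only"
    ANALYST_REVIEW = "analyst_review"
    AUTO_QUARANTINE = "auto_quarantine"


@dataclass
class Detection:
    container_id: str
    namespace: str
    score: float  # model's threat score in [0, 1]


# Hypothetical namespaces where an automated action is too costly to risk.
CRITICAL_NAMESPACES = frozenset({"payments", "patient-records"})


def route(d, auto_threshold=0.95, review_threshold=0.60, critical=CRITICAL_NAMESPACES):
    """Decide who acts on a detection: nobody, a human, or the automation."""
    if d.score < review_threshold:
        return Action.LOG_ONLY
    if d.score >= auto_threshold and d.namespace not in critical:
        return Action.AUTO_QUARANTINE
    # High-stakes or uncertain cases always go to a person.
    return Action.ANALYST_REVIEW


def record_verdict(feedback_log, d, is_threat):
    """Store the analyst's decision so it can be used as a training label later."""
    feedback_log.append({"container_id": d.container_id, "score": d.score, "label": is_threat})


feedback = []
alert = Detection("c-42", "payments", 0.97)
print(route(alert))  # Action.ANALYST_REVIEW
record_verdict(feedback, alert, is_threat=False)
```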

Vendor Lock-In and Interoperability

Another concern is the growing reliance on proprietary ML models developed by cloud and security vendors. While these platforms offer advanced features, they often lack interoperability and exportability. Organizations that invest heavily in one ecosystem may face vendor lock-in, making it difficult to integrate ML security insights across multi-cloud or hybrid deployments.

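To address this, there is a growing push toward open standards and open-source tools with pluggable architectures. Kubescape, Falco, and Open Policy Agent (OPA) are not ML systems themselves. They provide configuration and posture scanning, rule-based runtime detection, and policy enforcement, respectively. Because their rules, policies, and outputs are open, they support community-driven approaches to container security. Organizations can feed their results into ML models that they customize, audit, and share across environments.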
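Open tools help here partly because their output is easy to consume. Falco, for example, can emit each alert as a JSON object. The sketch below turns a stream of such alerts into per-container rule-hit counts that an in-house model could use as features. The field names ("rule", "priority", "output_fields", "container.id") reflect Falco's JSON output as commonly documented, but check them against the Falco version in use. The aggregation itself is our own illustration, not a Falco feature.

```python
import json
from collections import Counter, defaultdict


def falco_rule_counts(json_lines):
    """Aggregate Falco JSON alerts into {container_id: Counter(rule -> hits)}.

    Alerts without a container ID (e.g. host-level events) are grouped under "host".
    """
    per_container = defaultdict(Counter)
    for line in json_lines:
        line = line.strip()
        if not line:
            continue
        alert = json.loads(line)
        fields = alert.get("output_fields") or {}
        container = fields.get("container.id") or "host"
        per_container[container][alert["rule"]] += 1
    return per_container


# Illustrative alerts in the shape of Falco's JSON output (values are made up).
sample = [
    '{"rule": "Terminal shell in container", "priority": "Notice", "output_fields": {"container.id": "3ad7b26ded6d"}}',
    '{"rule": "Terminal shell in container", "priority": "Notice", "output_fields": {"container.id": "3ad7b26ded6d"}}',
    '{"rule": "Write below etc", "priority": "Error", "output_fields": {"container.id": null}}',
]
counts = falco_rule_counts(sample)
print(dict(counts["3ad7b26ded6d"]))  # {'Terminal shell in container': 2}
```

Because the features are plain counts keyed by rule name, the same pipeline could ingest detections from other sources. That kind of portability is what reduces lock-in.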

Future Directions: Toward Intelligent, Self-Healing Security

Despite current limitations, the future of ML in container security holds great promise. Several trends are poised to reshape the landscape in the coming years:

Federated Learning and Privacy-Preserving Models

Federated learning allows ML models to be trained across decentralized data sources, such as different Kubernetes clusters or cloud accounts, without transferring the raw data itself. This approach can improve model generalization while reducing exposure of sensitive data, a major concern in regulated industries.
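The core of federated averaging (FedAvg), the most widely cited federated learning algorithm, is small. Each cluster trains locally and sends back only its model parameters and sample count. A coordinator then averages the parameters, weighted by those counts. The sketch below shows only that aggregation step, over plain lists of floats. It leaves out local training, transport, and the secure aggregation or differential privacy that would be needed for real privacy guarantees.

```python
def federated_average(updates):
    """Aggregate local model parameters from several clusters (FedAvg step).

    `updates` is a list of (parameters, n_samples) pairs. Only parameters and counts
    reach the coordinator; each cluster's raw telemetry stays local.
    """
    if not updates:
        raise ValueError("no updates to aggregate")
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    averaged = [0.0] * dim
    for params, n in updates:
        if len(params) != dim:
            raise ValueError("all clusters must share the same model shape")
        for i, p in enumerate(params):
            averaged[i] += p * n / total
    return averaged


# Hypothetical round: two clusters with different amounts of local data.
cluster_a = ([1.0, 2.0], 100)
cluster_b = ([3.0, 4.0], 300)
print(federated_average([cluster_a, cluster_b]))  # [2.5, 3.5]
```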

Self-Healing Infrastructure

Future ML-powered security systems will not only detect and respond to threats but also enable self-healing capabilities. For instance, if a vulnerability is detected in a container image, the system could automatically trigger a rebuild using a patched base image and redeploy the updated container. These actions could be logged, validated, and rolled back as needed, bringing a new level of resilience and autonomy to cloud-native infrastructure.
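The detect, rebuild, redeploy, and roll-back cycle can be sketched as a small control loop. Everything environment-specific is passed in as a function: how vulnerability is determined, where a patched image comes from, and how health is checked. The names here are therefore hypothetical placeholders, not calls to any real scanner, registry, or Kubernetes API.

```python
from dataclasses import dataclass, field


@dataclass
class Deployment:
    name: str
    image: str
    history: list = field(default_factory=list)  # previous images, for rollback


def heal(dep, is_vulnerable, find_patched_image, health_check, audit_log):
    """One pass of a hypothetical self-healing loop for a single deployment."""
    if not is_vulnerable(dep.image):
        return "ok"
    patched = find_patched_image(dep.image)
    if patched is None:
        audit_log.append(f"{dep.name}: no patched image for {dep.image}, escalating to a human")
        return "escalated"
    previous = dep.image
    dep.history.append(previous)
    dep.image = patched  # stands in for a real redeploy
    audit_log.append(f"{dep.name}: {previous} -> {patched}")
    if not health_check(dep):
        dep.image = dep.history.pop()
        audit_log.append(f"{dep.name}: health check failed, rolled back to {previous}")
        return "rolled_back"
    return "patched"


# Illustrative run with stand-in functions.
log = []
app = Deployment("checkout", "registry.example/checkout:1.0")
result = heal(
    app,
    is_vulnerable=lambda image: image.endswith(":1.0"),
    find_patched_image=lambda image: image.replace(":1.0", ":1.1"),
    health_check=lambda dep: True,
    audit_log=log,
)
print(result, app.image)  # patched registry.example/checkout:1.1
```

The audit log is what makes such automated actions reviewable after the fact.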

Context-Aware Security Orchestration

Emerging platforms are beginning to fuse ML with contextual orchestration engines that consider application state, network topology, business impact, and compliance obligations when responding to threats. This allows for more intelligent prioritization and action, minimizing disruption while maximizing protection.
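A toy version of context-aware prioritization multiplies the model's threat score by factors drawn from deployment context. In the sketch below, the 1 to 5 business-impact scale is hypothetical. So are the 1.5x multiplier for internet exposure and the 1.25x multiplier for regulated data. A real platform would calibrate such weights rather than take them from any product.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    alert_id: str
    model_score: float     # ML threat score in [0, 1]
    business_impact: int   # hypothetical scale: 1 (internal tool) .. 5 (revenue-critical)
    internet_exposed: bool
    regulated_data: bool   # e.g. workload handles payment or health records


def priority(a):
    """Blend model confidence with context; higher means handle sooner."""
    p = a.model_score * a.business_impact
    if a.internet_exposed:
        p *= 1.5
    if a.regulated_data:
        p *= 1.25
    return p


def triage(alerts):
    return sorted(alerts, key=priority, reverse=True)


queue = triage([
    Alert("a", 0.90, 1, False, False),  # confident, but low-impact internal service
    Alert("b", 0.60, 5, True, True),    # less certain, exposed checkout service
    Alert("c", 0.80, 3, False, False),
])
print([a.alert_id for a in queue])  # ['b', 'c', 'a']
```

As a result, a moderately confident alert on an exposed, regulated service outranks a highly confident one on a low-impact internal tool.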

Model-as-a-Service (MaaS)

As the ML ecosystem matures, we can expect to see pretrained security models offered as a service, allowing organizations to subscribe to continuously updated ML capabilities without having to develop or train their own models. This democratizes access to advanced security intelligence and lowers the barrier to adoption for smaller enterprises.

Strategic Recommendations for Enterprises

To prepare for this future, enterprises should consider the following strategic actions:

- Invest in Explainable AI (XAI) to enhance transparency and accountability.

- Prioritize continuous learning and retraining to keep models relevant in dynamic environments.

- Implement hybrid models that combine automation with human oversight.

- Participate in open-source communities to contribute to and benefit from collaborative ML innovation.

- Adopt modular, interoperable tools that support multi-cloud environments and reduce lock-in risks.

Taken together, the integration of machine learning into cloud-native container security is both a technological breakthrough and a strategic frontier. The path is fraught with challenges, ranging from model drift and explainability to ethical dilemmas around autonomous action. These are surmountable with the right design choices, governance frameworks, and cultural alignment. By embracing a forward-looking, responsible approach to ML adoption, organizations can not only secure their containerized workloads but also lay the foundation for resilient, intelligent, and self-defending infrastructure.

Conclusion

As cloud-native technologies continue to redefine the architecture of modern digital infrastructure, securing containerized environments has become an increasingly complex and urgent priority. The microservices-driven paradigm, characterized by high scalability, rapid deployment cycles, and distributed execution, presents a fundamentally different attack surface—one that is too dynamic and multifaceted for traditional security approaches to manage effectively. Against this backdrop, machine learning has emerged not merely as a supplementary tool but as a critical enabler of intelligent, proactive, and adaptive security.

Throughout this analysis, it has become evident that machine learning transforms container security in several ways. ML can establish behavioral baselines, detect anomalies in real time, predict risk, and automate threat prioritization. These capabilities are well aligned with the inherent volatility of containerized workloads. When well tuned, ML-enhanced security tools can shorten detection times, reduce false positives relative to static rules, and speed incident response. Those outcomes translate into stronger operational resilience and better support for regulatory compliance.

Deployments across industries such as finance, healthcare, retail, and technology illustrate the utility of ML in securing containers. ML is proving valuable across the container lifecycle, from identifying insider threats in a Kubernetes cluster to spotting runtime behavior consistent with zero-day exploitation to flagging anomalous activity in a CI/CD pipeline. Open-source projects like Falco supply rule-based runtime detection and telemetry that can feed ML pipelines. Commercial platforms like Prisma Cloud, Lacework, and Sysdig Secure help enterprises operationalize these benefits at scale while fostering greater transparency and automation.

At the same time, the integration of machine learning is not without challenges. Issues related to data drift, false positives, model interpretability, and resource overhead must be addressed thoughtfully to maintain trust in automated systems. Furthermore, ethical concerns—particularly around autonomous remediation and explainability—necessitate a deliberate balance between automation and human oversight. As ML capabilities continue to advance, enterprises must establish governance frameworks that prioritize transparency, accountability, and fairness in algorithmic decision-making.

Looking ahead, the future of ML in container security is poised for even greater innovation. Emerging trends such as federated learning, self-healing infrastructure, and context-aware orchestration will push the boundaries of what is possible in autonomous defense. Meanwhile, developments in explainable AI and model-as-a-service platforms will make advanced ML capabilities more accessible and auditable across organizations of all sizes.

Ultimately, securing cloud-native environments is not a one-time endeavor but a continuous, evolving process. In this dynamic context, machine learning offers a sustainable path forward—one that complements the agility of modern DevOps practices while fortifying container workloads against increasingly sophisticated cyber threats. Organizations that strategically embrace ML-driven security, invest in responsible deployment practices, and integrate these systems into their broader DevSecOps culture will be well-positioned to thrive in the face of accelerating technological change and rising security demands.

By turning data into defense, and complexity into actionable intelligence, machine learning is not only enhancing container security—it is redefining the very foundation of how digital infrastructure is protected in the cloud-native era.

References

- Aqua Security – Cloud Native Application Protection: https://www.aquasec.com/solutions/cloud-native-security/

- Sysdig Secure – Runtime Security for Containers: https://sysdig.com/products/secure/

- Lacework – AI-Driven Cloud Security Platform: https://www.lacework.com/platform/

- Palo Alto Networks Prisma Cloud – Container Security: https://www.paloaltonetworks.com/prisma/cloud

- CNCF Falco – Cloud Native Runtime Security Tool: https://falco.org/

- Open Policy Agent (OPA) – Policy-Based Control for Cloud-Native: https://www.openpolicyagent.org/

- NIST – Secure Software Development Framework: https://csrc.nist.gov/publications/detail/white-paper/ssdf/final

- Kubernetes Security Best Practices – CNCF: https://kubernetes.io/docs/concepts/security/overview/

- Red Hat – Securing Containers in CI/CD: https://www.redhat.com/en/topics/security/container-security

- Google Cloud – Applying ML in Security Operations: https://cloud.google.com/blog/topics/security/machine-learning-for-cybersecurity