How Generative AI Is Transforming Military Surveillance and Global Defense Strategy

The defense sector is undergoing a paradigm shift as generative artificial intelligence (AI) systems are increasingly integrated into military surveillance and strategy. Generative AI refers to algorithms (typically large neural networks) that produce new content such as text, images, or plans. In defense, it is being applied to tasks ranging from drafting battle plans and simulating combat scenarios to helping control autonomous drones in real time. Militaries around the world are investing heavily in these technologies to gain a strategic edge. In 2024, the global market for military AI was valued at nearly $10 billion, and it is projected to roughly double by 2029. This surge in funding and interest underscores how central AI, and generative AI in particular, has become to modern warfare.

Generative AI’s impact spans from the tactical level – improving reconnaissance and target identification – to the strategic level, where it can assist commanders in wargaming and decision-making. At the same time, these advancements raise crucial ethical and geopolitical questions. How do autonomous AI weapons fit within the laws of war? Could an AI arms race destabilize global security? Defense establishments and international institutions are now grappling with regulatory frameworks to ensure these powerful tools are used responsibly. In this post, we delve into real-world case studies of generative AI in military applications, explore how they are shaping defense strategies globally, and discuss the far-reaching implications for ethics and governance.

Generative AI on the Battlefield: Autonomous Drones and Surveillance

Generative AI and related machine-learning techniques are reshaping battlefield surveillance and the use of autonomous systems. One prominent application is in autonomous drones and unmanned vehicles, which use AI to operate with minimal human control. During the ongoing war in Ukraine, for example, swarms of AI-enabled drones have been deployed for reconnaissance and strikes. A Ukrainian startup called Swarmer developed edge-AI software (“Styx”) that can command a swarm of drones to scout and attack targets collaboratively. In trials, about 10 drones were networked as an autonomous team; if one drone was shot down, the rest automatically regrouped and attacked from different angles without needing new orders. The system relies on on-board AI algorithms that give each drone situational awareness and the ability to react in real time. The goal is to scale this to swarms of 100 or more drones, which could overwhelm defenses by acting in concert faster than a human operator could direct them. As Swarmer’s CEO put it, “drone swarming is the next big thing…it’s about a group of autonomous drones” working together dynamically.

Such AI-driven swarms are fast and adaptive, highlighting a key advantage of AI autonomy in combat. Decisions that once took a human command chain minutes or hours (detecting a threat, deciding on a response, coordinating multiple units) can now be made by the AI in seconds. “This is what makes the technology so powerful if you have it – and so scary if you don’t,” said Swarmer’s CEO. Indeed, multiple nations are pursuing similar drone swarm capabilities. In Germany, defense firms collaborated to demonstrate swarms of AI-controlled drones for the Bundeswehr, in which the drones could perceive their environment, share target information, and even analyze enemy intent collectively. France’s Thales has also built an AI swarm architecture that enables drones to autonomously prioritize missions and respond to threats as a group. And under the AUKUS security alliance, the UK’s BlueBear AI demonstrated a diverse drone swarm controlled by a single operator. These examples show a clear trend: AI is enabling highly autonomous robotic units, from small quadcopters to unmanned fighter jets, that dramatically extend surveillance reach and reaction speed on the battlefield.

Another facet of surveillance that generative AI enhances is intelligence processing. Modern militaries collect vast amounts of sensor data (satellite imagery, radar scans, signal intercepts, and more), and generative AI helps analyze and interpret this deluge. For instance, AI models can generate composite reconnaissance images or fill gaps in coverage to improve target detection. Generative adversarial networks (GANs) are used to create realistic synthetic images that augment the training data for object-recognition algorithms: by training on both real and AI-generated imagery of, say, enemy vehicles or installations, a detection model becomes more robust across varied conditions. AI can also enhance low-quality surveillance feeds; a generative model can produce a sharper estimate of a blurry infrared frame, making it easier for analysts (or automated systems) to discern what is there. Because such detail is inferred by the model rather than directly observed, it has to be checked against the original data before anyone acts on it. Used that way, these capabilities act as a force multiplier for surveillance: each collected image or signal can be analyzed more deeply, and even “imagined” into multiple possibilities, so fewer sensors or sorties may be needed.

Beyond imagery, generative AI aids signals and media intelligence. The U.S. Marine Corps recently experimented with generative AI tools during a long deployment in the Pacific. One tool automatically sifted through foreign news media, flagged every mention of the Marine unit, and produced concise summaries for commanders. This AI-generated media analysis gave officers rapid insight into how their operations were perceived globally, without requiring intelligence staff to monitor dozens of news sources by hand. Marines also used generative AI to summarize daily situation reports and operational updates, easing information overload. According to Major Victor Castro of the 15th Marine Expeditionary Unit, the unit would feed in raw reports and the generative model would produce useful summaries, helping staff “facilitate” and speed up internal communications. These real-world trials underscore the practical value: generative AI can rapidly condense and contextualize battlefield data, whether sensor readings or newsfeeds, allowing human decision-makers to grasp the situation faster and more accurately.

Perhaps the most eye-opening use of AI on the battlefield has been in autonomous air combat. AI agents trained with deep reinforcement learning (a technique in which the AI learns effective tactics through millions of simulated engagements) have now been tested in real military aircraft. In 2024, the U.S. Air Force demonstrated an AI fighter pilot aboard a modified F-16, the X-62A VISTA. The AI, developed by defense company Shield AI and building on DARPA’s AlphaDogfight Trials and Air Combat Evolution (ACE) program, autonomously flew the jet and executed tactical maneuvers in a dogfight against a human-piloted F-16. Secretary of the Air Force Frank Kendall personally flew in the AI-driven plane (with a safety pilot on board) as the autonomous agent engaged its human opponent. This marked a milestone: an AI policy trained with reinforcement learning successfully transferred from the simulator to real-world flight. Shield AI’s system had learned aggressive air-combat maneuvers through trial and error in simulation, in effect generating its own maneuvering strategies rather than following ones its programmers had explicitly written. The company’s EVP Brett Darcey said they had proved the AI “can fly and it is successful… and the way we approach the problem is scalable”, pointing to future integration on other platforms. Such AI “pilots” could eventually control uncrewed combat jets or drone swarms in coordination with crewed fighters, reacting to threats at machine speed.

The implications for surveillance and engagement are profound. An autonomous wingman drone equipped with generative AI can scout ahead of manned aircraft, identify targets or hazards, and even engage enemies directly with onboard weapons. All the while, it can generate adaptive tactics – for example, performing evasive maneuvers or coordinated flank attacks without waiting for human direction. This capability has been likened to a battlefield “co-pilot”: always present, scanning, and ready to act. It dramatically shortens the observe–orient–decide–act (OODA) loop in combat. Instead of relaying sensor data up a chain of command for analysis and orders, the AI agent can interpret the data and generate an action immediately (like jamming an enemy radar or repositioning for advantage), then inform the human operator of what it’s done. Militaries envision using this not just in the air but on land (autonomous vehicles/robots) and sea (uncrewed submarines or ships), fundamentally changing surveillance and response in all domains.

It’s worth noting that despite these leaps in autonomy, keeping humans “in the loop” remains a doctrinal requirement for most nations. In practice, that means AI might generate target recommendations or even initiate an attack run, but a human supervisor authorizes the final trigger pull – at least with current policy. In the Marine Corps example, AI summarized intel and suggested insights, but human intelligence officers still made the final interpretations and decisions. Likewise, the AI F-16 had a safety pilot ready to intervene. This pairing of AI and human expertise is often described as centaur-like teaming: the AI provides superhuman speed and analytical breadth, while the human ensures judgment, ethics, and strategic context. As we’ll see later, finding the right balance between autonomous AI initiative and human control is one of the central ethical challenges of this revolution.

AI-Generated Simulations and Wargaming

Generative AI is also transforming how militaries train, plan, and simulate conflicts. Traditional military planning often relies on experienced officers to imagine scenarios and manually war-game different strategies – a process that is time-consuming and limited by human cognitive bandwidth. Today, large-scale simulations augmented by AI can generate and play out thousands of battlefield scenarios far faster than humans, providing invaluable insights.

A vivid illustration comes from the Johns Hopkins Applied Physics Laboratory (APL), where researchers integrated large language models (LLMs) into a military simulation environment called AFSIM. In a typical computerized wargame, human analysts script the behavior of friendly and enemy forces and then run the simulation to see the outcomes. The APL team instead had an AI serve as the “brain” for some of the forces: given a mission and parameters, the LLM would generate operational plans (concepts of operations) for how units should behave in each scenario. The results were striking: the AI-driven entities explored strategies their human designers had not anticipated, and did so much faster. Combining an LLM with the simulation framework compressed the wargame planning-and-execution cycle from months to a matter of weeks; in one demonstration, the team got a new military scenario up and running in under two weeks, whereas traditional scenario design might take several months.

This speed-up means military planners can iterate through many more possibilities than before. Instead of a handful of tabletop exercises, an AI-enabled system might run hundreds of simulated battles varying parameters each time – akin to Monte Carlo analysis for warfare. For example, the Navy could fight an AI-simulated adversary “over and over — until it finds the key to victory,” as one Naval Institute article noted about AI-generated battle simulations. The AI can quickly tweak tactics, explore unconventional maneuvers, or stress-test strategies under different conditions (weather, enemy posture, etc.), essentially generating a rich spectrum of possible futures. Commanders reviewing these simulation outputs gain a deeper understanding of which strategies are robust and which fail under certain circumstances. This is a fundamental shift: war planning moves from relying on a few educated guesses to being informed by probabilistic analysis of thousands of AI-simulated engagements.
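
The Monte Carlo analogy can be made concrete with a toy model. The sketch below is a hypothetical illustration, not how AFSIM or any fielded wargaming tool works: it uses Lanchester’s square law, a classic textbook model of attrition, as the “battle”, and re-samples uncertain enemy parameters on every run to estimate how often each of two notional courses of action succeeds. All force sizes, effectiveness values, and names (`Force`, `fight`, `win_probability`) are invented for the example.

```python
import random
from dataclasses import dataclass


@dataclass
class Force:
    strength: float       # number of combat units (notional)
    effectiveness: float  # enemy units removed per unit per time step (notional)


def fight(blue: Force, red: Force, dt: float = 0.5, max_steps: int = 2_000) -> bool:
    """Simulate one engagement under Lanchester's square law; True if blue prevails."""
    b, r = blue.strength, red.strength
    for _ in range(max_steps):
        if b <= 0 or r <= 0:
            break
        # Each side's losses are proportional to the size of the opposing force
        # (simple Euler step, with both sides updated simultaneously).
        b, r = b - red.effectiveness * r * dt, r - blue.effectiveness * b * dt
    return b > r


def win_probability(blue: Force, red_strength: float, red_eff_range: tuple[float, float],
                    trials: int = 1_000, seed: int = 0) -> float:
    """Monte Carlo estimate of blue's win rate when red's parameters are uncertain."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(trials):
        # Re-sample the uncertain inputs (posture, readiness, ...) on every run.
        red = Force(strength=red_strength * rng.uniform(0.8, 1.2),
                    effectiveness=rng.uniform(*red_eff_range))
        wins += fight(blue, red)
    return wins / trials


if __name__ == "__main__":
    # Two hypothetical courses of action: more lower-quality units vs fewer better ones.
    coas = {"mass": Force(120, 0.010), "quality": Force(90, 0.018)}
    for name, blue in coas.items():
        p = win_probability(blue, red_strength=100, red_eff_range=(0.01, 0.02))
        print(f"{name}: estimated win probability {p:.2f}")
```

The design choice worth noting is repetition with randomized inputs: one run shows a single outcome, while a thousand runs estimate how robust a course of action is across plausible conditions. A real wargaming system would replace this two-equation model with a full simulation and draw its uncertain inputs from intelligence-informed distributions rather than arbitrary ranges.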

One notable project involved a three-player wargame scenario – two allied “blue” forces and one “red” enemy – where any of the three could be controlled either by a human or an AI agent. This flexibility allowed researchers to pit AI vs AI, AI vs human, or hybrid teams. The AI, by generating moves for its side, effectively becomes a tireless adversary (or ally) for training purposes. Military officers can practice against AI opponents that continuously learn and change their tactics, a much more dynamic training partner than repetitive scripted exercises. The APL team’s long-term vision is a user-friendly tool where commanders at various levels can run such AI-powered war games on demand on their own computers. Instead of assembling large in-person exercises that occur infrequently, leaders could routinely test their plans through “virtual repetitions” powered by generative models. This democratizes wargaming insights, pushing them out to more echelons of command.

Crucially, these AI systems are being designed with explainability in mind, given the high stakes. Analysts are working on features to interpret and explain the AI’s simulated actions, essentially translating why an AI made certain tactical decisions. By building in a degree of transparency (for instance, highlighting that “the AI attacked the supply line because it inferred the enemy’s fuel was low”), they hope to make AI-generated strategies more understandable and trustworthy to human decision-makers. This addresses an obvious source of skepticism: military leaders won’t adopt AI suggestions blindly; they need confidence in the reasoning. Bob Chalmers, a lead on the project, explained that a commander typically asks officers for three courses of action, which they then war-game; an AI could play that role while exploring thousands of variations instead of just a few. With proper explainability, future commanders might have AI staff officers proposing genuinely innovative plans, along with their rationale, vastly expanding the strategic options considered.

Beyond planning, training and simulation environments are becoming more realistic with generative AI. AI can populate virtual battlefields with believable behavior (civilian crowds, enemy patrols, or environmental events), all generated on the fly. In cyber defense training, generative AI is used to simulate cyberattacks based on patterns learned from past incidents, giving defenders a lifelike sparring partner. For mission rehearsal, there is the concept of a “digital twin” battlefield: generative models help create rich 3D replicas of real terrain and then simulate how battles might unfold there, including unpredictable elements like insurgent ambush tactics or civilian movements. This was not easily achievable with older, rule-based simulation engines alone.

Case studies from 2023-2024 highlight these advancements. The Marine Corps University experimented with a custom large language model for AI “generative wargaming” – essentially an LLM that can write scenario injects and potential adversary responses in exercises. Meanwhile, the U.S. Special Competitive Studies Project (SCSP), an independent defense think tank, reported that training AI on military doctrine and intel to draft battle plans is already “an active area of experimentation”. Their analysts emphasize that the Pentagon should explore these possibilities so as not to fall behind rivals. In September 2023, SCSP experts told Breaking Defense that nothing in theory prevents chatbots from summarizing secret intelligence or writing detailed operations orders – it’s mostly a matter of careful development and ensuring human oversight. In other words, if generative AI can plan your vacation, as one quip went, it can plan a military mission – you just need to train it on the right data and constraints.

The Pentagon is indeed moving to harness these tools for planning and training. In early 2024, the Department of Defense’s Chief Digital and AI Officer launched a Generative AI initiative to accelerate such capabilities across “15 warfighting and enterprise use cases ranging from command and control and decision support to… cybersecurity”. This includes developing AI co-planners that assist with contingency planning, logistics, and even drafting after-action reports. The aim is not to replace human judgment, but to drastically cut down the toil in producing and analyzing plans. An AI model might instantly generate multiple draft operation plans (OPLANs) for a given mission, each with pros/cons annotated, which human officers can then refine and approve. This could save enormous time in crisis situations where planning speed is critical.

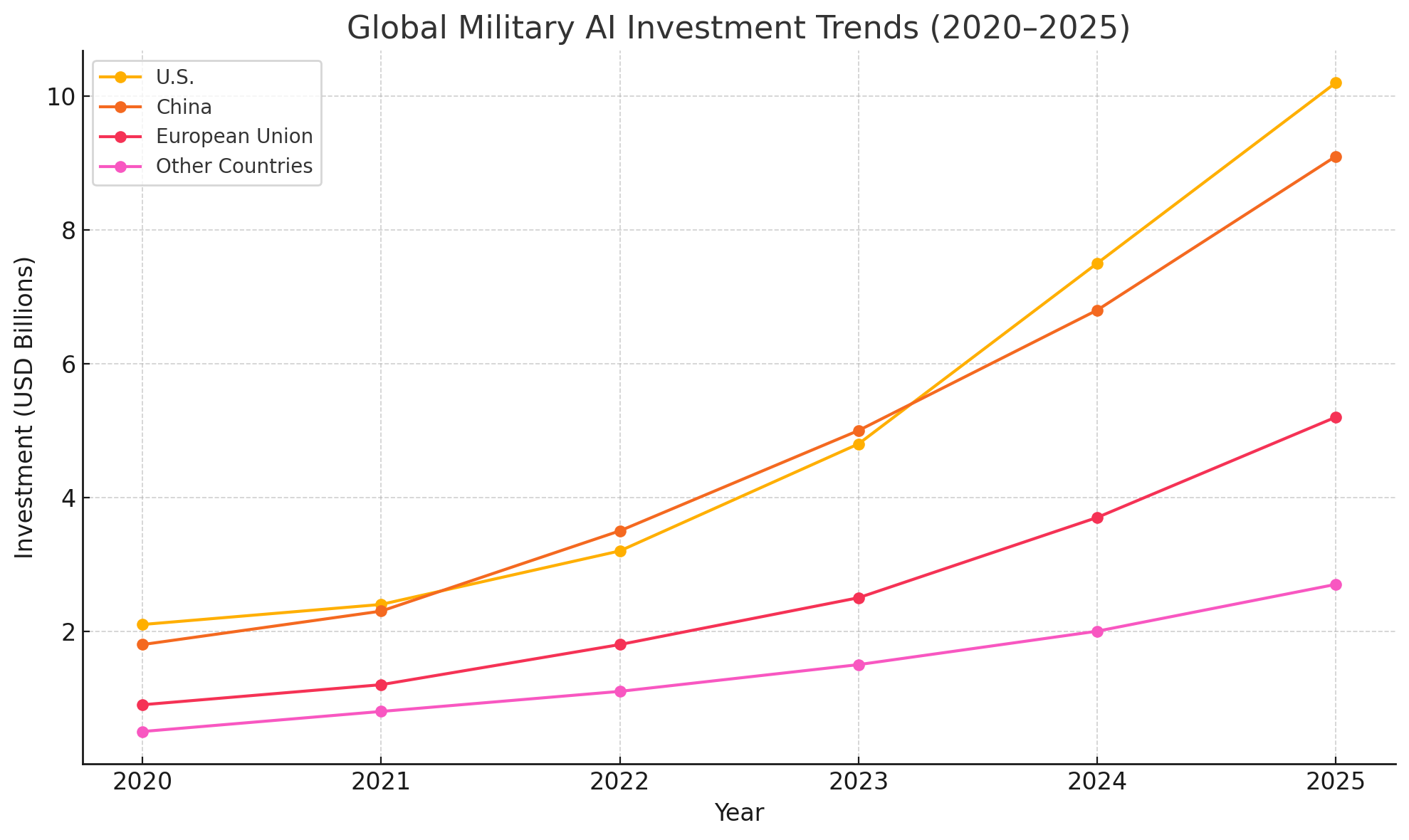

To gauge the growing adoption of AI in defense, consider the U.S. military’s spending on AI. According to a Brookings analysis, the DoD’s commitments to AI-related contracts nearly tripled, from around $190 million in 2022 to $557 million in 2023. The accompanying chart illustrates this surge in a single year as the Pentagon moved from small-scale AI experiments to larger implementations.
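
As a quick check on the word “tripled”, the two cited figures (in millions of dollars) give:

$$
\frac{557}{190} \approx 2.93,
\qquad
\frac{557 - 190}{190} \approx 1.93 \;(\text{about } 193\%)
$$

So the one-year increase was roughly 193%, just short of a full threefold rise.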

This jump in investment reflects, at least in part, early successes in simulation and other applications. When the full potential value of multi-year AI contracts is included, the DoD’s AI outlays could exceed $4 billion, a 1,200% increase in a year. The military is effectively betting that generative AI will be a cornerstone of next-generation training and operations, and it is racing to integrate these systems into its strategic processes.

Strategic Planning and Decision Support with Generative AI

Generative AI is not only useful on the tactical battlefield or in simulated exercises – it has begun to influence high-level military strategy and decision-making. By analyzing vast data and generating insights, AI systems serve as cognitive aids to human commanders and policy-makers. In the realm of intelligence and planning, large language models and other generative tools act as “strategic copilots.”

One immediate use is in intelligence analysis and fusion. Military and defense intelligence agencies face the monumental task of piecing together information from spies, satellites, cyber intercepts, and open sources. Generative AI can help connect the dots by ingesting all this information and generating summaries, threat assessments, or even predictive scenarios. For example, an AI might review thousands of raw intelligence reports and produce a concise briefing highlighting emerging risks or recommended actions. The Marine expeditionary unit that used AI to summarize daily situation reports offered a microcosm of this. Scaled up to a national intelligence center, AI models fine-tuned on military intelligence could rapidly draft analyses that would take human analysts weeks, identifying patterns (like unusual troop movements or new propaganda narratives) that humans might miss. SCSP has likewise highlighted experiments in which generative AI is trained on classified military data and doctrine specifically to draft operational plans and analytic reports. The goal is not to hand decision-making entirely to AI, but to let human strategists explore a wider solution space with AI-generated options at hand.

Consider strategic war planning. Developing a complex campaign plan (say, a multi-domain response to a potential crisis) can take planners months, with input coordinated from every branch of the armed forces. AI assistance can speed up compiling these plans. A generative model can be given the commander’s intent and key objectives and then draft detailed options for force deployment, sequencing of operations, and logistics support, in natural language or as annotated maps. Indeed, “Generative AI will improve all aspects of military capabilities… across the entire DoD”, notes one industry analysis, from generating operations orders to parsing intelligence data. Early prototypes have shown that an AI fed with prior operational plans and doctrine can produce a plausible draft plan for a new scenario, which human experts can then adjust. In the best case, the AI handles much of the first-draft staff work in minutes, and officers concentrate on refinement and judgment.

Another burgeoning capability is using AI for course-of-action generation and decision gaming at the strategic level. This is related to wargaming but in a more abstract, planning-focused sense. For instance, if a crisis emerges, senior officers can ask an AI system to generate several possible responses (diplomatic posturing, limited strike, full intervention, etc.) complete with predicted outcomes or risks for each. The AI, having been trained on historical data and current intelligence, might highlight an unconventional solution or a risk factor that the human team hadn’t fully considered. By having these AI-suggested courses of action (COAs), leaders can weigh more alternatives. Importantly, the AI can also play devil’s advocate by stress-testing each COA: generating adversary reactions or second-order effects (like “if we move troops to border X, AI predicts adversary will mobilize in region Y and cyber-attacks might spike”). This helps strategists foresee cascade effects of their decisions.

Such capabilities are not purely theoretical. The U.S. Indo-Pacific Command and European Command are reportedly among the first to receive new generative AI tech for operational planning support. These tools likely assist with fusing multi-source intelligence for their theaters and generating contingency responses to various potential flashpoints. On the other side of the world, China’s People’s Liberation Army (PLA) has been pursuing what it calls “intelligentized warfare,” incorporating AI at all levels from surveillance to strategy. Chinese military writings discuss AI for rapid decision support, such as automatically identifying the enemy’s center of gravity or vulnerabilities by processing all available data through AI models. In essence, both great power militaries see algorithmic decision support as key to faster and more informed command decisions in any future conflict.

An interesting use case of generative AI in strategy is in logistics and maintenance planning – often an unheralded aspect of defense but absolutely crucial. Modern militaries have extremely complex supply chains and maintenance cycles for equipment. Generative AI can optimize these by predicting needs and generating plans to meet them. For example, an AI system could take operational plans and generate a detailed sustainment plan: how many tons of fuel, ammunition, parts, and rations are needed each day, and which routes or depots should supply them. It can dynamically adjust these plans as variables change (combat intensity, damaged infrastructure, etc.), regenerating new logistics schedules on the fly. This was alluded to by a Marine Corps commander who said their use of AI was just the “tip of the iceberg” and that he sees significant opportunity in using AI to better prepare and supply expeditionary units deployed abroad. If AI can ensure the right supplies are at the right place at the right time, commanders can focus more on the fight and less on the resupply.
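
The arithmetic underneath such a sustainment plan can be sketched in a few lines. This is a minimal, hypothetical illustration: the unit types, per-unit consumption rates, and the assumption that fuel and ammunition scale linearly with combat intensity are all invented for the example, and a generative system would sit on top of calculations like these rather than replace them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UnitType:
    """Hypothetical per-unit daily consumption, in tons, at baseline intensity."""
    name: str
    count: int
    fuel_t: float
    ammo_t: float
    rations_t: float


def daily_requirements(units: list[UnitType], intensity: float) -> dict[str, float]:
    """Total daily tonnage by supply class.

    Assumes fuel and ammunition scale linearly with combat intensity
    (1.0 = baseline) while rations do not.
    """
    totals = {"fuel": 0.0, "ammo": 0.0, "rations": 0.0}
    for u in units:
        totals["fuel"] += u.count * u.fuel_t * intensity
        totals["ammo"] += u.count * u.ammo_t * intensity
        totals["rations"] += u.count * u.rations_t
    return totals


# Invented figures, for illustration only.
EXAMPLE_UNITS = [
    UnitType("vehicle company", count=3, fuel_t=20.0, ammo_t=8.0, rations_t=1.5),
    UnitType("aviation detachment", count=1, fuel_t=60.0, ammo_t=5.0, rations_t=0.5),
]

if __name__ == "__main__":
    # A three-day plan driven by a (hypothetical) intensity forecast.
    for day, intensity in enumerate([1.0, 1.5, 0.8], start=1):
        print(day, daily_requirements(EXAMPLE_UNITS, intensity))
```

Regenerating the plan when the forecast changes is then just a recomputation with new inputs.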

Consider a hypothetical example: the Pentagon’s Joint Logistics Operations Center might use a generative model to simulate various disruption scenarios (like a key port being knocked out) and have the AI generate contingency supply routes and methods (perhaps shifting to airlift or alternate ports) in seconds. Previously, such contingency planning would have required a war-game or staff drill lasting days. With AI assistance it becomes a quick analysis, which humans then validate and implement if needed. This kind of resilience planning is increasingly important because military supply lines could be targeted in future conflicts.
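
The rerouting step in this scenario can be illustrated with a classical shortest-path search. To be clear, this is Dijkstra’s algorithm, not a generative model, and the network, node names, and route costs below are hypothetical; the point is only to show how removing a disabled port from the network yields the next-best route.

```python
import heapq
from typing import Optional

# Hypothetical supply network: edge weights are notional route costs.
NETWORK = {
    "depot": {"port_a": 10, "port_b": 14, "airfield": 3},
    "port_a": {"forward_base": 12},
    "port_b": {"forward_base": 15},
    "airfield": {"forward_base": 30},
}


def shortest_route(graph: dict[str, dict[str, float]], start: str, goal: str,
                   blocked: frozenset = frozenset()) -> Optional[tuple[float, list[str]]]:
    """Dijkstra's algorithm; nodes in `blocked` (e.g. a disabled port) are skipped."""
    dist = {start: 0.0}
    prev: dict[str, str] = {}
    queue = [(0.0, start)]
    while queue:
        d, node = heapq.heappop(queue)
        if node == goal:
            # Walk predecessor links back to the start to recover the route.
            path = [node]
            while node in prev:
                node = prev[node]
                path.append(node)
            return d, path[::-1]
        if d > dist.get(node, float("inf")):
            continue  # stale queue entry; a shorter path was already found
        for nxt, cost in graph.get(node, {}).items():
            if nxt in blocked:
                continue
            nd = d + cost
            if nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                prev[nxt] = node
                heapq.heappush(queue, (nd, nxt))
    return None  # goal unreachable


if __name__ == "__main__":
    print(shortest_route(NETWORK, "depot", "forward_base"))
    print(shortest_route(NETWORK, "depot", "forward_base", blocked=frozenset({"port_a"})))
```

A generative planner might propose routes or methods outside any predefined network, but a deterministic search like this remains a useful cross-check on the options it suggests.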

Generative AI is also edging into policy and geopolitical analysis for defense leaders. National security decisions often involve anticipating an adversary’s moves or the geopolitical fallout of an action. Advanced AI models that ingest diplomatic cables, economic data, and past conflict outcomes might generate assessments like, “If Country A invades territory B, AI predicts with 80% confidence that Country C will impose sanctions and mobilize its forces as a deterrent.” While not infallible, such AI-generated analysis offers another data point for officials, one derived from patterns in vast historical datasets. Think tanks and defense analysts have begun to use AI in this fashion to model great-power escalations and outcomes in a semi-automated way, to assist human wargaming of scenarios like a South China Sea clash or a Baltic crisis.

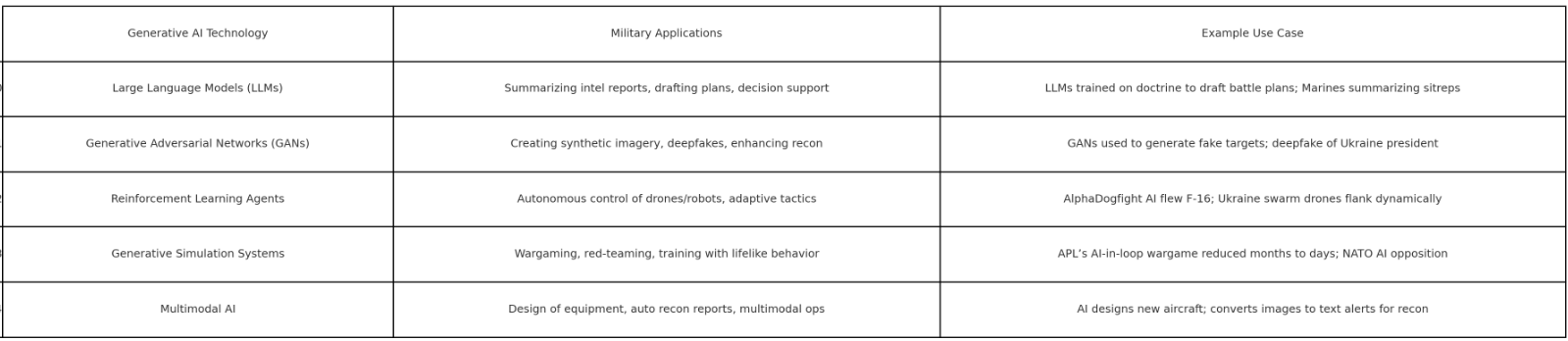

To summarize the broad range of generative AI technologies and their military applications, the accompanying table provides a structured comparison of key types and use cases:

Generative AI spans a spectrum from text-generation to image-creation to autonomous decision agents – together enhancing many aspects of military operations.

It must be emphasized that in all these applications, human oversight remains pivotal. The consensus in democratic militaries is that AI can recommend or even take preliminary actions, but a human commander bears responsibility and must have the ability to intervene. The U.S. Department of Defense, for instance, follows ethical AI principles requiring that AI usage be responsible, equitable, traceable, reliable, and governable. In practice, this means any AI system should have a clear chain of human accountability and fail-safes if it starts to behave undesirably. In the AI-enabled F-16 test, a human pilot could disconnect the AI if needed. In drone swarms, typically a human operator sets objectives or “vetoes” strikes for compliance with rules of engagement. As generative AI’s role in strategy grows, new doctrines like “human-on-the-loop” (continuous monitoring of autonomous systems) are being developed to make sure that speed and autonomy do not come at the cost of control or ethics.
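
The “traceable” and “governable” principles can be illustrated with a simple approval gate. This is a hypothetical sketch (the class names, statuses, and audit-log format are invented, not drawn from any DoD system): AI-generated recommendations are queued, nothing executes until a named human approves it, and every step is recorded with a timestamp and the responsible actor.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Recommendation:
    rec_id: str
    summary: str      # what the AI proposes
    rationale: str    # the model's stated reasoning, kept for human review
    status: Status = Status.PENDING
    decided_by: Optional[str] = None


@dataclass
class ApprovalGate:
    """Queues AI recommendations until a named human decides; logs every step."""
    audit_log: list = field(default_factory=list, init=False)
    _pending: dict = field(default_factory=dict, init=False)

    def submit(self, rec: Recommendation) -> None:
        self._pending[rec.rec_id] = rec
        self._log("submitted", rec.rec_id, "model")

    def decide(self, rec_id: str, approver: str, approve: bool) -> Recommendation:
        rec = self._pending.pop(rec_id)  # KeyError if unknown or already decided
        rec.status = Status.APPROVED if approve else Status.REJECTED
        rec.decided_by = approver
        self._log(rec.status.value, rec_id, approver)
        return rec

    def execute(self, rec: Recommendation) -> str:
        # Refuse anything that lacks an explicit, attributable human approval.
        if rec.status is not Status.APPROVED or rec.decided_by is None:
            raise PermissionError(f"{rec.rec_id} has not been approved by a human")
        self._log("executed", rec.rec_id, rec.decided_by)
        return f"executing {rec.rec_id}"  # stand-in for the real action

    def _log(self, event: str, rec_id: str, actor: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat()
        self.audit_log.append((stamp, event, rec_id, actor))
```

Keeping the human decision and the audit trail in the same component makes it harder for an automated path to bypass review, which is the goal the principles describe.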

Ethical, Geopolitical, and Regulatory Implications

The rise of generative AI in military affairs brings not only opportunities but also significant ethical and geopolitical challenges. As nations deploy AI-guided weapons and decision systems, questions arise about accountability, legality, and the risk of an AI-fueled arms race. Here, we examine these implications and the ongoing efforts (and gaps) in governance.

Ethical considerations are at the forefront. A key concern is the prospect of lethal autonomous weapons systems (LAWS) – often dubbed “killer robots” – that could make life-and-death engagement decisions without direct human judgment for each strike. Generative AI is a step toward that reality: an AI that can independently generate a target list and deploy a drone swarm to attack, for instance. Such autonomy challenges the established norms of human responsibility in warfare. If an AI makes a mistake – say, misidentifies a civilian vehicle as hostile and engages it – who is accountable? The developer, the commander, or the machine itself? Current laws of war and military justice systems do not have clear answers, since they presume human intent behind every attack. This has led to calls for ensuring meaningful human control over any AI that can use lethal force. Many ethicists argue that machines should not have the power to decide to kill a human, as this undermines the moral agency and accountability frameworks that underpin international humanitarian law.

Relatedly, the possibility of AI errors (“false positives” in targeting, or an AI misreading an ambiguous situation) causing unintended escalation is worrying. An AI might interpret an innocuous movement as hostile and fire, potentially sparking conflict without a deliberate decision by leadership. This could happen at machine speeds, giving humans little chance to intervene. Geopolitical instability could be heightened by such accidents. Researchers Kanaka Rajan and colleagues caution that AI-powered weapons, as they grow more widespread, “may lead to geopolitical instability” and could trigger conflicts or arms races that also undermine trust in civilian AI research. Essentially, an AI mishap in a tense standoff could be the 21st-century version of an accident that ignites a war – except faster and potentially harder to de-escalate.

There is also the risk of proliferation. Unlike nuclear technology, which requires rare materials and complex industrial capacity, AI software can spread easily. Once cutting-edge military AI algorithms are developed, they could be copied, stolen, or eventually open-sourced. This means even smaller states or non-state actors might gain access to advanced generative AI for malicious purposes. For example, terrorist groups could use open-source generative models to program drone attacks or create deepfake videos of military commanders to sow confusion. The barrier to entry for dangerous AI is lower than for traditional WMDs. The competitive landscape compounds the problem: unlike past arms races confined to governments, the generative AI race includes private tech giants building ever more powerful models (often with military applicability) without clear international rules. The Carnegie Endowment has described this corporate contest for investment in large foundation models as a “trillion-dollar arms race in generative AI”, one that blurs the line between economic and military competition.

We are essentially in a situation where governance lags behind technology. As of 2025, there is no comprehensive international treaty or framework specifically governing military AI use. This “perilous regulatory void” leaves a powerful technology largely unchecked on the global stage. The United Nations has started to take steps: in December 2024, the UN General Assembly adopted a resolution on “Artificial intelligence in the military domain and its implications for international peace and security.” The UN has invited member states and organizations to submit views on the opportunities and challenges of military AI, looking beyond lethal weapons alone. This could be a precursor to formal discussions or a working group on AI in warfare. However, these are early days: the international community is still largely at the stage of information-gathering and norm advocacy.

Some non-binding agreements and principles have emerged. In February 2023, the U.S. launched the Political Declaration on Responsible Military Use of AI and Autonomy, which 51 nations had endorsed by early 2024. The declaration sets out guidelines such as ensuring AI use is consistent with international law, keeping humans accountable for decisions, and developing AI with appropriate safety measures. It explicitly encourages maintaining human control over weapons and decision systems. While it was a significant diplomatic move to acknowledge the issue, the declaration is voluntary, not a legally binding treaty. Critics, including advocacy groups like the Campaign to Stop Killer Robots, argue that such declarations, while positive, are “feeble” compared to a binding ban or regulation on autonomous weapons. They fear it might provide a fig leaf for continued AI weapon development without strong oversight mechanisms.

Nations have also set their own policies. The U.S. Department of Defense’s Directive 3000.09 governs autonomy in weapon systems: it requires that such systems allow commanders and operators to exercise appropriate levels of human judgment over the use of force, and it mandates senior-level review before an autonomous weapon system is developed or fielded. First issued in 2012 and updated in January 2023, the directive has guided U.S. AI weapons development, and the 2023 declaration extends similar principles internationally. Similarly, NATO released a set of AI principles in 2021 committing to lawful, responsible use of AI in defense. China has publicly supported negotiations on preventing an AI arms race (at least rhetorically in UN forums), but at the same time is heavily investing in military AI. Russia has been more resistant to binding limits, emphasizing potential military advantages. Global consensus is therefore hard to come by, much as in early nuclear arms control debates, because each country weighs the strategic risk of constraints against the risk of uncontrolled competition.

Geopolitically, an AI arms race is underway, and generative AI is one of its most active fronts. U.S. defense officials frequently point to China’s rapid advances in AI as a spur for American efforts. “AI adoption by adversaries like China, Russia… is accelerating and poses a significant national security risk,” warned Radha Plumb, the Pentagon’s Chief Digital and AI Officer, in late 2024. This perception, that whoever leads in AI will hold a military edge, is driving a competition reminiscent in some ways of the Cold War. Unlike the nuclear race, however, this one has a strong commercial and economic dimension, because cutting-edge AI research happens largely in the private sector. As a TIME report noted, private companies spend vastly more on AI R&D than governments do. Defense establishments must therefore collaborate with tech companies or risk falling behind, and any regulatory effort has to account for civilian AI advances, not just military labs.

An AI arms race could cause instability if nations rush unproven systems into service for fear of falling behind. This safety “race to the bottom” is a real concern: if Country A deploys semi-autonomous weapons, Country B might quickly follow suit, even without full testing, rather than appear inferior. The result could be unpredictable AI systems facing off, with a higher chance of accidents. International norms could mitigate this, for example through agreed testing standards or red lines such as keeping nuclear launch decisions out of algorithmic control (the original February 2023 text of the U.S. declaration called for maintaining human control over decisions on nuclear weapons employment). There have been calls for a global ban on “killer robots,” supported by the UN Secretary-General and some states, but major powers have been reluctant to accept any outright prohibition.

Another ethical dimension is the misuse of generative AI for disinformation and cyber warfare. Militaries could deploy AI-generated propaganda at unprecedented scale, producing fake videos of enemy leaders or entire networks of false personas to sway opinion (a more insidious form of psychological operations). An early, crude example appeared in March 2022, when a deepfake video of Ukraine’s President Zelenskyy circulated, falsely showing him telling Ukrainian troops to surrender, presumably to erode Ukrainian morale. Future deepfakes will be more sophisticated and harder to detect, since generative AI can mimic voices, faces, and even battlefield radio chatter. This raises the stakes for trust and verification in wartime communication. Forces may need authentication measures (like digital watermarks or code words of the day) so that troops can confirm an order or broadcast is genuine rather than AI-generated deception. International law bans perfidy (treachery) in war while permitting ruses of war, and a deepfake of a leader may fall into a gray zone between the two. The ethical line between legitimate psychological operations and unacceptable deception will be tested by these technologies.
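To make the "code word of the day" idea concrete, here is a minimal sketch of message authentication using an HMAC (HMAC-SHA256 from Python's standard library). It assumes the sender and all receivers already share a secret key distributed in advance; the key and the names DAILY_KEY, sign_message, and verify_message are hypothetical and do not describe any fielded military system. A receiver accepts a broadcast only if its tag verifies, so a fabricated "surrender" order without a valid tag is rejected regardless of how convincing the audio or video looks.

```python
import hashlib
import hmac
import secrets

# Hypothetical shared "key of the day", assumed to be distributed out of band.
DAILY_KEY = secrets.token_bytes(32)


def sign_message(key: bytes, message: str) -> str:
    """Return a hex HMAC-SHA256 tag binding the message text to the shared key."""
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_message(key: bytes, message: str, tag: str) -> bool:
    """Accept the message only if its tag matches; compare in constant time."""
    expected = sign_message(key, message)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    genuine = "Hold current positions until further notice."
    tag = sign_message(DAILY_KEY, genuine)
    print(verify_message(DAILY_KEY, genuine, tag))  # True
    # A forged message presented with the same tag fails verification.
    print(verify_message(DAILY_KEY, "All units surrender now.", tag))  # False
```

A valid tag shows only that the sender held the key; it says nothing about whether the content is true, and the approach fails if the key is compromised. In practice the hard part is distributing and rotating keys securely, not computing the tag.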

Privacy and civil liberties also come into play as military AI blurs into domestic spheres. Surveillance capabilities built for battle could be turned inward by authoritarian regimes – for instance, using generative AI to analyze domestic social media and generate lists of “troublemakers” or to create fake evidence to frame dissidents. While this is more a concern under authoritarian governments, it underscores why democratic governance of AI is critical to uphold human rights even outside conflict scenarios.

In summary, generative AI is a double-edged sword. It bolsters military effectiveness but also amplifies risks, from unintentional escalation and civilian harm to destabilizing arms competition and the erosion of established norms. The world is only beginning to grapple with these issues. Experts widely agree that a proactive approach is needed. As a Carnegie Endowment analysis recommends, the EU and others should spearhead inclusive initiatives to set global standards and ensure responsible use of AI in warfare. Such standards could include transparency requirements (e.g., disclosing when AI is in use), testing and validation protocols for military AI, and agreed limits (for example, not arming autonomous systems with weapons of mass destruction, or not using AI to interfere with nuclear command and control). The hope is to avoid an “extinction-level” threat or runaway scenario and instead manage this powerful technology in a way that maintains international peace and security.

Shaping Global Defense Strategies

Generative AI is increasingly a strategic factor shaping defense policies and military doctrines around the world. Its influence can be seen in how major powers modernize their forces, how alliances like NATO craft collective defense strategies, and how smaller states plan asymmetric tactics. We are witnessing a shift in the very character of warfare, often compared to the advent of aviation or nuclear weapons in earlier eras.

For the United States and its allies, one clear trajectory is the pursuit of an AI-augmented force to maintain a competitive edge. In recent years, the U.S. National Defense Strategy and supporting documents have emphasized AI as a key enabler for the future force. The Department of Defense’s 2023 Data, Analytics, and Artificial Intelligence Adoption Strategy explicitly calls for rapidly fielding AI systems from the back office to the tactical edge. This involves not just adopting individual AI tools but reorganizing how the military fights, moving toward what is termed Joint All-Domain Command and Control (JADC2), in which AI helps network forces across the land, sea, air, space, and cyber domains. Generative AI can accelerate the JADC2 vision by intelligently routing information and suggesting cross-domain tactics (for example, an AI might recommend an Air Force electronic warfare action to support an Army unit under drone attack). The U.S. military is also investing in human-AI teaming as a doctrinal concept, pairing soldiers with AI “wingmen” or decision support systems in every unit. Over the next decade, commanders at all levels can be expected to consult AI advisors routinely for quick analysis, much as they consult human intelligence officers and analysts today.

Tangible measures of this importance are the funding and organizational changes in defense establishments. The Pentagon stood up a Chief Digital and AI Office (CDAO) and initiatives like the Algorithmic Warfare Cross-Functional Team (Project Maven) specifically to inject AI into operations. The rapid increase in AI contract spending (as charted earlier) shows budgets being realigned toward these technologies. Similarly, countries like the UK and France have established defense AI centers and are funding projects to automate imagery analysis and logistics. Israel, known for its high-tech military, reportedly used an AI-assisted target selection process in recent conflicts to strike targets rapidly (though details are classified). Together, these developments indicate that military planning now treats AI as a core component. War games and drills factor in AI capabilities on both sides, and strategists consider questions like “How do we fight if communications are degraded but our AI can still operate?” or, conversely, “How do we defend if the enemy has superior AI surveillance?”

For China and Russia, generative AI is seen as a way to offset Western conventional superiority. China’s PLA has invested heavily in AI for everything from swarming drones to AI-enabled submarines and information warfare units. Chinese military writings frequently describe “system destruction warfare”: paralyzing the enemy’s networks and decision-making, presumably with cyber and AI tools, before a shot is even fired. Part of China’s global strategy is also to become a leader in AI and thereby shape international norms to its advantage (for example, by exporting AI surveillance technology to partner states). Russia, despite a smaller tech base, has shown interest in AI for electronic warfare and robotics (for example, testing unmanned ground vehicles in Syria). Russian military thinkers have written about “automated control systems” that could in theory coordinate battlefield operations at machine speed, though Russia’s actual capabilities likely lag behind those of the U.S. and China.

Importantly, the use of AI is not limited to superpowers. Middle powers and smaller states are also innovating, often asymmetrically. In the 2020 Nagorno-Karabakh war, for instance, Azerbaijan combined Turkish-made armed drones with loitering munitions (drones that can patrol an area and attack targets of opportunity), gaining an edge against traditional armored forces. Ukraine’s resourceful use of AI-driven drone tactics against a larger adversary (Russia) shows how a country without the most advanced jets or ships can leverage commercially available AI technology (like hobby drones fitted with improvised AI targeting) to punch above its weight. In essence, generative AI is democratizing certain warfare capabilities: what once required satellites and huge command centers might be achieved with drones and laptops running AI algorithms. This shapes defense strategies, since countries expecting to face superior forces might invest in swarms of autonomous drones, AI-guided missiles, or cyber-AI units to level the field. It also influences alliance behavior, as sharing AI tools becomes part of security assistance (for example, the U.S. providing AI software to help Ukraine analyze drone footage quickly).

On the alliance front, NATO has made AI a priority in collective defense. NATO’s 2022 Strategic Concept names emerging and disruptive technologies, including AI, as key areas for cooperation. The alliance adopted an AI strategy in 2021 and has held its first exercises focused on joint AI integration. A central strategic issue is interoperability: if multiple allied nations use AI, their systems need to communicate seamlessly. NATO is working on standards so that, say, a French AI surveillance system can feed data into an American AI command system during a coalition operation. This is a new kind of integration challenge. Where past wars required making sure radios were on the same frequency, coalitions now must ensure that AI systems share data formats and confidence measures. Alliances are also concerned with ethical alignment: NATO’s AI principles closely parallel those of the U.S., with the aim that no ally fields an AI system that acts outside agreed rules in a joint mission.
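The point about shared data formats and confidence measures can be illustrated with a minimal sketch of a common, versioned report format that two national systems could both produce and consume. Everything here is a hypothetical assumption for illustration: the field names, the SCHEMA_VERSION string, the rule that confidence is a probability in [0, 1], and the adapter for a system that reports confidence as a percentage. The sketch does not describe any actual NATO standard. It shows why agreeing on confidence semantics matters: merging one system's "87" with another's "0.87" without conversion would silently corrupt the fused picture.

```python
import json
from dataclasses import asdict, dataclass

SCHEMA_VERSION = "1.0"  # hypothetical version tag agreed by all partners


@dataclass(frozen=True)
class SharedReport:
    """Hypothetical common report format exchanged between allied systems."""
    source_system: str
    observed_at_utc: str  # ISO 8601 timestamp in UTC, e.g. "2025-01-01T12:00:00Z"
    latitude: float
    longitude: float
    label: str
    confidence: float  # probability in [0.0, 1.0], never a percentage

    def __post_init__(self) -> None:
        # Reject values that violate the agreed confidence convention.
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError(f"confidence must be in [0, 1], got {self.confidence}")


def to_wire(report: SharedReport) -> str:
    """Serialize a report to JSON with the schema version attached."""
    return json.dumps({"schema": SCHEMA_VERSION, **asdict(report)}, sort_keys=True)


def from_wire(payload: str) -> SharedReport:
    """Parse JSON, rejecting messages written against a different schema version."""
    data = json.loads(payload)
    if data.pop("schema", None) != SCHEMA_VERSION:
        raise ValueError("unsupported schema version")
    return SharedReport(**data)


def from_percent_system(source: str, ts: str, lat: float, lon: float,
                        label: str, pct: float) -> SharedReport:
    """Adapter for a hypothetical national system that reports confidence as 0-100."""
    return SharedReport(source, ts, lat, lon, label, confidence=pct / 100.0)


if __name__ == "__main__":
    report = from_percent_system("system-a", "2025-01-01T12:00:00Z",
                                 48.85, 2.35, "vehicle", 87.0)
    wire = to_wire(report)
    print(wire)
    print(from_wire(wire) == report)  # True: the round trip preserves every field
```

The design choice is to normalize at the boundary: each national system keeps its internal conventions, and a small adapter converts into the shared schema before anything is transmitted, so the receiving system never has to guess what a number means.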

Generative AI is also shaping doctrine by accelerating the tempo of warfare. Military theorists predict that OODA (observe, orient, decide, act) loops will compress dramatically for those who deploy AI effectively. Wars might be decided by who can process information and act on it fastest, a concept often called “hyperwar.” Strategies worldwide are adapting by emphasizing flexibility, delegated authority, and pre-approved responses. For example, a naval task force might give an AI-enabled defense system pre-authorization to respond to certain threats (like incoming missiles) without asking command, because in an era of hypersonic missiles, waiting for human clearance could be too slow. However, this also means militaries need mechanisms to de-escalate automated processes when needed, a kind of strategic parachute. Some have proposed an “AI hotline” between adversaries, similar to the Cold War hotlines, to quickly clarify AI-caused incidents or to agree on halts if systems get out of control.

The global balance of power could be affected by differential AI adoption. Countries leading in AI may develop entirely new doctrines that render older weapons or strategies obsolete (much as blitzkrieg did to static trench warfare). We might see a form of offset strategy: instead of matching an opponent tank for tank, you counter their tanks with swarms of cheap AI drones. U.S. defense thinkers have proposed exactly that – swarms of autonomous systems as a “third offset” to counter numerical advantages of adversaries. China likewise might try to offset U.S. naval superiority by using AI to coordinate large numbers of anti-ship missiles and drone subs in a saturation attack that human defenders can’t keep up with. Thus, defense strategies are increasingly about human-AI team vs human-AI team, rather than just troop vs troop or plane vs plane.

We also see changes in military organizational structures in response to AI. Forces are standing up new efforts dedicated to experimenting with AI in operations, such as the U.S. Army’s Project Convergence experiments or the Pentagon’s Task Force Lima, established in 2023 to assess generative AI. Job roles are evolving, for instance with the concept of an “AI trainer” or “AI auditor” on a commander’s staff: someone who understands how the AI works and can liaise between the technology and the leadership. Officer education and training now include AI literacy, so that future generals understand which tools are available and what their limits are. This is happening internationally, with many military academies and war colleges adding AI strategy to the curriculum. Countries that effectively educate their military in AI will likely be better at integrating it, making this soft factor, human capital, a strategic asset in its own right.

In terms of defense economics, generative AI might shift investment patterns. AI-centric systems could be cost-effective compared to traditional hardware: a basic AI-driven drone can be far cheaper than a fighter jet or tank yet still have significant impact. This might allow militaries to do more with less, or at least force a rebalancing of procurement. Budgets are already allocating more to software and less to some legacy programs. However, truly reaping the benefits of AI may require up-front investment in data infrastructure (sensors, cloud computing, secure communications). Strategies are being shaped around protecting and leveraging a data advantage, the notion that having more and better data to feed your AI (and denying the enemy theirs) is critical. Militaries are therefore investing in secure cloud networks and satellite constellations to keep their AI systems well fed with information, while exploring ways to blind or confuse the enemy’s AI (perhaps through hacking or by feeding it false data, an emerging form of counter-AI strategy).

On a global scale, defense diplomacy now includes AI norms discussions. Countries are positioning themselves as responsible AI leaders to win trust. For example, European states often emphasize the ethical use of AI in their strategic communications, setting themselves apart from authoritarian adversaries. The idea is to rally coalitions around shared values in AI use, much as the Cold War featured a values-based contrast in the use of certain weapons or tactics. This also plays into export control: nations are coordinating to restrict exports of advanced AI chips and software to rivals (the U.S. export restrictions on high-end Nvidia GPUs to China, supported by allies, are one instance). Control of the AI supply chain (semiconductors, algorithms, talent) is now a strategic element of defense planning. Alliances may invest collectively in a secure supply of AI compute power to ensure independence from potentially compromised supply chains.

In short, generative AI has established itself as a catalyst for strategic evolution in defense. It influences not just how militaries fight, but also how they organize, plan, and align with each other. We are likely at the beginning of this revolution. Just as early aircraft in World War I were first seen as reconnaissance aids and had evolved into strategic bombers by World War II, today’s AI systems, which currently assist with surveillance and planning, could evolve into central strategic tools that decide the outcome of conflicts. The nations and coalitions that adapt fastest and most responsibly will shape the norms and balance of power in the AI-driven security era.

Conclusion

From autonomous drone swarms reacting in unison on the battlefield to AI engines churning through scenarios in command centers, generative AI is revolutionizing military surveillance and defense strategies at every level. The case studies we explored – whether it’s a naval wargame accelerated by AI simulation or a Marine unit leveraging an AI assistant for intel summaries – all demonstrate that this technology is no longer science fiction but an active part of military operations. Generative AI’s ability to create content and strategies (not just analyze them) is a game-changer: it enables militaries to anticipate and act with unprecedented speed and creativity.

However, this revolution comes with challenges as profound as its promises. Ethical boundaries must be defined so that human values and laws govern the use of lethal force, even as machines assume bigger roles. The international community faces the urgent task of crafting norms and possibly treaties to prevent misuses and maintain strategic stability. As one analyst aptly noted, leaving military AI unchecked is perilous, heightening risks to peace and security. In response, initiatives for responsible AI use – from the UN to NATO and national policies – are beginning to take shape, aiming to ensure AI acts as a force for deterrence and defense, not destabilization.

Looking globally, generative AI is now embedded in the geopolitical competition of our time. It is altering the calculations of deterrence and power projection. Military leaders who once pondered how many tanks or aircraft an adversary has must now also ask: How advanced are their algorithms? In this sense, AI capabilities have become as strategically significant as traditional arsenals. The coming years will likely see even more integration of AI: swarms of land, sea, and air robots coordinating via generative models, commanders relying on AI advice by default, and conflicts potentially won by superior algorithmic decision-making as much as by brave troops or precise weapons.

Ultimately, the integration of generative AI in defense is about amplifying human capability. It offers the potential to reduce the fog of war by rapidly clarifying situations, to save lives by taking humans out of some dangerous roles, and to deter aggression by denying adversaries any hope of outthinking a well-equipped force. Yet it also serves as a reminder of our responsibility to guide powerful technologies with wisdom. In warfare, as in other domains, AI will reflect the intents of its users. The revolution is here – it falls on military and political leaders, and indeed all of us in the international community, to steer this revolution in a direction that enhances security and upholds the values of humanity, even amidst the crucible of conflict.

References

- GDIT – Brandon Bean, interview on DoD generative AI use cases (gdit.com)

- EETimes – Rebecca Pool, Drones with Edge AI: The Future of Warfare?, on Ukraine’s Swarmer drone swarms (eetimes.eu)

- EETimes – Swarmer CEO on autonomous swarm tactics and speed (eetimes.eu)

- Shield AI/DefenseScoop – Easley, Inside the AI Pilot that Flew Sec. Kendall in a Dogfight, on the X-62A autonomous F-16 test (shield.ai)

- JHU Applied Physics Lab – Ajai Raj, Generative AI Wargaming, on LLM-driven wargames and speedup (jhuapl.edu)

- Breaking Defense – Freedberg, Beyond ChatGPT: AI should write – but not execute – battle plans, quoting SCSP on experimentation in AI-generated plans (breakingdefense.com)

- DefenseScoop – Jon Harper, Marines use generative AI in Pacific deployment, on AI summarizing intel and media for the 15th MEU (defensescoop.com)

- TIME – Will Henshall, US Military’s Investments in AI Skyrocketing, on the DoD AI contract surge and budgets (time.com)

- Carnegie Endowment – Raluca Csernatoni, Governing Military AI Amid a Geopolitical Minefield, on the lack of a global AI framework and the generative AI arms race (carnegieendowment.org)

- Wikipedia – Political Declaration on Responsible Military AI (2023), noting that 51 nations signed and its scope (en.wikipedia.org)

- Defense.gov – Radha Plumb (CDAO) on adversaries’ rapid AI adoption and DoD generative AI efforts (defense.gov)

- Harvard Medical School News – Caruso, Risks of AI in Weapons Design, warning of geopolitical instability from AI arms (hms.harvard.edu)