How AI in Marketing Is Crossing the Line: The Rise of Automated Pushy Sales Tactics

In the ever-evolving landscape of marketing, one constant remains: the pursuit of consumer attention and conversion. From door-to-door salesmen to telemarketing calls and pop-up ads, the tactics change, but the core objective stays the same: persuading consumers to take action. In recent years, artificial intelligence (AI) has emerged as a transformative force in the marketing world, automating tasks and enabling hyper-personalization at scale. However, as AI becomes more adept at mimicking human behavior, it has also begun to emulate some of the less desirable traits of human salespeople, most notably pushiness.

Pushy sales tactics are often characterized by aggressive persuasion, a lack of respect for personal boundaries, and psychological pressure techniques designed to elicit immediate responses. These methods, while sometimes effective in the short term, can alienate consumers and erode trust in the long term. As AI systems gain access to increasingly granular behavioral data and sophisticated algorithmic capabilities, they are now capable of replicating these pressure-based techniques with striking accuracy and scale.

This trend raises a critical question: Are we programming machines to simply optimize for engagement and conversion, or are we inadvertently teaching them to manipulate and pressure consumers? AI-driven marketing tools now deploy real-time nudges, pop-ups, countdown timers, and socially validated messaging that mirror the behavior of overzealous sales representatives. In some instances, these systems are even more persistent than their human counterparts—operating across platforms and time zones without fatigue or oversight.

The rise of AI-powered marketing prompts a reexamination of the ethical boundaries between persuasion and manipulation. While automation and personalization offer undeniable advantages, they also come with responsibilities. Marketers and developers must confront the reality that AI, when left unchecked, can exploit human psychological vulnerabilities in ways that may be deemed intrusive or unethical.

In this blog post, we will explore how AI mimics pushy salespeople in modern marketing, analyze the psychological principles at play, examine real-world examples, and consider the regulatory and ethical implications of these trends. Through this analysis, we aim to foster a more nuanced understanding of AI's role in shaping consumer experiences—and outline a path forward that prioritizes transparency, consent, and human-centric design.

The Evolution of AI in Digital Marketing

The transformation of marketing over the past two decades has been nothing short of revolutionary. From static banner advertisements and generic email campaigns to dynamic, personalized interactions, digital marketing has become increasingly data-driven and automated. At the heart of this evolution lies artificial intelligence (AI), which has fundamentally altered how businesses understand, target, and communicate with consumers. AI's integration into digital marketing has expanded from basic data analytics to the deployment of predictive models, natural language processing (NLP), and autonomous decision-making systems, ushering in an era defined by efficiency, personalization—and increasing levels of psychological influence.

From Manual Effort to Machine-Led Precision

In the early days of digital marketing, much of the work was manual. Marketers segmented audiences based on rudimentary demographic data and crafted messages accordingly. As the internet matured, the volume of available consumer data exploded. Clickstream analysis, behavioral targeting, and customer relationship management (CRM) platforms offered deeper insights into consumer preferences. However, it was the advent of AI and machine learning that enabled marketers to extract actionable intelligence from this data at unprecedented speed and scale.

Today, AI algorithms can process vast quantities of structured and unstructured data to predict user behavior, recommend products, and automate entire customer journeys. Natural language models generate personalized email content, computer vision systems analyze user-generated images for brand insights, and reinforcement learning frameworks optimize digital ad spend in real time. These capabilities have transformed marketing from a reactive function to a proactive and highly adaptive discipline.

The Shift from Personalization to Persuasion

While personalization has long been a goal of digital marketing, AI has elevated it to new heights. Algorithms now tailor experiences based on micro-moments—analyzing not just who a user is, but where they are, what device they are using, how they have interacted with similar content, and even what time of day they are most likely to engage. This hyper-personalization creates the illusion of individual attention at scale, enhancing engagement rates and conversion metrics.

However, the line between personalization and persuasion has become increasingly blurred. Many AI systems are now optimized not merely to inform but to influence. Predictive analytics can identify moments of emotional vulnerability or decision fatigue, and deploy persuasive prompts designed to capitalize on these states. For example, an AI-driven system may detect that a user has hovered over a product for more than 10 seconds without clicking and respond by flashing a limited-time discount offer—a tactic reminiscent of a persistent in-store sales associate offering a “today-only” deal.
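To make the hover example concrete, here is a minimal sketch of what such a trigger rule might look like. It is only an illustration: the `HoverEvent` type, the field names, and the function name are hypothetical, and the 10-second threshold is simply the figure used in the example above. Real systems would more likely learn the threshold from data than hard-code it.

```python
from dataclasses import dataclass

# Threshold taken from the example in the text; a real system would tune it.
HOVER_THRESHOLD_SECONDS = 10.0


@dataclass
class HoverEvent:
    """Hypothetical record of one user hovering over one product."""
    product_id: str
    hover_seconds: float
    clicked: bool


def should_show_discount(event: HoverEvent,
                         threshold: float = HOVER_THRESHOLD_SECONDS) -> bool:
    """Return True when the user hovered past the threshold without clicking."""
    return event.hover_seconds > threshold and not event.clicked


events = [
    HoverEvent("sku-1", 4.2, False),   # brief glance: no prompt
    HoverEvent("sku-2", 12.5, False),  # long hover, no click: prompt fires
    HoverEvent("sku-3", 15.0, True),   # long hover but clicked: no prompt
]
triggered = [e.product_id for e in events if should_show_discount(e)]
print(triggered)  # ['sku-2']
```

Note that nothing in the rule asks whether the user wants the prompt. Hesitation alone is treated as an invitation to intervene.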

The sophistication of these techniques reflects AI’s ability to mimic not just human cognition, but human persuasion strategies. Tools that once served to assist are now often designed to convert, leading to questions about autonomy, consent, and the ethical design of digital experiences.

Behavioral Targeting and Retargeting

One of the most powerful ways AI is deployed in modern marketing is through behavioral targeting and retargeting. Behavioral targeting uses machine learning algorithms to segment audiences based on real-time and historical data. These segments are then used to deliver personalized advertisements that anticipate the user’s needs, interests, or fears. Retargeting, on the other hand, involves tracking users after they leave a website and serving them ads that nudge them to return.

While effective from a business perspective, these strategies often lead to the perception of being followed or surveilled—a hallmark trait of pushy sales tactics. Consumers may encounter the same ad across multiple websites and devices, creating a sense of intrusion and urgency. The AI systems behind these strategies are designed to maximize engagement, but in doing so, they replicate the relentless follow-ups of a high-pressure sales environment.

AI Chatbots and Conversational Marketing

Another key development is the use of AI-powered chatbots and conversational agents in customer engagement. Initially introduced to handle simple queries, these bots have evolved into sophisticated entities capable of guiding users through the entire sales funnel. Utilizing NLP and sentiment analysis, AI chatbots can identify buying signals, respond empathetically, and escalate their tactics as needed.

For instance, a chatbot may begin with polite assistance but progressively introduce limited-time offers, bundle deals, or scarcity messages as the conversation progresses. The underlying algorithm is programmed to optimize for conversion, often using A/B testing and reinforcement learning to identify the most effective persuasive sequence. In this way, the AI system effectively performs the role of a digital salesperson—often indistinguishable in behavior from a human trained in high-pressure sales techniques.

The Conversion Funnel: Now Fully Automated

AI's capabilities have also enabled full-funnel automation. From top-of-funnel awareness campaigns driven by programmatic advertising to bottom-of-funnel conversion strategies involving personalized checkout nudges, AI systems now manage every stage of the customer journey. These systems operate continuously, making real-time adjustments based on performance metrics, customer behavior, and external variables such as time, location, and even weather.

For example, e-commerce platforms use AI to dynamically alter pricing, inventory messaging (“Only 2 left in stock!”), and delivery times based on demand forecasts and user profiles. These adjustments are not random; they are strategically designed to increase urgency and close the sale—paralleling the tactics used by human salespeople trained to overcome objections and compel a decision.

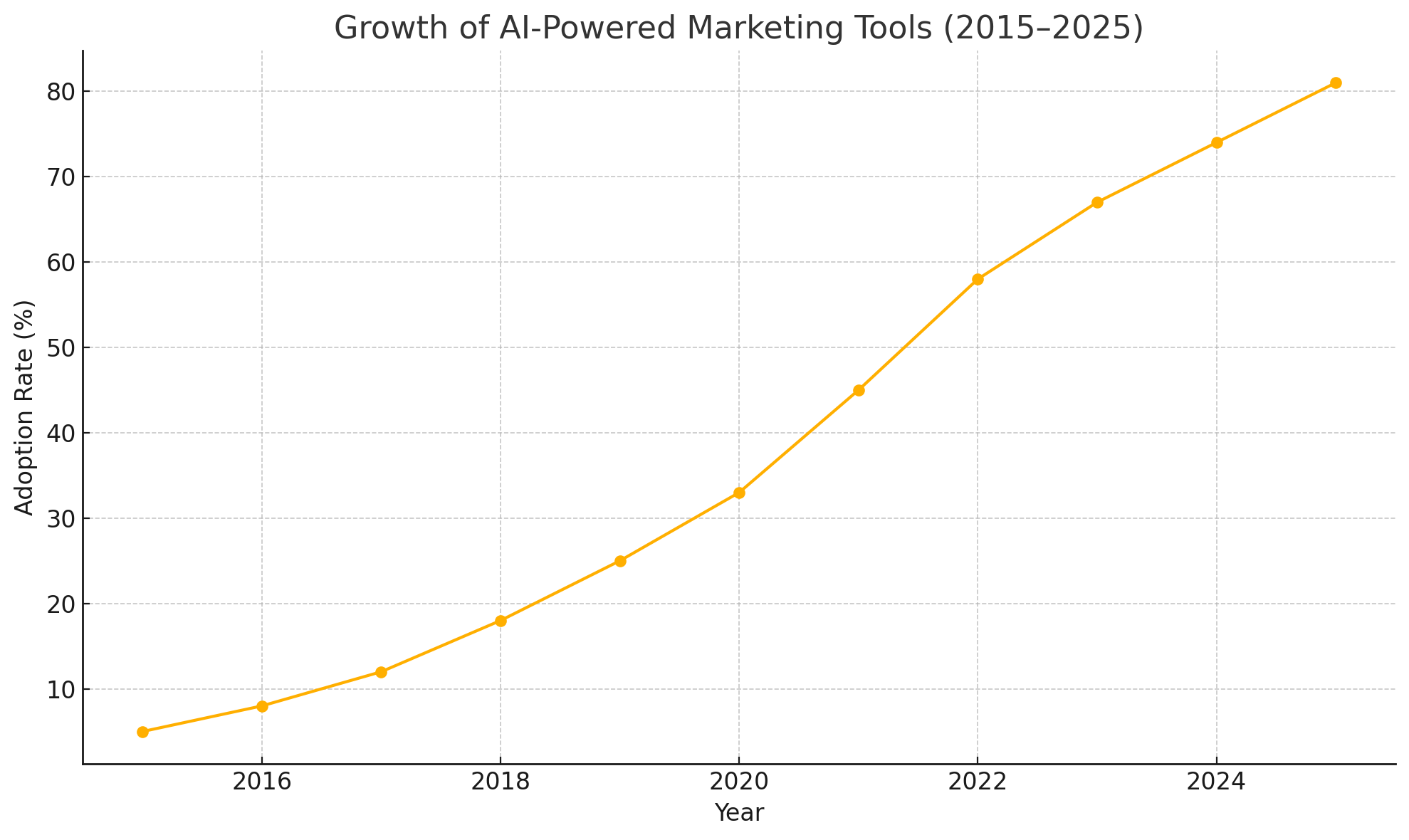

The following chart illustrates the rapid growth in adoption of AI-powered marketing tools across industries from 2015 to 2025:

The Psychology of “Pushy” Sales – Human vs. Machine

The psychology behind pushy sales tactics has long been studied in the context of human interaction. At its core, the term “pushy” denotes a style of communication that is overly aggressive, insistent, or intrusive—often leveraging psychological pressure rather than genuine value to drive a desired outcome. These tactics tap into cognitive biases, emotional triggers, and social cues to influence consumer behavior. Today, artificial intelligence systems are increasingly capable of replicating these behaviors, not through conscious intention, but by optimizing for engagement and conversion metrics that often prioritize results over experience.

Understanding how AI mimics these persuasive strategies requires a deeper exploration of the psychological principles traditionally employed by human salespeople and how algorithms are now operationalizing these tactics at scale.

Classical Sales Psychology and Its Core Principles

Human salesmanship has historically relied on a set of well-documented psychological techniques. These include:

- Scarcity: The perception that a product or offer is limited in quantity or availability.

- Urgency: A time-sensitive incentive designed to prompt immediate action.

- Social Proof: Demonstrating that others have made similar purchases or decisions.

- Reciprocity: Creating a sense of obligation through free offers or favors.

- Commitment and Consistency: Encouraging a small initial action that leads to a larger one.

- Authority: Referencing credible endorsements or expert validation.

These methods, when used judiciously, can enhance decision-making and build trust. However, when overused or misapplied, they cross into manipulative territory—pressuring consumers into decisions they may later regret.

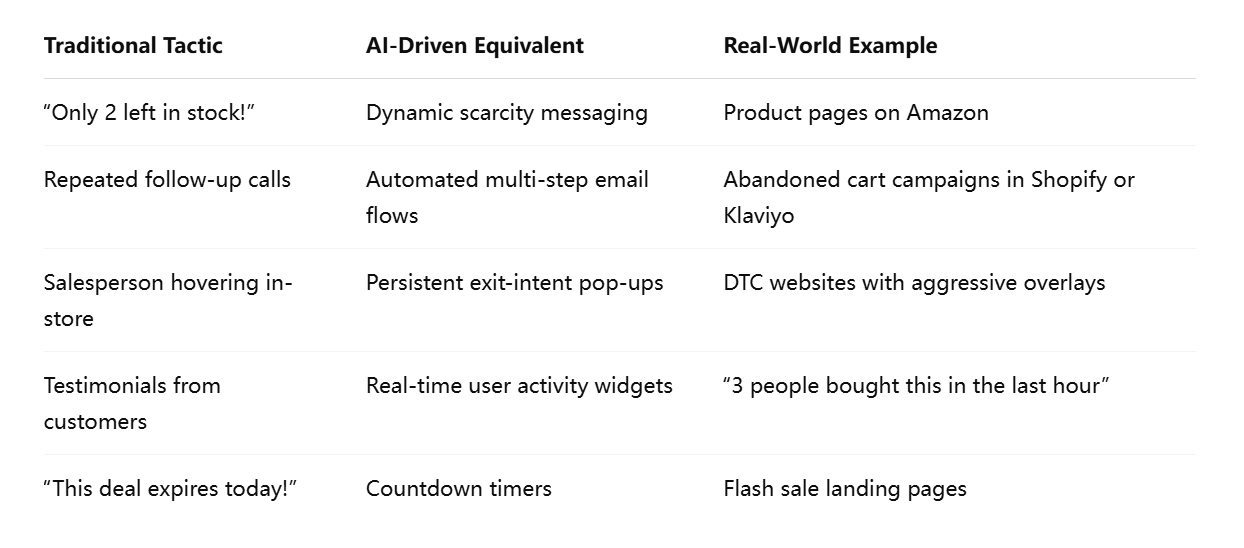

Translating Human Tactics into Algorithms

AI-driven marketing tools are not inherently pushy. Rather, their behavior is a reflection of the goals they are programmed to achieve—namely, to maximize click-through rates, reduce cart abandonment, and increase conversions. In the process of optimizing for these outcomes, AI systems begin to emulate the very behaviors that define high-pressure sales tactics.

Consider the use of urgency triggers. E-commerce platforms routinely employ countdown timers during flash sales or display messages such as “Hurry! Only 3 items left in stock.” These features are generated algorithmically based on inventory data and behavioral models predicting that urgency increases the likelihood of purchase. Similarly, social proof mechanisms—like “12 people are viewing this item” or “User X from New York just bought this product”—are dynamically rendered using real-time data, reinforcing the perceived popularity of a product.

Such features are not coincidental; they are the output of A/B tested, machine-learned strategies that consistently demonstrate higher conversion rates. Over time, the algorithms learn which combinations of text, visuals, and prompts are most effective at nudging users toward a desired action, regardless of how persistent or psychologically coercive these tactics may feel to the consumer.
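One common way to automate this kind of learning is a multi-armed bandit. The sketch below uses an epsilon-greedy strategy, which is an assumption chosen for illustration and not a description of any particular platform's system. The variant names and conversion rates are invented for the simulation. What it shows is that the only signal the algorithm receives is whether a conversion happened.

```python
import random


class EpsilonGreedy:
    """Minimal epsilon-greedy bandit over message variants (illustrative only)."""

    def __init__(self, arms, epsilon=0.1, seed=0):
        self.arms = list(arms)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.shows = {arm: 0 for arm in self.arms}
        self.conversions = {arm: 0 for arm in self.arms}

    def rate(self, arm):
        """Observed conversion rate for one variant (0.0 if never shown)."""
        shown = self.shows[arm]
        return self.conversions[arm] / shown if shown else 0.0

    def choose(self):
        """Explore a random variant with probability epsilon, else exploit the best."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.arms)
        return max(self.arms, key=self.rate)

    def record(self, arm, converted):
        """Update counts after showing a variant; conversion is the only feedback."""
        self.shows[arm] += 1
        self.conversions[arm] += int(converted)


# Hypothetical conversion rates, made up for this simulation.
TRUE_RATES = {"neutral": 0.02, "social_proof": 0.04, "urgency": 0.06}

bandit = EpsilonGreedy(TRUE_RATES, epsilon=0.1, seed=42)
sim_rng = random.Random(7)
for _ in range(5000):
    arm = bandit.choose()
    bandit.record(arm, sim_rng.random() < TRUE_RATES[arm])

print(bandit.shows)  # how often each variant ended up being shown
```

Because the reward contains no term for annoyance, regret, or trust, the loop has no reason to prefer a gentle message over a coercive one that converts slightly better.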

Dark Patterns and Manipulative Design

A critical manifestation of pushy AI is the increasing use of dark patterns in digital design. The term “dark patterns,” coined by UX designer Harry Brignull, refers to user interface elements that are intentionally designed to deceive or mislead users. These include:

- Hidden opt-out links

- Pre-checked boxes for subscriptions

- Confusing cancellation flows

- Repeated pop-ups despite user dismissals

AI systems can be trained to test and deploy dark patterns by analyzing user behavior and identifying design variations that minimize bounce rates or maximize form submissions. This machine-led experimentation can lead to interface experiences that feel overwhelmingly persistent or even deceptive—akin to a human salesperson who ignores verbal cues or repeatedly asks for a commitment after being told “no.”

AI and Emotional Manipulation

Beyond dark patterns, AI systems are also capable of emotional manipulation through sentiment analysis and real-time behavior tracking. By analyzing keystroke patterns, mouse movement, dwell time, and scrolling behavior, AI can make inferences about a user’s emotional state. For instance, if a user lingers on a refund policy page, the system may infer indecision or hesitation and respond by offering a limited-time discount or a “surprise” gift—mirroring a salesperson who counters resistance with sweeteners.

These techniques may be effective in closing sales, but they also raise significant ethical concerns. Users are often unaware that they are interacting with systems that are continuously learning from and adapting to their behavior. The result is a marketing experience that can feel tailored, yet oddly intrusive—a dynamic that blurs the line between helpful guidance and algorithmic coercion.

The Illusion of Human Persuasion

One of the most compelling reasons AI is effective at mimicking pushy salespeople is that many consumers cannot distinguish between algorithmic and human-generated pressure. AI-powered chatbots, for instance, now employ natural language models that simulate empathy, urgency, and authority. They can escalate their messaging, shift tone based on responses, and use language that implies human authorship. A chatbot might say, “Let me check if I can get you a better offer,” or “We’ve just restocked this popular item—don’t miss out again,” thereby invoking the same psychological triggers as a trained salesperson.

The illusion of human persuasion, powered by sophisticated language generation models and reinforcement learning, enhances user trust but also creates a new form of cognitive vulnerability. Consumers often underestimate the algorithmic intent behind these messages, attributing them instead to helpful service or genuine concern.

Cognitive Load and Decision Fatigue

Another psychological dimension of pushy marketing is its impact on cognitive load. When users are bombarded with too many prompts, options, or notifications, they experience decision fatigue—a decline in the ability to make considered decisions due to mental exhaustion. AI systems, particularly in high-stakes industries like travel or finance, sometimes exploit this fatigue by escalating calls to action at moments when users are most vulnerable to persuasive pressure. Examples include layered upsell prompts, aggressive cross-selling, or urgency notifications during checkout.

By mimicking this aspect of pushy sales behavior, AI marketing systems inadvertently contribute to poor user experience, mistrust, and even post-purchase regret—a far cry from the promise of frictionless, consumer-friendly automation.

In summary, the psychological principles that underpin pushy sales tactics have not disappeared in the digital age—they have been translated into code. AI systems, in their pursuit of performance optimization, now emulate the very behaviors that once defined aggressive human salesmanship. This convergence raises serious questions about agency, consent, and the nature of influence in an age of intelligent automation. As AI continues to shape the future of marketing, it is imperative that these systems be guided not only by what is effective, but also by what is ethical and respectful of the consumer experience.

Real-World Use Cases of AI Acting Like a Pushy Salesperson

While theoretical discourse around AI-driven marketing is essential, it is in real-world applications that the implications of “pushy” AI behavior become most apparent. Across industries—from e-commerce to software-as-a-service (SaaS), travel, and finance—AI systems are actively shaping consumer interactions with techniques that, in many instances, closely mirror the behavior of insistent human salespeople. These techniques are not merely experimental; they are deliberately designed to boost key performance indicators (KPIs) such as click-through rates, engagement time, and conversion rates. However, as these systems become increasingly assertive, the line between intelligent automation and manipulative persuasion continues to blur.

E-Commerce Platforms: The Algorithmic Hard Sell

The e-commerce sector has emerged as a pioneer in the deployment of AI for personalized and persuasive marketing. Platforms such as Amazon, Alibaba, and Shopify-powered retailers utilize AI to track user behavior in real time and deploy dynamic content designed to prompt purchase decisions.

A notable example is the use of scarcity messaging, such as “Only 1 item left in stock,” or real-time social-proof prompts, such as “15 people viewed this item in the last hour.” These messages are automatically generated based on real-time inventory and user data, and their effectiveness has been repeatedly validated through A/B testing and behavioral analytics. While these features enhance the perception of popularity and limited availability, they also create psychological pressure akin to the presence of a pushy in-store sales associate.

Further, many e-commerce platforms now deploy exit-intent overlays—pop-up messages triggered when a user attempts to leave the site without making a purchase. These overlays often include discount codes, time-sensitive offers, or free shipping incentives. The underlying AI systems are trained to deliver these prompts based on behavioral patterns that suggest hesitation or abandonment, effectively attempting to “close the deal” before the user exits the site.

Travel Booking Sites: Countdown Clocks and Fear of Missing Out

AI’s ability to induce action is particularly pronounced in the travel and hospitality sector, where platforms like Booking.com, Expedia, and Skyscanner rely heavily on real-time behavioral data and psychological triggers to drive bookings. For example, users browsing hotel listings are frequently shown alerts such as “Only 2 rooms left at this price” or “Booked 6 times in the last 24 hours.” These messages are designed to create a fear of missing out (FOMO) and often compel users to act quickly—sometimes before they have had the opportunity to fully evaluate their options.

Another common feature is the countdown timer, prominently displayed during checkout or promotional periods. These timers, often counting down from 10 or 15 minutes, signal that the user has a limited window in which to secure a deal. Although these may be based on genuine time constraints, they are often generated as part of a broader algorithmic strategy to accelerate decision-making and reduce abandonment rates.
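The difference between a genuine time constraint and a manufactured one can be sketched in a few lines. The two functions below are hypothetical and exist only to contrast the two designs: one timer counts down to a single real expiry shared by every visitor, while the other restarts with each new session. The 15-minute window is taken from the figures mentioned above.

```python
from datetime import datetime, timedelta, timezone


def fixed_deadline_remaining(offer_ends_at, now):
    """Seconds left on an offer with one real expiry shared by all visitors."""
    return max(0.0, (offer_ends_at - now).total_seconds())


def session_timer_remaining(session_started_at, now,
                            window=timedelta(minutes=15)):
    """Seconds left on a timer that starts over with every new session."""
    return max(0.0, (session_started_at + window - now).total_seconds())


offer_ends_at = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
first_visit = datetime(2025, 1, 1, 11, 50, tzinfo=timezone.utc)
second_visit = first_visit + timedelta(minutes=30)  # 12:20, after the real expiry

# A genuine deadline runs out and stays expired.
print(fixed_deadline_remaining(offer_ends_at, first_visit))   # 600.0
print(fixed_deadline_remaining(offer_ends_at, second_visit))  # 0.0

# A per-session timer shows a fresh 15 minutes on every visit.
print(session_timer_remaining(first_visit, first_visit))      # 900.0
print(session_timer_remaining(second_visit, second_visit))    # 900.0
```

From the user's side the two timers look identical, which is why limited transparency around the underlying constraint matters.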

In practice, these AI-generated elements replicate the behavior of a time-pressuring travel agent urging customers to “book now or miss out.” While effective, they can also lead to hasty decisions, particularly when users are overwhelmed by multiple calls to action and limited transparency around actual availability.

SaaS and Subscription Services: Persistent Nudging and Email Automation

In the realm of SaaS, where user engagement and retention are paramount, AI plays a critical role in nurturing leads and reducing churn. Platforms such as HubSpot, Intercom, and ActiveCampaign employ AI to manage multichannel drip campaigns—automated sequences of emails and messages triggered by user behavior.

One prevalent strategy involves tracking user inactivity or partial sign-ups and triggering a sequence of personalized emails aimed at encouraging completion. These emails often escalate in urgency, beginning with friendly reminders and culminating in limited-time offers or exclusive bonuses. Some even include AI-generated subject lines optimized to induce clicks, such as “You forgot something important!” or “Your offer expires in 2 hours!”

Moreover, many platforms integrate chatbots that proactively engage users on websites or within applications. These bots are not merely reactive; they initiate conversations based on user context and behavior. For instance, if a user lingers on a pricing page or hesitates on the checkout screen, the bot may offer assistance or a time-sensitive discount—mimicking the behavior of a salesperson who senses indecision and steps in to close the sale.

Financial Services and Lending Platforms: Automated Pressure to Convert

Financial services have traditionally relied on trust and transparency, but the integration of AI has introduced new dynamics in customer acquisition and engagement. Fintech platforms offering loans, insurance, or investment products use AI to analyze user data and offer pre-qualified options. While these services add convenience, they also introduce persistent follow-ups and upsell tactics.

For example, a user who fills out a partial loan application may receive automated reminders across email, SMS, and app notifications, often with messages emphasizing urgency: “Rates may change—complete your application now!” or “Your offer is reserved for the next 24 hours.” These messages are generated by AI systems that analyze drop-off points and optimize timing for maximum engagement. In some cases, users report feeling overwhelmed by the frequency and assertiveness of these communications—particularly when dealing with high-stakes financial decisions.

The use of AI-driven chatbots in banking apps is another emerging practice. These bots not only assist with account queries but also promote financial products, investment tips, or credit card upgrades, often with persuasive scripts that attempt to elicit immediate action. This is especially concerning when the target audience includes individuals with limited financial literacy, as it increases the risk of misinformed decisions under pressure.

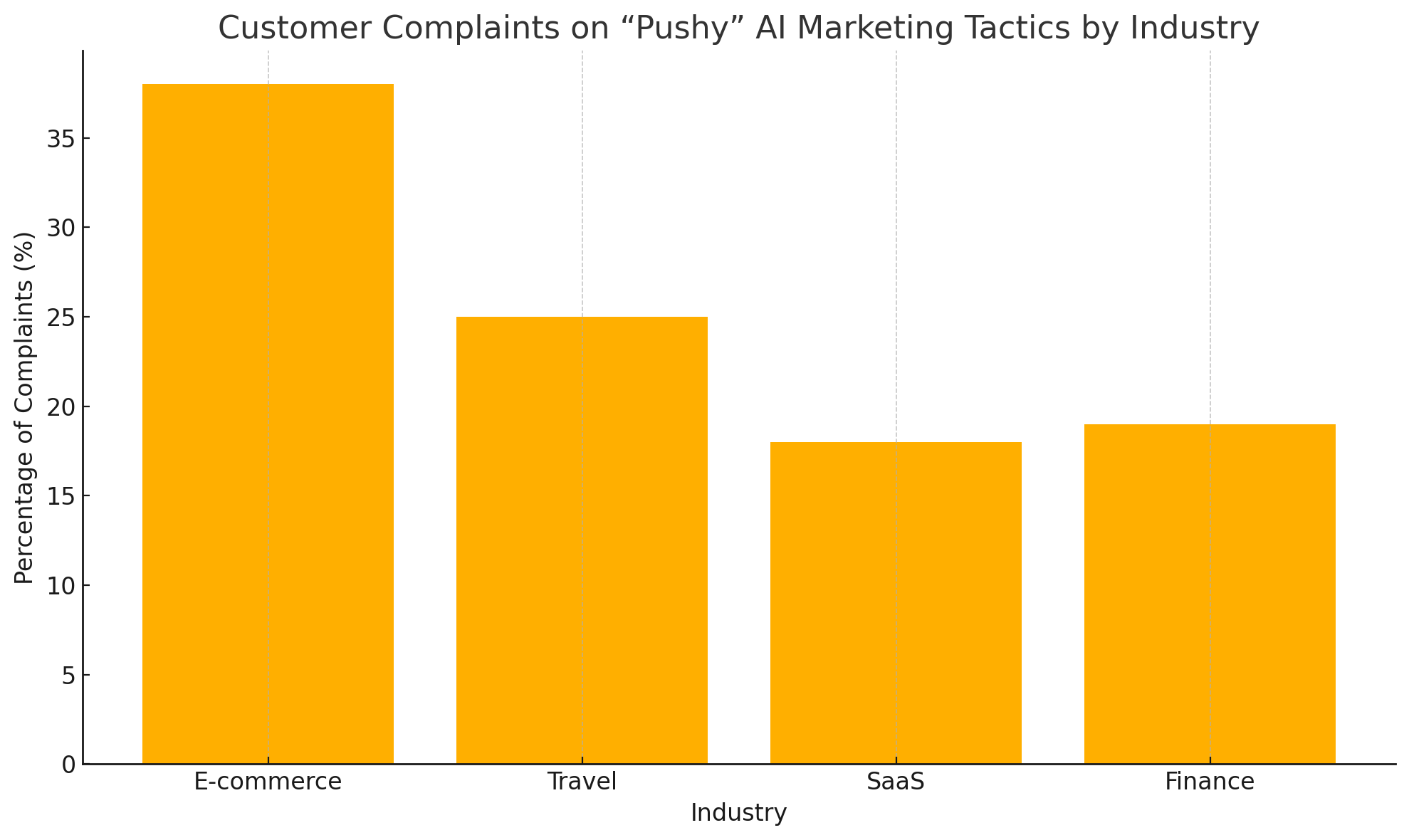

The chart below illustrates the distribution of customer complaints regarding pushy AI tactics across key industries:

Consumer Feedback and Backlash

A significant body of consumer feedback reflects growing discomfort with these AI-driven tactics. On platforms such as Trustpilot, Reddit, and app review forums, users frequently cite intrusive overlays, incessant email follow-ups, and manipulative design patterns as key pain points. Comments like “I felt stalked by the ads,” or “It wouldn’t stop prompting me even after I unsubscribed,” reflect a sense of violation—akin to being followed around a store by a salesperson who ignores every signal of disinterest.

This backlash has prompted some companies to reassess their use of AI in customer engagement. For instance, a number of retailers have begun offering “reduced personalization” modes or granular consent options, allowing users to opt out of aggressive targeting. While still rare, these features represent a growing recognition that not all personalization is welcome, and that respectful digital experiences can foster long-term loyalty over short-term gains.

In conclusion, the deployment of AI in marketing has reached a level of sophistication where it frequently replicates the behaviors of assertive, and at times overbearing, human salespeople. Across industries, these systems employ real-time data, predictive modeling, and algorithmic experimentation to drive conversions—often at the cost of user comfort and consent. As AI continues to evolve, the need for ethical oversight and human-centric design becomes paramount. Ensuring that AI marketing strategies respect boundaries, preserve trust, and empower rather than pressure the consumer will be essential in defining the future of responsible digital commerce.

Ethics, Regulation, and the Future of Responsible AI Marketing

The intersection of artificial intelligence and marketing is a potent one, capable of delivering exceptional value to both businesses and consumers. However, as AI systems increasingly mimic the behaviors of pushy salespeople, the ethical, legal, and strategic implications warrant urgent attention. While efficiency and personalization have driven AI adoption in marketing, questions around transparency, fairness, and consumer autonomy are now central to discussions about its responsible deployment.

The future of AI marketing depends not only on technological innovation but also on the establishment of ethical frameworks, regulatory guardrails, and industry-wide commitments to building trust in the digital marketplace.

Ethical Concerns: Autonomy, Consent, and Manipulation

A core ethical challenge posed by AI marketing systems is their ability to manipulate user behavior under the guise of personalization. While traditional marketing relied on overt persuasion, AI leverages subtle cues and data-driven insights to shape decisions—often without the user’s full awareness. This raises critical concerns about autonomy and informed consent.

Autonomy in digital environments implies that users should have the freedom to make choices without undue pressure or deception. When AI systems use tactics such as fabricated urgency, real-time surveillance, or misleading interface elements (e.g., dark patterns), they encroach on this freedom by nudging users toward decisions they might not otherwise make. Although such strategies may not be illegal, they can be ethically questionable—particularly when deployed on vulnerable populations, such as minors, the elderly, or individuals with cognitive impairments.

Furthermore, the issue of consent becomes problematic in the context of opaque data practices. Most consumers are unaware of the extent to which their behavior is tracked and analyzed, and even when privacy policies are disclosed, they are often too complex to understand. This asymmetry of information places consumers at a disadvantage and undermines their ability to make informed choices about how they are being targeted and influenced.

The Role of Regulation: Global Trends and Policy Responses

In response to growing concerns about digital manipulation and algorithmic harm, several jurisdictions have begun to implement regulatory frameworks aimed at curbing unethical practices in AI-driven marketing.

The European Union’s General Data Protection Regulation (GDPR), for instance, requires organizations to have a lawful basis, such as informed consent, before processing personal data and mandates transparency about automated decision-making processes. The EU’s AI Act, adopted in 2024, takes this a step further by classifying certain AI applications as “high risk,” subjecting them to stricter compliance measures, including human oversight and algorithmic accountability.

In the United States, the Federal Trade Commission (FTC) has taken steps to address the use of dark patterns, deceptive interfaces, and manipulative design in digital marketing. In 2022, the FTC published a staff report on dark patterns, warning companies that practices designed to “trick or trap” consumers into making purchases or disclosing personal information could be considered unfair or deceptive under the law. Recent enforcement actions signal a more aggressive posture toward companies that abuse AI for unethical marketing.

Globally, countries such as Canada, Australia, and Brazil are also exploring legislative responses to AI in commercial settings, with an emphasis on data rights, algorithmic transparency, and consumer protection. These developments underscore a growing consensus that while innovation should be encouraged, it must be tempered by safeguards that protect individual rights and democratic values.

Toward a Framework for Ethical AI Marketing

While regulation provides a necessary baseline, ethical marketing practices must also be guided by principles that go beyond legal compliance. Companies that seek to build long-term trust and brand equity should consider the following pillars in their AI marketing strategies:

- Transparency: Clearly communicate when AI is being used in consumer interactions. This includes disclosures about automated recommendations, dynamic pricing, and personalized messaging.

- Consent: Offer meaningful, easy-to-understand choices regarding data collection and usage. This includes the ability to opt out of personalization without degrading the core user experience.

- Respect for Boundaries: Avoid persistent prompts, excessive notifications, and exploitative design elements that pressure users into taking immediate action.

- Fairness: Ensure that AI systems do not discriminate or create disparate impacts based on race, gender, age, or socioeconomic status.

- Accountability: Implement robust oversight mechanisms, including regular audits of AI systems and the ability for users to challenge or appeal automated decisions.

- Human-Centric Design: Prioritize user wellbeing, trust, and satisfaction over short-term conversion gains. This includes aligning marketing strategies with broader organizational values and ethical standards.

By embedding these principles into product development and marketing workflows, organizations can shift from a transactional mindset to a relational one—where AI serves as an enabler of meaningful, respectful engagement rather than a tool for relentless conversion.
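As one illustration of how the Consent and Respect for Boundaries pillars could be enforced in code, the sketch below places a guard in front of any prompt the marketing system wants to show. Everything here is a hypothetical design: the `UserPromptState` fields, the function names, and the daily cap of two prompts are assumptions, not an established standard.

```python
from dataclasses import dataclass, field

MAX_PROMPTS_PER_DAY = 2  # hypothetical cap; the right number is a product decision


@dataclass
class UserPromptState:
    """Hypothetical per-user record of consent and prompt history."""
    opted_out: bool = False
    dismissed: set = field(default_factory=set)  # ids of prompts the user closed
    shown_today: int = 0


def may_show_prompt(state, prompt_id, daily_cap=MAX_PROMPTS_PER_DAY):
    """Allow a prompt only if the user has not opted out, has not already
    dismissed it, and has not reached the daily cap."""
    if state.opted_out:
        return False
    if prompt_id in state.dismissed:
        return False
    return state.shown_today < daily_cap


def record_shown(state):
    """Count a prompt against the user's daily cap."""
    state.shown_today += 1


def record_dismissed(state, prompt_id):
    """Remember a dismissal so the same prompt is never shown again."""
    state.dismissed.add(prompt_id)


user = UserPromptState()
print(may_show_prompt(user, "exit-discount"))  # True
record_shown(user)
record_dismissed(user, "exit-discount")
print(may_show_prompt(user, "exit-discount"))  # False: the user already said no
print(may_show_prompt(user, "free-shipping"))  # True: one prompt left today
record_shown(user)
print(may_show_prompt(user, "free-shipping"))  # False: daily cap reached
```

The design choice worth noting is that the guard sits outside the optimization loop. Whatever the conversion model recommends, a dismissal or an opt-out is treated as final.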

Industry Self-Governance and Best Practices

In addition to regulation and internal ethics programs, there is growing interest in industry self-governance to establish standards for responsible AI use in marketing. Organizations such as the Partnership on AI, the World Economic Forum, and the Digital Advertising Alliance have begun developing frameworks, certifications, and best practices to promote accountability and trust.

For instance, the AI Marketing Ethics Toolkit developed by the World Federation of Advertisers encourages brands to evaluate the fairness, transparency, and inclusivity of their algorithmic marketing practices. Meanwhile, some leading technology firms have created AI ethics boards or algorithmic review panels to assess the societal impact of their AI systems.

Although these efforts are still in early stages, they represent a promising shift toward shared responsibility within the marketing ecosystem. They also signal to consumers that companies are taking their obligations seriously—a factor that can increasingly influence brand loyalty and public perception.

The Path Forward: Designing for Trust in a Data-Driven World

As AI becomes further entrenched in the marketing landscape, the challenge is no longer whether to use it, but how to use it responsibly. The future of AI marketing lies in systems that are not only intelligent but also ethical—capable of optimizing outcomes without compromising user dignity, privacy, or trust.

Technological progress should be paired with moral clarity. Companies must ask not just “Can we do this?” but “Should we do this?” AI that mimics pushy sales behavior may deliver short-term gains, but it also risks alienating customers and attracting regulatory scrutiny. In contrast, AI designed with ethical foresight can deepen customer relationships, enhance brand integrity, and build a more sustainable digital marketplace.

Marketers, developers, and executives must work together to ensure that the pursuit of efficiency does not override the principles of fairness and transparency. Through responsible innovation, clear guidelines, and a shared commitment to ethical standards, AI can be a powerful force for good in the marketing world—helping businesses connect with customers in ways that are not only effective but also respectful and empowering.

Conclusion

The emergence of artificial intelligence as a core pillar of modern marketing has undoubtedly transformed how businesses engage with consumers. From hyper-personalized recommendations to real-time nudges and adaptive chatbots, AI has introduced a level of efficiency and scale that human sales teams alone could never achieve. However, as this technology advances, so too does its capacity to replicate—and even amplify—the pressure tactics once synonymous with aggressive human salespeople.

Throughout this discussion, we have examined how AI systems, often optimized for performance metrics, can inadvertently adopt behaviors that feel intrusive, manipulative, or overbearing. These include dynamic scarcity messaging, persistent follow-ups, emotionally driven prompts, and automated interface elements that mirror psychological sales techniques. While these tactics may improve short-term conversions, they can erode trust, compromise user autonomy, and spark consumer backlash.

More importantly, this trend compels us to confront deeper ethical and regulatory questions. What responsibilities do developers and marketers bear when deploying AI systems that influence decision-making? How do we protect consumers—especially vulnerable groups—from the hidden pressures embedded in algorithmic persuasion? And what frameworks must be established to ensure that automation remains a tool of empowerment rather than coercion?

As AI continues to reshape marketing, the imperative is not to reject technological progress but to channel it responsibly. Transparency, informed consent, and respect for user boundaries must become foundational elements of AI system design. Regulatory bodies around the world are already moving to curb the misuse of AI in commerce, but voluntary industry standards and internal ethical governance will also play a critical role.

The future of marketing will be defined not only by how intelligent our systems become but also by how humanely they operate. AI should serve to enhance the customer journey, offering value, convenience, and relevance—without compromising trust or dignity. Organizations that understand this distinction and embed ethical principles into their marketing architecture will not only comply with evolving regulations but also earn the long-term loyalty of an increasingly aware and empowered consumer base.

Ultimately, the question is not whether AI can sell more—it clearly can. The question is whether it can sell better: with integrity, fairness, and a genuine respect for the people it seeks to persuade. That is the challenge for marketers in the age of intelligent automation—and the benchmark by which success should be measured.

References

- How to Build Ethical AI for Marketing
  https://hbr.org/2021/01/how-to-build-ethical-ai-for-marketing

- AI and Ethics in Marketing
  https://www.weforum.org/agenda/2020/07/artificial-intelligence-ai-ethics-marketing/

- Bringing Dark Patterns to Light
  https://www.ftc.gov/news-events/blogs/business-blog/2022/09/bringing-dark-patterns-light

- The Deceptive Design of AI Interfaces
  https://www.technologyreview.com/2021/11/16/1039070/deceptive-design-dark-patterns-ai-interfaces/

- UX Dark Patterns in E-Commerce
  https://www.patterns.design/blog/dark-patterns-in-ecommerce/

- Regulating AI in Consumer Markets
  https://www.brookings.edu/articles/regulating-ai-in-consumer-markets/

- AI Principles and Consumer Trust
  https://www.oecd.org/going-digital/ai/principles/

- Responsible Practices for AI in Advertising
  https://partnershiponai.org/responsible-practices-for-advertising/

- AI Marketing Ethics Toolkit
  https://wfanet.org/knowledge/item/2022/07/13/WFA-launches-AI-Marketing-Ethics-Toolkit

- Manipulative UX: The Ethics of Persuasive Design
  https://uxdesign.cc/the-ethics-of-persuasive-design-4b358cd0f4b5