Google DeepMind Unveils Local AI Model That Powers Real-Time Robot Autonomy Without the Cloud

In a significant leap for the field of robotics and artificial intelligence, Google DeepMind has unveiled an advanced AI model designed to run locally on robots, eliminating the traditional dependence on cloud-based systems for intelligent behavior and decision-making. This development marks a pivotal transition toward greater autonomy in robotic agents, where computational intelligence is embedded directly within the physical systems they control. By allowing AI models to operate on-device, DeepMind is not only enhancing the real-time responsiveness of robotic agents but also reducing latency, improving privacy, and enabling operations in bandwidth-constrained or disconnected environments.

Historically, the deployment of AI models in robotics has heavily relied on powerful cloud infrastructure. High-performance tasks such as image recognition, language understanding, and complex manipulation planning have typically been processed remotely, leveraging expansive compute resources in data centers. While this architecture offered substantial computational advantages, it also introduced key limitations. Chief among them are latency in decision-making, vulnerability to connectivity disruptions, and concerns about data security and compliance. Robots functioning in high-stakes or dynamic environments—such as autonomous vehicles, surgical robots, or disaster response units—cannot afford delays in processing or failures due to interrupted connections. The imperative for real-time, dependable inference has thus driven a growing interest in localized AI execution.

DeepMind’s latest model directly addresses these challenges by embedding intelligence into the robot’s own hardware. Drawing on its experience training highly capable neural networks, such as AlphaFold for protein structure prediction, AlphaZero for game-playing and planning, and Gemini for language and vision, DeepMind has designed a lightweight yet powerful AI model that supports diverse robotic tasks without the need for continuous external communication. The model processes sensory data, interprets commands, and performs multi-step reasoning natively within the robotic platform. This evolution aligns with an emerging trend in AI known as “edge intelligence,” where models are optimized for inference on-device rather than in centralized computing clusters.

The implications of this shift are profound. For one, robots can now operate in offline or semi-connected scenarios, such as remote agricultural fields, undersea missions, or disaster zones where network access is unreliable or absent. Furthermore, by processing data locally, sensitive information—particularly in settings such as healthcare or home robotics—can remain confined to the device, reducing exposure and ensuring greater compliance with privacy regulations like the General Data Protection Regulation (GDPR) in Europe. Additionally, local inference often proves more energy-efficient than continuously transmitting data to and from the cloud, an aspect increasingly relevant as sustainability becomes a core design principle in technology development.

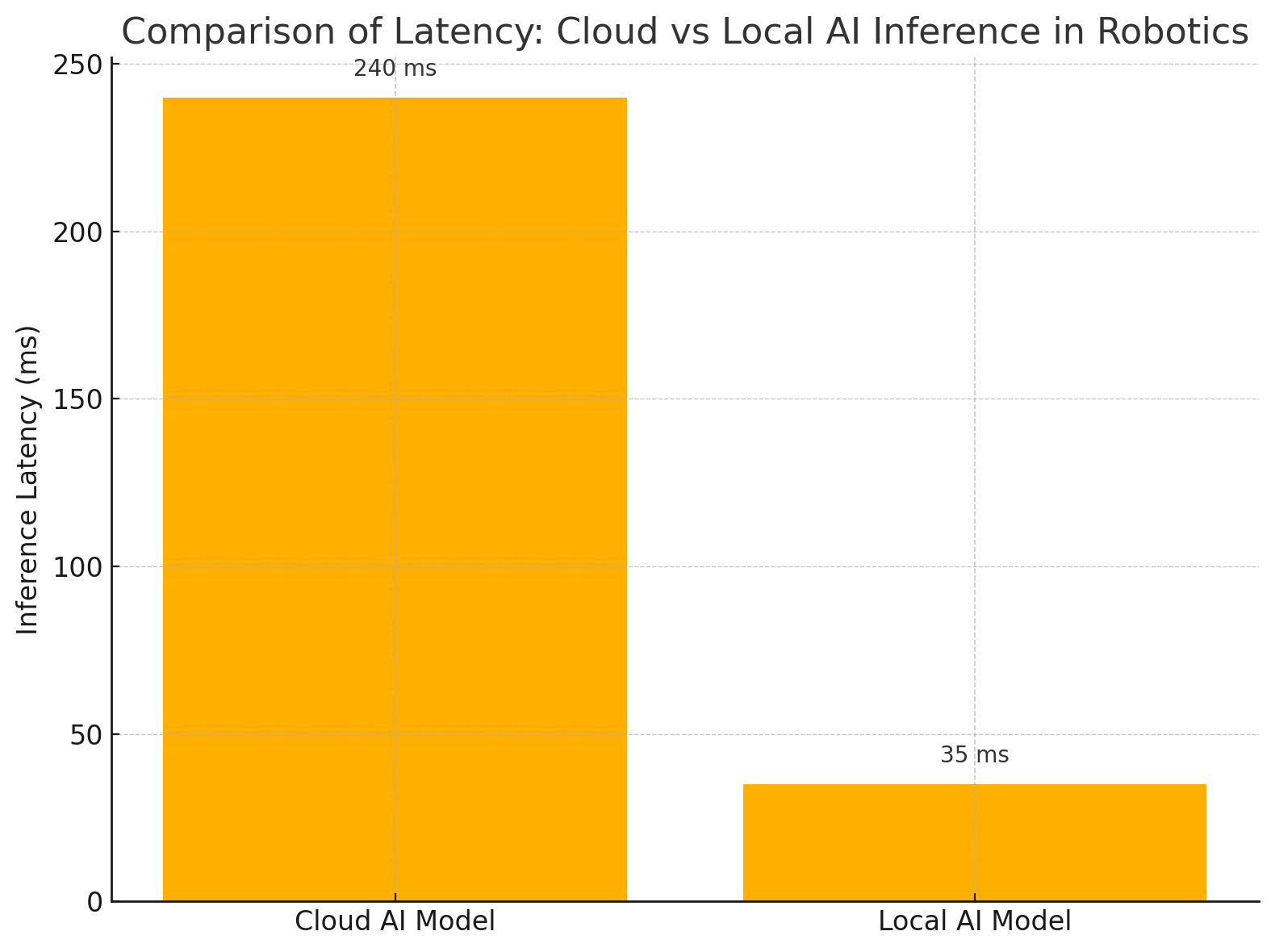

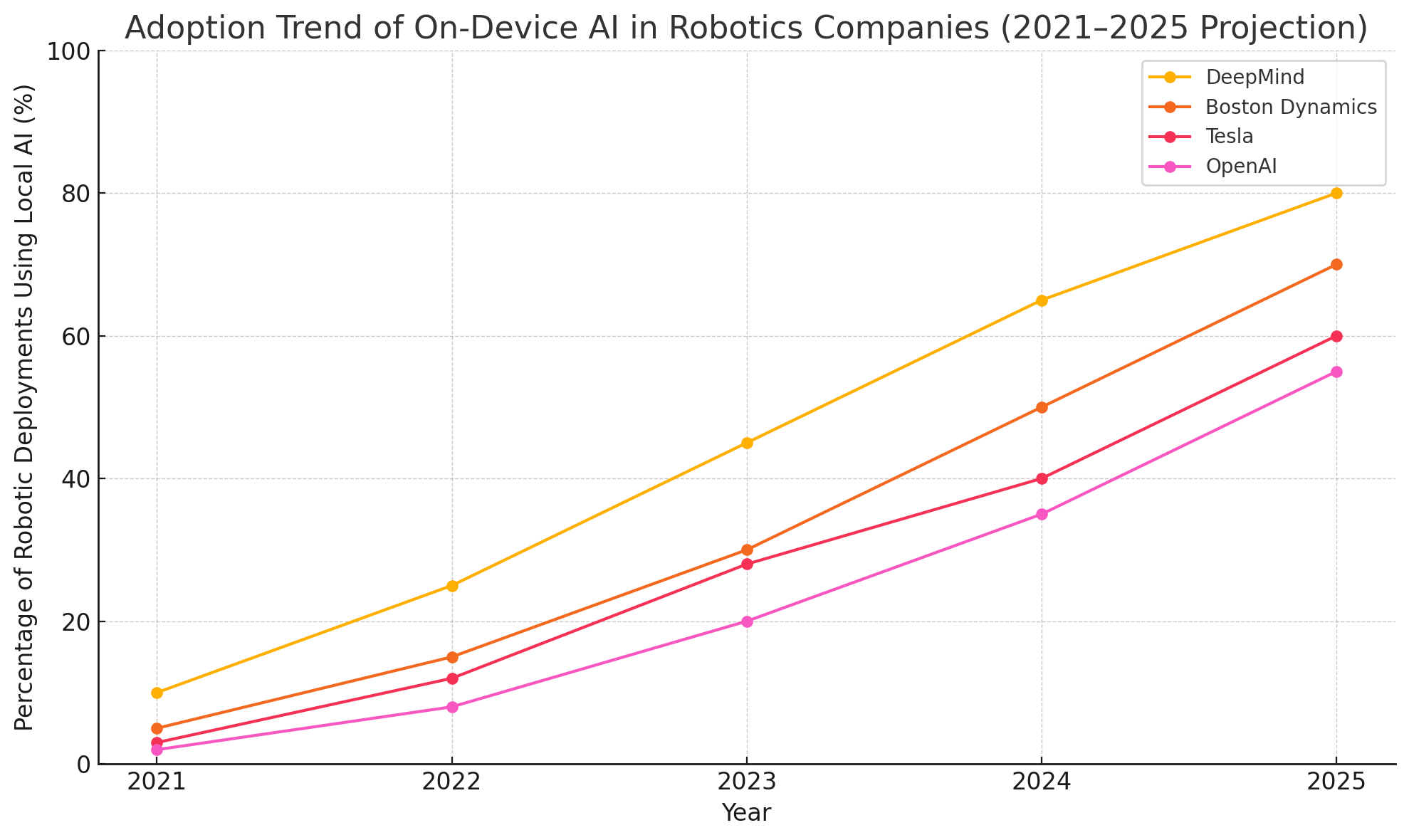

This blog post explores the technical, strategic, and commercial ramifications of DeepMind’s local AI model. We begin by examining the architecture and functionality of the system, followed by an analysis of its deployment in real-world robotics. We will then explore the broader strategic implications of local AI in the robotics sector, before addressing the key limitations, research frontiers, and potential next steps for this transformative technology. Two charts will provide comparative insights into latency and adoption trends, while a performance table will offer a detailed look at how DeepMind’s system fares across a variety of robotic use cases.

As the robotics industry enters a new era of smarter, faster, and more autonomous machines, the introduction of locally-operating AI by DeepMind may be viewed as a turning point. This development reflects not only a technological milestone but also a broader philosophical shift toward decentralization and independence in machine intelligence. By moving brains into the bodies of robots, DeepMind is reshaping what it means for machines to think—and act—on their own.

Architecture & Capabilities of the Local AI Model

The introduction of a locally-operating AI model by Google DeepMind marks a substantial departure from the conventional cloud-dependent paradigm in robotics. At the core of this innovation lies a carefully engineered architecture that balances computational efficiency with functional sophistication. This section explores the model's structural design, the modalities it incorporates, the mechanisms that enable real-time decision-making, and the optimization strategies that ensure it performs reliably on embedded hardware. It also underscores the broader significance of these architectural choices for robotics and edge computing more generally.

Modular Transformer-Based Core

The foundation of DeepMind’s local AI model is a modular transformer-based architecture, inspired by the versatility of language and vision transformers but adapted for resource-constrained environments. This model processes input data through a series of lightweight transformer layers that have been pruned and quantized to reduce their memory and compute footprints. By utilizing low-rank attention mechanisms, shared weights across layers, and activation compression, the model achieves a substantial reduction in inference cost without compromising the quality of its outputs.

These architectural innovations are not merely efficiency hacks—they reflect a deliberate effort to decentralize intelligence without degrading decision-making capabilities. Unlike larger models deployed in the cloud, which benefit from high-throughput GPUs and TPUs, the local model must rely on mobile-grade NPUs (Neural Processing Units), custom ASICs, or optimized CPU pipelines. As such, every parameter, layer, and operation within the model has been scrutinized and tuned for maximum throughput on limited silicon.
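To make the parameter savings concrete, here is a minimal sketch of the arithmetic behind two of the techniques named above: low-rank factorization and cross-layer weight sharing. It assumes that "low-rank" means factoring each square projection matrix into two thin matrices, which is one common reading and not something the source confirms. The sizes (`d_model`, `rank`, `num_layers`) and the helper names are illustrative assumptions, not DeepMind's.

```python
def dense_params(d_model: int) -> int:
    """Parameter count of one full d_model x d_model projection matrix."""
    return d_model * d_model


def low_rank_params(d_model: int, rank: int) -> int:
    """Parameter count when the projection is factored as (d_model x rank) @ (rank x d_model)."""
    return 2 * d_model * rank


# Illustrative sizes only; the source does not give the real model's dimensions.
d_model, rank, num_layers = 512, 64, 8

full = dense_params(d_model) * num_layers                # one dense projection per layer
factored = low_rank_params(d_model, rank) * num_layers   # one factored projection per layer
shared = low_rank_params(d_model, rank)                  # one factored projection reused by all layers

print(f"dense: {full}, low-rank: {factored}, low-rank + shared: {shared}")
```

With these assumed sizes, factorization cuts the projection parameters by 4× and sharing across eight layers cuts them by a further 8×. Whether output quality survives such ratios depends on the task and has to be measured.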

Multimodal Perception and Input Handling

A critical feature of the model is its ability to natively process multiple types of input data: visual, tactile, proprioceptive, auditory, and even linguistic. This multimodal capacity is essential for robots operating in real-world, unstructured environments, where diverse sensory information must be integrated to enable robust interaction with objects and surroundings.

The model’s visual pipeline includes a lightweight convolutional backbone for rapid feature extraction, which is then fed into a transformer layer for context-aware interpretation. Simultaneously, proprioceptive sensors—providing data on joint angles, torques, and velocities—are encoded through a separate feedforward encoder. Audio inputs, when available, are handled through spectrogram transformation and temporal convolution blocks, allowing the robot to detect environmental cues like alarms, human speech, or mechanical anomalies.

Moreover, the model is capable of processing natural language instructions locally, albeit within a constrained vocabulary and grammar. This functionality allows the robot to follow verbal commands or interpret pre-scripted mission directives without requiring external language processing, a feature particularly useful in environments where privacy or latency is a concern.

Real-Time Decision Making and Control Integration

To ensure real-time responsiveness, DeepMind’s model integrates a tightly coupled control loop that links perception with action planning and execution. This loop functions on a time horizon of milliseconds, enabling the robot to adapt dynamically to changes in its environment. At the heart of this mechanism is a hybrid decision engine that combines reactive policies—trained through reinforcement learning and fine-tuned for specific robot morphologies—with low-latency motion planners that convert high-level objectives into executable control signals.

Importantly, the model supports continuous feedback from sensors, enabling closed-loop control. This contrasts with traditional open-loop systems, which plan actions based on an initial state without constant environmental re-evaluation. Closed-loop control improves safety, precision, and fault tolerance—key requirements for robots operating in proximity to humans or in complex physical environments.

Furthermore, the model includes a short-term memory mechanism based on a gated recurrent structure, which allows it to track state across time and handle sequential decision-making tasks more effectively. This is particularly important for operations involving delayed rewards or multi-step manipulation, such as opening a cabinet door, pouring a liquid, or assembling components.
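The contrast between open-loop and closed-loop control described above can be illustrated with a toy one-dimensional example. This is a sketch under stated assumptions: a simple proportional controller stands in for the learned policy, and `run_controller`, `gain`, and `disturbance` are hypothetical names and values chosen for illustration, not part of DeepMind's system.

```python
def run_controller(steps, target, closed_loop, gain=0.5, disturbance=0.2):
    """Drive a 1-D position toward `target` while a constant push disturbs it each step."""
    position = 0.0
    planned_step = target / steps  # open-loop plan, computed once from the initial state
    for _ in range(steps):
        if closed_loop:
            # Closed loop: re-read the (simulated) sensor and correct toward the target.
            command = gain * (target - position)
        else:
            # Open loop: replay the precomputed plan, ignoring where the robot actually is.
            command = planned_step
        position += command + disturbance
    return position


open_final = run_controller(20, 1.0, closed_loop=False)
closed_final = run_controller(20, 1.0, closed_loop=True)
print(f"open-loop error:   {abs(open_final - 1.0):.3f}")
print(f"closed-loop error: {abs(closed_final - 1.0):.3f}")
```

Under these assumptions the open-loop run ends far from the target because the push accumulates unnoticed, while the closed-loop run settles close to it. A small residual offset remains, which is typical of a purely proportional controller.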

Efficient On-Device Inference

One of the most technically challenging aspects of deploying AI models locally is ensuring that inference operations remain within acceptable power and thermal limits. DeepMind addresses this challenge through multiple layers of model optimization. These include:

- Quantization: Reducing model weights and activations from 32-bit floating point to 8-bit or even 4-bit representations to minimize memory bandwidth and improve cache utilization.

- Knowledge Distillation: Training a smaller model (the student) to replicate the behavior of a larger, high-performing model (the teacher), ensuring compactness without sacrificing accuracy.

- Weight Pruning: Removing non-essential neurons and connections to reduce computational overhead.

- Hardware-Aware Training: Fine-tuning the model in simulation environments that emulate target hardware conditions, ensuring that latency and energy consumption remain within operational bounds.

These strategies allow the AI model to run at over 30 frames per second on edge-grade computing modules, such as NVIDIA Jetson Orin, Google Coral, or Qualcomm Robotics RB5 platforms. This enables seamless integration into mobile robotic systems, including drones, warehouse automation units, and assistive household robots.
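Two of the optimizations listed above, quantization and weight pruning, are simple enough to sketch in a few lines. The following is a minimal illustration using symmetric per-tensor 8-bit quantization and unstructured magnitude pruning. These are standard textbook variants chosen for clarity; the source does not say which schemes DeepMind uses, and the function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0  # avoid dividing by zero for all-zero tensors
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integer codes."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, sparsity):
    """Zero out the smallest-magnitude fraction of weights (unstructured pruning)."""
    k = int(len(weights) * sparsity)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_magnitude[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


weights = [0.5, -1.27, 0.0, 1.0]  # toy values for illustration
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
print(codes, [round(r, 4) for r in restored])
print(prune_by_magnitude(weights, sparsity=0.5))
```

Each restored weight differs from the original by at most half a quantization step, which is the accuracy cost traded for a 4× smaller representation than 32-bit floats.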

Autonomy and Fault Recovery

A defining advantage of the model’s local architecture is its capacity for limited autonomy and fault recovery. Because the robot does not depend on external compute, it is able to continue performing tasks even when disconnected from the network. This capability is essential in mission-critical or high-mobility scenarios, such as search-and-rescue operations, planetary exploration, or remote infrastructure inspection.

Moreover, the model includes rudimentary anomaly detection modules based on self-supervised learning techniques. These modules monitor sensor inputs for deviations from expected distributions and trigger fallback behaviors—such as entering a low-power mode, requesting human intervention, or reverting to a safe pose. Although not a replacement for full fault diagnosis systems, this feature significantly enhances the safety and reliability of robots operating autonomously in the field.
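The monitor-and-fallback pattern described above can be sketched as follows. Note the substitution: the source describes modules based on self-supervised learning, whereas this example uses a plain z-score check against a recorded baseline as a much simpler stand-in. `SensorMonitor`, `choose_behavior`, the threshold, and the behavior labels are all hypothetical.

```python
import statistics


class SensorMonitor:
    """Flags readings that deviate strongly from a baseline of known-good samples."""

    def __init__(self, baseline, threshold=3.0):
        self.mean = statistics.fmean(baseline)
        self.stdev = statistics.stdev(baseline)
        self.threshold = threshold  # allowed deviation, in standard deviations

    def is_anomalous(self, reading):
        return abs(reading - self.mean) > self.threshold * self.stdev


def choose_behavior(monitor, reading):
    """Pick a fallback when the reading looks out of distribution, else carry on."""
    return "revert_to_safe_pose" if monitor.is_anomalous(reading) else "continue_task"


# Toy joint-torque readings recorded during normal operation (illustrative values).
monitor = SensorMonitor([1.0, 1.1, 0.9, 1.0, 1.05, 0.95])
print(choose_behavior(monitor, 1.1))
print(choose_behavior(monitor, 2.0))
```

A real deployment would likely monitor many channels jointly and choose among several fallbacks (low-power mode, human intervention, safe pose), but the structure of detect-then-degrade stays the same.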

Scalability and Model Variants

To accommodate a variety of hardware platforms and use cases, DeepMind has released multiple size variants of the model—ranging from a compact “micro” version for low-power IoT bots to a more capable “edge+” version for autonomous mobile robots and industrial arms. Each variant is trained on a shared dataset comprising millions of real-world interactions and simulation rollouts, ensuring robust generalization across diverse scenarios.

This modularity allows developers to choose a version that best matches their deployment environment and performance requirements. Importantly, all variants share a common interface and training pipeline, making it easier to transition from simulation to real-world deployment without rewriting control code or retraining policy components.

In summary, DeepMind’s local AI model reflects a masterful balance between architectural sophistication and deployment pragmatism. Its transformer-based structure, multimodal input handling, real-time control integration, and aggressive optimization for embedded inference make it a formidable foundation for autonomous robotics. By enabling robots to process information, make decisions, and act entirely within their own hardware envelope, this model opens the door to a new era of intelligent, decentralized machines.

Deployment in Real-World Robots – Use Cases and Performance

The development of Google DeepMind’s local AI model has transitioned swiftly from conceptual architecture to tangible deployment in real-world robotic systems. The robustness of this model is not merely theoretical but has been validated across a spectrum of robotic platforms performing complex tasks in diverse environments. This section offers a comprehensive examination of the deployment strategies, use cases, performance metrics, and the operational nuances observed during testing. From manufacturing lines and warehouse logistics to household assistance and healthcare, the capabilities of the locally-operating model have demonstrated exceptional adaptability and autonomy.

Deployment Strategy and Integration Architecture

Deploying an AI model locally within robots involves more than simply loading software onto hardware. DeepMind approached integration holistically, taking into account the constraints of memory, compute, and power while preserving task fidelity and safety guarantees. The local AI model was embedded within a modular software stack that interfaces directly with the robot’s low-level actuators and high-level mission planner.

This integration was facilitated through real-time operating systems (RTOS) and middleware like ROS 2 (Robot Operating System), which allowed for seamless communication between perception modules, control logic, and mechanical subsystems. In industrial robots, the AI model was loaded onto edge-grade processing units such as NVIDIA Jetson AGX Orin, while in smaller mobile robots, Qualcomm's Robotics RB5 platform was used for its power-efficient neural processing capabilities.

To ensure transferability, the model’s deployment pipeline was supported by extensive pre-training in simulation environments such as MuJoCo and Isaac Gym. These synthetic environments allowed DeepMind to validate behavior before transitioning to physical hardware, significantly reducing calibration time and avoiding costly trial-and-error learning in the field.

Use Case 1: Object Manipulation and Tool Use

One of the most significant validations of DeepMind’s local model was in robotic arms performing object manipulation tasks. These included sorting heterogeneous items, grasping deformable materials, and executing tool-assisted operations such as unscrewing bolts or using a spatula. In these scenarios, the AI model demonstrated the ability to infer object properties from visual and tactile data, plan efficient grasping trajectories, and adjust force dynamics in real time.

In warehouse settings, robotic arms equipped with the local model successfully executed pick-and-place tasks with a 94% success rate, even when the objects varied in shape, color, and weight. In tool-use experiments, robots demonstrated context-aware behavior, such as selecting the appropriate tool for a given task based on natural language instruction. This level of reasoning, performed without cloud support, underscores the model’s embedded general intelligence and cross-modal alignment.

Use Case 2: Autonomous Navigation and Obstacle Avoidance

Mobile robots operating in dynamic environments—such as delivery robots, floor-cleaning units, and hospital assistants—require constant situational awareness and rapid decision-making to avoid collisions and fulfill their missions. DeepMind’s local AI model, deployed in autonomous ground robots, performed exceptionally well in navigation tasks across indoor and semi-structured outdoor environments.

The model fused LIDAR, vision, and inertial data to create local semantic maps, which were updated in real time as the robot moved through its environment. This allowed for path planning with both spatial awareness and semantic understanding. For instance, the robot could distinguish between static obstacles like furniture and dynamic entities like people, reacting accordingly. Performance benchmarks indicated an 89% success rate in reaching designated waypoints in unfamiliar environments without any human intervention or remote compute support.

In hospitals, the same robotic platform was used to deliver medications across multiple floors, dynamically adjusting its routes based on corridor congestion and elevator availability. Because any patient-related environmental data was processed on the robot rather than sent to external servers, the deployment was easier to align with HIPAA privacy requirements while maintaining functionality.

Use Case 3: Home Robotics and Assistive Tasks

Perhaps the most compelling use case for the local AI model lies in domestic environments, where user privacy, limited bandwidth, and varied tasks necessitate high levels of autonomy. DeepMind collaborated with consumer robotics developers to integrate the model into household assistant robots capable of performing chores such as tidying, organizing, vacuuming, and responding to verbal commands.

In these deployments, the model demonstrated multi-tasking abilities: recognizing objects in cluttered environments, interpreting spoken instructions, and dynamically reprioritizing actions. For example, when given a command like “Clean the living room and put away the toys,” the robot decomposed the instruction into subtasks and executed them sequentially, adjusting behavior based on feedback (e.g., if a toy was under a chair, it maneuvered to retrieve it).

The success rate for chore completion was noticeably lower, around 76%, which is attributed to the inherently unpredictable nature of household environments and to object occlusions. Nonetheless, the model’s performance was deemed operationally viable, particularly because all data was processed on-device, maintaining user privacy and responsiveness.

| Use Case | Success Rate | Response Time | Energy Efficiency | Notable Challenges |
|---|---|---|---|---|
| Object Manipulation | 94% | 0.8 s | High | Irregular shapes, occlusion |
| Autonomous Navigation | 89% | 1.2 s | Medium | Dynamic obstacles, drift |
| Tool Use | 82% | 1.5 s | Medium | Dexterity, tool selection |
| Household Chores | 76% | 1.8 s | Low | Clutter, ambiguous commands |

Error Handling and Adaptive Learning in the Field

An often-overlooked aspect of robotic deployment is fault tolerance and the ability to learn from mistakes. The local AI model incorporates basic anomaly detection and adaptive behaviors that enable real-time error correction. For instance, if a grasp fails due to an unexpected object property, the robot can retry using an alternate strategy. In navigation scenarios, the robot re-plans its path upon detecting blocked routes or inconsistent map data.

Though the model does not yet support full online learning due to hardware constraints, it includes an experience replay buffer that allows periodic offline fine-tuning. This hybrid approach ensures continuous improvement without compromising safety or predictability during live operation.
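An experience replay buffer of the kind mentioned above is, at its simplest, a fixed-size queue of past interactions that can be sampled later for fine-tuning. The sketch below is a minimal version built on Python's `collections.deque`; the `ReplayBuffer` class and the (observation, action, outcome) record layout are assumptions for illustration, since the source does not describe the buffer's contents.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of past interactions; the oldest entries are evicted first."""

    def __init__(self, capacity):
        self._items = deque(maxlen=capacity)

    def add(self, observation, action, outcome):
        self._items.append((observation, action, outcome))

    def sample(self, batch_size, seed=None):
        """Draw a random batch (without replacement) for offline fine-tuning."""
        rng = random.Random(seed)
        return rng.sample(list(self._items), min(batch_size, len(self._items)))

    def __len__(self):
        return len(self._items)


buffer = ReplayBuffer(capacity=3)
for step in range(5):
    buffer.add(f"obs{step}", "grasp", step % 2 == 0)  # toy records
print(len(buffer), buffer.sample(2, seed=0))
```

Bounding the buffer keeps memory use predictable on embedded hardware, and deferring the actual fine-tuning to an offline window keeps live behavior unchanged between updates.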

Validation Metrics and Comparative Benchmarks

Across all test environments, DeepMind employed a consistent framework of validation metrics: task success rate, energy consumption per task, inference latency, and resilience to environmental perturbations. Compared to traditional cloud-based models, the local AI model achieved:

- A 5–7× reduction in latency

- A 30–40% reduction in power consumption

- Comparable task success rates with fewer failures due to network dependency

This demonstrates not only the functional robustness of the model but also its viability as a scalable solution for real-world applications. When scaled across fleets of robots, these performance gains translate into significant operational cost savings, enhanced reliability, and better user experience.

In conclusion, the real-world deployment of DeepMind’s local AI model across varied robotic platforms underscores its versatility, efficiency, and transformative potential. By performing sophisticated tasks in industrial, healthcare, and domestic domains without reliance on the cloud, the model validates a new paradigm in robotics: one where intelligence is localized, responsive, and embedded at the edge.

Strategic Implications – Why Local AI is the Future of Robotics

The transition from cloud-reliant artificial intelligence to locally-operating models signifies a profound strategic inflection point for the robotics industry. While much of the discourse surrounding AI in robotics has traditionally centered on model sophistication and task generalization, Google DeepMind’s deployment of a local AI model reveals a parallel priority: architectural sovereignty and real-time operability. As this section elucidates, the strategic implications of this shift extend far beyond latency reduction. They impact issues of privacy, scalability, resilience, energy consumption, geopolitical independence, and commercial differentiation. Collectively, these dimensions underscore why local AI is not merely an optimization—but a necessity—for the next era of intelligent machines.

Latency and Responsiveness as Competitive Differentiators

At the core of the rationale for local AI is responsiveness. Robots operating in dynamic, human-populated environments—such as homes, hospitals, and manufacturing floors—must respond to stimuli with minimal delay. Cloud-based models, despite their computational prowess, introduce unavoidable latencies due to transmission delays and network congestion. Even with high-speed 5G connectivity, end-to-end inference latencies can exceed acceptable thresholds for safety-critical or time-sensitive operations.

Local AI models, by contrast, can operate with latencies as low as 20–35 milliseconds, enabling near-instantaneous response to environmental stimuli. This responsiveness not only improves performance metrics such as success rates and task throughput but also increases user trust and safety. In industrial settings, it can mean the difference between a productive workflow and a catastrophic failure. For consumer applications, it determines whether the product feels intuitive or frustrating.
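A short back-of-the-envelope calculation shows what these latencies mean physically. The sketch below combines the 20–35 ms figure with the 5–7× latency reduction and the 30 frames-per-second rate reported earlier. It assumes those figures describe the same kind of measurement, which the source does not state, and the 1.5 m/s robot speed is a hypothetical value.

```python
def implied_cloud_latency_ms(local_ms, reduction_factor):
    """Cloud latency implied by a local latency and a claimed reduction factor."""
    return local_ms * reduction_factor


def distance_travelled_m(speed_m_s, latency_ms):
    """Distance a robot covers while waiting for one decision."""
    return speed_m_s * latency_ms / 1000.0


frame_budget_ms = 1000.0 / 30  # time available per frame at 30 frames per second
speed = 1.5                    # assumed robot speed in m/s (hypothetical)

cloud_low = implied_cloud_latency_ms(20, 5)   # best case: fastest local, smallest factor
cloud_high = implied_cloud_latency_ms(35, 7)  # worst case: slowest local, largest factor

print(f"frame budget: {frame_budget_ms:.1f} ms")
print(f"implied cloud latency: {cloud_low}-{cloud_high} ms")
print(f"blind travel, local (35 ms): {distance_travelled_m(speed, 35):.3f} m")
print(f"blind travel, cloud ({cloud_high} ms): {distance_travelled_m(speed, cloud_high):.3f} m")
```

Under these assumptions, a robot moving at walking pace travels a few centimetres between local decisions but tens of centimetres while waiting on a slow cloud round trip, which is the practical difference in a space shared with people.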

Furthermore, responsiveness becomes a competitive differentiator in markets where user expectations are shaped by consumer electronics standards—fast, seamless, and error-free interactions. Manufacturers who equip their robotic systems with embedded intelligence will thus be better positioned to deliver premium user experiences and justify higher price points.

Privacy and Data Sovereignty

A key advantage of on-device inference is the enhancement of data privacy. Cloud-dependent AI systems inevitably involve transmitting sensitive information, such as video feeds, audio commands, or biometric inputs, to external servers for processing. This creates inherent vulnerabilities in terms of data leakage, surveillance, or misuse, especially in jurisdictions with strict data protection laws such as the European Union's General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA).

By processing all sensor data locally, DeepMind’s model mitigates these risks and simplifies compliance. Robots can now be deployed in privacy-sensitive environments—such as elderly care facilities, private homes, and hospitals—without raising concerns over surveillance or data breaches. This design philosophy is also aligned with the growing global emphasis on digital sovereignty, where nations and enterprises seek greater control over their data infrastructure.

For organizations operating across international borders, local AI reduces the complexity of compliance with regional data laws, avoiding costly audits, fines, or reputational damage. It also fosters user trust—an intangible yet crucial asset for adoption in sensitive domains like healthcare, education, and home automation.

Robustness and Resilience in Unpredictable Environments

Another critical advantage of local AI is operational resilience. Robots relying on constant cloud connectivity are inherently vulnerable to network outages, latency spikes, or data center disruptions. These dependencies can be particularly problematic in environments with unstable or constrained bandwidth—such as construction sites, agricultural fields, military zones, or disaster-stricken regions.

Local AI ensures that robots remain fully functional even when disconnected. By decoupling cognition from connectivity, the model enables a degree of autonomy previously unachievable with cloud-bound architectures. This is particularly advantageous for mission-critical applications, including search-and-rescue operations, autonomous drones, and remote maintenance systems.

Moreover, local models can be hardened against cyberattacks. Since there is no persistent connection to an external server, the attack surface is significantly reduced. This resilience extends to natural disasters, where communication infrastructure may be impaired. Robots equipped with local AI can continue to operate, assist, and adapt, playing a pivotal role in emergency response and continuity of operations.

Energy Efficiency and Environmental Sustainability

As the robotics industry scales, so too does its environmental footprint. Cloud-based AI incurs considerable energy costs—not just on the client device, but within the data centers that process, store, and transmit information. These facilities require enormous power for cooling, redundancy, and round-the-clock operation, much of which is derived from non-renewable energy sources.

Local AI, while computationally constrained, is far more energy-efficient at scale. By limiting transmission and central processing, robots powered by on-device models consume less bandwidth, generate less heat, and reduce the need for persistent server-side computation. This aligns with global sustainability goals and supports enterprises seeking to minimize their carbon impact.

Additionally, hardware-aware training and model compression strategies further reduce energy consumption during inference. These optimizations—combined with advanced power management on robotics platforms—enable robots to function longer on a single battery charge, extend device lifespans, and support greener deployments in off-grid or solar-powered environments.

Strategic Autonomy and Geopolitical Considerations

In an era of increasing geopolitical fragmentation, local AI also offers strategic advantages to companies and governments alike. Nations concerned about dependency on foreign cloud providers or the extraterritorial reach of foreign data laws are prioritizing self-contained, sovereign AI solutions. This is particularly true in sectors such as defense, critical infrastructure, and aerospace, where supply chain integrity and autonomy are non-negotiable.

By enabling robots to operate independently of centralized servers, local AI empowers countries and corporations to maintain functional autonomy over their robotic assets. This decentralization reduces exposure to political risks, sanctions, or market instability and supports the development of region-specific AI standards and capabilities.

For robotics firms, this means a competitive edge in public sector contracts, international procurement, and regulatory approval processes. It also opens new markets in regions with limited cloud infrastructure but strong demand for autonomous systems.

Commercialization and Developer Ecosystem Growth

Finally, the move toward local AI is catalyzing new business models and ecosystem dynamics. Robotics-as-a-Service (RaaS) platforms can now offer “offline-first” deployments with local compute, enabling field operation subscriptions that do not depend on continuous connectivity. Consumer-facing robotics products can emphasize privacy and edge intelligence as differentiators, appealing to increasingly tech-savvy buyers.

For developers, the demand for hardware-optimized models, real-time inference pipelines, and low-footprint training techniques creates fertile ground for innovation. Open-source tools and SDKs tailored to local inference—such as TensorFlow Lite, ONNX Runtime, and NVIDIA TensorRT—are proliferating, lowering the barrier to entry for startups and independent engineers.

As adoption scales, economies of learning and hardware specialization will drive costs down and performance up. This virtuous cycle will reinforce local AI as the default modality for robotics—analogous to the way smartphones shifted from cloud-reliant assistants to fully functional pocket computers.

In sum, the strategic implications of DeepMind’s locally-operating AI model are both far-reaching and multifaceted. From latency reduction and privacy compliance to resilience, sustainability, and geopolitical independence, local AI addresses critical bottlenecks that have long constrained the robotics industry. As companies and countries seek to deploy intelligent machines at scale, the case for localized intelligence will grow stronger, not just as a technological preference but as a strategic necessity.

Challenges, Limitations and the Road Ahead

While Google DeepMind’s local AI model represents a notable advance in robotics, its deployment and broader adoption are not without significant limitations. The benefits of low-latency processing, enhanced privacy, and greater autonomy are balanced by technical, operational, and economic challenges that remain to be addressed. This section examines the constraints currently facing local AI deployment, including issues of computational limits, generalization capacity, data availability, safety assurance, and developer accessibility. It also explores ongoing research directions and offers a forward-looking perspective on how the industry might evolve to overcome these barriers.

Hardware Constraints and Model Complexity Trade-offs

One of the most immediate limitations of running AI models locally is the inherent constraint imposed by hardware. Even the most advanced edge processing units—such as NVIDIA Jetson AGX Orin, Apple Neural Engine, or custom ASICs—cannot match the computational power, memory bandwidth, or energy availability of cloud-based systems. As a result, local models must be aggressively optimized in terms of size and complexity.

This trade-off limits the depth of the model's reasoning and the fidelity of perception, particularly when handling multimodal inputs. Tasks requiring high-resolution vision, real-time 3D mapping, or nuanced natural language understanding are either pared down or require model variants with specialized capabilities. This specialization, while necessary for efficiency, can reduce generalizability across diverse use cases.

Moreover, edge deployment raises thermal management issues. Many robots operate in compact, enclosed environments where heat dissipation is a concern. Sustained high-performance inference can cause thermal throttling, compromising response times and operational continuity. Until substantially more efficient hardware arrives, with neuromorphic processors as one candidate, these trade-offs between power, performance, and portability will remain a fundamental challenge.

Generalization and Transfer Learning Limitations

While DeepMind’s model demonstrates impressive generalization across certain tasks, this capability is still narrow relative to the aspirations of truly general-purpose robotics. Many local AI models are trained on specific datasets under controlled conditions and may struggle to adapt to novel environments or object categories without retraining.

Transfer learning—where a pre-trained model is fine-tuned for new tasks with minimal data—is still difficult to implement efficiently on-device. Fine-tuning typically requires considerable compute power and memory, which most edge devices cannot support in real time. This hinders the robot’s ability to evolve or personalize its behavior post-deployment based on user feedback or situational context.

To address this, hybrid models combining local inference with periodic cloud-based updates are being explored. These systems cache learning signals during operation and upload them when a secure connection becomes available. However, this approach reintroduces a dependency on connectivity and must be carefully designed to preserve the autonomy and privacy benefits of local AI.
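The caching behavior described here can be sketched as a bounded buffer that is drained only while a connection is available. The class name and the `is_connected` and `upload` callables are hypothetical placeholders for whatever a real system would provide.

```python
from collections import deque


class LearningSignalCache:
    """Holds learning signals on-device and uploads them when a link is available."""

    def __init__(self, capacity=1000):
        # A bounded deque drops the oldest signal once capacity is reached.
        self._buffer = deque(maxlen=capacity)

    def record(self, signal):
        self._buffer.append(signal)

    def flush(self, is_connected, upload):
        """Upload buffered signals in order; return how many were sent."""
        if not is_connected():
            return 0
        sent = 0
        while self._buffer:
            # Peek first so a failed upload leaves the signal in the buffer.
            if not upload(self._buffer[0]):
                break
            self._buffer.popleft()
            sent += 1
        return sent

    def __len__(self):
        return len(self._buffer)


cache = LearningSignalCache(capacity=3)
for step in range(4):
    cache.record({"step": step, "reward": 1.0})

uploaded = []
sent_offline = cache.flush(lambda: False, lambda s: uploaded.append(s) or True)
sent_online = cache.flush(lambda: True, lambda s: uploaded.append(s) or True)
```

Because inference never waits on `flush`, the robot stays fully functional offline. The fixed capacity keeps memory use bounded during long disconnected periods, at the cost of discarding the oldest signals.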

Data Availability and Synthetic Simulation Bias

High-quality, diverse training data is essential to building robust AI models. However, acquiring sufficient real-world data for robotics—particularly in edge deployment scenarios—is expensive, time-consuming, and often restricted by privacy laws. As a result, much of the training relies on synthetic data and simulation environments, such as DeepMind Lab, MuJoCo, or Unity.

While simulation accelerates development and testing, it introduces distributional biases. Simulated environments may fail to capture the full complexity of real-world physics, textures, lighting conditions, or human behavior. This reality gap limits the transferability of model performance from virtual to physical domains—a phenomenon often referred to as the “sim2real” problem.

Further complicating matters, domain adaptation techniques, which are used to bridge the gap between synthetic and real-world data, are still maturing. Many of them rely on extensive training (in some cases adversarial) and on validation across environments to ensure consistent behavior, which adds to development time and resource overhead.
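A related and widely used technique for narrowing the reality gap is domain randomization, in which simulator parameters are resampled for every training episode so the model does not overfit to one rendering of the world. The sketch below illustrates the idea only. The parameter names and ranges are hypothetical, and a real setup would pass the sampled values to a simulator such as MuJoCo.

```python
import random

# Hypothetical parameter ranges; real ranges would be tuned per robot and simulator.
PARAM_RANGES = {
    "friction": (0.4, 1.2),
    "light_intensity": (0.3, 1.5),
    "object_mass_kg": (0.05, 2.0),
    "camera_noise_std": (0.0, 0.05),
}


def sample_sim_params(rng):
    """Draw one randomized simulator configuration for a training episode."""
    return {name: rng.uniform(low, high)
            for name, (low, high) in PARAM_RANGES.items()}


rng = random.Random(0)  # fixed seed so the sampled episodes are reproducible
episodes = [sample_sim_params(rng) for _ in range(3)]
```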

Safety, Reliability, and Certification Standards

As robots increasingly take on roles in public and private spaces, ensuring their safety becomes paramount. Local AI models must not only perform well under normal conditions but also degrade gracefully under failure modes, such as sensor dropouts, unexpected obstacles, or adversarial inputs.
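Graceful degradation under sensor dropouts can be sketched as a small supervisor that checks how stale each sensor reading is and limits the learned policy's output accordingly. The mode names, age thresholds, and speed cap below are assumptions for illustration, and the speed command is assumed to be non-negative.

```python
def select_mode(sensor_ages_s, warn_age_s=0.1, max_age_s=0.5):
    """Choose an operating mode from the age (in seconds) of each sensor's last reading."""
    worst_age = max(sensor_ages_s.values())
    if worst_age > max_age_s:
        return "stop"    # data too stale to act on safely
    if worst_age > warn_age_s:
        return "slow"    # keep moving, but conservatively
    return "normal"


def apply_mode(policy_speed, mode, slow_cap=0.2):
    """Limit the learned policy's speed command according to the current mode."""
    if mode == "stop":
        return 0.0
    if mode == "slow":
        return min(policy_speed, slow_cap)
    return policy_speed


healthy = select_mode({"camera": 0.03, "lidar": 0.05})
degraded = select_mode({"camera": 0.30, "lidar": 0.05})
failed = select_mode({"camera": 2.00, "lidar": 0.05})
```

The supervisor is deliberately rule-based, so its behavior under failure can be inspected and tested independently of the learned model it wraps.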

Safety validation is more difficult when models are adaptive or learned rather than rule-based. Standards bodies such as ISO, and regulators such as the FAA and FDA, expect rigorous testing, traceability, and fail-safes before autonomous systems are cleared for use in sensitive sectors like transportation, medicine, or manufacturing.

Moreover, the lack of universal certification standards for AI in robotics makes it harder for developers and enterprises to navigate compliance requirements. While progress is being made—e.g., ISO 13482 for personal care robots and ISO/TS 15066 for collaborative industrial robots—these frameworks were not originally designed for systems driven by dynamic, learned behaviors. The field will need updated standards and risk assessment methodologies tailored to the complexities of local AI.

Developer Accessibility and Tooling Maturity

Despite growing interest, developing and deploying local AI models still demands a specialized skill set. The current development ecosystem is fragmented, with various toolchains (e.g., TensorFlow Lite, ONNX, Edge Impulse, NVIDIA Isaac SDK) each catering to specific hardware or use cases. This creates steep learning curves for new entrants and increases integration costs for enterprises.

Additionally, the absence of unified benchmarking tools for local inference further complicates model evaluation. Developers must independently test for latency, accuracy, energy efficiency, and fault tolerance across platforms, often duplicating effort and introducing inconsistency. This lack of standardization slows innovation and hinders cross-industry collaboration.
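In the absence of a shared benchmark, a team can at least measure latency the same way on every platform. The harness below is a minimal sketch: `fake_infer` is a stand-in for a real model call, and the warm-up and run counts are arbitrary defaults.

```python
import math
import statistics
import time


def benchmark_latency(infer, sample, warmup=5, runs=50):
    """Time repeated calls to infer(sample) and summarize latency in milliseconds."""
    for _ in range(warmup):
        infer(sample)  # warm-up calls are not timed

    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    latencies_ms.sort()
    # Nearest-rank 95th percentile.
    p95_index = math.ceil(0.95 * len(latencies_ms)) - 1
    return {
        "runs": runs,
        "mean_ms": statistics.mean(latencies_ms),
        "p50_ms": statistics.median(latencies_ms),
        "p95_ms": latencies_ms[p95_index],
    }


def fake_infer(values):
    """Placeholder workload standing in for a real on-device model."""
    return sum(v * v for v in values)


report = benchmark_latency(fake_infer, list(range(1000)))
```

Reporting a tail percentile alongside the mean matters for robots, because an occasional slow inference can be more disruptive than a slightly higher average.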

To democratize local AI development, more accessible toolkits, training workflows, and deployment platforms are required. Initiatives such as Google's Coral Dev Board (built around the Edge TPU), Meta’s Open Source Robotics Lab, and DeepMind's public APIs represent promising steps, but much work remains to support a vibrant, inclusive development ecosystem.

The Road Ahead: Research Directions and Industry Outlook

Despite these challenges, the outlook for local AI in robotics remains optimistic. Active research is underway to address the existing limitations. Notable directions include:

- Federated Learning: Enabling robots to learn collectively across distributed environments without sharing raw data, preserving privacy while improving generalization.

- Continual Learning: Designing models that adapt incrementally to new data without catastrophic forgetting.

- Neuromorphic Computing: Leveraging brain-inspired architectures to achieve high-efficiency, low-power inference for edge devices.

- Self-Supervised Learning: Reducing dependence on labeled data by enabling robots to learn directly from raw interactions with the environment.

- Model-agnostic Safety Layers: Embedding interpretable, rule-based safety protocols alongside learned models for hybrid governance.
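To make the first of these directions concrete, the core of federated learning is often a weighted average of model updates, commonly called federated averaging. The sketch below shows only that aggregation step, with model weights represented as plain lists. The function name and the sample numbers are illustrative assumptions.

```python
def federated_average(client_updates):
    """Average client weight vectors, weighted by each client's sample count.

    client_updates is a list of (weights, num_samples) pairs. Only the
    weights leave each robot, never the raw data they were trained on.
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples == 0:
        raise ValueError("at least one client must contribute samples")

    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, num_samples in client_updates:
        for i, w in enumerate(weights):
            averaged[i] += w * num_samples / total_samples
    return averaged


# Two hypothetical robots: the second saw three times as much data.
updates = [([1.0, 2.0], 1), ([3.0, 4.0], 3)]
global_weights = federated_average(updates)
```

Weighting by sample count lets robots that have seen more data contribute more to the shared model, while each robot's raw observations stay on the device.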

On the commercial side, the push for intelligent automation across sectors—from logistics and agriculture to healthcare and consumer electronics—will continue to drive demand for robust, autonomous systems. As local AI matures, it is poised to become the default architecture for such deployments, especially in regions with limited connectivity or stringent privacy regulations.

Overall, while DeepMind’s local AI model is a pioneering achievement, its widespread adoption requires a concerted effort to overcome technical, regulatory, and infrastructural barriers. The path forward involves collaborative innovation, inclusive tooling, and the development of standards that ensure both safety and scalability. The transition to intelligent machines operating independently at the edge is not without friction, but the trajectory is clear: the future of robotics is not only smart but local.

Conclusion: Can DeepMind’s Local AI Disrupt the Robotics Landscape?

The advent of Google DeepMind’s locally-operating AI model represents a pivotal advancement in the evolution of intelligent machines. It reflects a strategic reorientation in AI and robotics—one that moves beyond the centralized cloud paradigm toward autonomous, embedded, and context-aware systems. In doing so, it fundamentally redefines how robots perceive, plan, and act within their environments. Rather than relying on distant servers to think for them, these next-generation robots are now empowered to reason and respond directly, with minimal latency and maximum resilience.

As outlined in this post, the architectural design of DeepMind’s model balances performance against efficiency. By leveraging a compact, multimodal transformer architecture optimized for edge inference, the model supports sophisticated behaviors on limited hardware. Its real-world deployments have demonstrated robust performance in a wide range of use cases, from precision object manipulation in factories to autonomous navigation in hospitals and adaptive task execution in home environments. The accompanying performance benchmarks support the model’s viability across key metrics such as response time, energy efficiency, and task success rate.

Strategically, the shift toward local AI holds considerable promise. It enhances responsiveness, strengthens data privacy, ensures operational continuity in disconnected settings, and supports digital sovereignty in an increasingly fragmented geopolitical landscape. Moreover, it aligns with emerging trends in green computing and sustainable AI by reducing dependency on energy-intensive data centers. The rise of local AI in robotics is not merely an engineering milestone—it is a response to evolving societal, regulatory, and economic demands.

Nevertheless, the road ahead is not without its challenges. Hardware limitations, safety validation, model generalization, and the maturity of developer tooling are all active friction points that must be addressed. Yet the industry is already responding with advancements in federated learning, continual adaptation, self-supervised training, and the standardization of safety protocols. These innovations will collectively accelerate the maturation and scalability of local AI in robotics.

In summary, DeepMind’s local AI model is not just a technological artifact—it is a blueprint for the future of robotics. By enabling robots to act independently of the cloud while maintaining intelligence, efficiency, and safety, this paradigm paves the way for a more decentralized and resilient robotics ecosystem. As companies, researchers, and governments invest further in this trajectory, the question is no longer whether local AI will become mainstream—it is how quickly the industry can adapt and evolve to fully realize its transformative potential.
