Germany Moves to Block Chinese AI App DeepSeek: Privacy, Power, and the Future of Global AI

In an era defined by the global race for artificial intelligence supremacy, collisions between innovation and regulatory sovereignty have become increasingly hard to avoid. On June 27, 2025, Berlin's Commissioner for Data Protection and Freedom of Information, Meike Kamp, asked Apple and Google to block the Chinese AI app DeepSeek from their app stores in Germany, reporting it to both companies as illegal content under the EU's Digital Services Act. Citing violations of the European Union's stringent General Data Protection Regulation (GDPR), Germany has joined Italy in taking formal action to remove a widely used Chinese AI chatbot from public access. The decision signals a deepening rift in the digital landscape, where data privacy, national security, and geopolitical alignment are redefining access to next-generation technologies.

DeepSeek, developed by the Hangzhou-based company of the same name and backed by the Chinese hedge fund High-Flyer, has risen to prominence as a leading AI assistant application. Since its release in early 2025, it has been installed millions of times across Android and iOS devices, offering users fast and seemingly intelligent responses, multilingual support, and low-latency performance that rival the best of Western models like OpenAI's ChatGPT, Google's Gemini, and Anthropic's Claude. However, beneath the app's polished interface lies a core concern: data governance. According to German regulators, DeepSeek collects and transmits sensitive user information, including IP addresses, interaction histories, device identifiers, and potentially biometric metadata, to servers located in China. The concern is not simply about data collection per se, but about who controls that data once it leaves European jurisdiction.

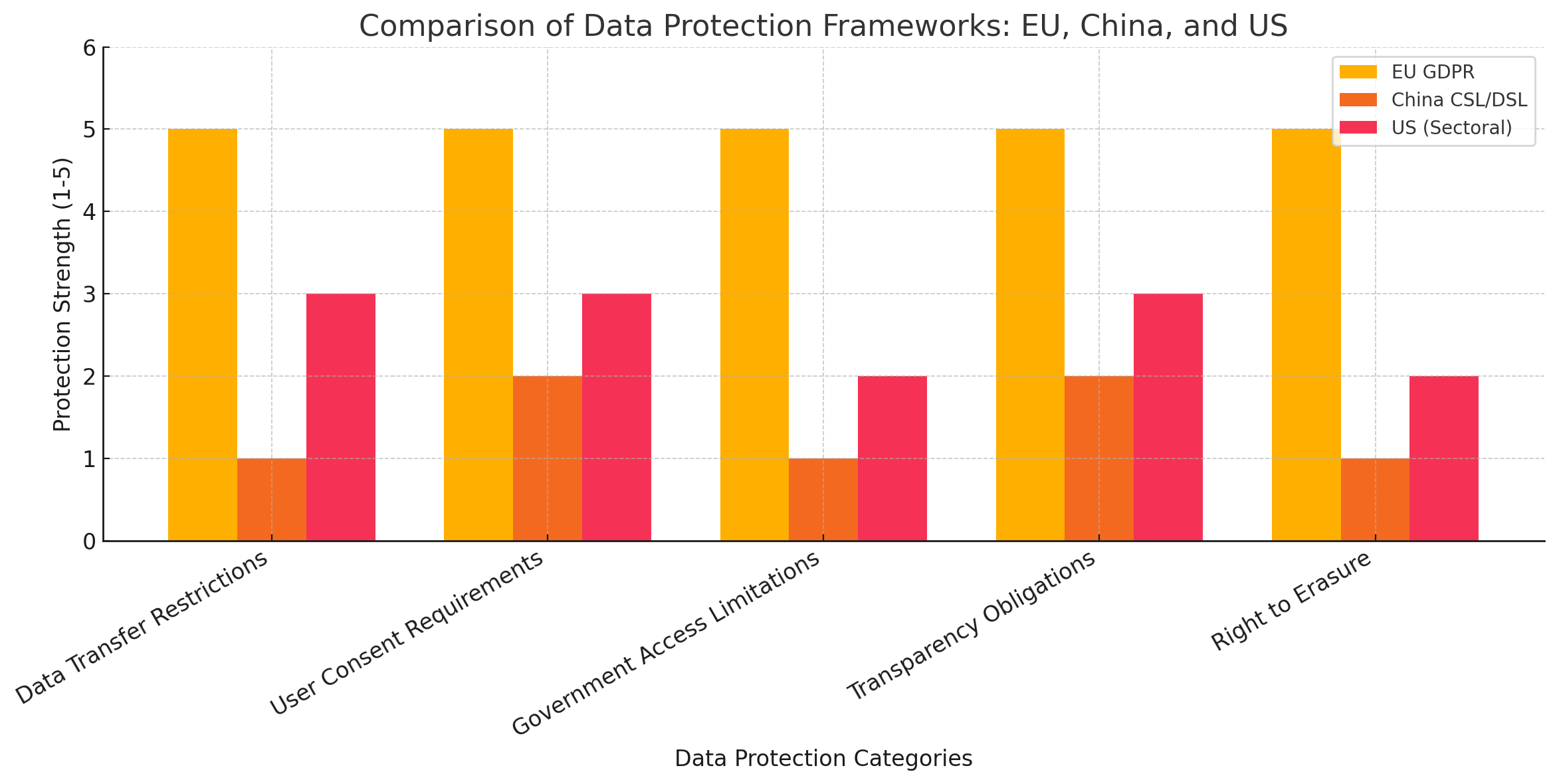

The German government’s intervention is grounded in more than just technical compliance with the GDPR. At stake is the question of whether AI platforms developed under vastly different legal and political regimes should be permitted unfettered access to Western digital ecosystems. Chinese laws governing data access and cybersecurity effectively enable state agencies to compel private firms to disclose stored information. This foundational incompatibility with European data protection principles has triggered not only regulatory scrutiny but also broader questions about strategic trust, ethical alignment, and digital sovereignty.

Germany’s directive follows a growing global pattern. Earlier in 2025, countries such as Australia, the Netherlands, and South Korea imposed various restrictions on DeepSeek, often barring its use in government offices and public institutions. Italy went further by suspending DeepSeek from app stores entirely while it conducted a full-scale privacy audit. In the United States, lawmakers have called for a review of Chinese AI applications under national security statutes, though no official bans have been implemented thus far. As the regulatory pressure mounts, DeepSeek finds itself at the center of a geopolitical maelstrom that goes beyond its technical capabilities or commercial appeal.

This blog post delves into the implications of Germany's move, examining the app's data practices, the legal justifications cited, the international response, and the potential long-term outcomes for both DeepSeek and the global AI governance framework. In doing so, it sheds light on the broader challenges posed by the globalization of artificial intelligence, especially where it intersects with divergent national ideologies, legal doctrines, and technological ambitions.

DeepSeek’s Data Practices & Privacy Concerns

As artificial intelligence applications permeate nearly every facet of digital life, the collection, transmission, and protection of user data have emerged as central issues in determining their legitimacy and market access. DeepSeek, the Chinese-developed AI app currently facing mounting regulatory scrutiny across Europe, epitomizes this growing tension. While the application has achieved rapid user adoption owing to its intelligent conversational abilities and low-latency performance, its underlying data practices have raised alarm among privacy regulators, civil society advocates, and cybersecurity experts alike. At the core of the controversy lies a fundamental incompatibility between DeepSeek's operational model and the European Union's rigorous data protection standards.

Data Collection and Transfer Mechanisms

DeepSeek’s privacy policy, as dissected by privacy researchers and legal analysts, indicates that the application collects a range of user data well beyond what is typically expected of AI-driven chatbots. This includes, but is not limited to, unique device identifiers, IP addresses, language preferences, geolocation data, interaction histories, and possibly even biometric information such as voice recordings when voice input features are activated. These data are not processed exclusively on-device or within a decentralized architecture. Instead, they are transmitted to remote servers operated and maintained within the jurisdiction of the People's Republic of China.

The practice of storing data offshore, particularly in countries whose legal systems lack transparency and judicial independence, raises immediate red flags under the GDPR. Chapter V of the regulation permits transfers outside the EU only to countries the European Commission has found to offer an adequate level of protection, a status China does not have, or under appropriate safeguards such as Standard Contractual Clauses (SCCs) or Binding Corporate Rules (BCRs). In its landmark "Schrems II" ruling, the European Court of Justice clarified that such safeguards are not sufficient on their own: if the recipient country's laws deny EU citizens enforceable rights and effective remedies, the transfer is unlawful unless supplementary measures close that gap. DeepSeek has offered no public documentation suggesting such mechanisms are in place.
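The Chapter V decision path described above can be sketched as a small function. This is a minimal illustration under stated assumptions, not legal advice or any regulator's actual method: the `Transfer` type, the `transfer_basis` function, and the partial adequacy list are hypothetical, and the Article 49 derogations (such as explicit consent for occasional transfers) are deliberately left out.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical, partial sample of jurisdictions with an EU adequacy decision.
# The authoritative list is maintained by the European Commission; China is not on it.
ADEQUATE = {"AR", "CH", "GB", "IL", "JP", "KR", "NZ", "UY"}


@dataclass
class Transfer:
    destination: str                                   # ISO 3166 country code of the recipient
    safeguards: set[str] = field(default_factory=set)  # e.g. {"SCC"} or {"BCR"}
    local_law_ok: bool = False                         # outcome of a post-Schrems II transfer impact assessment


def transfer_basis(t: Transfer) -> str | None:
    """Return the Chapter V basis a transfer could rely on, or None if none applies."""
    if t.destination in ADEQUATE:
        return "adequacy decision (Art. 45)"
    if t.safeguards & {"SCC", "BCR"}:
        # Schrems II: contractual safeguards only count if the recipient
        # country's laws do not undermine them (or supplementary measures fix that).
        return "appropriate safeguards (Art. 46)" if t.local_law_ok else None
    return None


print(transfer_basis(Transfer("JP")))           # adequacy decision (Art. 45)
print(transfer_basis(Transfer("CN")))           # None
print(transfer_basis(Transfer("CN", {"SCC"})))  # None: SCCs alone are not enough
```

In this simplified model, the German regulator's objection corresponds to the last two calls: no adequacy decision, and no demonstrated safeguards that survive scrutiny of the recipient country's laws.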

Legal Incompatibilities with the GDPR

The German data protection authority’s move to block DeepSeek from app stores is predicated on two primary legal violations. First is the unauthorized transfer of user data to China without proper safeguards or legal justifications. Second is the opaque nature of the company’s privacy disclosures, which fall short of GDPR’s transparency obligations under Articles 12–14. End-users are not clearly informed about which categories of their personal data are processed, for what purposes, by whom, and under what legal basis. This undermines their ability to exercise fundamental rights, such as the right to access, rectify, or erase personal information.

Moreover, GDPR places a heavy emphasis on data minimization and purpose limitation—principles requiring that only the data strictly necessary for a given function be collected, and that it not be repurposed beyond the original intent without additional consent. DeepSeek’s sweeping data practices suggest a disregard for these principles, heightening concerns over potential secondary uses of European users' data for training models or even for state-level surveillance.
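Data minimization and purpose limitation can be pictured as an allow-list keyed by purpose. The sketch below is hypothetical: the purposes, field names, and `minimize` helper are invented for illustration and do not describe DeepSeek's actual data schema. It fails closed, so an undeclared purpose keeps no data at all.

```python
from __future__ import annotations

# Hypothetical mapping from a declared processing purpose to the fields
# strictly necessary for it. In practice this would come from the controller's
# documented record of processing activities.
NECESSARY_FIELDS: dict[str, set[str]] = {
    "answer_prompt": {"prompt_text", "language"},
    "account_login": {"email", "password_hash"},
}


def minimize(record: dict, purpose: str) -> tuple[dict, set[str]]:
    """Keep only the fields needed for `purpose`; report everything dropped.

    Unknown purposes map to an empty allow-list, so nothing is kept (fail closed).
    """
    allowed = NECESSARY_FIELDS.get(purpose, set())
    kept = {k: v for k, v in record.items() if k in allowed}
    dropped = set(record) - allowed
    return kept, dropped


raw = {
    "prompt_text": "Wie wird das Wetter morgen?",
    "language": "de",
    "device_id": "a1b2c3",
    "ip_address": "203.0.113.7",
    "keystroke_timings_ms": [112, 98, 130],
}
kept, dropped = minimize(raw, "answer_prompt")
print(kept)             # {'prompt_text': 'Wie wird das Wetter morgen?', 'language': 'de'}
print(sorted(dropped))  # ['device_id', 'ip_address', 'keystroke_timings_ms']
```

Reusing `raw` for model training would be a new purpose; under purpose limitation it would need its own entry and lawful basis rather than silently inheriting the fields collected for answering prompts.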

The contrast between GDPR and Chinese cybersecurity and intelligence laws further exacerbates the risk landscape. Under China's 2017 Cybersecurity Law, its 2017 National Intelligence Law, and its 2021 Data Security Law, companies can be legally obligated to provide stored data to, and otherwise cooperate with, state authorities upon request. These obligations apply to organizations operating in China, including foreign subsidiaries and joint ventures. Consequently, any personal data stored in China could be accessed by the Chinese government without judicial oversight or recourse for European users, an arrangement fundamentally at odds with GDPR's core tenets.

Algorithmic Transparency and Consent Deficiencies

Another major area of concern pertains to the opacity of DeepSeek's underlying algorithms and the consent framework surrounding their use. While many Western AI providers, such as OpenAI, Google, and Anthropic, have begun issuing technical documentation, transparency reports, and independent audit summaries, DeepSeek remains largely opaque about how it handles user data. Although the company has published a technical report on its R1 model and released the model weights openly, there is little publicly available information about R1's training datasets, data retention timelines, fine-tuning policies, or mechanisms for redress in case of model misuse.

Equally problematic is the manner in which DeepSeek obtains user consent. Consent, under GDPR, must be freely given, specific, informed, and unambiguous. In practice, DeepSeek's onboarding process offers users few opportunities to understand the scope and implications of their data usage. The language used in privacy prompts is vague and generic and, in some instances, not localized into all official EU languages, a shortcoming that undermines accessibility and legal clarity. Users are often defaulted into agreeing to broad data practices without any option to opt out of non-essential processing activities.
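The consent criteria above can be expressed as a checklist over a recorded consent event. This is a hypothetical sketch: `ConsentEvent` and `consent_problems` are invented names, and the checks are simplified readings of GDPR Article 4(11) (freely given, specific, informed, unambiguous), Recital 32 (silence, pre-ticked boxes, or inactivity do not count as consent), and Article 7(4) (conditioning a service on unnecessary processing). The language comparison is an illustrative heuristic for "informed", not a legal test.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ConsentEvent:
    purposes: tuple[str, ...]  # purposes the user was asked to agree to
    granular: bool             # could the user accept or refuse each purpose separately?
    notice_language: str       # language the privacy notice was shown in
    user_language: str         # language of the user's device or account
    affirmative_action: bool   # did the user actively tick or tap (no pre-ticked default)?
    service_conditional: bool  # was the service refused unless the user accepted non-essential processing?


def consent_problems(e: ConsentEvent) -> list[str]:
    """Return the consent criteria this event appears to fail (empty list = no issue found)."""
    problems = []
    if e.service_conditional:
        problems.append("not freely given")
    if len(e.purposes) > 1 and not e.granular:
        problems.append("not specific")
    if e.notice_language != e.user_language:
        problems.append("possibly not informed")
    if not e.affirmative_action:
        problems.append("not unambiguous")
    return problems


default_opt_in = ConsentEvent(
    purposes=("service", "analytics", "model_training"),
    granular=False,
    notice_language="en",
    user_language="de",
    affirmative_action=False,
    service_conditional=True,
)
print(consent_problems(default_opt_in))
# ['not freely given', 'not specific', 'possibly not informed', 'not unambiguous']
```

The `default_opt_in` event mirrors the onboarding pattern described above, in which users are defaulted into broad processing with no way to refuse non-essential uses.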

This lack of meaningful consent not only violates GDPR Articles 6 and 7 but also places DeepSeek in a vulnerable position legally, especially if class action lawsuits or collective redress mechanisms are initiated by privacy advocacy groups.

Risk of Model Retraining and Surveillance Capabilities

Beyond compliance failures, there is growing apprehension about how user data could be utilized to refine DeepSeek’s language models in ways that may contravene European values and security interests. The use of personal and conversational data for model training—especially without explicit consent—is already contentious in Western jurisdictions. In the context of a Chinese-operated AI system, such practices acquire an additional layer of concern: the potential for training data to be analyzed, filtered, or even weaponized in the service of foreign propaganda, behavioral prediction, or disinformation campaigns.

European cybersecurity agencies have noted that AI systems can be co-opted to gather soft intelligence about user behavior, interests, political affiliations, and personal vulnerabilities. Even if this is not DeepSeek’s express intent, the sheer volume of data collected, coupled with the lack of legal constraints in China, makes the app a latent vector for strategic exploitation. As trust in the provenance and management of data becomes a prerequisite for AI adoption, DeepSeek’s practices appear increasingly incompatible with European digital norms.

Public and Institutional Backlash

Public trust in AI systems is fragile, and news of privacy violations or data misuse can rapidly erode user confidence. DeepSeek’s lack of compliance with GDPR and its association with Chinese state influence have prompted widespread backlash across civil society. German media outlets, privacy watchdogs, and political stakeholders have been vocally critical of the app’s continued availability, framing its presence in app stores as a failure of enforcement by platform gatekeepers such as Apple and Google.

The fact that Germany, a country with some of the most robust privacy frameworks in the world, has stepped forward with a formal request for de-platforming indicates that DeepSeek is not just a regulatory concern but a political issue as well. The risk calculus has shifted: the cost of inaction now outweighs the perceived benefits of technological pluralism or market neutrality. This could set a precedent for further interventions, both by individual EU member states and by the European Commission itself.

In conclusion, DeepSeek’s data practices represent a case study in the widening chasm between AI innovation and responsible data governance. From unlawful data transfers and inadequate transparency to flawed consent frameworks and geopolitical vulnerabilities, the app embodies the challenges regulators face when assessing AI systems developed under foreign legal and political paradigms. As the digital ecosystem becomes increasingly borderless, DeepSeek’s operational model underscores the urgent need for harmonized, enforceable global standards in AI data protection.

Global Regulatory Pushback & Comparative Analysis

The regulatory response to DeepSeek’s expansion across international markets illustrates a rapidly evolving global consensus around data sovereignty, AI governance, and national cybersecurity. Although Germany’s directive to Apple and Google marks a high-profile European development, it is not an isolated event. Rather, it is part of a broader pattern of rising governmental scrutiny targeting Chinese-developed AI systems. From Asia to North America, public authorities are assessing the risks posed by AI tools operating outside their domestic legal frameworks—particularly when those tools originate from jurisdictions with expansive state surveillance capabilities.

International Restrictions on DeepSeek

Since its launch in early 2025, DeepSeek has been under review in multiple jurisdictions. Early adopters praised its powerful R1 language model, multilingual fluency, and responsiveness. However, its origin, architecture, and opaque data flows have provoked a wave of restrictions by democratic governments and regulators concerned about systemic privacy and national security risks.

Europe

In addition to Germany's regulatory intervention, Italy's data protection authority (Garante) took preemptive action in late January 2025, ordering DeepSeek blocked in Italy with immediate effect after the company told the regulator that European law did not apply to it. The Italian regulator cited concerns including the absence of an adequate legal basis for data collection and insufficient transparency about what data is collected, for what purposes, and whether it is stored in China. In June 2025, Italy's competition and consumer protection authority (AGCM) also opened an investigation into whether DeepSeek adequately warned users that its chatbot can generate inaccurate or misleading information.

The Netherlands followed with a ministerial advisory recommending the prohibition of DeepSeek on all government-issued mobile devices. This was part of a wider Dutch initiative to strengthen national cybersecurity postures following revelations about potential espionage vectors embedded in consumer-facing technology.

Meanwhile, the European Commission has expressed interest in developing a union-wide position on the critical questions DeepSeek raises about cross-border data transfers and AI accountability. The Commission is reportedly working on a set of guidelines that may trigger enforcement under the AI Act, Europe's landmark regulation establishing oversight mechanisms and risk categorization for AI systems, which entered into force in August 2024 and whose obligations are being phased in over the following years.

Asia-Pacific

In the Asia-Pacific region, concerns over Chinese AI influence have led to swift action. In February 2025, Australia's Department of Home Affairs issued a mandatory direction barring public sector employees from installing or using DeepSeek on government devices, stating that the app posed an unacceptable level of security risk. New Zealand followed with similar restrictions in June 2025.

South Korea, although traditionally more ambivalent about U.S.-China technology rivalry, issued a suspension on DeepSeek for all state-level agencies following a joint briefing by its National Intelligence Service (NIS) and the Ministry of Science and ICT. Taiwanese authorities, citing similar concerns, initiated their own ban, underscoring fears that user data could be exploited by adversarial state actors.

United States

In the United States, no government-wide federal ban had been issued as of June 2025, although agencies such as NASA and the U.S. Navy told staff not to use the app, and Congressional voices have grown louder. Bipartisan lawmakers have introduced the No DeepSeek on Government Devices Act, which, if passed, would bar the app from federal government devices. In parallel, the Federal Trade Commission (FTC) has initiated a preliminary investigation into whether DeepSeek misled consumers about its data practices, potentially violating Section 5 of the FTC Act.

State governments are also taking initiative. Texas became the first state to ban DeepSeek on government-issued devices in January 2025, and New York followed with a ban covering state government devices and networks. In California, apps that meet the thresholds of the California Consumer Privacy Act (CCPA), which the California Privacy Protection Agency enforces, must comply with its requirements, potentially forcing a reevaluation of their data processing protocols.

Comparative Overview of Regulatory Actions

To provide a structured view of the regulatory landscape surrounding DeepSeek, the following table outlines major national and supranational responses to the app across key jurisdictions:

This comparative snapshot reveals an emerging trend: governments are not only concerned with personal privacy in the consumer sense but increasingly with the systemic risk foreign AI platforms may pose to national resilience, public sector integrity, and democratic governance.

A New Geopolitical Lens on AI Regulation

The scrutiny surrounding DeepSeek also reflects a shift in how governments approach AI not just as a technological development but as a geopolitical variable. In previous decades, international regulation of software platforms typically involved post-market enforcement—fining companies after violations occurred. In contrast, the modern AI regulatory wave is increasingly preemptive, focused on excluding high-risk entities before they can entrench themselves within sensitive information ecosystems.

Much of this caution stems from recent geopolitical developments. The growing confrontation between the U.S. and China over semiconductor exports, 5G infrastructure, and quantum computing has spilled over into the AI domain. Western democracies increasingly see AI tools not just as products but as proxies for strategic influence. When data generated by citizens in Europe or North America are processed and stored in jurisdictions like China—where rule of law is subordinate to party oversight—it is viewed not as commercial inconvenience but as a strategic vulnerability.

This lens shapes enforcement behavior. Authorities are now more inclined to assess AI apps not just for their technical merit or consumer benefit, but for their provenance, end-use risks, and alignment with liberal democratic norms. DeepSeek, despite its impressive linguistic capabilities and real-time responsiveness, is seen as lacking the institutional and legal insulation necessary for trusted deployment in these environments.

Fragmented Enforcement and the Need for Coordination

Despite this global convergence of concern, enforcement remains largely fragmented. While some countries have pursued outright bans, others remain in a regulatory grey zone, relying on voluntary compliance by app platforms or engaging in passive monitoring without issuing formal decisions. This inconsistent enforcement presents challenges for both users and technology platforms.

For end-users, it creates uncertainty about what tools are safe or permissible. For platforms like Apple and Google, it introduces reputational and legal risk depending on how proactively they respond to national directives. Both companies have historically claimed to support data privacy and user protection, but they now face difficult decisions about balancing local compliance with global product integrity.

One possible path forward is enhanced multilateral cooperation. The G7, European Union, and Quad nations have each expressed interest in building shared AI governance principles. Including enforceable mechanisms for cross-border AI scrutiny—such as joint audits, shared registries of high-risk apps, or harmonized labeling systems—could help reduce regulatory fragmentation and streamline enforcement. Without such mechanisms, cases like DeepSeek will continue to spark unilateral responses that, while justified, risk creating a balkanized internet.

Precedent and Future Implications

The regulatory treatment of DeepSeek sets a powerful precedent. It signals to developers worldwide that access to lucrative digital markets will be conditional upon more than just innovation or performance metrics. Legal transparency, institutional accountability, and geopolitical neutrality may soon become preconditions for widespread AI adoption—especially in democratic states.

It also places pressure on Western AI firms. If regulators impose stricter rules on foreign AI platforms while tolerating similar lapses from domestic ones, accusations of digital protectionism may follow. Therefore, to preserve legitimacy, enforcement bodies must apply principles consistently, regardless of the developer’s nationality.

Ultimately, DeepSeek represents more than a regulatory case study—it is a bellwether for the future of AI globalization. Whether the world’s digital ecosystems become open and interoperable or fragmented and nationalized may depend on how consistently and collaboratively governments approach these novel challenges.

Tech‑Industry & Platform Response

The regulatory actions targeting DeepSeek have cast a spotlight on the roles that technology gatekeepers—specifically Apple and Google—play in shaping access to artificial intelligence applications. As app stores operated by these companies have become the de facto distribution infrastructure for mobile software, their policy decisions now carry geopolitical weight. In response to the German regulator’s request to block DeepSeek over alleged data protection violations, the tech industry finds itself at a critical intersection of corporate responsibility, compliance with national laws, and the global governance of AI.

1. Apple and Google: Gatekeepers in a Global Regulatory Landscape

Apple’s App Store and Google Play are not merely commercial venues; they are platforms that mediate access to billions of users and enforce baseline standards for privacy, security, and legal compliance. In the European context, these companies must adhere not only to national laws but also to the broader framework established by the European Union’s Digital Services Act (DSA) and the GDPR. These regulations impose a duty of care on digital platforms, particularly when distributing services that process personal data on a large scale.

In this environment, Germany’s request that Apple and Google delist DeepSeek raises important questions about the thresholds for removal. While both companies have acted swiftly in the past—for example, removing apps that violated COVID-19 misinformation rules or failed to meet child privacy standards—the request to de-platform DeepSeek due to data transfer concerns places them in unfamiliar territory. It is no longer a matter of adjudicating user safety in a narrow sense, but of aligning their distribution policies with geopolitical and regulatory pressures.

Apple has thus far remained silent on the issue, while Google issued a brief statement indicating it is reviewing the request in light of its legal obligations in the German market. These measured responses reflect the delicate balance both companies must maintain: on one hand, upholding user trust and regulatory compliance; on the other, avoiding actions that could be perceived as politically motivated or protectionist.

2. Challenges of Content Moderation at the App Level

Delisting DeepSeek is not as simple as flipping a switch. Apple and Google must assess whether the app is in material breach of their internal policies regarding user privacy, data storage, and developer transparency. Both companies require app developers to disclose their data collection practices during the app submission process, and to maintain transparency through in-app prompts and privacy labels.
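Because the stores rely on self-declared privacy labels, one way a reviewer or researcher could test them is to compare the declared data categories with what is actually observed leaving the device, for example in captured network traffic. The sketch below assumes such observations already exist; the category names, the hostnames (placeholder `example` domains), and the `audit_label` helper are hypothetical and do not reflect Apple's or Google's actual review tooling.

```python
from __future__ import annotations

from collections import defaultdict


def audit_label(declared: set[str], observed: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Map each data category seen in traffic but missing from the label to the hosts that received it."""
    findings: dict[str, set[str]] = defaultdict(set)
    for category, host in observed:
        if category not in declared:
            findings[category].add(host)
    return dict(findings)


# Hypothetical privacy label and hypothetical traffic observations.
declared = {"user_content", "identifiers"}
observed = [
    ("user_content", "api.example.net"),
    ("identifiers", "api.example.net"),
    ("precise_location", "telemetry.example.net"),
    ("keystroke_timing", "telemetry.example.net"),
    ("precise_location", "ads.example.org"),
]
for category, hosts in sorted(audit_label(declared, observed).items()):
    print(category, sorted(hosts))
# keystroke_timing ['telemetry.example.net']
# precise_location ['ads.example.org', 'telemetry.example.net']
```

The limitation is that traffic analysis shows only what leaves the device, not what happens to the data on backend servers once it arrives.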

However, enforcement is often inconsistent. Many privacy violations only come to light after external audits or regulatory investigations. In DeepSeek’s case, the issue is compounded by the app’s Chinese origin, the opacity of its backend infrastructure, and the legal asymmetry between EU data protection rules and Chinese cybersecurity laws. These factors make it difficult for app stores to independently verify compliance, placing pressure on national authorities to take the lead.

This dynamic has important implications for future enforcement. As AI applications become more sophisticated and data-intensive, the burden on app distribution platforms to act as quasi-regulatory bodies will increase. Unless governments and platform providers develop clearer coordination mechanisms, the current patchwork of responses may leave significant gaps in enforcement.

3. Risk Calculus: Business Continuity vs. Political Compliance

From a business standpoint, removing DeepSeek may seem inconsequential—it is not a flagship app with significant revenue implications for Apple or Google. However, the precedent it sets carries weight. If these companies begin removing apps based on geopolitical risk assessments, they could be drawn into wider disputes between national governments and foreign software developers. This introduces long-term reputational and strategic risks.

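At the same time, failure to act on legitimate regulatory requests could result in enforcement actions against Apple and Google themselves. Under the DSA, a hosting service that does not act expeditiously once notified of illegal content can lose its liability exemption, and regulators may also argue that platforms facilitating unlawful data processing share responsibility under the GDPR. The DSA allows fines of up to 6% of global annual turnover for non-compliance, and the Digital Markets Act (DMA) up to 10% for breaches of its core gatekeeper obligations (20% for repeated infringements).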
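For a sense of scale, the statutory ceilings can be computed directly. This sketch encodes the caps as written in the regulations: GDPR Article 83(5) (the higher of €20 million or 4% of worldwide annual turnover), the DSA's 6% ceiling for very large online platforms (Article 74), and the DMA's 10% ceiling, rising to 20% for repeated infringements (Article 30). The `max_fine_eur` helper and the turnover figure are hypothetical; these are upper bounds, and actual fines are set case by case.

```python
def max_fine_eur(regime: str, worldwide_turnover_eur: int, repeat: bool = False) -> float:
    """Statutory maximum fine in euros; an upper bound, not a prediction."""
    if regime == "GDPR":
        # Art. 83(5): up to EUR 20m or 4% of worldwide annual turnover, whichever is higher.
        return max(20_000_000, worldwide_turnover_eur * 4 / 100)
    if regime == "DSA":
        # Art. 74: up to 6% of worldwide annual turnover for very large online platforms.
        return worldwide_turnover_eur * 6 / 100
    if regime == "DMA":
        # Art. 30: up to 10%, or up to 20% for a repeated infringement.
        return worldwide_turnover_eur * (20 if repeat else 10) / 100
    raise ValueError(f"unknown regime: {regime}")


turnover = 300_000_000_000  # hypothetical EUR 300bn worldwide annual turnover
for regime in ("GDPR", "DSA", "DMA"):
    print(regime, f"{max_fine_eur(regime, turnover):,.0f}")
# GDPR 12,000,000,000
# DSA 18,000,000,000
# DMA 30,000,000,000
```

Only the regime that governs the specific conduct matters in practice; the DMA, for example, targets gatekeeper obligations rather than the data practices of third-party apps.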

Thus, the tech industry faces a complex risk calculus. Compliance with national laws is non-negotiable, but selective enforcement or political signaling could trigger accusations of digital protectionism or even retaliatory measures in other markets. In China, for instance, both Apple and Google have already faced censorship demands and operating restrictions. Taking action against DeepSeek could jeopardize their standing, Apple's in particular, in one of the world's most strategically important markets.

4. Precedents from Prior Content Removals

Both Apple and Google have previously removed applications due to regulatory pressure. In 2019, Apple removed the HKmap.live app amid tensions surrounding the Hong Kong protests, prompting criticism over its willingness to comply with authoritarian demands. In 2021 and 2022, several COVID-19 misinformation apps were delisted following pressure from Western health authorities.

In 2022, following Russia's full-scale invasion of Ukraine, Apple removed Russian state media apps such as RT News and Sputnik from its App Store outside Russia amid European sanctions. Similarly, Google's YouTube blocked Kremlin-backed channels within EU territories. These cases suggest that both companies are willing to act when there is a clear legal or reputational mandate to do so, especially within jurisdictions where regulatory regimes are robust and public scrutiny is high.

What distinguishes the DeepSeek case is its direct connection to emerging AI governance frameworks. It signals a shift in enforcement targets—from traditional media and social platforms to frontier technologies that process vast amounts of personal and behavioral data in real time.

5. Broader Implications for Developers and Competitors

The removal or restriction of DeepSeek from major app stores would carry ripple effects across the developer ecosystem. Smaller AI startups, particularly those based in countries with limited data protection oversight, may preemptively withdraw from European markets or modify their architectures to include local data hosting and privacy-by-design features.

For major Western AI firms such as OpenAI, Anthropic, and Cohere, this development could offer competitive breathing room. DeepSeek's R1 model has been recognized for its efficiency and cost-effectiveness, achieved partly through a mixture-of-experts architecture and aggressive training optimization on export-compliant Nvidia hardware. Its removal would narrow the field of consumer-facing AI assistants in Europe, potentially reinforcing the dominance of American and European incumbents.

However, this advantage comes with a caveat: regulators are unlikely to tolerate double standards. If Western AI developers fail to demonstrate robust compliance with data protection laws—especially when retraining models using user interactions—they could face similar scrutiny. Thus, the DeepSeek precedent may compel the entire industry to adopt higher standards of data transparency, user consent, and lawful processing.

6. Toward Platform Accountability in AI Governance

The DeepSeek controversy exemplifies the growing importance of platform accountability in the context of artificial intelligence. App stores, cloud providers, and infrastructure vendors increasingly act as choke points through which software must pass to reach the end user. This gives them enormous influence over the diffusion of AI technologies—but also subjects them to new expectations.

European policymakers have begun to recognize this. Under the AI Act, distributors of high-risk AI systems must verify that such systems bear the required conformity marking and documentation before making them available, and app stores could arguably fall within that role. This marks a decisive shift from voluntary moderation toward shared legal liability.

In this evolving landscape, Apple and Google are likely to invest more heavily in compliance engineering, risk auditing, and AI-specific governance tools. They may also adopt AI model registries, provenance disclosures, and real-time data flow controls to meet the demands of increasingly assertive regulators.

In summary, the tech industry's response to Germany’s call to block DeepSeek represents a turning point in the governance of global AI platforms. While Apple and Google have not yet acted decisively, the pressure to align platform policies with national and supranational legal frameworks is mounting. As AI continues to shape the digital public sphere, the responsibility for ensuring lawful, ethical, and secure deployment will rest not only with developers, but also with the platforms that bring these tools to market.

DeepSeek’s Strategic Positioning & Future Outlook

As regulatory pressures mount and Western governments reassess their tolerance for foreign AI systems operating under opaque legal jurisdictions, DeepSeek faces an inflection point. From its rapid emergence as a competitive alternative to leading Western AI models to its current embroilment in privacy and geopolitical controversies, the Chinese AI platform must now recalibrate its strategic posture. This section examines the current position of DeepSeek in the global AI landscape, evaluates its core technological assets, and considers possible scenarios for its future within increasingly fragmented digital markets.

The Rise of DeepSeek: Strategic and Technological Context

DeepSeek emerged in January 2025 as a breakthrough AI assistant from the Hangzhou-based company DeepSeek, whose research focuses on extracting strong performance from cost-efficient training. At the heart of the application is the R1 large language model (LLM), a highly efficient, multilingual reasoning model trained on a broad corpus of Chinese, English, and cross-cultural datasets. R1 uses a mixture-of-experts design that activates only a fraction of its parameters for each token, and DeepSeek reported training the underlying V3 base model on Nvidia H800 GPUs, a reduced-bandwidth chip Nvidia designed for the Chinese market to comply with U.S. export restrictions on its H100 and A100 series.

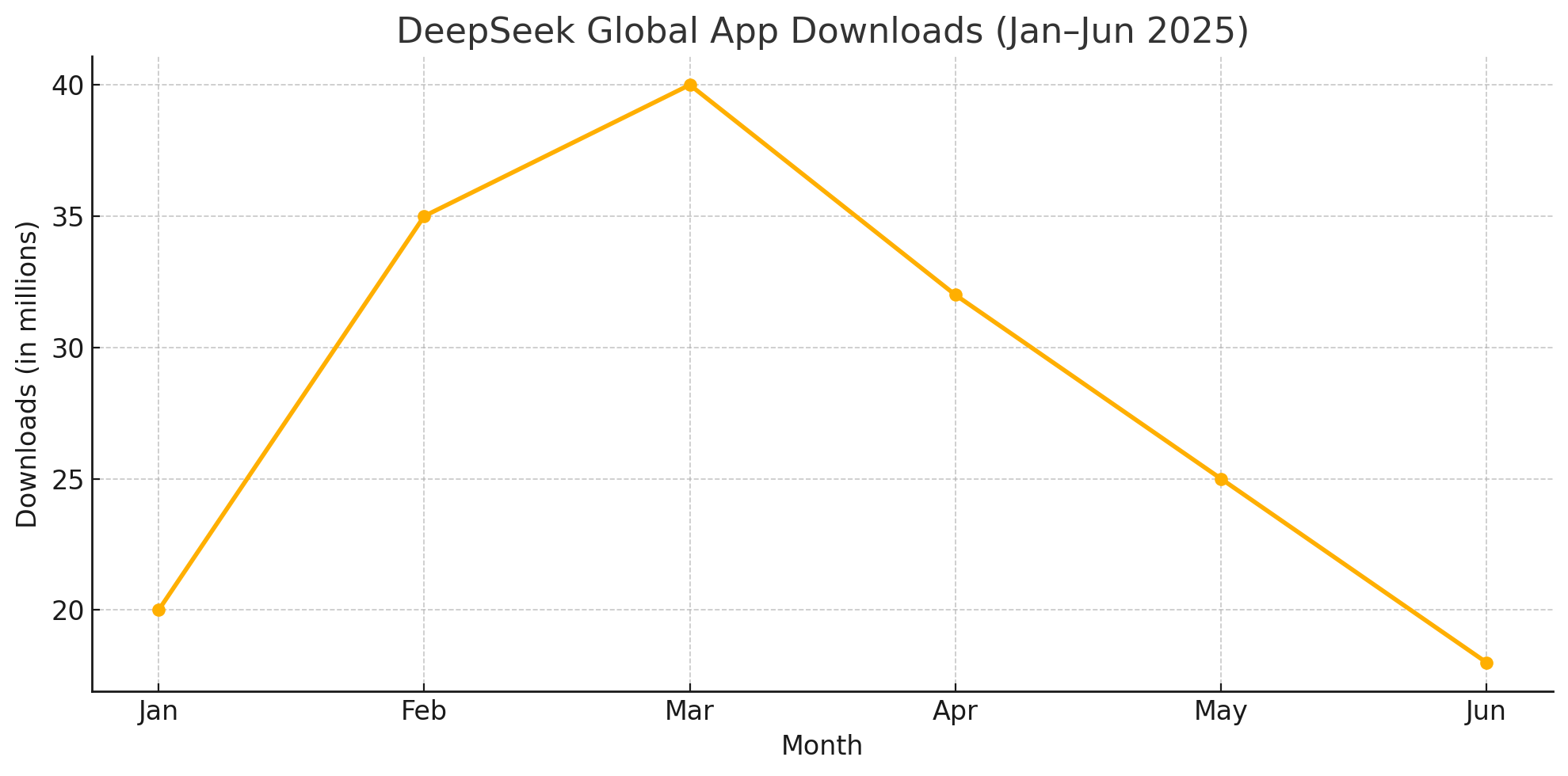

This emphasis on cost efficiency, together with a free app and openly released model weights, allowed DeepSeek to scale rapidly across markets in Asia, Eastern Europe, Latin America, and parts of Africa. Because the model runs on DeepSeek's servers rather than on the handset, the app works smoothly even on mid-range Android smartphones; the same cloud-based design is why user data leaves the device and reaches servers in China. By mid-2025, DeepSeek had surpassed 80 million downloads globally, with over 12 million of those originating from within the EU.

DeepSeek's strategic advantage rested not only on accessibility, but also on performance. In its January 2025 technical report, DeepSeek reported that R1 performed comparably to OpenAI's o1 model on math, coding, and reasoning benchmarks, while its API was priced at a fraction of o1's cost. That combination made it attractive to users and developers seeking strong reasoning capabilities at low cost.

However, these gains have come at a cost. The lack of transparency in its model training pipeline, the absence of independent audits, and the offshoring of sensitive user data to China have exposed DeepSeek to intensifying scrutiny. In liberal democracies, where privacy, transparency, and accountability are prerequisites for public trust, these characteristics have become significant liabilities.

Strategic Silence and Lack of Public Engagement

Notably, DeepSeek has opted for a strategy of silence in the face of mounting regulatory pressure. German regulators said the company had been asked in May 2025 either to demonstrate that its transfers of user data to China meet EU requirements or to withdraw the app voluntarily, and that it had done neither. This contrasts sharply with the crisis response playbook of U.S.-based AI firms, which typically involves publishing legal defenses, issuing transparency reports, or pledging compliance reforms.

This absence of engagement has only deepened suspicions. Critics argue that DeepSeek’s reticence is not merely a communications oversight, but a deliberate avoidance of regulatory confrontation. Given China’s state secrecy rules and laws such as the 2017 National Intelligence Law, which requires organizations to support and cooperate with state intelligence work, it is plausible that DeepSeek is institutionally constrained from offering the kind of transparency European regulators demand.

From a strategic standpoint, the failure to address these concerns openly represents a missed opportunity. A proactive engagement campaign—featuring third-party audits, a commitment to GDPR-compliant data practices, and the establishment of a European data hosting facility—could have mitigated the reputational damage. Instead, DeepSeek now finds itself cornered: distrusted by regulators, scrutinized by privacy watchdogs, and potentially at risk of being de-platformed across multiple jurisdictions.

Market Implications of a European Ban

The potential removal of DeepSeek from the Apple App Store and Google Play in Germany—and possibly across the EU—would deal a significant blow to its international growth ambitions. While its core user base remains centered in China and other parts of Asia, the European market has symbolic and commercial importance. Success in the EU is often seen as a benchmark of trustworthiness and regulatory compliance. For a platform seeking to become globally competitive, exclusion from Europe sends a message that may reverberate far beyond its borders.

In addition, exclusion from European markets would disrupt partnerships with local developers, third-party service providers, and integration platforms that rely on app store accessibility. These cascading effects could lead to erosion in user growth and diminish DeepSeek’s appeal to non-aligned nations exploring partnerships with Chinese AI firms.

Furthermore, such a ban may act as a blueprint for other democratic jurisdictions. Brazil (through its General Data Protection Law, the LGPD) and Japan already operate GDPR-style data protection regimes, and Canada has been debating an overhaul of its federal privacy law. A coordinated response among these nations could confine DeepSeek’s international presence to a narrow band of digital ecosystems under Beijing’s influence.

Strategic Paths Forward: Scenarios and Recommendations

In light of these developments, DeepSeek faces a range of strategic options—each carrying different implications for its long-term viability and market reputation.

Scenario A: Full Withdrawal from EU Markets

The most conservative option would be to exit EU markets voluntarily, citing regulatory incompatibilities. While this would prevent further enforcement actions, it would also solidify DeepSeek’s isolation and limit its strategic reach. The company would be branded as a non-compliant actor, reinforcing the perception that Chinese AI systems cannot operate under democratic norms.

Scenario B: Establishment of a European Data Infrastructure

A more progressive route would involve the creation of a GDPR-compliant data infrastructure within the European Economic Area (EEA). This would entail hosting all EU user data on local servers, deploying strong encryption, and creating legal and technical firewalls that prevent onward transfer to China. U.S. cloud providers have taken comparable steps in response to European regulation, for example Microsoft’s EU Data Boundary and Amazon’s AWS European Sovereign Cloud. While expensive and logistically complex, such a move would signal a genuine commitment to reform.
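The core technical idea behind Scenario B, pinning EU users’ data to EEA storage and refusing unapproved onward transfers, can be illustrated with a minimal sketch. Everything in it is hypothetical: the region names, the `UserRecord` type, and the `store` and `check_transfer` helpers are illustrative assumptions, not DeepSeek’s or any cloud provider’s actual API. The `approved_transfer_regions` set merely stands in for the legal transfer mechanisms (such as adequacy decisions or standard contractual clauses) that Chapter V of the GDPR requires.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical region identifiers; a real deployment would use its
# cloud provider's own region names.
EEA_REGIONS = {"eu-frankfurt", "eu-dublin"}
DEFAULT_EEA_REGION = "eu-frankfurt"


@dataclass(frozen=True)
class UserRecord:
    user_id: str
    home_jurisdiction: str  # illustrative labels, e.g. "EEA" or "CN"
    payload: bytes


class TransferBlocked(Exception):
    """Raised when a write would move EEA user data outside the EEA."""


def choose_region(record: UserRecord, default_region: str) -> str:
    """Pin EEA users to an EEA region; other users get the default region."""
    if record.home_jurisdiction == "EEA":
        return DEFAULT_EEA_REGION
    return default_region


def check_transfer(record: UserRecord, target_region: str,
                   approved_transfer_regions: frozenset[str] = frozenset()) -> None:
    """Refuse to place EEA data in a non-EEA region unless that region was
    explicitly approved (a stand-in for a lawful GDPR transfer mechanism)."""
    if (record.home_jurisdiction == "EEA"
            and target_region not in EEA_REGIONS
            and target_region not in approved_transfer_regions):
        raise TransferBlocked(
            f"{record.user_id}: transfer to {target_region} not permitted")


def store(record: UserRecord, default_region: str = "cn-hangzhou") -> str:
    """Pick a storage region, enforce the residency rule, return the region."""
    region = choose_region(record, default_region)
    check_transfer(record, region)
    # A real system would encrypt and persist record.payload here.
    return region


if __name__ == "__main__":
    eu_user = UserRecord("u1", "EEA", b"prompt history")
    print(store(eu_user))  # stays in the EEA
    try:
        check_transfer(eu_user, "cn-hangzhou")
    except TransferBlocked as err:
        print("blocked:", err)
```

The design choice worth noting is that residency is enforced at write time, in code, rather than left to policy documents alone. The “legal firewall” described above would still depend on contractual and organizational controls that no code path can supply.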

Scenario C: Strategic Realignment and Third-Party Certification

DeepSeek could pursue certification against an independent international standard, such as the ISO/IEC 42001 AI management system standard, while publishing algorithmic transparency reports and agreeing to model audits. This approach would require negotiating legal tensions with the Chinese government and demonstrating that its model training data does not contain unauthorized personal information. The tradeoff would be a loss of centralized control, but the potential reputational gain in Western markets may offset this.

Scenario D: Doubling Down on Friendly Markets

Alternatively, DeepSeek may refocus its expansion strategy on countries less hostile to Chinese technology. By aligning with the Digital Silk Road initiative and forming partnerships in Southeast Asia, the Middle East, and parts of Africa, DeepSeek could achieve regional dominance even without Western approval. However, this would reinforce the fragmentation of the global AI ecosystem and signal a shift toward a bifurcated digital order.

To contextualize DeepSeek’s rise and potential decline, we consider the following visual representation of its performance over time.

This trajectory illustrates how quickly global AI adoption can reverse in the face of regulatory action. While user demand for efficient and accessible AI remains strong, trust, legality, and compliance have emerged as the true gatekeepers of sustainability in the global digital economy.

Broader Lessons and Sectoral Implications

DeepSeek’s situation presents a cautionary tale for other emerging AI platforms—particularly those outside of U.S. and EU jurisdictions. Technological sophistication alone is no longer sufficient to guarantee success. As data becomes a contested resource, and algorithms are increasingly treated as sovereign instruments, companies must build legitimacy not only through innovation but also through transparent, verifiable adherence to legal norms.

Moreover, DeepSeek’s difficulties could serve as an accelerant for regulatory evolution. The European Commission, already working on implementing the AI Act, may use this case to expedite enforcement guidelines and define clearer criteria for AI system acceptability. International institutions like the OECD and G7 may also intensify efforts to harmonize AI governance standards, using DeepSeek as a precedent.

In summary, DeepSeek stands at a crossroads. Its early success, driven by technological ingenuity and efficient deployment, now contends with a radically altered risk environment. Whether it chooses to reform, retreat, or realign will determine not only its own future, but also the contours of the broader contest over global AI governance. What remains clear is that in a world increasingly governed by digital norms and transnational data flows, legitimacy is no longer a peripheral consideration—it is the primary currency of international trust.

Broader Tech-Geopolitical Implications

The regulatory and political reaction to DeepSeek’s presence in European and allied digital ecosystems is symptomatic of a much broader shift in global affairs. As artificial intelligence becomes a defining force in the 21st-century knowledge economy, the intersection of technology and geopolitics is producing new paradigms of conflict, cooperation, and control. The German-led call to block DeepSeek is not merely a data privacy issue; it is emblematic of a growing digital bifurcation that threatens to fragment the global internet into ideologically aligned zones of influence.

AI as a Strategic Asset

Artificial intelligence is no longer viewed solely as a commercial innovation or scientific breakthrough. It has increasingly become a strategic asset, analogous to natural resources or military capabilities. Nations with advanced AI capacities can project influence economically, militarily, and culturally. This shift has led governments to reassess the openness of their digital infrastructures, particularly in relation to systems developed under authoritarian regimes.

In this context, DeepSeek represents a class of technologies that challenge existing norms of digital trust and accountability. Its origin in China—where laws mandate corporate data sharing with the state—places it at odds with liberal democratic principles centered on individual rights, rule of law, and institutional independence. The implications go far beyond user privacy. The risk calculus now includes concerns over algorithmic manipulation, behavioral profiling, and the potential embedding of surveillance functions in consumer software.

The fact that an app like DeepSeek can be both performant and unaccountable demonstrates how the international diffusion of AI is outpacing regulatory safeguards. For countries committed to democratic governance, this poses a dilemma: how to remain technologically competitive without importing tools that could compromise national values or citizen autonomy.

Digital Sovereignty and Strategic Decoupling

The European Union, through instruments such as the GDPR and the AI Act, whose obligations are being phased in through 2027, has positioned itself as a global leader in digital regulation. However, the DeepSeek case reveals that legislation alone is insufficient without coordinated enforcement. Germany’s unilateral action suggests that national regulators may begin acting preemptively to safeguard their digital domains when supranational consensus is delayed.

This dynamic signals the advance of digital sovereignty—the idea that states must retain control over the data, algorithms, and digital infrastructure that shape their societies. It is closely linked to the emerging strategy of technological decoupling, particularly between China and the West. As trust in global governance mechanisms wanes, states are building parallel systems—each with their own standards, suppliers, and legal doctrines.

The removal or restriction of DeepSeek within Western jurisdictions could accelerate this decoupling. China, for its part, has already banned or tightly controlled access to U.S.-developed AI systems such as ChatGPT, Gemini (formerly Bard), and Claude. Western moves to block Chinese systems like DeepSeek on privacy and national security grounds will therefore likely be interpreted in Beijing as reciprocal rather than unilateral.

This tit-for-tat escalation raises the specter of a balkanized internet—a fragmented global network where data does not flow freely, technologies are siloed, and digital services are region-specific. The result would be a reversion to technological blocs, mirroring the ideological and economic fault lines of a Cold War-style configuration.

The Role of Platform Powers in Geopolitical Mediation

Tech platforms like Apple, Google, and Microsoft are increasingly operating as geopolitical actors in their own right. Their decisions on app availability, data localization, and security features have cross-border implications, often rivaling those of state policy. In the DeepSeek case, their response will be scrutinized not only by regulators but also by diplomatic observers seeking cues about the evolving norms of platform governance.

Whether they comply with or resist Germany’s request, these companies must weigh the competing demands of market access, user safety, international law, and foreign policy alignment. In the absence of clear international standards for AI system interoperability, platforms find themselves in the uncomfortable position of adjudicating complex legal and moral questions about trust, risk, and legitimacy.

The emergence of this quasi-diplomatic role suggests a need for clearer norms around AI platform neutrality, cross-border legal harmonization, and responsible gatekeeping. Multilateral institutions, including the G7, the OECD, and the United Nations’ AI Advisory Body, may soon be called upon to develop binding frameworks that guide the behavior of both states and corporations in cross-jurisdictional AI deployment.

Global South Perspectives and the Geopolitical Middle

While the West and China represent the primary poles of AI geopolitics, much of the Global South is caught in the middle. Nations across Africa, Southeast Asia, and Latin America often face limited domestic capacity to build competitive AI systems and therefore rely on imports from dominant global players. In these contexts, the debate around DeepSeek takes on a different tone—one shaped by affordability, accessibility, and digital inclusion.

DeepSeek’s low cost, multilingual support, and openly released model weights make it particularly attractive in resource-constrained environments, and smaller distilled versions of R1 can even run locally without a network connection. A wholesale ban of such tools, without providing viable alternatives, risks exacerbating the digital divide and feeding narratives that Western data protection standards are exclusionary rather than empowering.

Thus, any move to restrict foreign AI systems must be accompanied by a broader strategy to support open-source alternatives, subsidize local AI development, and build institutional capacity for data governance in lower-income countries. Otherwise, Western-led restrictions may appear as acts of technological imperialism rather than legitimate defenses of democratic values.

Long-Term Implications for AI Global Governance

The DeepSeek case underscores the urgency of establishing a global governance regime for artificial intelligence—one that reconciles the tensions between innovation, sovereignty, privacy, and interoperability. Without such a framework, fragmented enforcement actions will continue to provoke confusion, retaliation, and strategic uncertainty.

The development of baseline global principles for AI deployment—transparency, accountability, legal compliance, and auditability—must become a priority for international cooperation. These principles should be technologically neutral and politically inclusive, allowing for a diverse ecosystem of AI innovation while ensuring that core human rights and ethical standards are preserved.

In the absence of such cooperation, the world risks entrenching a “digital iron curtain”—a system of mutual exclusion where algorithms, data flows, and AI standards are no longer shared but fenced off behind sovereign borders. The DeepSeek controversy may prove to be a pivotal moment in preventing that outcome—or accelerating it.

Conclusion

Germany’s decision to formally request the removal of DeepSeek from the Apple App Store and Google Play marks a watershed moment in the global contest over data governance, technological sovereignty, and the future of artificial intelligence. At the heart of this dispute lies a fundamental conflict between the values embedded in European data protection law and the operational practices of AI platforms developed under markedly different political and legal systems.

DeepSeek, once hailed as a breakthrough in affordable and efficient AI, now finds itself increasingly constrained by legal scrutiny, institutional opacity, and geopolitical distrust. Its rapid growth, achieved in part through technological innovation and aggressive expansion strategies, has triggered a backlash that transcends commercial competition and enters the realm of statecraft. The concerns raised—ranging from unauthorized data transfers and opaque algorithmic practices to potential vulnerabilities to foreign surveillance—are not unique to DeepSeek. They are symptomatic of a broader crisis in global AI governance.

This blog has examined the multi-layered dynamics surrounding the DeepSeek controversy, from the intricacies of GDPR compliance and international regulatory fragmentation to the responsibilities of platform providers and the long-term risks of a fragmented internet. It has also outlined the strategic choices ahead for DeepSeek, governments, and global technology stakeholders.

What lies ahead will depend on how decisively stakeholders act—not just to enforce existing rules, but to create new, interoperable frameworks for trust and accountability in the AI era. As the line between digital infrastructure and national sovereignty continues to blur, the time has come for international collaboration, institutional transparency, and principled leadership.

The call to action is clear: safeguard user rights, uphold democratic norms, and build a global digital order where innovation does not come at the cost of freedom, privacy, or security.
