Gemini's AI Email Summaries Spark Debate: Productivity Boost or Privacy Risk?

The rapid ascent of generative artificial intelligence (AI) has ushered in a transformative era for digital communication. From auto-complete features in search engines to intelligent drafting in word processors, AI has progressively redefined how individuals engage with information and interact across digital platforms. At the forefront of this evolution stands Google’s Gemini, a multimodal AI system poised to reshape the user experience across its product ecosystem. With the introduction of automatic email summarization in Gmail, Gemini marks a significant leap toward AI-curated communication—one where users receive condensed versions of lengthy emails by default, unless they proactively choose to opt out.

This new functionality, initially rolled out to Workspace Labs testers and now expanding to broader user bases, positions Gemini as more than a passive assistant. It serves as a content filter, interpreter, and presenter of meaning, autonomously evaluating message threads and producing summaries intended to enhance user efficiency. Because Gmail is one of the most widely used email platforms in the world, with over 1.8 billion active users, this feature’s impact is likely to be substantial. Its default nature, which requires users to opt out if they prefer traditional email reading, raises important questions about autonomy, data privacy, and the evolving boundary between human intention and algorithmic interpretation.

To appreciate the implications of this development, it is essential to understand the driving forces behind it. Google has long pursued innovations in natural language processing (NLP), leveraging deep learning to streamline communication workflows. From Smart Compose to AI-generated meeting recaps, the company’s gradual shift toward predictive assistance has primed users for a future where machines anticipate, summarize, and even decide which information is worth viewing. With the Gemini integration, that future is no longer hypothetical—it is now operational within one of the most critical aspects of daily life: our inboxes.

Email, by its nature, has always been a double-edged sword. While it remains a vital medium for collaboration, documentation, and interpersonal messaging, its volume often undermines its utility. According to industry surveys, the average knowledge worker receives over 120 emails per day and spends roughly 28% of the workweek managing email. In response to this deluge, users have increasingly turned to triage strategies: batching responses, using priority filters, or relying on short previews. Automatic summarization, then, represents a logical next step: a tool that promises to ease information overload by distilling long messages into key takeaways with minimal user effort.

However, this technological convenience does not come without trade-offs. The deployment of AI-generated summaries in Gmail raises critical concerns. What happens when an AI misinterprets the intent of a message? Could essential legal or emotional context be lost in a condensed version? And how does one ensure that sensitive data within emails is not used to retrain or optimize AI systems, particularly given Gemini’s capability to learn from multimodal inputs and conversational cues? The introduction of AI summarization brings these ethical, operational, and strategic tensions to the surface.

Additionally, this change is emblematic of a larger shift across the tech industry, where default settings increasingly favor AI intervention. Much like how smartphone operating systems nudge users toward cloud storage or smart photo backups, Gmail now nudges users toward automation as the norm. This opt-out design choice has already drawn scrutiny from privacy advocates who argue that such defaults erode user consent and make it more difficult for individuals to exercise control over their digital experiences.

From a strategic perspective, Google’s move can be interpreted as a direct response to intensifying competition in the AI productivity space. Microsoft has made aggressive strides with Copilot in Outlook and the broader Microsoft 365 suite, which also includes AI-assisted summarization and task tracking. Amazon and Apple are similarly investing in ambient AI interfaces to automate daily routines. By embedding Gemini deeply into core productivity tools, Google not only enhances its ecosystem’s stickiness but also underscores the centrality of generative AI in the company’s long-term vision.

The broader societal implications are also worth considering. As AI-generated summaries become standard, they may shape how users process information, interact with senders, and prioritize tasks. There is potential for increased productivity, but also for reliance on AI interpretations rather than firsthand understanding. In this way, the feature transcends mere convenience—it represents a cultural shift in how knowledge is consumed and trust is allocated between humans and machines.

This blog post aims to explore the various dimensions of this pivotal update, dissecting the underlying technology, evaluating its benefits and drawbacks, examining public reactions, and forecasting its implications for communication at scale. We will begin with a technical overview of how Gemini summarizes emails, followed by a critical analysis of its perceived advantages and possible risks. Next, we will assess feedback from early adopters and privacy analysts, before concluding with a forward-looking perspective on AI’s role in shaping human interaction.

As the digital landscape becomes increasingly automated, the need for deliberate design and ethical foresight becomes paramount. Whether Gemini’s summarization tool will be hailed as a revolutionary time-saver or critiqued as a step toward algorithmic overreach remains to be seen. What is certain, however, is that this development marks a new milestone in the ongoing convergence of AI and everyday communication.

How Gemini Email Summarization Works: Behind the Automation

The introduction of automatic email summarization by Gemini represents a significant advancement in the practical application of large language models (LLMs) within consumer-facing productivity tools. At its core, this feature aims to transform the conventional email experience from a manual, user-driven reading task into an AI-augmented interpretation process. By distilling long and often complex messages into concise summaries, Gemini is attempting to redefine how users process, prioritize, and respond to information. But behind this seemingly seamless functionality lies a sophisticated combination of artificial intelligence systems, data pipelines, and contextual logic designed to make the process both accurate and adaptive.

Gemini’s summarization capability is enabled by its integration with Google's proprietary LLM architecture. While the exact model configuration has not been publicly disclosed in detail, it is widely speculated to be a customized variant of Gemini 1.5, which supports multimodal comprehension, long-context processing, and prompt-specific adaptation. In the context of email summarization, these capabilities would be tuned to process textual content, follow conversational threads, and identify semantically significant information within an email or a chain of replies.

The process begins with data ingestion, in which the email content is securely parsed. Google has not published the exact pipeline, but in a typical LLM setup the text is first stripped of markup artifacts such as HTML formatting and then tokenized, that is, broken into the small language units the model operates on. The sanitized tokens are passed to Gemini’s language model, whose attention mechanism weighs each token against the others, capturing how individual words, phrases, and even punctuation relate to the overall intent of the message. Contextual embeddings allow the model to interpret each email not only on its own but also in relation to earlier messages in the thread.
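
Google has not published its preprocessing code, but the cleanup-then-tokenize order described above can be illustrated with a minimal Python sketch. The names `strip_html` and `simple_tokenize` are hypothetical, and the regex tokenizer is only a stand-in: production LLMs use learned subword tokenizers rather than splitting on words and punctuation.

```python
import re
from html.parser import HTMLParser

# Block-level tags that usually start a new line of visible text.
_BREAK_TAGS = {"br", "p", "div", "li", "tr"}


class _TextExtractor(HTMLParser):
    """Collects visible text from an HTML email body, skipping <script>/<style>."""

    def __init__(self) -> None:
        super().__init__(convert_charrefs=True)  # decodes &amp;, &nbsp;, etc.
        self._chunks: list[str] = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag in _BREAK_TAGS:
            self._chunks.append("\n")

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self._chunks.append(data)

    def text(self) -> str:
        return "".join(self._chunks)


def strip_html(html_body: str) -> str:
    """Hypothetical step 1: drop markup and normalize whitespace."""
    parser = _TextExtractor()
    parser.feed(html_body)
    parser.close()
    text = parser.text().replace("\xa0", " ")  # non-breaking spaces -> spaces
    text = re.sub(r"[ \t]+", " ", text)         # collapse runs of spaces/tabs
    text = re.sub(r"\s*\n\s*", "\n", text)      # one newline per line break
    return text.strip()


def simple_tokenize(text: str) -> list[str]:
    """Hypothetical step 2: split into word and punctuation tokens.

    Real LLMs use learned subword tokenizers; this only illustrates the idea
    of turning text into a sequence of discrete units.
    """
    return re.findall(r"\w+|[^\w\s]", text)


if __name__ == "__main__":
    body = ("<html><style>p{color:red}</style><p>Hi team,</p>"
            "<p>Meeting moved to <b>Thursday 2&nbsp;PM</b>.</p></html>")
    clean = strip_html(body)
    print(clean)
    print(simple_tokenize(clean))
```

Cleaning before tokenizing matters because markup and style rules would otherwise consume tokens that carry no meaning for the summary.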

Once context is established, Gemini applies a summarization objective, which operates as a sub-task of text generation. Unlike extractive summarization methods, which lift sentences verbatim from the original content, Gemini relies on abstractive summarization: it generates new sentences that convey the core message through paraphrase and contextual inference. This makes the summary more natural-sounding and, in principle, allows it to adapt to user preferences, tone, and content domain over time.
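
To make the extractive/abstractive distinction concrete, here is a minimal frequency-based extractive summarizer, i.e. the kind of method the paragraph above says Gemini does not rely on. Every sentence it returns is copied verbatim from the input, which is exactly the guarantee abstractive systems give up in exchange for fluency. The stopword list and scoring rule are illustrative assumptions; an abstractive summary would instead require a generative language model.

```python
import re
from collections import Counter

# Tiny illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "in", "on", "for",
             "is", "are", "was", "we", "i", "you", "it", "this", "that", "be"}


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def content_words(text: str) -> list[str]:
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, k: int = 2) -> list[str]:
    """Return the k highest-scoring sentences, verbatim and in original order."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        # Average document frequency of the sentence's content words.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]),
                 reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]


if __name__ == "__main__":
    email = ("The server migration is scheduled for Saturday. "
             "During the migration, the server will be offline for two hours. "
             "Lunch is on the third floor. "
             "Please back up your files before the migration.")
    for sentence in extractive_summary(email, k=2):
        print(sentence)
```

Because the output is stitched from original sentences, it cannot invent facts, but it also cannot merge, reorder, or rephrase information the way an abstractive summary does.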

One of the distinguishing features of Gemini’s summarization engine is its ability to differentiate between types of messages and tailor the summary to each. For instance, an email confirming a calendar invite may be summarized simply as “Meeting confirmed for Thursday at 2 PM,” whereas a customer support request may be distilled into “User reported an issue with account access, requesting resolution.” The model is trained to identify request patterns, action items, confirmations, and other key email functions so that essential information is preserved in the summary.
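
Gemini learns these categories from data rather than from hand-written rules, but a rule-based toy version shows what identifying request patterns, action items, and confirmations means in practice. The cue phrases, category names, and functions below are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical cue phrases; a production model learns such signals from data.
REQUEST_CUES = re.compile(
    r"\b(please|could you|can you|would you|need you to|kindly)\b", re.IGNORECASE)
CONFIRM_CUES = re.compile(
    r"\b(confirmed|confirming|accepted|see you then)\b", re.IGNORECASE)


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def extract_action_items(text: str) -> list[str]:
    """Sentences that look like requests addressed to the recipient."""
    return [s for s in split_sentences(text) if REQUEST_CUES.search(s)]


def classify_email(text: str) -> str:
    """Map an email to one coarse functional category."""
    if extract_action_items(text):
        return "request"
    if CONFIRM_CUES.search(text):
        return "confirmation"
    return "informational"


if __name__ == "__main__":
    support = "Hi, I can't log in. Could you reset my account access?"
    print(classify_email(support), extract_action_items(support))
    print(classify_email("The meeting is confirmed for Thursday at 2 PM."))
```

Requests are checked before confirmations so that an email which both confirms something and asks for something is still surfaced as needing action.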

Importantly, the summarization is not limited to individual emails. Gemini is designed to understand entire threads, including nested replies, quoted messages, and forwarding chains. The AI evaluates the flow of conversation, identifies the primary objective of the exchange, and filters out redundancy to present a distilled version of what matters most. In cases of disagreement, escalation, or follow-up requests, the summary reflects the latest and most relevant user actions, offering a comprehensive view without requiring the user to read each message in the chain.
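
Much of thread understanding starts with removing text the recipient has already seen. The sketch below assumes common plain-text reply conventions (lines prefixed with `>` and an "On ... wrote:" attribution line) and is not Gmail's actual parser; real clients also have to handle HTML quoting, localized attribution lines, and forwarded headers. The function names are hypothetical.

```python
import re

# Typical plain-text attribution line, e.g.
# "On Mon, Jan 6, 2025 at 9:00 AM Ana <ana@example.com> wrote:"
ATTRIBUTION = re.compile(r"^On .+ wrote:$")


def strip_quoted(body: str) -> str:
    """Keep only the text a reply adds, dropping quoted history."""
    kept = []
    for line in body.splitlines():
        stripped = line.strip()
        if ATTRIBUTION.match(stripped):
            break  # everything below the attribution line is quoted history
        if stripped.startswith(">"):
            continue  # inline-quoted line from an earlier message
        kept.append(line)
    return "\n".join(kept).strip()


def dedupe_thread(messages: list[str]) -> list[str]:
    """Return the new text each message contributes, oldest first, without repeats."""
    seen: set[str] = set()
    result: list[str] = []
    for body in messages:
        new_text = strip_quoted(body)
        if new_text and new_text not in seen:
            seen.add(new_text)
            result.append(new_text)
    return result


if __name__ == "__main__":
    thread = [
        "Can we ship on Friday?",
        "Yes, Friday works.\n\nOn Mon, Jan 6, 2025 at 9:00 AM Ana <ana@example.com> wrote:\n> Can we ship on Friday?",
        "Yes, Friday works.\n> Can we ship on Friday?",
    ]
    print(dedupe_thread(thread))
```

Keeping the result in chronological order means the last element reflects the most recent state of the conversation, which is what a thread summary would emphasize.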

A major concern that often arises in discussions about AI-based summarization is accuracy and hallucination. Google relies on alignment methods such as reinforcement learning from human feedback (RLHF), and the summarization pipeline is said to include further safeguards, such as fact-checking subsystems and prompt refinement layers, to reduce the risk of misinformation. However, as with all generative models, some risk persists, particularly when the input is ambiguous or contextually complex. This is why the summarization tool currently operates with a visible toggle, allowing users to revert to the full email when clarification is needed.
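
Google has not documented how its safeguards work internally. As an illustration of one simple idea, a grounding check, the sketch below flags times, numbers, and weekdays that appear in a summary but nowhere in the source email. The pattern and function names are assumptions made for this example.

```python
import re

# Specifics a summary should never invent: clock times, numbers, and weekdays.
FACT_PATTERN = re.compile(
    r"\b(?:\d{1,2}(?::\d{2})?\s?(?:am|pm)"
    r"|\d+(?:[.,]\d+)*"
    r"|monday|tuesday|wednesday|thursday|friday|saturday|sunday)\b",
    re.IGNORECASE,
)


def extract_facts(text: str) -> set[str]:
    """Normalize matches so '2 PM' and '2pm' compare equal."""
    return {m.group(0).lower().replace(" ", "") for m in FACT_PATTERN.finditer(text)}


def unsupported_facts(source: str, summary: str) -> list[str]:
    """Specifics present in the summary but absent from the source email."""
    return sorted(extract_facts(summary) - extract_facts(source))


if __name__ == "__main__":
    source = "Can we move the meeting to Thursday at 3 PM? Budget stays at 5,000."
    summary = "Meeting moved to Thursday at 2 PM; budget 5,000."
    print(unsupported_facts(source, summary))
```

Note what this check misses: the example summary also turns a question ("Can we move...?") into a done deal ("Meeting moved"), and no token-level comparison catches that kind of reversed meaning.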

Another essential aspect is user privacy and data handling. According to Google's publicly stated policies, emails processed by Gemini for summarization purposes are handled in accordance with the company’s data privacy standards. Summaries are generated in real time and are not stored or used to retrain the model unless explicitly authorized by the user. Furthermore, email content processed through Gemini is encrypted during transmission and, in most enterprise deployments, processed within the bounds of region-specific data compliance regulations such as GDPR or CCPA.

Users can control the summarization behavior through their Gmail settings. By default, the feature is enabled—an opt-out approach that has stirred debate—but users can disable it at both the global and thread-specific levels. Gmail also includes options for feedback on summary quality, allowing users to rate whether the summary was helpful, partially accurate, or misleading. This feedback loop is used to improve future iterations of the model and to fine-tune its ability to interpret nuanced communication styles.
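
The feedback loop described above can be pictured as simple aggregation over user ratings. The three labels mirror the rating options mentioned in the text, while the `summarize_feedback` function and its 5% review threshold are hypothetical; how Google actually weighs this feedback in model updates is not public.

```python
from collections import Counter
from dataclasses import dataclass

# The three rating options mentioned in the text; the string labels are assumptions.
RATINGS = ("helpful", "partially_accurate", "misleading")


@dataclass
class FeedbackReport:
    total: int
    shares: dict[str, float]  # fraction of ratings per label
    needs_review: bool        # True if the "misleading" share exceeds the threshold


def summarize_feedback(ratings: list[str], misleading_threshold: float = 0.05) -> FeedbackReport:
    """Aggregate summary ratings and flag a batch for human review."""
    counts = Counter(ratings)
    unknown = set(counts) - set(RATINGS)
    if unknown:
        raise ValueError(f"unknown rating labels: {sorted(unknown)}")
    total = sum(counts.values())
    shares = {label: (counts[label] / total if total else 0.0) for label in RATINGS}
    return FeedbackReport(total, shares, shares["misleading"] > misleading_threshold)


if __name__ == "__main__":
    report = summarize_feedback(["helpful"] * 18 + ["misleading"] * 2)
    print(report)
```

Tracking "misleading" separately from "partially accurate" reflects the different cost of the two failures: an incomplete summary is an inconvenience, while a misleading one can lead to a wrong action.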

To enhance functionality, Gemini’s summarization is also capable of cross-app synchronization. For instance, summaries can appear in Google Calendar alongside scheduled events, or in Google Tasks as to-do items extracted from email content. This creates a seamless ecosystem where the AI acts not only as a summarizer but also as a task extractor and workflow optimizer. In enterprise settings, this has the potential to greatly reduce the need for manual triage and information management.
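
Extracting a to-do item for Google Tasks or Calendar ultimately means turning phrases like "by Thursday at 2 PM" into a concrete due date. The sketch below does this with the Python standard library only; `to_task` and its output dictionary are hypothetical and do not correspond to the Google Tasks or Calendar APIs.

```python
import re
from datetime import datetime, timedelta
from typing import Optional

WEEKDAYS = ["monday", "tuesday", "wednesday", "thursday",
            "friday", "saturday", "sunday"]
TIME_RE = re.compile(r"\b(\d{1,2})(?::(\d{2}))?\s?(am|pm)\b", re.IGNORECASE)


def next_weekday(after: datetime, weekday: int) -> datetime:
    """Next date strictly after `after` that falls on `weekday` (Monday == 0).

    Simplifying assumption: "Thursday" written on a Thursday means next week.
    """
    days_ahead = (weekday - after.weekday()) % 7 or 7
    return after + timedelta(days=days_ahead)


def to_task(sentence: str, received: datetime) -> Optional[dict]:
    """Turn a sentence like 'Send slides by Thursday at 2 PM' into a task dict."""
    lowered = sentence.lower()
    day = next((i for i, name in enumerate(WEEKDAYS)
                if re.search(rf"\b{name}\b", lowered)), None)
    if day is None:
        return None  # no recognizable deadline; leave it for the user
    due_day = next_weekday(received, day)
    match = TIME_RE.search(sentence)
    if match is None:
        # Date-only deadline.
        return {"title": sentence.strip(), "due": due_day.date().isoformat()}
    # Convert 12-hour clock to 24-hour clock (12 AM -> 0, 12 PM -> 12).
    hour = int(match.group(1)) % 12 + (12 if match.group(3).lower() == "pm" else 0)
    minute = int(match.group(2) or 0)
    due = due_day.replace(hour=hour, minute=minute, second=0, microsecond=0)
    return {"title": sentence.strip(), "due": due.isoformat()}


if __name__ == "__main__":
    received = datetime(2025, 6, 2, 10, 0)  # a Monday
    print(to_task("Please send the slides by Thursday at 2 PM.", received))
```

Resolving relative dates against the time the email was received, rather than the time it is read, keeps the deadline stable no matter when the user opens the message.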

Ultimately, Gemini’s summarization system reflects a maturing AI ecosystem where language models are becoming deeply embedded in personal productivity tools. The process may appear simple to users—an elegant blurb at the top of a message—but it is powered by a complex, multi-stage computational pipeline that involves natural language understanding, conversational context tracking, ethical safeguards, and personalized adaptability.

As Google continues to refine this feature and extend its functionality across Workspace apps, its technical underpinnings will likely evolve to include user-specific fine-tuning, domain-specific language models, and possibly interactive summaries that allow users to expand, collapse, or request clarification on certain parts of a message. These enhancements will further entrench AI as a gatekeeper of digital communication—a role that demands both technical excellence and ethical accountability.

Benefits and Drawbacks: Time Saver or Trust Eroder?

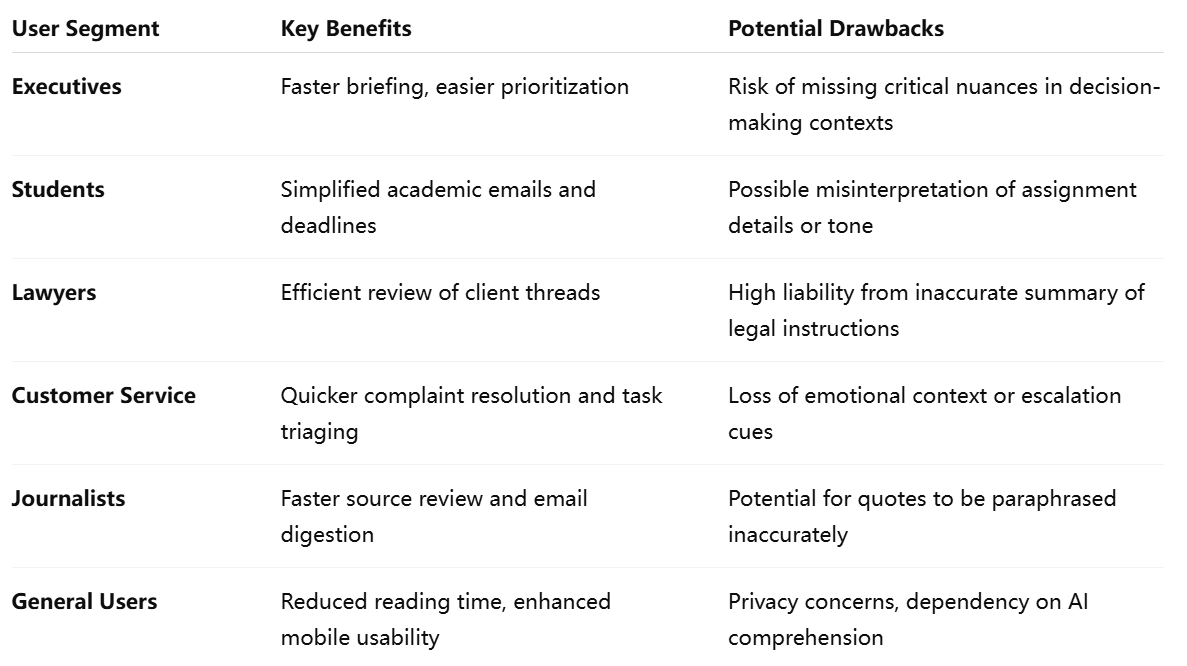

The integration of automatic email summarization by Gemini in Gmail represents a watershed moment in the evolution of communication technology. Positioned as a productivity enhancement, the feature promises to relieve users from the burdens of email overload by condensing long, complex correspondence into succinct summaries. However, as with any advancement that leverages artificial intelligence to mediate human communication, the picture is two-sided. On one side, it offers substantial utility through time savings, accessibility, and task efficiency. On the other, it introduces a host of new risks related to accuracy, interpretation, privacy, and user agency. This section critically examines both the benefits and drawbacks of Gemini’s summarization feature in the context of real-world use.

Productivity Gains and Efficiency Enhancements

One of the most apparent benefits of Gemini’s summarization feature lies in its potential to enhance productivity. In an era where professionals spend an average of 2.6 hours per day managing email, the time cost of reading, interpreting, and replying to messages is significant. By automatically providing a summary at the top of each email, Gemini reduces the cognitive and temporal burden placed on users, enabling quicker decision-making and faster prioritization of tasks.

This is particularly valuable in enterprise settings, where employees are often inundated with long email threads, reports, and status updates. Instead of sifting through every message, users can rely on summaries to understand the essence of a conversation or to spot action items immediately. In customer service scenarios, for instance, an agent can quickly grasp a user complaint without having to review multiple nested replies, thereby accelerating resolution time.

Moreover, Gemini's ability to summarize not only individual messages but also entire threads means that users gain a contextualized understanding of conversations. This holistic summarization can support executives, team leads, and project managers who need to stay informed across multiple parallel streams of communication without becoming overwhelmed by volume.

Enhancing Accessibility and Reducing Cognitive Load

Gemini's summarization tool also serves as an accessibility aid, especially for users with cognitive impairments, attention deficits, or non-native language fluency. Reading dense or jargon-heavy messages can be mentally taxing. Summarization helps by distilling complex information into more digestible formats. This supports inclusivity and aligns with broader accessibility goals by reducing barriers to understanding and engagement.

Furthermore, in mobile or time-constrained contexts, such as during commutes, between meetings, or while multitasking, the summarization feature is especially useful: users can read the summary and quickly decide whether deeper engagement is necessary. This approach aligns well with modern work environments where speed and clarity are paramount.

AI Hallucination and Misinterpretation Risks

Despite these advantages, one of the most concerning drawbacks of automatic summarization is the potential for hallucination—a phenomenon in which an AI system generates content that is factually incorrect or contextually misleading. Since Gemini employs abstractive summarization, which involves rephrasing content in novel ways rather than directly quoting from the source, there is always a risk that the summary may not faithfully represent the original message.

For example, in legal or contractual emails, the omission or mischaracterization of a clause could have serious repercussions. A message that requests a delay might be summarized as an approval to proceed. Similarly, tone and nuance can be distorted, especially in emotionally sensitive contexts such as feedback, disputes, or personal correspondence. These issues underscore the limitations of language models in grasping emotional subtext, sarcasm, or subtle intention—areas where human communication remains complex and layered.

Even in routine communications, a minor misinterpretation can erode trust in the tool and lead to misinformed actions. Over-reliance on summaries, with users no longer checking the original messages, could further compound these risks.

Erosion of User Agency and the Default Opt-Out Model

Another contentious aspect of Gemini's summarization rollout is its opt-out implementation. By enabling the feature by default, Google assumes user consent unless explicitly revoked. While this approach may encourage faster adoption, it also raises questions about informed consent and user control. Many users may not be aware that their emails are being summarized by AI, nor may they fully understand the implications of this processing on their privacy or workflow.

From a philosophical standpoint, the feature nudges users toward accepting machine-mediated comprehension as a new normal. Over time, this can lead to reduced engagement with original content, a dilution of critical reading habits, and a normalization of filtered communication. In essence, users might begin trusting AI-generated representations of messages more than the messages themselves—a shift with long-term implications for information literacy and digital discernment.

Privacy, Data Handling, and Security Implications

AI-based summarization also introduces significant privacy considerations, especially in light of increasing regulatory scrutiny around data processing and algorithmic decision-making. Although Google asserts that emails are processed in compliance with its privacy standards and that summarization is performed in-session without retaining message content, skeptics remain concerned about the scope and reach of AI within personal inboxes.

The very act of processing content, even temporarily, opens questions about model training, metadata extraction, and potential vulnerabilities. For enterprise users, especially those in regulated sectors such as finance, healthcare, or law, this may pose compliance challenges. The lack of transparency around model behavior—how it decides what to summarize and what to omit—can further erode confidence in the system's reliability.

Organizations adopting Gemini at scale must evaluate whether the benefits of summarization outweigh these risks, and whether proper safeguards and audits are in place to monitor its use.

Long-Term Impact on Communication Norms

As users become accustomed to receiving summaries rather than full messages, there may be a subtle shift in how communication is authored. Writers may begin to anticipate summarization, potentially oversimplifying their messages or structuring content in AI-friendly formats. This phenomenon—known as "writing for the algorithm"—could degrade the richness and spontaneity of email communication.

Additionally, if summarization becomes the norm, users may undervalue long-form communication, nuance, and deliberative writing. While this may streamline workflows, it also risks flattening discourse and reducing the diversity of communication styles.

In conclusion, Gemini’s automatic email summarization represents a powerful but double-edged tool. Its benefits in productivity, accessibility, and efficiency are tangible and well-aligned with modern communication needs. However, the risks—particularly around trust, privacy, interpretation, and long-term behavioral changes—are equally profound. As users, organizations, and regulators grapple with the implications of AI-mediated interaction, it becomes increasingly essential to strike a balance between convenience and control. The path forward must prioritize user agency, transparency, and informed participation in an increasingly automated communication environment.

Industry and Consumer Reactions: From Applause to Alarm

The rollout of Gemini’s automatic email summarization feature has generated a spectrum of reactions across industries, consumer communities, regulatory bodies, and the broader technology landscape. As with many generative AI innovations that ship enabled by default, the feedback has oscillated between enthusiastic support and serious concern. On one side, users and productivity advocates hail the feature as a meaningful leap in communication efficiency; on the other, critics, including privacy experts and digital rights organizations, question its ethical implications and long-term consequences. This section offers a structured examination of how different stakeholders are responding to Gemini’s summarization capability, with particular emphasis on public sentiment, enterprise adoption trends, and emerging regulatory discussions.

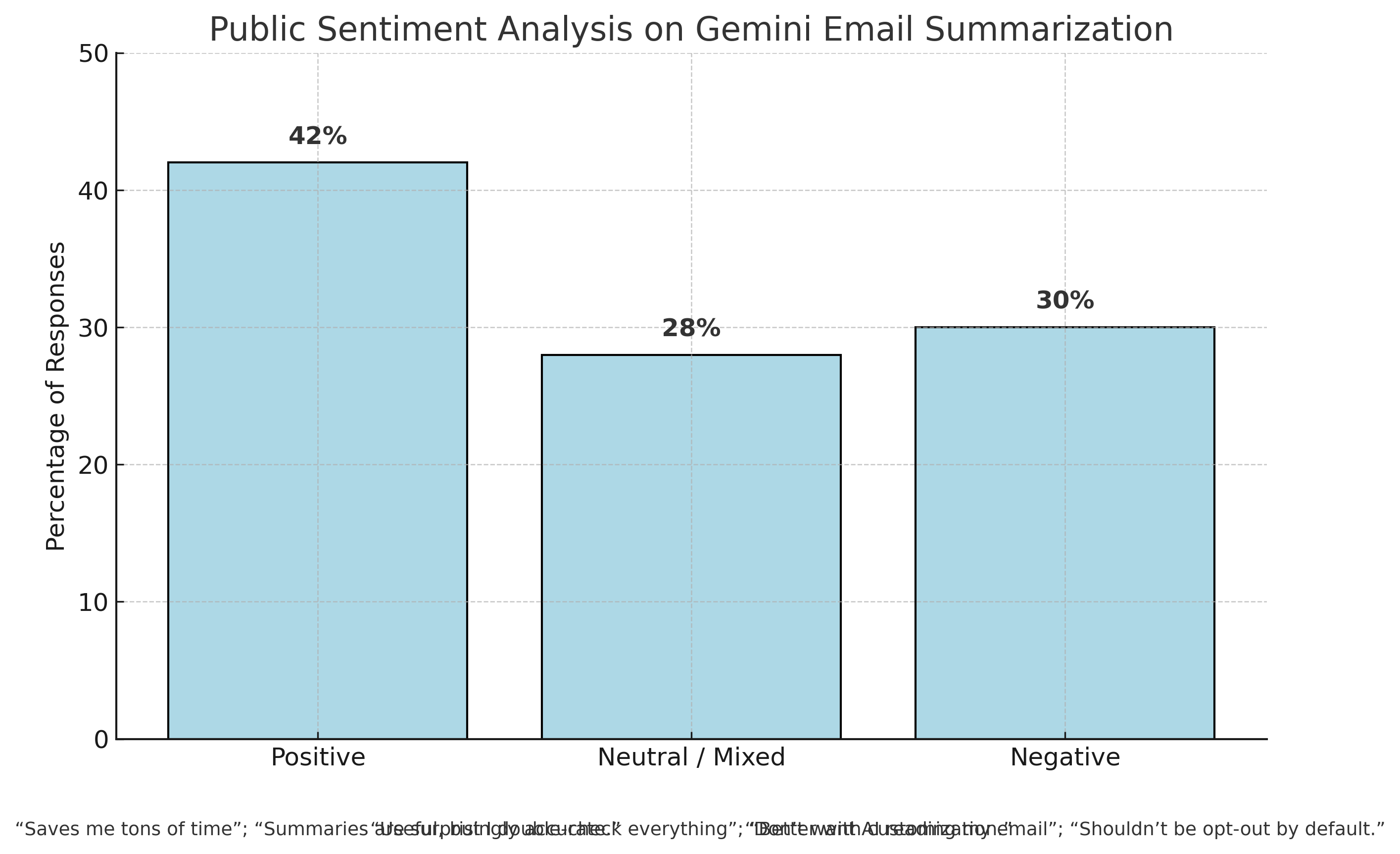

Public Reception: Mixed Enthusiasm and Distrust

Public reaction to Gemini’s summarization tool has been shaped by a mix of pragmatic optimism and ideological caution. On social media platforms such as Reddit, X (formerly Twitter), and Google’s own support forums, early adopters have praised the feature for helping them stay on top of busy inboxes. Many users report that the summaries are surprisingly accurate, coherent, and useful for skimming through long email chains without feeling overwhelmed. Professionals juggling multiple email threads in fast-paced environments, such as journalists, recruiters, and project managers, seem particularly receptive to the feature’s time-saving benefits.

However, this wave of approval is tempered by a considerable undercurrent of user skepticism, particularly regarding the opt-out design, in which summarization is switched on by default. Numerous users have expressed discomfort over the fact that Gemini is actively analyzing email content without explicit consent. Although Google allows users to opt out, critics argue that this model inverts the usual standard of digital agency: users must take action to preserve the status quo instead of affirmatively choosing the new behavior. This friction has prompted some users to disable the feature immediately or migrate to alternative email clients that offer greater transparency or data minimization guarantees.

Enterprise Adoption and Corporate IT Policies

In the enterprise domain, the response to Gemini summarization is more nuanced. Many large organizations have welcomed the feature as a potential driver of employee productivity and digital workflow optimization. With the proliferation of remote and hybrid work models, asynchronous communication has become more important than ever. Email summarization offers a way to reduce context-switching costs and prevent important messages from being overlooked in crowded inboxes.

Nonetheless, corporate IT departments and compliance officers have responded with caution. In regulated industries such as finance, legal services, and healthcare, the integration of generative AI into internal communication tools raises red flags around data governance, audit trails, and regulatory compliance. Concerns include the possible unintentional exposure of confidential information to third-party processing engines, ambiguity around how summaries are stored, and whether these interactions are logged in a manner that could conflict with corporate information retention policies.

In response, some organizations have moved to disable the summarization feature at the administrative level or to impose internal policies governing its use. This has led to increased demand for configurable settings and granular permission controls, which many IT administrators view as essential for safe adoption. Google has acknowledged this feedback by committing to enhanced visibility and administrative customization options in its enterprise rollout roadmap.

Technology Analysts and Industry Experts: A Divided Verdict

Technology analysts have provided a more structured critique of the feature, often contextualizing it within the broader trajectory of AI adoption in productivity software. Proponents argue that Gemini’s summarization represents a natural and inevitable evolution of AI assistance in the workplace. Building on earlier features like Smart Compose, auto-replies, and suggested actions, summarization merely completes the arc toward total AI augmentation of email—a trend that’s already gaining traction with Microsoft Copilot in Outlook and similar tools from startups like Superhuman and Flowrite.

According to these experts, the real differentiator lies not in the existence of summarization per se, but in how well it is implemented. On this front, Gemini has earned praise for producing summaries that are contextually intelligent, stylistically neutral, and generally devoid of glaring factual errors. The system’s ability to process entire threads and emphasize action items over extraneous detail is seen as a technical strength.

Conversely, critics in the same analyst circles have raised flags around explainability, transparency, and potential behavioral shifts. They argue that by inserting AI as a gatekeeper between sender and recipient, companies may unintentionally distort communication dynamics. The act of relying on an AI-generated summary, they warn, may create a sense of false confidence—leading users to make decisions based on incomplete or simplified information. Additionally, AI-generated summaries may inadvertently obscure the sender’s tone or emotional intent, resulting in miscommunications that have social or professional repercussions.

Privacy Advocates and Digital Rights Organizations

Some of the sharpest criticisms have come from digital rights groups, including the Electronic Frontier Foundation (EFF) and Privacy International, which have called attention to the ethical ambiguities of passive consent mechanisms. Their critique centers on the idea that users should always retain clear, affirmative control over how their communications are processed—especially by powerful AI systems capable of learning, adapting, and potentially storing sensitive data.

These organizations argue that Gemini’s summarization feature, even if it processes messages in-session and claims not to retain content, still operates on a premise that undermines data minimization. In their view, summarizing user emails without requiring explicit, informed, and granular permission creates a slippery slope toward further AI intervention in private communication channels. They have also called for increased transparency reports, including disclosures on false positive rates, failure cases, and whether any aspect of the summarization process is used for model refinement.

In response to mounting pressure, Google has reiterated its commitment to user privacy and emphasized that all processing complies with GDPR, CCPA, and internal AI responsibility frameworks. However, the debate remains unresolved, especially as international regulators begin to craft specific provisions around the use of generative AI in messaging platforms.

Regulatory Landscape and Future Legal Implications

As of now, Gemini’s summarization feature exists in a regulatory gray zone. While current data privacy laws focus primarily on storage, consent, and sharing, they do not yet comprehensively address the implications of in-session generative processing. However, with AI-specific legislation taking effect or under development in multiple jurisdictions, including the EU’s AI Act (in force since August 2024, with obligations phased in over the following years) and anticipated updates to U.S. data laws, it is likely that tools like Gemini will soon come under tighter scrutiny.

Potential future regulations could mandate:

- Explicit opt-in for all generative features in communication tools

- Transparency logs detailing AI processing instances

- Rights for users to contest or correct AI-generated summaries

- Disclosures around model provenance, training data, and operational boundaries

Such developments would impose greater design and governance obligations on technology providers and reinforce the importance of user-centric feature design in the age of AI-enhanced communication.

In conclusion, the rollout of Gemini’s automatic email summarization feature has catalyzed an important societal conversation. While many users and organizations see it as a boon for productivity and communication efficiency, others perceive it as a harbinger of excessive AI intervention, potential privacy erosion, and diminished user agency. The real challenge lies in balancing automation with autonomy, and convenience with consent. As generative AI becomes increasingly woven into our communication infrastructure, the ability to earn and maintain user trust will be the defining factor for its sustained success.

Redefining Communication or Just a Temporary Fix?

The advent of Gemini’s automatic email summarization feature stands as both a testament to the maturation of generative AI and a critical inflection point in the evolution of digital communication. As users become increasingly reliant on artificial intelligence to manage, interpret, and summarize the daily deluge of information, it is essential to step back and assess whether such technologies are fundamentally redefining how we interact—or merely providing a stopgap solution to a broader structural issue of information overload.

Google’s decision to integrate Gemini deeply into Gmail, with summarization enabled by default, underscores the company's strategic ambition to make AI a central layer of user productivity. In doing so, Google follows a trajectory that parallels the broader shift in technology—from passive tools to active, anticipatory systems that mediate our decisions and workflows. This transition is not trivial. It reflects a philosophical reordering of digital agency in which users are increasingly recipients of AI-curated content rather than direct consumers of unfiltered information.

On a functional level, the feature has demonstrated significant potential. Users overwhelmed by lengthy email chains, frequent notifications, and dense written exchanges can now engage with condensed insights that facilitate faster decision-making and lower cognitive load. Enterprise teams operating in high-volume communication environments stand to benefit from improved efficiency, reduced context-switching, and better prioritization. From a technical standpoint, Gemini’s performance—anchored in abstractive summarization and contextual embeddings—exemplifies state-of-the-art capabilities in applied natural language processing.

However, the utility of the summarization feature cannot be divorced from the ethical, operational, and psychological trade-offs it introduces. The fact that users must opt out rather than opt in raises valid concerns about informed consent and transparency. The potential for hallucination, where the AI misrepresents or fabricates content, undermines the reliability of summaries, especially in sensitive professional or personal scenarios. Additionally, the shift in user behavior toward over-reliance on AI interpretations may lead to an erosion of reading comprehension, attention to detail, and critical thinking over time.

Moreover, the presence of AI as an intermediary between sender and receiver may alter communication dynamics in subtle but significant ways. Messages may be written with AI interpretation in mind, potentially flattening nuance and reducing linguistic richness. In turn, recipients may begin to perceive summarized insights as definitive, rather than exploratory—an orientation that could have long-term implications for how disagreement, ambiguity, and emotional complexity are handled in digital correspondence.

From a societal perspective, Gemini’s summarization functionality exemplifies a larger challenge facing the AI community: how to deploy automation that respects user autonomy without sacrificing convenience. It raises the question of what constitutes “helpful” AI—and whether helpfulness should be defined purely in terms of speed and efficiency or broadened to include trust, fidelity, and user comprehension. As generative AI systems continue to be embedded into critical productivity tools, the need for frameworks that emphasize accountability, explainability, and reversibility becomes all the more urgent.

At the regulatory level, this development is likely to intensify calls for stricter controls over generative AI in consumer-facing applications. Google has publicly stated that Gemini’s summarization respects privacy and complies with existing laws. Even so, the lack of industry-wide standards leaves users without a consistent baseline against which to compare tools or set expectations. Many digital rights organizations argue that, without meaningful consent mechanisms, the power imbalance between technology providers and consumers will only deepen.

Looking ahead, Gemini’s summarization feature could follow one of several paths. Google could address the concerns raised by:

- being more transparent about how summaries are generated;
- offering fine-grained control over the feature’s behavior;
- providing clearer data privacy guarantees.

If it does, the feature could become a benchmark for responsible AI deployment in communication systems. Enhanced integration across Google Workspace, coupled with user education and feedback mechanisms, could reinforce trust and drive sustainable adoption.

Conversely, failure to address the risks—particularly hallucination, overreach, and default consent—may lead to reputational damage, reduced user confidence, and regulatory backlash. Users may seek alternative platforms that prioritize privacy and unmediated communication, or they may resist further automation of personal interactions altogether.

In conclusion, Gemini’s automatic email summarization is a compelling yet controversial innovation. It offers a vision of digital communication that is faster, leaner, and more manageable, but also filtered, abstracted, and potentially less human. Whether this vision enhances or erodes our collective ability to connect meaningfully remains an open question. What is clear is that the stakes are high. Machines increasingly decide what we see, read, and prioritize, so designing with care, transparency, and respect for the user must remain a priority.

The goal should not merely be to automate but to augment with integrity. That means building tools that empower users not just to do more, but to understand more, trust more, and ultimately connect more authentically in an increasingly mediated world.
