Developer Downfall? Unpacking the Hidden Risks of AI Dependency in Software Engineering

In the rapidly evolving landscape of software development, artificial intelligence (AI) has emerged as a transformative force. From streamlining repetitive coding tasks to offering intelligent suggestions and even generating entire blocks of code, AI tools have become indispensable components of the modern developer’s toolkit. Tools such as GitHub Copilot, ChatGPT, and Tabnine are increasingly built into integrated development environments (IDEs), offering assistance that ranges from simple syntax correction to complex algorithm design. This growing adoption is driven by the promise of increased productivity, accelerated development cycles, and enhanced accessibility for individuals new to programming.

However, as developers become more reliant on AI-assisted tools, a new set of challenges and risks begins to surface. While the benefits are considerable, the dependency on AI systems introduces vulnerabilities that merit careful consideration. These risks are not limited to technical dimensions but also extend to educational, ethical, and professional domains. Developers may find themselves gradually losing touch with foundational programming principles, leading to an erosion of core problem-solving capabilities. Moreover, issues related to code security, intellectual property, and the quality of AI-generated solutions raise serious concerns for organizations and individual practitioners alike.

This blog post explores the multifaceted risks associated with increasing reliance on AI in software development. It examines how AI dependency may inadvertently undermine key competencies within the developer community. Across five sections, the post covers the evolution of AI in coding, the potential degradation of developer skills, security and quality issues in AI-generated code, the ethical and legal implications of code ownership, and strategies for fostering a balanced, responsible approach to AI integration.

By examining current trends and real-world examples, this discussion seeks to empower developers, team leads, and decision-makers with insights to make informed choices about their use of AI tools. As artificial intelligence becomes more capable and more deeply embedded in the software development lifecycle, a proactive understanding of its associated risks will be essential. Ultimately, the goal is not to discourage the use of AI in development, but to encourage thoughtful and intentional integration—ensuring that human ingenuity, critical thinking, and ethical accountability remain at the forefront of the software engineering discipline.

The Rise of AI in Software Development

The integration of artificial intelligence into software development has progressed from a niche innovation to a fundamental component of the modern programming ecosystem. The transition from rule-based systems and basic code autocomplete functionalities to large-scale AI-powered development tools marks a significant shift in how code is written, reviewed, and maintained. This transformation has been driven by advances in natural language processing (NLP), machine learning (ML), and, most recently, large language models (LLMs), which have collectively enabled machines to understand, generate, and refactor code at an unprecedented level of sophistication.

Historically, software development was an entirely manual and mentally intensive task. Developers relied on comprehensive knowledge of programming languages, algorithms, data structures, and debugging techniques. Over the past two decades, integrated development environments (IDEs) introduced productivity features such as syntax highlighting, code snippets, and autocomplete functions. These tools improved developer efficiency but had little contextual understanding of the code being written. The rise of AI in the past five years, particularly transformer-based models, has changed this landscape. AI systems can now interpret developer intent, understand natural language prompts, and generate code that aligns closely with specific functional requirements.

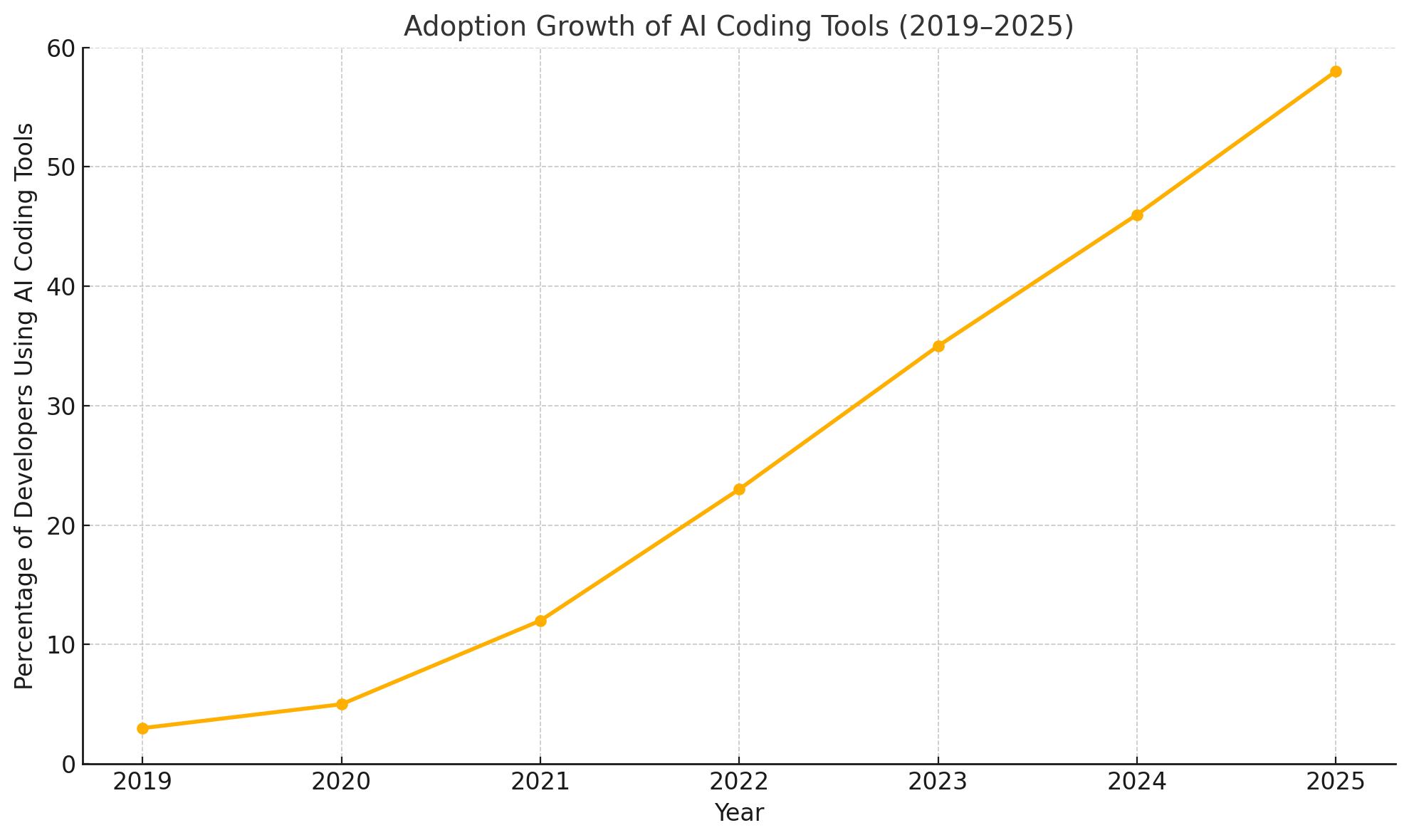

The most prominent example of this evolution is GitHub Copilot, launched as a technical preview in 2021 and built on OpenAI’s Codex model. Copilot acts as a real-time pair programmer, suggesting entire lines or blocks of code as the developer types. It leverages patterns from billions of lines of code drawn from public repositories, allowing it to offer solutions to common programming tasks. Since its debut, Copilot has seen rapid adoption across enterprises, startups, and educational institutions. According to GitHub's 2024 developer survey, over 46% of professional developers report using AI coding assistants regularly, a figure that has doubled since 2022.

Alongside Copilot, other platforms such as Tabnine, Amazon CodeWhisperer, Replit’s Ghostwriter, and ChatGPT plugins have expanded the landscape of AI-enabled coding support. These tools are not limited to code generation; they assist in documentation, code summarization, refactoring, test creation, and even debugging. Developers can describe what they want to build in plain English, and the AI will generate the scaffolding or complete implementations. This has proven especially valuable for prototyping, exploring unfamiliar APIs, or working across multiple programming languages.

The benefits are significant. Developers report faster turnaround times, reduced cognitive load, and lower barriers to entry for novice programmers. Startups and small teams benefit from increased velocity, as AI can partially offset limited manpower. Educational settings leverage AI tools to help students grasp complex coding concepts by providing immediate and contextual examples. Furthermore, companies integrating AI-assisted development into their workflows often report improvements in feature delivery timelines and codebase maintainability.

Despite these advantages, the speed of adoption has outpaced the development of best practices or standardized guidelines for AI tool usage. In many organizations, AI-generated code is introduced into production environments with limited human oversight. This reality underscores the importance of scrutinizing the implications of such widespread use.

To visualize the surge in AI adoption, consider the following chart:

These trends clearly illustrate a fundamental change in the software development paradigm. What was once a solitary, logic-driven exercise is becoming increasingly collaborative—with machines playing the role of intelligent assistants capable of contributing meaningfully to the codebase. Yet, this shift also introduces latent dependencies, as developers become accustomed to offloading more of their cognitive work to AI systems.

An important aspect of this transition is its impact on how development teams are structured. Junior developers increasingly rely on AI suggestions as substitutes for guidance typically provided by mentors or team leads. Mid-level engineers may optimize for speed over comprehension, trusting that the AI is “usually right.” Senior developers, while more aware of the nuances involved, may still grow dependent on these tools for boilerplate tasks, ultimately influencing the broader culture of software craftsmanship.

Moreover, AI-driven development is influencing hiring, training, and education practices. Bootcamps and technical courses now incorporate AI tools in their curricula, and interviews increasingly assess prompt engineering skills alongside traditional coding proficiency. As the boundary between human-generated and machine-assisted code blurs, so too does the definition of a skilled developer.

While this evolution may be inevitable, it is not without trade-offs. The transition toward AI-enhanced programming must be approached with a sense of caution and strategic awareness. As subsequent sections will explore, the convenience afforded by AI may come at the cost of diminished understanding, reduced accountability, and the potential erosion of long-term software quality.

In summary, the rise of AI in software development represents a paradigm shift fueled by innovation, necessity, and a relentless pursuit of efficiency. Tools like Copilot and its peers are rapidly becoming standard components of the developer experience. Yet as the field races toward greater automation, it becomes critical to pause and evaluate the consequences of such a shift—not only in terms of technical outcomes but in the very fabric of what it means to be a developer in the AI era.

Erosion of Core Programming Skills

As artificial intelligence becomes increasingly embedded in the software development process, a growing concern emerges: the gradual erosion of core programming skills among developers. While AI tools offer immense convenience and efficiency, their widespread use may inadvertently undermine a developer’s ability to write, understand, and debug code independently. This phenomenon poses risks not only to individual proficiency but also to the broader integrity of software engineering as a discipline.

Traditionally, developers have acquired their expertise through direct engagement with problems—writing algorithms from scratch, debugging complex issues, and learning from errors encountered along the way. These experiences instill a deep understanding of programming paradigms, computational thinking, and best practices. However, with AI tools like GitHub Copilot, ChatGPT, and Tabnine now capable of generating entire code snippets or functions from simple prompts, developers may bypass the rigorous cognitive processes that once formed the bedrock of software craftsmanship.

A significant consequence of this shift is a diminished grasp of programming fundamentals. Developers relying heavily on AI-generated suggestions may struggle to comprehend the underlying logic of the code they integrate into their applications. Over time, this can result in a superficial understanding of syntax and structure, with a reduced capacity to innovate or troubleshoot effectively when AI tools fall short. This issue is especially pronounced among novice programmers who, rather than learning through exploration and iteration, become accustomed to accepting AI outputs at face value.

The implications of this are not merely theoretical. Several studies and industry reports have begun to document this trend. For example, a 2024 survey by Stack Overflow revealed that 38% of developers under the age of 30 admitted to using AI tools as their primary method for solving coding problems, often without verifying the logic or security of the generated code. This dependence can lead to an overconfidence in code that has not been thoroughly understood, increasing the likelihood of introducing bugs or vulnerabilities into production systems.

Moreover, the erosion of core skills extends beyond syntax and semantics. Problem decomposition—a crucial aspect of effective programming—risks being neglected. Developers may become less inclined to break down complex requirements into manageable components if they believe AI can generate an end-to-end solution. This discourages analytical thinking and hampers the development of robust architectural design skills. The role of abstraction, modularity, and optimization—core principles that differentiate excellent programmers from average ones—may become undervalued in an AI-dominated workflow.

Another concern lies in debugging. While AI can assist in identifying errors and suggesting fixes, developers must still understand how to trace issues through a codebase, interpret stack traces, and assess the broader implications of a change. Without these abilities, reliance on AI becomes a double-edged sword. When an AI-generated function fails or produces unintended consequences, the developer must have sufficient knowledge to diagnose and resolve the issue. A lack of debugging proficiency can lead to prolonged downtime, higher maintenance costs, and decreased code quality.

Mentorship and professional growth within development teams are also affected. In environments where AI-generated code is pervasive, junior developers may receive fewer opportunities for in-depth learning guided by senior engineers. Instead of exploring multiple approaches to a problem or engaging in constructive code reviews, junior team members may adopt a passive stance, assuming that AI-suggested code is inherently correct. This dynamic risks creating a workforce that is proficient in prompt engineering but deficient in foundational software engineering skills.

Anecdotal evidence from industry professionals supports these concerns. In one notable case shared at a 2023 developer conference, a team lead at a major fintech company reported that entry-level developers hired during the pandemic—when remote work and AI tools became more prevalent—demonstrated weaker debugging and architectural design skills than previous cohorts. Despite being productive in terms of output volume, these developers often lacked the ability to explain the code they were using, let alone refactor it for long-term maintainability. The issue necessitated additional onboarding and retraining measures to ensure quality and accountability.

Educational institutions are not immune to these challenges. While some universities have embraced AI tools as part of their curriculum, others express concern that students are outsourcing too much of their cognitive effort. Professors report instances of students submitting assignments composed almost entirely of AI-generated code, often with minimal understanding of how or why the code works. This trend calls into question the effectiveness of traditional assessment models and the role of educators in preserving programming literacy in the AI era.

Furthermore, the psychological effects of AI dependence warrant consideration. Developers who routinely rely on AI to solve problems may experience diminished confidence in their own abilities. This learned helplessness can discourage initiative and reduce the motivation to explore alternative solutions. The creative aspect of programming—once considered a hallmark of the profession—may give way to a transactional mindset centered around efficiency and minimal effort. Over time, such a shift can erode the culture of innovation that has long driven technological progress.

It is important to recognize that the erosion of core skills does not occur in isolation. It is exacerbated by organizational pressures to deliver faster, coupled with a lack of structured oversight on AI tool usage. In many agile environments, velocity metrics and delivery deadlines incentivize developers to prioritize speed over comprehension. When AI tools facilitate rapid prototyping and deployment, the temptation to skip thorough code reviews, testing, and documentation becomes stronger. Without deliberate intervention, the long-term effects of this shortcut-driven approach can compromise software reliability and maintainability.

Despite these challenges, there are strategies that organizations and individuals can employ to mitigate the erosion of programming skills. Encouraging code literacy, mandating code reviews, and fostering a culture of continuous learning are critical. Developers should be encouraged to question AI-generated outputs, conduct manual testing, and document their reasoning for incorporating specific solutions. Pair programming—especially when one partner is human and the other AI—should be structured in a way that emphasizes learning rather than passive acceptance.

In addition, mentorship must be preserved as a cornerstone of professional development. Senior engineers should guide less experienced colleagues through the rationale behind architectural decisions, trade-offs, and implementation strategies. Educational programs should adapt to the presence of AI by designing assignments that require students to explain, modify, and defend their code, rather than merely submitting working solutions.

Ultimately, while AI tools are powerful allies in the development process, they must be used judiciously. Developers must remain vigilant stewards of their own skills, recognizing that convenience should never come at the cost of capability. The goal is not to reject AI assistance but to use it in a way that complements, rather than replaces, the human intellect at the heart of software creation.

In conclusion, the erosion of core programming skills is a pressing and multifaceted risk that accompanies AI dependence. It affects not only the technical competence of individual developers but also the collective resilience of development teams and the software systems they produce. Safeguarding the foundational elements of programming requires intentional action, balanced tool usage, and a renewed commitment to mastery in an era increasingly defined by machine assistance.

Security and Code Quality Risks

While artificial intelligence has introduced new efficiencies in software development, its integration has also generated a parallel set of security and code quality concerns. AI-generated code, though impressive in its syntactic and functional capabilities, does not inherently adhere to security best practices or software quality standards. As developers increasingly lean on AI systems to produce code autonomously, the risk of embedding subtle bugs, vulnerabilities, and design flaws into production environments grows substantially.

One of the central challenges in using AI for code generation is the inherent unpredictability of machine-generated outputs. AI models such as those powering GitHub Copilot or ChatGPT are trained on vast corpora of publicly available code from repositories across the internet. While this allows the models to generate syntactically correct and contextually plausible solutions, it also means they may replicate insecure or outdated patterns from their training data. Importantly, these tools lack true semantic understanding or the ability to guarantee the correctness of their outputs across diverse application contexts.

Security vulnerabilities introduced by AI-generated code are a growing concern for organizations. Common examples include improper input validation, insecure authentication methods, weak encryption implementations, and susceptibility to SQL injection or cross-site scripting (XSS) attacks. In a notable case reported in 2023, an enterprise discovered that code generated by an AI assistant for a web application had inadvertently disabled server-side input sanitization, leading to an exploitable XSS vulnerability. The oversight went unnoticed until a security audit several months after deployment, emphasizing the dangers of deploying AI-generated code without comprehensive review.
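To make the SQL injection risk concrete, here is a minimal sketch using Python's standard `sqlite3` module. It is not taken from any incident described in this post; the table, function names, and payload are illustrative assumptions. It contrasts a query built by string concatenation, a pattern an assistant could plausibly reproduce from older code, with a parameterized query.

```python
import sqlite3


def make_demo_db():
    # In-memory database with a hypothetical "users" table for the demo.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn


def find_user_unsafe(conn, username):
    # Vulnerable: user input is concatenated directly into the SQL text.
    query = "SELECT id, name FROM users WHERE name = '" + username + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Safer: the driver binds the value, so it is never parsed as SQL.
    return conn.execute(
        "SELECT id, name FROM users WHERE name = ?", (username,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "nobody' OR '1'='1"
    print(len(find_user_unsafe(conn, payload)))  # 2: the injected OR matches every row
    print(len(find_user_safe(conn, payload)))    # 0: the payload is treated as a plain string
```

Both functions look equally plausible at a glance, which is why a reviewer needs to check how a suggested query is assembled rather than only whether it returns the expected rows for well-behaved input.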

In addition to security lapses, AI-assisted development can compromise software quality. Quality, in this context, refers not only to correctness and functionality but also to maintainability, scalability, performance, and adherence to design principles. AI tools, while capable of generating working code, often do so in a way that lacks modularity, proper documentation, or alignment with a project's architectural conventions. Developers who blindly trust AI-generated solutions may end up introducing technical debt that compounds over time, increasing the cost of future maintenance and upgrades.

A 2024 study conducted by the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) found that AI-generated code had a 30% higher likelihood of containing critical security vulnerabilities compared to code written by experienced developers. In controlled experiments, AI-assisted developers introduced exploitable flaws in over 50% of their projects when prompted to use AI tools without manual validation or code review. These findings underscore the importance of treating AI outputs as drafts requiring careful inspection rather than production-ready artifacts.

Furthermore, AI tools are not immune to context errors. While they may correctly interpret a simple prompt or function requirement, they lack awareness of the broader software ecosystem in which their code will operate. For instance, an AI-generated function might rely on deprecated APIs, fail to comply with project-specific naming conventions, or assume incorrect data formats. In isolation, these issues may appear trivial, but when multiplied across a large codebase, they contribute to inconsistency, fragility, and potential integration failures.

Another important consideration is the potential for AI-generated code to circumvent organizational compliance standards. Industries such as finance, healthcare, and defense operate under strict regulatory frameworks that mandate secure coding practices and auditability. AI tools, unless configured and monitored appropriately, can introduce code that falls short of these compliance requirements, exposing organizations to legal and reputational risks.

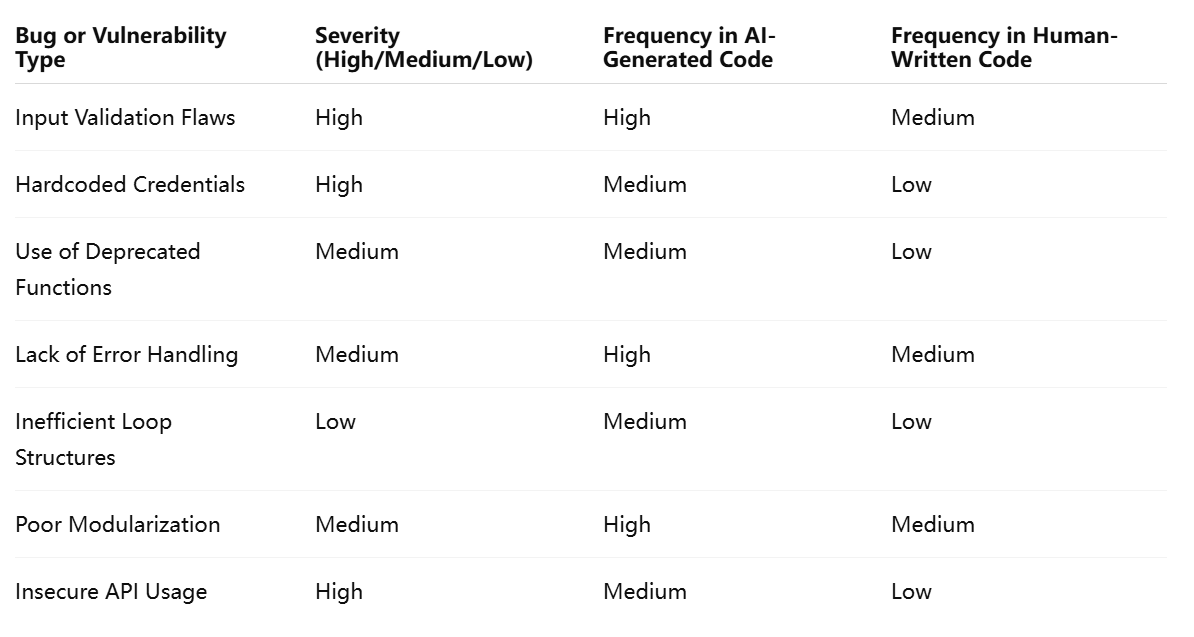

To illustrate the contrast between AI-generated and human-written code in terms of security and reliability, consider the following comparative table:

The above table highlights the disproportionate risk introduced when developers incorporate AI-generated code without sufficient scrutiny. Although AI can expedite coding tasks, it does so without the nuanced judgment or contextual awareness of an experienced software engineer. This limitation becomes particularly problematic in mission-critical applications where even minor flaws can have cascading consequences.

Moreover, the rapid adoption of AI tools is outpacing the evolution of secure development practices. Many organizations have yet to update their secure coding guidelines, training programs, or code review checklists to account for AI-assisted development. In such environments, developers may inadvertently introduce low-quality or insecure code simply because their workflows have not adapted to accommodate the new risks.

One potential solution is the integration of automated security scanning tools and static code analyzers alongside AI assistants. Tools like SonarQube, Checkmarx, and Snyk can serve as a secondary validation layer, catching common errors and vulnerabilities that might escape a developer's notice. Additionally, peer code reviews—long considered a best practice—must remain a mandatory step in the development lifecycle, even when AI is involved. AI outputs should never bypass human oversight, particularly in environments where trust, safety, and compliance are paramount.
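As a rough illustration of what a secondary validation layer does, the sketch below uses Python's standard `ast` module to flag direct calls to `eval` and `exec`. It is a toy, not a stand-in for SonarQube, Checkmarx, or Snyk, and the list of risky names and the sample source are assumptions chosen for the example.

```python
import ast

# Illustrative list only; real analyzers apply far richer rule sets.
RISKY_CALLS = {"eval", "exec"}


def find_risky_calls(source):
    """Return sorted (line_number, name) pairs for direct calls to risky builtins."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Only plain-name calls such as eval(x) are detected here;
        # aliased or attribute-based calls are out of scope for this sketch.
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in RISKY_CALLS:
                findings.append((node.lineno, node.func.id))
    return sorted(findings)


# Hypothetical snippet standing in for assistant-generated code.
SAMPLE = '''
def load(expr):
    return eval(expr)

def run(code):
    exec(code)
'''

if __name__ == "__main__":
    for line, name in find_risky_calls(SAMPLE):
        print(f"line {line}: call to {name}()")
```

A check like this could run in continuous integration so that generated code receives the same automated scrutiny as hand-written code, with human review still applied afterwards.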

The responsibility also lies with the developers themselves to maintain a healthy level of skepticism and vigilance. Just as seasoned engineers do not blindly trust code found in online forums, they should apply the same caution to AI-generated suggestions. Questions such as “Is this the most secure and efficient way to solve this problem?” or “Does this align with our architectural standards?” should become second nature in the AI era.

Beyond the individual developer, team leaders and organizations must foster a culture of critical engagement with AI tools. This includes providing training on secure code practices, promoting awareness of AI’s limitations, and instituting policies that define acceptable use cases and boundaries for AI assistance. Some companies are even beginning to develop internal AI governance frameworks, assigning specific roles to audit and evaluate the quality and security of AI-contributed code on an ongoing basis.

In conclusion, while AI coding tools undoubtedly enhance productivity, they also introduce tangible risks to security and code quality. These risks, if left unaddressed, can compromise application integrity, inflate technical debt, and expose organizations to regulatory or reputational harm. Developers and organizations must recognize that AI-generated code is not immune to error or oversight. It must be subjected to the same rigorous scrutiny, validation, and testing as any other part of the development process. Only through such diligence can the industry reap the benefits of AI while safeguarding the reliability and security of modern software systems.

Intellectual Property and Ethical Concerns

As artificial intelligence becomes increasingly integrated into the software development lifecycle, questions surrounding intellectual property (IP) rights and ethical responsibilities have assumed a central role in industry discourse. AI coding assistants—trained on vast repositories of publicly accessible code—raise profound concerns regarding the ownership, originality, and legality of the content they generate. These issues present not only legal challenges but also moral dilemmas that developers, organizations, and platform providers must address.

At the heart of the intellectual property debate lies the issue of training data provenance. Many AI-powered code generation tools rely on large language models (LLMs) trained using publicly available code sourced from platforms such as GitHub, Bitbucket, and Stack Overflow. While these repositories host open-source code, much of it is protected under various licenses, ranging from permissive ones such as MIT and Apache 2.0 to copyleft ones such as the GNU General Public License (GPL). These licenses often contain stipulations regarding attribution, redistribution, and derivative works.

When an AI system is trained on such licensed code and subsequently generates outputs that resemble or replicate that code, the boundaries between fair use and infringement become blurred. Developers using AI tools may unknowingly incorporate code fragments that violate license terms, exposing themselves or their employers to legal liability. For instance, if AI-generated code closely resembles a function under GPL but is used in a proprietary product without adhering to GPL requirements, it may constitute a breach of license—even if the developer had no direct knowledge of the source material.

This issue is further complicated by the opaque nature of AI model training. Users often have no visibility into the datasets used to train the models nor the licensing structure of those datasets. This lack of transparency makes it exceedingly difficult to determine whether a particular output is original, derivative, or infringing. While AI vendors may include disclaimers or terms of use that attempt to transfer responsibility to the user, such provisions do little to mitigate real-world legal risks.

Beyond legal concerns, there are ethical implications tied to the use of AI in code generation. Open-source contributors spend countless hours developing, refining, and maintaining their codebases. When AI systems leverage this labor to generate outputs without attribution or recognition, it raises questions about fairness and respect for the open-source community. The foundational ethos of open-source software is built upon collaboration, transparency, and acknowledgment. AI-generated outputs that fail to credit the original authors erode this ethos and undermine the sustainability of open collaboration.

Moreover, ethical accountability becomes increasingly ambiguous in AI-assisted development. If a piece of AI-generated code results in a catastrophic failure—whether due to a security flaw, logical error, or performance issue—who bears responsibility? The AI tool provider, the developer who used it, or the organization that deployed the resulting software? Traditional software engineering places accountability squarely on the human developer. However, as AI systems begin to take a more active role in shaping software outputs, the question of moral and professional responsibility becomes more complex.

Consider the scenario in which an AI tool suggests an algorithm that, while performant, introduces significant privacy concerns—perhaps by logging user data in an insecure manner. If the developer, due to inexperience or misplaced trust, implements the suggestion without scrutiny, the resulting harm affects end-users, damages reputations, and may trigger legal repercussions. Yet tracing the origin of the flawed implementation back to a specific actor becomes problematic when AI systems operate as “black boxes” with no explanation or traceability.

Another emerging concern is the potential for AI tools to reinforce existing biases in code or propagate harmful patterns. If the training data includes biased implementations—such as discriminatory user input handling, hardcoded assumptions, or culturally insensitive practices—the AI may reproduce or even amplify these flaws. Developers may not detect such issues, especially if the output appears technically sound. This introduces not only ethical risks but also reputational damage and public backlash if such biased behaviors are deployed in consumer-facing applications.

To navigate these complex issues, developers and organizations must adopt proactive strategies for ethical and legal compliance. First, it is essential to understand the licensing implications of integrating AI-generated code into software projects. This may require the assistance of legal counsel or IP specialists, especially for companies operating in regulated industries or jurisdictions with strict copyright enforcement. Some organizations have started conducting AI code audits to detect potential license conflicts or duplicated patterns from open-source projects.

Second, developers should treat AI outputs as raw material rather than finished products. Code generated by AI should be reviewed, refactored, and validated in the same manner as human-authored code. Where feasible, developers should document the provenance of AI-generated code—detailing prompts used, tool versions, and post-generation modifications—to maintain an auditable trail of their development process.

Third, ethical guidelines for AI tool usage should be formally codified within organizations. These guidelines might include policies for attribution, data privacy, bias detection, and appropriate use cases. Training programs can help developers understand not only how to use AI tools effectively but also how to do so responsibly. Encouraging a culture of ethical reflection is critical to ensuring that AI augments rather than diminishes professional standards in software engineering.
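The provenance trail suggested in the second recommendation above (prompts used, tool versions, and post-generation modifications) could be captured in a small structured record. The following is one possible sketch; the class name, field names, and sample values are all hypothetical and would need to match whatever an organization's policy actually requires.

```python
from dataclasses import dataclass, field, asdict
import json


@dataclass
class AIProvenanceRecord:
    # All fields are illustrative assumptions, not a standard schema.
    file_path: str
    tool_name: str
    tool_version: str
    prompt: str
    modifications: list = field(default_factory=list)
    reviewed_by: str = ""

    def to_json(self):
        # Stable key order keeps the record easy to diff in version control.
        return json.dumps(asdict(self), indent=2, sort_keys=True)


# Hypothetical example entry.
record = AIProvenanceRecord(
    file_path="billing/invoice.py",
    tool_name="example-assistant",
    tool_version="1.2.3",
    prompt="Write a function that totals invoice line items.",
    modifications=["Switched to Decimal arithmetic", "Renamed to match team conventions"],
    reviewed_by="j.doe",
)

if __name__ == "__main__":
    print(record.to_json())
```

Storing such records next to the code, for example as a JSON file committed with the change, gives auditors a trail without depending on any particular vendor's tooling.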

AI vendors also have a significant role to play. They must prioritize transparency by disclosing high-level information about the sources used in model training and implementing mechanisms for citation and attribution where possible. Emerging techniques such as watermarking, output tracing, and synthetic signature tagging could enable users to identify the origins of generated code fragments. Furthermore, providers should improve model interpretability to help users understand why a particular solution was suggested and what its limitations might be.

In parallel, policy and regulatory frameworks must evolve to keep pace with the realities of AI-assisted software development. Governments and standard-setting bodies may need to issue guidelines on copyright applicability to AI-generated works, define the scope of developer liability, and promote responsible AI development practices. Already, some jurisdictions are exploring legislation around AI transparency and accountability that could extend to software development contexts.

In conclusion, the intellectual property and ethical dimensions of AI-assisted coding are not merely peripheral concerns—they are central to the responsible evolution of the field. As AI becomes more capable and more pervasive, developers must not abdicate their legal and moral responsibilities. Rather, they must embrace a conscientious approach that safeguards the rights of original creators, ensures transparency, and promotes accountability at every stage of the development process. Only by embedding ethical and legal considerations into the core of AI integration can the software industry preserve its integrity while harnessing the transformative potential of intelligent automation.

Building a Balanced Approach to AI in Development

The increasing integration of artificial intelligence into software engineering is reshaping the development landscape at an unprecedented pace. While the earlier sections of this post have highlighted the risks associated with AI dependency, ranging from skill erosion and security vulnerabilities to ethical and intellectual property concerns, it is equally important to recognize that AI tools, when used responsibly, can serve as powerful enablers of productivity and innovation. The key lies not in rejecting AI, but in fostering a balanced, informed, and principled approach to its application in software development.

A balanced strategy begins with a clear understanding of AI’s role within the development process. AI should be viewed not as a replacement for the human developer but as an augmentation layer that enhances efficiency and facilitates experimentation. Just as compilers, version control systems, and integrated development environments revolutionized software engineering in earlier eras, AI represents the next evolutionary step in toolchain advancement. However, unlike those largely deterministic predecessors, AI introduces probabilistic behavior: the same prompt can yield different, and sometimes incorrect, output. That variability is why AI output requires deliberate oversight and human judgment.

Developers must cultivate a mindset that regards AI outputs as suggestions or drafts rather than final solutions. This mental framework encourages critical thinking, fosters learning, and maintains accountability. For instance, when using an AI tool to generate a function, developers should actively review the logic, assess edge cases, refactor the code to match organizational standards, and test it thoroughly before integration. This practice ensures that AI acts as a catalyst for productivity rather than a conduit for unchecked automation.
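To make the review step concrete, here is a minimal sketch in Python. The function names `average_draft` and `average_reviewed` are hypothetical and stand in for any AI-suggested helper. The draft is the kind of plausible, happy-path code an assistant might produce, and the reviewed version shows what a developer might change after checking edge cases.

```python
def average_draft(values):
    """Hypothetical AI-suggested draft: correct only for non-empty input."""
    return sum(values) / len(values)  # raises ZeroDivisionError on empty input


def average_reviewed(values, default=0.0):
    """Reviewed version: the empty-input edge case is handled explicitly."""
    values = list(values)  # accept any iterable, including generators
    if not values:
        return default
    return sum(values) / len(values)


def check_edge_cases():
    """Small tests a reviewer might add before integrating the function."""
    assert average_reviewed([1, 2, 3]) == 2.0
    assert average_reviewed([]) == 0.0
    assert average_reviewed(x for x in (4, 6)) == 5.0
    try:
        average_draft([])
    except ZeroDivisionError:
        pass  # the unreviewed draft fails on the edge case, as expected
    else:
        raise AssertionError("draft unexpectedly handled empty input")


if __name__ == "__main__":
    check_edge_cases()
```

Returning a default for empty input is only one possible decision. A team might prefer to raise a descriptive error instead, which is exactly the sort of judgment the review is meant to surface.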

One of the most effective ways to institutionalize balanced AI use is through formal guidelines embedded within development workflows. Organizations should establish internal policies that define how AI tools should be used, when human review is mandatory, and which types of code or tasks are considered appropriate for AI assistance. These policies might include mandates for peer review of AI-generated code, limitations on the use of AI in security-critical modules, and requirements for documentation of AI-assisted contributions.
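Policies like these are easier to follow when they can be checked automatically. The sketch below assumes a hypothetical `Change` record and an illustrative list of security-critical path patterns. Neither comes from a real tool, and an actual team would substitute its own rules and wire the check into its review or CI process.

```python
from dataclasses import dataclass
from fnmatch import fnmatch

# Hypothetical path patterns; each organization would define its own.
SECURITY_CRITICAL_PATTERNS = ("auth/*", "crypto/*", "payments/*")


@dataclass
class Change:
    """Illustrative description of one proposed code change."""
    path: str
    ai_assisted: bool
    peer_reviewed: bool
    documented: bool  # AI assistance is noted in the commit or PR description


def policy_violations(change: Change) -> list[str]:
    """Return the policy rules that an AI-assisted change breaks."""
    if not change.ai_assisted:
        return []  # the rules below apply only to AI-assisted changes
    problems = []
    if any(fnmatch(change.path, p) for p in SECURITY_CRITICAL_PATTERNS):
        problems.append("AI assistance is restricted in security-critical modules")
    if not change.peer_reviewed:
        problems.append("AI-generated code requires peer review")
    if not change.documented:
        problems.append("AI-assisted contributions must be documented")
    return problems


if __name__ == "__main__":
    change = Change("auth/login.py", ai_assisted=True,
                    peer_reviewed=True, documented=False)
    for problem in policy_violations(change):
        print(f"{change.path}: {problem}")
```

Keeping the rules in one small function makes the policy itself reviewable: changing what counts as security-critical becomes an ordinary, visible code change.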

Moreover, the importance of continuous learning cannot be overstated. Developers must remain committed to refining their foundational programming skills, even as AI tools reduce the need for routine coding tasks. Investing time in studying algorithms, design patterns, system architecture, and debugging techniques reinforces long-term competence and enables developers to make informed decisions when interpreting AI outputs. Organizations can support this goal by offering structured training programs that blend traditional software engineering education with practical guidance on AI tool usage.

Team structures and collaboration practices should also evolve to accommodate the presence of AI. Pair programming, long recognized as a method for improving code quality and knowledge sharing, can be adapted to include AI as a “third partner.” In this model, two developers work together while periodically consulting an AI assistant for suggestions, explanations, or alternative implementations. This setup not only increases efficiency but also encourages dialogue around the validity of AI-generated code, creating a shared sense of accountability and deeper mutual understanding.

Mentorship must remain a core component of developer growth. Senior engineers should take proactive roles in guiding junior colleagues on how to interpret and evaluate AI-generated code. This mentorship should emphasize critical evaluation skills, ethical reasoning, and architectural thinking. By doing so, organizations can preserve institutional knowledge, build resilience, and prepare the next generation of developers to work effectively in AI-augmented environments.

Another key element of a balanced approach is observability and feedback. Organizations should monitor how AI tools are being used within their teams—tracking metrics such as the percentage of code generated by AI, the frequency of post-generation edits, and the incidence of bugs or security flaws linked to AI-generated code. This data can inform ongoing refinement of policies, highlight areas where training is needed, and ensure that AI is delivering measurable value without compromising quality or security.
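As a rough illustration, the metrics mentioned above could be computed from per-change records along the following lines. The `MergedChange` fields are assumptions made for this sketch. Real data would need to come from whatever telemetry, commit annotations, or issue-tracker links a team actually has, and attributing lines or defects to AI is itself an approximation.

```python
from dataclasses import dataclass


@dataclass
class MergedChange:
    """Hypothetical per-change record; the field names are illustrative."""
    lines_total: int
    lines_ai_generated: int
    lines_ai_edited_after: int  # AI-generated lines later modified by a human
    defects_linked_to_ai: int   # bugs or security flaws traced to AI-generated lines


def usage_metrics(changes):
    """Aggregate the three metrics discussed above across merged changes."""
    total = sum(c.lines_total for c in changes)
    ai_lines = sum(c.lines_ai_generated for c in changes)
    edited = sum(c.lines_ai_edited_after for c in changes)
    defects = sum(c.defects_linked_to_ai for c in changes)
    return {
        # Share of all merged lines that were generated by AI.
        "ai_share": ai_lines / total if total else 0.0,
        # Fraction of AI-generated lines that humans edited afterwards.
        "post_edit_rate": edited / ai_lines if ai_lines else 0.0,
        # Linked defects per 1,000 AI-generated lines.
        "defects_per_1000_ai_lines": 1000 * defects / ai_lines if ai_lines else 0.0,
    }


if __name__ == "__main__":
    sample = [MergedChange(100, 40, 10, 1), MergedChange(100, 0, 0, 0)]
    print(usage_metrics(sample))
```

Numbers like these are best read as trends over time rather than as targets, since a rising AI share says nothing on its own about quality.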

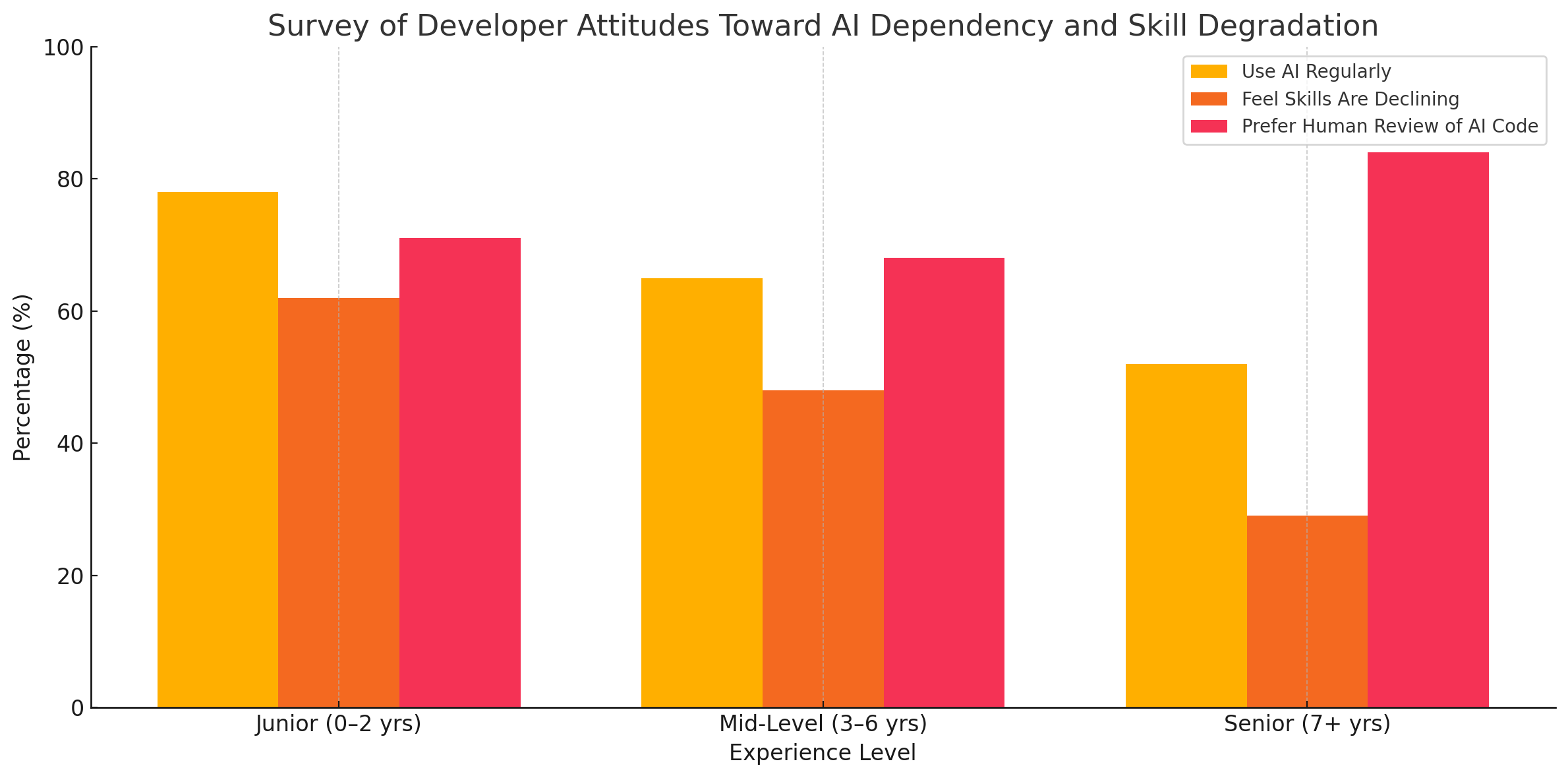

To better understand developer attitudes and practices, consider the following visual representation:

The chart highlights a crucial insight: while AI tool usage is high across all levels of experience, more seasoned developers exhibit a stronger preference for maintaining human oversight and express less concern about skill degradation. This suggests that balanced use is not only achievable but also positively correlated with experience and critical engagement.

In addition to internal practices, engagement with the broader community is essential. Developers and organizations should contribute to open discussions about responsible AI development, share lessons learned, and advocate for transparency from AI tool providers. The collective intelligence of the developer ecosystem can play a significant role in shaping norms, identifying risks, and proposing solutions that balance innovation with integrity.

Equally important is the role of leadership in setting the tone for AI adoption. Engineering managers and CTOs must articulate a vision that emphasizes quality, accountability, and continuous improvement. Rather than pushing teams to blindly increase output through AI, leaders should champion the strategic use of AI to augment human capabilities while preserving craftsmanship, security, and ethical standards.

Finally, developers must also embrace a future-oriented perspective. The pace of AI advancement suggests that tools will become even more capable, offering more sophisticated features such as automated architecture generation, full-stack scaffolding, and autonomous debugging. Preparing for this future requires a flexible mindset, a commitment to ethical reasoning, and a willingness to adapt roles and responsibilities within the development process.

In conclusion, the path forward lies in the thoughtful integration of AI into software development, not in uncritical reliance on it. A balanced approach combines the strengths of intelligent automation with the irreplaceable value of human judgment, creativity, and responsibility. By establishing clear guidelines, promoting continuous education, fostering collaborative cultures, and maintaining vigilant oversight, developers and organizations can harness the transformative power of AI while preserving the integrity of their work. The future of software development will not be written by machines alone, but by humans who know how to work wisely alongside them.

Conclusion

The rapid integration of artificial intelligence into the fabric of software development has ushered in a new era of opportunity and complexity. AI-powered tools have become invaluable companions to developers, offering assistance in code generation, debugging, documentation, and beyond. Yet, as this blog post has demonstrated, the growing dependence on such tools is not without significant risks. From the erosion of fundamental programming skills to the emergence of security vulnerabilities, ethical ambiguities, and legal uncertainties, the implications of unchecked AI reliance extend far beyond mere technical considerations.

Developers today find themselves at a pivotal juncture. While the allure of AI-enhanced productivity is undeniable, it must be weighed against the responsibility to uphold software quality, professional integrity, and long-term maintainability. The shift toward AI-supported development is not inherently detrimental—indeed, it holds the promise of transforming the way software is conceived and built—but it requires intentionality, vigilance, and balance.

Organizations must lead the way in crafting policies and practices that reinforce this balance. Structured guidelines for AI use, robust code review processes, and a commitment to continuous education are essential for maintaining development standards in an AI-augmented world. Equally important is the cultivation of a culture in which developers are encouraged to question, verify, and refine AI-generated outputs, rather than accept them uncritically.

Educational institutions, too, play a critical role. They must adapt curricula to prepare students not only to work with AI tools but also to think critically about their outputs. Emphasizing core computer science principles, ethical reasoning, and practical application will ensure that new generations of developers are equipped to thrive in an increasingly automated environment without sacrificing the rigor that defines engineering excellence.

At the individual level, developers must take ownership of their learning and remain committed to deepening their expertise. AI may streamline certain tasks, but it cannot replace the nuanced judgment, creativity, and problem-solving capabilities that define truly skilled professionals. By treating AI as an assistant rather than a substitute, developers can continue to grow while leveraging the best of what intelligent systems have to offer.

In sum, the future of software development will not be shaped by AI alone, but by the choices developers and organizations make in how they engage with it. Striking the right balance between automation and accountability, efficiency and comprehension, innovation and ethics will determine not only the quality of the code we produce but also the character of the industry itself. The risks of AI dependency are real, but with thoughtful action, they are not insurmountable. Instead, they present an opportunity to redefine excellence in software development for the era ahead.
