Dell Launches Blackwell-Powered AI Platform to Redefine Enterprise Acceleration

Dell Technologies has unveiled its next-generation AI acceleration platform, built on Nvidia’s recently launched Blackwell GPU architecture. The announcement marks a pivotal moment in the race to lead the AI infrastructure market, a race in which hardware innovation has become as critical as algorithmic advancement. As organizations accelerate adoption of generative AI (GenAI), deep learning, and large-scale data processing, demand for scalable, energy-efficient compute platforms has reached unprecedented levels. Dell’s Blackwell-powered offering is aimed squarely at that demand, with a platform designed for performance-intensive enterprise environments.

The backdrop to this announcement is the explosive growth of artificial intelligence applications across industries. From autonomous systems and large language models (LLMs) to personalized customer experiences and real-time fraud detection, AI is no longer confined to research labs; it has become an operational imperative. That shift brings compute demands that legacy systems often struggle to meet efficiently. This is where Nvidia’s Blackwell GPU architecture enters the frame, offering higher throughput, support for low-precision formats down to FP4 (alongside FP8) for AI workloads, better performance per watt, and faster multi-GPU communication via fifth-generation NVLink. Dell’s decision to build its AI platform on this architecture is a calculated effort to future-proof enterprise computing infrastructure.

At the heart of this development is Dell’s broader strategy to establish itself as the backbone of enterprise AI infrastructure: on-premises, at the edge, and in hybrid environments. The company’s updated AI-optimized PowerEdge servers, engineered specifically for Blackwell GPUs, aim to deliver high modularity, compute density, and operational efficiency. These servers are not standalone hardware; they are part of a tightly integrated ecosystem that includes Dell’s management software, orchestration layers, and support for Nvidia’s AI Enterprise suite. This end-to-end stack is designed to streamline the deployment, management, and scaling of AI workloads across a wide range of industries.

Dell’s collaboration with Nvidia on this platform is not incidental. It represents a strategic partnership rooted in a shared vision of accelerating enterprise AI transformation. With Nvidia setting the benchmark for AI computing hardware and Dell excelling in infrastructure orchestration and enterprise integration, the combined offering presents a compelling value proposition. In essence, this is not merely a product launch; it is a blueprint for how enterprises will deploy and operationalize AI at scale in the years to come.

This blog post unpacks the significance of Dell’s announcement in detail. The first section explores the technological foundation, the Nvidia Blackwell architecture, and how it differs from its predecessors and competitors. The second section focuses on Dell’s AI platform design and capabilities, from server models to deployment flexibility. The third section highlights real-world use cases across industries and maps them to Dell’s infrastructure. The fourth section analyzes the competitive landscape, comparing Dell’s offering with other hardware vendors and hyperscalers. Together, these sections provide a comprehensive picture of how Dell’s Blackwell-based platform is likely to shape the future of enterprise AI.

As organizations continue to navigate the complexities of digital transformation, data sovereignty, and AI governance, infrastructure decisions take on greater significance. Dell’s latest move suggests a clear intention: to lead in the AI-first enterprise era by offering platforms that not only support today's workloads but are architected for the exponential growth of tomorrow’s innovations.

The Blackwell Architecture – A Leap in AI Acceleration

At the core of Dell’s next-generation AI platform lies the Nvidia Blackwell GPU architecture, a technological advancement that redefines the frontiers of accelerated computing. Unveiled as the successor to the Hopper architecture, Blackwell represents a substantial leap forward in terms of raw performance, energy efficiency, and architectural versatility. It is explicitly designed to address the growing complexity and scale of artificial intelligence workloads, particularly those related to generative models, large language model (LLM) training, and real-time inference. With Dell integrating this cutting-edge hardware into its infrastructure, understanding the foundational innovations of the Blackwell architecture is critical to appreciating the platform’s overall capabilities.

Architectural Design and Innovation

The Blackwell GPU architecture is built for AI workloads that increasingly require petaflops of compute and terabytes per second of memory bandwidth. At the hardware level, Blackwell introduces a dual-die design: two GPU dies on a single package, joined by a 10 TB/s chip-to-chip link so that software sees them as one GPU. This design raises the transistor count to 208 billion while targeting better performance per watt than Hopper. The architecture also introduces a second-generation Transformer Engine, which builds on the FP8 support that debuted with Hopper and adds even lower-precision formats, FP6 and FP4, for AI and machine learning operations.
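For readers who have not worked with 8-bit floats, the following Python sketch rounds a number to the nearest value representable in FP8 E4M3 (4 exponent bits, 3 mantissa bits, exponent bias 7, largest finite value 448), one of the two FP8 encodings used by Nvidia’s Transformer Engine. It illustrates the number format only: round-to-nearest-even and saturation at ±448 are assumptions of this sketch, and it ignores the per-tensor scaling that real FP8 pipelines apply.

```python
import math

E4M3_MAX = 448.0        # largest finite E4M3 value: 1.75 * 2**8
E4M3_MIN_EXP = -6       # smallest normal exponent (bias 7); subnormals share it
E4M3_MANTISSA_BITS = 3


def quantize_e4m3(x: float) -> float:
    """Round x to the nearest FP8 E4M3 value (ties to even, saturating)."""
    if x == 0.0 or math.isnan(x):
        return x
    sign = -1.0 if x < 0 else 1.0
    a = abs(x)
    if a >= E4M3_MAX:
        return sign * E4M3_MAX                 # assumption: saturate, don't overflow
    _, e = math.frexp(a)                       # a = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_EXP)             # unbiased exponent of a
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)   # spacing between representable values
    q = round(a / step) * step                 # Python's round() is ties-to-even
    return sign * min(q, E4M3_MAX)


for v in [1.0, 0.1, 3.14159, 1000.0]:
    print(v, "->", quantize_e4m3(v))
```

Running it shows how coarse the grid is: 0.1 becomes 0.1015625 and 3.14159 becomes 3.25, which is why FP8 training relies on scaling factors to keep tensors in a well-represented range.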

The move toward lower precision is a direct response to the need for more efficient training and inference. Earlier generations relied mainly on FP16, BF16, and TensorFloat-32; Hopper added FP8, and Blackwell extends this to FP4. Each halving of the bits per value halves the memory and bandwidth each parameter consumes and raises Tensor Core throughput, which matters most for large transformer models, provided the software keeps accuracy in check through per-tensor or finer-grained scaling. Nvidia quotes up to 20 petaflops of FP4 AI performance per Blackwell GPU (with sparsity), with FP4 aimed chiefly at inference; that level of throughput substantially shortens training and serving times for billion-parameter models.
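The memory side of that trade-off is simple arithmetic. This sketch counts only the bytes needed to store a model’s weights (activations, optimizer state, KV caches, and the small overhead of scaling factors are ignored) for a hypothetical 70-billion-parameter model, with FP4 counted as half a byte per value.

```python
# Bytes needed to store one value in each format (FP4 packs two values per byte).
BYTES_PER_VALUE = {"FP32": 4.0, "BF16": 2.0, "FP8": 1.0, "FP4": 0.5}


def weight_footprint_gb(num_params: float, fmt: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_VALUE[fmt] / 1e9


params = 70e9  # hypothetical 70-billion-parameter model
for fmt in BYTES_PER_VALUE:
    print(f"{fmt}: {weight_footprint_gb(params, fmt):.0f} GB")
```

It prints 280 GB for FP32, 140 GB for BF16, 70 GB for FP8, and 35 GB for FP4. Each halving also halves the bytes that must stream from memory per parameter, which is where much of the throughput gain comes from on bandwidth-bound workloads.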

Advanced Interconnects: NVLink and NVSwitch

Beyond raw compute, one of the most noteworthy features of the Blackwell architecture is its communication fabric. Blackwell introduces fifth-generation NVLink, which provides up to 1.8 TB/s of total bidirectional bandwidth per GPU, double Hopper’s 900 GB/s. This reduces communication latency and boosts throughput in multi-GPU configurations. NVLink’s impact is particularly salient in data center deployments where tens or hundreds of GPUs must work in unison on complex model training pipelines or real-time inference clusters.
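To see why interconnect bandwidth matters for training, consider the idealized bandwidth cost of a ring all-reduce, the collective commonly used to average gradients across GPUs: each GPU sends 2(N-1)/N times the gradient payload. The sketch below assumes the 1.8 TB/s figure is total bidirectional bandwidth (about 900 GB/s each way), uses a hypothetical 70-billion-parameter model with BF16 gradients, and ignores latency, protocol overhead, and overlap with computation.

```python
def ring_allreduce_seconds(payload_bytes: float, num_gpus: int,
                           link_bytes_per_s: float) -> float:
    """Idealized ring all-reduce time: each GPU sends 2*(N-1)/N of the payload.

    Ignores per-message latency, protocol overhead, and compute overlap.
    """
    if num_gpus < 2:
        return 0.0
    bytes_sent_per_gpu = 2 * (num_gpus - 1) / num_gpus * payload_bytes
    return bytes_sent_per_gpu / link_bytes_per_s


PER_DIRECTION = 0.9e12       # assumed: 1.8 TB/s bidirectional ≈ 900 GB/s each way
gradients = 70e9 * 2         # hypothetical 70B parameters, 2 bytes each (BF16)
print(f"{ring_allreduce_seconds(gradients, 8, PER_DIRECTION):.3f} s per all-reduce")
```

Under these assumptions, an 8-GPU all-reduce of 140 GB of gradients costs about 0.27 s of pure transfer time per step; halving the link bandwidth would double it.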

Blackwell also works with Nvidia’s NVLink Switch, which enables direct, full-bandwidth GPU-to-GPU communication across a server or, in rack-scale designs, an entire rack. Together these technologies keep GPU resources from being siloed, so a group of GPUs can be orchestrated as a single computational entity. For Dell’s AI platform, which targets high-density deployments, this means higher operational efficiency, fewer communication bottlenecks, and less energy spent moving data per compute operation.

Energy Efficiency and Thermal Design

Whereas conventional acceleration architectures often hit diminishing returns as power requirements grow, Blackwell prioritizes performance per watt. Depending on the product and its thermal design, Blackwell GPUs are configured for power envelopes from roughly 700 W to 1,200 W. The higher-power configurations are designed for liquid cooling, which, together with advanced thermal engineering, keeps operating temperatures in range without compromising density or server footprint.

This focus on energy efficiency is pivotal at a time when data centers are under pressure to reduce their carbon footprints and adhere to strict regulatory standards. Dell’s decision to implement Blackwell across its next-gen PowerEdge servers aligns with enterprise sustainability goals, offering a solution that is not only powerful but also environmentally considerate.

AI-Specific Enhancements

Blackwell GPUs include AI-specific hardware aimed at the operations that dominate AI models, such as matrix multiplications and attention mechanisms. The architecture also retains Nvidia’s structured-sparsity support, which lets Tensor Cores skip computations on weights that have been pruned to zero; when models are pruned and then fine-tuned appropriately, the accuracy cost is typically small. This translates into higher throughput, particularly for inference, which is critical for production-scale AI deployments.
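Nvidia’s Tensor Cores have supported 2:4 structured sparsity since the Ampere generation: in every group of four consecutive weights, at most two are nonzero, and the hardware skips the zeros. The sketch below applies that pruning pattern to a flat list by magnitude. It is a toy illustration; in practice pruning is done with framework tooling on weight tensors, followed by fine-tuning to recover accuracy.

```python
def prune_2_of_4(weights: list[float]) -> list[float]:
    """Apply 2:4 structured sparsity: in each group of four consecutive
    weights, keep the two with the largest magnitude and zero the rest."""
    if len(weights) % 4:
        raise ValueError("length must be a multiple of 4")
    pruned: list[float] = []
    for i in range(0, len(weights), 4):
        group = weights[i:i + 4]
        # Indices of the two largest-magnitude entries in this group.
        keep = sorted(range(4), key=lambda j: abs(group[j]), reverse=True)[:2]
        pruned.extend(w if j in keep else 0.0 for j, w in enumerate(group))
    return pruned


w = [0.1, -0.5, 0.3, 0.05, 0.9, 0.0, -0.2, 0.4]
print(prune_2_of_4(w))
```

Each group of four now holds exactly two surviving weights, the pattern the sparse Tensor Core paths are built to exploit.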

Blackwell also supports mixed-precision training and inference within a single computational graph. Developers and data scientists can trade speed against accuracy layer by layer, for example running most matrix math in FP8 while keeping numerically sensitive layers at higher precision. Dell’s platform is designed to exploit this through its integration with Nvidia’s software stack, including CUDA, cuDNN, and TensorRT.

Blackwell vs Hopper: Comparative Analysis

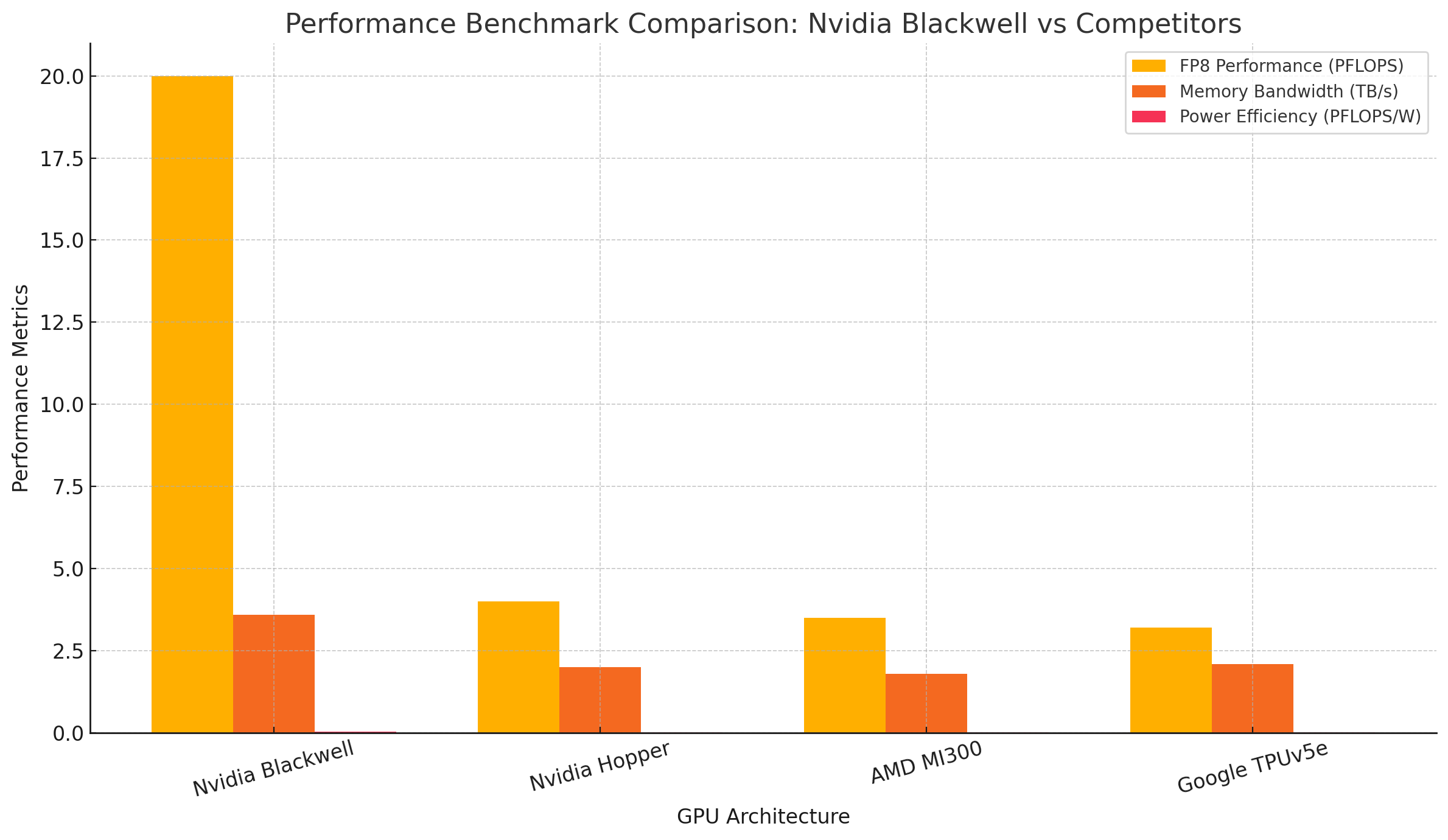

To grasp the size of the architectural advance, it helps to compare Blackwell with its predecessor, Hopper (H100). Nvidia’s headline figures put the H100 SXM at about 4 PFLOPS of FP8 (with sparsity) and Blackwell at up to 20 PFLOPS, but the Blackwell figure is for FP4. At FP8, Blackwell delivers roughly 10 PFLOPS, about 2.5x Hopper, so a 5x comparison mixes precisions. Memory bandwidth has also risen sharply: up to 8 TB/s of HBM3e per B200 GPU, versus 3.35 TB/s of HBM3 on the H100 SXM.
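Because headline figures mix precisions, it helps to compute the ratios explicitly. The numbers below are rounded per-GPU figures as publicly quoted by Nvidia (sparse Tensor Core throughput; HBM bandwidth for the H100 SXM and B200). They vary by SKU, power configuration, and system, so treat them as approximate inputs rather than benchmarks.

```python
# Rounded headline per-GPU figures (sparse throughput); approximate, SKU-dependent.
H100_SXM = {"fp8_pflops": 4.0, "hbm_tb_s": 3.35}
B200 = {"fp8_pflops": 10.0, "fp4_pflops": 20.0, "hbm_tb_s": 8.0}

fp8_vs_fp8 = B200["fp8_pflops"] / H100_SXM["fp8_pflops"]        # like for like
fp4_vs_fp8 = B200["fp4_pflops"] / H100_SXM["fp8_pflops"]        # mixed precisions
bandwidth_ratio = B200["hbm_tb_s"] / H100_SXM["hbm_tb_s"]

print(f"FP8 vs FP8:    {fp8_vs_fp8:.1f}x")
print(f"FP4 vs FP8:    {fp4_vs_fp8:.1f}x")
print(f"HBM bandwidth: {bandwidth_ratio:.2f}x")
```

The like-for-like FP8 gain is about 2.5x; a 5x figure appears only when Blackwell’s FP4 rate is set against Hopper’s FP8 rate, and memory bandwidth improves by roughly 2.4x.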

The latency improvements brought by NVLink 5.0 and the energy savings achieved through architectural refinements make Blackwell more suited for multi-modal AI workloads, including image generation, video synthesis, and large-scale recommender systems. For Dell, this means that the servers equipped with Blackwell GPUs are now capable of hosting a much broader array of use cases—from AI supercomputing clusters to federated edge nodes.

This chart illustrates the headline improvements Blackwell delivers in low-precision AI performance, memory bandwidth, and power efficiency over its predecessors and rivals.

Implications for Dell’s Platform

Basing Dell’s new AI infrastructure on the Blackwell architecture is not merely a performance-centric move; it is a strategic alignment with the future trajectory of AI development. Blackwell provides the scalability, modularity, and flexibility needed to keep pace with rapid growth in AI model size and complexity. It also opens the door to AI infrastructure-as-a-service offerings, in which enterprises consume on-premises or hybrid compute on a subscription or pay-per-use basis without compromising performance.

Dell’s AI platform exposes these hardware capabilities through tight integration with Nvidia’s software ecosystem, aiming for a consistent experience from development to deployment. Tools such as Nvidia Base Command, NeMo, and AI Workbench are supported, helping users manage, monitor, and scale workloads.

Dell’s AI Platform – Systems, Scale, and Deployment Models

With the introduction of Nvidia’s Blackwell architecture as a foundational component, Dell Technologies has crafted a highly optimized AI platform tailored for enterprises seeking scalable, efficient, and high-performance infrastructure. This section delves into the specific systems Dell has engineered to support Blackwell GPUs, the architectural philosophy behind its AI platform, and the diverse deployment models it enables. As AI workloads grow in scope and complexity, Dell’s emphasis on flexibility, manageability, and enterprise-readiness positions its new offerings as a cornerstone of next-generation digital infrastructure.

AI-Optimized PowerEdge Servers: Purpose-Built for Blackwell

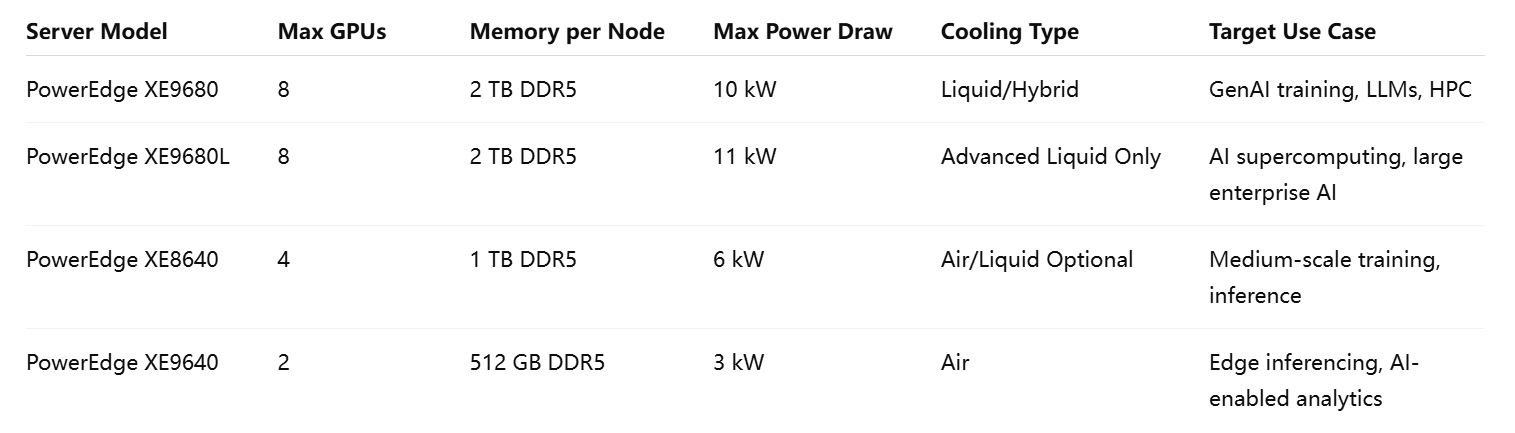

At the center of Dell’s AI strategy is its flagship server series, the PowerEdge XE9680 and the forthcoming XE9680L, designed to accommodate the thermal, electrical, and architectural demands of Nvidia Blackwell GPUs. These high-density servers support up to eight Blackwell GPUs per chassis, using PCIe Gen5 and NVLink 5.0 connectivity for low latency and high throughput. The compact, modular design of the PowerEdge line lets data centers scale performance close to linearly while maintaining operational efficiency.

Dell’s engineering approach is evident in the attention paid to airflow dynamics, hot-swappable components, and liquid cooling compatibility. Given that each Blackwell GPU can draw up to 1200 watts under full load, efficient thermal dissipation becomes a non-negotiable requirement. Dell’s PowerEdge servers integrate advanced cooling technologies—ranging from cold plate liquid cooling to high-performance heat sinks—that support sustained operation even under peak computational loads. These systems are engineered not only for compute density but also for sustainability, aligning with enterprise ESG targets and green data center initiatives.
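The thermal stakes are easy to quantify. Essentially all the electrical power a GPU draws ends up as heat, and 1 W of continuous load corresponds to about 3.412 BTU/h. This sketch computes the heat load of eight GPUs at the 1,200 W figure cited above, counting the GPUs alone.

```python
WATTS_TO_BTU_PER_HR = 3.412  # 1 W of continuous load ≈ 3.412 BTU/h of heat


def gpu_heat_load(num_gpus: int, watts_per_gpu: float) -> tuple[float, float]:
    """Return (kilowatts, BTU/h) dissipated by the GPUs alone."""
    watts = num_gpus * watts_per_gpu
    return watts / 1000, watts * WATTS_TO_BTU_PER_HR


kw, btu_per_hr = gpu_heat_load(8, 1200)
print(f"{kw:.1f} kW ≈ {btu_per_hr:,.0f} BTU/h (GPUs only)")
```

Eight GPUs at 1,200 W come to 9.6 kW, roughly 32,755 BTU/h, before CPUs, memory, networking, and fans are added, which helps explain the emphasis on liquid cooling at these densities.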

Beyond the XE9680 family, Dell’s broader PowerEdge portfolio is expected to include models supporting 2-, 4-, and 6-GPU configurations, enabling tiered deployments matched to workload requirements and budget constraints. The modular architecture is intended to cover the full range, from training multi-trillion-parameter models to deploying lightweight inference at the edge.

Storage and Networking Integration: High-Speed, High-Capacity Fabric

Dell’s AI platform is not limited to compute. It tightly integrates with high-throughput storage solutions such as Dell PowerScale for AI-ready NAS and PowerFlex for software-defined block storage. These systems provide the I/O performance and fault tolerance necessary for feeding massive datasets to AI models in real time. PowerScale’s scalability to tens of petabytes makes it particularly well-suited for data-intensive workloads in sectors like genomics, autonomous driving, and video analysis.

On the networking front, Dell incorporates high-bandwidth Ethernet and InfiniBand interconnects, offering up to 400 Gb/s per port with low-latency switching. This keeps data moving between CPUs, GPUs, and storage layers and reduces the bottlenecks typically encountered in distributed training or inference pipelines. SmartNICs and support for RDMA over Converged Ethernet (RoCE) further optimize data transfers, which is essential for workloads that involve real-time decision-making or streaming data.
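Network links are rated in bits per second while datasets are sized in bytes, so a 400 Gb/s link moves at most 50 GB/s. This sketch estimates transfer time for a hypothetical 10 TB dataset; the `efficiency` factor is an assumption standing in for protocol overhead and congestion.

```python
def transfer_seconds(dataset_bytes: float, link_gbps: float,
                     efficiency: float = 1.0) -> float:
    """Seconds to move `dataset_bytes` over a link rated in gigabits/s.

    `efficiency` is an assumed fraction of line rate actually achieved.
    """
    bits = dataset_bytes * 8  # links are rated in bits, datasets in bytes
    return bits / (link_gbps * 1e9 * efficiency)


dataset = 10e12  # hypothetical 10 TB dataset
print(transfer_seconds(dataset, 400))       # full line rate
print(transfer_seconds(dataset, 400, 0.8))  # assumed 80% efficiency
```

At full line rate the 10 TB dataset takes 200 seconds to move; at an assumed 80% efficiency, 250 seconds.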

Software Stack and Orchestration Capabilities

What sets Dell’s platform apart is not merely its hardware prowess but also its comprehensive software stack. Central to this is Dell OpenManage Enterprise, which provides unified lifecycle management for compute, storage, and networking components. Through a single dashboard, IT administrators can deploy firmware updates, monitor thermal conditions, configure power profiles, and manage GPU utilization.

In collaboration with Nvidia, Dell supports Nvidia AI Enterprise, a software suite that includes pre-trained models, optimized builds of frameworks such as TensorFlow and PyTorch, and tools such as NeMo for building generative AI models, Triton Inference Server for model serving, and Base Command Manager for cluster management. This integration simplifies model development, deployment, and monitoring. Developers can rapidly prototype and test AI models, while DevOps teams can operationalize them using Kubernetes-based workflows supported on Dell’s infrastructure.
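As a minimal illustration of such a Kubernetes-based workflow, this sketch builds a Pod manifest that requests eight GPUs through the `nvidia.com/gpu` extended resource, which Nvidia’s Kubernetes device plugin (also deployed by the GPU Operator) advertises on GPU nodes. The pod name, container image, and `train.py` entrypoint are hypothetical placeholders. Kubernetes accepts JSON as well as YAML, so the output can be applied directly.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int) -> dict:
    """Build a minimal Kubernetes Pod manifest that requests Nvidia GPUs.

    GPUs are requested via `limits`; for extended resources such as
    `nvidia.com/gpu`, the request defaults to the limit.
    """
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "trainer",
                "image": image,
                "command": ["python", "train.py"],  # hypothetical entrypoint
                "resources": {"limits": {"nvidia.com/gpu": gpus}},
            }],
        },
    }


# Hypothetical pod name and image; replace with your own.
manifest = gpu_pod_manifest("llm-finetune", "registry.example.com/train:latest", 8)
print(json.dumps(manifest, indent=2))
```

For example, `python make_pod.py | kubectl apply -f -` would submit it, where `make_pod.py` is a hypothetical file containing the code above.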

Additionally, Dell’s platforms work with popular MLOps tools such as MLflow, Kubeflow, and Weights & Biases. These integrations allow AI lifecycle management, from dataset ingestion to model deployment, to be automated, auditable, and scalable. The platform also supports multi-tenant environments with role-based access control (RBAC), making it well suited to shared research labs and enterprise-wide AI initiatives.

Flexible Deployment Models: Edge, Core, and Cloud

Recognizing that AI workloads are increasingly distributed, Dell has built its Blackwell-powered platform to support multiple deployment models:

- Core Data Center: High-density PowerEdge XE9680 systems are optimized for centralized data centers and AI supercomputing clusters. These deployments are ideal for model training, federated learning, and large-scale simulation tasks.

- Edge and Remote Sites: Dell provides ruggedized, short-depth servers designed for edge AI inference in manufacturing floors, smart cities, and healthcare facilities. These nodes leverage containerized microservices and are optimized for local processing to reduce latency and reliance on cloud connectivity.

- Hybrid Cloud: Through its APEX-as-a-Service model, Dell enables enterprises to deploy Blackwell-based infrastructure on-premises with cloud-like agility. This is particularly valuable for organizations with data sovereignty concerns or those operating in regulated environments such as finance, defense, and pharmaceuticals.

Each deployment model is supported by Dell CloudIQ, an AI-driven operations tool that provides predictive analytics, capacity planning, and automated alerting across hybrid environments. Whether workloads run on bare metal, virtual machines, or Kubernetes clusters, this telemetry helps schedulers and administrators place them according to GPU availability, power profiles, and cooling capacity.
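That kind of placement decision can be sketched as a simple filter-then-score rule. Everything here is hypothetical: the `Node` fields, the assumption that GPU power draw can be compared directly against both power and cooling headroom, and the scoring rule are illustrative and do not describe how CloudIQ or any specific scheduler works.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_gpus: int
    power_headroom_w: float    # hypothetical: spare power budget on the node
    cooling_headroom_w: float  # hypothetical: extra heat the cooling can absorb


def pick_node(nodes: list[Node], gpus_needed: int,
              watts_per_gpu: float) -> Optional[Node]:
    """Keep nodes that can host the job, then pick the one with the most
    margin left on its tighter constraint (power or cooling)."""
    load_w = gpus_needed * watts_per_gpu  # assume GPU power draw ≈ heat to remove
    feasible = [
        n for n in nodes
        if n.free_gpus >= gpus_needed
        and n.power_headroom_w >= load_w
        and n.cooling_headroom_w >= load_w
    ]
    return max(
        feasible,
        key=lambda n: min(n.power_headroom_w, n.cooling_headroom_w) - load_w,
        default=None,
    )


nodes = [
    Node("rack1-a", free_gpus=8, power_headroom_w=12_000, cooling_headroom_w=9_000),
    Node("rack1-b", free_gpus=4, power_headroom_w=6_000, cooling_headroom_w=6_000),
    Node("rack2-a", free_gpus=8, power_headroom_w=10_000, cooling_headroom_w=11_000),
]
choice = pick_node(nodes, gpus_needed=8, watts_per_gpu=1_000)
print(choice.name if choice else "no node fits")
```

With eight GPUs at 1,000 W each, the sketch picks `rack2-a`, the node with the most margin on its tighter constraint; at 1,300 W per GPU no node qualifies and it returns `None`.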

Security, Compliance, and Sustainability Considerations

Dell has incorporated a multi-layered approach to security. This includes hardware-level protections such as TPM 2.0, Secure Boot, and Silicon Root of Trust, as well as software-based controls such as full-disk encryption and role-based access, which help customers align with NIST and ISO frameworks and meet HIPAA requirements. These features are particularly important as AI models increasingly process sensitive data, from personal medical records to proprietary financial algorithms.

In parallel, Dell emphasizes sustainable computing, offering systems that meet ENERGY STAR, EPEAT, and RoHS guidelines. Dynamic fan control, AI-based power optimization, and intelligent workload scheduling are intended to lower energy consumption and total cost of ownership (TCO).

Through a synergistic blend of powerful hardware, advanced software, and operational flexibility, Dell’s AI platform represents a comprehensive solution for modern enterprises. It enables organizations to transition from experimental AI to full-scale production in a secure, manageable, and scalable manner. The next section will explore how these capabilities are being translated into real-world impact across industries and highlight several promising use cases.

Real-World Applications and Enterprise Use Cases

The true measure of any AI infrastructure platform lies not merely in its technical specifications but in the value it delivers across practical, mission-critical deployments. Dell’s Blackwell-powered AI acceleration platform is built with this ethos in mind—engineered to facilitate high-impact applications across a range of verticals, from healthcare and financial services to manufacturing, retail, and government operations. In this section, we examine how Dell’s AI platform is being leveraged to tackle real-world challenges, enable transformative insights, and support the AI lifecycle from experimentation to production.

Healthcare and Life Sciences: From Genomics to Real-Time Diagnostics

The healthcare industry is undergoing a digital transformation powered by AI, with Dell’s platform playing a pivotal role in advancing precision medicine. One of the most impactful use cases is genomic sequencing and analysis. Blackwell’s high-throughput FP8 computation enables the rapid processing of vast genomic datasets, accelerating time-to-insight in oncology, rare disease diagnostics, and pharmacogenomics. Research institutions and biotech companies are already adopting Dell’s AI-optimized servers to train transformer-based models that predict protein folding, model gene interactions, and simulate molecular dynamics.

In clinical environments, Dell’s edge-compatible servers are being deployed to support real-time diagnostic tools such as AI-assisted radiology. By integrating with Nvidia’s Clara imaging platform and the AI Enterprise suite, Dell’s infrastructure allows hospitals to analyze MRI and CT scans in near real time, supporting diagnostic accuracy while easing the workload on physicians. These deployments often follow a hybrid model, with sensitive data processed on-premises to comply with HIPAA and less critical workloads shifted to the cloud for scalability.

Moreover, Dell’s platform is instrumental in accelerating drug discovery pipelines, reducing the cost and duration of traditional R&D cycles. AI models trained on Dell hardware are being used to screen compound libraries, predict molecular interactions, and optimize clinical trial designs. These applications exemplify how compute-intensive tasks in life sciences can be transformed by AI infrastructure that delivers both raw performance and regulatory compliance.

Financial Services: Fraud Detection and Risk Modeling at Scale

In the world of finance, milliseconds matter. Dell’s AI platform, powered by the Nvidia Blackwell architecture, provides the low-latency, high-throughput environment required for real-time fraud detection, credit scoring, and algorithmic trading. Financial institutions are deploying Blackwell-enabled PowerEdge servers to train and deploy AI models that can analyze millions of transactions per second, flagging anomalous patterns indicative of fraudulent behavior.
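Production fraud models combine many features and learned classifiers, but the core idea of flagging transactions that deviate sharply from an established pattern can be shown with a single-feature baseline. This hypothetical sketch keeps a running mean and variance with Welford’s online algorithm and flags values more than a chosen number of standard deviations away; the threshold, warm-up length, and synthetic data are assumptions.

```python
import math


class StreamingZScore:
    """Flag values far from the running mean, using Welford's online algorithm."""

    def __init__(self, threshold: float = 4.0, warmup: int = 30):
        self.threshold = threshold
        self.warmup = warmup  # observations to see before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0         # running sum of squared deviations from the mean

    def update(self, x: float) -> bool:
        """Score x against the history so far, then fold it into the stats."""
        flagged = False
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))  # sample standard deviation
            flagged = std > 0 and abs(x - self.mean) / std > self.threshold
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return flagged


detector = StreamingZScore(threshold=4.0, warmup=30)
amounts = [50.0 + (i % 7) for i in range(100)] + [5000.0]  # synthetic amounts
flags = [detector.update(a) for a in amounts]
print(flags.index(True))  # prints 100, the position of the outlier
```

Only the final 5,000-unit transaction is flagged. The sketch folds every value, including flagged ones, into its statistics; a real system would likely exclude confirmed fraud from the baseline.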

Dell’s infrastructure supports deep learning models used in risk assessment, portfolio optimization, and regulatory reporting. Mixed-precision training allows faster iteration cycles while retaining the precision needed for compliance and auditability. By combining Nvidia RAPIDS for GPU-accelerated data processing with Dell OpenManage for infrastructure and energy monitoring, and with MLOps tooling for model tracking, financial institutions can gain end-to-end visibility into model performance, energy usage, and compliance status.

In addition, the secure boot features and multi-tenant management capabilities make Dell’s infrastructure particularly attractive for fintech startups operating in multi-cloud environments. These startups benefit from the ability to containerize workloads, scale compute dynamically, and maintain robust security postures—all critical in a sector where data integrity and regulatory adherence are non-negotiable.

Manufacturing and Industry 4.0: Predictive Maintenance and Visual Inspection

The convergence of AI and IoT has given rise to smart factories, where Dell’s AI platform is being used to power a range of industrial applications. One of the most valuable implementations is in predictive maintenance. By deploying sensors and edge-enabled PowerEdge servers, manufacturers can gather real-time data on machine performance, analyze it using time-series models, and preempt equipment failures. This proactive approach reduces downtime, extends equipment lifespan, and improves overall operational efficiency.
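A very simple form of that time-series analysis is trend extrapolation: fit a straight line to recent sensor readings and estimate when it will cross an alarm threshold. The sketch below uses ordinary least squares; the bearing-temperature readings and the 80 °C threshold are hypothetical, and real predictive-maintenance models are usually far richer.

```python
from typing import Optional


def hours_until_threshold(readings: list[float], threshold: float,
                          interval_h: float = 1.0) -> Optional[float]:
    """Fit y = a + b*t by least squares to evenly spaced readings and return
    the hours from the latest reading until the fitted line reaches
    `threshold`. Returns None if the trend is flat or falling."""
    n = len(readings)
    if n < 2:
        return None
    ts = [i * interval_h for i in range(n)]
    t_mean = sum(ts) / n
    y_mean = sum(readings) / n
    sxx = sum((t - t_mean) ** 2 for t in ts)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, readings))
    slope = sxy / sxx
    if slope <= 0:
        return None
    fitted_now = y_mean + slope * (ts[-1] - t_mean)  # trend value at latest reading
    return max(0.0, (threshold - fitted_now) / slope)


# Hypothetical hourly bearing temperatures (°C) and alarm threshold.
temps = [70.0, 71.0, 72.0, 73.0]
print(hours_until_threshold(temps, threshold=80.0))
```

With temperatures rising one degree per hour, the sketch estimates seven hours until the alarm threshold, time a maintenance team could use to schedule an intervention.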

Visual inspection powered by AI is another transformative use case. Using convolutional neural networks (CNNs) and high-resolution camera feeds, Dell-powered platforms can identify defects on assembly lines in real time, often exceeding the accuracy and consistency of manual quality control. These systems are optimized for on-premises inference, where latency is critical and cloud round-trips are impractical.

Additionally, Dell’s AI infrastructure supports digital twin simulations—virtual replicas of physical manufacturing environments that allow operators to simulate process improvements, optimize logistics, and reduce waste. With Blackwell’s increased compute density and efficient interconnects, these simulations can be run in near real-time, providing valuable insights to process engineers and supply chain managers.

Retail and Customer Experience: Hyperpersonalization and Demand Forecasting

Retailers are increasingly turning to AI to optimize the customer journey, and Dell’s platform serves as a critical enabler of this transformation. With support for Blackwell GPUs, retailers can now deploy recommendation systems that operate at massive scale, processing real-time behavioral data to deliver hyperpersonalized product suggestions. These models draw on deep collaborative filtering, transformer-based architectures, and reinforcement learning—all of which demand the high-performance compute environment Dell provides.
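Deep recommendation models at retail scale need GPUs, but the collaborative-filtering idea underneath them fits in a few lines. This sketch is the classical item-to-item variant, not a deep model: items are represented by the users who interacted with them, compared by cosine similarity, and unseen items are ranked by their similarity to what a user already has. The interaction data is hypothetical.

```python
import math

# Hypothetical user -> {item: interaction strength} data.
interactions = {
    "u1": {"tent": 1, "stove": 1, "lantern": 1},
    "u2": {"tent": 1, "stove": 1},
    "u3": {"stove": 1, "lantern": 1, "kayak": 1},
    "u4": {"kayak": 1, "paddle": 1},
}


def item_vectors(data):
    """Invert user->item data into item->{user: score} vectors."""
    vecs = {}
    for user, items in data.items():
        for item, score in items.items():
            vecs.setdefault(item, {})[user] = score
    return vecs


def cosine(a, b):
    """Cosine similarity of two sparse vectors stored as dicts."""
    dot = sum(a[u] * b[u] for u in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def recommend(user, data, k=2):
    """Rank unseen items by summed similarity to items the user already has."""
    vecs = item_vectors(data)
    seen = data[user]
    scores = {cand: sum(cosine(vecs[cand], vecs[s]) for s in seen)
              for cand in vecs if cand not in seen}
    return sorted(scores, key=scores.get, reverse=True)[:k]


print(recommend("u2", interactions))
```

For user `u2`, who has a tent and a stove, the sketch ranks `lantern` first (it co-occurs with both) and `kayak` second.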

In the realm of demand forecasting, Dell’s systems are used to train models that analyze sales history, seasonal trends, and external variables such as weather and socio-economic indicators. These models help optimize inventory levels, reduce stockouts, and align marketing efforts with predicted consumer behavior.

Dell also supports AI-powered chatbots and voice assistants for e-commerce platforms, integrating natural language processing (NLP) models that run on Blackwell GPUs. These solutions enhance customer engagement, reduce support costs, and gather valuable data for continuous improvement.

Public Sector and Research: Accelerating National AI Agendas

Government agencies and academic institutions are using Dell’s AI platform to support climate modeling, urban planning, and national security initiatives. High-resolution climate simulations, for example, require large-scale accelerated compute of the kind Blackwell-enabled PowerEdge clusters provide. These simulations help predict extreme weather events, assess environmental policy outcomes, and guide infrastructure investments.

In the defense sector, Dell’s secure hardware environment is used for image and signal intelligence, where real-time inference is critical. These deployments often run in air-gapped environments with strict physical and digital access controls. Dell’s adherence to international compliance standards helps position it as a partner for government contracts and strategic research collaborations.

Additionally, Dell’s AI platform powers academic research in fields such as the natural sciences, AI ethics, and linguistics, providing institutions with the computational tools needed to train large models and perform large-scale data analysis. Through partnerships with Nvidia and integration of open-source toolchains, universities gain access to industry-grade infrastructure that supports both educational and research missions.

From healthcare breakthroughs to industrial efficiency and retail personalization, Dell’s Blackwell-based AI platform demonstrates clear versatility and enterprise relevance. It enables organizations to deploy AI where it matters most—securely, efficiently, and at scale.

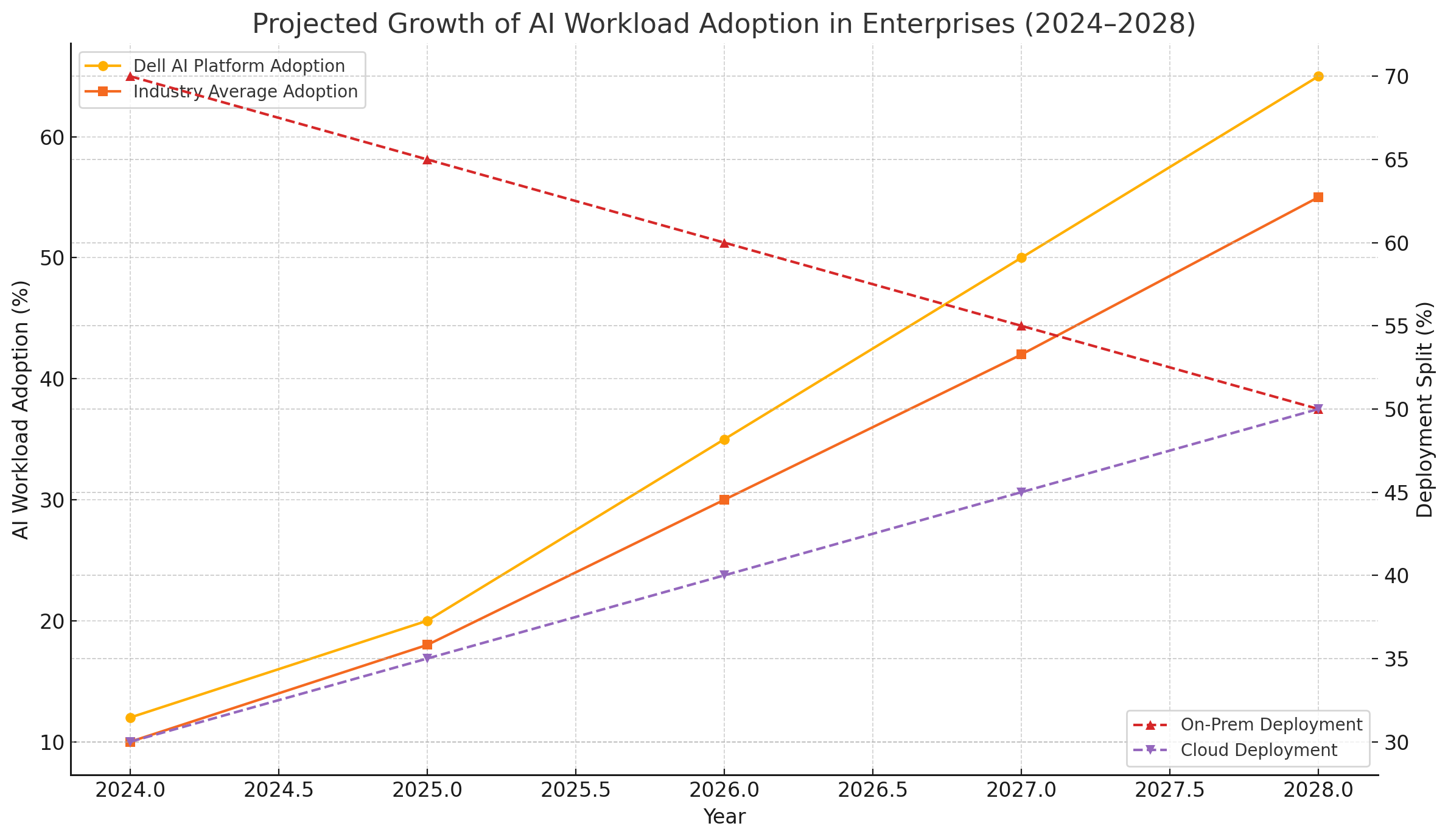

This chart visualizes the rising adoption of AI workloads across enterprises, highlighting Dell’s projected market penetration relative to industry averages, along with the shifting balance between on-prem and cloud-based AI deployments.

Competitive Landscape and Strategic Implications

The unveiling of Dell’s Blackwell-based AI acceleration platform represents not just a technical advancement, but a strategic maneuver within an increasingly crowded and high-stakes AI infrastructure market. As enterprises double down on artificial intelligence to drive innovation, productivity, and differentiation, the competitive landscape for AI hardware providers has intensified. In this section, we examine Dell’s position relative to key competitors—including Hewlett Packard Enterprise (HPE), Lenovo, Supermicro, and major cloud hyperscalers—while assessing the broader implications of Dell’s approach to enterprise AI acceleration.

Dell vs. Traditional OEM Competitors: HPE, Lenovo, and Supermicro

Among Dell’s closest rivals in the enterprise server space are HPE, Lenovo, and Supermicro—each of which has introduced GPU-accelerated infrastructure to target AI workloads. HPE’s Cray EX and Apollo 6500 systems, for example, are optimized for high-performance computing and deep learning applications. Lenovo has expanded its ThinkSystem family with Nvidia GPU-enabled models, and Supermicro has carved out a niche by offering highly customizable AI servers with aggressive pricing.

What distinguishes Dell in this field is its holistic platform strategy. Rather than selling server hardware in isolation, Dell integrates compute, storage, networking, and management software into a cohesive ecosystem. The PowerEdge XE9680 series, combined with Dell OpenManage, PowerScale storage, and Nvidia AI Enterprise, provides an integrated stack that reduces deployment complexity and shortens time-to-value. Dell’s global support network and lifecycle management tools also offer robust enterprise service levels, which appeal to organizations running large-scale deployments in regulated and mission-critical environments.

In addition, Dell’s deep partnership with Nvidia gives it early access to next-generation GPU innovations and performance optimizations. This enables Dell to be among the first to bring Blackwell-based systems to market, giving it a time-to-market advantage in customer adoption.

The Cloud Challenge: AWS, Google Cloud, and Microsoft Azure

While traditional OEMs compete on-premises, cloud hyperscalers pose a different kind of threat. Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure offer instant access to AI-optimized infrastructure via the cloud, appealing to startups, research institutions, and enterprises seeking elasticity and operational simplicity. AWS’s Trainium and Inferentia chips, GCP’s TPUs, and Azure’s integrated Nvidia A100/H100 VMs are all designed to handle AI workloads with scalability and speed.

However, cloud-centric AI deployments have limitations. Data sovereignty, cost unpredictability, latency, and regulatory constraints often call for on-premises or hybrid approaches, which is exactly where Dell’s platform is positioned. With Blackwell-based PowerEdge servers, Dell offers cloud-class performance with local control, a model particularly appealing in finance, healthcare, and defense. Dell’s APEX consumption model also mirrors the flexibility of the cloud, letting customers scale resources while maintaining data locality and compliance.

Crucially, total cost of ownership (TCO) over multi-year periods can favor Dell’s platform, especially for workloads that keep GPUs busy most of the time: cloud costs scale with the hours used, while owned hardware is paid for largely up front. This economic argument is reinforced by Dell’s commitment to energy efficiency, system reuse, and backward compatibility with existing data center infrastructure.
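The utilization argument can be made concrete with a break-even calculation. The sketch assumes owning costs an up-front purchase plus a flat monthly running cost, while renting costs an hourly rate for the hours actually used. All prices are hypothetical placeholders, not Dell or cloud list prices, and real TCO models add financing, staffing, depreciation, and negotiated discounts.

```python
from typing import Optional

HOURS_PER_MONTH = 730.0  # average hours in a month (8,760 / 12)


def breakeven_months(capex: float, onprem_opex_per_month: float,
                     cloud_rate_per_hour: float,
                     utilization: float) -> Optional[float]:
    """Months until owning becomes cheaper than renting equivalent capacity.

    Cloud cost scales with utilization (pay only for hours used); on-prem
    cost is the up-front purchase plus a flat monthly running cost.
    Returns None when renting is cheaper every month at this utilization.
    """
    cloud_per_month = cloud_rate_per_hour * HOURS_PER_MONTH * utilization
    monthly_saving = cloud_per_month - onprem_opex_per_month
    if monthly_saving <= 0:
        return None
    return capex / monthly_saving


# Hypothetical placeholder prices for an 8-GPU system vs. an equivalent instance.
for util in (0.8, 0.2, 0.1):
    months = breakeven_months(300_000, 5_000, 60.0, util)
    print(util, "->", "never" if months is None else f"{months:.1f} months")
```

With these placeholder numbers, ownership pays back in about 10 months at 80% utilization, in roughly 80 months at 20%, and never at 10%, which is why sustained GPU utilization is the deciding variable.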

Strategic Implications: Market Positioning and Differentiation

Dell’s adoption of Nvidia’s Blackwell architecture signals a deliberate strategy to reassert its leadership in the next wave of AI-driven enterprise infrastructure. By aligning its roadmap with Nvidia’s cutting-edge GPU developments, Dell positions itself as a go-to vendor for organizations seeking high-performance, scalable, and future-ready AI systems.

This strategy has several implications:

- Acceleration of Hybrid AI Deployments: As AI workloads become more diverse and complex, hybrid infrastructure models are gaining traction. Dell’s platform supports seamless interoperability between on-prem and cloud environments, enabling organizations to balance performance, cost, and compliance. This hybrid capability is especially valuable for multinational corporations managing data across jurisdictions.

- Enterprise AI Democratization: Dell’s modular design and consumption-based pricing via APEX make advanced AI infrastructure accessible to a broader range of organizations, including mid-sized enterprises and research institutions. This democratization accelerates innovation across sectors, promoting wider AI adoption.

- Industry Vertical Customization: Dell is increasingly tailoring its solutions for specific industry needs—offering pre-configured stacks for life sciences, manufacturing, and financial services. This vertical strategy allows Dell to deliver optimized performance for domain-specific AI models, increasing customer stickiness and reducing deployment friction.

- Strengthening Ecosystem Partnerships: The integration with Nvidia’s full software suite—ranging from CUDA and Triton to NeMo and Clara—enhances the developer experience and simplifies MLOps. By cultivating a robust ecosystem around its platform, Dell ensures ongoing relevance in a fast-evolving AI landscape.

- Sustainability as a Competitive Lever: With enterprises increasingly prioritizing ESG metrics, Dell’s focus on energy-efficient cooling systems, responsible sourcing, and carbon footprint transparency provides a differentiator. The company’s AI infrastructure solutions are designed with sustainability in mind, helping clients meet their own green computing goals.

Risks and Considerations

Despite its strengths, Dell’s strategy is not without challenges. The rapid pace of innovation in AI hardware means that product lifecycles are becoming shorter, and staying ahead requires sustained investment in R&D and supply chain agility. Additionally, while the Blackwell architecture is expected to drive performance gains, cost and power consumption may be prohibitive for smaller customers or edge use cases without advanced thermal infrastructure.

Another concern is the intensifying competition in custom AI chips. Companies like Apple, Meta, Amazon, and Google are investing in domain-specific accelerators designed to run targeted workloads more efficiently than general-purpose GPUs. Because Dell remains reliant on Nvidia, the proliferation of proprietary silicon may eventually reshape market dynamics in ways that favor vertically integrated cloud providers.

Furthermore, the AI regulatory landscape is evolving, and requirements around transparency, fairness, and model explainability may necessitate tighter integration between infrastructure providers and software compliance tools. Dell will need to expand its offerings to include governance capabilities and partnerships with AI policy platforms to stay aligned with future mandates.

Dell’s Long-Term Position in the AI Ecosystem

Despite these risks, Dell is well-positioned to thrive in the emerging AI-native enterprise landscape. Its focus on platform integration, strategic partnerships, vertical alignment, and operational flexibility provides a durable competitive advantage. By embedding Nvidia’s most advanced GPU technology within a full-stack enterprise solution, Dell is transforming from a traditional hardware supplier into a foundational enabler of enterprise AI strategy.

As organizations shift from AI experimentation to production-scale deployment, infrastructure decisions will become increasingly central to business outcomes. In this context, Dell’s Blackwell-based platform offers a powerful combination of performance, reliability, and enterprise alignment, setting a new standard for how AI infrastructure is conceptualized, deployed, and scaled.

Conclusion

Dell’s unveiling of its Nvidia Blackwell-based AI acceleration platform marks a definitive moment in the evolution of enterprise computing infrastructure. As AI workloads continue to proliferate—both in scale and in complexity—organizations are confronted with the pressing need for platforms that not only deliver peak computational performance but also support a broad spectrum of operational requirements, from data sovereignty and regulatory compliance to sustainability and long-term scalability. Dell has responded to this moment with a thoughtfully engineered, fully integrated AI platform that bridges the gap between raw GPU power and enterprise-grade infrastructure reliability.

The Blackwell architecture itself represents a substantial technological leap. With its dual-die design, support for low-precision formats such as FP8 and the newly introduced FP4, and high memory bandwidth, it is designed to let enterprises train and deploy next-generation AI models faster and more efficiently than previous GPU generations allowed. Dell’s integration of this architecture into its PowerEdge servers, storage solutions, and orchestration software illustrates a clear commitment to enabling AI-first transformation at every level of the enterprise stack.

Moreover, Dell’s competitive differentiation lies in its platform-centric strategy—offering not just hardware, but a complete ecosystem of compute, networking, storage, and management tools. Through robust support for hybrid and edge deployments, secure infrastructure design, and tight integration with Nvidia’s AI software suite, Dell’s solution provides the flexibility and control that modern enterprises demand.

Target applications across healthcare, finance, manufacturing, retail, and the public sector illustrate the versatility and potential impact of Dell’s AI platform. From reducing time-to-diagnosis in genomics to detecting fraud in real time and optimizing industrial operations, Dell aims to help organizations harness AI not as a peripheral enhancement but as a central operational enabler.

Strategically, Dell has positioned itself as a formidable player in the AI infrastructure race—not only competing with traditional OEMs but also offering viable alternatives to cloud hyperscalers. Its APEX as-a-service model, sustainable engineering practices, and vertical solution stacks further extend its reach into diverse markets with tailored value propositions.

As we look ahead, the success of AI initiatives will increasingly depend on the quality, agility, and scalability of the underlying infrastructure. With the Blackwell-powered platform, Dell provides a blueprint for how enterprises can deploy AI responsibly, securely, and at scale. In doing so, it strengthens its role not merely as a server vendor, but as a strategic partner in the AI-driven digital transformation of business.

References

- Dell Technologies – PowerEdge XE9680: https://www.dell.com/en-us/dt/servers/poweredge-xe9680
- Nvidia – Blackwell Architecture Overview: https://www.nvidia.com/en-us/data-center/blackwell/
- Dell APEX – Infrastructure as a Service: https://www.dell.com/en-us/dt/apex/index.htm
- Nvidia AI Enterprise Software Suite: https://www.nvidia.com/en-us/data-center/products/ai-enterprise/
- Dell OpenManage Enterprise: https://www.dell.com/support/solutions/en-us/omenterprise
- Nvidia NeMo for LLM Development: https://developer.nvidia.com/nemo
- Dell PowerScale Storage for AI: https://www.dell.com/en-us/dt/storage/powerscale
- Nvidia NVLink Technology: https://www.nvidia.com/en-us/data-center/nvlink/
- Dell CloudIQ for AI Operations: https://www.dell.com/en-us/dt/services/cloudiq.htm
- Nvidia Triton Inference Server: https://developer.nvidia.com/nvidia-triton-inference-server