Comic Book Artists Push Back: Why AI Art Is Threatening Creative Livelihoods

The intersection of artificial intelligence and creative expression has become one of the most hotly contested arenas in the digital age. In recent years, AI-powered image generation tools such as Midjourney, DALL·E, and Stable Diffusion have made it possible to produce highly detailed, stylistically sophisticated visual art within seconds. What began as a novel application of machine learning has rapidly evolved into a transformative force across multiple creative domains—including the world of comic books. For artists who have long defined the industry through painstaking hand-drawn work, storytelling nuance, and personal aesthetic signature, this technological advancement is perceived by many not as an enhancement, but as an existential threat.

One comic book artist recently voiced what has become a widespread sentiment in the visual arts community, declaring artificial intelligence a “threat to livelihood.” Far from being an isolated opinion, this viewpoint captures a growing unease among illustrators, storyboard creators, and independent comic book artists. For decades, comic creation has involved a collaboration of writers, pencillers, inkers, colorists, and editors. However, AI models capable of generating entire panels, mimicking visual styles, and altering narratives with user input are now challenging that ecosystem.

At the core of this disruption is a stark economic reality. Freelance comic artists already face a precarious labor market—often underpaid, undervalued, and without institutional protection. The rise of generative AI poses a risk of widespread disintermediation, where publishers and even hobbyists might replace traditional labor with algorithmic outputs. In parallel, broader questions surrounding copyright, artistic integrity, and the ethics of training AI on human-made datasets have further intensified the debate. Is AI democratizing art or undermining it? Can innovation and originality coexist when machines are trained to replicate human imagination?

This blog post explores the multifaceted implications of AI in the comic book industry. Through a combination of case studies, legal analysis, market data, and firsthand perspectives, we examine how automation is reshaping the future of visual storytelling. We also confront the central question raised by many in the industry: is artificial intelligence a tool for creative amplification—or a catalyst for creative displacement? From the rise of AI-assisted publishing to the backlash from working artists, the debate over AI’s role in comics is more than a technological issue—it is a cultural reckoning with what it means to create, own, and sustain art in the 21st century.

The Evolving Role of AI in Comic Art

The comic book industry, long anchored in hand-drawn artistry and narrative cohesion, is experiencing a technological upheaval driven by artificial intelligence. Over the past five years, advancements in generative AI—specifically in text-to-image and image-to-image models—have begun reshaping how comics are conceptualized, designed, and distributed. Tools like OpenAI’s DALL·E, Stability AI’s Stable Diffusion, and Midjourney have enabled both professionals and amateurs to create visually stunning illustrations with unprecedented speed. While these technologies have unlocked new creative possibilities, they have also redefined the artist’s role in the comic production pipeline.

AI's presence in comic creation initially emerged through supportive functionalities. Early applications focused on automating repetitive or time-intensive tasks such as background generation, colorization, or texturing. For example, AI-assisted coloring tools allowed artists to apply consistent palettes across entire panels, streamlining workflows without undermining artistic control. Software like Clip Studio Paint and Adobe Photoshop began integrating machine learning to suggest color schemes or automatically colorize line art, offering labor-saving enhancements to professionals working under tight deadlines.

However, the scope of AI’s involvement rapidly expanded. Generative models evolved from assistive roles to more autonomous content creation. A key inflection point occurred when image-generation systems began to produce full-frame illustrations based on textual prompts. Platforms like Midjourney and Leonardo AI allow users to input descriptive phrases such as “cyberpunk detective standing in neon-lit alley,” instantly producing stylized images that mimic the look and feel of traditional hand-drawn comics. The ability to control mood, framing, character design, and even mimic famous artistic styles has turned AI into a potential stand-in for human illustrators.

This progression was further accelerated by open-source diffusion models, which enabled enthusiasts to fine-tune algorithms on custom datasets—sometimes without the consent of the original artists. As a result, entire AI models can now emulate specific comic book aesthetics, from golden-age superhero illustrations to Japanese manga ink work. While some creators experiment with these tools to augment their portfolios, others see them as encroachments on their artistic identity.

The appeal of AI-generated comic art lies in its speed, cost-efficiency, and scalability. A traditional comic book issue, typically requiring weeks of collaborative labor from artists, inkers, and colorists, can now be prototyped in days using AI tools. Startups and self-publishing platforms have capitalized on this by offering AI-generated comics directly to consumers, circumventing traditional creative labor entirely. Web-based services such as “ComicsMaker AI” or “StoryWizard” allow users to generate storylines, characters, and panels with minimal human input. These tools democratize access to storytelling—but at the cost of devaluing professional artistry.

Yet, it is not only independent creators who are exploring these technologies. Large publishing houses are increasingly experimenting with AI to enhance productivity and reduce operational costs. While many have refrained from publicly acknowledging full reliance on AI art, some have begun incorporating AI into background illustrations or as preliminary concept drafts. Industry insiders suggest that AI-driven layout suggestions and page design tools are now part of pre-production pipelines in several mainstream studios. This quiet adoption illustrates a broader trend: while full automation may not be the immediate goal, AI is increasingly embedded as a cost-cutting tool.

Simultaneously, hybrid artists—those who consciously blend AI output with manual drawing—are emerging as a new creative demographic. These individuals use generative models for inspiration or base layers and then apply manual adjustments to align the output with personal vision. This practice has gained traction on platforms like ArtStation and Behance, where creators often annotate which elements were AI-generated and which were hand-rendered. While this approach promises greater creative flexibility, it also blurs authorship boundaries, complicating questions of originality and credit attribution.

In educational contexts, AI tools are reshaping how emerging artists engage with visual storytelling. Art schools and comic workshops are beginning to include modules on AI-assisted design, encouraging students to explore prompt engineering alongside sketching. The argument made by proponents is that fluency with AI tools will soon become a requisite professional skill, akin to digital inking or photo editing. Yet, for many instructors and students, this evolution feels like a dilution of fundamental creative disciplines, raising concerns about how future generations of artists will define their craft.

Despite the enthusiasm from some quarters, backlash from traditional artists has been swift and vocal. Many argue that AI art, trained on vast repositories of human-made work, amounts to a form of creative appropriation. Comic artists in particular—whose livelihoods depend on distinct visual styles—find themselves at the center of the controversy. Some report clients requesting AI art in lieu of paid commissions, while others observe a noticeable drop in freelance inquiries. The economic pressure is compounded by platform policies that have been slow to restrict or label AI-generated content, allowing mass uploads of machine-created work alongside original portfolios.

The broader cultural conversation is also shifting. Readers, long attuned to the idiosyncrasies of hand-drawn work, are becoming more aware of stylistic mimicry and narrative shallowness in AI-generated comics. Online forums, including Reddit and Discord communities, increasingly feature debates over the authenticity of AI-produced stories. While some readers celebrate the novelty and accessibility of such works, others express concern that the emotional resonance and layered symbolism inherent in human-drawn art are being lost.

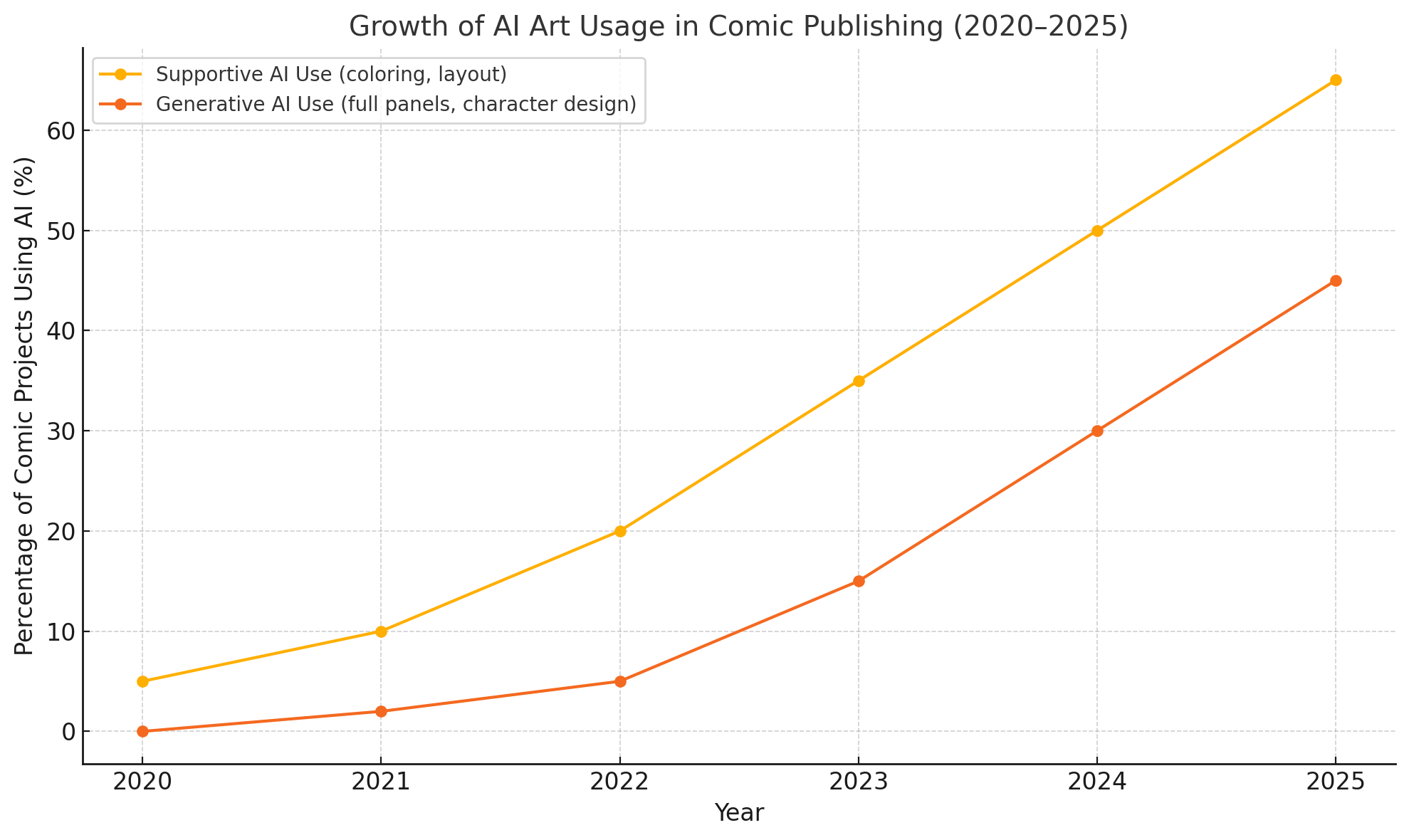

To quantify this evolving landscape, consider the following chart.

In summary, artificial intelligence has moved from the periphery to the center of comic book production. What began as a set of assistive functions is rapidly transforming into a dominant mode of visual storytelling—albeit one fraught with ethical, creative, and economic complexities. As generative AI becomes more accessible, its integration into comics will continue to deepen, forcing both creators and consumers to reassess the boundaries of authorship, value, and art itself.

Artist Backlash and Economic Threats

The rapid integration of artificial intelligence into comic art production has sparked significant resistance from within the artistic community. For many comic book artists—both independent creators and those working with established publishers—AI represents not a collaborative tool, but a disruptive force with serious ramifications for job security, artistic integrity, and long-term viability in an already precarious creative economy. The emergence of generative AI models capable of producing high-quality illustrations has catalyzed a wave of artist-led backlash, bringing economic and ethical concerns to the forefront of the cultural debate.

At the heart of the protest is the growing perception that AI-generated art devalues human labor. Comic art is not merely a technical skill—it is an expressive craft honed over years of practice. Yet AI models, trained on massive datasets composed of human-made artworks (often scraped without consent), can replicate visual styles in seconds. This dynamic has led to instances where clients, publishers, or startups opt for AI-generated illustrations to reduce costs, bypassing the need for commissioning human artists altogether. In many cases, freelance illustrators report losing potential work to clients who have chosen AI as a more “efficient” or “budget-friendly” solution.

Economic concerns are further compounded by the volatile nature of freelance employment in the comic book industry. Many artists work on a project-to-project basis, often without the protections afforded to salaried positions. With AI increasingly capable of completing entire panels, background designs, or even entire issues, the demand for entry-level or mid-career artists—those most vulnerable to displacement—has begun to decline. Anecdotal evidence suggests that some small publishers are already experimenting with reducing or eliminating artist roles in favor of AI-based pipelines.

The erosion of income opportunities is particularly acute among independent artists, who depend heavily on commission-based work, online marketplaces, and conventions to sustain their livelihood. The influx of AI-generated content on platforms such as DeviantArt, ArtStation, and Etsy has created an oversaturated environment, making it more difficult for human-created art to stand out. Some artists have reported seeing their original works copied, imitated, or transformed by AI, only to reappear in digital marketplaces with little or no attribution. This not only undermines the value of original creations but also leads to a broader sense of disillusionment and loss of agency.

In response to these trends, a growing number of artists have become vocal critics of AI integration in visual media. Online campaigns such as “#NoToAIArt” have gained traction on social media, serving as rallying cries for creators calling for greater transparency, consent, and ethical boundaries. Several petitions have circulated demanding platforms label or ban AI-generated artwork. Notably, artists have also staged digital protests—such as uploading blank images or satirical AI parodies—to raise awareness about the devaluation of creative labor.

Beyond economic pressures, the artist backlash is rooted in a deeper cultural anxiety: the fear that artistic identity and individual voice are being eroded. Comic book artists often develop a distinct signature style that reflects their personal journey, worldview, and aesthetic philosophy. When AI can mimic these visual languages without context or lived experience, the result may be technically impressive but emotionally hollow. Many artists argue that AI-generated art lacks the intentionality, improvisation, and emotional nuance that make comics a unique medium for storytelling. The growing substitution of human artistry with algorithmic output risks reducing comic books to a series of predictable, style-polished panels devoid of narrative soul.

These tensions are exacerbated by the opacity of AI training models. Most generative art systems are built on large, often unregulated image datasets scraped from the internet. Artists whose works are included in these datasets have little to no recourse, as legal frameworks governing data scraping and artistic ownership remain ambiguous. In the absence of consent mechanisms, attribution protocols, or opt-out options, creators find themselves involuntary contributors to systems designed to replicate and potentially replace them.

To better understand the economic landscape from the artist’s perspective, the following comparative table outlines key pain points experienced by human creators versus the functional strengths of AI art tools.

| Artist Pain Point | AI Tool Capability |
|---|---|
| Time-consuming manual illustration | Rapid image generation in seconds |
| Creative burnout from repetitive tasks | Infinite production with no fatigue |
| Income instability and freelance precarity | Free or low-cost generation for users and clients |
| Difficulty gaining visibility in oversaturated markets | Algorithmic generation optimized for engagement |
| Risk of stylistic plagiarism | Style transfer and imitation through trained datasets |
| Need for attribution and credit | No built-in attribution mechanisms in AI systems |
| High training investment in skill development | No formal training required by the user |
| Ethical use of reference material | Opaque training on potentially copyrighted datasets |

This table highlights the asymmetry between the challenges faced by human artists and the advantages AI tools provide to end-users and content distributors. The net result is a creative environment where economic pressures incentivize automation over human collaboration—a trend that many fear will accelerate without regulatory or institutional safeguards.

There is also growing discontent over how major platforms handle AI-generated content. For instance, ArtStation initially allowed AI art uploads without labeling, prompting mass protests in which artists flooded the site with anti-AI images. In response, the platform introduced a tagging system, though critics argue it remains insufficient. Similarly, Etsy and other digital marketplaces continue to host AI-generated art with little oversight, which confuses buyers and damages the reputations of human artists who are wrongly accused of using AI shortcuts.

In the face of these developments, professional organizations have begun to advocate for policy change. The Graphic Artists Guild and the Comic Book Workers United (CBWU), for example, have issued statements urging industry stakeholders to establish clear boundaries for the ethical use of AI in commercial art. These include demands for opt-out rights for artists whose works are used in training datasets, the implementation of watermarking technologies, and contractual clauses that protect human creators from being replaced by generative systems.

Despite these efforts, institutional response has been slow. Many publishers, facing financial constraints and under pressure to keep up with digital consumption trends, have shown hesitance to draw hard lines against AI adoption. Instead, some appear to favor a hybrid model in which AI is used to support human creators—though in practice, this often leads to reduced commissions and less creative autonomy for artists.

In conclusion, the artist backlash against AI in the comic book industry is not merely a resistance to change—it is a reaction to the existential threat posed by automation to a deeply personal and culturally significant profession. The economic pressures of freelance instability, coupled with the ethical gray areas of AI development, have pushed many artists to question the sustainability of their careers. As the line between original art and algorithmic imitation becomes increasingly blurred, the need for transparent policies, equitable compensation, and cultural respect for human creativity has never been more urgent.

Copyright, Fair Use, and Legal Frameworks

The integration of generative artificial intelligence into comic book creation has not only disrupted creative workflows and labor dynamics—it has also introduced a host of unresolved legal and intellectual property issues. At the center of the controversy is the question of authorship and ownership: when an AI system produces an illustration or mimics a comic artist’s signature style, who holds the copyright? Does the creator of the AI model, the user inputting prompts, or the original human artist whose style was emulated have legal claim? As these questions remain largely unanswered, the comic book industry finds itself grappling with a legal vacuum ill-equipped to address the challenges posed by machine-generated creativity.

At the core of the legal ambiguity lies the way AI systems are trained. Most prominent generative image models—including Stable Diffusion, Midjourney, and OpenAI's DALL·E—rely on vast datasets scraped from publicly accessible images, including those hosted on artist portfolios, social media platforms, and commercial art repositories. These datasets are rarely curated with consent. As a result, thousands of comic book illustrations, both published and unpublished, have likely been used to train algorithms that can replicate styles and aesthetics with alarming precision.

From a legal standpoint, this raises serious questions about the legitimacy of these training practices. In the United States, copyright law traditionally protects “original works of authorship fixed in any tangible medium of expression,” including visual art and illustrations. However, it does not currently extend the same protection to machine-generated outputs. According to the U.S. Copyright Office, works generated entirely by AI without meaningful human authorship are not eligible for copyright. This means that while an artist's original drawing is protected, a similar image generated by AI trained on that drawing is not—at least under current rules.

This has led to significant concern among comic book creators, many of whom feel their intellectual property is being exploited without compensation or acknowledgment. In some cases, artists have discovered AI-generated images online that closely resemble their work—either in composition, color palette, or stylization—but cannot pursue legal action due to the absence of direct replication or the lack of clarity in attribution. The lack of enforcement mechanisms and opt-out provisions in AI training processes effectively renders artists powerless in defending their visual identity.

In recent years, a few legal cases have emerged that could shape the future of copyright law as it pertains to AI-generated art. Notably, in a high-profile class-action lawsuit filed against Stability AI, Midjourney, and DeviantArt, a group of artists alleged that their copyrighted works were used to train generative models without permission. The case, which is still under litigation, claims that these companies violated intellectual property law and profited from unauthorized derivative works. While the outcome remains uncertain, its verdict could set a precedent for how courts interpret the ownership and legality of AI-generated content in artistic contexts.

Another important dimension of the legal debate involves fair use. AI developers often argue that using publicly available images for training falls under the doctrine of fair use, especially when the output is considered “transformative.” However, the boundaries of fair use are notoriously gray and context-dependent. In the case of generative art, courts have yet to definitively determine whether the transformation by AI is sufficiently distinct to qualify under fair use protections—especially when the output closely mirrors the original works used in training.

Furthermore, the international legal landscape is fragmented. Different jurisdictions interpret copyright, data protection, and fair use differently. In the European Union, for example, there is increasing emphasis on transparency and consent in data usage: the EU AI Act requires providers of general-purpose AI models to publish a summary of the content used to train them and to maintain a policy for complying with EU copyright law. By contrast, the United States maintains a more permissive stance, emphasizing innovation and technological advancement over artist protection. This discrepancy complicates enforcement, particularly for comic book artists who distribute their work globally.

In response to mounting pressure, some platforms and publishers have begun implementing limited protective measures. ArtStation, for instance, introduced a “NoAI” tag that artists can apply to their works to signal exclusion from training datasets. However, critics argue that such tags are reactive and largely symbolic, lacking the technical enforcement necessary to prevent scraping. Others call for proactive watermarking or blockchain-based provenance systems that could verify authorship and restrict unauthorized use.

Major comic publishers have remained largely silent on these legal issues, often navigating a fine line between embracing emerging technologies and avoiding reputational damage. However, some indie publishers and creators have begun taking public stances against the use of AI-generated art in submissions or competitions. For example, several webcomic platforms now explicitly prohibit AI content, citing concerns over fairness, originality, and the inability to verify the creative process.

Ethical considerations further complicate the legal conversation. Even in jurisdictions where scraping may be technically legal, artists argue that the practice is ethically exploitative. Many illustrators compare AI training on their work to plagiarism—except automated and scaled beyond human comprehension. The inability to track how many times one’s style has been absorbed, reinterpreted, or monetized by an algorithm creates an environment of systemic disempowerment for artists.

Efforts to regulate the space have begun, albeit slowly. In the United States, legislators have introduced preliminary bills aimed at establishing rights for creators whose work is used in AI training, but these efforts remain in early stages. Similarly, advocacy groups such as the Authors Guild and Creative Commons have proposed voluntary licensing frameworks that would allow creators to opt in or out of training datasets. However, the effectiveness of these proposals hinges on industry adoption—something that has yet to materialize at scale.

Until clearer legal frameworks emerge, comic book artists must navigate a murky terrain where copyright enforcement is limited, ethical guidelines are voluntary, and platform policies are inconsistent. The current state of affairs places a disproportionate burden on individual artists to protect their work in an ecosystem that is evolving faster than the laws designed to govern it.

In sum, the legal complexities surrounding AI-generated comic art reflect a broader crisis of ownership in the digital age. As machine learning continues to redefine what it means to create, attribute, and protect visual content, the need for updated legal standards that prioritize consent, transparency, and accountability has never been more pressing. The future of artistic labor—and the integrity of the comic book medium—will depend in part on whether lawmakers, technologists, and publishers can rise to meet this challenge.

The Industry’s Response and Paths Forward

As the tension between artificial intelligence and traditional comic book artistry intensifies, key stakeholders across the industry are beginning to craft responses—some reactive, others proactive. Comic publishers, artist advocacy groups, online platforms, and even AI developers themselves are navigating a rapidly evolving landscape where technological innovation, ethical considerations, and economic realities intersect. The challenge lies in finding a balanced path forward—one that acknowledges the potential of AI as a creative tool while safeguarding the livelihoods, rights, and voices of human artists.

The responses from major comic book publishers have, thus far, been measured and fragmented. Companies like Marvel and DC Comics have yet to release comprehensive public policies on AI-generated content. However, internal sources and industry watchdogs suggest that these publishers are experimenting with AI integration in limited capacities, particularly in pre-visualization, background generation, and editorial layout assistance. These applications, while ostensibly supportive, risk becoming more intrusive if not carefully bounded. For now, many legacy publishers appear to be taking a “wait and see” approach—observing technological capabilities while assessing reputational and legal risks.

By contrast, some independent publishers and digital-first platforms have adopted more explicit stances. For example, Tapas Media and Webtoon—popular platforms for user-generated comics—have issued guidance discouraging or outright prohibiting the use of AI-generated content in submissions, particularly in monetized or competition-driven categories. These decisions often stem from concerns about fairness, transparency, and the preservation of human creativity in storytelling. Webtoon, in particular, has expressed an interest in supporting its artist community by maintaining a “human-first” standard across its ecosystem, although it continues to monitor AI developments closely.

Artist advocacy organizations have emerged as crucial players in shaping the narrative and advocating for ethical boundaries. Groups such as Comic Book Workers United, the Society of Illustrators, and the Graphic Artists Guild have released statements and organized forums to address the rise of AI in creative production. These entities are calling for industry-wide standards, including clear labeling of AI-generated content, compensation models for artists whose work has been used in AI training datasets, and opt-out mechanisms for creators wishing to preserve the integrity of their artistic identity.

A particularly noteworthy initiative comes from the Artists’ Rights Alliance, which has proposed a Creative Integrity Framework. This framework includes principles such as consent-based training data, fair revenue sharing for derivative outputs, and clear attribution protocols. Although not yet universally adopted, the framework has sparked productive dialogue between artists, technologists, and platform operators. It serves as a potential foundation for building more inclusive and equitable creative ecosystems.

From a technological standpoint, some AI developers are responding to criticism with efforts to increase transparency and ethical compliance. For instance, Stability AI has announced the development of new filters to exclude specific image classes and artist styles from its training sets. OpenAI has introduced tagging features and usage restrictions for DALL·E, although questions remain about the enforcement and sufficiency of such measures. Midjourney, on the other hand, has faced sustained scrutiny for its reluctance to disclose training data or implement opt-out features—highlighting the uneven commitment to ethical standards across the AI sector.

A number of startups have emerged with the specific goal of developing ethical AI for creatives. Companies like Spawning.ai offer artists tools to protect their work, such as a “Do Not Train” registry and search tools that let artists check whether their images appear in large training datasets. These initiatives signal a growing awareness within the tech community that sustainable innovation must be built on mutual respect and consent, rather than exploitation. However, adoption of such tools remains limited, and their efficacy depends on buy-in from larger platforms and content aggregators.

On the creative front, a new generation of hybrid artists is exploring ways to coexist with AI rather than oppose it outright. These creators integrate AI into their workflow not as a replacement, but as a means of expanding their artistic toolkit. For example, AI-generated drafts may serve as inspiration for composition, lighting, or character posing—after which the artist manually refines the image to maintain control over the final expression. This “AI-assisted human authorship” model has found favor among digital natives who are more open to technological experimentation but still value artistic intentionality.

Art education institutions are beginning to adjust their curricula to reflect these hybrid practices. Increasingly, comic art programs include modules on prompt engineering, generative model ethics, and AI-assisted design. The goal is to prepare students for a creative landscape in which fluency with both traditional and digital tools will be essential. Critics, however, warn that overemphasis on efficiency may discourage foundational skill development, creating a generation of creators more reliant on tools than on personal vision.

Meanwhile, a growing number of artists and readers are advocating for AI-free creative spaces. Projects like the “Handmade Comics Manifesto” and collectives such as “Humans Only” promote comics created exclusively by human hands, rejecting algorithmic influence and emphasizing analog techniques. These communities celebrate the imperfection, individuality, and tactile reality of hand-crafted art as a counterpoint to the polished, sometimes sterile outputs of generative AI.

In parallel, some crowdfunding and membership platforms have moved toward greater transparency about AI use. Kickstarter, for instance, requires creators to disclose how AI is used in their campaign content and rewards. Patreon has also introduced features allowing creators to disclose AI use, helping patrons make informed decisions about the nature of the content they support.

From a market perspective, some analysts forecast the emergence of a dual-track comic economy. One track may consist of high-volume, low-cost, AI-generated content targeting casual readers or algorithm-driven discovery platforms. The other may focus on artisanal, creator-centric works that emphasize narrative depth, artistic authenticity, and collector value. This bifurcation reflects broader trends in creative industries, where automation enables mass production, but human craftsmanship remains a marker of prestige and emotional connection.

Looking ahead, the comic book industry faces a series of strategic choices. The path forward could involve:

- Establishing industry standards for AI content disclosure, ethical dataset usage, and artist consent;

- Creating certification systems for AI-free content to assure readers of human authorship;

- Investing in hybrid training programs to equip artists with skills to navigate both traditional and AI-assisted workflows;

- Fostering dialogue between artists, developers, and publishers to align technological innovation with creative values.

Ultimately, the future of comic book artistry in the age of AI depends on whether the industry can reframe technological advancement not as a zero-sum game, but as an opportunity to reaffirm the central role of human creativity. By centering ethics, transparency, and respect for artistic labor, the comic book ecosystem can evolve to harness AI’s potential without sacrificing its soul.

Conclusion

The accelerating adoption of artificial intelligence in the comic book industry has stirred a profound debate, one that extends well beyond questions of technology or artistic novelty. It touches the very core of what it means to be a creator in the digital age. For many comic artists, AI represents not merely a new tool but a threat to the creative ecosystem they inhabit: a system historically rooted in individual expression, cultural storytelling, and the irreplaceable value of human perspective.

From the rise of AI-powered illustration tools capable of generating near-professional visuals, to the economic displacement of freelance artists and the murky legality surrounding training datasets, the challenges are multifaceted. AI’s role has expanded from a background assistant to a potential centerpiece of content generation, dramatically reshaping how visual narratives are produced, monetized, and consumed. Yet the backlash from artists, advocacy groups, and communities serves as a powerful reminder that progress without ethical consideration is rarely sustainable.

The legal vacuum around copyright enforcement, the ethical dilemmas of non-consensual training practices, and the dilution of artistic value on digital marketplaces all point to an urgent need for structured intervention. As courts weigh precedent-setting lawsuits and legislative bodies begin to draft regulations, the future of AI in comics hinges on how effectively society can establish safeguards that protect both innovation and integrity.

Encouragingly, segments of the industry are beginning to respond. Independent publishers, artist unions, and forward-thinking developers are advancing frameworks that promote transparency, consent, and equitable compensation. Meanwhile, a growing cohort of hybrid artists is navigating this transition creatively, demonstrating that coexistence—while complex—is possible when guided by principle and intent.

Still, a broader industry reckoning remains overdue. If AI is to serve the comic book world, it must do so not by undermining the foundational role of the artist, but by amplifying and respecting it. The future will depend on our collective ability to recognize that technological power is most meaningful when it enhances the human voice rather than replacing it.

As readers, creators, publishers, and policymakers confront this crossroads, the central question becomes not just what AI can do, but what kind of artistic future we wish to cultivate. The answer, like the best comic stories, will depend not only on the tools we wield but on the values we uphold.

References

- Stability AI Class Action Lawsuit – TechCrunch: https://techcrunch.com/stability-ai-lawsuit-artists

- Comic Book Workers United (CBWU) – Official Website: https://www.cbwu.org/

- OpenAI’s DALL·E Overview – OpenAI: https://openai.com/dall-e

- Midjourney AI Image Generator – Midjourney: https://www.midjourney.com/

- DeviantArt’s AI Policy & Response: https://www.deviantart.com/about/policies/ai

- ArtStation “NoAI” Tag and Community Protest: https://magazine.artstation.com/ai-tagging-feature

- Graphic Artists Guild Advocacy on AI: https://graphicartistsguild.org/ai-ethics-and-artists

- Webtoon Submission Guidelines on AI Use: https://www.webtoons.com/en/notice

- EU AI Act Legislative Summary – Euractiv: https://www.euractiv.com/section/artificial-intelligence/news/eu-ai-act-summary

- Spawning.ai and “Do Not Train” Tools for Artists: https://www.spawning.ai/