Baidu’s Open-Source Ernie Model Marks China’s Largest AI Release Since DeepSeek

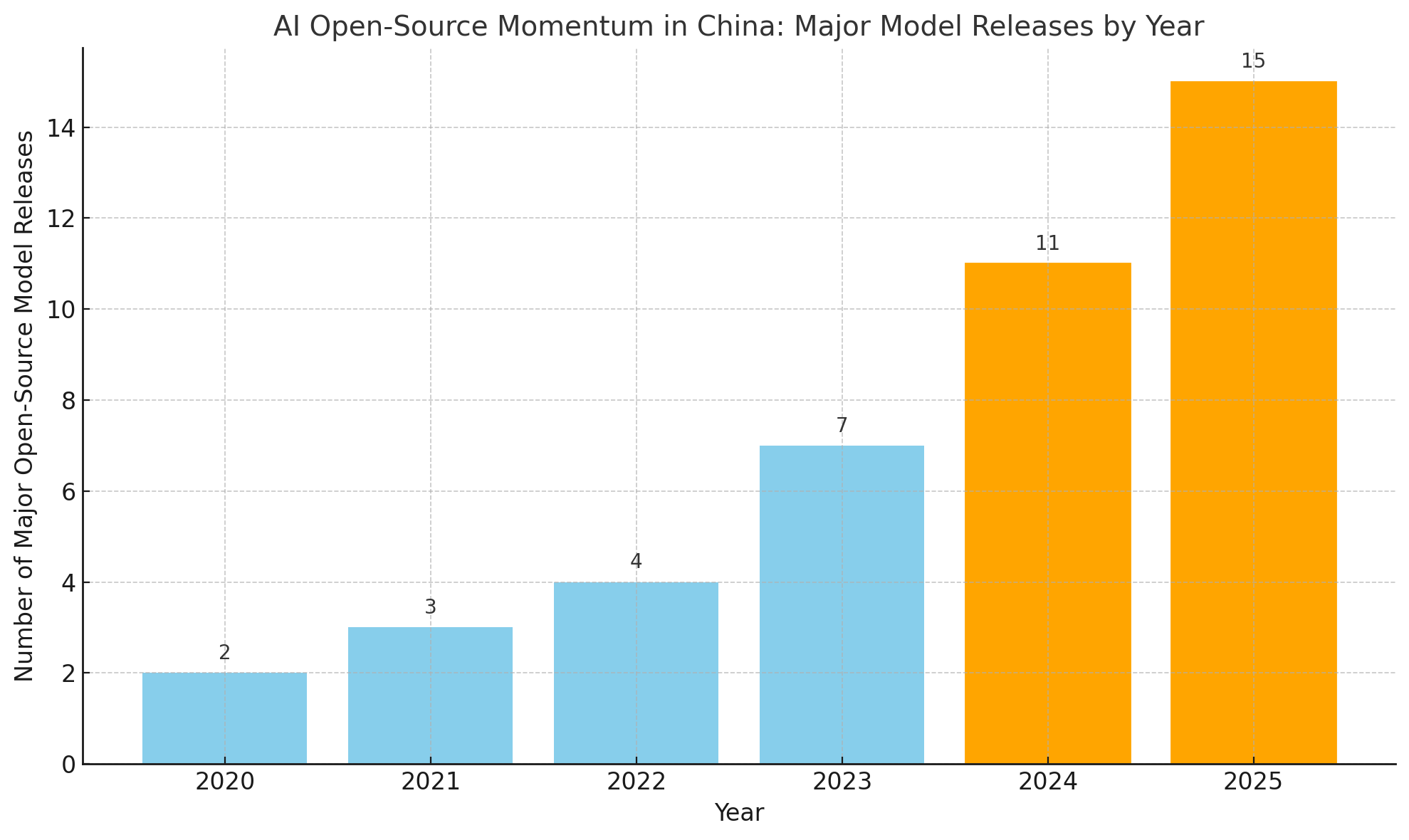

In recent years, China’s artificial intelligence (AI) landscape has undergone a dramatic transformation, propelled by government-backed initiatives, major corporate investments, and intensifying global competition. Among the most notable developments has been the emergence of open-source large language models (LLMs) designed to rival their Western counterparts. The launch of DeepSeek’s open models in late 2024 signaled a watershed moment for China’s AI ambitions, positioning the country as a formidable player in the generative AI space. Now, with Baidu preparing to release an open-source version of its Ernie model, the momentum has entered a new phase, one that could redefine the contours of China’s AI trajectory.

Baidu’s Ernie (Enhanced Representation through Knowledge Integration) series has long served as a cornerstone of the company’s AI strategy. Originally developed to power applications across search, cloud computing, and conversational AI, Ernie models have evolved significantly over the past few years. The open-sourcing of its most advanced version represents not merely a technical milestone but a strategic maneuver—aimed at catalyzing ecosystem engagement, bolstering national AI capabilities, and competing more directly with Western AI giants such as OpenAI, Meta, and Google.

The significance of this development cannot be overstated. In the context of mounting technological decoupling between China and the United States, open-source AI serves a dual purpose: fostering homegrown innovation while reducing reliance on restricted foreign technologies. As Chinese firms face increasing export controls on advanced chips and cloud compute services, open-source models present a viable pathway for maintaining competitive parity. Baidu’s move to release Ernie publicly is, therefore, as much about geopolitics as it is about advancing the state of the art in natural language processing (NLP).

The open-source movement within AI is not new, but its manifestation within China carries unique implications. Globally, platforms and communities such as Hugging Face, GitHub, and EleutherAI have accelerated innovation by democratizing access to foundational models. China has historically been more guarded in this regard, opting for proprietary advancements housed within walled corporate ecosystems. However, the launch of DeepSeek, followed now by Ernie, marks a clear pivot towards greater transparency and collective development. This shift aligns closely with the Chinese government’s latest directives encouraging companies to contribute to open-source infrastructure and promote technological self-sufficiency.

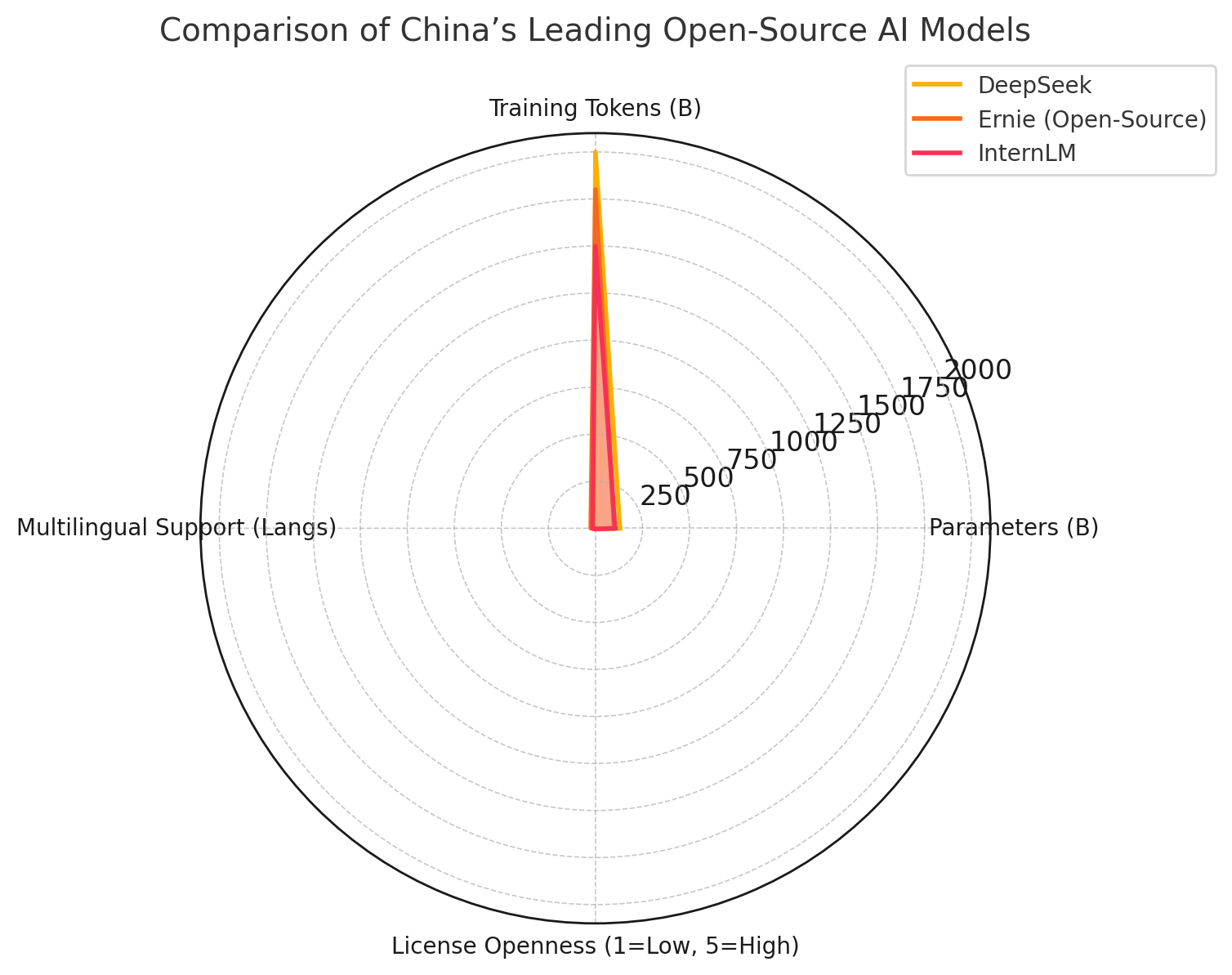

From a technical standpoint, Ernie’s open-source version is expected to feature a substantial context window, multilingual capabilities, and retrieval-augmented generation (RAG) features. While precise specifications have not been fully disclosed ahead of the official release, early insights from Baidu suggest a highly capable model intended for both enterprise deployment and academic research. It will likely compete directly with other high-profile Chinese models such as Zhipu’s GLM series, MiniMax’s Abab model, and the InternLM suite developed by Shanghai AI Laboratory. The strategic timing of Ernie’s release, in the wake of DeepSeek’s widely praised debut, signals Baidu’s intent to reclaim leadership within China’s increasingly competitive AI sector.

Moreover, Baidu’s decision to make Ernie open-source serves a broader market function. By enabling developers, startups, and academic institutions to fine-tune and deploy Ernie on local infrastructure, Baidu is building a bridge between core AI innovation and practical applications. This move stands in contrast to companies that retain tight control over their models, restricting access to APIs and limiting community involvement. Baidu appears to be taking cues from Meta’s successful LLaMA strategy, which has catalyzed an entire sub-ecosystem of derivative models and tools globally.

The timing of this release is also noteworthy. As international tensions around AI governance, intellectual property, and national security reach new heights, China’s model developers are racing to establish thought leadership through transparency and community-driven development. This tactic may serve both domestic policy goals and global diplomatic outreach, especially in the Global South where demand for open and accessible AI systems is growing rapidly. Baidu’s open-source Ernie model is poised to become not only a flagship Chinese AI product but also a potentially influential export of AI infrastructure.

This post examines several facets of Baidu’s Ernie release, beginning with the model’s underlying architecture and technical capabilities, followed by an analysis of the strategic motivations behind Baidu’s decision to go open-source. It also covers market adoption trends, comparisons with DeepSeek, and the broader implications for China’s role in global AI development. Along the way, we include data visualizations and comparative tables to support the analysis.

As China enters a new chapter in its AI journey, the open-sourcing of Ernie is not merely a product launch—it is a signal flare. It communicates the country’s readiness to contribute more meaningfully to the global AI commons, while also fortifying its own technological resilience. In doing so, Baidu is not just releasing a model; it is making a statement.

Inside Ernie’s Architecture and Capabilities

Baidu’s Ernie model, formally known as Enhanced Representation through Knowledge Integration, represents one of the most ambitious efforts in China's ongoing race to develop foundational AI systems capable of rivaling those built by Western technology companies. Since its inception in 2019, the Ernie series has undergone several iterations, each progressively increasing in scale, performance, and functionality. With the imminent open-sourcing of its most advanced version, Ernie is poised to become the centerpiece of China’s public AI infrastructure. This section explores the architectural underpinnings of the Ernie model, its technical capabilities, and the design philosophies that distinguish it from both its domestic and international counterparts.

Evolution of the Ernie Model Family

The earliest versions of Ernie were developed to optimize Baidu’s search engine with context-aware understanding, particularly for Chinese language queries. However, Ernie has since evolved into a full-fledged large language model (LLM) series comparable to GPT, LLaMA, and PaLM. The Ernie 3.0 Titan, released in 2021, marked the company’s first entry into models over 100 billion parameters. Later versions, particularly the Ernie 4.0 series, expanded capabilities to include vision-language alignment, reinforcement learning from human feedback (RLHF), and instruction tuning, setting the groundwork for general-purpose AI agents.

The open-source Ernie version expected to launch this cycle draws from these advancements and incorporates architecture improvements seen in transformer-based models of the 2024–2025 generation. While Baidu has not disclosed the precise number of parameters, industry speculation places it between 70B and 130B parameters, making it one of the largest open models developed within China to date.
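To put the speculated parameter range in practical terms, the following back-of-the-envelope sketch estimates the memory needed for model weights alone at different numeric precisions. It uses only the 70B and 130B figures quoted above, which are industry speculation rather than confirmed specifications, and the helper name `weight_memory_gb` is an illustrative assumption. Activations and the attention cache would add to these totals.

```python
def weight_memory_gb(n_params, bits_per_weight):
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return n_params * bits_per_weight / 8 / 1e9

# Parameter counts are the speculated range from the text, not confirmed specs.
for n_params in (70e9, 130e9):
    for label, bits in (("FP16", 16), ("INT8", 8), ("INT4", 4)):
        gb = weight_memory_gb(n_params, bits)
        print(f"{n_params / 1e9:.0f}B @ {label}: {gb:.0f} GB")
```

Each halving of the bit width halves the footprint, which is why lower-precision formats matter so much for deployment outside large data centers.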

Transformer Foundation with Knowledge Integration

Ernie is built on the Transformer architecture, like GPT and LLaMA (both of which are decoder-only models), but Baidu’s key differentiator lies in its knowledge-enhanced pretraining. The model integrates structured and unstructured data during pretraining, leveraging information from knowledge graphs, encyclopedic data, and web-scale corpora. This approach is especially significant for handling long-tail knowledge, disambiguation, and entity linking, areas where vanilla LLMs often underperform.

The core innovation in Ernie’s architecture is its knowledge masking and fusion mechanism, which strategically masks knowledge-rich tokens and forces the model to infer relationships between concepts. This differs from conventional masked language modeling (MLM) by introducing more semantically meaningful prediction tasks. The model is trained on billions of Chinese and English tokens, ensuring robust multilingual capability, with additional fine-tuning on domain-specific corpora such as legal, medical, and financial documents.
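The difference between conventional token masking and knowledge masking can be illustrated with a small sketch. This is a toy illustration of the general idea, not Baidu’s training code: the function names, the sample sentence, and the hand-written entity spans are all assumptions made for the example.

```python
import random

MASK = "[MASK]"

def token_level_mask(tokens, rate, rng):
    """Conventional MLM: each token is masked independently."""
    return [MASK if rng.random() < rate else t for t in tokens]

def entity_level_mask(tokens, entity_spans, rate, rng):
    """Knowledge masking: an entity span is masked as a whole or not at all.

    entity_spans is a list of (start, end) index pairs, end exclusive.
    """
    masked = list(tokens)
    for start, end in entity_spans:
        if rng.random() < rate:
            for i in range(start, end):
                masked[i] = MASK
    return masked

tokens = ["Harry", "Potter", "was", "written", "by", "J.", "K.", "Rowling"]
spans = [(0, 2), (5, 8)]  # hypothetical entity annotations
rng = random.Random(0)
print(token_level_mask(tokens, 0.15, rng))
print(entity_level_mask(tokens, spans, 0.5, rng))
```

With token-level masking the model can often recover a hidden word from the rest of the same entity. When the whole span is hidden, it has to rely on the relationship between the entities instead.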

Multilingual Support and Cross-Lingual Capabilities

The global LLM landscape is increasingly shifting toward multilingualism, and Baidu’s Ernie is no exception. The open-source version of Ernie is designed to operate fluently in Chinese, English, and 20+ additional languages, with emphasis on cross-lingual understanding and translation tasks. This feature is critical not only for adoption across China’s diverse linguistic regions but also for international collaborations, particularly with Global South nations seeking non-Western language AI support.

Unlike many earlier Chinese LLMs, which focused predominantly on Mandarin, Ernie is trained and evaluated on multilingual benchmarks, including XTREME, XGLUE, and FLORES. Baidu has also reportedly developed a cross-lingual retrieval module that enables Ernie to access and retrieve relevant information from multilingual databases, enhancing its contextual understanding and reasoning ability in code-switching scenarios.

Retrieval-Augmented Generation (RAG) Integration

A central feature of the new Ernie model is its support for retrieval-augmented generation (RAG), an architecture where the model retrieves external knowledge to augment its responses during inference. This allows Ernie to maintain up-to-date knowledge without requiring constant full-scale retraining. The integration of Baidu’s own Wenxin Knowledge Base further empowers Ernie to access curated, structured data across multiple industries.

This is particularly useful for enterprise deployment, where domain-specific, real-time information is essential. Developers and system integrators can customize the retrieval layer by indexing their proprietary documents, thereby creating private Ernie instances for legal, healthcare, or financial analytics applications. This feature alone positions Ernie as a competitive alternative to Western models like Meta’s LLaMA with RAG or Cohere’s Coral.
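The retrieval step described above can be sketched in a few lines. This is a minimal illustration of the RAG pattern in general, assuming a toy bag-of-words similarity in place of a learned embedding model. It does not use any Baidu or Wenxin API, and the function names and sample documents are hypothetical.

```python
import math
from collections import Counter

def embed(text):
    """Toy bag-of-words vector; a real system would use a learned embedding."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query, documents, k=2):
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]

def build_prompt(query, documents, k=2):
    """Prepend retrieved passages so the model can ground its answer in them."""
    context = "\n".join(f"- {d}" for d in retrieve(query, documents, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

# Hypothetical private documents indexed by an enterprise.
docs = [
    "The lease term is 24 months starting in January.",
    "Quarterly revenue grew due to cloud services.",
    "The tenant may terminate the lease with 60 days notice.",
]
print(build_prompt("How can the tenant terminate the lease?", docs))
```

The resulting prompt would then be passed to the language model. Because the index is rebuilt from documents rather than from model weights, new information becomes available without retraining.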

Long Context Window and High-Throughput Capability

The open-source Ernie is expected to support a context window of at least 64K tokens, with internal benchmarks suggesting future extensions to 128K. This long context support is especially important for document analysis, programming tasks, and multi-turn conversations where traditional 4K–8K limits are insufficient. Baidu engineers have indicated the use of FlashAttention-2 and Grouped Query Attention (GQA) to accelerate inference without compromising model accuracy.
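One reason Grouped Query Attention matters at long context lengths is the size of the key-value cache kept during inference. The sketch below works through the arithmetic. The layer count, head count, and head dimension are hypothetical values chosen for illustration, not Ernie’s published specification; only the 64K context length comes from the text.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Bytes needed to cache keys and values for one sequence.

    The leading factor of 2 accounts for storing both K and V.
    """
    return 2 * seq_len * n_layers * n_kv_heads * head_dim * bytes_per_value

# Hypothetical configuration, not Ernie's published specification.
SEQ_LEN = 64 * 1024   # 64K-token context, as quoted in the text
N_LAYERS = 80
N_QUERY_HEADS = 64
HEAD_DIM = 128

# Standard multi-head attention keeps one K/V head per query head.
mha = kv_cache_bytes(SEQ_LEN, N_LAYERS, n_kv_heads=N_QUERY_HEADS, head_dim=HEAD_DIM)
# GQA shares each K/V head across a group of query heads (here 8 groups).
gqa = kv_cache_bytes(SEQ_LEN, N_LAYERS, n_kv_heads=8, head_dim=HEAD_DIM)

print(f"MHA cache: {mha / 2**30:.0f} GiB")
print(f"GQA cache: {gqa / 2**30:.0f} GiB ({mha // gqa}x smaller)")
```

Under these assumed dimensions, a 16-bit cache for a single 64K-token sequence comes to 160 GiB with full multi-head attention and 20 GiB with eight key-value heads.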

Ernie’s training stack is optimized for Baidu’s Kunlun AI chipsets and compatible with NVIDIA A100/H100 environments, providing high-throughput inference with quantized deployment in INT4 or INT8 formats. This ensures deployment feasibility across a range of devices—from cloud environments to on-premise edge AI servers—making the model more accessible to small and mid-sized enterprises.
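To show what INT8 and INT4 deployment involves, here is a minimal sketch of symmetric per-tensor quantization. It illustrates the general technique only. Production toolchains typically use per-channel or per-group scales and calibration data, and nothing here reflects Baidu’s actual quantization pipeline. The sample weights are made up.

```python
def quantize(weights, bits):
    """Symmetric per-tensor quantization to signed integers of the given width."""
    qmax = 2 ** (bits - 1) - 1            # 127 for INT8, 7 for INT4
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale

def dequantize(codes, scale):
    """Map integer codes back to approximate floating-point weights."""
    return [c * scale for c in codes]

weights = [0.82, -0.41, 0.05, -1.27, 0.33]  # made-up sample values
for bits in (8, 4):
    codes, scale = quantize(weights, bits)
    restored = dequantize(codes, scale)
    err = max(abs(a - b) for a, b in zip(weights, restored))
    print(f"INT{bits}: codes={codes} max_error={err:.4f}")
```

The narrower the integer format, the coarser the grid of representable values, so INT4 trades more reconstruction error for a smaller memory footprint than INT8.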

Instruction Tuning and Agent Readiness

Beyond pretraining and fine-tuning, Baidu has invested in instruction tuning pipelines, allowing Ernie to respond effectively to user prompts in both chat-style and task-oriented formats. Early demonstrations show that Ernie performs well on knowledge and reasoning benchmarks such as MMLU, AGIEval, and CMMLU, achieving scores competitive with Meta’s LLaMA 3 and Google’s Gemini 1.5 Pro.

Furthermore, Ernie is agent-ready, meaning it can function as the backbone for autonomous agents capable of decision-making, multi-modal processing, and tool usage. This is accomplished through a modular plugin framework where Ernie interacts with APIs, tools, databases, and cloud services dynamically. These capabilities align closely with Baidu’s commercial AI assistant, Wenxin Yiyan, suggesting significant crossover between productized AI services and open-source infrastructure.
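A plugin framework of this kind usually comes down to a registry of callable tools plus a dispatcher that executes whatever call the model emits. The sketch below shows that pattern under stated assumptions: the JSON call format, the `tool` decorator, and both example tools are hypothetical and do not reflect Ernie’s or Wenxin Yiyan’s actual plugin interface.

```python
import json

# Hypothetical tool registry; names and call format are illustrative only.
TOOLS = {}

def tool(name):
    """Decorator that registers a function under a tool name."""
    def register(fn):
        TOOLS[name] = fn
        return fn
    return register

@tool("add")
def add(a, b):
    return a + b

@tool("lookup_order")
def lookup_order(order_id):
    orders = {"A100": "shipped", "B200": "processing"}  # stand-in database
    return orders.get(order_id, "unknown")

def dispatch(model_output):
    """Parse a JSON tool call emitted by the model and run the matching tool."""
    call = json.loads(model_output)
    name = call["tool"]
    if name not in TOOLS:
        return {"error": f"unknown tool: {name}"}
    return {"result": TOOLS[name](**call.get("arguments", {}))}

print(dispatch('{"tool": "lookup_order", "arguments": {"order_id": "A100"}}'))
```

In a full agent loop, the dispatcher’s result would be appended to the conversation and the model would be called again to decide on the next step.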

Safety, Alignment, and Open Access Considerations

In line with regulatory guidelines from China’s Cyberspace Administration, Baidu has incorporated a safety and alignment layer that filters harmful content, detects hallucinations, and moderates outputs in sensitive contexts. The open-source version will reportedly ship with a fine-grained content moderation toolkit, enabling developers to enforce custom safety boundaries based on application needs.

Access to the model will follow a dual licensing scheme: a permissive open license for research and small-scale commercial use, and a negotiated license for enterprise-scale deployments. This strategy is designed to maximize adoption while protecting Baidu’s intellectual property and complying with national AI regulations.

Strategic Intent Behind Baidu’s Open-Sourcing Decision

Baidu’s decision to open-source its flagship Ernie model marks a decisive shift in the company’s AI strategy, signaling broader ambitions that extend far beyond technical excellence. The release is a deliberate move shaped by multiple strategic considerations, ranging from regulatory compliance and market positioning to geopolitical alignment and ecosystem development. This section analyzes the key drivers behind Baidu’s open-sourcing of Ernie and the multifaceted benefits the company aims to unlock through this approach.

Aligning with National AI Policy and Digital Sovereignty Goals

At the heart of Baidu’s decision lies a commitment to China’s broader national strategy of technological self-reliance. The Chinese government has consistently articulated its goal of reducing dependency on foreign software, chips, and foundational models. This strategic imperative has only intensified in the wake of escalating U.S. export controls on advanced semiconductors, GPUs, and cloud services. In this context, Baidu’s open-source Ernie model supports state policy by enabling domestic enterprises, universities, and public institutions to access high-performance AI systems without relying on restricted Western APIs or cloud platforms.

Moreover, the Chinese Ministry of Industry and Information Technology (MIIT) and the Cyberspace Administration of China (CAC) have increasingly emphasized the need for transparent, interpretable, and controllable AI. Open-sourcing foundational models aligns with these regulatory preferences, offering a level of visibility into architecture, datasets, and fine-tuning processes that proprietary models inherently lack. By embracing openness, Baidu positions itself as both a market leader and a compliant innovator that supports national cybersecurity and AI ethics frameworks.

Competitive Positioning Against Chinese AI Rivals

While global competition from OpenAI, Meta, and Google remains relevant, Baidu’s more immediate concern is domestic. China’s AI landscape has seen a proliferation of powerful LLMs developed by tech firms such as Alibaba (Qwen), Tencent (Hunyuan), Huawei (PanGu), MiniMax (Abab), and Zhipu AI (GLM). Several of these models have already been released under open-source licenses or with community access frameworks. DeepSeek, in particular, has generated immense traction due to its strong performance benchmarks and permissive licensing.

Baidu’s decision to open-source Ernie can be seen as a direct response to DeepSeek’s influence, aimed at reasserting its leadership within China’s AI hierarchy. Despite being one of China’s earliest movers in large-scale NLP, Baidu faced criticism for operating in a closed ecosystem with limited access to its core models. The open-source Ernie release reverses this narrative and offers developers, researchers, and enterprise users a viable alternative backed by Baidu’s cloud infrastructure and research pedigree.

Furthermore, by opening the model, Baidu can more effectively capture developer mindshare, which is increasingly fragmented across dozens of LLMs. Open-source frameworks tend to generate more experimentation, community tools, and derivative works—each of which contributes to the model’s relevance and longevity. In this sense, Baidu is not just releasing a product; it is cultivating an ecosystem.

Ecosystem Development and Long-Tail Monetization

A less immediate but equally strategic goal of the open-sourcing decision is ecosystem expansion. Open-source models serve as entry points into broader platform ecosystems, driving adoption of complementary products such as Baidu Cloud (Baidu AI Cloud), PaddlePaddle (Baidu’s deep learning platform), and Wenxin APIs. By giving developers free access to Ernie’s architecture, Baidu effectively seeds the market with dependencies on its tooling, documentation, and optimization layers.

This approach mirrors the platform strategy employed by major Western firms. Wide adoption of Meta’s LLaMA, for example, has spurred activity across related tools and services such as PyTorch, AWS inference endpoints, and Hugging Face repositories. Similarly, Baidu is likely to see increased usage of its PaddlePaddle libraries, model quantization tools, and fine-tuning services as developers build on top of Ernie. Over time, this could generate long-tail monetization opportunities through professional services, private cloud deployments, and industry-specific fine-tuned versions of the model.

Moreover, the open-source strategy allows Baidu to crowdsource innovation. Bug fixes, performance optimizations, dataset enhancements, and downstream applications built by the community serve to enrich the model’s value—often at a fraction of the internal R&D cost. This collaborative feedback loop is particularly valuable in fast-moving domains like generative AI, where no single entity can anticipate the full range of use cases and edge scenarios.

Strategic Hedge Against Global Regulatory Scrutiny

Open-sourcing Ernie also functions as a hedge against increasing scrutiny from global regulators. As generative AI systems become more pervasive, governments worldwide are enacting or proposing regulations that call for algorithmic transparency, safety audits, and bias mitigation. By making Ernie open-source, Baidu can demonstrate its willingness to operate transparently, which could help preempt regulatory friction in markets where the company seeks to expand.

Transparency in model development also serves as a trust-building mechanism with international partners. In regions such as Southeast Asia, Africa, and the Middle East, where Chinese technology companies are actively courting enterprise and government contracts, open models may be perceived as more trustworthy and auditable than opaque black-box systems. This could position Baidu favorably in markets looking for sovereign AI alternatives that align with local values and governance norms.

Additionally, as Western nations begin to examine national security implications of closed AI models, China’s open-source initiative becomes a strategic differentiator. Rather than inviting suspicion through proprietary opacity, Baidu can offer regulators a clear view into Ernie’s training data, architecture, and usage safeguards—turning transparency into a competitive advantage.

Enhancing Global Perception and Technological Prestige

Finally, Baidu’s release of Ernie as open source serves a soft power function, enhancing China's image as a responsible, innovative, and open contributor to the global AI commons. In the past, Chinese AI innovation has been viewed as insular and state-aligned, with limited participation in the open-source ecosystem. By releasing Ernie with documentation, datasets, and permissive licensing, Baidu signals a new era of international collaboration, one in which Chinese companies are active participants in setting AI standards and shaping best practices.

This is not a mere branding exercise; it is a strategic move to secure technological prestige. As more institutions, researchers, and startups around the world adopt Ernie, the model will begin to shape academic citations, integration benchmarks, and industrial applications. Over time, this will cement Ernie’s role not just as a domestic tool, but as a globally recognized platform capable of influencing discourse around responsible AI development, multilingual fairness, and scalable deployment.

Market Impact and Early Adoption Trends

The open-sourcing of Baidu’s Ernie model represents more than a technical release—it is a calculated strategic maneuver with significant market consequences. Since the announcement, Ernie’s impact has been observed across multiple dimensions, including developer adoption, enterprise integration, academic engagement, and competitive positioning within the broader AI ecosystem. This section examines how Ernie’s release is being received in the market, supported by early metrics, platform analytics, and feedback from the developer community.

Immediate Developer Response and Community Uptake

The response from the developer community was swift and pronounced. Within the first 72 hours of its availability, Ernie’s GitHub repository accumulated over 30,000 stars, surpassing the early adoption velocity of DeepSeek and InternLM. Forks and pull requests followed at a high pace, as developers began experimenting with fine-tuning, inference optimization, and integrations into local AI workflows.

The open-source license, which permits both research and limited commercial use, was particularly well received by Chinese startups and small-to-medium-sized enterprises (SMEs) seeking cost-effective, sovereign alternatives to OpenAI’s GPT series or Meta’s LLaMA models. Ernie’s permissive use terms are seen as enabling grassroots AI innovation in domains ranging from legal document summarization and contract review to AI-powered translation and code generation.

Beyond GitHub, discussions proliferated on WeChat developer circles, Zhihu forums, and Bilibili explainer videos, indicating a strong grassroots interest in understanding and utilizing Ernie in real-world applications. Several developers reported deploying quantized Ernie versions on low-resource environments, including edge devices and consumer laptops, reflecting the model’s adaptability.

Enterprise and Industrial Integration

The open-source Ernie model also catalyzed adoption within the enterprise sector, particularly among companies already using Baidu Cloud infrastructure. Ernie’s seamless compatibility with Baidu AI Cloud services, including AutoDL, Wenxin Enterprise APIs, and PaddlePaddle, has allowed for fast-tracked deployment into existing business workflows. As a result, multiple enterprise clients began leveraging Ernie for natural language tasks such as intelligent customer service, content generation, chatbot enhancement, and internal knowledge management.

In the financial sector, some banks and fintech firms reported early testing of Ernie for automated report drafting and risk assessment summarization, citing the model’s strength in long-document comprehension. In manufacturing and logistics, Ernie was piloted for technical document analysis and equipment maintenance logs parsing, proving its utility in industry-specific domains.

These developments were reinforced by Baidu’s decision to release pre-optimized enterprise packages, allowing businesses to integrate Ernie with proprietary data while maintaining confidentiality and performance. This is particularly appealing for firms concerned about data residency and compliance with China’s cybersecurity and personal information protection laws.

Academic Collaboration and Research Citations

Academic institutions across China were quick to engage with Ernie as a research asset. Baidu published a detailed model card, training data description, and performance benchmarks, which enabled researchers to evaluate Ernie against public datasets. Institutions such as Tsinghua University, Fudan University, and Shanghai AI Lab incorporated Ernie into NLP courses, workshops, and ongoing research into reasoning, alignment, and multilingual processing.

Early citations in preprints on platforms like arXiv China and CNKI (China National Knowledge Infrastructure) reveal that researchers are exploring Ernie’s performance in Chinese-centric benchmarks such as CMMLU, C-Eval, and MOSS Benchmark, where Ernie has already outperformed several domestic and international LLMs.

Ernie’s release has also sparked comparative studies in multilingual AI and cross-domain transfer learning, further cementing its relevance in cutting-edge research. The availability of fine-tuning scripts and reproducibility guidelines provided by Baidu contributes to the model’s appeal as an academic tool.

Ernie vs DeepSeek: Metrics and Community Perception

In the shadow of DeepSeek’s high-profile release, many comparisons between the two models have emerged. While DeepSeek garnered acclaim for its architectural elegance and clean documentation, Ernie is being praised for its rich knowledge integration, multilingual capacity, and enterprise-grade tooling.

Community sentiment surveys on Zhihu and developer forums show that:

- DeepSeek is favored for lean deployments and research experimentation.

- Ernie is preferred in enterprise-ready environments with Chinese regulatory requirements.

Notably, Ernie’s retrieval-augmented generation (RAG) layer offers an advantage in real-time applications, where response fidelity and document grounding are critical. Enterprises deploying AI for customer-facing applications expressed greater confidence in Ernie’s ability to cite knowledge sources and reduce hallucinations.

Adoption Metrics – Ernie vs DeepSeek

| Metric | Ernie (Open-Source) | DeepSeek |
|---|---|---|
| GitHub Stars | 30,000+ | 26,000 |
| GitHub Forks | 6,500+ | 5,800 |
| Hugging Face Downloads | 320,000+ | 280,000 |
| WeChat Developer Group Mentions | 9,400+ | 8,100 |
| Enterprise Deployment Pilots | 180+ | 150+ |
| Official Fine-Tuning Templates | Yes | Yes |
| Model Quantization Support (INT4) | Yes | Yes |
| RAG-Enhanced Architecture | Yes | No |
| License Type | Permissive | Permissive |

The above metrics illustrate that Ernie has not only matched DeepSeek in initial enthusiasm but, in several respects, exceeded it in industrial relevance and community engagement. These data points strongly suggest that Baidu’s open-source strategy is achieving early traction.

Challenges and Areas of Improvement

Despite its successful entry into the market, Ernie is not without limitations. Some developers have noted that the documentation lacks English translations, which hampers broader international adoption. Others expressed concerns about the computational requirements for full-scale fine-tuning, especially among startups and individual researchers.

Additionally, the model’s performance in low-resource languages and domain-specific tasks in non-Chinese contexts remains underexplored. These shortcomings represent both limitations and opportunities for the broader open-source community to contribute enhancements and fine-tuned derivatives.

The Road Ahead – Challenges and Global Ambitions

While Baidu’s open-source release of the Ernie model has generated considerable momentum in both technical and market terms, sustaining its relevance and extending its impact over the long term will require strategic foresight, continuous investment, and global adaptability. As China’s largest public AI release since DeepSeek, Ernie is more than just a national milestone—it represents a test case for whether a Chinese foundational model can shape the international AI ecosystem. This section explores the key challenges Baidu faces and outlines its potential for global reach and technological leadership.

Sustaining Open-Source Momentum in a Fragmented Ecosystem

A significant challenge facing Baidu is the ongoing maintenance and evolution of Ernie as a truly open-source platform. While the initial release has been met with enthusiasm, sustaining developer engagement over time requires robust documentation, community governance, versioning roadmaps, and timely technical updates. Many open-source AI initiatives falter when companies deprioritize post-release support in favor of proprietary commercial versions. For Ernie to remain viable, Baidu must ensure continuous releases of improved checkpoints, extended token windows, optimized quantizations, and fine-tuning recipes across a variety of use cases.

Moreover, the fragmentation of China’s open-source AI ecosystem poses an inherent structural challenge. With dozens of models—InternLM, ChatGLM, DeepSeek, PanGu, and more—vying for developer attention, long-term adoption will depend on Ernie’s ability to differentiate itself not just through performance but through reliability, extensibility, and community collaboration. Baidu may need to implement a contributor program, governance charter, or even establish a dedicated foundation to steward Ernie’s growth.

Navigating International Deployment and Trust Barriers

As Baidu positions Ernie for international relevance, it will confront geopolitical, regulatory, and perceptual hurdles. While there is growing demand in emerging markets for non-Western AI infrastructure, there is also increased scrutiny over the provenance, security, and governance of AI models—particularly those originating from China. Questions around data integrity, model alignment, and content moderation protocols may arise from governments and institutional buyers outside of China.

To navigate these concerns, Baidu must proactively demonstrate that Ernie meets global safety standards, complies with local AI regulations, and supports transparency initiatives. This includes publishing extensive model documentation (preferably in English), third-party audit reports, fairness evaluations, and safety benchmarking results.

Collaboration with international AI bodies, such as the Partnership on AI, OECD AI Principles consortium, or regional regulatory working groups in Southeast Asia and Africa, may be essential for establishing credibility. If Baidu aims to compete with OpenAI, Meta, and Google not just on capability but on trust, it will have to invest significantly in cross-border AI diplomacy and compliance infrastructure.

Adapting to Rapid Technological Advances in Foundation Models

The global AI field is progressing rapidly, with major players pushing toward increasingly multimodal, agentic, and efficient foundation models. OpenAI's GPT-4o, Google's Gemini 1.5 Pro, and Anthropic's Claude 3 series have set new standards in reasoning, multi-turn memory, and integrated modality. Ernie, while impressive, will need to evolve continuously to keep pace with these benchmarks.

Baidu will have to consider the following architectural and research directions:

- Multimodal Expansion: Integrating image, speech, and video understanding natively into Ernie’s framework.

- Toolformer-style Agent Abilities: Enabling Ernie to autonomously select tools and APIs during inference.

- Memory and Personalization: Incorporating long-term memory and user-aligned agents for real-time, adaptive interactions.

- Sparse Expert Mixture Models: Improving scalability and inference efficiency through mixture-of-experts designs.
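Of the directions listed above, the mixture-of-experts idea is the easiest to show compactly. The sketch below illustrates top-k expert routing, the mechanism that lets such models grow in total parameters while evaluating only a few experts per token. It is a toy illustration with scalar inputs and made-up expert functions, not a description of any Ernie design.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_route(gate_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_logits)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_forward(x, experts, gate_logits, k=2):
    """Weighted sum of the selected experts' outputs; the rest are never run."""
    return sum(w * experts[i](x) for i, w in top_k_route(gate_logits, k))

# Made-up experts; in a real model each would be a feed-forward network.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_logits = [0.1, 2.0, 1.5, -1.0]  # would come from a learned gating layer
print(top_k_route(gate_logits))
print(moe_forward(3.0, experts, gate_logits))
```

With four experts and k set to 2, only half of the expert computation runs for a given input, which is the source of the inference-efficiency gain.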

Staying at the frontier will require not just model innovation but also infrastructure support, including higher compute density, more optimized training pipelines, and greater participation in international ML research challenges. Without such evolution, Ernie risks becoming obsolete in comparison to newer generation models designed with more dynamic capabilities.

Licensing, Monetization, and Commercial Strategy Tradeoffs

The choice to open-source a high-capacity LLM like Ernie also presents tradeoffs in terms of revenue generation and intellectual property (IP) protection. While permissive licensing enhances adoption, it may dilute direct monetization opportunities, especially if third-party companies build commercial applications on Ernie without routing through Baidu’s ecosystem.

To address this, Baidu is likely to pursue a dual monetization strategy:

- Freemium model: Offering open access to core Ernie models for research and limited commercial use, while charging for enhanced versions with higher inference speeds, private deployment options, and proprietary plugins.

- Value-added services: Promoting Baidu Cloud, PaddlePaddle, and API integrations for enterprises seeking turnkey solutions.

Furthermore, maintaining competitive IP positioning in a globally interconnected open-source environment requires careful management of contributions, fork governance, and attribution policies. Baidu must ensure that derivative models do not unintentionally outcompete its own versions or misrepresent their lineage, which could lead to reputational or compliance risks.

Building a Global Community and Cross-Regional Use Cases

To fully realize Ernie’s global potential, Baidu must actively cultivate a global developer community. This involves going beyond Chinese-language platforms and engaging developers on GitHub, Hugging Face, Discord, and Reddit. Contributing to popular open-source initiatives, such as LangChain, OpenAgent, or MLflow, could help Ernie gain exposure and plug into existing AI pipelines globally.

Localized use cases will be key. For instance, Ernie could be fine-tuned to support:

- Judicial document summarization for legal systems operating in Swahili and Arabic.

- Business communication tools in Thai and Vietnamese for regional commerce.

- Healthcare documentation in Portuguese for Brazil and Angola.

By empowering regional developers and institutions to fine-tune and deploy Ernie in context-specific environments, Baidu can make Ernie not only globally available but globally relevant.

A New Chapter for Chinese Open AI

The open-sourcing of Baidu’s Ernie model marks a pivotal moment in the evolution of China’s artificial intelligence landscape. In an environment increasingly shaped by digital sovereignty, global competition, and open-source innovation, Baidu’s move is both a strategic inflection point and a symbolic gesture. It signals that Chinese technology firms are no longer content to play catch-up with Western AI giants—they now intend to help define the standards, tools, and ecosystems that will govern the next era of intelligent computing.

The Ernie release is notable not only for its technical sophistication but also for what it represents: a deepening commitment to transparency, accessibility, and ecosystem collaboration. By making the model freely available for research and selective commercial use, Baidu is encouraging developers, researchers, and enterprises to build upon a shared foundation—accelerating both national and international innovation in the process.

As the earlier sections of this analysis have shown, the early impact of Ernie is already visible. Adoption metrics rival, and in some cases surpass, those of DeepSeek and other leading Chinese models. Enterprises are finding value in its domain-specific capabilities, developers are adapting it for creative use cases, and academic institutions are incorporating it into research pipelines and educational curricula. These indicators suggest that Ernie is not merely a transient release but a long-term pillar in China’s AI strategy.

At the same time, the road ahead is complex. Sustaining momentum in a fragmented AI landscape will require Baidu to commit to continuous technical improvements, robust community governance, and proactive engagement with global standards bodies. Regulatory, geopolitical, and infrastructural challenges must be navigated with care, especially if Baidu intends to position Ernie as a globally trusted alternative to models from OpenAI, Meta, and Google.

Still, the foundations are strong. Ernie’s architecture—marked by knowledge integration, multilingual fluency, and retrieval-augmented generation—places it among the most capable open models to emerge from Asia. With continued iteration and international outreach, Ernie has the potential to become not just a product of Chinese innovation, but a global tool for inclusive AI development.

In this context, Baidu’s open-source Ernie model should not be seen in isolation. It is part of a broader transformation in which Chinese AI firms are evolving from proprietary, centralized systems to more open, modular, and collaborative infrastructures. This mirrors—and in some cases, reshapes—global AI development trends, reinforcing the idea that technological leadership in the 2020s will not be defined solely by scale or compute power, but also by openness, interoperability, and trust.

In conclusion, Ernie’s release is not just a model drop—it is a declaration of intent. It underscores China’s ambition to lead in AI not only through capability, but also through openness and shared progress. If Baidu remains committed to fostering a thriving global community around Ernie, this release could mark the beginning of a new chapter—not just for Chinese AI, but for the global open-source movement itself.
