Audible Embraces AI Voice Tech: How Synthetic Narration Is Reshaping the Audiobook Industry

Over the past decade, audiobooks have emerged as one of the fastest-growing segments within the publishing industry, reflecting the digital transformation of media consumption. As listeners increasingly turn to spoken-word content during commutes, workouts, and daily routines, companies like Audible—a subsidiary of Amazon—have played a pivotal role in popularizing and expanding the audiobook format. Today, the audiobook experience is undergoing another profound shift, not in terms of consumer behavior, but in the technology underpinning its creation. Audible’s decision to incorporate artificial intelligence (AI) into audiobook production marks a new chapter in both storytelling and speech synthesis.

This technological pivot is not occurring in isolation. AI-generated voices, powered by advanced neural networks and machine learning models, have shown rapid progress in recent years. From customer service chatbots to personal virtual assistants, synthetic voice technology has matured from robotic monotones to near-human fluency, capable of conveying subtle emotion, pacing, and inflection. In this context, the audiobook industry—with its reliance on expressive voice narration—is both a natural challenge and an enticing opportunity for AI innovation.

Audible’s move to integrate AI into audiobook narration is, therefore, not just a technological upgrade—it is a strategic recalibration. It responds to growing demand for more content, faster production cycles, and greater accessibility across multiple languages and formats. It also reflects the company’s effort to address escalating production costs and limited narrator availability, especially for niche and long-tail content. With AI voice generation, Audible can potentially scale production while maintaining a consistent quality standard, opening the doors to a broader library and new market segments.

However, this evolution raises complex questions. Can AI truly replace the emotional nuance of human narrators? What does this mean for the thousands of professional voice actors who make a living from audiobook production? Will consumers embrace or reject synthetic narration once they are aware of its origin? And perhaps most critically, how will the integration of AI reshape the creative, ethical, and economic dynamics of audiobook publishing?

These concerns are not unfounded. Voice acting is both an art and a craft, one that extends beyond simply reading text aloud. Human narrators bring characters to life, build tension, establish mood, and forge emotional connections with listeners. The fear among creatives is that automation could commoditize this process, reducing artistic expression to a series of algorithmic predictions and tonal calibrations. Furthermore, the issue of consent looms large: if AI can convincingly mimic a voice, what safeguards exist to prevent misuse or unauthorized cloning?

Audible, for its part, appears aware of these challenges. Early reports suggest that the company is approaching the transition with a dual emphasis on technological advancement and human-centered ethics. Rather than eliminating human narrators, Audible may pursue a hybrid model—where AI handles less prominent works or provides multilingual versions, while flagship titles continue to feature well-known voice actors. Additionally, Audible is reportedly working to establish ethical frameworks that govern the use of synthetic voices, including guidelines for narrator consent and disclosure to listeners.

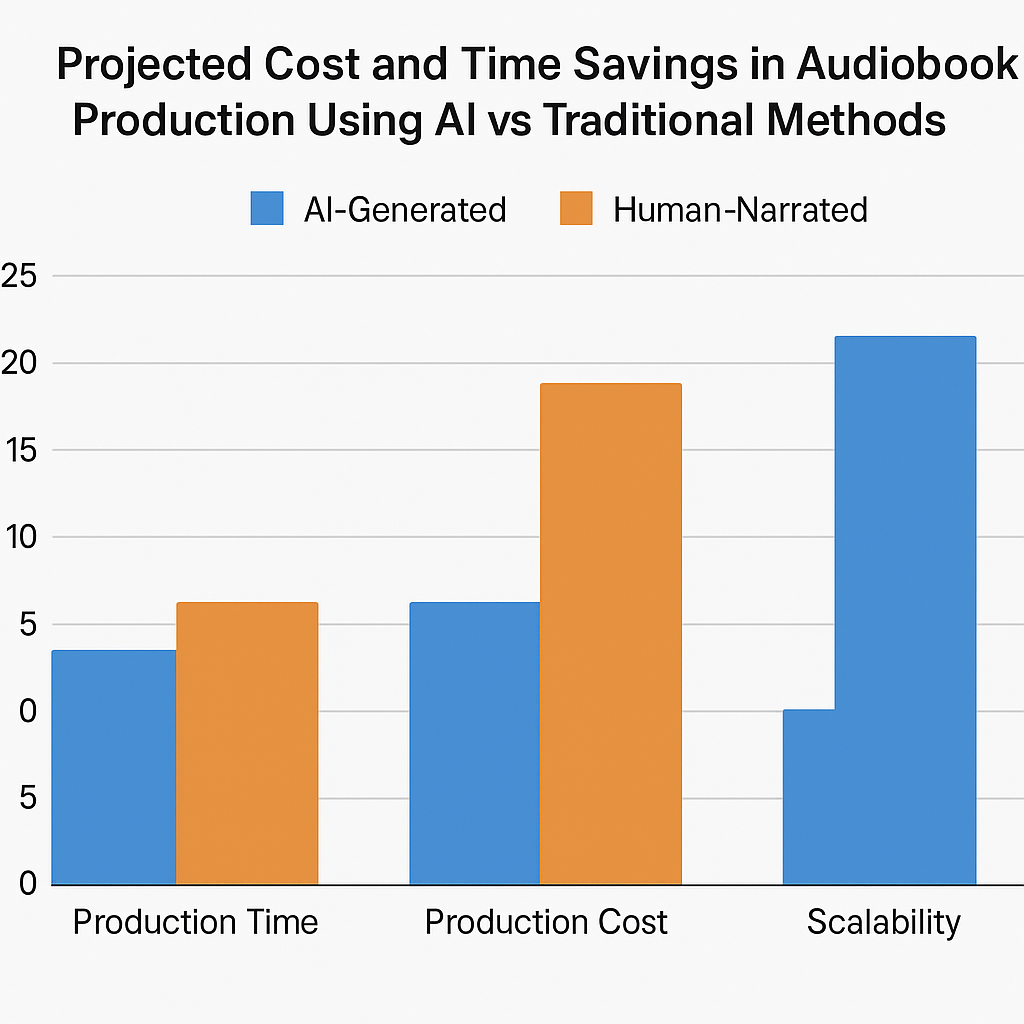

At the same time, the potential benefits of AI narration are difficult to ignore. For one, AI dramatically reduces the time and cost required to produce an audiobook. A traditional audiobook might take weeks of studio time, followed by editing, mastering, and quality control. An AI-narrated version, by contrast, can be generated in hours, with minimal overhead. This opens the door to narrating backlists, indie publications, or educational content that may not justify full-scale production costs.

Moreover, AI brings scalability to multilingual markets. High-quality translation combined with AI voice synthesis allows Audible to expand its catalog across languages and regions more efficiently than ever before. For example, a best-selling English-language title could quickly be released in Spanish, Hindi, or Japanese with AI-generated narration, tailored to local dialects and pronunciation standards. This capability enhances accessibility, not only geographically, but also for individuals with visual impairments or literacy challenges.

In many ways, Audible’s embrace of AI technology represents a broader trend: the convergence of media, machine learning, and automation. As AI continues to penetrate creative industries—from music to film to journalism—the audiobook sector stands as a compelling case study of what happens when art meets algorithms. It is a story about more than voice generation; it is about evolving business models, user preferences, and the enduring value of human creativity in an age of digital replication.

This blog will explore the full dimensions of this development. In the next sections, we will examine how AI voice technology works, the strategic decisions behind Audible’s AI adoption, the broader industry impact on narrators and publishers, the ethical and legal implications of synthetic narration, and the future landscape of audiobooks in a machine-assisted world. Through this analysis, we aim to understand not only what is changing, but why it matters—and for whom.

As artificial intelligence becomes a co-author in the way stories are told, the question is no longer whether AI will shape the future of audiobooks, but how thoughtfully and responsibly that future will be written.

How AI Voice Technology Works in Audiobook Production

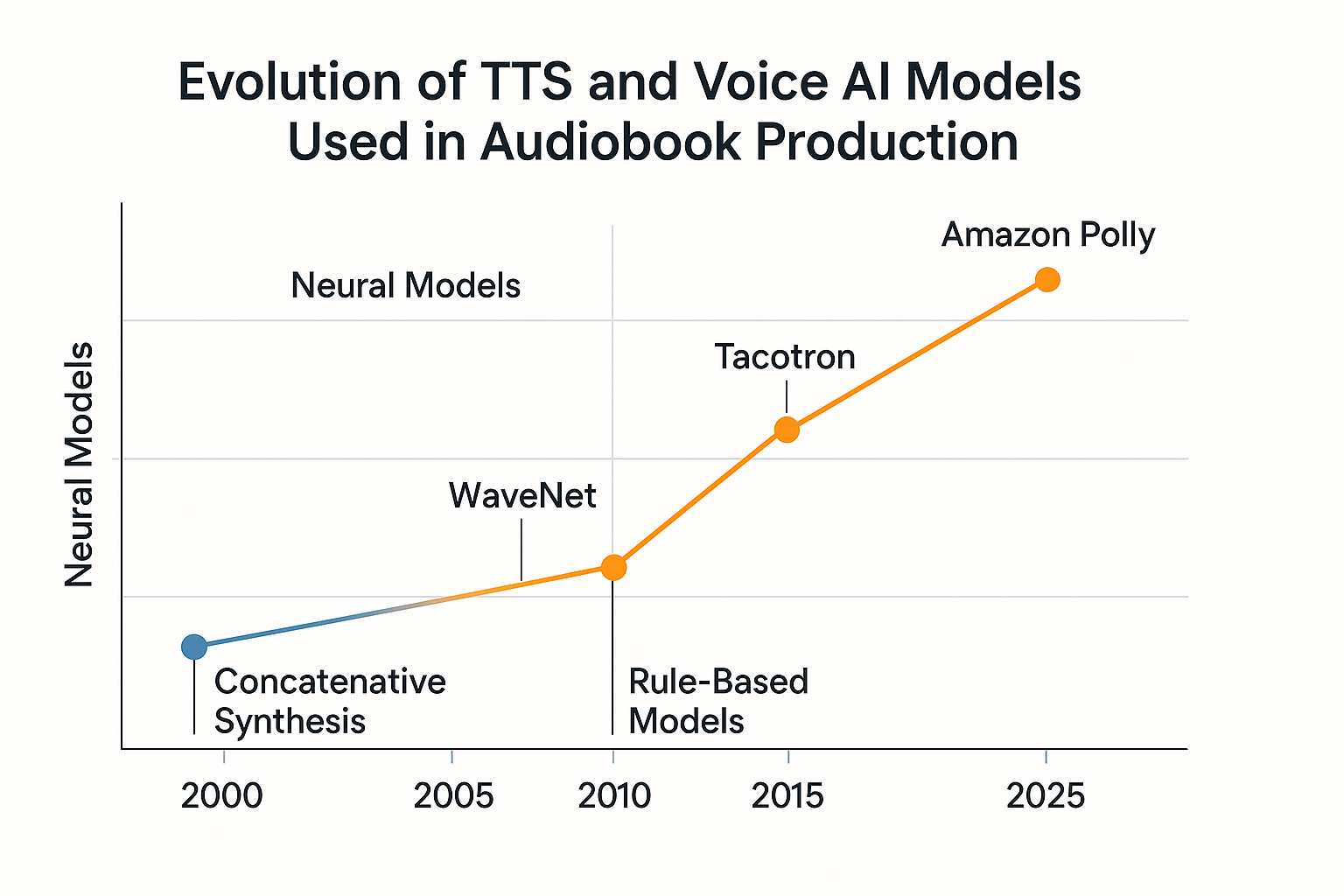

The use of artificial intelligence (AI) in audiobook production is a multifaceted process underpinned by recent advancements in natural language processing (NLP), speech synthesis, and machine learning. At the heart of this technological evolution lies the field of text-to-speech (TTS) synthesis, which transforms written text into spoken language through computational means. While traditional TTS systems have existed for decades, their robotic and unnatural intonations limited their applicability to commercial-grade audio content. Recent breakthroughs, however, have yielded neural TTS models capable of mimicking human speech patterns with remarkable fidelity and nuance. These models now form the foundation of AI-narrated audiobooks.

From Rule-Based Systems to Deep Learning

Historically, TTS engines employed concatenative synthesis, wherein segments of recorded speech were pieced together to form sentences. Though functional, this method often produced choppy, inconsistent outputs. It lacked flexibility, particularly in conveying emotion, tone shifts, and context-aware pronunciation. The advent of statistical parametric synthesis, and more significantly, deep learning-based models, marked a transformative leap.
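To make the idea of piecing recorded segments together concrete, here is a minimal sketch of concatenative synthesis. The unit inventory, the sample values, and the crossfade length are all invented for illustration. A production system would select from thousands of recorded units rather than two.

```python
# Toy concatenative synthesis: join pre-recorded units with a short crossfade.
# The inventory and sample values below are hypothetical.

def crossfade_concat(units, overlap):
    """Concatenate sample lists, linearly crossfading `overlap` samples at each join."""
    out = list(units[0])
    for unit in units[1:]:
        n = min(overlap, len(out), len(unit))
        start = len(out) - n
        for i in range(n):
            w = (i + 1) / (n + 1)  # weight ramps toward the incoming unit
            out[start + i] = (1 - w) * out[start + i] + w * unit[i]
        out.extend(unit[n:])
    return out

# Each "unit" stands in for a short recorded speech fragment.
UNIT_INVENTORY = {
    "he": [0.0, 0.2, 0.4, 0.4],
    "llo": [0.6, 0.6, 0.3, 0.0],
}

def synthesize(unit_names, overlap=2):
    return crossfade_concat([UNIT_INVENTORY[name] for name in unit_names], overlap)

samples = synthesize(["he", "llo"])
```

Because the output can only be built from what was recorded, any mismatch in pitch or energy at a join is audible, which is one way to picture the choppiness described above.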

Modern AI voice systems are typically built using sequence-to-sequence architectures powered by recurrent neural networks (RNNs) or transformers. Two foundational innovations have emerged as cornerstones in the field: Tacotron and WaveNet.

- Tacotron, developed by researchers at Google, introduced an end-to-end framework that maps textual input to a spectrogram, a time-frequency representation of the audio signal. It handles text analysis, pronunciation modeling, and prosody generation in a unified pipeline.

- WaveNet, developed by DeepMind, is a generative model that produces raw audio waveforms sample by sample. When conditioned on spectrograms, it acts as a neural vocoder. Unlike conventional vocoders, which often produce buzzy or metallic tones, it captures the minute details of speech, enabling natural-sounding results.

Together, these models form a two-stage process: first, the linguistic features of a sentence are analyzed and converted into a spectrogram using Tacotron; then WaveNet, or a later neural vocoder such as WaveGlow or HiFi-GAN, reconstructs the spectrogram into speech.
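The two-stage structure can be sketched as a pair of functions with an intermediate representation passed between them. This is only a toy under stated assumptions: `toy_acoustic_model` and `toy_vocoder` are hypothetical stand-ins that use pitch values and sine bursts, not the actual Tacotron or WaveNet networks, and the sample rate and frame size are arbitrary.

```python
import math

SAMPLE_RATE = 8000    # samples per second (toy value)
FRAME_SAMPLES = 80    # samples rendered per frame (toy value)

def toy_acoustic_model(text):
    """Stage 1 stand-in: map each non-space character to one 'frame' (a pitch in Hz).
    A real acoustic model predicts spectrogram frames instead."""
    return [100.0 + (ord(ch) % 32) * 5.0 for ch in text if not ch.isspace()]

def toy_vocoder(frames):
    """Stage 2 stand-in: render each frame as a short sine burst.
    A real neural vocoder reconstructs a waveform from spectrogram frames."""
    waveform = []
    for pitch_hz in frames:
        for n in range(FRAME_SAMPLES):
            waveform.append(math.sin(2 * math.pi * pitch_hz * n / SAMPLE_RATE))
    return waveform

def synthesize(text):
    frames = toy_acoustic_model(text)  # text -> intermediate representation
    return toy_vocoder(frames)         # intermediate representation -> audio samples

audio = synthesize("Hi there")
```

The useful property of this split is that either stage can be swapped independently, which is why newer vocoders can replace the second stage without retraining the first.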

Voice Cloning and Neural Voice Avatars

A particularly noteworthy innovation within AI voice technology is voice cloning, also known as voice replication or neural voice transfer. This approach allows an AI model to generate synthetic speech that sounds like a specific person. By training on as little as a few minutes of recorded audio, the system can create a "voice avatar", which replicates the speaker’s tone, pitch, accent, and even idiosyncrasies in articulation.

Providers such as ElevenLabs and Resemble AI have commercialized this capability, and neural TTS services such as Amazon Polly offer related synthetic voices, enabling the production of audiobooks with highly expressive and customized narration styles. Some of these services allow authors or celebrities to license their voices for book narration, creating unique branded experiences. Others support the development of multilingual voice avatars capable of reading a single text in multiple localized versions.

This technology is particularly valuable in audiobook production, where brand consistency, emotional delivery, and voice familiarity contribute significantly to listener engagement.

Training, Fine-Tuning, and Human-in-the-Loop Systems

Training an AI model for audiobook narration typically involves large datasets comprising text-audio pairs. These datasets are often curated from professionally narrated audiobooks, podcasts, and open speech corpora. During training, the model learns to map phonemes, syntax, and punctuation to corresponding prosodic features—such as stress, rhythm, and intonation.

However, raw training is only the beginning. To achieve high-quality narration:

- Fine-tuning is employed on domain-specific texts, like fiction, nonfiction, or technical manuals.

- Emotion conditioning allows the AI to adjust its tone dynamically—for example, reading a suspenseful scene with urgency or a dialogue with subtle shifts in character voices.

- Human-in-the-loop (HITL) workflows involve voice engineers, linguists, and editors who review and correct AI outputs. This iterative feedback loop ensures that the narration adheres to content-specific standards and avoids mispronunciations, unnatural pauses, or tonal inconsistencies.

While the long-term vision may be full automation, current implementations often rely on this semi-automated approach to maintain quality parity with professional human narrators.
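A human-in-the-loop review step might be sketched as a simple triage function that routes generated segments either to automatic approval or to a reviewer. The `confidence` score, the `hard_words` watch list, and the 0.8 threshold are assumptions made for this example and do not describe any particular vendor's pipeline.

```python
from dataclasses import dataclass, field

@dataclass
class Segment:
    text: str
    confidence: float                  # hypothetical model-reported score in [0, 1]
    notes: list = field(default_factory=list)

def triage(segments, hard_words, threshold=0.8):
    """Split segments into (approved, needs_review) and annotate why review is needed."""
    approved, review = [], []
    for seg in segments:
        words = {w.strip(".,!?;:\"'").lower() for w in seg.text.split()}
        flagged = sorted(words & hard_words)
        if seg.confidence < threshold or flagged:
            seg.notes = [f"check pronunciation: {w}" for w in flagged]
            if seg.confidence < threshold:
                seg.notes.append("low confidence")
            review.append(seg)
        else:
            approved.append(seg)
    return approved, review

segments = [
    Segment("The rain had stopped.", 0.95),
    Segment("Siobhan opened the door.", 0.92),
    Segment("He whispered... then nothing.", 0.55),
]
approved, review = triage(segments, hard_words={"siobhan"})
```

In a loop like this, corrections from reviewers would typically be stored (for example as pronunciation overrides) so the same issue is not flagged again on the next pass.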

The Role of Large Language Models in Narration Contextualization

Another critical component in AI audiobook narration is contextual understanding, which enables the system to determine how to modulate voice delivery depending on the content type. For instance, a sarcastic remark, a rhetorical question, or a poetic line all require different vocal treatments.

Large language models (LLMs), such as GPT-4, PaLM, and Claude, are increasingly used to assist in this process. They can:

- Analyze paragraphs for emotional cues and genre conventions.

- Suggest narrative pacing based on sentence structure and story arc.

- Flag potential ambiguities in pronunciation, especially with proper nouns or culturally specific references.

This integration of LLMs with TTS ensures that the AI does not merely read text—it interprets it, albeit in a constrained manner, thus enhancing the listener's immersive experience.
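One plausible way to hand contextual cues to a TTS engine is to express them as SSML markup. The sketch below assumes this approach; `classify_cue` is a crude keyword rule standing in for an LLM call, and the cue names and prosody mapping are hypothetical. The `speak`, `prosody`, and `break` elements themselves are standard SSML.

```python
from xml.sax.saxutils import escape

# Hypothetical mapping from a detected cue to SSML prosody settings.
PROSODY = {
    "suspense": {"rate": "slow", "pitch": "low"},
    "excited": {"rate": "fast", "pitch": "high"},
    "neutral": {"rate": "medium", "pitch": "medium"},
}

def classify_cue(sentence):
    """Stand-in for an LLM call: simple punctuation and keyword rules."""
    lowered = sentence.lower()
    if sentence.endswith("!"):
        return "excited"
    if any(word in lowered for word in ("shadow", "silence", "crept")):
        return "suspense"
    return "neutral"

def to_ssml(sentences, pause_ms=300):
    """Wrap each sentence in a prosody element and add a pause after it."""
    parts = ["<speak>"]
    for sentence in sentences:
        p = PROSODY[classify_cue(sentence)]
        parts.append(
            f'<prosody rate="{p["rate"]}" pitch="{p["pitch"]}">{escape(sentence)}</prosody>'
        )
        parts.append(f'<break time="{pause_ms}ms"/>')
    parts.append("</speak>")
    return "".join(parts)

ssml = to_ssml(["A shadow crept along the wall.", "Run!"])
```

Keeping the interpretation step separate from synthesis means an editor can inspect or override the markup before any audio is generated.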

Synthetic Voice Libraries and Real-Time Generation

In practice, audiobook platforms like Audible deploy voice libraries that offer a range of synthetic narrators categorized by gender, age, accent, and delivery style. These libraries allow publishers to select or request a suitable voice for a given title.
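A voice library of this kind can be pictured as a small catalog that is filtered by attribute. The sketch below is illustrative only: the `SyntheticVoice` fields mirror the categories named above, while the voice IDs, attribute values, and the `find_voices` helper are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SyntheticVoice:
    voice_id: str
    gender: str
    age_band: str
    accent: str
    style: str

# Hypothetical catalog entries.
VOICE_LIBRARY = [
    SyntheticVoice("voice-a", "female", "adult", "en-US", "warm"),
    SyntheticVoice("voice-b", "male", "adult", "en-GB", "calm"),
    SyntheticVoice("voice-c", "female", "senior", "en-GB", "calm"),
    SyntheticVoice("voice-d", "male", "young-adult", "en-US", "energetic"),
]

def find_voices(library, **criteria):
    """Return voices whose attributes match every keyword criterion exactly."""
    unknown = set(criteria) - set(SyntheticVoice.__dataclass_fields__)
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    return [
        voice for voice in library
        if all(getattr(voice, key) == want for key, want in criteria.items())
    ]

matches = find_voices(VOICE_LIBRARY, accent="en-GB", style="calm")
```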

Once a script is finalized and processed through the AI pipeline, the narration is generated in real time or in batch mode. Post-processing layers may be added for:

- Ambient soundscapes

- Background music

- Pauses and chapter markers

These enhancements help narrow the gap between human and AI narration, bringing the listening experience closer to that of a traditional production.
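As a small example of the post-processing step, chapter markers can be derived from the durations of the generated chapters plus any inserted pauses. The durations and the two-second pause below are made-up values, and the function names are hypothetical.

```python
def chapter_markers(chapter_durations, pause_between=2.0):
    """Return (start_times, total_length) in seconds, with a fixed pause between chapters."""
    starts = []
    t = 0.0
    for index, duration in enumerate(chapter_durations):
        starts.append(t)
        t += duration
        if index < len(chapter_durations) - 1:
            t += pause_between  # no trailing pause after the final chapter
    return starts, t

def format_timestamp(seconds):
    """Format seconds as HH:MM:SS, truncating fractions of a second."""
    whole = int(seconds)
    return f"{whole // 3600:02d}:{(whole % 3600) // 60:02d}:{whole % 60:02d}"

starts, total = chapter_markers([600.0, 450.5, 720.0])
labels = [format_timestamp(s) for s in starts]
```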

In summary, AI voice technology in audiobook production is the result of interlinked advancements in speech synthesis, neural networks, and contextual text understanding. With continued refinement and integration of ethical safeguards, these systems are redefining the boundaries of scalable, high-quality narration. As we proceed to examine Audible’s strategic approach to adopting this technology, it becomes clear that AI is not merely a tool for efficiency—it is a potential co-narrator of the stories we consume.

Audible’s Strategy – Balancing Automation and Authenticity

As Audible embarks on its journey into AI-narrated audiobook production, the company is navigating a complex terrain that requires a delicate balance between technological efficiency and the artistic expectations of its audience. While artificial intelligence offers unprecedented scalability and cost reductions, the core value of audiobooks has long been rooted in their emotional resonance and the nuanced performances of human narrators. Audible’s strategic response is not to replace human creativity but to complement and extend it through responsible and selective deployment of synthetic voice technologies.

Strategic Integration, Not Full Replacement

Audible’s approach signals a clear intent: AI will not be used to universally replace human narrators but to strategically enhance production capabilities where appropriate. This is particularly applicable in the context of back-catalogs, long-tail content, educational materials, and low-margin titles. These are areas where full-scale human narration is economically unfeasible or logistically impractical. By employing AI in these specific domains, Audible can broaden its content offerings without sacrificing quality for its flagship productions.

Moreover, AI is expected to play a supplementary role in hybrid workflows. For example, AI might be used to pre-narrate drafts, which human narrators then refine or correct. This method drastically shortens the overall production timeline while retaining human authenticity in the final output. Such hybrid strategies not only preserve the employment of skilled narrators but also align with consumer expectations of emotional depth and vocal realism.

Audible’s strategic restraint is also influenced by a recognition of its brand positioning. Known for high production values and celebrity-voiced performances, Audible understands that its audience expects premium experiences. As such, the company is unlikely to apply synthetic narration to best-selling titles or exclusive originals—at least in the short term. Instead, AI is being positioned as a force multiplier, not a wholesale disruptor.

Investments in Ethical AI and Consent Frameworks

An integral part of Audible’s strategy is the incorporation of ethical safeguards and consent mechanisms within the AI voice production pipeline. Voice cloning technology, while powerful, raises significant concerns regarding ownership and potential misuse. Audible is reportedly developing policies to ensure that all synthetic voices used in its productions are either derived from public domain voice models or created in collaboration with—and with the consent of—voice talent.

In practice, this involves licensing agreements with narrators who wish to create AI replicas of their voices. These narrators may then receive royalties or usage-based compensation when their digital avatars are used in productions. This arrangement offers narrators a new revenue stream and provides Audible with scalable voice assets that maintain continuity and quality across multiple projects.

Additionally, Audible is believed to be considering voice watermarking and other verification techniques to distinguish AI-generated content from human narration. This transparency initiative aims to maintain listener trust and prevent backlash from audiences who may feel misled by the synthetic nature of a given performance. Disclosure policies will likely become standard practice, where audiobook descriptions specify whether AI was used in full or in part.

Selective Use Cases: Accessibility, Localization, and Archival

Audible’s strategic roadmap reveals several use cases where AI is particularly well-suited and where automation can add genuine value without undermining authenticity.

- Accessibility Enhancements

AI-generated audiobooks can provide an efficient solution for making a broader range of texts accessible to the visually impaired or those with reading difficulties. Titles that would otherwise never receive narration due to budget constraints can now be made available in audio form, advancing Audible’s inclusivity mission.

- Multilingual Narration and Localization

AI enables rapid generation of multilingual versions of audiobooks. With synthetic voices trained on regional accents and dialects, Audible can scale its presence in non-English-speaking markets. For instance, a popular English title can be rendered in Spanish, French, or Mandarin using culturally contextualized AI voices, without waiting for manual translations and new narration cycles.

- Archiving and Re-issuing Backlist Titles

An enormous number of backlist titles have never been released in audio format. AI provides a viable pathway to revive these works. By converting backlist content into narrated audio, Audible not only extracts new value from existing assets but also enriches its offering for niche readers and genre enthusiasts.

Each of these use cases reinforces a broader theme: AI is not replacing human creativity but rather democratizing access to spoken-word content. The goal is not uniform automation but strategic augmentation.

Maintaining Artistic Integrity and Listener Trust

Perhaps the most challenging aspect of Audible’s strategy is preserving the intangible qualities that make audiobooks emotionally compelling. Human narration is not merely a mechanical recitation—it is an interpretive act, shaped by pacing, breath, inflection, character voice differentiation, and dramatic timing. Audible is acutely aware that many listeners form emotional bonds with narrators, treating them as co-authors of the story experience.

To this end, Audible is expected to maintain a dual production model, where high-impact titles continue to be narrated by professionals, while AI is deployed selectively. This approach avoids alienating loyal customers and preserves the artistry that defines the medium. Furthermore, Audible is investing in research to enhance the emotional range of AI narrators. Early versions of AI narration were flat and monotonous, but newer models—trained on expressive datasets—are beginning to capture more realistic and context-sensitive emotions.

Yet Audible remains cautious. There is acknowledgment within the company that overly ambitious deployment of AI could damage its reputation or provoke resistance from creators and consumers alike. By choosing transparency and incremental adoption, Audible is positioning itself not as an automator, but as a facilitator of innovation.

Collaborations and Industry Signaling

Audible’s strategic direction is also evident in its external partnerships. The company has reportedly collaborated with AI voice platforms and TTS specialists, including startups and Amazon’s own machine learning divisions. These collaborations enable Audible to remain at the forefront of technological capabilities while retaining control over creative and editorial standards.

Moreover, Audible’s actions are being closely watched by the broader publishing industry. As one of the largest distributors of audiobooks globally, Audible’s decisions are likely to set precedent. Its gradual, ethics-centered rollout sends a clear signal: the future of audiobook narration lies not in a wholesale replacement of human talent, but in a new paradigm where AI and artistry coexist.

In sum, Audible’s strategy reflects a nuanced understanding of both the capabilities and limitations of AI voice technology. By blending automation with human oversight, and efficiency with ethical considerations, the company is forging a sustainable model for the future of audiobook production. As AI becomes a more prominent part of the storytelling ecosystem, it is Audible’s commitment to authenticity that may well define its success.

Industry Impact – Narrators, Publishers, and New Opportunities

The integration of artificial intelligence into audiobook production is poised to deliver far-reaching consequences for the audiobook ecosystem, impacting not only how content is created but also how value is distributed across its key stakeholders. As Audible and other major platforms move toward AI-augmented narration models, the ramifications are being felt most acutely by professional voice actors, independent and corporate publishers, and an emerging class of digital content entrepreneurs. While AI introduces significant efficiency and scalability, it also raises critical questions about labor displacement, creative control, and market accessibility.

Implications for Professional Narrators and Voice Actors

Among the most directly affected constituencies in this shift are audiobook narrators. For decades, narrators have played a pivotal role in shaping the auditory experience of storytelling, bringing emotion, interpretation, and personal artistry to every sentence. The rise of AI threatens to automate aspects of this performance, particularly in genres or titles where the cost of human narration may outweigh expected returns.

Narrators are responding to this development with a mix of concern and adaptation. Voice actor unions and advocacy groups have begun to vocalize objections to the unlicensed use of voice data for model training, urging clearer regulations around consent and compensation. There are increasing calls for "AI voice rights", which would legally protect a narrator’s voice from unauthorized cloning or commercial replication. These developments echo similar discussions unfolding in adjacent industries such as film and video game development, where AI-generated likenesses and voices are also raising ethical and legal concerns.

Despite these challenges, AI may also unlock new income streams for narrators. By licensing their voices to be used in synthetic form—under carefully negotiated contracts—narrators can monetize their work in ways not previously possible. Some may offer multiple voice models differentiated by tone or genre, creating a portfolio of “digital selves” that publishers can license for various projects. In this way, AI transitions from being a threat to becoming a tool for passive revenue generation and broader market reach.

Disruption and Adaptation in Publishing

For publishers—both traditional and independent—the arrival of AI in audiobook production represents a structural shift in production economics. The traditional audiobook publishing process can be time-intensive and expensive, often involving casting, studio recording, post-production editing, and final mastering. AI significantly reduces both time and cost, making it feasible to produce audiobooks for a wider array of titles, including niche genres, academic publications, self-help guides, and regional literature that might not otherwise receive attention.

Large publishing houses may use AI to accelerate the time-to-market for new releases, providing simultaneous print and audio versions upon launch. For example, nonfiction works or technical manuals that require frequent updates can be quickly revised and re-released with minimal additional cost. Meanwhile, independent authors and small presses—who often face budgetary constraints—stand to benefit the most. AI levels the playing field by allowing these entities to produce professionally narrated audiobooks without the need for expensive studio arrangements.

Moreover, publishers are exploring AI-generated multilingual narration to penetrate non-English-speaking markets. By leveraging neural TTS models trained on specific linguistic patterns, publishers can offer localized audiobook experiences that maintain pronunciation integrity and cultural nuance. This ability to produce high-quality translations and audio output at scale presents a competitive advantage for firms seeking global expansion.

The Rise of Self-Publishing and Creator Empowerment

One of the most transformative implications of AI audiobook production is the democratization of content creation. Self-published authors, who previously faced high barriers to entering the audiobook market, now have tools to produce and distribute narrated content with minimal investment. AI narration platforms—many of which offer integrated editing and publishing workflows—allow authors to select from a range of synthetic voices, adjust delivery tone, and generate audiobooks within days.

This trend is expected to fuel a new wave of independent audio-first publishing, where creators design content specifically for spoken-word formats. Genres such as serialized fiction, short stories, guided meditation, and educational content are particularly well-suited to AI-driven production. These formats require rapid iteration and flexible production models, attributes that align perfectly with the strengths of synthetic narration.

In addition, this accessibility catalyzes the growth of niche content communities. For example, books on indigenous knowledge, regional folklore, or underrepresented languages can now be produced and shared without relying on centralized publishing entities. AI effectively reduces the economic gatekeeping historically associated with audiobook creation, opening doors to a more diverse and inclusive auditory literature ecosystem.

New Business Models and Industry Innovation

The rise of AI in audiobook production is fostering innovation beyond content creation. New business models are emerging around voice licensing, narration-as-a-service, and AI-powered content marketplaces. Startups and technology vendors are developing platforms that allow users to upload manuscripts, choose synthetic voices, and distribute completed audiobooks directly to consumers or platforms like Audible, Spotify, or Apple Books.

Furthermore, companies are exploring voice personalization services, where listeners can select their preferred narrator—human or AI—for a particular book. This personalization may eventually extend to tone, speed, accent, and even gender, allowing for highly individualized listening experiences. These features reflect broader trends in AI and media consumption, where user agency and interactivity are increasingly central to product design.

Audiobook production may also integrate AI-powered analytics to optimize performance. For instance, listening behavior data—such as chapter drop-off rates, repeated segments, or user bookmarks—could be fed back into AI narration models to inform pacing and delivery style. This feedback loop turns audiobook consumption into a data-driven process, enabling continuous improvement in AI performance and listener satisfaction.
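A chapter drop-off rate of the kind mentioned above could be computed roughly as follows. This sketch assumes listeners progress through chapters in order and that the only data available is the last chapter each listener completed. The sample data is invented.

```python
from collections import Counter

def dropoff_rates(last_completed, total_chapters):
    """For each chapter, the share of listeners who started it but did not finish it.

    `last_completed` holds, per listener, the number of the last chapter
    they finished (0 means they did not finish chapter 1).
    """
    counts = Counter(last_completed)
    rates = {}
    started = len(last_completed)
    for chapter in range(1, total_chapters + 1):
        dropped = counts[chapter - 1]
        rates[chapter] = dropped / started if started else 0.0
        started -= dropped
    return rates

# Hypothetical listening data for a three-chapter title.
rates = dropoff_rates([3, 3, 1, 0, 2, 3, 1, 3], total_chapters=3)
worst_chapter = max(rates, key=rates.get)
```

A chapter with an unusually high rate would be a candidate for re-generating with different pacing, although drop-off can also reflect the content itself rather than the narration.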

Anticipating Industry-Wide Shifts

While the benefits of AI-driven audiobook production are substantial, the industry is not without risk. The potential for oversaturation, quality dilution, and listener fatigue from synthetic narration is real. Additionally, as more content is generated with AI, distinguishing high-quality productions from mass-produced ones may become increasingly difficult for consumers.

Regulatory and labor-related challenges are also likely to intensify. Governments and unions may push for stronger protections to ensure fair compensation and prevent exploitation of creative professionals. Transparent disclosure practices—stating whether a book is AI-narrated—may become not just a courtesy but a legal requirement.

Nevertheless, the industry is clearly moving toward a future where AI is an integral component of audiobook production. As with any disruptive technology, the outcome will depend heavily on how stakeholders choose to adapt. Those who embrace ethical innovation, creative collaboration, and inclusive access are likely to thrive in the new paradigm.

In conclusion, the application of AI in audiobook production is reshaping the industry at every level—from voice talent and publishing workflows to business models and listener expectations. While challenges remain, the transition also brings immense opportunity for creators, consumers, and companies willing to innovate responsibly.

Ethical, Creative, and Legal Dimensions of AI-Narrated Audiobooks

The deployment of artificial intelligence in audiobook narration presents a host of ethical, creative, and legal considerations that transcend the technological benefits it offers. As the boundaries between human and machine-generated expression become increasingly blurred, Audible and the broader publishing industry must grapple with how to preserve the integrity of storytelling while respecting intellectual property rights, creative labor, and listener trust. This section explores the multifaceted challenges that accompany the rise of AI-narrated content and the frameworks being proposed or implemented to mitigate them.

Ethical Challenges: Consent, Transparency, and Fair Use

One of the most pressing ethical issues in the adoption of AI narration is the question of consent, particularly when it comes to voice cloning. The use of a narrator's vocal characteristics to train AI models without explicit permission has already sparked backlash within the voice acting community. This is especially problematic when voice data is scraped from publicly available sources—such as previous audiobooks, podcasts, or interviews—without informing or compensating the original speaker.

To address this, industry stakeholders are calling for standardized consent frameworks that clearly delineate how voices are collected, stored, modeled, and deployed. Narrators should have the ability to opt in or out of AI training processes and should be offered fair compensation if their voice data is used to generate commercial content. Some companies have begun to implement voice licensing agreements, under which narrators are paid royalties whenever their digital voice is used in a production. Such agreements not only protect the narrator’s rights but also offer them new revenue opportunities in a rapidly evolving market.

Equally critical is the issue of transparency. Listeners should be informed when a narration is AI-generated. This disclosure can be made in the audiobook’s metadata, cover notes, or introductory audio segments. Without such transparency, audiences may feel misled, especially if they discover after the fact that what they perceived as an authentic human performance was synthesized by a machine. Maintaining transparency is essential to preserving consumer trust and avoiding reputational damage.
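Disclosure in metadata could take a form like the sketch below. The field names, the three narration types, and the disclosure wording are assumptions for illustration and do not reflect any platform's actual schema.

```python
import json

# Hypothetical disclosure strings keyed by narration type.
DISCLOSURE_TEXT = {
    "human": "This audiobook is narrated by a human performer.",
    "ai": "This audiobook is narrated by an AI-generated voice.",
    "hybrid": "This audiobook combines human narration with AI-generated voice.",
}

def build_metadata(title, narration_type, narrator=None):
    """Build a metadata record that always carries an explicit narration disclosure."""
    if narration_type not in DISCLOSURE_TEXT:
        raise ValueError(f"narration_type must be one of {sorted(DISCLOSURE_TEXT)}")
    return {
        "title": title,
        "narration": {
            "type": narration_type,
            "narrator": narrator,
            "disclosure": DISCLOSURE_TEXT[narration_type],
        },
    }

record = build_metadata("Example Title", "ai")
print(json.dumps(record, indent=2))
```

Rejecting unknown narration types at build time means a title cannot be published with the disclosure field silently left blank.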

Additionally, the concept of fair use is being tested in new ways. Legal scholars and rights organizations are debating whether using publicly available voice samples to train AI models constitutes infringement or falls under the doctrine of fair use. Until clear legal precedents are established, companies operating in this space face substantial uncertainty.

Creative Considerations: Emotion, Interpretation, and Authenticity

Beyond the legal and ethical realm lies a creative dilemma: Can AI truly replicate the artistry of a human narrator? Audiobook narration is not merely a mechanical function of reading aloud; it is a performative act that requires interpretation, pacing, emotional modulation, and character differentiation. The best narrators bring stories to life through their mastery of tone, rhythm, and dramatic timing.

AI voice models, even in their most advanced forms, still struggle with the subtle nuances of emotional expression. While they can mimic sadness, excitement, or suspense to a degree, these expressions often lack the dynamic range and spontaneity of human performance. For instance, narrating a tense courtroom scene or conveying the heartbreak of a personal tragedy requires more than tonal inflection—it demands empathy, instinct, and cultural literacy, qualities that remain elusive for machine-generated voices.

Furthermore, the creative process itself is diminished when narration becomes a product of computation rather than interpretation. The collaboration between authors and narrators often yields insights that enhance the storytelling experience. By automating this element, AI may streamline production but risks reducing narration to a formulaic, lifeless output. This concern is particularly acute in fiction, where narrative immersion hinges on the listener’s emotional engagement with the characters and the voice that embodies them.

Some propose a hybrid creative model, wherein human narrators use AI tools to augment their work. For example, narrators could use AI to prototype different delivery styles before committing to a full recording, or to generate dialogue in supporting characters’ voices while focusing their performance on the protagonist. In this way, AI functions as a creative assistant rather than a replacement, preserving artistic integrity while enhancing productivity.

Legal Landscape: Copyright, Deepfake Risks, and Regulatory Gaps

The legal framework governing AI-narrated audiobooks is still in its infancy. Several critical questions remain unresolved, such as: Who owns the rights to an AI-generated performance? Can a synthetic voice be copyrighted? What constitutes voice theft or impersonation in a commercial context?

Current intellectual property laws were not designed with synthetic content in mind. In many jurisdictions, copyright protection applies to “original works of authorship” created by humans. This creates a legal gray zone for AI-generated narrations. Some argue that the creators of the AI models, or the operators who guide the generation process, should hold the rights. Others contend that the underlying AI voice is merely a tool, and that no copyrightable expression exists unless human creativity is directly involved.

Another looming concern is the potential for deepfake voice abuse. As AI voice models become increasingly lifelike, malicious actors could use them to impersonate public figures, defraud consumers, or generate misleading content. For audiobook platforms, this presents both reputational and legal risks. Audible and its peers will need to implement authentication protocols, such as audio watermarking or traceable digital signatures, to prevent the unauthorized use of cloned voices.
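To make the idea of a traceable signature more concrete, here is a minimal Python sketch that binds an audio file to a licensed voice identifier using an HMAC tag from the standard library. This is a simplified stand-in, not a description of any platform's actual protocol: HMAC uses a shared secret, whereas a production system would more likely rely on asymmetric digital signatures and an in-audio watermark that survives re-encoding. The function names and the voice_id parameter are hypothetical.

```python
import hashlib
import hmac


def sign_audio(audio_bytes: bytes, voice_id: str, secret_key: bytes) -> str:
    """Return a hex HMAC-SHA256 tag tying the audio content to a voice ID."""
    # Hash the audio first so the signed message stays small for large files.
    audio_digest = hashlib.sha256(audio_bytes).digest()
    message = voice_id.encode("utf-8") + b"\x00" + audio_digest
    return hmac.new(secret_key, message, hashlib.sha256).hexdigest()


def verify_audio(audio_bytes: bytes, voice_id: str, secret_key: bytes, tag: str) -> bool:
    """Check that the tag matches this exact audio and voice ID."""
    expected = sign_audio(audio_bytes, voice_id, secret_key)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)
```

Under this scheme, any change to the audio bytes or any attempt to attribute the file to a different voice makes verification fail, which is the property a platform would need in order to flag unauthorized use of a cloned voice.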

Policymakers are beginning to take notice. Several countries have introduced or are considering legislation aimed at regulating synthetic media. These proposals include mandatory disclosure of AI-generated content, criminal penalties for voice impersonation, and new forms of copyright for digital likenesses. While these laws are still evolving, the audiobook industry must remain proactive in anticipating and aligning with emerging standards.

Cultural and Societal Implications

Finally, the rise of AI-narrated audiobooks raises broader cultural questions about the role of automation in storytelling. Narration, like writing, is a medium of human expression. When AI assumes this role, what becomes of the cultural and emotional transmission that has long defined oral storytelling traditions?

There is a legitimate fear that the widespread use of AI narration may homogenize the listening experience, leading to a narrow band of voice styles and tonal patterns. This could erode the diversity of voices that currently populate the audiobook landscape. For example, regional accents, speech idiosyncrasies, and nonstandard pronunciations contribute richness and authenticity to a story. AI models, trained on standardized data, often smooth out these variations, favoring clarity over character.

At the same time, AI has the potential to amplify cultural representation by enabling narration in underrepresented languages and dialects. If developed with inclusion in mind, AI voice tools could help preserve endangered languages, expand access to literature in indigenous communities, and empower storytellers from marginalized backgrounds.

The key lies in intentional design and responsible governance. Developers must build diverse datasets, prioritize ethical considerations, and work collaboratively with cultural stakeholders to ensure that AI narrators reflect, rather than erase, the richness of human expression.

In summary, the use of AI in audiobook narration is not merely a technical development—it is a profound cultural shift with ethical, legal, and creative implications. As Audible and other industry leaders chart this new territory, they must do so with a commitment to transparency, fairness, and artistic integrity.

The Future of Audiobooks in an AI-Driven Era

The adoption of artificial intelligence in audiobook production is not a fleeting experiment; it represents a fundamental reconfiguration of the audiobook value chain. As AI narration matures, the industry faces both the challenge and the opportunity of redefining what it means to listen to a book. Far beyond a cost-saving mechanism, AI promises to transform content creation, distribution, personalization, and accessibility—ushering in a new era of hyper-scalable and diverse audio literature. At the same time, its widespread implementation demands vigilance, foresight, and a renewed commitment to creative authenticity.

Hybrid Narration Models as the Norm

One of the most likely scenarios for the near future is the establishment of hybrid narration models. In this framework, AI and human narrators do not exist in opposition but collaborate within the production process. For instance, a single audiobook might feature human narration for key emotional chapters and AI narration for supplementary material, such as appendices, footnotes, or reference content. Alternatively, AI might be used to generate character voices in dialogue-rich fiction, with human narrators handling the narrative voice.

Such integration allows publishers to scale content production without compromising the artistic value associated with human performance. Moreover, hybrid models offer narrators creative agency over how their digital voice is deployed. Rather than being displaced by automation, skilled professionals become curators and directors of AI assets.

Global Expansion Through Multilingual AI Narration

A particularly transformative aspect of AI-generated audiobooks is their capacity for multilingual scalability. By leveraging multilingual neural text-to-speech (TTS) systems, audiobook platforms like Audible can release titles in multiple languages simultaneously. This capability opens vast new markets in Latin America, Asia, Africa, and Europe, where demand for localized content is strong but human narration resources are limited.

Advanced TTS engines can now be trained not just on standard dialects but also on regional variations, allowing for nuanced localization. In time, it may even be possible for listeners to choose not only the language but also the accent and cadence that best suit their preferences. Such customization could improve the listening experience and support cultural inclusivity.

Furthermore, multilingual AI narration supports the preservation of low-resource languages, enabling communities to document and distribute oral literature, educational content, and folklore in their native tongues. This capability positions AI as a tool of cultural preservation rather than homogenization—provided it is deployed with sensitivity and linguistic diversity in mind.

Personalization and Adaptive Listening Experiences

Another area of innovation is the personalized audiobook experience. With AI narrators, audiobook platforms can offer users far more control over how a title sounds. Features such as variable voice tone, pacing, pitch, and character assignment are becoming technically feasible.

Imagine a listener selecting an audiobook and customizing it to play in a preferred voice style—whether calm and meditative, energetic and expressive, or formal and academic. For fiction titles, users could assign different AI voices to individual characters, creating a cast-like experience without the need for multiple human actors. AI could also adapt dynamically, slowing down during complex sections and quickening pace during action sequences.
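The adaptive pacing idea can be sketched in a few lines of Python. The example below wraps each passage in an SSML prosody element and slows the speaking rate when a passage looks dense. The complexity measure (average word length), the threshold, and the rate values are illustrative assumptions only; a real system would presumably use a far richer signal, and support for percentage rates varies between TTS engines.

```python
from xml.sax.saxutils import escape

PUNCTUATION = ".,;:!?\"'()"


def complexity(passage: str) -> float:
    """Crude proxy for difficulty: average word length in characters."""
    words = passage.split()
    if not words:
        return 0.0
    return sum(len(w.strip(PUNCTUATION)) for w in words) / len(words)


def pick_rate(passage: str, threshold: float = 6.0) -> str:
    """Choose a slower speaking rate for passages above the threshold."""
    return "85%" if complexity(passage) >= threshold else "100%"


def to_ssml(passages: list) -> str:
    """Wrap each passage in a prosody element with its chosen rate."""
    parts = [
        f'<prosody rate="{pick_rate(p)}">{escape(p)}</prosody>'
        for p in passages
    ]
    return "<speak>" + "".join(parts) + "</speak>"
```

The same structure could carry other listener preferences, such as pitch or a per-character voice, by adding attributes or elements that the chosen TTS engine supports.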

These adaptive experiences are not only more engaging but also serve functional purposes. For instance, educational audiobooks could adjust narration style to the complexity of the subject matter, while accessibility settings could offer listeners with dyslexia or visual impairments clearer enunciation and pronunciation.

In the long term, AI-powered personalization may integrate with user behavior analytics, using past preferences and feedback to fine-tune future listening experiences automatically—much like recommendation engines but applied to vocal attributes and storytelling formats.

Democratizing Audiobook Creation

The democratizing effect of AI will continue to reshape audiobook authorship. Independent authors, educators, and small publishers will increasingly enter the audiobook space using AI narration tools that are cost-effective and user-friendly. These tools eliminate the need for expensive studio sessions and voice talent negotiations, putting audiobook creation within reach for nearly anyone with a manuscript and an internet connection.

This democratization fosters a more inclusive literary ecosystem, where voices historically marginalized by traditional publishing can find expression in audio form. Authors of self-help, spirituality, indigenous knowledge, or local history books—genres often overlooked by large publishing houses—can now reach global audiences with professional-grade narration.

Moreover, as AI toolkits become more intuitive, we can expect a surge in user-generated audiobooks, podcast hybrids, and serialized fiction. The line between audiobooks, podcasts, and voice apps will continue to blur, giving rise to new genres and storytelling formats that are audio-first by design.

Institutional Applications: Education, Corporate Training, and Government

Beyond entertainment and publishing, AI-narrated audiobooks will find broad applications across institutional contexts. Educational publishers are already exploring AI narration for textbooks, language learning programs, and exam preparation materials. These applications benefit from AI’s ability to ensure consistent pronunciation, reduce turnaround time, and scale across curricula.

In the corporate sector, AI narration is being integrated into employee onboarding programs, compliance training, and knowledge management systems. Companies can quickly generate spoken-word versions of HR manuals, safety procedures, or product documentation in multiple languages.

Governments and public institutions may also leverage AI to distribute legal texts, civic guides, and health advisories in accessible audio formats. These use cases align with public service mandates and could significantly improve engagement with underserved or low-literacy populations.

Risks, Oversaturation, and Content Quality

Despite these advances, the future of AI-narrated audiobooks is not without risks. One major concern is content oversaturation. As barriers to production fall, the volume of audiobooks in circulation is expected to rise sharply. While this abundance democratizes access, it also creates discoverability challenges. Platforms like Audible must invest in curation, quality control, and recommendation systems to help users navigate increasingly crowded libraries.

There is also the danger of quality degradation. AI narration, while improving, still lacks the full spectrum of human expression, and mass adoption may result in formulaic or uninspired performances. To prevent erosion of listener trust, platforms must set and enforce minimum quality standards, even for AI-generated works.

Furthermore, ethical deployment will remain paramount. Disclosure, consent, fair compensation, and robust anti-deepfake measures must be embedded into the future of audiobook distribution. As legal frameworks evolve, companies that fail to align with these principles may face regulatory backlash or reputational harm.

Shaping a Responsible and Creative Future

The convergence of AI and audiobooks is not a theoretical possibility—it is an active transformation with tangible implications. Audible and its peers stand at a critical juncture, one that demands both innovation and introspection. The future of audiobooks will be defined not only by the sophistication of AI voices but by the values that guide their use.

By embracing hybrid models, expanding global access, enabling personalization, and empowering creators, AI has the potential to enhance the audiobook medium rather than diminish it. The challenge for the industry lies in designing systems that serve both efficiency and empathy, both reach and representation.

In this AI-driven era, storytelling will remain central to the human experience. What changes is not the story, but how—and by whom—it is told.

References

- Audible Official Site – Audiobook Platform Overview
  https://www.audible.com/about

- Amazon Polly – Neural Text-to-Speech Technology
  https://aws.amazon.com/polly/

- ElevenLabs – Voice Cloning and Narration AI
  https://www.elevenlabs.io/

- Resemble AI – Synthetic Voice Platform
  https://www.resemble.ai/

- DeepMind WaveNet – Natural TTS Generation
  https://deepmind.google/technologies/wavenet/

- Google Cloud Text-to-Speech – Neural Synthesis API
  https://cloud.google.com/text-to-speech

- OpenAI – GPT and its Role in Contextual Narration
  https://openai.com/research

- Speechki – AI Audiobook Production for Publishers
  https://speechki.org/

- Voicebot.ai – Analysis on Synthetic Speech Trends
  https://voicebot.ai/

- Authors Guild – Statement on AI Voice Ethics
  https://authorsguild.org/news