Apple Eyes OpenAI or Anthropic to Reboot Siri in Major AI Strategy Shift

For over a decade, Apple has stood as a dominant force in consumer technology by adhering to its core principles: premium hardware, tightly integrated software, and an uncompromising stance on user privacy. From the iPhone to the MacBook, Apple’s tightly controlled ecosystem has set benchmarks in design and functionality. Yet in the rapidly accelerating domain of artificial intelligence—particularly generative AI—the company finds itself at an inflection point. Despite its early move with Siri in 2011, Apple has lagged behind competitors in delivering conversational, contextually aware, and generative voice assistants. This delay, paired with the meteoric rise of ChatGPT and Claude, has eroded Apple’s technological edge in digital assistants and AI-enhanced user experiences.

Now, in a potential strategic reversal that has sent ripples across the tech industry, Apple is reportedly in advanced discussions to integrate external generative AI models—most notably from OpenAI or Anthropic—into Siri. The decision would mark a significant departure from its previous strategy of relying exclusively on internal AI development and on-device processing. The implications of this shift are profound, not only for Apple’s ecosystem but also for the evolving dynamics of the global AI arms race.

The timing of Apple’s potential collaboration is not coincidental. Since the public debut of ChatGPT by OpenAI in late 2022, consumer expectations for AI capabilities in smartphones and personal devices have been redefined. OpenAI’s models—particularly the GPT-4 and GPT-4o variants—have demonstrated exceptional reasoning, conversational coherence, multimodal understanding, and real-world task execution. Meanwhile, Anthropic’s Claude models have made safety, context length, and transparency cornerstones of their AI offerings, quickly earning attention from enterprise clients and privacy-conscious users alike.

Apple’s possible decision to bring either OpenAI or Anthropic into its iOS ecosystem reflects more than a reaction to market pressure. It signals a deeper recognition that the pace of innovation in foundational AI models has outstripped Apple’s internal capabilities—at least for now. While Apple has made substantial investments in its own language models, such as Ajax and MM1, these systems have yet to make a tangible impact on end-user experiences. In contrast, companies like Microsoft have deeply integrated OpenAI’s technology into their suite of products—Windows, Microsoft 365, Azure—providing a consistent, intelligent user interface across platforms.

Siri, once a symbol of Apple’s AI leadership, has long been criticized for its limitations. It struggles with multi-turn conversations, fails to grasp context, and lacks the fluidity expected of modern voice interfaces. In an era when users can ask ChatGPT to write emails, summarize documents, draft code, and engage in nuanced dialogue, Siri’s functional stagnation is increasingly glaring. If Apple moves forward with embedding a third-party large language model (LLM) into Siri’s core architecture, the move would be more than an attempt to catch up: it could let Siri leapfrog the limitations that have constrained the assistant for years.

However, this shift also raises questions about how Apple will reconcile its privacy-first philosophy with the inherently cloud-based nature of many generative AI models. Apple has long distinguished itself by conducting much of its AI processing on-device, thereby minimizing data collection and exposure. Partnering with OpenAI, which operates models in the cloud and has faced criticism over data usage, could create tension with Apple’s established trust narrative. Conversely, Anthropic’s safety-focused and enterprise-friendly design ethos might be more compatible with Apple’s values. But Anthropic’s models, while rapidly advancing, may not yet match the performance scale and deployment depth of OpenAI’s offerings.

Moreover, Apple’s decision isn’t occurring in a vacuum. Competitors are moving aggressively. Google has merged its Brain and DeepMind research teams into a single unit, Google DeepMind, and released the Gemini family of models that now power Android’s latest generative features. Samsung, Apple’s primary smartphone rival, has already deployed AI models, including Google’s Gemini, within its Galaxy AI suite. Microsoft, meanwhile, continues to deepen its partnership with OpenAI while building its own custom chips and models to reduce dependence on external providers.

For Apple, the high-stakes choice between OpenAI and Anthropic will shape not only the future of Siri but also the broader trajectory of AI in iOS, macOS, visionOS, and beyond. Whether the company opts for OpenAI’s technical firepower or Anthropic’s alignment with privacy and safety may depend on the use cases Apple prioritizes—be it daily productivity, multimedia interaction, enterprise use, or cross-device intelligence.

Why Apple May Need Outside Help — The Internal AI Struggle

Apple’s public image has long been one of technological self-sufficiency and innovation. The company is renowned for vertically integrating hardware and software, maintaining meticulous control over its ecosystem, and emphasizing user privacy. Historically, this strategy has paid dividends: the iPhone, macOS, and Apple Silicon (the custom A-series and M-series processors) have allowed Apple to deliver performance, security, and design in a seamless package. However, in generative AI, and in large language models (LLMs) in particular, Apple’s in-house development efforts have failed to keep pace with the rapid advances occurring externally. The resulting gap has forced the company to reevaluate its AI posture and consider third-party providers like OpenAI and Anthropic to supplement or potentially replace parts of its AI stack.

Siri’s Stagnation and the Limits of Evolutionary Change

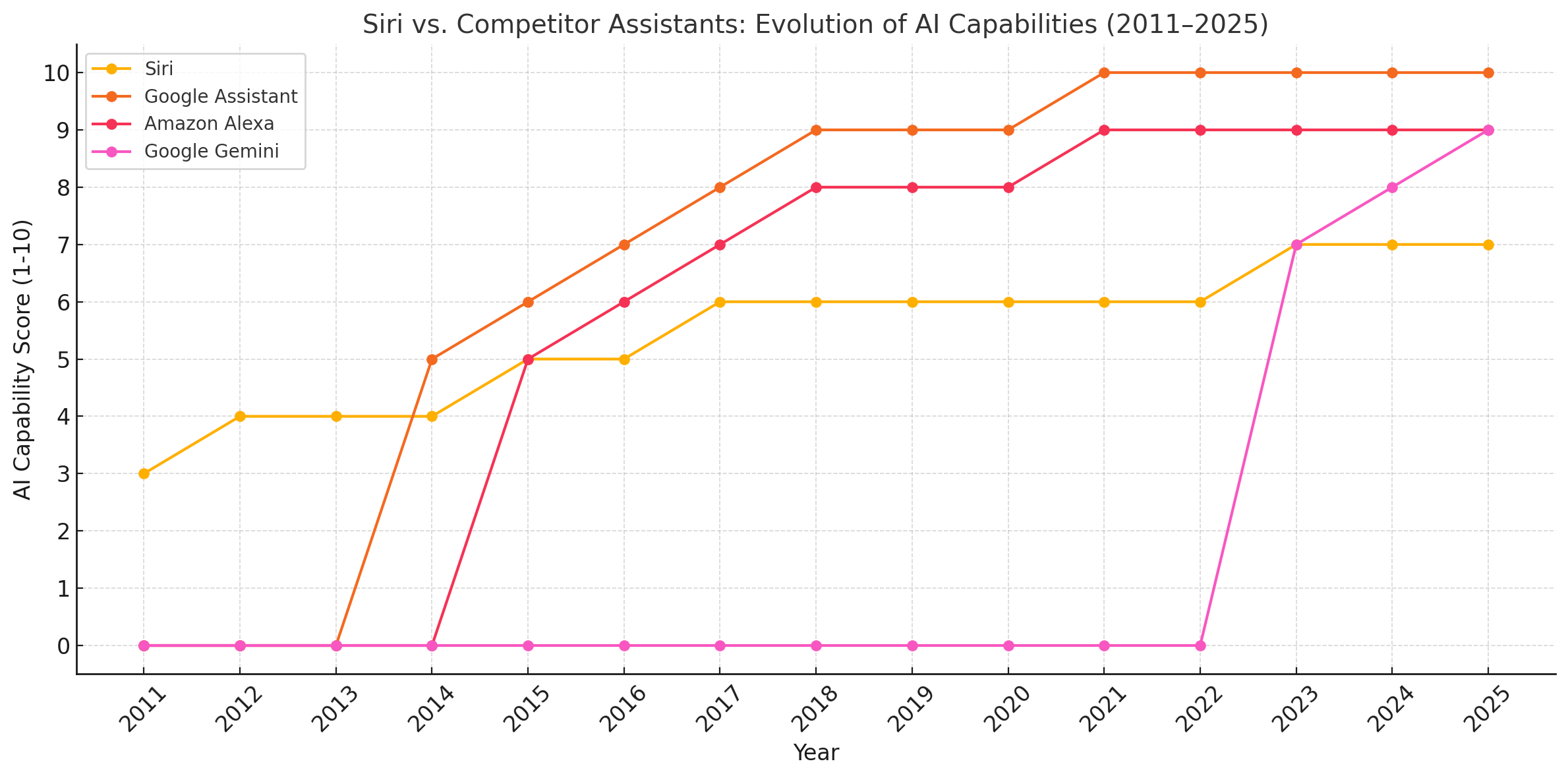

Apple introduced Siri in 2011 as the first mainstream virtual assistant embedded in a mobile operating system. At the time, it was a revolutionary product. Yet over the years, Siri has become emblematic of Apple’s lagging AI strategy. Unlike rivals such as Google Assistant and Amazon Alexa—which have grown smarter through iterative cloud-based training and broader third-party integrations—Siri has remained limited in scope, often drawing criticism for its inability to understand complex queries, maintain contextual awareness across conversations, and execute nuanced tasks.

This stagnation is partly the result of Apple’s conservative architecture. Siri’s design was built around static, rule-based systems and pre-defined intents, limiting its adaptability. While these systems were designed for speed and privacy, they inherently lacked the flexibility and reasoning capacity that modern users now expect from AI-powered interfaces. Today’s digital landscape demands assistants that can summarize documents, engage in multi-turn dialogue, generate content, and interpret multimodal inputs—capabilities that Siri, in its current form, cannot meaningfully provide.

Apple’s AI R&D: Ambitious but Incomplete

Behind the scenes, Apple has been actively investing in AI research. Its teams have developed several LLM projects, most notably the Ajax language model family, and a multimodal AI framework known as MM1. These models, while impressive in technical documentation, have not yet been productized in any major consumer-facing application. There are signs that Apple is integrating some of this work into core experiences—such as on-device dictation, autocorrect, and image recognition in the Photos app—but the absence of a cohesive, generative AI experience like ChatGPT is increasingly conspicuous.

Apple has also been quietly hiring leading AI researchers from companies like Google, Meta, and Amazon. Its acquisitions of startups such as Xnor.ai and Vilynx further demonstrate a desire to build AI talent and infrastructure in-house. However, building a production-grade LLM with generative and conversational capabilities comparable to OpenAI’s GPT-4 or Anthropic’s Claude 3 requires immense resources—not just in talent, but in compute power, model training, safety alignment, deployment, and user interface design.

Furthermore, Apple’s tight-lipped culture and emphasis on long product cycles may have inadvertently hampered rapid AI experimentation. Unlike startups or more open AI labs, Apple lacks a tradition of iterative public releases or community-driven feedback. This conservative approach, while successful in many hardware and OS domains, clashes with the fast-moving, feedback-intensive nature of generative AI development.

The On-Device Dilemma: A Double-Edged Sword

One of Apple’s long-held principles has been on-device AI processing, which enhances speed and preserves privacy. This design choice underpins many of the machine learning features found in Apple products today, from Face ID and Animoji to Live Text and Personal Voice. By minimizing data transmission to the cloud, Apple enhances security and avoids storing sensitive user data on external servers.

However, this privacy-centric model becomes limiting when it comes to training and deploying state-of-the-art generative models. Leading LLMs have billions of parameters, use extensive context windows, and depend on large-scale inference, most of which is impractical to run solely on smartphones, tablets, or even high-end laptops. The hybrid model that some companies now use, which combines lightweight on-device models for quick tasks with cloud-based LLMs for heavier workloads, is something Apple has only recently begun to explore. But the company remains behind its competitors in publicly delivering such a hybrid architecture at scale.

The contradiction is stark: Apple’s brand relies on privacy, but its user base increasingly demands the kind of intelligence and fluidity that only cloud-based AI can currently deliver. Until Apple can reconcile this, it risks alienating users who turn to third-party apps like ChatGPT or Gemini for smarter experiences.

Ecosystem Pressure and Market Dynamics

Another critical factor pushing Apple toward external AI partners is the growing gap in ecosystem intelligence. Google has rapidly integrated its Gemini AI models into Android, Gmail, and Google Docs, while Microsoft has embedded OpenAI’s GPT models across its product suite, including Copilot in Windows, Office 365, and Azure. These integrations enable real-time summarization, contextual recommendations, automated writing, and complex task execution across user workflows. In contrast, Apple’s ecosystem, though polished and well-integrated, lacks a generative layer that ties everything together.

This is not lost on developers or enterprise customers. AI assistants have become not just a user-facing feature, but a core capability that defines productivity ecosystems. Without robust AI integration, Apple risks falling behind in enterprise penetration, education, and creative industries where AI-enhanced workflows are becoming the norm.

Moreover, the Vision Pro and other emerging product lines demand sophisticated AI capabilities to interpret user input across spatial interfaces. If Siri remains anemic and disconnected from generative models, these devices will underdeliver on their promise of a futuristic user experience.

Cost and Opportunity Calculus

Developing generative AI in-house is not just a technical challenge; it is a capital-intensive one. Training state-of-the-art models can cost hundreds of millions of dollars. Moreover, sustaining these models—through inference, safety tuning, fine-tuning, and infrastructure maintenance—represents ongoing operational expense.

Apple is not constrained by budget, but it is bound by its historical investment model. The company prefers to amortize R&D over product cycles and maintain high gross margins. Partnering with a mature AI provider like OpenAI or Anthropic would allow Apple to shortcut the infrastructure build-out and deploy a scalable solution quickly, particularly in anticipation of iOS 19 and the next iPhone cycle.

Additionally, licensing external models offers Apple flexibility. It can roll out limited features, assess user demand, and gradually build its own model in parallel. Such a strategy mirrors Apple’s earlier moves with Intel chips and Google Maps—relying temporarily on external solutions before transitioning to in-house alternatives when the technology matures.

In sum, Apple’s internal AI ambitions, though substantial, have not yet yielded a viable generative experience that can compete with the current industry leaders. Siri’s decline, the mismatch between Apple’s privacy model and generative AI’s compute needs, the broader ecosystem competition, and the economic logic of strategic partnerships all suggest that Apple’s pivot toward OpenAI or Anthropic is not a retreat—it is a recalibration.

The Contenders

As Apple evaluates external partners to power a reinvigorated version of Siri, two companies have emerged as the frontrunners: OpenAI and Anthropic. Both firms are at the cutting edge of large language model development and offer distinctly different capabilities, philosophies, and strategic fits. The choice between these two contenders is not merely a technical decision—it reflects deeper considerations related to user privacy, product integration, brand alignment, and long-term ecosystem control.

This section examines the profiles of OpenAI and Anthropic, compares their flagship AI models, and analyzes how each might integrate with Apple’s product environment.

OpenAI: Innovation at Scale

OpenAI has arguably become the most recognizable name in generative AI. Since the release of ChatGPT in November 2022, OpenAI has redefined public expectations of what conversational agents can achieve. The company’s flagship models—GPT-3.5, GPT-4, and the recently announced GPT-4o (omni)—have demonstrated exceptional capabilities in language comprehension, code generation, multimodal processing, reasoning, summarization, and creative output.

OpenAI’s GPT-4o, in particular, integrates voice, vision, and text in a single model architecture, making it well-suited for real-time interaction. This could prove transformational for Siri, whose current limitations include an inability to process nuanced multi-modal input or sustain complex dialogue. GPT-4o is also optimized for low-latency performance, a critical feature for integration in mobile devices where response times significantly affect user experience.

In addition to its technical excellence, OpenAI offers robust API infrastructure, cloud compute scaling via Microsoft Azure, and a vibrant developer ecosystem. Apple could theoretically plug into OpenAI’s APIs to quickly enhance Siri’s conversational breadth and responsiveness without overhauling its back-end entirely. Such a solution would expedite time-to-market, especially ahead of iOS 19 or a potential AI-focused iPhone launch.

However, OpenAI’s cloud-based architecture and historical data handling policies raise questions regarding compatibility with Apple’s privacy principles. While OpenAI has made improvements in offering “no-training” API modes and enterprise-grade data isolation, some observers note that Apple may still be cautious about surrendering AI interactions to an external service. Further complicating matters is OpenAI’s close relationship with Microsoft—Apple’s longtime competitor—creating potential strategic tension.

Anthropic: Safety-First, Privacy-Aligned

Founded by former OpenAI researchers, Anthropic has emerged as a strong challenger in the LLM space with its Claude model family, whose name is widely reported to honor Claude Shannon, the father of information theory. Anthropic has distinguished itself through a focus on alignment, interpretability, and long-context reasoning. Its latest model, Claude 3.5 Sonnet, is competitive with GPT-4 in areas such as summarization, coding, and document comprehension, while placing a stronger emphasis on safety and on keeping hallucination rates low.

Anthropic’s models are trained with Constitutional AI, an approach that uses a written set of principles to embed safety and alignment into model behavior while reducing reliance on extensive reinforcement learning from human feedback (RLHF). This design ethos complements Apple’s brand image of responsible innovation, user-first design, and safeguarding against unintended AI behavior.

From a privacy perspective, Anthropic’s enterprise offerings include rigorous data protections, and the company does not train its models on API user inputs. This aligns more closely with Apple’s approach to data sovereignty and customer trust. Claude models are also increasingly deployed in private cloud environments, which could support hybrid architectures in which certain tasks are offloaded to the cloud, while others remain on-device.

That said, Anthropic is a younger and more conservative company when it comes to deployment scale. Its cloud infrastructure is more limited than OpenAI’s, and it lacks a deep integration layer equivalent to Microsoft’s tooling for OpenAI models. While technically proficient, Anthropic’s products have seen fewer consumer-facing implementations, which may raise concerns about maturity, latency, and performance at iOS scale.

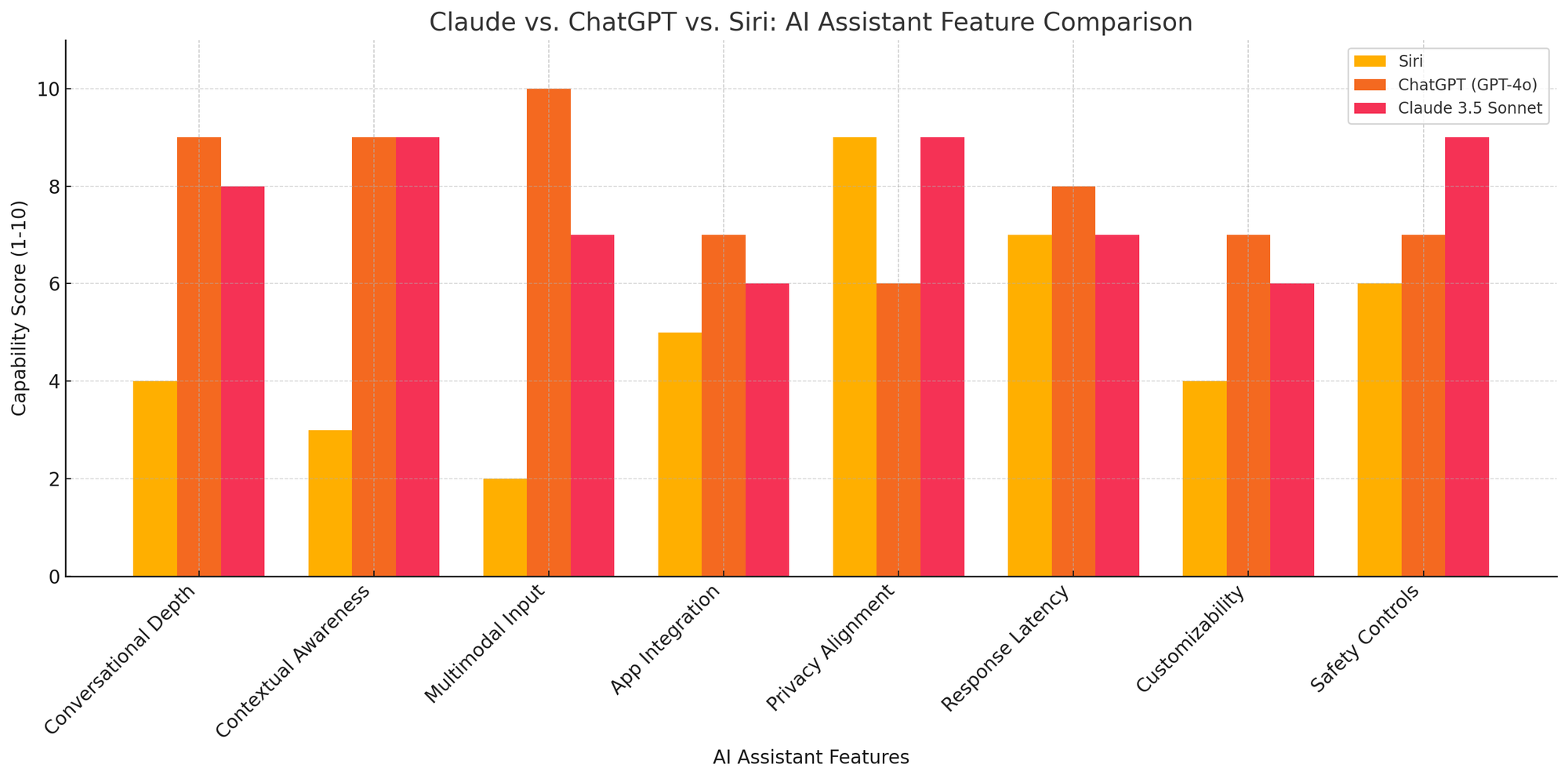

Capabilities Showdown: Claude vs. ChatGPT

To make an informed choice, Apple must consider how Claude and ChatGPT compare across several dimensions critical to product integration. These include multimodal fluency, real-time response capability, hallucination rate, language support, extensibility, and resource efficiency.

| Capability | ChatGPT (GPT-4o) | Claude 3.5 Sonnet |
|---|---|---|
| Multimodal Input | Advanced (text, voice, image) | Text, image (limited voice support) |
| Context Window | 128K tokens | 200K tokens |
| Latency | Low (optimized for real-time) | Moderate |
| Hallucination Control | Improved but still present | Strong emphasis on accuracy |
| Privacy Controls | Enterprise API modes available | No training on API inputs |
| Deployment Maturity | High (used by millions daily) | Growing but limited |
| Integration Risk | Higher due to Microsoft ties | Lower, independent ownership |

From this matrix, OpenAI emerges as the more mature, feature-rich option, particularly for voice-first assistant applications. However, Anthropic’s longer context window and safety alignment make it a safer and more philosophically compatible partner for Apple.
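To make the context-window row of the matrix concrete, here is a minimal sketch of how an assistant might check whether a document fits a given model before sending it. The window sizes come from the table above; the four-characters-per-token ratio and the reply reserve are rough assumptions for illustration, not figures published by either vendor, and the function names are hypothetical.

```python
# Context window sizes as listed in the comparison table above.
CONTEXT_WINDOWS = {"GPT-4o": 128_000, "Claude 3.5 Sonnet": 200_000}

# Assumption: roughly four characters per token for English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def models_that_fit(text: str, reserve_for_reply: int = 4_000) -> list:
    """Return the models whose window holds the text plus room for a reply."""
    needed = estimate_tokens(text) + reserve_for_reply
    return [name for name, window in CONTEXT_WINDOWS.items() if needed <= window]
```

Under these assumptions, a document of about 600,000 characters (roughly 150,000 estimated tokens) would exceed the smaller window but fit the larger one. A real integration would use each vendor's own tokenizer instead of a character heuristic.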

Strategic Considerations Beyond the Models

The technical debate is just one part of Apple’s decision-making process. Strategic, branding, and ecosystem factors may ultimately tip the scales.

- Data Sovereignty: Apple may prefer a partner who allows model deployment on Apple-controlled infrastructure (e.g., private cloud or on-device inference). Anthropic has hinted at this flexibility, whereas OpenAI currently leans toward centralization via Azure.

- Brand Independence: Partnering with OpenAI could entangle Apple indirectly with Microsoft. This might complicate public messaging and dilute brand sovereignty, particularly if future iOS users rely on a model primarily associated with Windows or Bing.

- AI Roadmap Control: Apple may favor a partner that is willing to customize models for tight OS-level integration. Anthropic’s smaller scale could enable a more bespoke relationship, whereas OpenAI’s product roadmap is more rigidly defined.

- Regulatory Risk: In a climate of increasing scrutiny around AI governance, Apple might view Anthropic’s safety-first approach as a hedge against future regulatory blowback. OpenAI’s public controversies and fast-paced rollouts may bring additional exposure.

Developer Ecosystem and Long-Term Flexibility

Another consideration is how these partnerships will affect Apple’s developer ecosystem. If Apple chooses OpenAI, it may inherit access to the vibrant GPT plugin ecosystem and GPT-native apps, creating an instant AI economy within iOS. This could complement Apple’s App Store monetization model and bolster its services revenue.

Conversely, if Apple works with Anthropic, it may have more room to co-create a developer stack tailored to Apple platforms. This could result in tighter integration with Swift, CoreML, and Apple’s proprietary chips—an advantage in performance, security, and user experience.

In either case, Apple is likely to layer its own UI/UX and personalization frameworks over the core model, preserving the Apple “feel” while leveraging the intelligence of the underlying LLM.

A Choice Between Power and Principles

In weighing OpenAI and Anthropic, Apple faces a nuanced trade-off: OpenAI offers unmatched capability and maturity, while Anthropic offers safety, privacy, and philosophical harmony. The decision will reveal not just what Siri is becoming, but also what kind of AI company Apple wants to be—aggressive and performance-driven, or deliberate and user-protective.

Whichever partner Apple selects, the outcome will mark a pivotal evolution in how Siri works, how AI is integrated across Apple’s platforms, and how users interact with technology on a daily basis.

What This Means for Siri and Apple’s AI Ecosystem

If Apple proceeds with integrating either OpenAI’s GPT models or Anthropic’s Claude models into Siri, the implications for its ecosystem will be profound and far-reaching. This decision goes beyond simply improving a digital assistant—it signifies a recalibration of Apple’s AI strategy across every layer of its platform architecture. From interface design to device-level compute, from developer APIs to privacy infrastructure, incorporating third-party generative AI will reshape how Siri operates and how users interact with Apple devices.

This section explores how Siri would be transformed by such a partnership, the architectural changes required to support generative AI, the likely impact on privacy and personalization, and how this would ripple across the broader Apple ecosystem.

Siri’s Functional Leap: From Command Executor to Conversational Agent

At present, Siri operates within a narrow command-and-control framework. It can answer basic questions, perform structured tasks, and interface with apps—but it fails to maintain context, struggles with open-ended queries, and lacks the flexibility of modern AI assistants. By integrating a model like GPT-4o or Claude 3.5, Siri would evolve into a context-aware, dialogue-capable assistant with significantly enhanced capabilities:

- Conversational Continuity: The ability to understand follow-up questions without rephrasing or restating context.

- Summarization & Insight Extraction: Useful for messages, emails, calendar events, and even web pages or documents.

- Multimodal Understanding: With GPT-4o, Siri could potentially process visual inputs (screenshots, photos) and voice in real time.

- Creative Output: Assisting in composing emails, writing notes, generating ideas, or translating across languages.

This would shift Siri from a utility to a personal AI concierge—capable of not just executing commands, but understanding goals, preferences, and complex instructions.

Architectural Shifts: Cloud, Hybrid, or On-Device?

Historically, Apple’s AI services have prioritized on-device processing. This model ensures faster performance, preserves user privacy, and reduces dependence on the cloud. However, integrating large-scale LLMs—most of which require vast GPU clusters and inference at scale—would necessitate a different architecture.

Apple faces three potential design options:

- Cloud-Based Execution: Leveraging external infrastructure (e.g., Microsoft Azure for OpenAI or Amazon Web Services for Claude) for model inference. This allows access to full model capabilities but poses privacy and latency risks.

- Hybrid Model: Using on-device LLMs for basic tasks and routing advanced queries to the cloud-based provider. This balances privacy and functionality, and could mirror how Apple handles dictation or image recognition today.

- Private Cloud Deployment: Apple could negotiate for exclusive hosting of the LLMs on its own private cloud infrastructure, ensuring control over data and compliance with its privacy policies.

Among these, the hybrid model appears the most likely. Apple could preserve user trust by keeping sensitive queries local while tapping into external AI only when necessary. This also aligns with Apple’s chip innovation trajectory: the Neural Engine in A-series and M-series chips could handle light inference tasks, while complex reasoning is outsourced securely.
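The hybrid option can be pictured as a small routing step that runs before any query leaves the device. The sketch below is purely illustrative and is not Apple's design: the keyword list, the word-count cutoff, and the names `route_query` and `RoutingDecision` are all assumptions chosen to show the shape of the decision.

```python
from dataclasses import dataclass

# Hypothetical markers for queries that should never leave the device.
SENSITIVE_KEYWORDS = {"password", "health", "medical", "bank", "ssn"}

# Hypothetical cutoff: longer requests are treated as needing a larger model.
MAX_ON_DEVICE_WORDS = 12


@dataclass
class RoutingDecision:
    target: str  # "on_device" or "cloud"
    reason: str


def route_query(query: str) -> RoutingDecision:
    """Decide where a query runs: sensitive or short stays local, the rest goes out."""
    words = [w.strip(".,?!") for w in query.lower().split()]
    if any(w in SENSITIVE_KEYWORDS for w in words):
        return RoutingDecision("on_device", "sensitive content stays local")
    if len(words) <= MAX_ON_DEVICE_WORDS:
        return RoutingDecision("on_device", "short request, light inference")
    return RoutingDecision("cloud", "long or complex request")
```

In this sketch, "Set a timer for ten minutes" stays on the device, while a long multi-step request to summarize and reply to a batch of emails is sent to the cloud model. A production router would more plausibly use a small on-device classifier than a keyword list, but the ordering (privacy check first, capability check second) reflects the trust argument made above.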

Privacy, Data Handling, and Trust

Apple has long marketed itself as the guardian of digital privacy. Any partnership with a third-party AI provider must uphold this reputation. Users expect that their Siri interactions—voice commands, messages, search queries—remain secure, ephemeral, and inaccessible to external parties.

This presents a core challenge. OpenAI, despite improvements in enterprise data handling, is still perceived as a cloud-first organization. Anthropic, with its no-training-on-inputs policy and emphasis on model alignment, may align more naturally with Apple’s privacy-first philosophy.

To maintain trust, Apple will likely implement:

- Explicit Disclosure: Letting users know when and how external AI is being used.

- Opt-In Features: Generative Siri enhancements may be off by default, requiring user activation.

- Data Anonymization & Encryption: Ensuring no personally identifiable information is transmitted.

- Session-Based Memory: Allowing useful context during interactions but avoiding persistent storage.

If Apple can achieve this balance, it could deliver next-gen AI capabilities without compromising on the core value that differentiates it from Google, Amazon, and others: data protection.
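Two of the safeguards listed above, anonymization and session-based memory, are simple enough to sketch in code. What follows is a minimal illustration under stated assumptions: the regular expressions catch only obvious email addresses and phone numbers, and the `SessionMemory` class and its turn limit are hypothetical, not a description of any shipping Apple or vendor API.

```python
import re
from typing import List

# Simplified patterns; real PII detection would need to cover far more cases.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace obvious email addresses and phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


class SessionMemory:
    """Keeps a few recent redacted turns in memory and nothing on disk."""

    def __init__(self, max_turns: int = 5) -> None:
        self.max_turns = max_turns
        self._turns: List[str] = []

    def add(self, utterance: str) -> None:
        # Redact before storing, then keep only the most recent turns.
        self._turns.append(redact(utterance))
        self._turns = self._turns[-self.max_turns:]

    def context(self) -> List[str]:
        return list(self._turns)

    def end_session(self) -> None:
        # Dropping the list is the whole retention policy in this sketch.
        self._turns.clear()
```

The point of the design is that the context sent to an external model is both scrubbed and short-lived: follow-up questions still work within a session, but nothing persists once `end_session` is called.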

Developer Tools and API Ecosystem Integration

An AI-powered Siri would not only enhance user experience but also extend new capabilities to developers across Apple platforms. With an embedded LLM, Apple could expose new SiriKit APIs or integrate advanced natural language understanding into its development tools, enabling apps to:

- Interpret complex voice commands and route them contextually.

- Summarize app-specific content (e.g., news articles, recipes, workouts).

- Offer in-app agents that use Siri’s AI layer to assist users.

- Leverage system-wide multimodal interpretation, including voice, gesture, and image inputs.

This could reignite developer interest in Siri integrations, which have historically been constrained by limited intent templates and inflexible voice command systems. It would also provide a competitive answer to Google’s “Help Me Write” features and Microsoft’s Copilot across productivity apps.

Furthermore, Apple may integrate LLM capabilities into Xcode, enabling developers to generate code, refactor functions, or write documentation using natural language—all processed through a secure, Apple-managed interface.

Impact Across the Product Portfolio

A smarter, generative Siri would not be limited to iPhones. It would become a cross-platform AI layer, influencing nearly every product Apple ships:

- macOS: Integration with Spotlight and system-level search, email writing assistance, and app navigation.

- iPadOS: Productivity tools for students, creators, and designers that leverage multimodal interaction.

- visionOS: A necessity for Apple Vision Pro, where conversational interaction and spatial awareness will define usability.

- watchOS: Enhanced on-the-go functionality, including summarization, reminders, and predictive health queries.

- tvOS and HomePod: Improved smart home control, content discovery, and contextual voice response.

This strategic AI layer would effectively position Siri as Apple’s AI operating system, tightly coupled to its hardware, UI, and services.

Reframing User Expectations

For over a decade, users have been conditioned to expect little from Siri. It is often used for basic tasks—setting alarms, checking the weather, or sending a message. A generative AI upgrade has the potential to completely reframe user expectations, positioning Siri as an intelligent, interactive partner.

This transformation may be gradual. Apple is unlikely to unveil a radically different Siri overnight. Instead, it may roll out features in stages: starting with advanced text generation, followed by summarization tools, then voice-driven creative functions, and finally full multimodal agents.

If executed with clarity and trust, the new Siri could restore Apple’s relevance in AI—not just as a follower, but as a leader in experience-centered intelligence.

A Systemic Shift, Not a Feature Addition

The decision to incorporate OpenAI or Anthropic into Siri is not a mere product update—it is a systemic shift in Apple’s AI philosophy. It would reshape how Siri is built, how data is handled, how developers create apps, and how users interact with Apple’s products across form factors.

For Apple, the move represents an embrace of the generative AI era—on its own terms. It is a pragmatic recognition that while internal models like Ajax and MM1 show promise, user expectations have leaped ahead. Whether Apple partners with OpenAI’s scale or Anthropic’s safety, the end result will be a smarter Siri and a reimagined Apple AI ecosystem.

Strategic and Competitive Implications

Apple’s potential integration of third-party large language models (LLMs) into Siri is not merely a product decision—it is a strategic inflection point that will reverberate across the global technology landscape. As generative AI becomes a foundational layer for computing platforms, Apple’s move to adopt external AI providers like OpenAI or Anthropic marks a pivotal departure from its tradition of building core technologies in-house. It is a calculated response to mounting competitive pressure, shifting user expectations, and the accelerating race to define the next era of human–machine interaction.

In this section, we examine the broader implications of Apple’s AI pivot—how it will influence Apple’s competitive positioning, challenge existing business models, affect global regulatory dynamics, and redefine the AI platform war.

The Platform War Enters a New Phase

The tech industry has long operated in cycles: desktop vs. mobile, apps vs. web, cloud vs. edge. Today, a new platform battle is emerging—one defined by AI-native operating systems. Google, Microsoft, Meta, and Amazon are no longer competing merely on hardware or software, but on which AI model becomes the most deeply embedded, most trusted, and most extensible layer in people’s daily workflows.

Apple, historically dominant in hardware-software integration, risks ceding this new AI layer to its rivals unless it moves decisively. Microsoft’s aggressive integration of OpenAI across Windows and Office 365 has already redefined productivity software. Google is embedding Gemini models into Android, Gmail, Docs, and the Pixel line. Meta is turning its family of apps into LLM-enhanced social engines. Amazon is fusing Alexa with custom Titan models to reassert its position in the home.

By outsourcing Siri’s intelligence layer to OpenAI or Anthropic, Apple can accelerate time-to-innovation, regain relevance in AI discourse, and assert itself in the emerging battle over AI platform dominance.

Balancing Speed with Ecosystem Control

One of Apple’s enduring strengths is its vertical integration. From silicon design to operating system UX, Apple controls every facet of the user experience. Relying on a third-party AI model—especially one hosted in external cloud infrastructure—represents a strategic vulnerability.

Key risks include:

- Dependency on External Roadmaps: Apple may be constrained by the release schedules, licensing terms, and architectural decisions of the AI provider.

- Brand Dilution: If users recognize GPT-4o or Claude as powering Siri, it could weaken the perception of Apple as the innovator behind the experience.

- Cloud Costs and Infrastructure Entanglement: Using GPT via Microsoft Azure or Claude via AWS could entangle Apple in complex cost structures and cloud dependencies.

To mitigate these risks, Apple is likely to seek customized partnerships. This could include licensing a version of the LLM for exclusive use, hosting it on Apple’s own secure cloud infrastructure, or gradually transitioning to a fully proprietary model once Apple’s internal models mature.

In essence, Apple’s strategy may resemble its past transitions: starting with third-party components (Intel CPUs, Google Maps, OpenGL) and later replacing them with Apple Silicon, Apple Maps, and Metal.

Redefining AI Monetization and Services Strategy

Apple’s services business—now contributing over $80 billion annually—depends heavily on App Store fees, iCloud subscriptions, Apple Music, and AppleCare. Adding a powerful AI layer to Siri opens new monetization pathways, but also introduces complex strategic tradeoffs.

Potential revenue opportunities include:

- Siri Pro Tier: An optional subscription layer for enhanced generative capabilities, similar to ChatGPT Plus.

- AI-as-a-Platform: Letting developers integrate Siri’s LLM into their apps via Apple APIs, with usage-based billing.

- Vertical Enhancements: Embedding AI tools into Apple Music (smart playlists), Apple News (summarization), or Mail (smart replies).

However, Apple must tread carefully. Charging for AI capabilities risks alienating users who expect them to be free, especially given that some Android flagships now ship Gemini Nano on-device at no extra cost. Apple must also avoid leaning too heavily on a paid tier while containing the cost of AI inference workloads, which can be expensive at scale.

Ultimately, Apple’s opportunity lies in bundling AI seamlessly into its value proposition—augmenting devices and services without drawing undue attention to the underlying business model shift.

Competitive Reaction and Market Dynamics

Apple’s AI pivot will undoubtedly provoke reactions across the industry:

- Google will likely accelerate Gemini integration into Android, Google One, and Pixel devices to retain AI leadership on mobile.

- Microsoft, given its OpenAI alignment, could attempt to restrict licensing flexibility or pursue exclusive access to future GPT model enhancements.

- Samsung, which has partnered with both Google and Baidu for AI, may redouble its focus on localized, hybrid AI models to differentiate its Galaxy AI experience.

- Meta may emphasize open-source AI as a counter-narrative to Apple’s closed ecosystem, while pressing for regulatory scrutiny of Apple’s AI integrations.

Startups and developers, meanwhile, will look to Apple’s final implementation for signals. If Apple offers meaningful access to Siri’s new capabilities, it could ignite a renaissance in AI-native iOS app development, giving Apple a developer edge it has recently lost to web-based LLM platforms.

Antitrust and Regulatory Scrutiny

Apple’s dominance in mobile platforms already places it under significant antitrust scrutiny in the U.S., EU, and other regions. Embedding a powerful AI model into Siri—and possibly tying it into default search, browser, or app functionalities—may draw additional regulatory attention.

Regulators could raise questions around:

- Default AI Assistant Behavior: Will users have the ability to choose alternative AI providers?

- Data Collection Practices: Even if Apple anonymizes data, transmitting queries to external servers could raise privacy concerns.

- Market Fairness: Smaller AI startups may argue that Apple’s distribution advantage crowds them out of mobile platforms.

Moreover, if Apple selects OpenAI, which is already closely tied to Microsoft through investment and licensing agreements, the concentration of AI power among a few U.S. giants may raise alarms globally, particularly in Europe, where the AI Act is taking shape.

To preempt backlash, Apple may opt for transparency, user choice, and sandboxing features that isolate LLM interactions from core device telemetry.

Shaping the Next Generation of Human–Machine Interfaces

Finally, and perhaps most significantly, Apple’s embrace of generative AI will shape the evolution of how humans interact with technology. With Vision Pro and its move into spatial computing, Apple is redefining interaction paradigms. Voice, gesture, eye-tracking, and contextual AI must coalesce into a fluid experience.

By embedding a capable LLM into Siri, Apple could unify these inputs into a truly intelligent interface—where the AI understands what you mean, not just what you say. This would redefine not just the assistant, but the interface layer of the Apple ecosystem itself.

Rather than simply being reactive, Siri could become anticipatory and emotionally intelligent. That would fundamentally shift how people perceive their devices: from tools to collaborators.

A Strategic Bet with Industry-Wide Consequences

Apple’s deliberation over integrating OpenAI or Anthropic into Siri is a signal: the generative AI age demands speed, flexibility, and unprecedented intelligence. For a company that has long prized control and vertical integration, this marks a calculated risk—a bet that partnering now leads to independence later.

Whether Apple ultimately favors OpenAI’s horsepower or Anthropic’s safety, the decision will define the next phase of platform competition. It will affect how billions interact with their devices, how developers build for Apple platforms, and how regulators shape the future of AI. In this AI arms race, Apple is not too late—but it must act decisively to remain a leader.

Apple’s Generative AI Gamble and the Road Ahead

Apple has long been a company that prefers evolution over revolution, internal innovation over third-party reliance, and deliberate refinement over impulsive adoption. Yet the rapid acceleration of generative AI has forced even Apple—a master of controlled ecosystem design—to reconsider its stance. The reported deliberations around integrating OpenAI or Anthropic into Siri reflect a broader strategic recognition: generative AI is not optional—it is foundational.

This potential pivot signifies more than just a smarter Siri. It represents a larger inflection point where Apple must decide whether it will continue to shape the future of personal computing as a leader in intelligence, or risk becoming a reactive follower in the AI-native era. The outcome of this decision will influence not only the trajectory of Siri but also the future of iOS, macOS, visionOS, and Apple’s standing in the global technology arena.

From Hardware-Centric to Intelligence-Centric

For much of its history, Apple’s strength has resided in its hardware excellence. From the iPhone and iPad to Apple Silicon, the company has continually set performance standards. Yet hardware alone no longer defines consumer experience. Increasingly, intelligence defines usability.

What good is a powerful processor if the software lacks contextual awareness? What use is a spatial computer like Vision Pro if it cannot interpret your intent? As AI becomes the defining layer of computing, the focus shifts from speed and form factor to understanding and adaptability.

By integrating external LLMs, Apple acknowledges that delivering next-generation intelligence cannot be achieved solely through chip innovation. It requires access to vast foundational models, built on billions of parameters and trained on terabytes of data. It means embedding not just processing capability, but semantic comprehension and generative reasoning into every Apple device.

OpenAI or Anthropic: Two Paths, One Future

As explored earlier in this post, OpenAI and Anthropic present Apple with two distinct options:

- OpenAI offers unparalleled scale, multimodal capability, and real-world deployment maturity. It is the most direct route to leapfrogging Siri’s limitations and catching up to user expectations immediately.

- Anthropic delivers on alignment, long context windows, safety, and privacy compatibility. It offers a more philosophically aligned, if incrementally paced, path forward, one that prioritizes user trust and control.

Choosing between them is not merely a matter of feature comparison. It is a strategic signal: will Apple prioritize speed and scale, or control and principle? Will it lean into GPT’s ubiquity or Claude’s safety envelope? Will it trade short-term dependence for long-term flexibility?

Whichever path it selects, Apple is unlikely to remain dependent forever. Historically, Apple has used partnerships as transitional mechanisms—leveraging Google Maps before launching Apple Maps, using Intel CPUs before switching to Apple Silicon. The use of OpenAI or Anthropic may be a tactical move—a way to meet immediate market needs while continuing to develop Ajax, MM1, or future proprietary models internally.

Siri Reborn: Rewriting Expectations

Siri has long been a point of both utility and frustration for Apple users. It was once revolutionary but has become a punchline in tech commentary—mocked for its inability to grasp nuanced queries or hold a conversation. Apple now has the opportunity to redefine Siri from the ground up.

With a powerful LLM backing it, Siri could become:

- A conversational agent capable of sustained, multi-turn dialogue.

- An intelligent assistant that summarizes documents, interprets images, and suggests actions.

- A multimodal hub that unites voice, gesture, visual input, and context into a cohesive interface.

- A proactive collaborator that helps manage tasks, drafts responses, and automates routines across Apple devices.

But to succeed, Apple must go beyond technical excellence. It must deliver these capabilities in a way that feels native to the Apple ecosystem—fast, secure, private by default, and deeply integrated with its design language and device capabilities.

This is not just about fixing Siri. It is about building the next interface layer—the intelligent operating system beneath iOS, macOS, and visionOS.

User Trust Will Define Success

Apple’s reputation as a steward of user privacy is one of its most valuable assets. While users may be excited about more capable AI, they will not tolerate compromises on trust. Apple must therefore approach this rollout with extreme clarity and control.

That means:

- Transparent disclosures about when external AI is used.

- Strict limits on data retention and model training.

- Opt-in defaults with visible privacy indicators.

- Clear settings to disable or sandbox AI access.
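The four commitments above can be read as a small routing policy. The following is a minimal sketch in Python, not a description of any real Apple API: the names `PrivacySettings`, `RoutingDecision`, and `route_request` are hypothetical, and the assumption is simply that external AI stays off until the user opts in, that its use is always disclosed, and that sandboxing keeps device context out of the outgoing request.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PrivacySettings:
    """Hypothetical per-user settings; not a real Apple API."""
    external_ai_opt_in: bool = False   # opt-in default: external AI is off until enabled
    sandbox_external_ai: bool = True   # keep device context out of external requests


@dataclass(frozen=True)
class RoutingDecision:
    destination: str        # "on_device" or "external"
    show_disclosure: bool   # whether to tell the user an external model is involved
    payload: dict           # the data that would leave the device
    allow_retention: bool   # whether the provider may store the query
    allow_training: bool    # whether the provider may train on the query


def route_request(query: str, device_context: dict,
                  settings: PrivacySettings) -> RoutingDecision:
    """Decide where a query goes and what data may accompany it."""
    if not settings.external_ai_opt_in:
        # Without an explicit opt-in, nothing leaves the device.
        return RoutingDecision("on_device", False, {}, False, False)

    payload = {"query": query}
    if not settings.sandbox_external_ai:
        # Only an unsandboxed configuration shares device context.
        payload["context"] = dict(device_context)

    # External use is always disclosed; retention and training stay disabled.
    return RoutingDecision("external", True, payload, False, False)
```

With default settings, `route_request("Summarize this email", {"location": "home"}, PrivacySettings())` stays on-device and sends nothing. Enabling `external_ai_opt_in` routes the query externally with a disclosure flag, and the context is attached only if sandboxing is also switched off.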

Apple must also prepare to defend its approach before regulators. Antitrust scrutiny, AI ethics inquiries, and global governance debates will only intensify. Apple’s ability to retain control over the AI experience while complying with legal frameworks will be as important as its technical execution.

A Developer Renaissance — or a Missed Opportunity?

Should Apple fully embrace generative AI, it can revitalize interest in its developer ecosystem. SiriKit has long been constrained to a limited set of predefined intents. But with LLMs integrated at the OS level, Apple could empower developers to:

- Build AI agents within their apps.

- Use Siri as a semantic router for complex commands.

- Leverage system-wide summarization, generation, and contextual inference.

This could trigger a renaissance in AI-native iOS development, especially if Apple provides new tools in Xcode, Swift, and SwiftUI to interface with the AI layer. However, failure to open this capability could isolate Apple in a world increasingly dominated by extensible, AI-driven platforms such as Android and Windows with Copilot.

The Road Ahead: A New Apple Experience

The road ahead will be defined by gradual rollout, user feedback, and continuous refinement. Apple will likely debut generative features in beta, restrict initial access, and expand capabilities over successive OS updates. As with Face ID, the M1, or Retina displays before it, Apple will aim to make AI invisible yet indispensable: a seamless upgrade rather than a jarring transformation.

In the next five years, Siri could evolve into a persistent, multimodal assistant that integrates across all Apple services and devices. It could become the ambient layer that interprets, assists, and adapts—without ever violating user trust or aesthetic cohesion.

This is Apple’s opportunity to define what responsible AI looks like at consumer scale. Not merely powerful, but understandable. Not just fast, but respectful. Not a gimmick, but a core element of the modern computing experience.

The Gamble of Innovation

To remain relevant in the AI age, Apple must take calculated risks. Waiting until its own models match GPT-4 or Claude 3.5 could be too late. Moving forward with a partner carries its own dangers. But inaction is no longer an option.

If executed wisely, Apple’s partnership with OpenAI or Anthropic could mark the beginning of Siri’s redemption—and the start of a new chapter where Apple once again defines the interface of the future. This isn’t just about upgrading an assistant. It’s about committing to a future where intelligence is integrated, invisible, and intuitively Apple.
