Anthropic’s Claude Gets Real-Time Web Search: A Game-Changer for AI Accuracy and Transparency

In the evolving landscape of artificial intelligence, real-time access to information has emerged as a fundamental differentiator among AI models. The ability of language models to supplement their foundational training data with up-to-date insights retrieved from the internet represents a critical leap forward in both utility and user experience. On this front, Anthropic—one of the leading developers of next-generation AI systems—has taken a significant step with the launch of a web search feature for its Claude models. This feature enables Claude to access and cite current information from the internet, thereby significantly enhancing the depth, reliability, and relevance of its responses.

Anthropic’s Claude, a family of AI assistant models built with a focus on alignment, safety, and usability, has steadily gained traction since its initial release. Known for its cautious and structured responses, Claude has often been favorably compared with OpenAI’s ChatGPT and Google’s Gemini for its balanced approach to accuracy and user transparency. However, until recently, Claude's capabilities were limited to its pre-trained knowledge base, rendering it unable to access information published after its last training cutoff date. This limitation affected its effectiveness in scenarios requiring current data—such as breaking news, evolving legal frameworks, product launches, and live market trends.

The introduction of the web search feature marks a strategic inflection point in Anthropic’s product roadmap. Claude can now access real-time information from the open web, verify it across multiple sources, and cite results in a structured and interpretable manner. This transition positions Claude not only as a conversational assistant but also as a research tool and productivity enhancer capable of handling more dynamic queries that require up-to-date context.

The integration of real-time web search into Claude’s architecture is not merely a technical enhancement; it is a response to the broader user expectations that have arisen from competing AI platforms. OpenAI’s ChatGPT, particularly in its paid ChatGPT Plus tier, has long supported internet browsing for real-time queries. Similarly, niche search-focused assistants like Perplexity AI have demonstrated the value of built-in search and citation capabilities. By embedding web search functionality directly into Claude, Anthropic signals its intent to remain competitive in a landscape where the boundary between traditional search engines and AI assistants is becoming increasingly blurred.

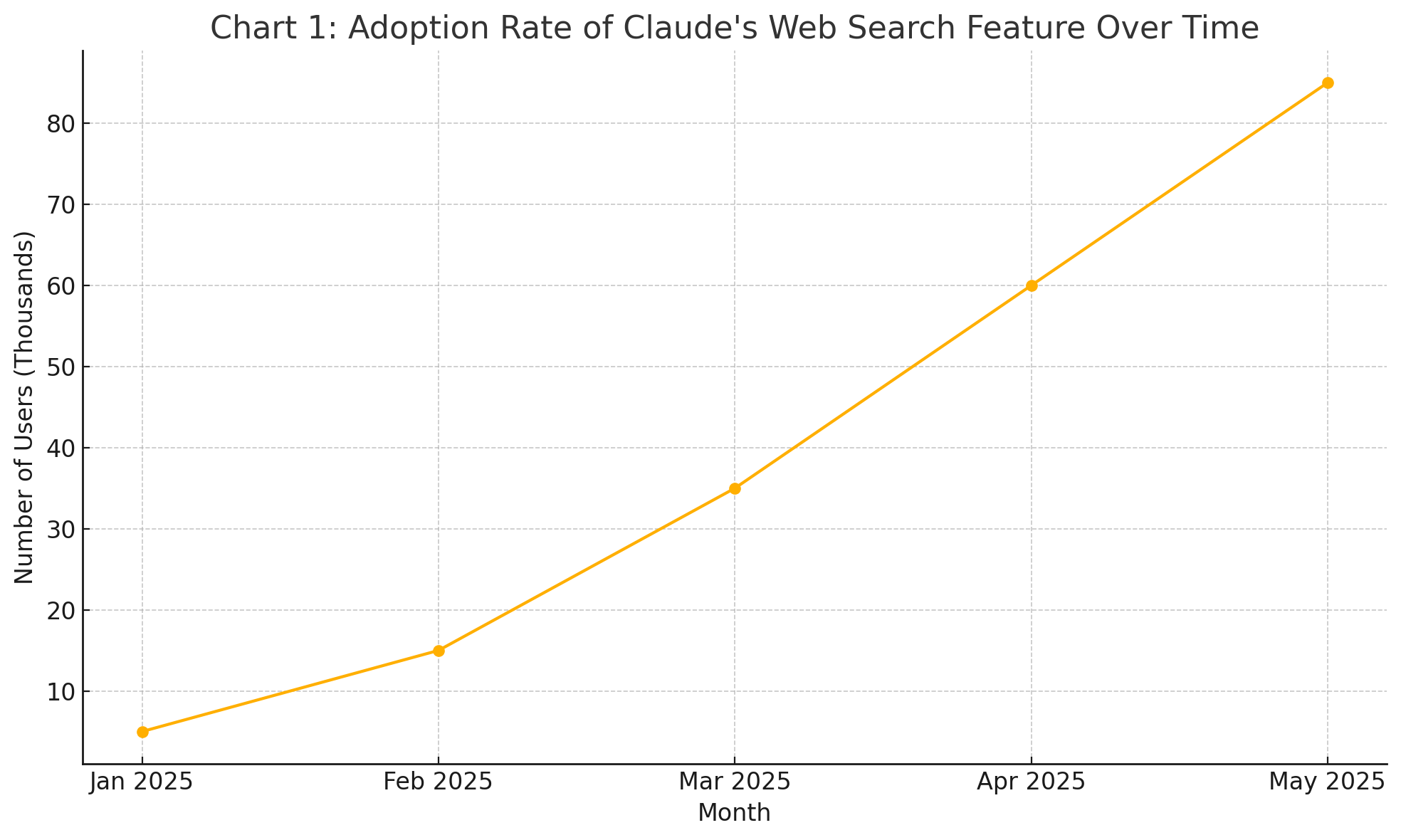

Anthropic’s rollout strategy for this new capability is both measured and practical. Initially made available through its API for developers and enterprise customers, the web search feature is currently integrated into Claude 3.5 Sonnet and Claude 3.7 Sonnet, with broader expansion planned for other versions such as Haiku. Priced at $10 per 1,000 searches—plus the standard token usage costs—the feature is positioned as a premium add-on that unlocks significantly greater utility. While this pricing may limit access for casual users in the short term, it sets a scalable framework for businesses and advanced users seeking high-quality, real-time data enrichment within their applications.

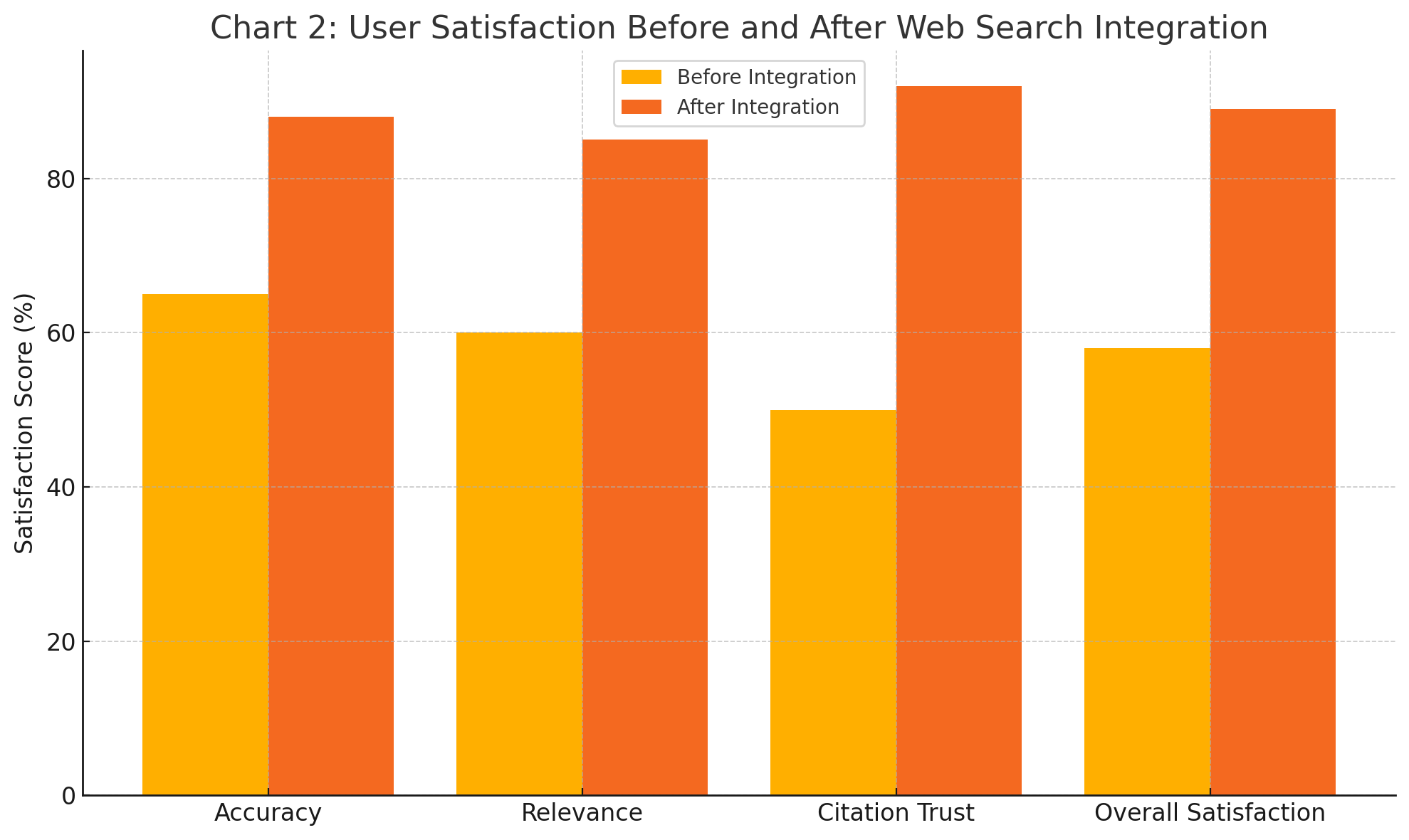

In terms of user experience, Claude's web search is designed with a focus on transparency and trust. Responses generated through this feature are automatically annotated with source citations, enabling users to verify the information independently. This is a key differentiator in an era where misinformation and AI hallucinations are significant concerns. Developers also gain fine-grained control over the search function, with options to whitelist or restrict domains, helping to tailor the results to specific industries or use cases.

The launch comes at a pivotal moment for AI adoption across sectors. From legal research and academic writing to technical documentation and news aggregation, there is growing demand for AI systems that not only understand language but also retrieve and evaluate factual information in real time. The ability to synthesize current data into coherent, referenced responses offers clear advantages in workflows that depend on both speed and credibility. Anthropic’s enhancement of Claude with web search aligns with these imperatives, making the tool more attractive to professional users in journalism, finance, consulting, and academia.

This development also has broader implications for how AI companies envision the future of search. Traditional search engines operate by serving up links that users must manually sift through; AI-driven search, by contrast, aims to provide synthesized, conversational answers grounded in those sources. In this model, AI is no longer a supplement to search—it is the search engine. With Claude’s new feature, Anthropic inches closer to realizing this vision, reshaping the way users interact with information online.

In this blog post, we will explore the significance of this new web search capability in detail. We begin by examining the technical implementation of the feature, including how Claude retrieves, filters, and cites information from the internet. We will then analyze the impact on users and developers, considering practical use cases and integration pathways. In addition, we will provide a comparative evaluation of Claude’s search features against competitors like OpenAI’s ChatGPT and Perplexity AI, supplemented by visual data. Lastly, we will consider the future trajectory of AI search capabilities and the strategic implications for Anthropic as a company operating in a highly competitive domain.

As real-time knowledge becomes a competitive necessity rather than a luxury in AI tools, Anthropic’s bold move to enable web search for Claude is not just a feature update—it is a redefinition of what users should expect from intelligent systems. Whether this will elevate Claude to a leadership position in the space or merely keep it in the running remains to be seen. What is certain, however, is that the paradigm of AI assistance is shifting once again—toward a future where language models are not only trained on the past but are also actively aware of the present.

Understanding Claude's Web Search Capability

The integration of real-time web search into Anthropic’s Claude models represents a significant architectural enhancement that transforms the nature of large language models (LLMs). This section provides an in-depth examination of the technical implementation, usability aspects, cost structure, and tooling integrations that define Claude’s web search capability. Together, these components offer a comprehensive understanding of how Claude retrieves, processes, and presents information from the open internet to deliver grounded, current, and verifiable responses.

Technical Architecture and Functionality

At the core of Claude’s web search feature lies a tool-use framework that allows the model to invoke external APIs during a conversation. When a user query cannot be answered solely from Claude’s training data, particularly one involving recent events or data published after its last knowledge update, the model is designed to recognize the insufficiency and autonomously trigger a web search. This mechanism mimics the reflexivity of human researchers who consult external sources when their internal knowledge is inadequate.

The process begins when the model parses the query and determines the intent and relevance of a real-time search. Once the search tool is activated, Claude submits a web search query via Anthropic’s internal search API. The system then fetches multiple candidate results from a curated selection of trusted web sources. Unlike indiscriminate crawling engines, Claude’s search tool is optimized for relevance, credibility, and citation readiness. The top results are parsed, and key content is extracted for synthesis. Importantly, Claude does not merely repeat content verbatim but instead digests it and reformulates it into a concise response, citing the source(s) used.

This search functionality is currently embedded within Claude 3.5 Sonnet and Claude 3.7 Sonnet, which are among the most capable models in Anthropic’s portfolio. These models have been optimized to work in tandem with external tools and APIs through Anthropic’s secure backend infrastructure. Developers using Claude’s API gain access to this feature by configuring the tool-use environment and enabling search as a callable tool. The model thus functions as a composable agent, selectively employing web search when needed, rather than defaulting to it for every query.
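To make "enabling search as a callable tool" concrete, here is a minimal sketch of what a request body with the tool switched on might look like. It only builds the payload and sends nothing. The tool type string `web_search_20250305`, the tool name `web_search`, and the model alias are assumptions based on Anthropic’s public API documentation at the time of writing, not details confirmed by this post, so check the current reference before relying on them.

```python
import json

# Assumed tool definition: the type string and name follow Anthropic's public
# web search documentation as of this writing and may change.
WEB_SEARCH_TOOL = {
    "type": "web_search_20250305",
    "name": "web_search",
}


def build_request(question: str, model: str = "claude-3-7-sonnet-latest") -> dict:
    """Return a Messages API request body with web search offered as a tool.

    The model alias is a placeholder; substitute whichever model you use.
    """
    return {
        "model": model,
        "max_tokens": 1024,
        # Listing the tool makes it available; the model decides whether to call it.
        "tools": [WEB_SEARCH_TOOL],
        "messages": [{"role": "user", "content": question}],
    }


# Inspect the payload that would be sent to the API.
print(json.dumps(build_request("What changed in the latest TensorFlow release?"), indent=2))
```

Because the tool is only offered, not forced, this matches the selective behavior described above: a question the model can answer from training data should not incur a search.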

User Experience and Interface Design

From a user standpoint, Claude’s web search capability has been designed to prioritize transparency, ease of interaction, and trust. When web search is invoked, users are clearly notified that the response is based on external content retrieved in real time. Each cited source is linked at the bottom of the response, allowing for immediate verification. This citation-first philosophy is intended to counteract concerns around hallucination, a persistent issue in LLMs where models may fabricate facts or invent references.

The structured citations typically include the title of the article or page, the domain, and a hyperlink to the original source. When multiple sources are consulted to answer a complex query, Claude includes multiple citations and clarifies which part of the answer corresponds to which reference. This transparency fosters user confidence in the factual accuracy of the model’s outputs and encourages further exploration by clicking through to original materials.
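The citation layout described here (title, domain, link, and a mapping from each part of the answer to its reference) can be sketched as a small rendering function. The input shape is hypothetical: a list of text blocks, each with an optional `citations` list carrying `title` and `url`. It is loosely modeled on how cited responses are commonly structured, and is not a statement of Anthropic’s exact response schema.

```python
from urllib.parse import urlparse


def render_with_sources(blocks: list[dict]) -> str:
    """Append numbered markers to cited text and list the sources at the bottom.

    `blocks` is an assumed shape: {"text": str, "citations": [{"title", "url"}]}.
    """
    sources: dict[str, tuple[int, str]] = {}  # url -> (number, title)
    parts = []
    for block in blocks:
        markers = []
        for cite in block.get("citations") or []:
            url = cite["url"]
            if url not in sources:
                # First time this URL is cited: assign the next number.
                sources[url] = (len(sources) + 1, cite.get("title", url))
            marker = f"[{sources[url][0]}]"
            if marker not in markers:
                markers.append(marker)
        parts.append(block["text"] + "".join(markers))

    lines = ["".join(parts), "", "Sources:"]
    for url, (number, title) in sources.items():
        lines.append(f"[{number}] {title} ({urlparse(url).netloc}) {url}")
    return "\n".join(lines)


example = [
    {
        "text": "Rates were held steady.",
        "citations": [{"title": "Fed statement", "url": "https://www.federalreserve.gov/news"}],
    },
    {"text": " Markets rose."},
]
print(render_with_sources(example))
```

Numbering by URL rather than by occurrence keeps the source list short when several sentences draw on the same page.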

For developers, Claude’s web search feature is accessible via a flexible API, documented extensively in Anthropic’s developer portal. With a few lines of configuration, developers can define the operational boundaries of the tool—such as limiting the domains that Claude is allowed to search or setting rate limits on web search calls to manage cost. This allows for granular control, making the feature suitable for both consumer-facing apps and enterprise tools that require strict content governance.
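The "few lines of configuration" might look like the helper below, which builds a tool definition with a per-request search cap and a domain filter. The field names `max_uses`, `allowed_domains`, and `blocked_domains` are taken from Anthropic’s public documentation as I understand it and should be treated as assumptions here; the helper itself and its validation rules are illustrative, not part of any SDK.

```python
from typing import Optional


def web_search_tool(
    max_uses: Optional[int] = None,
    allowed_domains: Optional[list[str]] = None,
    blocked_domains: Optional[list[str]] = None,
) -> dict:
    """Build a web search tool definition with optional limits (hypothetical helper)."""
    # Assumption: an allowlist and a blocklist are alternatives, not combined.
    if allowed_domains and blocked_domains:
        raise ValueError("Use allowed_domains or blocked_domains, not both")
    if max_uses is not None and max_uses < 1:
        raise ValueError("max_uses must be a positive integer")

    tool: dict = {"type": "web_search_20250305", "name": "web_search"}
    if max_uses is not None:
        tool["max_uses"] = max_uses  # caps searches per request, which bounds cost
    if allowed_domains:
        tool["allowed_domains"] = list(allowed_domains)
    if blocked_domains:
        tool["blocked_domains"] = list(blocked_domains)
    return tool


# Example: at most three searches, restricted to two regulator sites.
print(web_search_tool(max_uses=3, allowed_domains=["sec.gov", "federalreserve.gov"]))
```

Validating on the client side surfaces configuration mistakes before a request is sent and billed.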

Pricing Model and Economic Considerations

Anthropic has introduced a clear and scalable pricing model for its web search feature. The service is currently priced at $10 per 1,000 search queries, in addition to standard token-based charges for prompt and completion generation. This usage-based structure, with a flat per-search fee, lets organizations predict and manage costs effectively. While the price point is currently geared toward professional users and developers, Anthropic has indicated plans to explore broader accessibility models as the infrastructure matures.
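As a worked example of how the two charges combine, the sketch below adds the per-search fee to token costs. The $10 per 1,000 searches figure comes from this post; the per-million-token prices passed in the example are placeholders chosen for illustration, so substitute the rates for the model you actually use.

```python
SEARCH_PRICE_PER_1000 = 10.00  # USD per 1,000 searches, as stated in this post


def estimate_cost(
    searches: int,
    input_tokens: int,
    output_tokens: int,
    input_price_per_mtok: float,
    output_price_per_mtok: float,
) -> float:
    """Estimate total USD cost: search fees plus token charges."""
    search_cost = searches / 1000 * SEARCH_PRICE_PER_1000
    token_cost = (
        input_tokens / 1_000_000 * input_price_per_mtok
        + output_tokens / 1_000_000 * output_price_per_mtok
    )
    return round(search_cost + token_cost, 4)


# 2,500 searches cost $25.00 in search fees. With placeholder token prices of
# $3 (input) and $15 (output) per million tokens, 40M input and 5M output
# tokens add $120 + $75, for a total of $220.00.
print(estimate_cost(2_500, 40_000_000, 5_000_000, 3.0, 15.0))
```

Note that search results are fed back to the model as input, so heavy search use tends to raise token charges as well as search fees.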

The rationale behind the cost structure is grounded in both infrastructure overhead and information value. Conducting live web searches introduces latency, bandwidth usage, and data filtering requirements that are significantly more resource-intensive than standard inference tasks. Moreover, the value proposition of retrieving up-to-date, relevant, and reliable information in real-time—particularly in industries such as finance, law, or media—justifies the premium pricing for those relying on decision-critical accuracy.

To further support budgeting and performance monitoring, Anthropic offers usage analytics through its API dashboard. Developers can track search volume, cost per user, and response accuracy metrics, enabling optimization of prompts and query strategies to minimize unnecessary searches. This feature is especially useful in environments where prompt engineering and cost-efficiency are high priorities.

Integration with Tool Ecosystem and Developer Platforms

Claude’s web search feature does not exist in isolation; it is part of a broader tool ecosystem designed to support complex, multi-step reasoning and task execution. The web search tool integrates seamlessly with other Claude capabilities such as the Claude Code tool, enabling developers to build intelligent agents that can both retrieve relevant documentation and write or modify code accordingly. For instance, a user could ask Claude to “find the latest security patch notes for TensorFlow and update the example code accordingly,” and the model would retrieve the patch notes, cite the source, and update the code as instructed.

Anthropic has also made the feature usable from popular languages and integration styles, including Python, TypeScript, and RESTful HTTP calls. This extensibility allows developers to embed Claude with web search capabilities into custom interfaces, enterprise dashboards, and mobile applications. Combined with Claude’s strong contextual understanding and language generation abilities, this opens the door to creating intelligent assistants, research bots, and customer support agents that are significantly more capable than their static counterparts.

Additionally, Anthropic provides robust documentation to support onboarding, with example configurations, code snippets, and testing environments. This developer-first approach is in line with Anthropic’s overall philosophy of safety, control, and interpretability. By giving developers the tools to shape how Claude uses the web, the company ensures that applications can be tailored to specific compliance, ethical, or operational standards.

Trust, Safety, and Information Ethics

One of the defining features of Claude’s web search implementation is its commitment to information ethics and safety. In contrast to systems that may amplify unreliable or malicious sources, Claude’s search pipeline incorporates filters that prioritize credible, high-quality domains. Additionally, developers can whitelist or blacklist specific domains, giving them the ability to fine-tune the information sources Claude uses in its responses.

This emphasis on controlled retrieval is particularly important in domains such as healthcare, legal advice, and public policy, where the cost of misinformation can be high. By restricting access to a vetted pool of sources and clearly labeling all citations, Claude positions itself as a model that not only generates intelligent responses but also respects the integrity of the information ecosystem.

Anthropic also maintains transparency around data handling and privacy. Web search queries are anonymized and not used to retrain the model, ensuring that sensitive or proprietary search data remains confidential. These measures help reinforce user and enterprise trust, especially in regulated industries where data handling policies must meet stringent compliance requirements.

Impact on Users and Developers

The deployment of a real-time web search feature in Anthropic’s Claude models is not merely a technical milestone—it is a paradigm shift with profound implications for the end-user experience and developer ecosystem. As artificial intelligence tools increasingly serve as co-pilots in both personal and professional contexts, the integration of web search fundamentally changes how users interact with, rely on, and benefit from large language models (LLMs). In this section, we examine the practical impact of Claude’s web search capability on users across various sectors and on developers building AI-integrated applications. Furthermore, we consider how privacy, control, and customization provisions influence adoption and trust.

Enhanced Capabilities for End-Users

For end-users—whether individuals, researchers, or professionals—the most immediate and tangible impact of Claude’s web search functionality is a dramatic improvement in information accuracy and contextual relevance. Prior to this feature, Claude, like many other LLMs, was constrained by its static training data. This limitation often rendered it ineffective for queries involving recent developments, such as evolving legislation, ongoing geopolitical events, newly released scientific papers, or dynamic business intelligence.

With web search now enabled, Claude can retrieve live information directly from credible online sources. This significantly enhances its performance in areas such as:

- News summarization: Users can obtain real-time overviews of breaking events, complete with citations from leading outlets.

- Product comparison: Claude can analyze and compare features, prices, and reviews of products based on the latest data from e-commerce platforms and tech publications.

- Academic assistance: Students and researchers benefit from Claude’s ability to retrieve and synthesize recent scholarly articles, journals, and conference proceedings.

- Legal and policy updates: Lawyers and analysts can query Claude for summaries of newly passed legislation or regulatory changes in different jurisdictions.

In all these scenarios, the presence of citations not only improves user confidence in the provided answers but also allows for deeper investigation, enhancing the model’s role from mere assistant to trusted research partner.

Additionally, Claude’s capability to identify when it lacks up-to-date knowledge and invoke the search feature selectively is a marked improvement in user interaction. Rather than returning an outdated or potentially misleading answer, Claude can now admit the limitation, fetch current data, and deliver an enriched response—all within a seamless conversational flow.

Developer Opportunities and Ecosystem Expansion

The rollout of web search functionality also introduces a suite of opportunities for developers looking to embed real-time intelligence into their products and services. Previously, developers seeking up-to-date AI outputs had to combine a language model with a third-party search API, manage query orchestration, and build custom tools for parsing and verifying results. With Claude’s built-in search capability, much of this complexity is abstracted away.
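For contrast, the do-it-yourself pipeline described above looks roughly like the sketch below: call a search provider, pack the results into a prompt, then call the model. The `search` and `complete` callables are hypothetical stand-ins for a third-party search API and a language model client; neither is a real library function.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SearchHit:
    title: str
    url: str
    snippet: str


def answer_with_manual_search(
    question: str,
    search: Callable[[str], list[SearchHit]],  # hypothetical search client
    complete: Callable[[str], str],            # hypothetical model client
    top_k: int = 3,
) -> str:
    """Orchestrate search and generation by hand, as required before built-in search."""
    hits = search(question)[:top_k]
    # Number the sources so the model can cite them by index.
    context = "\n".join(
        f"[{i}] {hit.title} ({hit.url}): {hit.snippet}"
        for i, hit in enumerate(hits, start=1)
    )
    prompt = (
        "Answer using only the numbered sources and cite them by number.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )
    return complete(prompt)
```

Everything in this function (query construction, result trimming, citation formatting) is work the application owner has to maintain, which is the complexity a built-in search tool is meant to absorb.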

Developer benefits include:

- Simplified architecture: The Claude API now acts as a single endpoint for both reasoning and search, streamlining backend systems and removing the need to operate a separate search integration.

- Rich application use cases: Developers can create applications that respond dynamically to the evolving web—e.g., chatbots that pull current financial reports, dashboards that summarize industry news, or knowledge assistants that provide the latest guidance in healthcare and compliance.

- Custom domain targeting: Through domain restrictions and query filters, developers can tailor Claude’s web search behavior to suit specific industry needs, ensuring domain relevance and regulatory alignment.

Moreover, by leveraging Anthropic’s citation and source integrity features, developers gain tools that support explainability and user accountability. In applications such as finance or healthcare, where decisions must be auditable, these citations form the foundation of ethical AI deployment.

The ability to set guardrails, such as limiting the scope of accessible websites, also aligns Claude with enterprise-grade requirements. For example, a financial firm could configure the model to restrict searches to government databases, industry publications, and its own publicly hosted documentation, minimizing the risk of misinformation and ensuring compliance with internal guidelines.
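A firm with a policy like this may also want an independent check that the sources cited in a response really fall within the approved list. The sketch below is one way to audit cited URLs on the application side; the function names and the example domains are illustrative assumptions, and this complements rather than replaces any server-side domain restriction.

```python
from urllib.parse import urlparse


def host_allowed(url: str, allowed_domains: list[str]) -> bool:
    """Return True if the URL's host is an allowed domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    normalized = [domain.lower() for domain in allowed_domains]
    # Require an exact match or a dot boundary so "evilsec.gov" does not pass as "sec.gov".
    return any(host == domain or host.endswith("." + domain) for domain in normalized)


def off_policy_citations(urls: list[str], allowed_domains: list[str]) -> list[str]:
    """List cited URLs that fall outside the approved domains."""
    return [url for url in urls if not host_allowed(url, allowed_domains)]


approved = ["sec.gov", "federalreserve.gov"]
cited = [
    "https://www.sec.gov/filings/example",
    "https://evilsec.gov/report",
    "https://blog.example.com/rates",
]
print(off_policy_citations(cited, approved))
```

Logging the off-policy list, rather than silently dropping those sources, gives compliance teams an audit trail.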

Industry-Specific Implications

Across multiple industries, Claude’s web search feature enables new capabilities that improve efficiency, reduce operational costs, and enhance the quality of insights. Below are some representative examples:

- Healthcare and Life Sciences: Doctors, researchers, and medical writers can use Claude to retrieve the latest clinical trial results, drug approval updates, and medical guidelines. Claude can cite authoritative sources like the CDC, WHO, or peer-reviewed journals, improving both the speed and accuracy of medical reporting.

- Financial Services: Analysts can query Claude to explain changes in interest rates, recent earnings reports, or regulatory shifts, with data pulled in real time from Bloomberg, Reuters, or government websites. This reduces dependence on siloed analytics tools and enables conversational access to market intelligence.

- Legal and Regulatory Affairs: Lawyers can obtain summaries of new case law, statutory changes, or compliance requirements across jurisdictions, enabling faster legal research and document preparation.

- Education and Academia: Teachers and students gain access to the most recent information for research papers, classroom discussions, and homework assistance. The model’s ability to cite reputable academic sources ensures that its outputs are grounded and verifiable.

- Marketing and eCommerce: Marketing teams can use Claude to monitor competitive campaigns, retrieve customer sentiment from forums and reviews, and generate reports that reflect current trends and public perception.

In each of these domains, Claude’s enhanced ability to retrieve and contextualize real-time data improves decision-making and task automation.

Privacy, Data Control, and Responsible Use

As AI models become more integrated with sensitive workflows, questions of privacy and responsible data use become paramount. Anthropic has taken several measures to ensure that Claude’s web search feature adheres to robust data governance standards.

Key privacy and control features include:

- Anonymized queries: Web search queries are anonymized and not stored in a way that ties them back to specific users or conversations.

- No retraining from user data: Claude does not use search results or user prompts for further training, which prevents data leakage and preserves user confidentiality.

- Custom domain control: Developers can restrict searches to pre-approved domains, ensuring that only trusted sources are used in generating responses.

- Citation transparency: Each answer that includes externally sourced data is accompanied by citations, enabling verification and promoting intellectual honesty.

These measures are particularly important for applications in regulated industries or public sector environments, where data sovereignty and source verification are critical. By embedding these features, Anthropic makes Claude a viable AI solution not just for general-purpose tasks but also for high-stakes, compliance-sensitive domains.

Empowering Intelligent Workflows

At a higher level, Claude’s real-time web search capability supports the development of intelligent, agent-like workflows that bridge the gap between static automation and adaptive reasoning. Developers can now build agents that:

- Conduct live research,

- Validate outputs before presentation,

- Iterate based on newly acquired data, and

- Provide human-readable justifications for their decisions.

This evolution transforms Claude from a passive model into an active digital co-worker—capable of executing multi-step tasks, supporting complex knowledge work, and adapting to dynamic conditions.
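The four behaviors listed above (research, validate, iterate, justify) can be sketched as a small control loop. The `research` and `validate` callables are hypothetical placeholders for a model call with web search and an application-specific checker; the loop is only meant to show the control flow, not how Anthropic implements agents.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Draft:
    answer: str
    sources: list[str] = field(default_factory=list)


def research_loop(
    question: str,
    research: Callable[[str], Draft],        # hypothetical: model call with web search
    validate: Callable[[Draft], list[str]],  # hypothetical: returns a list of problems
    max_rounds: int = 3,
) -> tuple[Draft, list[str]]:
    """Research, validate, and retry until the draft passes or rounds run out."""
    query = question
    log: list[str] = []  # human-readable record of each round
    draft = Draft(answer="")
    for round_no in range(1, max_rounds + 1):
        draft = research(query)
        problems = validate(draft)
        log.append(f"round {round_no}: {len(draft.sources)} sources, {len(problems)} problems")
        if not problems:
            break
        # Feed the validator's findings into the next research query.
        query = f"{question} (address: {'; '.join(problems)})"
    return draft, log
```

Capping the number of rounds matters in practice because each retry can trigger further billable searches.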

In summary, the rollout of web search functionality in Claude delivers measurable benefits to both end-users and developers. Users enjoy more relevant, reliable, and verifiable responses, while developers gain access to an adaptable, integrated toolset for building AI-enhanced applications. Combined with strong privacy controls and citation mechanisms, Claude’s web search feature sets a new benchmark for trustworthy, real-time AI assistance.

Claude vs. Competitors: A Comparative Analysis

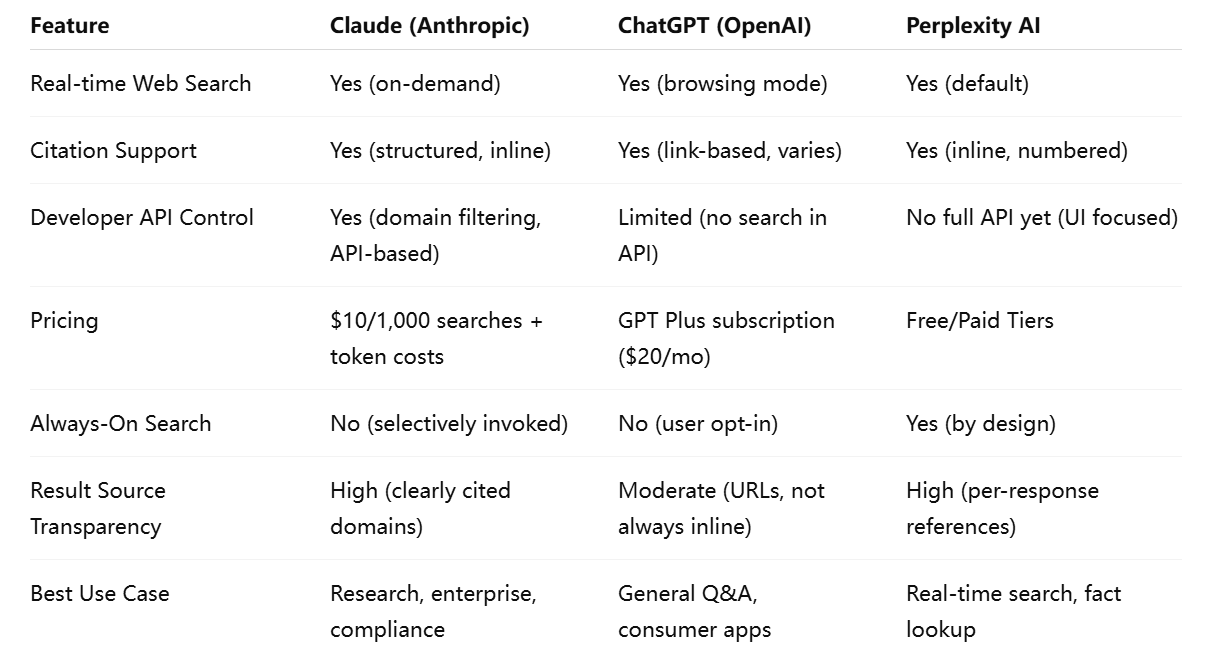

As artificial intelligence rapidly evolves into a central pillar of digital infrastructure, competitive differentiation among large language model (LLM) providers increasingly hinges on value-added features that transcend basic language understanding. Among these, the integration of real-time web search stands out as a vital enhancement that enables LLMs to respond with current, verifiable information. In this section, we undertake a comparative analysis of Anthropic’s Claude with other leading AI models that offer similar search capabilities—most notably OpenAI’s ChatGPT and Perplexity AI. This evaluation examines each model’s strengths, limitations, integration flexibility, citation transparency, and alignment with commercial or academic applications.

The Web Search Paradigm in Modern LLMs

Real-time web search functionality in language models enables dynamic knowledge retrieval, allowing AI systems to access, synthesize, and present up-to-date information far beyond their training cutoffs. Traditionally, LLMs have been bound by the limitations of static datasets, which often lag behind current developments by several months or even years. By incorporating search tools, these models can respond to queries that require live updates—such as market conditions, scientific discoveries, or recent news—with actionable insights grounded in external sources.

This capability reflects a broader transformation in how AI assistants are used. Rather than merely offering generalized advice, modern LLMs are expected to deliver context-aware, real-time, and source-cited answers. Consequently, AI providers have begun to position their models as intelligent web agents—functioning not only as chatbots but as research assistants, investigative companions, and decision-support tools.

Claude’s Web Search Feature: Differentiating Factors

Anthropic’s Claude stands out for its methodical and citation-driven approach to web search. The Claude 3.5 Sonnet and Claude 3.7 Sonnet models are currently equipped with the web search capability via Anthropic’s tool-use API framework. When invoked, Claude performs structured queries across a range of high-quality web sources, extracts key content, and responds with a synthesis of information backed by transparent citations.

Key differentiators include:

- Citations by default: Claude embeds citations automatically, identifying the domain, article title, and hyperlink to the original source. This ensures traceability.

- Developer control: Through domain whitelisting and custom query routing, developers can regulate which websites Claude is permitted to search.

- Privacy safeguards: Queries are anonymized, and user data is not used for model retraining.

- Pricing model: Claude’s search feature costs $10 per 1,000 searches, plus standard token usage—designed for professional environments.

Claude’s architecture emphasizes trust, compliance, and controlled information retrieval, making it especially attractive to enterprises operating in regulated industries.

ChatGPT’s Browsing Mode: Flexibility and Generalization

OpenAI’s ChatGPT, particularly in its GPT-4 variant available through ChatGPT Plus, also offers web browsing functionality. When browsing is enabled, the model performs Google-like searches and synthesizes the content into a human-readable format. The system is widely used for general knowledge updates, news retrieval, and fact-checking.

Strengths of ChatGPT's search integration include:

- Familiar user interface: ChatGPT integrates browsing seamlessly into its native app, making it accessible even to non-technical users.

- Broad retrieval scope: The model can search and summarize data from a wide range of domains, including niche blogs, commercial sites, and media outlets.

- Real-time citations: OpenAI includes URLs at the bottom of the response, although citation quality may vary.

However, ChatGPT’s approach to citation can be less structured than Claude’s. While it provides links to sources, it may not always attribute specific facts to particular sources within the body of the text. Furthermore, developers using ChatGPT via the OpenAI API currently lack direct control over web search integration—browsing remains largely a user-facing feature within the ChatGPT platform.

Perplexity AI: Search-Native Design

Perplexity AI is a product built around search from the ground up. Unlike Claude and ChatGPT, which add browsing as an auxiliary function, Perplexity is inherently designed to operate as an AI-powered search engine. Every query initiates a web search, and responses are constructed using summaries of multiple sources, prominently listed with inline citations.

Distinctive features of Perplexity AI include:

- Inline citation model: Each sentence or paragraph in the response is directly associated with a numbered citation, increasing transparency.

- Always-on search: Because every query triggers a search, the product is optimized for scenarios requiring constant access to updated content.

- Free access: Perplexity’s core functionality is free, with a paid “Pro” tier offering access to larger context models and advanced filters.

While Perplexity excels at real-time fact aggregation, its outputs can be relatively shallow in reasoning depth compared to Claude or GPT-4. It is best suited for quick fact-checking, list generation, and document summarization—rather than complex multistep reasoning or analytical synthesis.

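Below is a comparative overview of web search features across the three models, summarizing the points made in the preceding sections:

| Aspect | Claude | ChatGPT | Perplexity AI |
|---|---|---|---|
| Search trigger | Invoked selectively when the model judges it necessary | Used when browsing is enabled | Every query initiates a search |
| Citation style | Automatic, with title, domain, and link | URLs at the bottom of the response; quality may vary | Numbered inline citations per sentence or paragraph |
| Developer control | Domain restrictions via the API | Browsing is largely a user-facing feature | Not covered in this post |
| Pricing | $10 per 1,000 searches plus token usage | Available through ChatGPT Plus | Free core tier, paid Pro tier |
| Best suited for | Regulated, enterprise use | General-purpose, consumer use | Quick fact-checking and summarization |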

This comparison highlights how each model’s approach is optimized for different use cases. Claude is built for precision, security, and professional reliability; ChatGPT emphasizes broad accessibility and conversational engagement; and Perplexity targets fast-paced, information-centric queries.

Strategic Market Positioning

Claude’s introduction of web search is a calculated move to stay competitive in a market increasingly driven by real-time capability. As users grow accustomed to AI systems that “know the latest,” models without browsing quickly fall behind in both perceived and actual utility. Anthropic’s strategy to roll out web search via API—paired with its existing focus on model alignment and interpretability—positions Claude as a formidable choice for organizations seeking responsible AI integration.

In contrast, OpenAI continues to dominate the consumer-facing AI space through the ChatGPT product ecosystem, offering a blend of entertainment, assistance, and light research. However, its limited developer access to web search hampers innovation in third-party use cases. Meanwhile, Perplexity AI’s specialization in search-centric design has earned it a unique niche, particularly among users prioritizing fast answers and high citation granularity.

As enterprise adoption of AI matures, the competitive advantage will increasingly hinge on customization, citation fidelity, domain control, and real-time intelligence—all areas where Claude is now better positioned due to its modular web search architecture.

In summary, Claude’s new web search capability significantly enhances its standing among AI models with real-time retrieval features. While OpenAI’s ChatGPT offers seamless integration for consumers and Perplexity delivers fast, densely cited answers, Claude distinguishes itself through its developer flexibility, structured sourcing, and alignment with enterprise priorities. These distinctions will play a critical role as organizations assess which models to deploy for decision-critical applications in law, finance, healthcare, and knowledge management.

Future Prospects and Conclusion

The launch of web search capabilities in Anthropic's Claude models marks a pivotal evolution in the development of large language models (LLMs), setting a new standard for real-time knowledge access, verifiability, and enterprise readiness. As Claude transitions from a static assistant to a dynamic, context-aware tool capable of retrieving and citing current information, the implications for both user experience and broader industry trajectories are substantial. This section examines the forward-looking prospects of Claude’s development roadmap, anticipated expansion of features and accessibility, and the strategic influence of this advancement on the AI ecosystem as a whole.

Expanding the Scope of Access

One of the most immediate areas of anticipated growth for Claude’s web search feature involves increasing availability across geographies, user tiers, and deployment environments. At present, web search is enabled for Claude 3.5 Sonnet and Claude 3.7 Sonnet through API access and enterprise offerings. However, Anthropic has signaled plans to roll out the feature to a broader set of users—including those on its web-based Claude platform and, eventually, free-tier customers.

Such a move would democratize access to real-time information via Claude, enabling individuals, students, small businesses, and civic organizations to benefit from credible, up-to-date insights. In an era where disinformation is prevalent and trust in search engines is eroding, making a citation-first model available to the public has the potential to significantly elevate public discourse, digital literacy, and informed decision-making.

Furthermore, expanding integration into Claude’s lighter-weight models, such as Claude Haiku, could allow the feature to serve mobile and low-latency environments more effectively. This flexibility is vital as LLMs move beyond desktops and enterprise systems to power consumer apps, IoT interfaces, and wearable devices.

Multimodal Search and Next-Generation Interactions

Another compelling frontier lies in extending Claude’s web search functionality into multimodal interactions. As LLMs increasingly process and generate not just text but also images, audio, and video, real-time search capabilities could be adapted to retrieve multimedia content. For instance, future iterations of Claude may be able to pull and cite relevant images, graphs, PDFs, or videos in response to user queries, greatly enriching the depth and format of AI-generated responses.

Such enhancements could enable more interactive and pedagogical experiences, particularly in education, design, and research. Imagine a user asking Claude for the latest IPCC climate report figures and receiving a summary accompanied by charts and downloadable visual references. This vision of AI-powered, multimedia-enriched knowledge assistants aligns with the growing demand for information delivery that matches varied learning styles and professional needs.

Moreover, the convergence of search and dialogue will allow Claude to act not merely as a search engine proxy but as a personalized knowledge navigator. Instead of receiving a list of links or even a static synthesis, users will engage in an iterative dialogue with the model, refining the scope and depth of information with natural language prompts. This direction signals a shift from information retrieval to knowledge orchestration.

Enterprise and Vertical Market Integration

From a commercial perspective, Claude’s web search feature is poised to accelerate adoption in vertical markets that require domain-specific intelligence delivered in real time. Industries such as legal services, pharmaceuticals, government administration, and journalism present complex information environments where data must be timely, accurate, and source-traceable.

Claude’s built-in support for citation, combined with its ability to restrict search to pre-approved domains, makes it well-suited to operate within regulated frameworks. As enterprises increasingly seek AI systems that can be audited and aligned with governance protocols, Claude’s approach offers a compelling proposition.
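As a rough illustration of the domain restriction described above, the following Python sketch builds a request payload whose web search tool is limited to an allowlist. The field names (`type`, `name`, `max_uses`, `allowed_domains`) reflect the web search tool documentation listed in the references as understood at the time of writing and should be checked against the current docs. The helper functions, the model alias, and the example domains are assumptions made for illustration only. The sketch only constructs the payload and does not call the API.

```python
import json


def build_web_search_tool(allowed_domains, max_uses=5):
    """Return a web search tool definition restricted to an allowlist.

    Hypothetical helper: the dictionary keys follow the documented tool
    shape as understood here; verify them before relying on this.
    """
    cleaned = []
    for domain in allowed_domains:
        d = domain.strip().lower()
        # Drop a URL scheme if one was pasted in; keep the bare host.
        for prefix in ("https://", "http://"):
            if d.startswith(prefix):
                d = d[len(prefix):]
        d = d.rstrip("/")
        # De-duplicate while preserving the original order.
        if d and d not in cleaned:
            cleaned.append(d)
    if not cleaned:
        raise ValueError("allowed_domains must contain at least one domain")
    return {
        "type": "web_search_20250305",
        "name": "web_search",
        "max_uses": max_uses,
        "allowed_domains": cleaned,
    }


def build_request(question, tool, model="claude-3-7-sonnet-latest"):
    """Assemble a Messages-style request body (model alias is an assumption)."""
    return {
        "model": model,
        "max_tokens": 1024,
        "messages": [{"role": "user", "content": question}],
        "tools": [tool],
    }


# Example allowlist for a compliance team (domains chosen for illustration).
tool = build_web_search_tool(["https://www.sec.gov/", "europa.eu", "Europa.eu"])
request = build_request("Summarize this week's regulatory updates.", tool)
print(json.dumps(request, indent=2))
```

Keeping the allowlist in one helper means a governance team can review and version the approved domains separately from the prompts that use them.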

Furthermore, Anthropic’s pricing model, which charges $10 per 1,000 searches on top of standard token costs, supports predictable cost modeling and enterprise scalability. If telemetry, usage tracking, and analytics APIs are added, organizations would be able to fine-tune performance, optimize cost-efficiency, and ensure compliance with internal policies.
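To make the cost structure concrete, here is a minimal Python sketch of a monthly estimate that adds a per-1,000-search charge to per-token charges. The `Pricing` class, the function name, and every rate and volume in the example are illustrative placeholders, not quoted prices. Substitute the figures from the current pricing page before using it for budgeting.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical rate card; all values are supplied by the caller."""
    usd_per_1000_searches: float
    usd_per_million_input_tokens: float
    usd_per_million_output_tokens: float


def estimate_monthly_cost(pricing, searches, input_tokens, output_tokens):
    """Return the estimated monthly spend in USD, rounded to cents."""
    search_cost = searches / 1000 * pricing.usd_per_1000_searches
    token_cost = (
        input_tokens / 1_000_000 * pricing.usd_per_million_input_tokens
        + output_tokens / 1_000_000 * pricing.usd_per_million_output_tokens
    )
    return round(search_cost + token_cost, 2)


# Placeholder rates and volumes, chosen only to make the arithmetic easy.
example = Pricing(
    usd_per_1000_searches=10.0,
    usd_per_million_input_tokens=3.0,
    usd_per_million_output_tokens=15.0,
)
cost = estimate_monthly_cost(
    example,
    searches=20_000,
    input_tokens=50_000_000,
    output_tokens=5_000_000,
)
print(cost)  # 200 (search) + 150 (input) + 75 (output) = 425.0
```

Because search results are returned to the model as additional input, a realistic estimate should assume that input token volume grows with the number of searches rather than treating the two as independent.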

Looking ahead, Claude’s capabilities could be deeply embedded within customer relationship management (CRM) systems, enterprise resource planning (ERP) software, and legal research platforms—redefining workflows through intelligent augmentation of search and synthesis.

Ethical AI, Source Integrity, and Societal Impacts

Claude’s emphasis on transparency and citation also highlights a broader philosophical stance on the ethical use of AI. As concerns mount over AI hallucinations, opaque algorithms, and the potential for misinformation, Claude’s structured sourcing and user-verifiable references position it as a model aligned with public accountability and information integrity.

This orientation has profound implications not only for professional adoption but also for society at large. When deployed in educational, governmental, or journalistic settings, Claude’s commitment to sourcing can foster greater trust in AI as a partner in public discourse. This stands in contrast to generative systems that may offer fluent but unverifiable responses—posing risks in high-stakes domains.

In this regard, Claude is not merely a technical product but a philosophical one, articulating a vision of AI that respects epistemic rigor and user agency. As LLMs become embedded in public infrastructure and daily decision-making, models that combine power with accountability will be essential in shaping responsible AI governance frameworks globally.

Anthropic’s Strategic Position and the Future of AI Search

With the release of web search for Claude, Anthropic has strengthened its strategic position in a highly competitive landscape dominated by titans like OpenAI, Google, and Meta. While each of these firms brings distinct advantages—OpenAI in model performance and interface design, Google in indexing and search, and Meta in open-source innovation—Anthropic has carved out a differentiated niche focused on alignment, control, and transparent knowledge delivery.

This approach is increasingly resonating with enterprise buyers, developers, and institutional partners who seek AI systems that are not only capable but also governable and trustworthy. As AI becomes further embedded into critical infrastructure, the ability to deliver current information with traceability may become the default expectation, not the exception.

In the long term, we can expect Claude to expand its capabilities in areas such as:

- Personalization: Tuning search behavior based on user preferences or organizational context.

- Federated knowledge systems: Combining real-time web data with private knowledge bases in hybrid deployments.

- Autonomous agents: Enabling Claude to act proactively, conducting scheduled research, monitoring data feeds, or validating documents.

In parallel, Anthropic is likely to face increasing pressure to integrate with more front-end applications, productivity tools, and collaboration platforms. Seamless user experience across interfaces—from chat to voice to embedded agents—will be essential in scaling adoption and staying competitive.

Conclusion

The introduction of real-time web search to Claude represents a transformative advancement in the evolution of language models. By integrating current knowledge retrieval, structured citation, and developer-level controls, Anthropic has expanded Claude’s functionality from a static assistant to a dynamic, real-time intelligence engine.

This capability offers tremendous value to users seeking up-to-date, reliable information and to developers building the next generation of AI applications. Its impact spans a wide array of domains—from research and education to finance and law—enhancing the model’s ability to serve as a trusted, responsive, and explainable tool.

In a competitive field where real-time context and factual accuracy are increasingly vital, Claude’s web search feature not only narrows the gap with leading alternatives but in many respects sets a new benchmark for responsible AI design. As Anthropic continues to refine and extend these capabilities, Claude is poised to play a central role in shaping the next era of intelligent systems—one in which transparency, timeliness, and trust are no longer optional, but foundational.

References

- Anthropic Official Announcement: https://www.anthropic.com/news/web-search-api

- Claude Web Search Tool Documentation: https://docs.anthropic.com/en/docs/build-with-claude/tool-use/web-search-tool

- Anthropic Claude 3 Model Overview: https://www.anthropic.com/index/claude-3

- Claude API Pricing Details: https://docs.anthropic.com/claude/docs/claude-api#pricing

- TurtlesAI Coverage on Claude Web Search: https://www.turtlesai.com/en/pages-2784/anthropic-launches-web-search-api-for-claude

- Perplexity AI Features: https://www.perplexity.ai

- OpenAI ChatGPT Plus Info: https://openai.com/chatgpt

- Claude Web Search Use Case Examples: https://docs.anthropic.com/en/docs/tool-use-overview

- Claude for Enterprise Developers: https://docs.anthropic.com/claude/docs

- Anthropic’s Vision on Safe and Steerable AI: https://www.anthropic.com/safety