Amazon’s AI-Powered Audio Summaries – A New Era in E-Commerce UX

Amazon has once again demonstrated its prowess in redefining the e-commerce experience by rolling out a new feature that leverages artificial intelligence (AI) to generate short-form audio summaries of product listings. Initially introduced for a select range of items, this innovation aims to enhance accessibility, reduce cognitive load during product discovery, and respond to evolving consumer preferences for hands-free, auditory content. At its core, this development reflects a broader industry trend—the convergence of AI, voice interfaces, and digital commerce.

The announcement arrives at a pivotal moment for Amazon, whose massive ecosystem is increasingly influenced by emerging technologies in natural language processing and multimodal interfaces. By turning product descriptions into succinct spoken-word summaries, Amazon is positioning itself to better serve mobile-first users, those with visual impairments, and time-constrained shoppers seeking faster, more intuitive browsing. In a digital landscape saturated with textual overload and screen fatigue, this innovation signifies an important shift toward low-friction retail experiences driven by AI-generated auditory content.

The Evolution Toward Multimodal E-Commerce Experiences

Over the past decade, the digital shopping journey has evolved from static catalog browsing into an immersive, dynamic, and hyper-personalized experience. With innovations in AI, machine learning, computer vision, and speech recognition, e-commerce platforms are no longer constrained by two-dimensional visuals and long-form copy. Amazon’s AI-powered audio summaries can be understood as part of this ongoing transformation—a shift away from screen dependency and toward multisensory commerce.

The rise of voice commerce (purchasing products through voice commands on devices like Amazon Echo) has set the stage for this type of innovation. By some market research estimates, global voice shopping could surpass $80 billion in sales by 2026, with a significant portion of that growth driven by AI enhancements. Yet, until recently, most voice-driven interactions were limited to placing reorders, checking order statuses, or asking Alexa for deals. Amazon's new audio summary feature moves voice further upstream in the buying journey, into the product discovery phase.

Why Audio? Why Now?

The rollout of audio summaries aligns with three converging trends in consumer behavior and technological advancement:

- Accessibility and Inclusion: Millions of users worldwide have some form of visual impairment or cognitive disability. Audio summaries offer a more inclusive shopping interface, reducing reliance on screen readers or complex navigation flows.

- Time-Efficiency and Convenience: In a world marked by multitasking and rapid consumption habits, consumers increasingly seek ways to interact with content while driving, cooking, commuting, or exercising. Listening to product overviews provides a streamlined way to process information without visual commitment.

- Shifting Content Preferences: Just as podcasts and audiobooks have gained tremendous popularity, consumers are warming up to audio-first UX. Younger generations, particularly Gen Z and millennials, show a strong affinity for snackable, voice-driven content formats.

From a competitive standpoint, Amazon's adoption of this technology can be seen as a pre-emptive maneuver to cement its leadership in e-commerce as other tech-savvy retailers experiment with similar features. As AI voice synthesis becomes more human-like and less robotic, its use in mainstream commerce will only accelerate.

AI-Powered Summaries: The Technology Backbone

At the heart of Amazon’s innovation lies its growing investment in generative AI and speech synthesis models. The company is likely using one of its own large language models (LLMs), perhaps related to the models behind Alexa or those offered through Amazon Bedrock, to extract key insights from product pages and deliver them as succinct, conversational summaries. These summaries are then presumably converted into audio using Amazon Polly or another internal neural text-to-speech system.

The summarization process likely involves ranking key product features, consumer ratings, and unique selling points before compressing them into a form that is both informative and engaging in under 30 seconds. For users, the result is a seamless listening experience that mirrors a podcast teaser or a quick audio brief. For Amazon, it’s a demonstration of vertical integration—controlling the full AI pipeline from data ingestion to natural language generation to audio rendering.
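To make the 30-second limit concrete, the sketch below converts a time budget into a word budget and greedily keeps the highest-ranked points that fit. It assumes a speaking rate of about 150 words per minute (a typical conversational pace, not a figure Amazon has published) and assumes each candidate point already carries a relevance score from some upstream ranking step; `word_budget` and `fit_ranked_points` are hypothetical names.

```python
# Assumed speaking rate: ~150 words per minute is a common conversational pace.
# The real rate depends on the chosen voice and any SSML prosody settings.
WORDS_PER_MINUTE = 150


def word_budget(max_seconds: float, wpm: int = WORDS_PER_MINUTE) -> int:
    """Approximate number of words that can be spoken within max_seconds."""
    return int(max_seconds * wpm / 60)


def fit_ranked_points(points: list[tuple[float, str]], max_seconds: float) -> list[str]:
    """Greedily keep the highest-scoring points whose combined length fits the time budget.

    `points` is a list of (relevance_score, text) pairs from a hypothetical ranking step.
    """
    budget = word_budget(max_seconds)
    kept: list[str] = []
    used = 0
    for _score, text in sorted(points, key=lambda p: p[0], reverse=True):
        n_words = len(text.split())
        if used + n_words <= budget:  # skip points that would overflow, try shorter ones
            kept.append(text)
            used += n_words
    return kept
```

At 150 words per minute, 30 seconds allows roughly 75 words, which is only a handful of short feature statements.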

Beyond Amazon: A Glimpse into the Future of Retail UX

This development, while currently limited to select products, carries vast implications. It paves the way for a future where product pages are listened to rather than read, and where AI voice agents become our personalized shopping assistants. It also reflects the broader adoption of AI-generated media in marketing and commerce, from automated product videos to chatbot-driven recommendations and voice-guided customer service.

More importantly, it prompts a key question for retailers, developers, and UX designers alike: How do we design interfaces that are not just accessible but adaptive to real-life contexts and cognitive styles? For some, audio summaries may become the preferred way to browse. For others, they may serve as a complement to traditional text and image formats. Either way, the future is clearly moving toward personalized, multimodal retail storytelling.

Setting the Stage for Deeper Analysis

In the sections that follow, we will delve into the mechanics of this audio summary feature, explore the strategic rationale behind Amazon’s move, evaluate its potential influence on the broader industry, and assess the ethical and operational challenges that accompany AI-generated content. From customer impact to competitive implications, Amazon’s latest rollout offers a rich case study in how emerging technologies continue to reshape the foundations of digital commerce.

Inside Amazon’s Innovation: How the Audio Summary Feature Works

Amazon’s recent deployment of AI-generated audio product summaries represents a compelling integration of machine learning, natural language processing, and synthetic voice technologies. While the feature might appear simple on the surface—an audio playback button on a product page—its architecture is underpinned by a complex orchestration of technologies designed to enhance the user experience at scale. This section unpacks the technological backbone of the feature, its operational workflows, and the broader systems integration that makes real-time or near-real-time audio summarization possible for millions of listings.

The Technical Workflow Behind Audio Summaries

The process likely begins with data extraction, in which Amazon’s system pulls structured and unstructured information from a product’s detail page: the title, bullet points, full product description, customer reviews, FAQs, and manufacturer specifications. This corpus becomes the input for a large language model (LLM), possibly one available through Amazon Bedrock or a customized variant of Alexa’s underlying model.
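As a rough illustration of this extraction step, the following sketch flattens a product page into a single prompt string for a summarization model. The `ProductPage` schema, the prompt wording, and the caps on review count and description length are all assumptions made for illustration; Amazon's actual catalog fields and prompts are not public.

```python
from dataclasses import dataclass, field


@dataclass
class ProductPage:
    # Hypothetical schema; the real catalog fields are not public.
    title: str
    bullets: list[str]
    description: str
    reviews: list[str] = field(default_factory=list)
    specs: dict[str, str] = field(default_factory=dict)


def build_summary_prompt(page: ProductPage, max_reviews: int = 5, max_desc_chars: int = 1500) -> str:
    """Flatten structured and unstructured fields into one prompt string for an LLM."""
    spec_lines = [f"- {name}: {value}" for name, value in sorted(page.specs.items())]
    parts = [
        "Summarize this product in a neutral tone for a spoken audio clip under 30 seconds.",
        f"Title: {page.title}",
        "Key features:\n" + "\n".join(f"- {b}" for b in page.bullets),
        "Specifications:\n" + "\n".join(spec_lines),
        # Truncate long fields so one verbose section cannot crowd out the rest.
        "Description: " + page.description[:max_desc_chars],
        "Sample reviews:\n" + "\n".join(f"- {r}" for r in page.reviews[:max_reviews]),
    ]
    return "\n\n".join(parts)
```

Capping the number of reviews and the description length keeps the input within a model's context window and prevents one field from dominating the summary.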

The LLM is presumably tuned and prompted to generate concise, informative, and neutral summaries that capture the essence of the product. Key product features, differentiators, and user sentiment are distilled into a short script, typically designed to be spoken within 15 to 30 seconds. The generated text then likely passes through a quality assurance pipeline, which may include model-based filters (possibly trained with reinforcement learning), rule-based validation, and sentiment controls to reduce bias and misinformation.

Once the text summary is finalized, it is sent to a text-to-speech (TTS) engine, most likely Amazon Polly’s neural TTS. Polly produces lifelike voices in many languages and regional variants, and some of its voices support alternative speaking styles such as a newscaster style. The resulting audio file is presumably compressed and cached for delivery via Amazon’s global content delivery network (CDN), keeping playback latency low across devices and regions.
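The TTS and caching steps could look roughly like the sketch below. `synthesize_summary` uses the real `synthesize_speech` operation of a boto3 Polly client (`Text`, `OutputFormat`, `VoiceId`, and `Engine` are genuine parameters, and `Joanna` is an existing neural voice), but the content-addressed `cache_key` scheme and the dictionary standing in for CDN-backed storage are hypothetical. The client is passed in rather than created inside the function so the logic can be exercised without AWS credentials.

```python
import hashlib


def cache_key(summary_text: str, voice_id: str, engine: str = "neural") -> str:
    """Content-addressed key (hypothetical scheme): changes whenever text, voice, or engine change."""
    digest = hashlib.sha256(f"{engine}|{voice_id}|{summary_text}".encode("utf-8")).hexdigest()
    return f"audio-summaries/{digest}.mp3"


def synthesize_summary(polly_client, summary_text: str, voice_id: str = "Joanna") -> bytes:
    """Render plain text to MP3 bytes with Amazon Polly's neural engine.

    `polly_client` is expected to be a boto3 client created with boto3.client("polly").
    """
    response = polly_client.synthesize_speech(
        Text=summary_text,
        OutputFormat="mp3",
        VoiceId=voice_id,
        Engine="neural",
    )
    return response["AudioStream"].read()  # AudioStream is a streaming body


def get_or_create_audio(store: dict, polly_client, summary_text: str, voice_id: str = "Joanna") -> bytes:
    """Return cached audio if present; otherwise synthesize once and cache it.

    `store` is a plain dict standing in for real object storage behind a CDN.
    """
    key = cache_key(summary_text, voice_id)
    if key not in store:
        store[key] = synthesize_summary(polly_client, summary_text, voice_id)
    return store[key]
```

Because the key is derived from the summary text and voice, an unchanged listing reuses its existing clip, while any edit to the summary produces a new key instead of serving stale audio.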

Real-Time vs. Pre-Generated Summaries

A key architectural decision in deploying this feature revolves around whether the summaries are generated in real-time or pre-generated and stored for future access. Given the computational cost and the scale of Amazon’s product catalog, the company appears to be using a hybrid approach.

- Pre-generated summaries are likely applied to high-traffic items and top-selling SKUs. These summaries are updated periodically based on changes to product listings or the addition of new review data.

- Real-time summaries, on the other hand, may be reserved for newer listings or less trafficked items, where automation allows Amazon to offer a feature without incurring unnecessary storage costs.

This hybrid model enables Amazon to optimize both resource efficiency and user experience, tailoring the implementation based on usage patterns, product priority, and category relevance.
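A minimal sketch of the routing decision in such a hybrid model follows. The traffic threshold, the seven-day age cutoff, and the hash comparison used to detect listing changes are illustrative assumptions, not known details of Amazon's system.

```python
from enum import Enum


class Strategy(Enum):
    PRE_GENERATED = "pre_generated"  # batch job renders audio ahead of time
    ON_DEMAND = "on_demand"          # render on first request, then cache


# Hypothetical thresholds; a real system would tune these from traffic and cost data.
DAILY_VIEWS_THRESHOLD = 1_000
MIN_LISTING_AGE_DAYS = 7


def choose_strategy(daily_views: int, listing_age_days: int) -> Strategy:
    """Pre-generate for established, high-traffic listings; generate lazily for everything else."""
    if daily_views >= DAILY_VIEWS_THRESHOLD and listing_age_days >= MIN_LISTING_AGE_DAYS:
        return Strategy.PRE_GENERATED
    return Strategy.ON_DEMAND


def needs_refresh(stored_source_hash: str, current_source_hash: str) -> bool:
    """Regenerate a stored summary when a hash of the listing text and review snapshot changes."""
    return stored_source_hash != current_source_hash
```

New listings fall through to on-demand generation even if they spike in traffic, which matches the idea that fresh or low-traffic items are served in real time until their content settles.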

Deployment Scope and Product Selection Criteria

At launch, Amazon restricted the rollout of audio summaries to select categories, including consumer electronics, household goods, and health and wellness products. These categories were likely chosen for three reasons:

- High Consumer Research Intensity: These products often have complex specifications that users need to understand quickly.

- High Review Volume: The abundance of user-generated content allows the AI model to detect common sentiment trends and concerns.

- Competitive Differentiation: These segments are saturated, and a unique audio summary can influence purchase decisions through improved accessibility and engagement.

Eventually, Amazon is expected to extend this capability to fashion, books, tools, and other verticals where consumers benefit from multimodal content delivery.

Device Integration and UX Touchpoints

The integration of audio summaries is designed to be seamless across devices. On mobile apps, a small speaker icon appears near the top of select product pages, which, when tapped, plays the short summary. On smart speakers and Alexa-enabled devices, users can request summaries through voice commands such as “Alexa, what’s this product about?” or “Read me a summary.”

Amazon’s infrastructure could also support personalization, making summaries context-aware. For instance, if a user has a history of purchasing sustainable products, the audio summary might prioritize environmental benefits where available. Similarly, for frequent tech buyers, technical specifications could be highlighted more prominently in the narration.
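One conservative way to implement this kind of personalization is to reorder, rather than add or remove, the points in a summary. The sketch below boosts features whose tags overlap with a user's inferred interests; the feature schema, the tag names, and the `boost` value are hypothetical.

```python
def personalize_order(features: list[dict], user_interests: set[str], boost: float = 0.5) -> list[dict]:
    """Re-rank summary points, boosting those tagged with topics the user cares about.

    Each feature is a dict like {"text": str, "score": float, "tags": set[str]}
    (hypothetical schema). Points are only reordered, never added or removed.
    """
    def personalized_score(feature: dict) -> float:
        overlaps = bool(feature["tags"] & user_interests)
        return feature["score"] + (boost if overlaps else 0.0)

    return sorted(features, key=personalized_score, reverse=True)
```

Limiting personalization to ordering means every user hears the same set of facts, which keeps personalized narration compatible with the neutrality goals described below.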

Natural Language Generation and Tone Calibration

One of the more nuanced challenges in generating audio summaries is achieving the right tone and register. Unlike static product descriptions, audio content is inherently conversational and subject to interpretive nuance. Amazon’s LLMs are therefore calibrated to maintain:

- Neutrality: Avoiding subjective adjectives like “best” or “perfect,” unless sourced directly from verified user reviews.

- Conciseness: Adhering to time constraints while preserving key product details.

- Relevance: Prioritizing what consumers care most about—size, use-case, warranty, price-performance ratio—based on clickstream and review mining.

To accomplish this, Amazon’s summarization engine likely combines extractive and abstractive summarization techniques. Extractive methods select the most important sentences or phrases from the source text verbatim, while abstractive models rewrite the content in new wording that sounds more natural when spoken aloud.
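For readers unfamiliar with the extractive side, here is a minimal frequency-based extractive summarizer, a classic textbook technique rather than Amazon's method: each sentence is scored by the average frequency of its non-stopword terms across the whole text, and the top `k` sentences are returned verbatim in their original order. The tiny stopword list is an assumption for brevity.

```python
import re
from collections import Counter

# Deliberately tiny stopword list to keep the example short.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "is", "it", "for",
             "with", "this", "on", "that", "are", "be", "was", "has"}


def _content_words(text: str) -> list[str]:
    return [w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, k: int = 2) -> list[str]:
    """Return the k highest-scoring sentences, copied verbatim, in their original order."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(_content_words(text))

    def score(sentence: str) -> float:
        words = _content_words(sentence)
        # Average frequency, so long sentences are not favoured just for being long.
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    top = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]
```

An abstractive model would instead take sentences like these as input and rewrite them into new, more speakable phrasing, which is also where the risk of introducing unsupported claims arises.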

Voice Selection and Personalization

Amazon Polly offers a diverse set of voices with varying emotional tones, gender attributes, and language support. For the current implementation, Amazon is standardizing voice choices to ensure consistency and reliability. However, in the near future, users might be allowed to select preferred voices (e.g., male/female, soothing/energetic) or even regional accents for greater cultural relevance.

This could open doors to localized commerce experiences, where a customer in India might hear a summary in Indian English with region-specific idioms, while a user in the UK might hear a British English voice with a different tone altogether.

Quality Control and Error Handling

To safeguard against hallucinations, incorrect claims, or biased interpretations, Amazon likely employs a multi-layered validation pipeline that includes:

- Automated content checks using predefined guardrails.

- Feedback collection mechanisms for users to flag inaccurate summaries.

- A/B testing to compare engagement rates between audio and non-audio users, enabling continuous optimization.

This emphasis on governance is intended to keep the automated process trustworthy and consumer-centric.
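Two of the simplest automated guardrails follow directly from the neutrality rule described earlier: flag superlatives such as "best" or "perfect" unless they also appear in review text, and flag any number in the summary that does not appear in the source listing, a cheap way to catch invented specifications. The word list and both rules are illustrative assumptions; a production pipeline would layer many more checks on top.

```python
import re

# Illustrative, not exhaustive, list of words the neutrality rule would flag.
SUPERLATIVES = {"best", "perfect", "ultimate", "unbeatable", "flawless"}
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def guardrail_issues(summary: str, source_text: str, review_text: str) -> list[str]:
    """Return human-readable problems; an empty list means these checks passed."""
    issues = []
    review_words = set(re.findall(r"[a-z]+", review_text.lower()))
    for word in re.findall(r"[a-z]+", summary.lower()):
        if word in SUPERLATIVES and word not in review_words:
            issues.append(f"unsupported superlative: {word}")
    # Every number spoken aloud should also appear in the listing itself.
    source_numbers = set(NUMBER.findall(source_text))
    for number in NUMBER.findall(summary):
        if number not in source_numbers:
            issues.append(f"number not found in source: {number}")
    return issues
```

Comparing whole tokens rather than substrings avoids false passes such as "best" matching "bestseller" or "3" matching "30".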

Strategic Goals and Business Impact for Amazon

Amazon’s introduction of AI-powered audio summaries is not a mere enhancement of user experience—it is a strategically calibrated initiative aligned with the company’s long-term objectives in customer retention, technological leadership, and operational efficiency. As competition in the e-commerce sector intensifies and consumer expectations evolve, Amazon is under growing pressure to differentiate itself not only through product variety and delivery speed, but through intelligent, multimodal user interfaces. This section explores the strategic rationale behind the rollout, the expected business impact across key performance indicators (KPIs), and how this innovation reinforces Amazon’s broader ecosystemic advantages.

Enhancing Engagement through Low-Friction Interactions

One of Amazon’s core strategic priorities has long been to minimize friction in the consumer journey. From the introduction of one-click purchasing to cashierless Amazon Go stores, the company continually invests in reducing cognitive and logistical barriers. AI-generated audio summaries represent the next evolution in this agenda. They allow users to consume product information passively, reducing the need to scroll, skim, or interpret lengthy descriptions—an especially valuable benefit in a mobile-first shopping landscape.

The shift from visual to auditory content also aligns with Amazon’s expanding footprint in voice commerce, particularly through Alexa-enabled devices. By extending the shopping experience into the auditory realm, Amazon can increase engagement duration, stimulate product discovery, and encourage impulsive decision-making, especially in high-frequency, low-cost categories.

Expanding Accessibility to Unlock New Markets

Another strategic goal underpinning this feature is accessibility expansion. Globally, over 2.2 billion people live with some form of vision impairment, according to the World Health Organization. Traditional e-commerce interfaces, with their dense textual formats and small screen real estate, create barriers to entry for these users.

By integrating audio summaries into the browsing experience, Amazon is effectively removing a key friction point for millions of potential shoppers. Moreover, this functionality enhances usability for consumers with dyslexia, cognitive impairments, or those who simply prefer audio-first consumption patterns—a trend gaining momentum with the rise of audiobooks, podcasts, and smart home ecosystems.

Driving Conversion Rates with Personalized Summarization

The deployment of audio summaries also serves a direct conversion optimization function. Some behavioral research suggests that people can find spoken information more engaging and easier to absorb than dense text. A neutral yet informative audio summary could therefore reduce decision fatigue, clarify product value propositions, and accelerate the path to purchase.

In Amazon’s environment, where marginal gains in conversion rates translate into substantial revenue increases due to scale, this innovation could deliver high returns. If early A/B tests bear out this hypothesis, pages with audio summaries would be expected to show:

- Higher add-to-cart rates

- Increased time-on-page duration

- Greater review engagement

- Lower return rates due to improved expectation alignment
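If such A/B tests were run, a standard way to judge whether a difference in add-to-cart rate is more than noise is a pooled two-proportion z-test, sketched below with only the Python standard library. The function name and the example counts are hypothetical, and Amazon's actual experimentation methodology is not public.

```python
from math import erf, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Compare conversion rates of control (a) and audio-summary (b) pages.

    Returns (z, two_sided_p) using the pooled normal approximation.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via erf: Phi(x) = 0.5 * (1 + erf(x / sqrt(2)))
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value
```

With 1,000 add-to-carts out of 10,000 control visits versus 1,100 out of 10,000 audio-enabled visits, the test gives z of about 2.3 and a two-sided p-value of about 0.02. When several KPIs are evaluated at once, a multiple-comparison correction would also be needed.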

Amazon is likely to integrate machine learning feedback loops to continually refine how audio summaries impact these KPIs, allowing real-time adjustments that drive business impact.

Leveraging AI to Reduce Operational Overhead

Beyond consumer-facing benefits, audio summaries also have implications for cost reduction and operational efficiency. Amazon traditionally employs large content teams, third-party vendors, and support staff to curate and manage product listings across its vast inventory. These efforts include editing bullet points, generating A+ content, and translating listings across geographies.

The automation of summary generation reduces the need for manual content moderation and creation, particularly for standardized product types. Over time, AI-generated summaries could supplant human-generated ones for low-margin items or products with abundant structured metadata, freeing up resources for higher-impact content strategy.

Additionally, by compressing key product features into audio format, Amazon may also reduce customer service inquiries, particularly “pre-purchase questions” related to compatibility, features, or specifications. This can yield measurable reductions in call center volume and chatbot usage.

Strengthening Platform Stickiness and Ecosystem Lock-In

Another strategic dimension is the feature’s potential to reinforce Amazon’s platform lock-in strategy. Audio summaries are designed to work seamlessly across Amazon’s native apps, website, and Alexa-enabled devices. By embedding this functionality into proprietary endpoints, Amazon creates feature differentiation that is not easily replicable by third-party sellers, resellers, or competing platforms.

This differentiation is especially potent in categories where trust and clarity are paramount—such as electronics, medical supplies, or home improvement tools. Consumers may gravitate toward Amazon not only for competitive pricing, but for the confidence-building utility of high-quality, AI-narrated product overviews.

Furthermore, as more sellers participate in the program, Amazon gains a stronger hold over brand messaging and listing formats, potentially standardizing how information is consumed in its ecosystem.

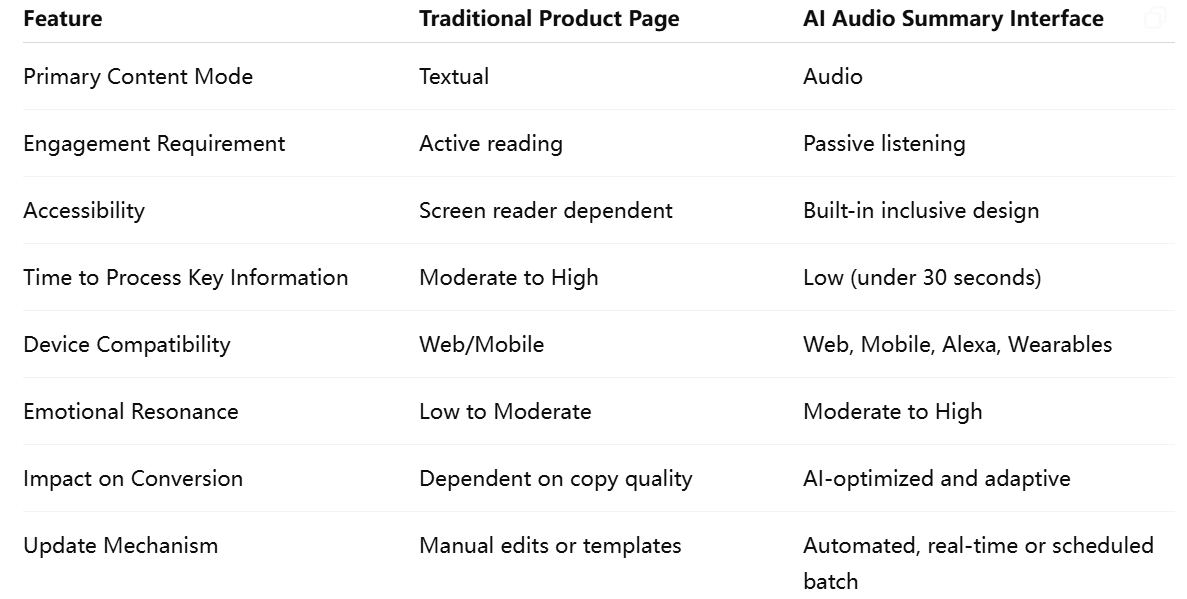

Below is a comparative overview of traditional product pages versus the new audio summary-enabled interface:

This table illustrates how audio summaries enhance usability, especially for users with accessibility needs and mobile shoppers, while simultaneously offering Amazon operational leverage and brand consistency.

Anticipated Competitive Advantage

As e-commerce platforms increasingly look toward immersive and adaptive interfaces, Amazon’s first-mover advantage in AI audio summaries positions it at the frontier of next-generation user experience. While companies like Shopify, Walmart, and Alibaba are experimenting with AI-generated product content, few have integrated voice into the core shopping interface with the depth and device ubiquity Amazon commands.

This innovation is not only a customer engagement tool—it is also a competitive moat. If successfully scaled and embraced by users, audio summaries could become a baseline expectation in e-commerce UX, much like one-click checkout or free returns. In that case, Amazon's early investments will yield a defensible edge that is hard to match.

Preparing for Future Monetization Pathways

Although not currently monetized, the audio layer opens a new frontier in retail media innovation. Amazon could eventually offer sponsored audio placements, brand-personalized voices, or dynamically tailored upsell prompts integrated into summaries. Such formats would add yet another revenue stream to Amazon’s booming advertising division, whose annual revenue now exceeds $40 billion.

With personalization engines and contextual analytics already embedded into Amazon’s infrastructure, the company could, for example, vary audio summaries based on a user’s browsing history or geographic location, further enhancing ad-targeting precision.

Industry Implications: E-Commerce, Retail Media, and AI Convergence

Amazon’s deployment of AI-powered audio summaries is not merely a company-specific innovation; it signals a pivotal moment in the evolution of digital commerce, marking the convergence of artificial intelligence, immersive audio, and platform economics. As Amazon lays the groundwork for a multimodal shopping future, the implications for the broader e-commerce industry, retail media networks, and AI infrastructure are both profound and far-reaching. This section examines how competitors are likely to respond, what the rollout suggests about the future of retail media monetization, and how AI convergence is reshaping commerce at a foundational level.

Setting a New Standard for Digital Shopping Experiences

Historically, user experience innovation in e-commerce has been incremental—improved UI layouts, faster page loads, mobile-optimized interfaces, and augmented reality integrations. With the launch of AI-generated audio summaries, Amazon elevates the shopping experience from visual optimization to sensory expansion, allowing consumers to interact with product content through auditory channels.

This shift changes expectations for what constitutes a “complete” product page. Audio summaries may soon be seen not as an optional enhancement, but as a mandatory accessibility layer. As consumers grow accustomed to passively receiving product information—particularly in hands-free contexts—competitors that do not offer equivalent functionality risk falling behind.

The implications extend beyond consumer electronics and household goods. Fashion retailers, automotive platforms, luxury brands, and even B2B marketplaces could adopt similar auditory capabilities to accommodate professionals who browse catalogs while commuting or multitasking. The end result is a redefined baseline in user engagement: from visual dependence to multimodal intelligence.

Competitive Pressure on Global and Niche Platforms

Amazon’s move has strategic ripple effects across the e-commerce landscape. Global players such as Walmart, Alibaba, Rakuten, and JD.com will be compelled to evaluate their own capabilities in AI voice synthesis, customer personalization, and content scalability.

- Walmart, which has already invested in generative AI-powered shopping search tools, may integrate audio summaries into its app or in-store digital kiosks.

- Alibaba, with its strong emphasis on smart commerce via Tmall Genie (its Alexa equivalent), could quickly replicate or even surpass the feature with Mandarin-first deployment.

- Shopify, which powers over a million independent sellers, may explore third-party integrations that allow merchants to generate and host their own audio summaries using open-source or SaaS tools.

Niche and vertical-specific marketplaces, such as Etsy or Houzz, may face a more complex challenge. Without Amazon's AI infrastructure or device ecosystem, they will need to either partner with speech AI vendors or use APIs from platforms like OpenAI, ElevenLabs, or Amazon Bedrock to implement comparable functionality.

In this environment, platform differentiation will increasingly be determined not by catalog size or price competitiveness alone, but by the richness and adaptability of the AI-powered content experience.

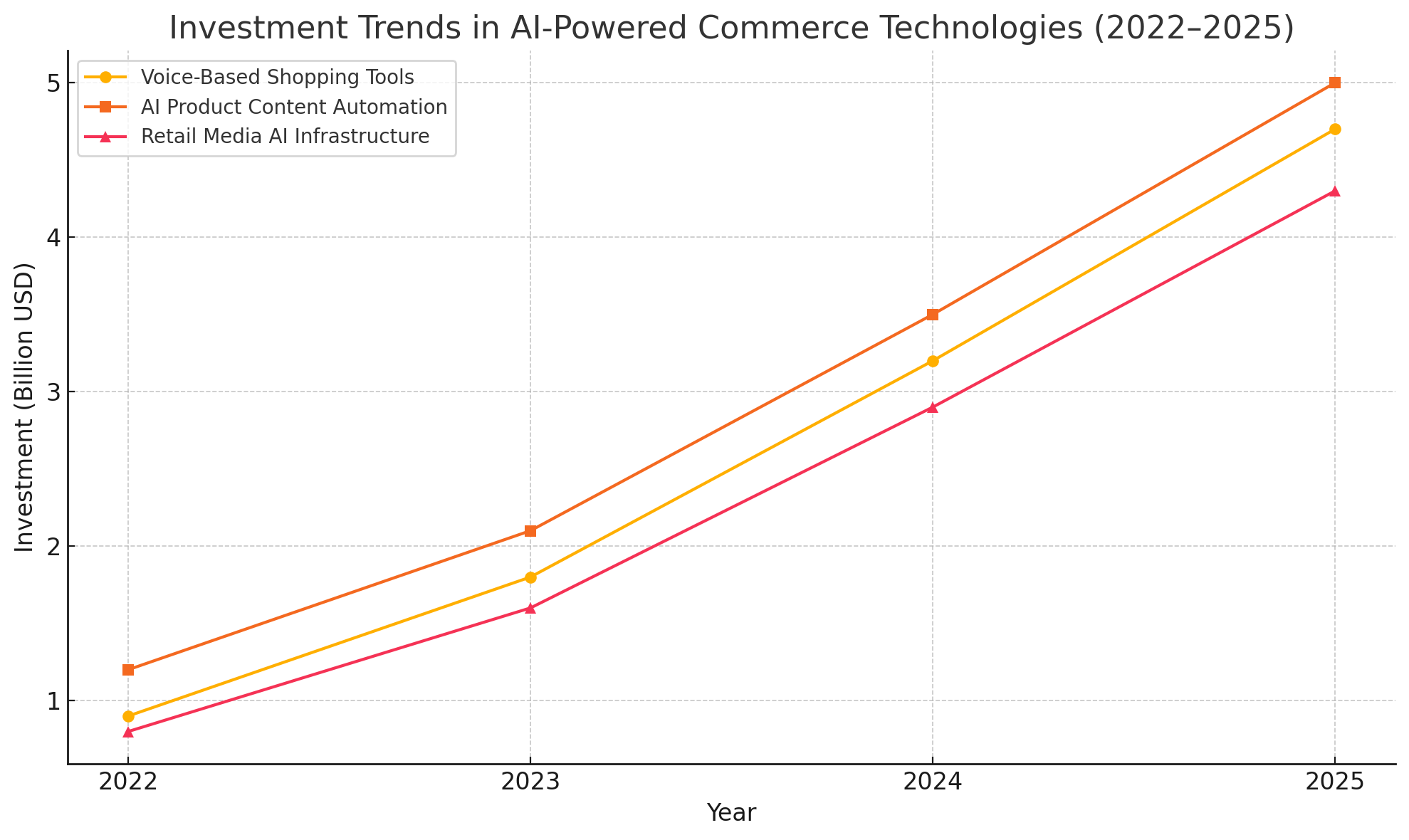

Retail Media Evolution: Toward Audio-Based Monetization

Perhaps one of the most transformative implications of Amazon’s audio innovation lies in the realm of retail media networks. Retail media—wherein brands pay for product placements, sponsored listings, and native ads within e-commerce ecosystems—has exploded in recent years, becoming a multi-billion-dollar industry. Amazon’s advertising division alone generated more than $40 billion in revenue in the past year.

Audio summaries introduce an entirely new surface for ad personalization and monetization. While Amazon has not yet commercialized the format, several opportunities are apparent:

- Sponsored audio intros: Brands could pay to have a brief tagline or call-to-action embedded into the beginning or end of product summaries.

- Dynamic cross-sell audio prompts: Summaries might conclude with an AI-generated suggestion to explore complementary or bundled items.

- Voice brand personas: Vendors could pay a premium to use branded voices or tones that align with their identity—luxury brands might choose a sophisticated narrator; family products could employ a warm, friendly tone.

These innovations could usher in a new era of audio-native advertising, complementing visual banners and product carousels with contextual, voice-optimized messaging that respects user attention spans and cognitive load.

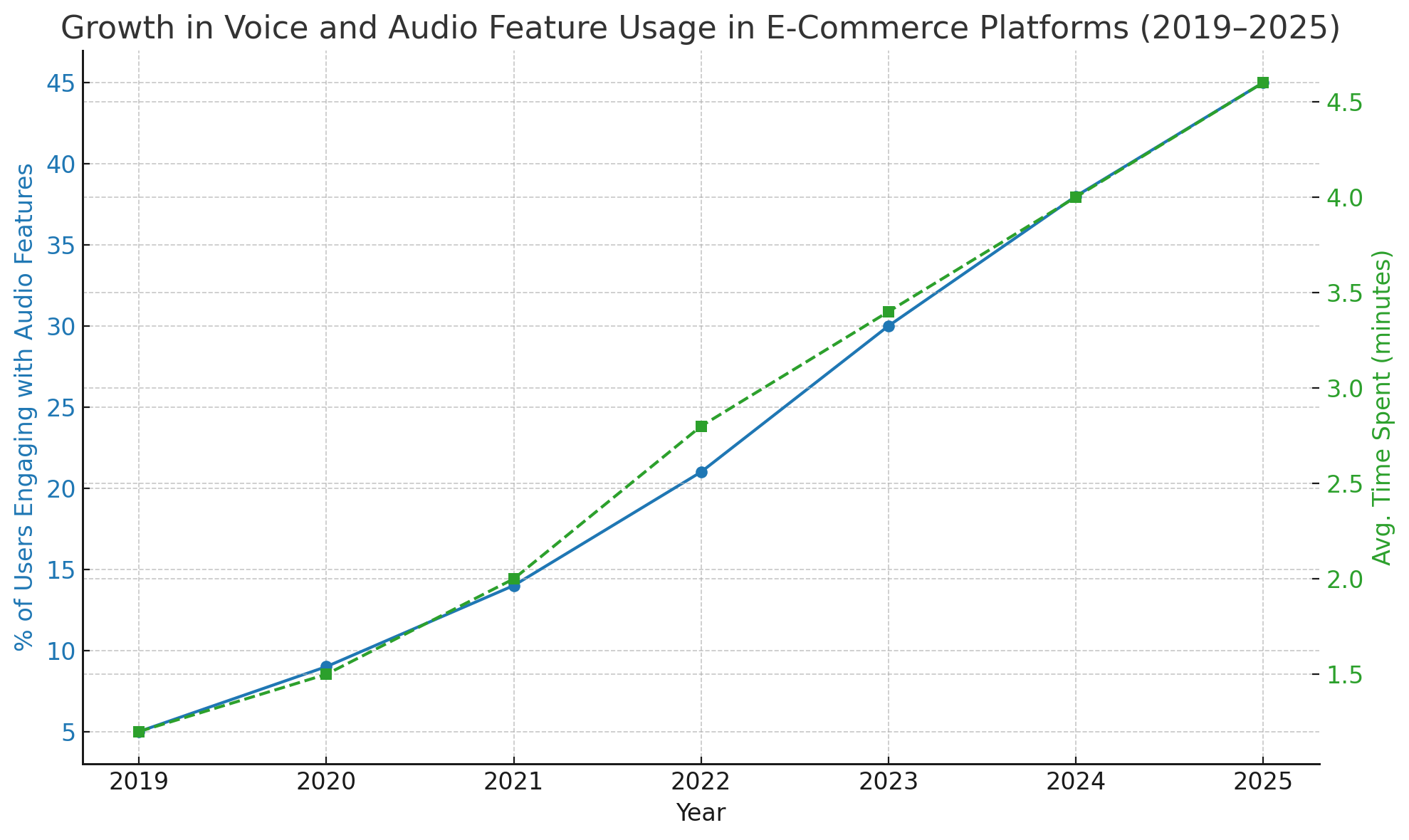

To illustrate the momentum behind this shift, we introduce a visual analysis of market activity.

AI Convergence: The Integration of Modalities and Platforms

What Amazon has pioneered is not merely an audio feature, but a microcosm of a broader industry shift—AI convergence. We are witnessing the integration of:

- NLP (for summarizing product data)

- Speech synthesis (for transforming text to voice)

- Behavioral analytics (for personalizing delivery)

- Voice recognition (for activating summaries through commands)

- Edge AI (for low-latency, on-device processing on mobile and IoT devices)

Together, these technologies form an intelligent interface layer that interprets user intent, adapts to context, and delivers tailored content in the user’s preferred modality. This convergence is likely to influence not only e-commerce but also industries such as:

- Healthcare: Audio summaries of prescriptions or treatment options

- Education: Voice-driven overviews of course content or learning modules

- Enterprise SaaS: Dashboard narrations for productivity or analytics tools

Amazon’s initiative thus serves as a blueprint for AI-infused UX design—an approach that other sectors may emulate to meet rising expectations for adaptive, human-like interfaces.

Regulatory and Ethical Implications for the Industry

As AI-generated voice content becomes ubiquitous, questions of transparency, consent, and accountability will become unavoidable. For instance:

- Should users be notified when a voice is synthetic versus human?

- Can summaries omit or de-emphasize negative reviews without ethical risk?

- How are biases in AI training data addressed when selecting which features to highlight?

Regulatory bodies in the U.S., Europe, and Asia are increasingly scrutinizing AI applications in consumer tech. Companies deploying similar features will need to ensure algorithmic transparency, bias mitigation, and user opt-out mechanisms to avoid reputational or legal fallout.

An Ecosystem-Wide Call to Adapt

Ultimately, Amazon’s audio summaries represent more than an innovation—they are a paradigm signal. As the line between human and machine communication continues to blur, digital commerce will increasingly be defined by how well platforms understand and respond to the sensory preferences of users. Audio is the next natural step, and those who fail to adapt risk not only stagnation but irrelevance.

The convergence of AI and audio is not optional—it is inevitable. Retailers, developers, brands, and regulatory institutions must work in tandem to ensure that the future of AI-powered commerce is not only intelligent but ethical, inclusive, and compelling.

Challenges, Ethical Concerns, and the Road Ahead

As Amazon pioneers the deployment of AI-powered audio summaries in e-commerce, the advancement brings not only technological promise but also a range of complex challenges. These include operational hurdles, ethical dilemmas, and concerns around transparency and control. While the feature marks a significant evolution in digital retail interfaces, the transition to audio-first commerce must be handled with careful consideration of the broader implications for consumers, sellers, developers, and regulators. This section examines the major challenges Amazon and the industry face, explores the ethical considerations that accompany such innovations, and offers a forward-looking assessment of how this technology might evolve.

The Risk of Misinformation and Oversimplification

One of the most immediate challenges in generating AI-based audio summaries is the potential for misinformation, whether through omission, misinterpretation, or biased representation. Summarization models, by design, distill information—removing certain details while emphasizing others. While this is efficient, it inherently carries the risk of oversimplifying complex product attributes or unintentionally omitting critical caveats.

For example, a product that has both strong and weak customer reviews might be summarized in a tone that suggests uniform positivity, potentially misleading consumers. Technical specifications could also be diluted or inaccurately rephrased, especially in categories like electronics or medical devices, where precision is paramount.

Mitigating these risks requires Amazon to invest in robust validation pipelines, multi-pass fact-checking models, and feedback-driven learning loops. However, as the system scales to millions of listings, maintaining accuracy and neutrality across diverse categories remains an ongoing and resource-intensive endeavor.
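A feedback-driven check for the "uniform positivity" problem described above could start as simply as the following sketch: if a meaningful share of ratings are one or two stars, the summary must acknowledge some drawback. The 15% threshold and the keyword cues are illustrative assumptions; a real system would more plausibly use a trained classifier than string matching.

```python
# Illustrative cue phrases; a production system would more likely use a classifier.
DRAWBACK_CUES = ("however", "some reviewers", "drawback", "complain", "downside")


def requires_caveat(star_ratings: list[int], negative_threshold: float = 0.15) -> bool:
    """True when the share of 1- and 2-star ratings reaches the (hypothetical) threshold."""
    if not star_ratings:
        return False
    negative_share = sum(1 for r in star_ratings if r <= 2) / len(star_ratings)
    return negative_share >= negative_threshold


def mentions_drawback(summary: str) -> bool:
    text = summary.lower()
    return any(cue in text for cue in DRAWBACK_CUES)


def passes_balance_check(summary: str, star_ratings: list[int]) -> bool:
    """Reject summaries that sound uniformly positive despite a meaningful share of critical reviews."""
    return mentions_drawback(summary) or not requires_caveat(star_ratings)
```

Summaries that fail this check could be routed back for regeneration or human review rather than published.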

Algorithmic Bias and Content Fairness

Like all AI systems, the summarization and voice synthesis tools used in Amazon’s ecosystem are trained on vast datasets that may reflect historical biases or commercial skew. If not properly managed, these biases can shape what information is included or excluded from audio summaries, thereby affecting consumer perception and purchasing behavior.

Concerns include:

- Favoritism toward products with higher engagement or premium seller status

- Exclusion of minority-owned brands or niche products that lack extensive metadata

- Tone bias, where certain products receive more enthusiastic narration than others due to training data imbalances

Ensuring fairness in AI-generated content is a significant ethical imperative. Amazon and other platforms must implement bias detection frameworks and offer disclosure protocols so users understand how summaries are generated and on what basis content is prioritized.
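A basic bias-detection audit could compare a narration metric, such as an enthusiasm score produced by some separate model, across seller segments and flag large gaps. The sketch below assumes hypothetical segment labels, scores scaled to [0, 1], and an arbitrary 0.1 tolerance; it only detects disparities and says nothing about their cause.

```python
from collections import defaultdict
from statistics import mean


def group_means(records: list[tuple[str, float]]) -> dict[str, float]:
    """Average a narration metric (e.g. an enthusiasm score) per seller segment."""
    by_group: dict[str, list[float]] = defaultdict(list)
    for group, value in records:
        by_group[group].append(value)
    return {group: mean(values) for group, values in by_group.items()}


def flag_disparity(means: dict[str, float], max_gap: float = 0.1) -> bool:
    """Flag when the most- and least-favoured segments differ by more than max_gap."""
    return max(means.values()) - min(means.values()) > max_gap
```

A flagged gap would be a prompt for investigation, since differences can also reflect genuine differences in product quality or review content.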

User Consent and Transparency

The deployment of AI-generated voice content introduces new expectations around transparency and informed consent. Consumers should be made aware when they are listening to machine-generated summaries rather than human-authored ones. Without such disclosures, users may conflate synthesized voices with expert opinions or verified guidance, potentially leading to misplaced trust.

This raises several questions:

- Should each summary begin with a disclaimer (e.g., “This is an AI-generated summary”)?

- Should users have the option to disable or opt out of the feature?

- How should platforms handle incorrect summaries that influence purchases?

Amazon will need to define best practices not only in user interface design but also in legal compliance, particularly in the EU, where the AI Act introduces transparency obligations for AI-generated content and the GDPR governs the personal data used for personalization.
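If a spoken disclosure were adopted, it could be added at the markup level. Amazon Polly accepts SSML when `TextType="ssml"` is passed to `synthesize_speech`, and supports the `<speak>` root and `<break>` tags used here; the disclosure wording, the 300 ms pause, and the `build_ssml` helper are assumptions for illustration.

```python
from xml.sax.saxutils import escape

# Hypothetical disclosure wording.
DISCLOSURE = "This is an AI-generated summary."


def build_ssml(summary_text: str, disclose: bool = True) -> str:
    """Wrap a summary in SSML, optionally prefixed with a spoken AI disclosure and a short pause."""
    body = escape(summary_text)  # escape &, <, > so listing text cannot break the markup
    if disclose:
        body = f'{escape(DISCLOSURE)}<break time="300ms"/>{body}'
    return f"<speak>{body}</speak>"
```

Escaping the product text matters because characters such as `&` in listing copy would otherwise produce invalid SSML and cause the synthesis request to fail.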

Impact on Seller Autonomy and Brand Messaging

Sellers on Amazon invest heavily in creating detailed product descriptions, often optimized for SEO and brand tone. The introduction of automatically generated audio summaries may dilute or even distort brand messaging, especially if the AI paraphrases key points or omits branded terminology.

This can lead to conflicts of interest between platform standardization and seller individuality. Key concerns include:

- Loss of control over how products are represented

- Inconsistent tone between branded copy and generic summaries

- Reduced differentiation, especially for artisanal or experience-based goods

To address this, Amazon may need to offer seller-side customization tools that allow brands to preview, adjust, or override auto-generated summaries. Such tools would help balance automation with authentic representation.
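A seller-side control could be as simple as a precedence rule. A seller-written override wins. Otherwise, the auto-generated text is shown in preview along with any required branded terms it dropped. The sketch below uses hypothetical names (`SummaryDraft`, `resolve_summary`, `protected_terms`) and a made-up brand. It illustrates the preview-and-override idea and does not describe an existing Amazon seller tool.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class SummaryDraft:
    auto_text: str                         # model-generated summary
    seller_override: Optional[str] = None  # full replacement written by the seller
    protected_terms: Tuple[str, ...] = ()  # branded terms the seller wants preserved


def resolve_summary(draft: SummaryDraft) -> Tuple[str, List[str]]:
    """Return the text to narrate and any protected terms it is missing."""
    if draft.seller_override and draft.seller_override.strip():
        # The seller's own wording takes precedence over the generated text.
        return draft.seller_override.strip(), []
    # Case-insensitive check so the preview can flag dropped brand terms.
    text = draft.auto_text.lower()
    missing = [t for t in draft.protected_terms if t.lower() not in text]
    return draft.auto_text, missing


# "BrewMaster Pro" is a hypothetical brand name used for illustration.
draft = SummaryDraft("A compact espresso maker with a milk frother.",
                     protected_terms=("BrewMaster Pro", "milk frother"))
print(resolve_summary(draft))
```

Here the preview would flag that "BrewMaster Pro" is missing, prompting the seller to adjust the summary or supply an override. Returning the missing terms, rather than silently rewriting the text, leaves the final wording under the seller's control.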

Regulatory and Compliance Considerations

The rise of AI-generated audio summaries also intersects with evolving global regulatory standards for synthetic content, consumer protection, and AI accountability. Authorities in the U.S., European Union, and Asia are increasingly scrutinizing how AI technologies are deployed in commercial environments, particularly where they affect financial transactions, advertising, and consumer rights.

Key legal areas Amazon must navigate include:

- Disclosure mandates for synthetic voice use in consumer transactions

- Liability structures for misrepresentative or misleading summaries

- Data usage limits in personalization models used to adjust summaries dynamically

Failing to comply with these frameworks could expose Amazon to litigation, fines, or reputational harm, particularly in jurisdictions where digital consumer protection laws are stringent.

Technological Scalability and Cost-Benefit Realization

While the promise of audio summaries is evident, Amazon must manage the technical and economic realities of scaling the feature across its vast product catalog. Even with a hybrid approach (pre-generating audio for popular SKUs and generating it on demand for the rest), the computational cost of AI summarization and neural voice synthesis is significant.

Challenges include:

- Latency management for real-time requests

- Storage requirements for audio cache libraries

- Device compatibility issues across web, mobile, and smart speakers

To achieve long-term ROI, Amazon will need to continuously optimize model efficiency, compress audio assets, and fine-tune its deployment logic based on real-world usage patterns.
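The hybrid approach and caching trade-offs described above can be sketched as a two-tier store. Audio for popular SKUs is generated ahead of time. Everything else is synthesized on first request and kept in a bounded cache. This is a minimal illustration under stated assumptions. `AudioSummaryStore` is hypothetical. The `synthesize` callable stands in for the full summarization and text-to-speech pipeline. Least-recently-used (LRU) eviction is one plausible policy, not a documented Amazon design.

```python
from collections import OrderedDict
from typing import Callable, Dict, Iterable


class AudioSummaryStore:
    """Hypothetical hybrid store: pre-generated tier plus an LRU cache for on-demand audio."""

    def __init__(self, synthesize: Callable[[str], bytes], capacity: int = 1000):
        self._synthesize = synthesize  # stand-in for summarization + TTS
        self._pregenerated: Dict[str, bytes] = {}
        self._cache: "OrderedDict[str, bytes]" = OrderedDict()
        self._capacity = capacity
        self.on_demand_calls = 0  # usage signal for tuning tiers and cache size

    def pregenerate(self, popular_skus: Iterable[str]) -> None:
        # Batch work done ahead of time, off the request path.
        for sku in popular_skus:
            self._pregenerated[sku] = self._synthesize(sku)

    def get(self, sku: str) -> bytes:
        if sku in self._pregenerated:
            return self._pregenerated[sku]
        if sku in self._cache:
            self._cache.move_to_end(sku)  # mark as most recently used
            return self._cache[sku]
        # Real-time path: the slowest and most expensive case.
        audio = self._synthesize(sku)
        self.on_demand_calls += 1
        self._cache[sku] = audio
        if len(self._cache) > self._capacity:
            self._cache.popitem(last=False)  # evict the least recently used entry
        return audio


fake_calls = []


def fake_synthesize(sku: str) -> bytes:
    # Placeholder for the real pipeline; records each invocation.
    fake_calls.append(sku)
    return f"audio:{sku}".encode()


store = AudioSummaryStore(fake_synthesize, capacity=2)
store.pregenerate(["A"])  # popular SKU, generated ahead of time
for sku in ["A", "B", "B", "C", "D", "B"]:
    store.get(sku)
print(store.on_demand_calls)
```

In this run, six requests trigger four on-demand syntheses, because `B` is evicted when `D` arrives and must be regenerated. Counts like this are the kind of real-world usage signal that could guide which SKUs move into the pre-generated tier and how large the cache should be.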

The Road Ahead: Toward Responsible and Adaptive AI Commerce

Despite the hurdles, the future of AI-powered audio summaries is rich with opportunity. As machine learning models become better at understanding context, tone, and intent, the quality of voice interfaces is likely to improve substantially. Several developments seem likely:

- Personalized audio summaries that reflect user profiles, shopping behavior, and regional language preferences

- Conversational shopping agents that allow follow-up queries and real-time voice comparisons

- Branded voice personas, where sellers can select from a range of tones or accents to match their identity

- Multi-lingual support to engage non-English-speaking users in global markets

In the broader context, Amazon’s initiative serves as a case study in how AI and audio can converge to create inclusive, efficient, and emotionally resonant commerce interfaces. It is not merely about adding a new content format—it is about reimagining how people interact with digital platforms in a world that increasingly values convenience, accessibility, and agency.

Conclusion

The integration of AI-powered audio summaries into Amazon’s platform represents a bold step toward a future of e-commerce that prioritizes accessibility, multimodal interaction, and AI-driven assistance. However, this innovation also raises challenges that must be navigated with ethical integrity, technological rigor, and regulatory awareness. For Amazon and the wider industry, success will depend not merely on technological execution, but on the ability to build trustworthy, inclusive, and human-centered AI experiences.

As voice becomes a central medium in digital transactions, the companies that succeed will be those that listen—both to their users and to the deeper implications of the systems they design.
