AI’s Hidden Environmental Costs: The Truth About Energy, Emissions, and Water Use

Artificial Intelligence (AI) is now woven into the fabric of daily life for billions of individuals and businesses worldwide. From conversational chatbots that handle customer service inquiries, to image and video generators used by content creators, to advanced language models that power productivity tools, AI technologies are no longer confined to research laboratories—they operate ubiquitously behind the scenes, often invisible but highly influential. The convenience, efficiency, and creative potential unlocked by AI have led to rapid adoption across industries, with companies racing to integrate these capabilities into their offerings.

However, this dramatic rise in AI usage also brings an under-examined consequence: a significant and often hidden environmental cost. Public discussions about AI typically focus on its potential to transform industries, drive economic growth, and solve complex societal challenges. Rarely do these conversations extend to the technology’s substantial resource requirements and environmental footprint. AI’s energy consumption is immense—not just in the development and training of models but also in their continuous operation. Moreover, the infrastructure supporting AI, such as hyperscale data centers filled with power-hungry graphics processing units (GPUs), has broad implications for energy grids, carbon emissions, and water resources.

This issue is particularly pressing at a time when the global community is striving to combat climate change and reduce greenhouse gas emissions. Published estimates suggest that information and communications technology (ICT) already accounts for roughly 2–4% of global greenhouse gas emissions, a share expected to rise as AI adoption accelerates. The environmental impact of AI is not limited to electricity usage alone; it also encompasses hidden elements like water consumption for cooling data centers and the rare materials needed to manufacture advanced semiconductor chips.

The carbon cost of training large AI models can be staggering. For example, OpenAI’s GPT-3 required an estimated 1.287 gigawatt-hours (GWh) of electricity to train, roughly the annual electricity use of 120 average U.S. homes. Newer and larger models, such as GPT-4 and Gemini 1.5 Pro, are widely believed to have even larger training footprints, although their developers have not disclosed energy figures. Furthermore, large AI models carry a substantial energy cost every time they are used, not just when they are trained. Each AI-powered search, translation, image generation, or voice query draws on significant computational resources running in distant data centers. As AI-powered tools become embedded in everything from smartphones to industrial automation systems, the cumulative energy draw could be immense.

The public’s awareness of these environmental issues is growing but remains fragmented. Many users do not realize that their casual AI queries—such as asking an AI assistant to draft an email or generate a social media caption—might trigger a sequence of high-intensity computing operations with a measurable carbon impact. Similarly, businesses adopting AI-powered services for customer engagement or content creation may overlook the corresponding rise in their organizational carbon footprint. The invisible nature of these emissions makes it difficult to assess and address the true environmental cost of AI.

It is also worth noting that the sustainability practices of AI companies vary widely. While some firms, like Google and Microsoft, have pledged to use carbon-free energy for their data centers, others rely heavily on conventional fossil-fuel power. Furthermore, the geographic location of data centers plays a major role in determining their environmental footprint. In regions with carbon-intensive electricity grids, the carbon impact of AI operations can be several times higher than in regions where renewable energy dominates.

As AI use scales up, the tension between technological innovation and environmental responsibility becomes more pronounced. Policymakers, regulators, and environmental advocates are beginning to scrutinize the sector more closely. For instance, the European Union’s Digital Decade framework includes provisions for data center sustainability, and several U.S. states are considering new disclosure requirements for tech companies. Investors and consumers are also starting to demand more transparency about the environmental impacts of digital services, including AI.

In this blog post, we will take an in-depth look at the hidden environmental costs of AI. We will explore the carbon footprint associated with training and using large models, the energy and water demands of AI-supporting data centers, and the emerging challenges around sustainable AI deployment. We will also examine mitigation strategies being pursued by the industry, such as more efficient model architectures, renewable-powered infrastructure, and improved transparency practices.

Ultimately, the goal is to foster a more informed conversation around AI’s environmental impacts. As we embrace the benefits of AI, it is crucial to ensure that progress does not come at the expense of our planet. By understanding the true costs—and the opportunities to reduce them—we can strive toward an AI future that aligns with global sustainability goals.

The Growing Carbon Footprint of AI Models

Artificial Intelligence (AI) models, particularly the large-scale systems underpinning today’s most advanced applications, have made remarkable leaps in capability. However, with this progress comes an environmental burden that is both substantial and growing. The training and deployment of large AI models require immense computational power—power that translates directly into energy consumption and carbon emissions.

Training Large AI Models: A Carbon-Intensive Process

At the heart of today’s AI revolution are large language models (LLMs), vision models, and multimodal systems that depend on billions or even trillions of parameters. These parameters are learned from vast datasets drawn from the internet and other digital sources. Training such models involves running high-performance hardware, typically GPUs or specialized AI chips like TPUs, for weeks or months at a time. The energy consumed during this phase is considerable.

Estimates suggest that training OpenAI’s GPT-3 model required approximately 1.287 gigawatt-hours (GWh) of electricity. This is equivalent to the annual electricity usage of roughly 120 average U.S. households. The carbon emissions associated with this energy use depend on the energy mix of the data centers involved. In regions where coal or natural gas dominate the grid, emissions per kilowatt-hour (kWh) are much higher than in areas served predominantly by renewables.
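Turning training energy into emissions is a single multiplication: energy consumed times the carbon intensity of the grid that supplied it. The sketch below applies this to the 1.287 GWh GPT-3 estimate. The grid intensities and the roughly 10,700 kWh annual household figure are illustrative assumptions, not measured values for any specific data center.

```python
# Sketch: operational CO2e from a fixed training-energy budget.
# 1.287 GWh is the GPT-3 estimate quoted in the text; the household figure
# and grid intensities below are hypothetical round numbers.

TRAINING_ENERGY_KWH = 1_287_000      # 1.287 GWh expressed in kWh
AVG_US_HOME_KWH_PER_YEAR = 10_700    # assumption: approximate annual household use

# Hypothetical grid carbon intensities, in kg CO2e per kWh
GRID_INTENSITY = {
    "hydro-dominated grid": 0.03,
    "mixed grid": 0.40,
    "coal-heavy grid": 0.80,
}


def training_emissions_tonnes(energy_kwh: float, kg_per_kwh: float) -> float:
    """Emissions in metric tons CO2e = energy (kWh) x intensity (kg/kWh) / 1000."""
    return energy_kwh * kg_per_kwh / 1000


def home_years(energy_kwh: float, home_kwh: float = AVG_US_HOME_KWH_PER_YEAR) -> float:
    """Number of households whose annual electricity use equals `energy_kwh`."""
    return energy_kwh / home_kwh


if __name__ == "__main__":
    print(f"Household-years: {home_years(TRAINING_ENERGY_KWH):.0f}")
    for grid, intensity in GRID_INTENSITY.items():
        tonnes = training_emissions_tonnes(TRAINING_ENERGY_KWH, intensity)
        print(f"{grid:>22}: {tonnes:,.0f} t CO2e")
```

With these assumed inputs, the same training run ranges from under 40 to over 1,000 metric tons of CO₂e depending on the grid. Siting decisions can therefore matter as much as model size.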

As model sizes and training budgets grow, so too does the environmental footprint. GPT-4, Gemini 1.5 Pro, Meta’s LLaMA 3, and Anthropic’s Claude 3 are all understood to have required substantially more training compute than earlier models, although most developers do not disclose exact parameter counts or energy use. Published estimates suggest that training the largest models can emit hundreds of metric tons of CO₂; one widely cited analysis by Google and UC Berkeley researchers put GPT-3’s training emissions at roughly 552 metric tons of CO₂-equivalent. For context, this is comparable to the lifetime emissions of several passenger vehicles.

Inference: The Ongoing Energy Draw

While training is a one-time event per model version, inference (the act of running the model to respond to queries) is a continuous process that consumes energy every time the model is used. For widely used AI services, the cumulative energy draw from inference can quickly exceed the one-time training footprint.
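A back-of-the-envelope way to see how inference can overtake training is to ask how many queries it takes for cumulative inference energy to equal the one-time training energy. The per-query energy (3 Wh) and the daily traffic below are hypothetical assumptions chosen only for illustration. Real values vary widely by model, hardware, and prompt length.

```python
# Sketch: when does cumulative inference energy match one-time training energy?
# WH_PER_QUERY and QUERIES_PER_DAY are hypothetical assumptions.

TRAINING_KWH = 1_287_000      # GPT-3 training estimate quoted in the text
WH_PER_QUERY = 3.0            # assumption: illustrative energy per AI query
QUERIES_PER_DAY = 10_000_000  # assumption: hypothetical daily traffic


def breakeven_queries(training_kwh: float, wh_per_query: float) -> float:
    """Number of queries whose combined energy equals the training energy."""
    return training_kwh * 1000 / wh_per_query  # convert kWh to Wh first


def days_to_breakeven(training_kwh: float, wh_per_query: float,
                      queries_per_day: float) -> float:
    """Days of traffic needed to reach the break-even query count."""
    return breakeven_queries(training_kwh, wh_per_query) / queries_per_day


if __name__ == "__main__":
    n = breakeven_queries(TRAINING_KWH, WH_PER_QUERY)
    d = days_to_breakeven(TRAINING_KWH, WH_PER_QUERY, QUERIES_PER_DAY)
    print(f"Break-even after {n:,.0f} queries (~{d:.0f} days)")
```

Under these assumptions, inference would match GPT-3's estimated training energy after about 429 million queries, or roughly six weeks of traffic. Every query after that point adds to a total that already exceeds the training cost.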

Every user interaction with an AI model—whether generating an image, composing text, translating a document, or answering a voice query—triggers a computation sequence across numerous GPUs. Even seemingly trivial uses, such as asking a chatbot for recipe ideas or creating AI-generated memes, contribute incrementally to the global AI energy bill.

Estimating the aggregate impact is difficult, but independent analyses suggest it could be large. Alex de Vries, writing in the journal Joule in 2023, estimated that if every Google search were handled by a large language model, the resulting electricity demand could approach that of a small country such as Ireland. Projections like this commonly assume that an AI-powered search query consumes roughly 10 times more energy than a traditional one. As more services integrate AI, including search engines, productivity tools, e-commerce platforms, and entertainment apps, the aggregate impact is likely to grow substantially.
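Total energy scales linearly with both per-query energy and query volume. A 10-fold increase per query therefore becomes a 10-fold increase in aggregate demand, as the sketch below shows. The 0.3 Wh baseline per conventional search and the volume of 9 billion searches per day are assumptions for illustration, not figures from this article.

```python
# Sketch: annual energy if a large search volume moved to AI-powered queries.
# BASELINE_WH and QUERIES_PER_DAY are assumptions; AI_MULTIPLIER is the
# "10 times more energy" ratio discussed in the text.

BASELINE_WH = 0.3        # assumption: energy of one conventional search
AI_MULTIPLIER = 10       # ratio of AI-powered to conventional query energy
QUERIES_PER_DAY = 9e9    # assumption: order of magnitude of global daily searches


def annual_twh(wh_per_query: float, queries_per_day: float) -> float:
    """Annual energy in TWh for a constant daily query volume."""
    wh_per_year = wh_per_query * queries_per_day * 365
    return wh_per_year / 1e12  # 1 TWh = 1e12 Wh


if __name__ == "__main__":
    base = annual_twh(BASELINE_WH, QUERIES_PER_DAY)
    ai = annual_twh(BASELINE_WH * AI_MULTIPLIER, QUERIES_PER_DAY)
    print(f"Conventional: {base:.2f} TWh/yr, AI-powered: {ai:.2f} TWh/yr")
```

With these inputs, conventional search comes to roughly 1 TWh per year and the AI-powered version to roughly 10 TWh. Treat the output as an order-of-magnitude illustration rather than a forecast.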

Corporate Carbon Emissions from AI

Leading technology companies are becoming increasingly transparent about the carbon emissions associated with their operations, including AI workloads. Microsoft’s 2024 Environmental Sustainability Report, for example, revealed that the company’s total emissions had risen by about 29% relative to its 2020 baseline. The report attributed this largely to the construction of new data centers and the hardware needed to support AI and cloud demand. Google’s 2024 Environmental Report showed a similar trend, with emissions up 48% compared with 2019, which the company attributed mainly to rising data center energy consumption and supply chain emissions.

Smaller companies and startups in the AI space often rely on cloud infrastructure provided by hyperscalers like AWS, Azure, and Google Cloud. While these platforms offer efficiencies of scale, they also contribute to the concentration of energy-intensive AI workloads in major data centers around the world.

The Carbon Cost of Popular AI Models

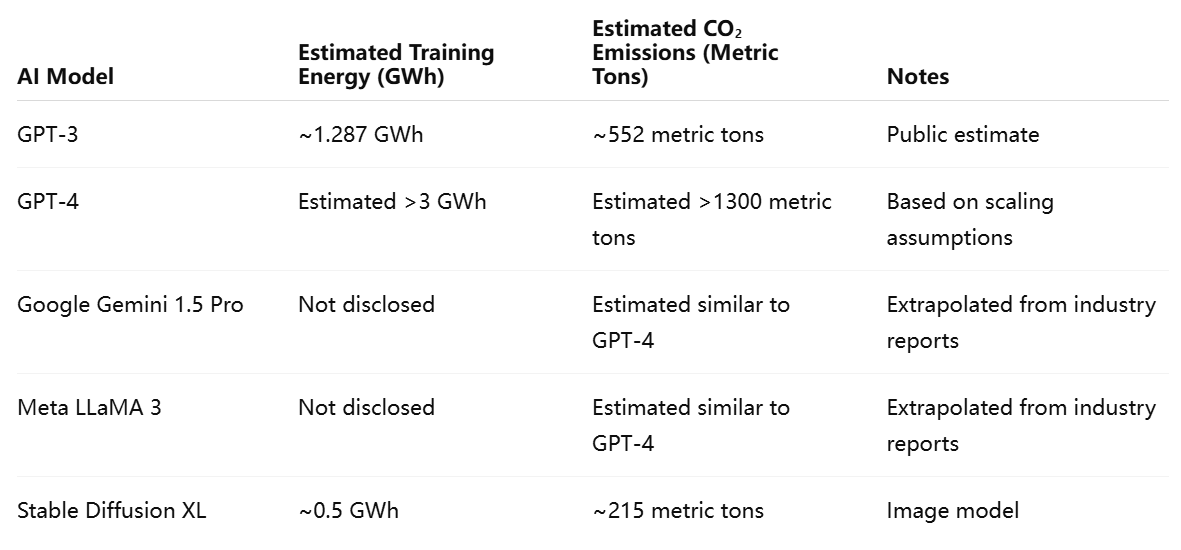

To illustrate the scale of this issue, consider the estimated carbon footprints of several well-known AI models:

These figures are illustrative but highlight that each major model comes with an environmental price tag. Furthermore, the cumulative impact of millions of users interacting with these models daily far exceeds the initial training cost.

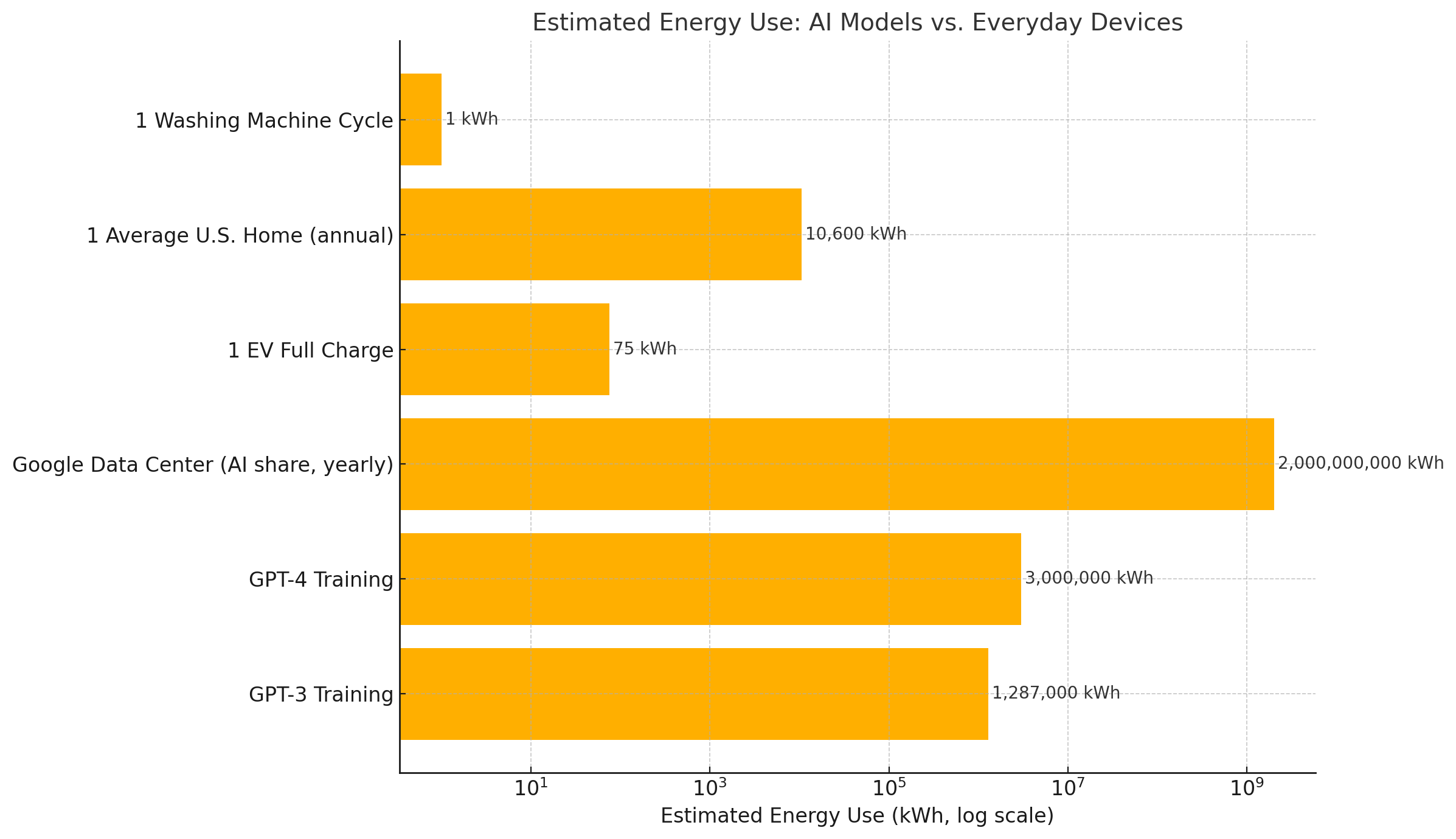

To help contextualize the energy demands of AI models, the following chart compares their estimated energy usage to more familiar everyday devices:

As seen in the comparison, the training of a single large AI model consumes energy equivalent to powering hundreds of homes for an entire year. This does not account for the ongoing inference energy use that adds up daily as users interact with these models.

Why This Matters for Sustainability

As governments and corporations commit to net-zero targets and more stringent climate goals, the escalating energy needs of AI technologies could pose a challenge. Without a coordinated effort to increase the energy efficiency of AI systems and accelerate the transition to renewable-powered data centers, AI’s carbon footprint may undermine broader sustainability objectives.

The sheer scale of projected AI adoption compounds the issue. IDC estimates that global spending on AI systems will surpass $500 billion annually by 2027, with AI-enabled services reaching into nearly every industry. If unchecked, the corresponding growth in energy use could drive up emissions even as other sectors decarbonize.

The bottom line is clear: AI is not an ethereal force operating in the cloud—it is an intensely physical technology with tangible environmental costs. To ensure that the benefits of AI do not come at the expense of planetary health, it is imperative that these costs be acknowledged, measured, and addressed.

Cloud Data Centers and AI Workloads — The Real Environmental Toll

The remarkable capabilities of today’s artificial intelligence (AI) models are inseparably linked to an unseen but critical infrastructure: hyperscale cloud data centers. These vast facilities, spread across the globe, serve as the physical foundation upon which AI services operate. Far from being intangible or “weightless,” AI workloads depend on an intricate network of energy-intensive servers, storage systems, and cooling technologies. As AI adoption accelerates, these data centers face mounting scrutiny for their environmental impacts—particularly their contributions to global energy consumption, carbon emissions, and water usage.

AI’s Dependence on Cloud Infrastructure

Contemporary AI systems are computationally demanding by design. Training a large model such as GPT-4 or Google Gemini requires massive clusters of advanced chips—typically NVIDIA A100 or H100 GPUs, or Google’s proprietary Tensor Processing Units (TPUs). These chips are deployed across hyperscale data centers operated by the largest cloud providers: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud.

Once a model is trained, it does not merely reside on a developer’s laptop or a corporate server. Instead, the model is deployed at scale in the cloud to serve millions or even billions of user requests. Each chatbot conversation, AI image generation, or voice query draws on the compute resources of these data centers, triggering an energy-intensive process with every interaction.

Because AI workloads require extremely high memory bandwidth, fast interconnects, and high availability, they tend to run on specialized GPU clusters. These clusters pack many high-wattage accelerators into each rack. As a result, an AI-focused rack can draw several times more power than a rack of standard cloud or traditional IT servers. This growing preference for AI-optimized data centers is reshaping the landscape of cloud computing and its associated environmental toll.

The Surge in Power-Hungry AI Chips

At the heart of AI data centers lies an increasing reliance on cutting-edge GPUs and accelerators. A single NVIDIA H100 GPU (SXM version), for example, can draw up to 700 watts under load. A server housing eight H100 GPUs therefore draws about 5.6 kilowatts for the GPUs alone, and NVIDIA rates its eight-GPU DGX H100 system at roughly 10.2 kilowatts maximum. Multiply this by thousands of servers across multiple availability zones, and the scale of power demand becomes apparent.
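Scaling from a single GPU to a facility is mostly multiplication. It also needs an allowance for cooling and power-delivery losses, captured by power usage effectiveness (PUE, total facility power divided by IT power). In the sketch below, only the 700 W per-GPU figure comes from the text. The server overhead factor, servers per rack, cluster size, PUE, and average utilization are hypothetical assumptions. The overhead factor is chosen so that one eight-GPU server lands near 10 kW.

```python
# Sketch: from per-GPU power to facility power and annual energy.
# Only GPU_WATTS comes from the text; every other constant is an assumption.

GPU_WATTS = 700                # H100 under load, per the text
GPUS_PER_SERVER = 8
SERVER_OVERHEAD_FACTOR = 1.8   # assumption: CPUs, memory, NICs, fans on top of GPUs
SERVERS_PER_RACK = 4           # assumption
RACKS = 1_000                  # assumption: hypothetical cluster size
PUE = 1.2                      # assumption: facility power / IT power
HOURS_PER_YEAR = 8_760


def server_kw() -> float:
    """IT power of one eight-GPU server, in kW."""
    return GPU_WATTS * GPUS_PER_SERVER * SERVER_OVERHEAD_FACTOR / 1000


def facility_mw(racks: int = RACKS) -> float:
    """Peak facility power in MW, including cooling and losses via PUE."""
    it_kw = server_kw() * SERVERS_PER_RACK * racks
    return it_kw * PUE / 1000


def annual_gwh(racks: int = RACKS, utilization: float = 0.8) -> float:
    """Annual energy in GWh; `utilization` is an assumed average fraction of peak draw."""
    return facility_mw(racks) * utilization * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    print(f"Per server: {server_kw():.2f} kW")
    print(f"Facility peak: {facility_mw():.1f} MW")
    print(f"Annual energy: {annual_gwh():.0f} GWh")
```

Under these assumptions, a 1,000-rack cluster draws close to 50 MW at peak. It would consume on the order of 340 GWh per year, more than 250 times the 1.287 GWh estimated for GPT-3's entire training run.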

Moreover, AI training often requires not just weeks of compute but also persistent storage and networking overheads. Keeping these clusters at peak performance means they often run close to maximum power draw for extended periods. During inference—when AI applications interact with end users—the need for low latency requires maintaining significant amounts of compute capacity in an always-on state.

The result is a substantial increase in both peak and baseline power consumption across data centers serving AI applications. According to a recent report by the Uptime Institute, AI workloads now account for up to 20% of total power use in certain hyperscale facilities—a figure projected to rise to 30–40% within the next two years.

Cooling Requirements and Water Usage

AI data centers face another environmental challenge: cooling. As GPUs and accelerators run hotter and are packed more densely, traditional air-based cooling is often insufficient. Operators are therefore turning to water-based approaches, such as chilled-water and evaporative cooling, and increasingly to direct liquid cooling. These methods remove heat more effectively than air, but evaporative and chilled-water systems in particular can consume large volumes of water.

Water usage in particular has emerged as a growing concern. Many hyperscale data centers consume vast amounts of water daily to dissipate heat from their servers. A study from the University of California, Riverside estimated that GPT-3 may indirectly consume roughly 500 milliliters of water for every 10 to 50 responses. This figure counts both the water used to cool servers and the water used to generate their electricity.

In drought-prone regions, this water demand can exacerbate stress on local resources. Data centers in Arizona, Oregon, and parts of Europe have drawn public criticism for their water consumption, prompting calls for greater transparency and accountability.

Global Energy Demand Trends

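The International Energy Agency (IEA), in its Electricity 2024 report, estimates that data centers, AI, and cryptocurrencies together consumed about 460 terawatt-hours (TWh) of electricity in 2022. It projects that this could rise to between 620 and 1,050 TWh by 2026, more than double in the high case. AI workloads are one of the main drivers of this increase.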
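A projection that roughly doubles over four years can also be restated as an annual growth rate. The sketch below uses a generic compound-annual-growth-rate (CAGR) formula. The constants are the IEA Electricity 2024 estimates for data centers, AI, and cryptocurrencies: a 2022 baseline and low and high cases for 2026.

```python
# Sketch: implied compound annual growth rate between two data points.

BASE_2022_TWH = 460.0                                      # IEA estimate for 2022
PROJECTED_2026_TWH = {"low case": 620.0, "high case": 1_050.0}  # IEA range for 2026


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


if __name__ == "__main__":
    for case, twh in PROJECTED_2026_TWH.items():
        rate = cagr(BASE_2022_TWH, twh, years=4)
        print(f"{case}: {twh / BASE_2022_TWH:.2f}x overall, {rate:.1%} per year")
```

The high case implies growth of roughly 23% per year, compared with under 8% per year in the low case.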

The energy impact varies by region, depending on data center locations and local electricity mixes:

| Region | AI-Related Data Center Energy Use (Estimated) | Energy Mix | Sustainability Notes |
|---|---|---|---|
| United States | High (~400 TWh/year by 2026) | ~60% fossil, ~40% nuclear + renewables | Major push toward solar + wind |
| European Union | Moderate (~150 TWh/year by 2026) | ~1/3 fossil, ~2/3 renewables + nuclear | Strict efficiency regulations emerging |
| Asia-Pacific | Very High (~450 TWh/year by 2026) | ~70% fossil, ~30% renewables | Rapid AI adoption, lagging decarbonization |

Regional Implications

Regional disparities in grid carbon intensity mean that the same AI workload can have dramatically different environmental impacts depending on where it is executed. In Norway, for instance, roughly 98% of electricity comes from renewable sources (mostly hydropower), so running an AI model there produces very low emissions. Running the same workload in parts of the U.S. Midwest or Southeast may result in significant carbon output.

Companies seeking to mitigate AI’s environmental cost often attempt to “shift” AI workloads toward greener data centers or match compute operations with renewable energy purchase agreements. However, demand for ultra-low latency in AI services sometimes constrains this flexibility—AI must often be served from data centers close to end users to ensure performance.
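The latency trade-off described above can be expressed as a constrained choice. Among the regions that meet a latency budget, pick the one with the lowest grid carbon intensity. The sketch below is a minimal illustration. The region names, carbon intensities, and latencies are hypothetical, and a production scheduler would also weigh capacity, cost, and data-residency rules.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Region:
    name: str                 # hypothetical region label
    carbon_g_per_kwh: float   # assumed grid carbon intensity (gCO2e/kWh)
    latency_ms: float         # assumed round-trip latency to the end user


def pick_region(regions: List[Region], max_latency_ms: float) -> Optional[Region]:
    """Return the lowest-carbon region within the latency budget, or None if none qualify."""
    eligible = [r for r in regions if r.latency_ms <= max_latency_ms]
    if not eligible:
        return None
    return min(eligible, key=lambda r: r.carbon_g_per_kwh)


REGIONS = [
    Region("nordic-hydro", carbon_g_per_kwh=30, latency_ms=120),
    Region("us-midwest", carbon_g_per_kwh=550, latency_ms=25),
    Region("us-west-solar", carbon_g_per_kwh=250, latency_ms=60),
]

if __name__ == "__main__":
    for budget in (50, 100, 200):
        choice = pick_region(REGIONS, budget)
        print(budget, choice.name if choice else "no region meets the budget")
```

Tightening the latency budget from 200 ms to 50 ms moves the choice from the hydro-powered region to the most carbon-intensive one. This is the tension between sustainability and low-latency serving described above.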

Growing Public and Regulatory Pressure

Awareness of AI’s data center impacts is growing among policymakers and environmental advocates. In Europe, the recast Energy Efficiency Directive requires larger data centers to report energy performance data, including water consumption, to an EU-wide database. In the U.S., states like Oregon and Washington are exploring new water-use reporting requirements for data centers.

Consumer expectations are also shifting. Increasingly, tech users want to know the sustainability practices behind their digital services. Companies unable to demonstrate environmental responsibility for AI services may face reputational risks.

The Broader Environmental Toll

Ultimately, the environmental cost of AI is not confined to model training but is sustained and amplified by the physical reality of the data centers supporting this technology. As AI permeates every aspect of digital life, from workplace productivity to online entertainment, the corresponding growth in energy and water consumption should not be ignored.

Unless mitigated, this trend could complicate global decarbonization efforts—even as AI is simultaneously deployed to solve climate-related problems. Striking the right balance between AI-driven innovation and ecological responsibility will require systemic changes at the infrastructure level, transparent reporting, and ongoing investment in sustainable computing practices.

The Water Footprint of AI — A Less Known Factor

When considering the environmental costs of artificial intelligence (AI), public discourse often focuses on carbon emissions and energy use. Yet another critical resource is increasingly strained by the rise of AI technologies: water. The water footprint of AI is an underappreciated but significant component of its overall environmental impact. As AI workloads surge globally, their associated water consumption is drawing mounting concern from environmental groups, regulators, and local communities—especially in regions already grappling with water scarcity.

Why AI Models Require Water

The connection between AI and water is indirect but profound. The massive computational demands of AI systems—whether during training or inference—generate substantial amounts of heat within data centers. To prevent overheating and ensure optimal performance, these facilities require extensive cooling infrastructure.

In many hyperscale data centers, water-based cooling methods remain the most efficient and cost-effective solution. Chilled-water systems, evaporative cooling towers, and hybrid air-water solutions are widely deployed to maintain safe operating temperatures for servers, storage units, and AI accelerator racks.

Thus, every time an AI model is trained or queried, virtually all of the electricity consumed by the servers is ultimately dissipated as heat. That heat must be removed by cooling systems that often consume water. The more computationally intensive the workload, the greater the cooling, and thus water, requirements.
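The relationship can be made concrete with an on-site/off-site decomposition often used in data center water studies. The sketch assumes that decomposition. On-site water is the water evaporated for cooling, measured by water usage effectiveness (WUE, liters per kWh of IT energy). Off-site water is the water consumed to generate the electricity, measured by an electricity water intensity factor (EWIF, liters per kWh generated) and applied to total facility energy (IT energy times PUE). All parameter values below are hypothetical.

```python
def water_liters(it_energy_kwh: float, wue_l_per_kwh: float,
                 pue: float, ewif_l_per_kwh: float) -> float:
    """Estimate total water consumption for a given amount of IT (server) energy.

    on-site:  IT energy x WUE, the cooling water evaporated at the data center
    off-site: IT energy x PUE x EWIF, the water consumed by power plants to
              generate all the electricity the facility draws
    """
    onsite = it_energy_kwh * wue_l_per_kwh
    offsite = it_energy_kwh * pue * ewif_l_per_kwh
    return onsite + offsite


if __name__ == "__main__":
    # Hypothetical inputs: 1,000 kWh of IT energy, WUE 0.5 L/kWh, PUE 1.2, EWIF 3.0 L/kWh
    total = water_liters(1_000, wue_l_per_kwh=0.5, pue=1.2, ewif_l_per_kwh=3.0)
    print(f"{total:,.0f} liters")
```

With these assumed values, off-site water from electricity generation (3,600 L) far outweighs on-site cooling water (500 L). A data center's water footprint can therefore depend on its power source as much as on its cooling design.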

Quantifying AI’s Water Usage

While precise figures vary by facility and region, recent academic studies and corporate disclosures shed light on the magnitude of AI’s water footprint:

- Researchers at the University of California, Riverside estimated that GPT-3 may consume roughly 500 milliliters of water for every 10 to 50 medium-length responses, depending on where and when the model is deployed.

- For comparison, this works out to about 10 to 50 milliliters per response, so generating 1,000 AI responses may indirectly consume roughly 10 to 50 liters of water.

- Microsoft, in its 2024 Environmental Sustainability Report, disclosed a sharp year-over-year rise in its global water consumption, an increase that coincided with its rapid expansion of AI-related infrastructure.

- Similar trends were reported by Google, which noted significant water demand growth at its data centers housing AI systems.

Importantly, inference workloads—not just training—account for an ongoing share of water consumption. As AI services like chatbots, content generators, and voice assistants become embedded in daily consumer and enterprise applications, the cumulative water use rises in tandem.

Regional Water Stress and AI Deployment

Not all data centers are created equal in terms of their water impact. The geographical location of a facility dramatically influences its sustainability profile.

In water-rich regions, such as the Pacific Northwest or parts of Scandinavia, the environmental cost of using water for data center cooling is relatively low. However, many high-growth AI hubs are situated in areas already facing water scarcity. These locations are often chosen for favorable tax regimes, access to renewable energy, or proximity to urban centers.

Examples of concern include:

- Arizona, USA: A major hub for AI-related cloud infrastructure operated by Google, Microsoft, and AWS. Arizona is also in the midst of a multi-year megadrought, with water tables falling and the Colorado River under severe stress.

- Oregon, USA: Home to several large hyperscale data centers. While parts of Oregon are water-abundant, others—especially in the arid interior—have experienced community pushback over corporate water use.

- Ireland: A fast-growing data center region for Europe, with local water utilities expressing concerns about the long-term sustainability of further expansion.

- Singapore: A high-density urban hub where much of the water supply is imported or costly to produce. The government paused approvals for new data centers between 2019 and 2022 to manage environmental strain.

Deploying AI workloads in water-stressed regions creates a tension between economic opportunity and environmental sustainability. Without adequate mitigation, the expansion of AI-powered services could intensify local water shortages and provoke public opposition.

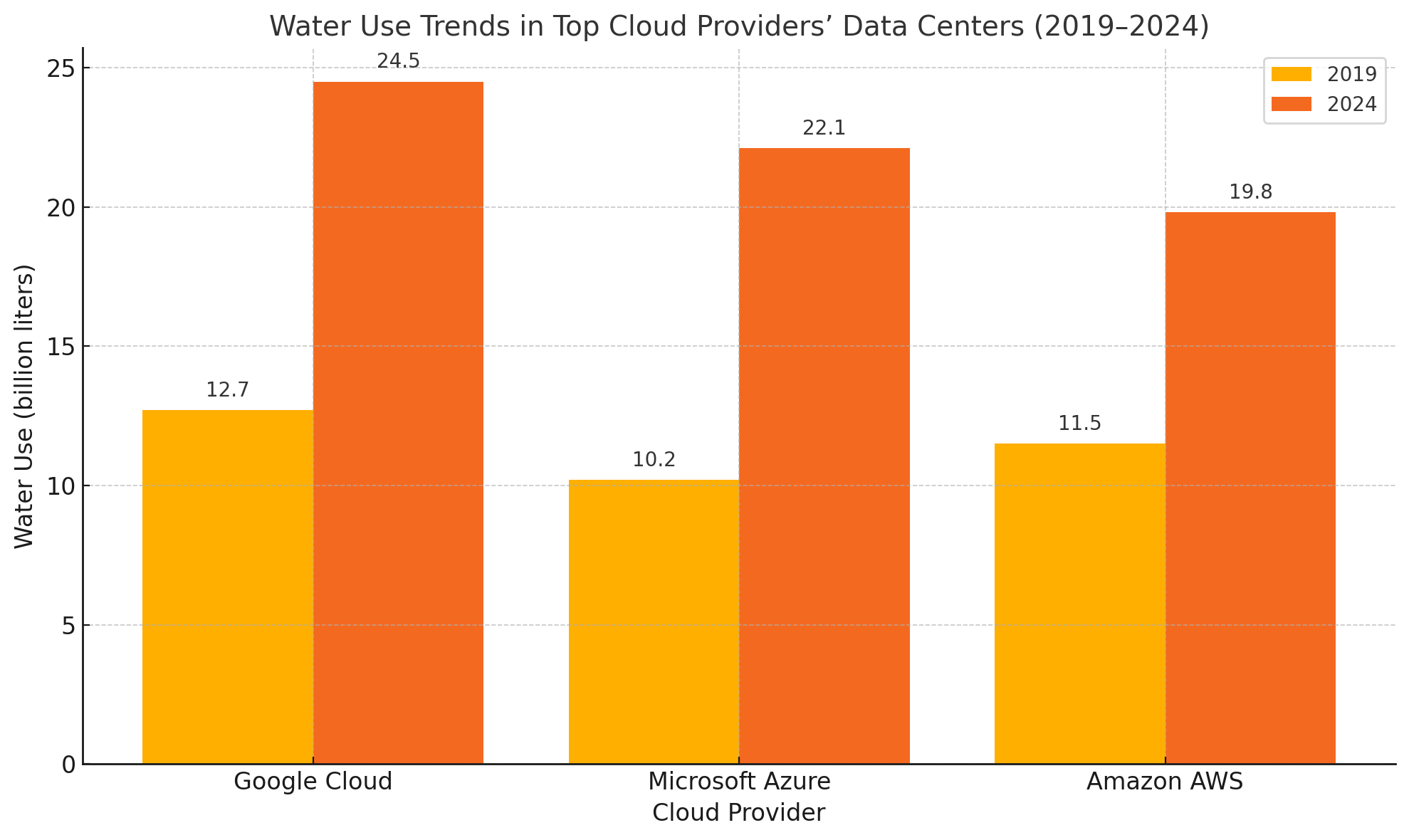

To visualize the growing water footprint of AI, the following chart tracks estimated water use trends for major cloud providers based on disclosed reports and independent analysis:

As illustrated, water consumption has nearly doubled for some providers in just five years—a trend closely correlated with the explosion of AI compute demand.

Public Backlash and Regulatory Responses

Public sentiment around data center water use is shifting. Environmental activists, local communities, and regulatory agencies are increasingly scrutinizing the water footprint of hyperscale cloud providers.

Recent developments include:

- Community protests: In parts of Oregon and Arizona, local residents have staged protests against the expansion of water-intensive data centers, calling for greater transparency and stricter environmental oversight.

- Legislative initiatives: U.S. states such as Oregon are advancing bills to require mandatory disclosure of water use by data centers and to limit water withdrawals during drought conditions.

- European Union regulations: The recast Energy Efficiency Directive, part of the EU's Green Deal agenda, already requires larger data center operators to report efficiency metrics, including water consumption. Tighter requirements may follow.

- Investor pressure: ESG-focused investors are pressing tech giants to publish more granular water footprint data and to adopt best practices for water stewardship.

Emerging Mitigation Strategies

Faced with growing scrutiny, cloud providers are investing in more water-efficient technologies:

- Liquid cooling innovations: Advanced liquid cooling techniques (including direct-to-chip cooling) can reduce total water usage by lessening reliance on large-scale evaporative systems.

- Closed-loop systems: Some data centers are adopting closed-loop cooling that minimizes water withdrawal by recirculating coolant.

- AI-powered optimization: Ironically, AI itself is being used to optimize data center cooling, allowing operators to reduce both energy and water consumption through intelligent system management.

- Geographic shifting: Certain AI workloads are being routed toward data centers in cooler climates or regions with abundant renewable energy and water resources, lowering overall environmental impact.

However, progress remains uneven, and industry-wide best practices are still evolving.

A Critical but Underexamined Issue

The water footprint of AI may be less visible than its carbon emissions, but it is no less important. In an era of growing water scarcity, the indirect consumption of billions of liters of water annually to power AI workloads deserves far greater public attention.

As AI becomes integral to modern life, the tech industry must confront its water impacts head-on—through greater transparency, technological innovation, and more sustainable siting decisions. Only by addressing the full environmental picture—including energy, emissions, and water—can the AI sector hope to align with global sustainability goals.

Can AI Go Green? Mitigation Strategies and Innovations

As artificial intelligence (AI) becomes embedded in nearly every sector of the global economy, the growing awareness of its environmental impact—spanning energy use, carbon emissions, and water consumption—raises an urgent question: Can AI technologies be made more sustainable? Encouragingly, multiple pathways are emerging to reduce AI’s environmental footprint, from more efficient model architectures to greener data center operations. Achieving a balance between AI-driven innovation and ecological responsibility is both a technological and ethical imperative for the coming decade.

Advances in Energy-Efficient AI Models

One of the most promising areas of progress lies within AI model design itself. Historically, achieving higher performance in AI has largely been a function of scaling—adding more parameters, more data, and more compute. This paradigm, while powerful, also exacerbates environmental costs. However, the industry is now pivoting toward smarter, not just bigger, models.

Key strategies include:

- Model compression: Techniques such as quantization, pruning, and weight sharing reduce the size and computational complexity of models without significantly sacrificing accuracy. By compressing large language models (LLMs) into smaller, faster variants, developers can substantially cut inference energy costs and memory requirements.

- Knowledge distillation: This process involves training smaller "student" models to replicate the behavior of larger "teacher" models. Distilled models can require substantially less compute during inference. DistilBERT, for example, is 40% smaller and 60% faster than the original BERT while retaining about 97% of its language-understanding performance.

- Sparsity and Mixture-of-Experts (MoE): Emerging architectures use only a fraction of the model’s parameters at runtime, activating specific “experts” depending on the input. Google’s Switch Transformer and Meta’s MoE models demonstrate that sparsity can dramatically cut inference energy while maintaining high performance.

- Hardware-software co-design: AI developers are increasingly tailoring model architectures to the strengths of modern accelerators, achieving greater efficiency per watt. OpenAI’s GPT-4, for example, was reportedly optimized to run more efficiently on NVIDIA’s latest GPU hardware.
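The sketch below illustrates model compression with symmetric per-tensor int8 quantization, one of the simplest post-training quantization schemes. Every weight is divided by a shared scale factor and rounded to an integer in [-127, 127]. This is a teaching sketch in plain Python, not how any particular framework implements quantization. Real energy savings depend on whether the hardware supports fast low-precision arithmetic.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: w ~= q * scale with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from int8 codes."""
    return [v * scale for v in q]


def weight_memory_gb(n_params: int, bits_per_param: int) -> float:
    """Memory needed just to store the weights, in gigabytes (1e9 bytes)."""
    return n_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    w = [0.5, -1.27, 0.0, 1.0]
    q, s = quantize_int8(w)
    print(q, dequantize(q, s))
    # A hypothetical 7-billion-parameter model at 16-bit vs 8-bit precision
    print(weight_memory_gb(7_000_000_000, 16), weight_memory_gb(7_000_000_000, 8))
```

Halving the bits per weight halves the memory needed to store the model and reduces data movement, which is often a significant share of inference energy. Accuracy loss still has to be checked on the actual task.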

These innovations suggest that the exponential growth in AI model size seen in recent years is unlikely to continue indefinitely. Instead, future progress may center on achieving more with less—a crucial shift for sustainability.

Transition to Renewable-Powered Data Centers

The carbon footprint of AI is heavily influenced by the energy mix powering the underlying data centers. Here, the major cloud providers are making significant strides toward decarbonization:

- Google: As of 2024, Google Cloud is matching 100% of its global electricity use with renewable energy purchases and aims for 24/7 carbon-free energy at all data centers by 2030.

- Microsoft: Microsoft is pursuing an ambitious company-wide goal of becoming carbon negative by 2030 and has committed to a 100% renewable energy supply for its data centers by 2025.

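- Amazon: Amazon set a goal of matching 100% of the electricity used across its operations, including AWS data centers, with renewable energy by 2025. The company reported reaching that goal in 2023, though regional disparities remain.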

While these commitments represent substantial progress, challenges persist. Not all regions have sufficient renewable capacity to meet surging AI demand, and grid constraints can force data centers to draw on fossil-heavy electricity at times of peak load or low renewable availability. Energy storage technologies and grid modernization will play a crucial role in closing this gap.

Geographic Optimization for Sustainability

Another emerging strategy involves siting AI workloads in locations with abundant renewable energy and low-carbon grids. By shifting AI training and inference to greener regions, cloud providers can reduce net emissions.

For example:

- Training large models in Nordic data centers—where hydropower and wind dominate—can dramatically lower their carbon cost.

- Running inference in regions with robust solar and battery infrastructure (such as parts of California and Australia) similarly reduces environmental impact.

- Hybrid cloud architectures allow workloads to be dynamically routed to more sustainable data centers based on real-time grid carbon intensity.

Geographic optimization is not without trade-offs—particularly for latency-sensitive applications—but it offers a pragmatic avenue to "green" a significant portion of AI activity.
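Routing by real-time grid carbon intensity can also work across time rather than space. A deferrable batch job, such as an overnight evaluation run, can be started in the window where the forecast intensity is lowest. The sketch below finds that window with a sliding-window sum. The hourly forecast values are hypothetical, and a real system would pull forecasts from a grid-data provider.

```python
from typing import List, Tuple


def best_start_hour(forecast_g_per_kwh: List[float], duration_h: int) -> Tuple[int, float]:
    """Return (start_hour, mean_intensity) for the lowest-carbon contiguous window."""
    n = len(forecast_g_per_kwh)
    if not 1 <= duration_h <= n:
        raise ValueError("duration_h must be between 1 and the forecast length")
    window = sum(forecast_g_per_kwh[:duration_h])  # window starting at hour 0
    best_sum, best_start = window, 0
    for start in range(1, n - duration_h + 1):
        # Slide the window one hour: add the new last hour, drop the old first hour
        window += forecast_g_per_kwh[start + duration_h - 1] - forecast_g_per_kwh[start - 1]
        if window < best_sum:
            best_sum, best_start = window, start
    return best_start, best_sum / duration_h


if __name__ == "__main__":
    # Hypothetical hourly forecast (gCO2e/kWh), e.g. midday solar lowering intensity
    forecast = [420, 400, 380, 300, 210, 180, 190, 260, 350, 410]
    start, avg = best_start_hour(forecast, duration_h=3)
    print(f"start at hour {start}, average {avg:.0f} gCO2e/kWh")
```

For this sample forecast, a three-hour job would start at hour 4, where the average intensity is about 193 gCO₂e/kWh. Starting immediately would average 400. Unlike spatial shifting, this approach does not affect user-facing latency, but it only applies to work that can wait.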

Efficiency Gains Through Shared Models and Open Source

The open-source AI movement also has a role to play in reducing environmental costs. By sharing pretrained models widely, the community can avoid duplicative training efforts across organizations. Instead of thousands of teams separately training similar models—each incurring significant compute and emissions—the ecosystem can converge on high-quality shared models optimized for reuse.

Initiatives such as Hugging Face’s Transformers library, Meta’s LLaMA series, and Mistral’s open models exemplify this collaborative approach. By leveraging well-trained, publicly available models, companies and developers can focus on fine-tuning and deploying AI without repeating the most resource-intensive stages of model development.

Moreover, open tools for measuring and reporting the energy use and emissions of model training, such as the ML CO2 Impact calculator and the CodeCarbon library, are emerging to help practitioners select greener model options.

What Consumers and Companies Can Do

While much of the responsibility for AI sustainability lies with cloud providers and AI developers, consumers and enterprises also have agency:

- Demand transparency: Users can encourage greater openness about the carbon and water footprints of AI services—prompting providers to report key metrics.

- Opt for efficiency: When multiple AI tools are available, favoring those with demonstrated efficiency (via open reporting or third-party assessments) helps drive market incentives for greener AI.

- Control usage: Enterprises should be mindful of unnecessary or excessive AI inference. For example, limiting automated content generation or batch processing to when it delivers real value reduces superfluous compute.

- Invest in sustainability partnerships: Organizations adopting AI at scale can engage with providers offering verified carbon-neutral or low-impact services.

As AI becomes further embedded in business processes and daily life, such demand-side actions can complement industry and regulatory efforts.

A Roadmap for Sustainable AI

In sum, a multipronged approach is emerging to align AI innovation with climate goals:

| Strategy | Key Impact |
|---|---|
| Model compression & distillation | Lower inference energy use |
| MoE & sparsity | Higher efficiency per compute cycle |
| Renewable-powered data centers | Reduced carbon emissions |
| Geographic workload optimization | Dynamic carbon footprint reduction |
| Open-source model sharing | Avoidance of duplicative training |
| Consumer demand for transparency | Market pressure for greener AI |

By pursuing these avenues concurrently, the AI industry can chart a path toward greater sustainability—though vigilance and continuous innovation will be required.

Innovation with Responsibility

AI’s environmental costs are real, but they are not immutable. Through targeted investments in technology, transparency, and operational best practices, it is possible to reconcile AI’s transformative potential with the planet’s ecological limits.

The future of AI will be shaped not only by raw performance, but by how responsibly that performance is delivered. As stewards of this technology, developers, companies, regulators, and users alike have a role in ensuring that AI’s progress also advances our shared sustainability goals.

Conclusion

The astonishing pace of AI advancement has transformed how businesses operate, how consumers interact with technology, and how society envisions the future of work, creativity, and problem-solving. Yet as we embrace these powerful tools, we must confront an equally powerful truth: AI technologies—particularly large models trained and deployed at scale—carry hidden environmental costs that demand closer scrutiny.

Throughout this article, we have examined the multifaceted environmental impacts of AI. The carbon footprint generated by model training and inference is substantial, with leading AI models requiring gigawatt-hours of electricity and contributing significant CO₂ emissions. The supporting cloud infrastructure—data centers packed with power-hungry GPUs—exerts a growing toll on global electricity grids and contributes to climate change. Furthermore, the water footprint of AI—largely invisible to the average user—is substantial, with billions of liters consumed annually to cool data centers worldwide.

These hidden costs underscore a central paradox: the same AI systems that promise to help humanity tackle climate challenges could, if poorly managed, exacerbate those very problems. The rise of AI must therefore be guided by a commitment to environmental responsibility.

The good news is that viable mitigation strategies exist—and are gaining momentum. Advances in model efficiency, such as compression, distillation, and sparse architectures, can dramatically lower the energy required for AI training and inference. Cloud providers are investing heavily in renewable energy, liquid cooling, and smarter workload distribution to reduce both carbon and water footprints. The open-source AI community, by encouraging model sharing and reuse, is helping to avoid redundant training efforts that would otherwise drive up emissions.

Still, challenges remain. The global demand for AI services is exploding, and without a systemic approach to sustainability, efficiency gains risk being outpaced by sheer growth. Moreover, geographic disparities in data center practices and regulatory frameworks mean that the environmental impact of AI remains highly uneven across regions.

For AI to truly align with global climate goals, coordinated action is needed across the entire value chain:

- Developers must prioritize efficiency in model architecture and training.

- Cloud providers must accelerate the transition to 24/7 renewable energy and improve transparency around water use.

- Regulators should establish clear guidelines and disclosure requirements to ensure accountability.

- Enterprises and consumers must demand sustainable AI offerings and use AI services mindfully, understanding that every query or generated image carries an ecological cost.

Perhaps most importantly, the conversation around AI’s hidden environmental impacts must become more visible and mainstream. Just as public awareness of “digital carbon footprints” has led to greater scrutiny of video streaming and cryptocurrency mining, a similar reckoning is needed for AI. Only by bringing these issues to light can we foster informed choices by businesses, policymakers, and individuals.

In the end, the path to sustainable AI is not about rejecting innovation, but about embracing it responsibly. The AI revolution should be a force not only for economic and societal progress, but also for environmental stewardship. With foresight, collaboration, and technological ingenuity, we can build an AI-powered future that is compatible with the planet’s ecological boundaries.

References

- International Energy Agency (IEA): Data Centre Electricity Consumption. https://www.iea.org/reports/data-centres-and-data-transmission-networks

- Microsoft Sustainability Report. https://www.microsoft.com/en-us/sustainability

- Google Cloud Carbon-Free Energy Progress. https://cloud.google.com/sustainability

- Hugging Face: Efficient AI and Carbon Impact. https://huggingface.co/blog/mlco2-impact

- University of California Riverside: Water Use of AI. https://ucrtoday.ucr.edu

- Uptime Institute: Global Data Center Trends. https://uptimeinstitute.com/resources/research-reports

- Amazon Web Services Sustainability. https://aws.amazon.com/executive-insights/cloud-sustainability

- EU Green Deal: Sustainable Digital Infrastructure. https://ec.europa.eu/info/strategy/priorities-2019-2024/european-green-deal

- MIT Technology Review: AI’s Energy Problem. https://www.technologyreview.com

- Carnegie Mellon & Hugging Face: Carbon Footprint of LLMs. https://huggingface.co/blog/large-language-model-carbon-impact