AI Resurrects Quake II in Your Browser: A Glimpse Into the Future of Neural Game Rendering

In April 2025, Microsoft stunned the gaming world by presenting a real-time AI-generated rendition of Quake II running in a web browser. This groundbreaking demo combines a beloved classic game with cutting-edge artificial intelligence (AI) and web technologies. The result is an interactive experience where “every frame is created on the fly by an AI world model, responding to player inputs in real-time”. In this post, we’ll explore how Quake II’s legacy set the stage for this innovation, explain the AI rendering techniques involved, delve into the technical adaptation for browsers, compare it with traditional versions of Quake II, and discuss the broader implications for the future of gaming.

Quake II: A Historical Milestone and Its Evolution

Quake II, released in 1997 by id Software, stands as a landmark in video game history. It was a technical marvel of its time, showcasing true 3D graphics and fast-paced action on the PC. Coming on the heels of the original Quake (1996), Quake II moved the first-person shooter genre forward with notable engine upgrades. Unlike its predecessor, Quake II’s engine introduced features like colored lighting and skyboxes for more immersive environments. These advanced graphics effects were cutting-edge in 1997 and set Quake II apart as a graphical powerhouse. The game’s sci-fi single-player campaign and addictive multiplayer modes earned it Game of the Year accolades (for “pure adrenaline-pumping…action”) and cemented its status as a classic.

Quake II’s influence extended well beyond its initial release. Id Software made a practice of releasing their game engines to the public a few years after launch, and Quake II was no exception – its full source code was open-sourced in late 2001. This move led to a flourishing of community-driven projects and ports. The Quake II engine became a popular licensed platform, forming the basis for numerous other games across genres. Titles like Heretic II, Soldier of Fortune, and Daikatana were built on modified Quake II engine technology. Even Valve’s Half-Life (1998) drew from Quake II’s code during early development, illustrating the engine’s versatility.

Another aspect of Quake II’s legacy is its longevity through fan mods and official updates. Thanks to the open-source engine, enthusiasts have continually updated Quake II with new features and graphical enhancements over the decades. There have been popular source ports (such as Yamagi Quake II and KMQuake II) adding modern conveniences and improved graphics, while preserving the core gameplay. In 2010, a team at Google even ported Quake II to HTML5, running the game in browsers as a demo of early web technology capabilities – a hint of what would later become possible with more powerful web standards. This early browser version (based on the Java port “Jake2”) demonstrated that Quake II could live on new platforms like the web, albeit without the advanced AI rendering of today.

Quake II’s appeal has proven enduring. Decades after release, it continues to captivate gamers – so much so that it’s been chosen as a testbed for modern graphics experiments. In 2019, NVIDIA picked Quake II for a dramatic demonstration of real-time ray tracing. They released Quake II RTX, a remastered version of the game that employed path tracing (a form of ray tracing) for lighting and reflections. This project showed that even a 1997 game could drive hardware upgrades in 2019: “if you wanted to check out the cutting edge in video game graphics, you needed…a game that was released in 1997, Quake II” to witness real-time path tracing on an RTX GPU. Quake II RTX not only added jaw-dropping visuals (physically correct reflections, shadows, and global illumination), it also relied on real-time denoising algorithms to clean up the noisy path-traced imagery. The result was a stunning marriage of old content with new tech. As one NVIDIA engineer noted, Quake II remains “one of the well-known classic games” with “a huge fan base”, which is why it keeps being revisited and enhanced even in recent years. In 2023, Nightdive Studios, working with id Software and MachineGames, released a Quake II remaster that added new content and made the game easily accessible on modern platforms – an effort warmly received by fans.

From 1997 to 2025, Quake II has evolved from a pioneering PC shooter to a platform for showcasing technological revolutions. Its journey spans the early days of 3D acceleration, the introduction of real-time ray tracing with RTX, and now the era of AI-driven rendering in browsers. This rich history makes Quake II the perfect candidate to illustrate how far gaming technology has come – and where it might go next – as we dive into AI rendering and the modern web.

AI-Rendering Technologies in Modern Gaming

In recent years, artificial intelligence has increasingly intertwined with graphics rendering, giving rise to the field of neural rendering. At its core, neural rendering means using AI techniques (often deep neural networks) to generate or enhance images in real time. Major GPU makers and game developers are leveraging these techniques to push visual fidelity and performance beyond what traditional methods can achieve.

One of the most prominent examples is AI-based upscaling. NVIDIA’s Deep Learning Super Sampling (DLSS) is a prime illustration: “DLSS is a revolutionary suite of neural rendering technologies that uses AI to boost FPS, reduce latency, and improve image quality.” In practice, DLSS allows a game to be rendered at a lower base resolution (which greatly increases frame rate) and then uses a trained neural network to upscale each frame to the display’s full resolution, generating details and clarity comparable to a native high-res render. Early versions of DLSS (circa 2018–2019) showed the potential of this approach, and the latest DLSS 4 goes even further with “multi-frame generation” and other AI-driven techniques. The success of DLSS has spurred similar features: AMD’s FSR 3 and Intel’s XeSS also use intelligent algorithms (with XeSS even leveraging neural networks when possible) to improve performance via upscaling. These technologies blur the line between traditional rendering and AI, as the AI effectively co-renders the frame, filling in missing detail that the GPU didn’t explicitly draw.
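
To make the saving concrete, here is a minimal arithmetic sketch of how much shading work is avoided when a frame is rendered at a lower internal resolution and then upscaled. The two resolutions are illustrative assumptions, not figures from any specific DLSS mode, and the function name is hypothetical.

```python
def shaded_fraction(internal, output):
    """Fraction of the output pixels that the GPU renders directly.

    The remaining pixels are reconstructed by the upscaler.
    """
    internal_pixels = internal[0] * internal[1]
    output_pixels = output[0] * output[1]
    return internal_pixels / output_pixels


# Assumed example: render internally at 1080p, present at 4K.
internal_res = (1920, 1080)
output_res = (3840, 2160)

fraction = shaded_fraction(internal_res, output_res)
print(f"Directly rendered share of output pixels: {fraction:.0%}")
```

Under these assumed resolutions the renderer produces only a quarter of the final pixels, which is where most of the frame-rate gain comes from; the quality of the result then depends on how well the upscaler fills in the rest.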

AI has also been applied to real-time denoising and lighting. In ray-traced graphics, where computing every ray of light is expensive, neural networks are used to quickly denoise partially rendered images. NVIDIA’s research into ray tracing includes AI denoisers that take a noisy, grainy render and output a clean image, dramatically reducing the number of rays (and time) needed for a good result. Quake II RTX depends on the same general idea: a real-time denoiser reconstructs clean reflections and refractions from a sparse, noisy path-traced image. Beyond that, AI-driven “ray reconstruction” is emerging – letting neural networks predict or refine the result of complex lighting calculations, which could one day replace some brute-force computations entirely.

Another exciting domain is AI-generated content in games. This moves closer to what we see with the Quake II browser demo – not just enhancing pixels that a traditional engine produced, but generating entire game frames or assets via neural networks. A pioneering example came in 2020 with NVIDIA’s GameGAN project. GameGAN trained on 50,000 episodes of Pac-Man and learned to produce a fully functional version of the game without any underlying game engine, purely from visual observation. In other words, the AI learned the game’s rules and visuals to the point that it could mimic Pac-Man, outputting new frames in response to player inputs in real time. “This is the first research to emulate a game engine using GAN-based neural networks,” the researchers noted, highlighting that the AI could infer game logic just by watching gameplay. The Pac-Man GameGAN demo was fairly basic graphically, but it proved that neural nets can learn an entire game world and reproduce it.

This concept of an AI “world model” is exactly what Microsoft expanded upon for Quake II. If an AI can replicate a simple 2D game, could a more advanced model handle a 3D first-person shooter? Modern machine learning advances – from generative adversarial networks (GANs) to newer transformer-based generative models – suggest it’s possible. Indeed, Microsoft’s research group developed a family of game world models called Muse, and specifically a model variant named WHAMM (World and Human Action MaskGIT Model) for Quake II. The idea is to train a neural network on a large amount of gameplay video and state data so that the network essentially learns how the game looks and reacts. Then, rather than using the original game’s rendering engine, the trained model itself generates each new frame on the fly as the player moves and acts.

AI-rendering technologies also include techniques like neural style transfer for textures, AI-assisted art creation, and procedural content generation. For instance, AI upscaling has been used by modders to remaster old game textures: they feed low-res textures into a neural network (like ESRGAN) trained on imagery, producing high-resolution, detailed textures that can be plugged back into the game. Similarly, AI can generate realistic character faces, animations, or even entirely new level layouts after being trained on examples. These applications hint at a future where much of a game’s content might be AI-generated, guided by developers but with the heavy lifting done by neural networks.

In summary, AI-rendering in gaming ranges from incremental enhancements (like DLSS boosting performance while improving image quality) to radical new approaches (like AI models fully simulating a game world). The Quake II browser project sits at the cutting edge of this spectrum: it leverages an AI model to render a classic game in real-time, showcasing what neural networks can do when integrated into the gaming experience.

Bringing Quake II to the Browser with AI: Technical Breakdown

Creating a real-time AI-rendered Quake II that runs in a web browser is a formidable technical achievement. It involves merging web technologies for high-performance computing with advanced AI models trained on game data. Let’s break down how this was accomplished, piece by piece:

1. WebAssembly and WebGPU – Running High-Performance Code in the Browser: Traditional PC games are written in languages like C++ and run natively on the operating system. To get Quake II’s logic running in a browser, the team likely used WebAssembly (WASM). WebAssembly is a low-level bytecode that enables near-native performance in web apps by allowing compiled languages to run in the browser sandbox. Projects to port Quake II to WebAssembly have existed (e.g., the Q2Wasm experiments) and even back in 2010 the Jake2 Java port was compiled to JavaScript. Today’s WebAssembly is much faster and can utilize modern CPU features and threads, making it feasible to handle game logic and even some rendering tasks in the browser. WebGPU, a new web standard, provides JavaScript and WASM applications direct access to GPU hardware for high-performance graphics and compute. WebGPU enables running parallel computations on the GPU from a web page, similar to how native games use GPUs. Together, WebAssembly and WebGPU allow a browser to execute complex game code and AI computations efficiently. As Google’s developers note, “WebGPU gives web applications access to the client's GPU hardware to perform efficient, highly-parallel computation”, and it can run ML models “significantly faster than they could on the CPU”. In the Quake II demo, WebGPU likely accelerates the AI inference – i.e., running the neural network that generates frames – tapping into the user’s (or server’s) graphics card for compute power.

2. The AI “World Model” of Quake II (WHAMM): The heart of this project is the neural network that has learned to emulate Quake II’s visuals and basic physics. Microsoft’s researchers started with their Muse model architecture and tailored it to Quake II, calling the result WHAMM. WHAMM stands for World and Human Action MaskGIT Model, a name hinting at its technical underpinnings (MaskGIT is a type of transformer model for image generation). The training process involved capturing a large amount of gameplay from Quake II – essentially recording what the game looks like as various actions happen. According to Microsoft, a “concerted effort” went into planning data collection: deciding which game (Quake II), how testers should play (to expose the model to various scenarios), and what behaviors to cover. They ended up training WHAMM on “just over a week of data” of Quake II gameplay. (This is impressively small: an earlier iteration of their model required seven years of gameplay footage. The reduction came from more efficient data collection and model improvements.)

How does WHAMM generate frames? Essentially, it works like this: every frame of the game (at a low resolution of 640×360) is converted into a set of tokens using a visual tokenizer (like a VQGAN). The model is a transformer that looks at the past sequence of frames (and the player’s recent actions, like pressing movement or shoot keys) and then predicts the next frame’s tokens. Because MaskGIT-style decoding predicts many tokens in parallel instead of one token at a time, WHAMM can output a full frame relatively quickly – achieving over 10 frames per second, versus 1 fps for older approaches. This still isn’t as fast or high-res as traditional engines, but it’s enough to give a real-time interactive feel. The predicted tokens are then decoded back into an image that the player sees, depicting what the AI thinks the game looks like after their latest action.
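
The parallel decoding idea can be sketched in a few lines. This is a toy illustration of MaskGIT-style iterative decoding, not Microsoft’s implementation: the predictor is a random stand-in for the transformer, and the token count, vocabulary size, step count, and cosine schedule are all assumptions chosen for readability.

```python
import math
import random

MASK = -1  # placeholder id for a token that has not been decided yet


def toy_predictor(tokens, rng, vocab_size):
    """Hypothetical stand-in for the transformer.

    Returns one (token_id, confidence) guess per position. A real model
    would condition on past frames and player actions.
    """
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def maskgit_decode(num_tokens, steps, vocab_size=1024, seed=0):
    """Fill a fully masked frame over a small, fixed number of passes."""
    rng = random.Random(seed)
    tokens = [MASK] * num_tokens
    for step in range(1, steps + 1):
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        if not masked:
            break
        predictions = toy_predictor(tokens, rng, vocab_size)
        # Cosine schedule: how many tokens may stay masked after this pass.
        keep_masked = math.floor(num_tokens * math.cos(math.pi / 2 * step / steps))
        num_to_fill = max(1, len(masked) - keep_masked)
        # Commit the most confident guesses among the still-masked positions.
        masked.sort(key=lambda i: predictions[i][1], reverse=True)
        for i in masked[:num_to_fill]:
            tokens[i] = predictions[i][0]
    return tokens


frame_tokens = maskgit_decode(num_tokens=64, steps=8)
print(f"Decoded {len(frame_tokens)} tokens in 8 passes")
```

The point of the sketch is the cost model: a strictly sequential decoder would need one model pass per token (64 here), while this loop needs only as many passes as there are steps (8 here), which is what makes interactive frame rates plausible.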

Importantly, this AI isn’t plugged into Quake II’s engine – it’s running instead of the normal graphics engine. In Microsoft’s words, it lets you “play inside the model, as opposed to playing the game”. The original Quake II code might still handle some collision detection or input responses, but the rendering (and some of the physics of moving objects/enemies) is handed over to the neural network. This is why the experience, while recognizable as Quake II’s world, can diverge from the actual game’s behavior in quirky ways (more on that in the Challenges section). It’s basically an AI hallucination of Quake II that stays on track (most of the time) thanks to training on real game footage.

3. Integration and Real-Time Loop: To make this work in a browser, the developers set up a feedback loop between the user’s input, the game logic, and the AI model:

- The player presses forward, turns, shoots, etc. The browser captures these inputs (e.g., WASD keys or gamepad events).

- A lightweight JavaScript/WASM game logic might handle basic movement (so the player’s position in the map updates as normal) and send an action command to the AI model indicating what just happened (e.g., “player moved forward and fired gun”).

- The AI model (WHAMM) takes the previous few frames of the game (as seen by the model) and the new action, and generates the next frame. This frame is essentially an image buffer produced by the neural network. Microsoft hasn’t fully detailed whether inference runs locally via WebGPU or on a server-side GPU with the browser just displaying results; for the sake of this walkthrough, assume a powerful local or edge GPU.

- The browser then displays this frame to the player on the canvas. It might use WebGL/WebGPU texture rendering to draw it to the screen.

- Repeat ~10 times per second.
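
The steps above can be sketched as a simple loop. This is a conceptual outline only, written in Python rather than browser code: `StubWorldModel`, `Frame`, and `run_loop` are hypothetical names, the stub returns labeled placeholders instead of images, and the context length is an assumed parameter.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Frame:
    index: int   # position of the frame in the session
    action: str  # the player action that produced it


class StubWorldModel:
    """Hypothetical stand-in for the neural world model."""

    def predict_next(self, context, action):
        # A real model would generate pixels from the context frames and
        # the action; the stub only records what it was asked to produce.
        next_index = context[-1].index + 1 if context else 0
        return Frame(index=next_index, action=action)


def run_loop(actions, context_frames=9):
    """Feed each player action to the model and collect displayed frames."""
    model = StubWorldModel()
    context = deque(maxlen=context_frames)  # only recent frames are kept
    displayed = []
    for action in actions:                  # 1. capture input
        frame = model.predict_next(list(context), action)  # 2-3. generate
        context.append(frame)               # the new frame becomes context
        displayed.append(frame)             # 4. show it to the player
    return displayed


frames = run_loop(["forward", "fire", "turn_left"])
for f in frames:
    print(f.index, f.action)
```

Note the design consequence visible even in this stub: the model’s only knowledge of the world is the short window of frames it generated itself, so any error in one frame feeds into the next.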

Because performance is critical, optimizations were needed. The model outputs at 640×360 resolution, which is then likely upscaled to the browser window size. Using a relatively low resolution greatly cuts down the number of tokens/pixels the AI has to predict, making real-time generation easier. (It’s a trade-off: lower-res output is faster, but looks less sharp. The team roughly doubled each dimension, from an earlier 300×180 to 640×360, to improve quality.) Also, running on the GPU with half-precision arithmetic can speed up AI inference; notably, WebGPU recently added support for 16-bit floats, which can significantly speed up ML tasks in the browser. Such enhancements mean that what was previously impossible to do in a browser (running a large transformer model for every frame) is now on the borderline of feasible.
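
A quick back-of-the-envelope calculation shows why resolution and precision matter so much. The two resolutions come from the text above; the tokenizer patch sizes and the parameter count are illustrative assumptions, not published figures for this demo.

```python
def pixel_count(width, height):
    return width * height


def tokens_per_frame(width, height, patch):
    """Tokens needed if each patch x patch block of pixels becomes one token."""
    return (width // patch) * (height // patch)


def weight_megabytes(num_params, bytes_per_value):
    """Memory needed to hold the model weights, in decimal megabytes."""
    return num_params * bytes_per_value / 1e6


old_res, new_res = (300, 180), (640, 360)
pixel_ratio = pixel_count(*new_res) / pixel_count(*old_res)
print(f"Pixels per frame grew {pixel_ratio:.2f}x")

# Assumed patch sizes: a coarser patch keeps the token count manageable
# even though the image is much larger.
print("tokens at 300x180, patch 10:", tokens_per_frame(*old_res, patch=10))
print("tokens at 640x360, patch 20:", tokens_per_frame(*new_res, patch=20))

# Hypothetical 500M-parameter model: fp16 halves the weight footprint.
params = 500_000_000
print("fp32 MB:", weight_megabytes(params, 4))
print("fp16 MB:", weight_megabytes(params, 2))
```

Doubling each dimension more than quadruples the pixel count, so the number of tokens the transformer must predict per frame depends heavily on how aggressively the tokenizer compresses the image.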

4. Web Delivery and Platform Independence: One of the big advantages demonstrated here is that the user did not need to install a custom game engine. Everything is delivered through standard web APIs. The demo was hosted on Microsoft’s Copilot Labs site, meaning anyone could click a link and start “playing” AI Quake II. This suggests a cloud backend might have been involved (to ensure everyone gets the needed performance), but in principle it could also run on a capable local machine with a WebGPU-enabled browser. Either way, it shows how WebAssembly + WebGPU turn the browser into a potent gaming platform, capable of executing complex workloads like neural networks. “AI inference on the web is available today across a large section of devices, and AI processing can happen in the web page itself, leveraging the hardware on the user's device.” This statement from Chrome’s developers underlines why the approach matters: when inference runs locally, it uses the player’s own hardware via the browser rather than a dedicated cloud GPU for each player. Running AI in the browser in that way reduces server costs and latency, an important consideration for the future of interactive AI apps.

In summary, the technical breakthrough here is in combining an AI-trained game renderer (WHAMM) with web technologies (WASM/WebGPU) to deliver an interactive experience. Quake II provides the scaffold (the world, the rules, the look – all learned by the AI), and modern web APIs provide the execution environment. The outcome is a real-time neural simulation of a 3D game running in a browser, something that even a few years ago would have sounded like science fiction.

Performance, Visual Fidelity, and Responsiveness: AI Quake II vs. Traditional Quake II

One naturally asks: how does this AI-driven browser version of Quake II stack up against the original game or its modern remasters? There are several angles to consider – frame rates and performance, graphical fidelity, and input responsiveness. We’ll compare three variants: Original Quake II (1997), the Quake II RTX (path-traced 2019) edition, and the new AI-Rendered Quake II (2025) in the browser.

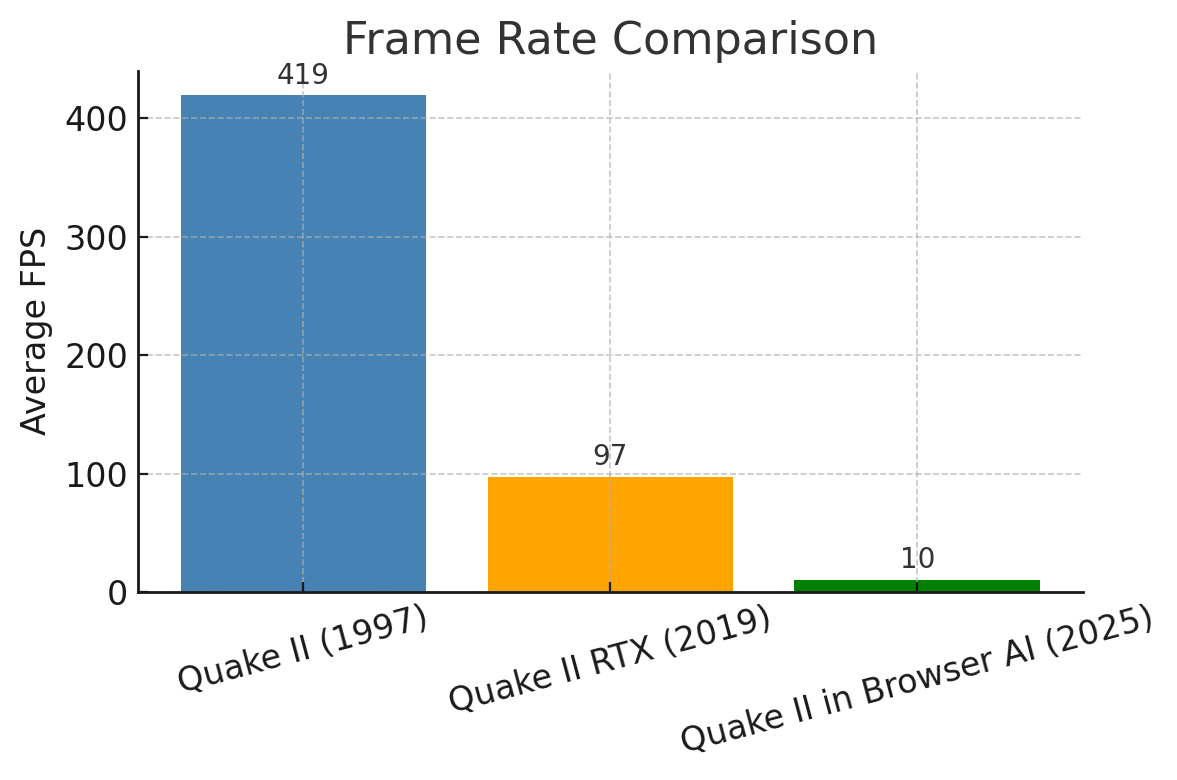

Performance (Frame Rate): Original Quake II was designed to run on late-90s hardware, and over the years it has come to run buttery smooth on modern machines. On contemporary PCs, the original game can easily push hundreds of frames per second. For example, when tested on a 2019 midrange GPU (GTX 1660 Ti), Quake II reached about 419 FPS at 1080p with modest settings. Its lightweight graphics are no challenge for today’s systems. Quake II RTX, however, is far more demanding – it uses intensive ray tracing for every frame. On an RTX 2080 Ti (a high-end GPU of 2019), Quake II RTX achieves roughly 97 FPS at 1080p with high quality settings. That is a massive drop from the original, but understandable given it’s doing millions of ray calculations per frame. And what about the AI-rendered Quake II in the browser? Currently, this is the most demanding of all, because the neural network generation is computationally heavy and not fully optimized like a game engine. The WHAMM model manages a bit above 10 FPS at 640×360 resolution. In the public demo, frame rates in the vicinity of 10 fps were reported – technically “playable” in the sense of a research demo, but far from the silky 60+ fps gamers expect. Chart 1 illustrates the stark differences in frame rate:

Chart 1: Average frame rates of Quake II versions on roughly comparable modern hardware. The original game (1997) runs extremely fast on today’s GPUs (hundreds of FPS at 1080p). Quake II RTX (2019 path-traced) is much heavier, in the double-digit FPS range on high-end GPUs. The AI-rendered Quake II (2025) is currently limited to ~10 FPS given the computational cost of the neural rendering.

These performance disparities underscore the trade-offs: the original Quake II engine is highly optimized for speed, Quake II RTX sacrifices speed for vastly improved lighting, and the AI version sacrifices speed (for now) to achieve a novel form of rendering. It’s worth noting that the AI model’s low FPS is partly a function of its experimental nature – with further optimization (or running on specialized hardware), it could improve. For instance, future AI accelerators or optimized model architectures might significantly raise the frame rate of such neural renderers.

Visual Fidelity and Graphics Comparison: Each version of Quake II offers a very different visual experience:

- Original Quake II (1997): The graphics are what we’d now consider retro – low-polygon models, low-resolution textures, no real-time lighting beyond basic lightmaps, and aliasing on edges. However, it has a clean, straightforward look. Objects and enemies are sharply defined (if blocky), and the aesthetic is consistent. In the original engine, lighting is precomputed (with some surface lighting effects) so there are no dynamic shadows or reflections. Still, for its time it was atmospheric, with colored lights casting mood across industrial sci-fi levels.

- Quake II RTX (2019): This version dramatically upgrades fidelity. With path tracing, every light casts realistic shadows, surfaces exhibit reflections and refractions (e.g., glass and water look strikingly real), and the whole scene achieves a kind of physical accuracy the original never had. It also shipped with updated, higher-resolution textures and materials. The result is Quake II as you’ve never seen it – almost like a modern game, though the underlying geometry is still the simple 1997-era level design. Quake II RTX delivers very crisp images. Edges are smooth (no jaggies, thanks to temporal anti-aliasing), and the lighting brings out details in the environment that were flat in the original. Many players felt it was like seeing Quake II with a new pair of glasses – the core scenes are the same, but the realism is vastly higher.

- AI-Rendered Quake II (2025, Browser): The visual fidelity here is both fascinating and a bit jarring. On one hand, it reproduces the look of the original Quake II’s design – the layouts, the enemy shapes, the general color schemes – quite faithfully, given no traditional renderer is used. To the human eye, the AI version immediately reads as Quake II’s world. However, the details are fuzzier. Because the model outputs at 360p resolution and then is likely upscaled, the image has a soft quality, like viewing the game through a smeared lens or an impressionist painting at times. Fine textures on walls or weapons might appear muddy or indistinct compared to the pixel-perfect original. Interestingly, some effects look approximated: for instance, the AI might not calculate lighting but it has “seen” illuminated scenes in training, so it tries to mimic lighting by brightening or coloring areas as it expects. The result is plausible but not physically consistent – a trained eye can notice lighting continuity errors or objects popping in/out oddly. Enemies and moving objects can appear a bit ghostly, with fuzzy outlines, especially when the model is unsure (the researchers noted that enemy characters “will appear fuzzy in the images” generated by the model). In essence, the AI version’s visuals are those of a dream – recognizable but with a haze. It doesn’t have the tack-sharp realism of RTX, nor the crisp simplicity of the original; it’s something in between, often described as “Quake II as painted by an AI”.

One notable advantage of the AI model is that it can incorporate novel effects in unconventional ways. For example, the researchers demonstrated that they could insert new images into the model’s context and the model would integrate them into the scene. That hints at possibilities like on-the-fly style changes or user-generated textures that persist as you play, which traditional engines would require modding to achieve. The AI doesn’t truly understand the geometry – it just generates what it thinks should be seen – so it can also create weird artifacts (like distorted enemy corpses or misaligned scenery) if it encounters a scenario outside its training data.

Input Latency and Responsiveness: Another critical aspect of any game is how responsive it feels to player input. The original Quake II, running at hundreds of FPS on a local machine, has minimal input latency. Every key press or mouse movement results in an immediate update on screen (often within a few milliseconds). This responsiveness was part of Quake’s identity, especially in multiplayer – it felt snappy and precise. Quake II RTX, running at a lower frame rate, inherently introduces a bit more input lag (if you’re getting ~60–100 FPS, the frame time is roughly 10–17 ms, versus ~2.5 ms at 400 FPS for the original). Still, 60–100 FPS is very smooth, and with NVIDIA’s Reflex technology (which aims to reduce render queue latency) available in some RTX games, the experience can be kept responsive. Most players would find Quake II RTX perfectly playable and responsive, just not quite as snappy as the original on ultra-fast hardware.

The AI-browser Quake II, at ~10 FPS, has a frame time of 100 ms or more, which is noticeably laggy. Players of the demo reported that movement felt sluggish and aiming was difficult due to the low frame rate. In addition, if the model was running on a server and streaming the frames to the browser, there’d be additional network latency. Even if running locally, the heavy computation per frame could introduce more latency than just the raw frame rate would indicate. In essence, the AI version currently feels more like controlling a video or a slow simulation – suitable for a tech preview, but not for competitive play or fast reactions. Responsiveness can also be inconsistent; for example, if the model takes longer on one frame than the next, the frame pacing can stutter. That said, as a proof of concept, it’s acceptable – you can walk around, shoot and see things happen, just with a noticeable delay. Future optimizations could improve this (e.g., a smaller model might trade some visual fidelity for faster response).
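
The frame-time figures quoted in this section follow directly from the frame rates. The short sketch below does the conversion for the three frame rates cited earlier (about 419, 97, and 10 FPS); the function name is just a label for this example.

```python
def frame_time_ms(fps):
    """Time budget for a single frame, in milliseconds."""
    return 1000.0 / fps


versions = [
    ("Original Quake II", 419),
    ("Quake II RTX", 97),
    ("AI-rendered Quake II", 10),
]

for name, fps in versions:
    print(f"{name}: {fps} FPS -> {frame_time_ms(fps):.1f} ms per frame")
```

Frame time is only a lower bound on input latency: input sampling, display scan-out, and any network hop add to it, so the AI version’s 100 ms per frame is the best case rather than the typical one.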

Another responsiveness aspect is how the game world responds, which in this case is tied to the AI’s “memory”. One issue noted was that the AI model can “forget” about objects if they leave the camera view for too long (over 0.9 seconds). For instance, an enemy that you saw and then turned away from might disappear when you look back, because the model didn’t retain that state. From a gameplay perspective, that’s a huge difference – the world isn’t persistent unless observed (a very quantum problem!). This means the AI Quake II doesn’t respond consistently to some actions (for example, leaving a room and returning may reset it unpredictably). Such quirks make the AI version less responsive in terms of world interactions, even if input-to-display is on time. Essentially, you’re battling not just Strogg enemies, but also the memory and imagination of the AI.
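
The forgetting behavior is what you would expect from a fixed-length context window. The sketch below models that window as a bounded queue; the 0.9-second figure and the ~10 FPS rate come from the text, while the string labels are placeholders standing in for real frames.

```python
from collections import deque

CONTEXT_SECONDS = 0.9  # how long the model "remembers", per the text
FPS = 10               # approximate generation rate, per the text

context_len = round(CONTEXT_SECONDS * FPS)  # number of frames in memory
context = deque(maxlen=context_len)

# The player sees an enemy, then turns away and looks at a corridor.
context.append("enemy visible")
for _ in range(context_len - 1):
    context.append("empty corridor")
remembered_before = "enemy visible" in context  # window is exactly full

# One more frame without the enemy pushes the sighting out of the window.
context.append("empty corridor")
remembered_after = "enemy visible" in context

print(f"context length: {context_len} frames")
print(f"enemy still in context just before the limit: {remembered_before}")
print(f"enemy still in context after the limit: {remembered_after}")
```

Once the last frame showing the enemy is evicted, nothing in the model’s input says the enemy ever existed, so whatever it draws when the player looks back is a fresh guess.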

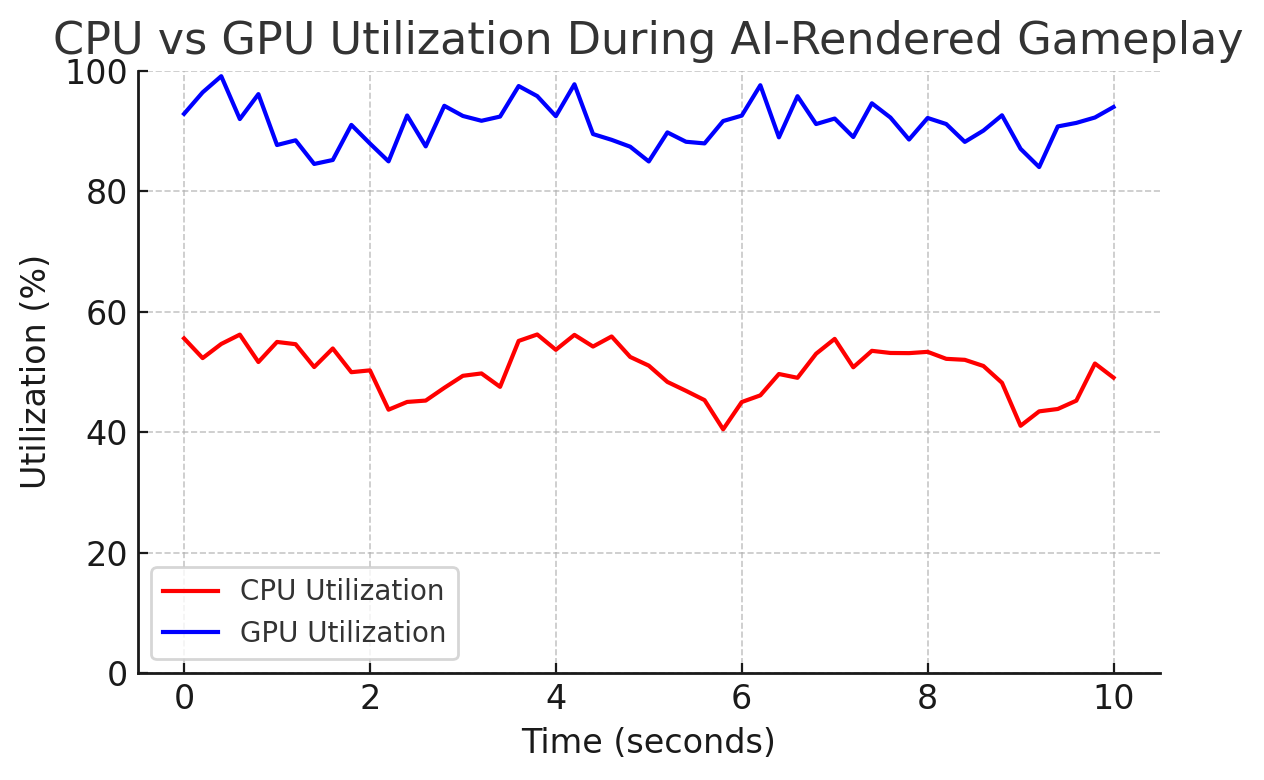

System Utilization – CPU vs GPU: One more comparison point is how these versions tax the system differently. The original Quake II engine was more CPU-bound back in the day (GPUs were very simple then). Today, running original Quake II barely scratches either the CPU or GPU – any modern system is overkill, so utilization is low (the game can run at hundreds of FPS uncapped, but usually you cap it to the monitor’s refresh rate). Quake II RTX shifts almost all the load to the GPU. The CPU just feeds the GPU, while the GPU’s compute cores and RT cores do the heavy path-tracing math. You’d see the GPU at high utilization (90–100%) and the CPU moderately used when playing RTX. The AI-rendered Quake II, especially if running locally in a browser, is also highly GPU-bound but in a different way. The GPU is crunching matrix multiplications for the neural net, rather than drawing triangles. The CPU may be involved in running the WASM game loop and some model scheduling, but the bulk of the work – the neural network inference – ideally runs on the GPU (via WebGPU compute shaders); running the model on the CPU would be far too slow to hit real-time. So we can expect the browser to push the GPU hard (similar to the RTX version, roughly 80–95% usage), while the CPU might stay moderate (roughly 30–60%). Chart 2 illustrates a hypothetical utilization profile during AI Quake II gameplay, showing the GPU consistently doing most of the work:

Chart 2: CPU vs GPU utilization over time while running the AI-based Quake II in the browser (simulated). The GPU (blue line) stays very busy (80–95% utilization) handling the neural network computations each frame. The CPU (red line) usage is lower and relatively steady (30–60%), since the game logic and coordination tasks are lighter. In a traditional game, GPU usage would also be high for graphics, but the nature of the work here is different (tensor ops vs. triangle rasterization).

This utilization pattern has implications. It suggests that future optimizations could involve more efficient GPU use (to raise frame rate), or even using specialized AI hardware (TPUs, NPUs) if browsers gain access to them. It also shows that the browser is effectively turning your GPU into a neural rendering device in real time, which is a novel workload compared to standard games.

In conclusion for this section, the AI-rendered Quake II is currently more of a tech demonstration than a replacement for the original game. It can’t match the frame rate or crispness of even the 1997 engine, let alone the polished RTX remaster. However, what it does demonstrate is entirely new: it recreates a game through learned intelligence rather than explicit programming. The visuals and performance will undoubtedly improve in the future, but even as-is, the fact that it works at all – that you can play Quake II by essentially watching an AI “imagine” Quake II – is a landmark achievement.

Impact on Browser Gaming, AI in Games, and the Future of Cloud/Edge Gaming

The successful melding of Quake II, AI rendering, and browser technology has broad implications for the gaming and tech industry. It signals shifts in how we might access games, how AI can be used in interactive media, and how computing resources are utilized for entertainment. Let’s explore the key impacts and future possibilities:

1. Browser Gaming Reimagined: Browser gaming has historically been associated with relatively simple games (think Flash games of the 2000s or lightweight HTML5 games in the 2010s). The Quake II AI demo, along with advances like WebGPU, challenges this notion. It shows that browser gaming can deliver advanced, graphically rich experiences without any plug-ins or downloads. In this case, it’s not just that the game is graphically intensive – it’s also computationally intensive due to the AI. Running it via a browser demonstrates the maturation of web standards: the browser is now a true platform for high-end applications, not just casual games. For gamers, this hints at a future where you could play a AAA-quality game by clicking a link, with no installation required, and still leverage your PC’s full power (or a cloud PC’s power). We already see early steps: technologies like WebAssembly enable engines like Unity and Unreal to deploy to the web, and with WebGPU, even sophisticated 3D games could run with near-native performance. The Quake II demo adds AI into the mix, suggesting that even AI-heavy tasks could eventually be done on the client side in web apps. This could reduce the need for cloud streaming of games (as Xbox Cloud Gaming does today, and as Stadia did before it shut down) because if you have capable local hardware, the browser can use it directly. It also means easier access to classic games – imagine a library of retro games that run via AI models on any device with a browser, no original hardware or emulators needed.

2. AI in Games – New Roles Beyond Graphics: We often think of AI in games in terms of NPC behavior or procedural content. This demo emphasizes AI’s role in graphics and game preservation. Microsoft’s gaming CEO Phil Spencer commented on the potential here: “You could imagine a world where from gameplay data and video a model could learn old games and make them portable to any platform… these models and their ability to learn completely how a game plays… opens up a ton of opportunity.” This suggests a future where instead of porting a game’s code to each new platform, you might train an AI on the game once and then simply run the AI on any platform. For old games where source code is lost or hardware is obscure, an AI model could act as an emulator of the game’s behavior. This is a paradigm shift in game preservation and backwards compatibility. Rather than rewriting or remastering an old title, a sufficiently trained AI could regenerate it on demand. There’s a long way to go to reach that point universally (current models still need a lot of data and only capture a slice of a game’s full complexity), but the concept is now proven for at least a contained scenario. The broader implication is that AI might eventually learn not just how to render games, but how to handle their logic, physics, and rules – essentially absorbing the game engine itself, as GameGAN did for Pac-Man. We’re witnessing early steps of that with Quake II’s world model.

Furthermore, AI rendering can enable dynamic scaling of graphics. In cloud gaming, one challenge is adapting to different network conditions; an AI that can fill in frames or enhance lower-quality video could make cloud streams more resilient. Alternatively, an edge server might run a heavy game simulation and an AI on the client reconstructs the frames, reducing bandwidth. The concept of splitting workload between cloud and client using AI is an intriguing direction. It could also personalize graphics: perhaps an AI could restyle a game on the fly (turn Quake II’s gritty industrial look into a cartoony style in real-time, for instance), giving users more control over aesthetics.

3. The Future of Cloud and Edge Gaming: Cloud gaming (services like GeForce NOW, PlayStation Now, etc.) traditionally involves running games on a server and streaming video to the player. This requires massive server GPU resources and fast networks, and it effectively treats the client device as a dumb terminal. The Quake II AI model hints at an alternate approach: send AI models or updates to the client and have the client do some of the heavy lifting. For example, a cloud service could stream a low-res, basic version of a game or just certain key frames, and a client-side AI could upscale or even animate additional frames (interpolating with AI, similar to how DLSS 3 generates intermediate frames with AI). This hybrid could reduce bandwidth needs – rather than 60 full frames per second, maybe send 20 frames and let AI synthesize the rest.

Even more revolutionary, if an AI model can encapsulate a game, you might download the AI model from the cloud, then run the game locally via the model. This is sort of what happened with Quake II: the model was presumably downloaded in the web app and then ran in the browser with local compute. It’s like downloading a game, but the “game” is an AI brain that already learned the game. For cloud providers, distributing AI models might be cheaper than running thousands of servers to stream games. However, this comes with its own challenges (model size could be large, not to mention IP issues of effectively giving out a model that behaves like the full game).

Edge computing – where servers near the user handle tasks – could also benefit. If a user’s device is too weak, an edge server could run the AI model and stream the output (similar to cloud gaming but potentially less raw rendering since the model might be more efficient in terms of data). The key point is, AI provides new partitioning options for game execution between client and server.

4. Democratization and Modding: By running in a browser and using an AI approach, projects like this lower barriers for people to experiment. Modders could potentially train their own models on custom game data (imagine an AI model that learned not just Quake II, but Quake II with a total conversion mod – it could produce a new hybrid experience). Also, open-source communities might create lighter-weight versions of these models that run on average hardware, further spreading the capability. If Microsoft expands this Copilot Labs experiment, we could see a gallery of AI-generated mini-games from various classic titles, accessible to anyone on the web. In fact, reports say Microsoft is already training Muse on more games than just Quake II (they earlier did one on the game Bleeding Edge), and “it’s likely we’ll see more short interactive AI game experiences in Copilot Labs soon.” This could spark a new genre of browser entertainment: not quite full games, but AI-driven game experiences that are instantly shareable.

5. Inspiration for New Game Development: Game developers might take cues from this demo in designing future games. Could we see a game that intentionally uses an AI renderer instead of a traditional one? Perhaps an indie horror game where the uncertainty and surrealness of an AI renderer adds to the atmosphere – the world might literally change as you look around, an unsettling effect. Or consider a simulation game where the NPCs’ visuals are AI-generated based on their “memories” or perspectives. These are out-of-the-box ideas, but the Quake II project shows it’s technically possible. At the very least, mainstream developers are likely to adopt more AI upscaling, AI texture generation, and AI physics prediction in their pipelines, because gamers have now seen that these things work. The success of DLSS and similar tech indicates gamers are on board with AI as long as it makes things look better or run faster; the Quake II demo further suggests gamers are fascinated by AI even replacing parts of the engine.

6. AI as a Creative Partner and Game Content Generator: Beyond rendering frames, the principles behind this demo tie into using AI to generate game content on the fly. If an AI can learn to render Quake II’s level 1, theoretically an AI could be used to generate new levels in Quake’s style by having it imagine new layouts and scenes (one of GameGAN’s findings was that it “can even generate game layouts it’s never seen before” if trained on multiple levels). This borders on procedural generation, but powered by learning from existing designs. In the long run, AI could assist designers by generating draft environments, textures, or even code (as GitHub Copilot does for programming). The Quake II project itself is named under Copilot Labs, implying it’s an experiment in how AI can assist or replace some content creation.
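The bandwidth arithmetic behind the hybrid streaming idea in item 3 (send 20 frames per second and let a client-side model synthesize the rest of a 60 fps stream) can be made concrete with a small sketch. Everything here is illustrative: the `StreamPlan` class, its field names, and the 100 kbit per-frame figure are hypothetical, and the calculation assumes every streamed frame costs the same number of bits, which real video codecs with inter-frame compression do not guarantee.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamPlan:
    """Hypothetical description of a cloud stream with client-side frame synthesis."""
    display_fps: int        # frames per second shown to the player
    streamed_fps: int       # frames per second actually sent by the server
    kbits_per_frame: float  # assumed average encoded frame size (made-up figure)

    def synthesized_per_streamed(self) -> float:
        # Frames the client must generate for each frame it receives.
        return self.display_fps / self.streamed_fps - 1

    def bandwidth_kbps(self) -> float:
        # Simplification: every streamed frame costs the same number of bits.
        return self.streamed_fps * self.kbits_per_frame


# Compare sending all 60 frames with sending 20 and synthesizing the rest.
full = StreamPlan(display_fps=60, streamed_fps=60, kbits_per_frame=100.0)
hybrid = StreamPlan(display_fps=60, streamed_fps=20, kbits_per_frame=100.0)

saving = 1 - hybrid.bandwidth_kbps() / full.bandwidth_kbps()
print(f"client synthesizes {hybrid.synthesized_per_streamed():.0f} frames per received frame")
print(f"bandwidth saving under the equal-frame-size assumption: {saving:.0%}")
```

Under these assumptions the client has to produce two frames for every one it receives. The saving shown is an upper bound rather than a prediction, since an encoder already spends few bits on frames that closely resemble their neighbors.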

Overall, the impact of real-time AI-rendered Quake II in a browser is multifaceted: it validates the browser as a platform for high-end interactive experiences, it showcases AI’s potential to replicate and preserve games, and it opens new discussions on how cloud and local computing might split workloads using AI. Perhaps most importantly, it has captured the imagination of both gamers and developers about what the future holds when you combine the universality of the web, the power of AI, and the rich legacy of classic games.

Challenges, Limitations, and What’s Next

As groundbreaking as the AI Quake II browser demo is, it comes with a host of challenges and limitations. Being a research experiment, it’s not without flaws. Understanding these issues is important for charting the path forward for similar projects. Let’s examine the key challenges and then look at what the future might bring:

1. Technical Limitations of the Current Approach:

- Low Resolution & Frame Rate: As discussed, WHAMM outputs 640×360 at ~10 fps. This is far below modern standards for resolution and frame fluidity. The low resolution leads to blurry visuals when scaled up, and the low frame rate affects playability. Pushing these higher is non-trivial – a sharper image or more frames per second would sharply increase the computational load, since the work grows with both the pixels per frame and the frames per second. The team would need either more efficient models or more powerful hardware (or both). Model efficiency could come from techniques like model distillation (simplifying the model after training), quantization (using lower precision math beyond what they already did), or architectural improvements that allow parallel generation of image regions. Hardware will naturally improve with time; by 2030, a consumer GPU might handle what a 2025 GPU cannot. There’s also the possibility of foveated rendering with AI – i.e., generate high-res output for the center of the view and lower res for the periphery, mimicking how human vision works, to save computation.

- Model Size and Load Time: We don’t have exact figures, but such models can be large (potentially gigabytes). Downloading a huge model in a browser is a concern (though techniques like progressive loading or streaming parts of the model could help). Running it also uses a lot of memory. For widespread adoption, these models need to be slimmer. One idea is to train a general model on multiple games so you don’t need separate huge models for each; however, that’s a research challenge on its own.

- Determinism and Consistency: Traditional game engines are deterministic – given the same input, they produce the same output every time, which is important for things like networking (multiplayer sync) and fairness. Neural networks often introduce non-determinism (due to floating-point non-determinism or intentional randomness, such as sampling from the model’s output distribution at inference time). In the Quake II demo, the game isn’t meant to be networked or played seriously, so it’s fine that it might not be exactly repeatable. But if AI were to replace an engine in a commercial game, developers would need ways to either enforce determinism or live with unpredictable outcomes (which would be a paradigm shift in design). Also, the AI might occasionally produce an odd frame that doesn’t logically connect to the previous one (a hallucination). Smoothing over these inconsistencies is a challenge – perhaps using a secondary AI to verify or correct outputs could be explored (akin to an ensemble of AIs keeping each other in check).

- Interactivity Constraints: The current model only handles visual output given the player’s actions. It doesn’t truly simulate the game world in a full sense. For example, enemy AI behavior in the demo is not driven by the original game’s logic but is just whatever the model recalls seeing during training. This led to enemies that don’t act correctly – “combat with them can be incorrect” as the blog noted. They are more props than intelligent foes. For a complete game experience, AI models would need to either incorporate game logic or interface with a game logic module. That means a hybrid approach: perhaps the game’s core rules (health, damage, enemy thinking) run in code, and the AI handles visuals. Striking that balance is tricky. In Quake II’s case, they deliberately did not aim to fully replicate the gameplay, focusing on the visual aspect. Future work might integrate the two better – for instance, using the game engine to handle hit detection and telling the AI, “an enemy was hit at this position, now generate the explosion there.” That requires a tight coupling between code and AI, which can be complex to engineer.

- Memory and Continuity Issues: As mentioned, WHAMM can forget about objects that have been out of view for more than 0.9 seconds. This is essentially a limitation of the sequence length and training of the transformer model. It had a context window of only a few frames (they mentioned using 9 previous image-action pairs, which at roughly 10 fps covers about 0.9 seconds). Anything beyond that horizon, the model doesn’t explicitly remember unless it’s encoded somehow in the current frame. Overcoming this could involve giving the model a longer memory (more frames of context, or some state vector it carries). But that increases computation and training complexity. Another approach is external memory – some separate module that tracks world state and feeds it to the model. This starts to become like rebuilding a game engine alongside the AI, somewhat defeating the purpose. It’s a tough challenge: how to make the AI aware of the unobserved world state? Perhaps future world models will encode an internal representation of the entire map and where entities are (a bit like how humans build mental maps). There’s research in recurrent neural nets and other structures for this, but nothing plug-and-play for complex 3D games yet.

- Compatibility and Generalization: The Quake II model is specific to one level (it appears to be the first level of Quake II). If the player somehow went to a level the model never saw, it would fail completely. Training on every level would be a huge task: they noted that only about a week of gameplay data was needed for one level, but every additional level means more data to collect. So, scaling to full games is challenging. And each game is separate – a model trained on Quake II doesn’t directly help with Quake III, because the visuals and rules differ. So to make this widely useful, either an automated training pipeline for any given game needs to be made efficient, or a more generalized gaming model needs development (like a model that knows a lot of FPS games and can adapt). Both are hard; the former is an engineering problem (who’s going to gather all that data? how to ensure quality?), the latter is a frontier in ML research (general AI that can handle multiple tasks without retraining from scratch).
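The forgetting behavior described in the memory bullet above follows directly from a fixed-length context. Here is a minimal sketch, assuming the 9-pair context and roughly 10 fps cited in this post. The `ContextWindow` class and its methods are hypothetical illustrations of the idea, not WHAMM’s actual code, and a real model stores image tokens rather than a set of object names.

```python
from collections import deque
from dataclasses import dataclass, field

CONTEXT_PAIRS = 9   # image-action pairs kept in context, as cited above
FPS = 10            # approximate generation rate, as cited above


@dataclass
class ContextWindow:
    """Hypothetical rolling buffer of (frame_id, action, visible_objects) entries."""
    pairs: deque = field(default_factory=lambda: deque(maxlen=CONTEXT_PAIRS))

    def push(self, frame_id: int, action: str, visible: frozenset) -> None:
        # Appending to a full deque silently evicts the oldest entry.
        self.pairs.append((frame_id, action, visible))

    def remembers(self, obj: str) -> bool:
        # An object is "remembered" only if a frame still in context shows it.
        return any(obj in visible for _, _, visible in self.pairs)

    def horizon_seconds(self) -> float:
        # How far back in time the context reaches.
        return CONTEXT_PAIRS / FPS


window = ContextWindow()
window.push(0, "forward", frozenset({"enemy"}))        # the enemy is seen once
for frame_id in range(1, CONTEXT_PAIRS):               # 8 frames spent looking away
    window.push(frame_id, "turn_left", frozenset())
still_there = window.remembers("enemy")                # frame 0 is still in context

window.push(CONTEXT_PAIRS, "turn_left", frozenset())   # one more frame evicts frame 0
forgotten = not window.remembers("enemy")

print(f"context horizon: {window.horizon_seconds():.1f} s")
print(f"remembered after 8 frames away: {still_there}, forgotten after 9: {forgotten}")
```

Lengthening the buffer pushes the horizon out, but in a transformer each extra frame of context adds tokens to attend over, which is why the text treats longer memory as a cost trade-off rather than a free fix.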

2. Practical Issues:

- Resource Requirements: Not everyone has a WebGPU-compatible browser or a powerful GPU. As of early 2025, Chrome and Edge ship WebGPU by default on desktop, while support in Firefox and Safari is still in progress. Users on mobile devices or older PCs might not be able to run this demo smoothly or at all. So, the audience is limited. Over the next couple of years, WebGPU should become standard, but hardware limitations will remain – you need a relatively recent GPU with good compute performance to run a model like this. This raises the question: for broad accessibility, would a cloud streaming approach be re-introduced? Possibly, yes – if someone without a capable device wants to try, the server might run the AI and just stream video (like a Twitch of AI gameplay). That’s basically cloud gaming, coming full circle, albeit with a very different rendering process server-side.

- Legal and Rights Issues: If an AI can learn a game’s content, who owns the result? Microsoft could do Quake II presumably because Quake II is owned by id/Bethesda (which Microsoft now owns via the ZeniMax acquisition). But what about learning a game that you don’t own the IP for? Is an AI-generated clone of a game considered copyright infringement or a derivative work? These are unexplored legal waters. For example, if someone trained an AI on Mario or Zelda gameplay and made it playable on the web, Nintendo might have objections. The AI isn’t using any of Nintendo’s code or assets directly, but it’s reproducing them from learned patterns. Courts haven’t decided on how training data and output of generative models relate to copyright in cases like these. So, one challenge for “AI game preservation” is navigating intellectual property rules. Game companies may need to give permission or even provide data if this approach is to be used legitimately. The quote above by Phil Spencer on game preservation suggests Microsoft sees it as a tool for keeping old games alive – they’d likely use it on titles they have rights to.

- Player Acceptance: Outside of the awe factor, would players want to play games via AI if given a choice? Right now it’s novel, but if the quality doesn’t match the original, many would prefer the original source port or remaster for actual playing. So, this concept probably won’t replace traditional gaming until it can match it in fidelity and accuracy. Gamers are notoriously sensitive to input lag and weird visual artifacts – an AI that forgets enemies or renders a gun oddly will be seen as a flaw, not a feature. The path to acceptance is to use AI where it provides a clear benefit (like higher frame rate or new features) without damaging the experience. At the moment, the AI Quake II is a fun experiment rather than a better way to play Quake II. Ensuring future AI-powered games genuinely enhance the experience will be key to their adoption.

3. What’s Next – The Road Ahead: Despite the challenges, the trajectory is exciting. We can anticipate:

- More AI-Enhanced Classic Games: Microsoft’s team will likely try other games – perhaps something like Halo 1 or an older Xbox title – to see how the model performs. As The Verge mused, more interactive AI game experiments are probably coming. Each will teach new lessons. For example, a driving game might be attempted, or a side-scroller platformer. The general approach might need tweaking per genre.

- Hybrid Engine Models: We touched on this – mixing conventional rendering with AI. One near-term possibility: use AI to fill in complex effects that are hard to render. For instance, imagine a game that renders basic geometry with WebGPU (so it’s sharp and high FPS), but then uses an AI pass to add realistic lighting and textures to that geometry, essentially style-transferring the simple render into a more detailed image. This could be akin to applying the Quake II RTX look via an AI filter on a rasterized frame. If done well, it could approximate ray tracing quality without the full cost. NVIDIA and others are researching neural radiance caching and similar ideas – using neural nets to approximate parts of the rendering equation. So a browser game could combine the best of both: engine for speed, AI for beauty.

- Improvements in World Models: The WHAMM model improved on prior work by being faster and higher res. We can expect further models (WHAMM v2?) that maybe hit 30 fps at 720p, or incorporate rudimentary enemy logic, etc. There’s a lot of active research in transformers for video. The gaming angle might push those models to be better at interactive video generation, not just passive prediction. This could spill over to other fields (e.g., robotics simulators using world models). The researchers themselves acknowledged many areas for improvement, like enemy behavior and object permanence. Solving those in Quake II could make the technique viable for more complex games.

- Community and Modding Tools: Perhaps Microsoft or others will release tools to allow the community to train their own simplified versions. If hobbyists could, for example, train a mini-world-model on their custom Doom levels, we might see a flurry of AI-augmented retro games. This is speculative, but if the trend catches on, it might birth a new modding scene where people trade AI models of games like they trade source ports and mods today.

- Integration in Game Engines: Companies like Unity and Unreal might take note and provide features for developers to utilize AI rendering. Unreal Engine already has a heavy focus on photorealism and could incorporate AI upscalers or even let devs slot in a neural renderer for certain elements. Unity might make it easier to export a lightweight representation of a game for training an AI (like generating the training data automatically). If these engines see that AI can offload some rendering tasks, they’ll invest in it.

- Edge Computing and New Services: As discussed, we might see cloud services offering “AI game experiences” – not full games, but say, interactive replays. Imagine watching a recorded playthrough of a game, but you can take over control at any point and the AI will improvise what happens next even if the original recording didn’t go that way. This could be a product: a mix of let’s-play video and game demo, powered by AI. It’s similar to how some text adventure AIs let you deviate from a script. This could prolong the life of linear games by giving them some sandbox feel via AI (though consistency is a concern, as always).

4. Overcoming Limitations – a Glimpse Forward: The limitations are significant, but not insurmountable. Let’s address a few directly with future tech that could solve them:

- The fuzzy graphics can be solved by future models or by combining AI with classic rendering (to anchor the image). One could use a higher-res conditional input. For example, render a wireframe of the scene at full res and let the AI color it in. That way edges of objects stay sharp, and AI just fills texture and lighting – fewer chances for blur.

- The forgetful memory might be tackled by giving the model a map of the level (since level geometry is known for an old game). If the AI knows “there’s a wall here even if you don’t see it now,” it might not hallucinate that area when you turn around. This is like adding a constraint to the generation based on known level data. It blends data-driven and rule-based approaches.

- The low frame rate could be mitigated by frame interpolation AI. One AI could generate keyframes of Quake II, and another AI (like a video interpolation model) could interpolate between those frames to create two in-between frames per pair, boosting the frame rate from roughly 10 fps to roughly 30 fps without fully rendering each one. Modern TVs do interpolation for videos; doing it for interactive content is harder due to unpredictable motion, but not impossible. NVIDIA’s DLSS 3 frame generation is a step in that direction for traditional rendering. For neural renderers, someone could attempt a similar idea.
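The frame-rate arithmetic in the last bullet can be sketched in a few lines. This is only an illustration: the linear cross-fade in `blend` is a deliberately naive stand-in for a learned interpolation model (it is not how DLSS 3 or any video interpolation network works), and all function names and the one-pixel “frames” are hypothetical.

```python
from typing import List

Frame = List[List[float]]  # tiny grayscale image, pixel values in [0, 1]


def blend(a: Frame, b: Frame, t: float) -> Frame:
    """Linear cross-fade between two frames; a stand-in for a learned interpolator."""
    return [[(1 - t) * pa + t * pb for pa, pb in zip(row_a, row_b)]
            for row_a, row_b in zip(a, b)]


def interpolate_stream(keyframes: List[Frame], inbetween: int) -> List[Frame]:
    """Insert `inbetween` blended frames between each pair of consecutive keyframes."""
    out: List[Frame] = []
    for a, b in zip(keyframes, keyframes[1:]):
        out.append(a)
        for k in range(1, inbetween + 1):
            out.append(blend(a, b, k / (inbetween + 1)))
    if keyframes:
        out.append(keyframes[-1])
    return out


def output_fps(keyframe_fps: float, inbetween: int) -> float:
    # Each keyframe interval is split into (inbetween + 1) displayed frames.
    return keyframe_fps * (inbetween + 1)


# Three one-pixel keyframes, standing in for model output at ~10 fps.
keys: List[Frame] = [[[0.0]], [[0.9]], [[0.0]]]
frames = interpolate_stream(keys, inbetween=2)

print(f"{len(keys)} keyframes -> {len(frames)} displayed frames")
print(f"10 fps keyframes with 2 in-betweens -> {output_fps(10, 2):.0f} fps")
```

One design consequence is worth noting: interpolation needs the next keyframe before it can fill the gap, so it adds at least one keyframe interval of delay (about 100 ms at 10 fps), which is part of why interactive use is harder than TV-style video smoothing.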

Finally, it’s worth noting what the researchers themselves intend. They made it clear “this is intended to be a research exploration… Think of this as playing the model as opposed to playing the game”. They are not trying to replace Quake II; they are trying to discover what’s possible. They also acknowledged “interactions with enemy characters is a big area for improvement” and pointed out the fun/funny side-effects like despawning enemies by looking away. Embracing these quirks, one could even make new gameplay mechanics (imagine a horror game where if you don’t look at the monster, it disappears – which is actually the opposite of usual horror tropes!).

In conclusion, the Quake II AI browser demo is a starting point, not an endpoint. It has shown one path forward for game technology, even as other paths (like ray tracing, VR, etc.) continue in parallel. The next steps will involve refining the technique, scaling it, and finding practical uses. It might be a few years before we see a commercial product use this tech; in the meantime, it remains a fascinating glimpse into the future. As AI models become more capable and web technology more powerful, the idea of “AI-generated gaming experiences” could shift from a novelty to a commonplace option in the gamer’s toolkit. Just as Quake II once revolutionized 3D graphics in 1997, its AI reincarnation in 2025 might be pointing to the next revolution: games that aren’t just coded and rendered – they are trained and imagined.

References

- Microsoft Copilot Labs – AI-Rendered Quake II Demo
https://copilot.microsoft.com/labs/quake2

- The Verge – Microsoft Uses AI to Recreate Quake II in the Browser
https://www.theverge.com/2025/3/30/microsoft-ai-quake-2-browser-demo

- GitHub – WHAMM Model for Quake II Rendering
https://github.com/microsoft/whamm

- NVIDIA – DLSS: Deep Learning Super Sampling Explained
https://www.nvidia.com/en-us/geforce/technologies/dlss/

- Google Developers – WebGPU Overview and Capabilities
https://developer.chrome.com/docs/web-platform/webgpu/

- NVIDIA – Quake II RTX Download and Features
https://www.nvidia.com/en-us/geforce/quake-ii-rtx/

- OpenAI – The Role of World Models in Interactive AI
https://openai.com/research/world-models

- TensorFlow.js – Running ML in the Browser
https://www.tensorflow.org/js

- Hugging Face – Transformers for Vision and Video
https://huggingface.co/docs/transformers/index

- Digital Foundry – Quake II RTX Analysis
https://www.eurogamer.net/digitalfoundry-quake-ii-rtx-tech-analysis