AI in Government: Global Success Stories, Policy Frameworks, and the Road to Ethical Automation

In an era defined by rapid technological advancement and increasing demands for responsive governance, artificial intelligence (AI) has emerged as a strategic catalyst for transforming public administration. Around the world, national, regional, and local governments are increasingly integrating AI into their operational frameworks to enhance efficiency, streamline service delivery, and make data-informed decisions. From optimizing traffic flow in congested urban centers to predicting fraudulent claims in welfare systems, AI is no longer an experimental tool—it is an essential component of modern governance.

The growing momentum behind AI in the public sector is driven by a confluence of factors. Firstly, the public expects faster, more intuitive digital services that mirror the responsiveness of private sector platforms. Secondly, governments face intensifying budgetary pressures and workforce constraints that require innovative approaches to maintain service quality. Lastly, the proliferation of big data—ranging from census and health records to transportation and environmental sensors—necessitates advanced analytical tools that can extract actionable insights from vast information reservoirs. AI, particularly in the form of machine learning, natural language processing, and predictive analytics, is uniquely equipped to meet these challenges.

One of the key shifts in the digital evolution of government is the transition from basic digitization—such as electronic forms or websites—to intelligent automation, where systems not only collect data but interpret, learn from, and act on it. For example, municipal governments are deploying AI to detect infrastructure issues before they escalate into costly repairs, while national agencies use algorithms to enhance the accuracy and speed of administrative adjudication processes. These developments not only reduce administrative burden but also enable public officials to focus on strategic tasks requiring human judgment and ethical reasoning.

Early adopters of AI in government provide valuable blueprints for broader implementation. Estonia, often lauded as the world’s most digitally advanced government, uses AI to automate processes such as unemployment benefit decisions and has explored AI support for judicial tasks. Singapore employs AI across a multitude of city-state functions, from predictive policing to intelligent traffic management. Meanwhile, the United States and European Union are steadily advancing policies and frameworks to govern the safe and effective use of AI in sensitive public domains.

However, while the potential benefits of AI in government are vast, they are matched by equally significant challenges. Issues such as algorithmic bias, lack of transparency, data governance, and public mistrust must be carefully navigated. Furthermore, governments must grapple with technical debt, outdated infrastructure, and skill shortages, all of which complicate AI adoption. This complexity underscores the need for a measured, strategic approach to deploying AI in public service—one that aligns technological innovation with ethical governance and democratic values.

This blog explores the state of AI in government by examining practical use cases, ethical and legal frameworks, successful case studies, implementation challenges, and future roadmaps. Through a comprehensive lens, it aims to answer a fundamental question: what works when it comes to AI in government?

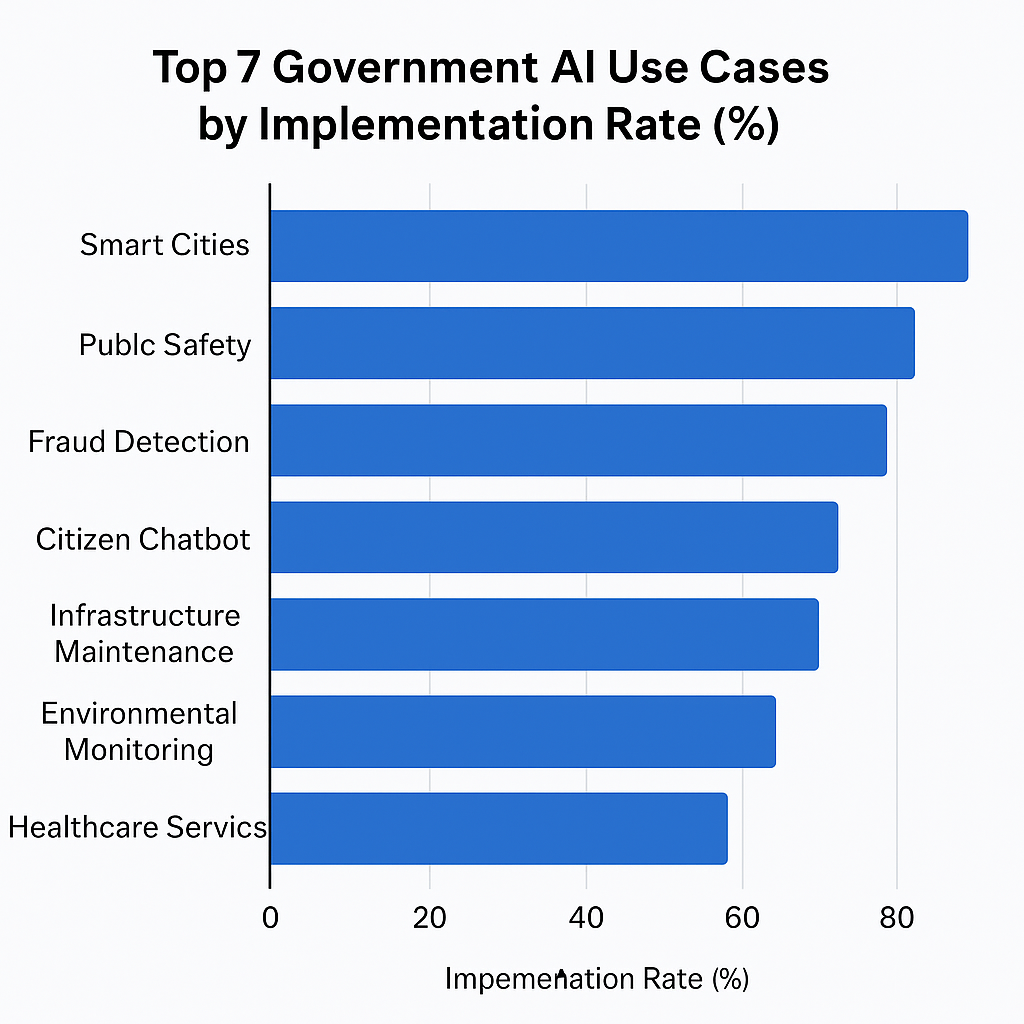

In the following sections, we will dissect the core areas where AI has delivered measurable public value, spotlight global best practices, and evaluate the institutional prerequisites for AI readiness. Alongside this analysis, two data-driven charts and one comparative table will provide a visual summary of trends and benchmarks. The goal is to inform policymakers, technologists, and the public on how to responsibly harness AI to create smarter, more resilient, and citizen-centric governments.

AI Use Cases in Government Operations

Artificial Intelligence has transitioned from a conceptual innovation to a practical tool driving efficiency, precision, and scale in government operations. Across the globe, public institutions are leveraging AI to automate tasks, reduce bureaucratic complexity, and improve the responsiveness of services delivered to citizens. By adopting AI systems in day-to-day functions, governments are redefining how essential public services are designed, managed, and delivered.

Smart City Management and Urban Analytics

One of the most visible and impactful applications of AI in government lies in smart city initiatives. Urban centers increasingly face challenges such as congestion, pollution, infrastructure wear, and public safety. Governments are employing AI-powered platforms that process real-time data from IoT sensors, surveillance cameras, and traffic systems to optimize city operations. For instance, AI algorithms can dynamically adjust traffic signals based on real-time vehicle flow, significantly reducing congestion and emissions. Similarly, smart waste management systems use predictive analytics to optimize collection routes, thereby reducing operational costs and environmental impact.
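To make the idea of dynamically adjusted signals concrete, here is a minimal sketch that splits a fixed signal cycle between approaches in proportion to their observed queue lengths. The function name `split_green_time`, the 90-second cycle, and the 10-second minimum green time are illustrative assumptions; real adaptive signal systems use much richer traffic models.

```python
def split_green_time(queues: dict[str, int], cycle_s: float = 90.0,
                     min_green_s: float = 10.0) -> dict[str, float]:
    """Allocate green time per approach in proportion to queued vehicles.

    Hypothetical illustration: every approach is guaranteed a minimum
    green time, and the rest of the cycle is shared by queue length.
    """
    if not queues:
        return {}
    reserved = min_green_s * len(queues)
    if reserved > cycle_s:
        raise ValueError("cycle too short for the minimum green times")
    spare = cycle_s - reserved
    total = sum(queues.values())
    if total == 0:
        # No vehicles detected anywhere: share the spare time equally.
        return {k: min_green_s + spare / len(queues) for k in queues}
    return {k: min_green_s + spare * q / total for k, q in queues.items()}


print(split_green_time({"north_south": 30, "east_west": 10}))
# {'north_south': 62.5, 'east_west': 27.5}
```

Proportional splitting is the simplest reasonable policy; production controllers also account for pedestrian phases, coordination with neighboring intersections, and the rate at which a queue can actually discharge.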

Cities like Barcelona and Singapore have integrated AI-driven dashboards that consolidate information across transportation, energy, water, and security networks. These platforms provide municipal administrators with actionable insights for real-time decision-making and long-term urban planning. As cities continue to grow, the demand for AI-driven spatial analytics and predictive modeling will become more critical for sustainable urban governance.

Enhancing Public Safety and Law Enforcement

Governments are increasingly turning to AI to bolster public safety and improve the effectiveness of law enforcement. AI-powered surveillance systems utilize computer vision to detect anomalies, identify suspicious behaviors, and enhance situational awareness. In certain jurisdictions, facial recognition technologies are employed—albeit controversially—for identifying individuals involved in criminal activity. While such technologies raise ethical and privacy concerns, their use has shown potential in accelerating investigations and preventing crimes in high-risk areas.

Predictive policing, another emerging application, uses historical crime data to forecast likely locations and times of criminal activity. Police departments in cities like Los Angeles and London have piloted such tools to allocate patrol resources more efficiently, although Los Angeles ended its program in 2020 amid criticism over bias and effectiveness. Additionally, AI-driven forensic analysis tools can rapidly process large datasets, including audio, video, and digital evidence, to help investigators solve cases more swiftly than traditional manual methods allow.

Infrastructure Maintenance and Asset Management

AI is also revolutionizing the way governments maintain public infrastructure. Predictive maintenance systems analyze data from sensors embedded in roads, bridges, and public buildings to forecast wear and detect structural issues before they escalate into emergencies. This approach not only reduces downtime and repair costs but also improves public safety by preempting infrastructure failures.

For example, New York City has employed machine learning models to identify potholes by analyzing images captured from street-level cameras. Similarly, water utilities in the United Kingdom have adopted AI to predict pipe bursts by correlating environmental, usage, and historical data. These technologies allow for preemptive maintenance scheduling and optimized resource allocation, moving governments from reactive to proactive management strategies.
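The shift from reactive to proactive maintenance can be illustrated with a deliberately simple model: fit a straight line to recent wear measurements and extrapolate when a safety threshold will be reached. The sketch below assumes hypothetical readings (for example, crack width on a bridge sensor) taken in chronological order; the function name and the linear-wear assumption are illustrative, and real systems typically use richer models and uncertainty estimates.

```python
from typing import Optional


def days_until_threshold(days: list[float], wear: list[float],
                         threshold: float) -> Optional[float]:
    """Estimate days from the last reading until wear reaches `threshold`.

    Fits an ordinary least-squares line to chronological (day, wear) pairs
    and extrapolates it. Returns None if wear is not increasing, and 0.0 if
    the fitted line has already crossed the threshold.
    """
    n = len(days)
    if n != len(wear) or n < 2:
        raise ValueError("need at least two paired readings")
    mean_x = sum(days) / n
    mean_y = sum(wear) / n
    sxx = sum((x - mean_x) ** 2 for x in days)
    if sxx == 0:
        raise ValueError("readings must span more than one day")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(days, wear))
    slope = sxy / sxx
    if slope <= 0:
        return None  # no upward trend, so no predicted crossing
    intercept = mean_y - slope * mean_x
    crossing_day = (threshold - intercept) / slope
    return max(0.0, crossing_day - days[-1])


# Hypothetical crack-width readings (mm) every 30 days; alert level 2.0 mm.
print(days_until_threshold([0, 30, 60, 90], [1.0, 1.2, 1.4, 1.6], 2.0))
```

In this example the fitted line reaches 2.0 mm about 60 days after the last reading, which is the kind of lead time that lets crews schedule repairs before an emergency.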

Citizen Services and AI Chatbots

As citizen expectations for fast, personalized digital services grow, many governments are deploying AI chatbots to handle routine public inquiries. These virtual assistants are available 24/7, capable of processing and responding to large volumes of questions related to taxation, social services, licensing, and public transportation. By doing so, they alleviate the burden on human customer service representatives and reduce waiting times for citizens.

One notable example is “Alex,” the Australian Taxation Office’s virtual assistant, which has handled millions of interactions with high satisfaction rates. In India, the Ministry of Electronics and Information Technology (MeitY) launched a multilingual chatbot to assist with digital literacy and services under the “Digital India” initiative. These tools exemplify how natural language processing (NLP) enables accessible, inclusive, and efficient communication between governments and their constituents.
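At its core, a question-answering assistant matches an incoming question to known answers and hands off to a human when it is unsure. Production assistants generally rely on trained language models; the sketch below swaps in a much simpler technique, word-overlap (Jaccard) similarity, to show the matching-and-handoff logic. The FAQ entries, function names, and the 0.2 cutoff are hypothetical.

```python
import re
from typing import Optional

# Hypothetical FAQ entries; a real deployment would load these from a
# maintained knowledge base.
FAQ = {
    "When is the tax return deadline?": "Placeholder answer about filing deadlines.",
    "How do I renew my driving licence?": "Placeholder answer about licence renewal.",
}


def tokens(text: str) -> set[str]:
    """Lower-case word tokens; a deliberately simple tokenizer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def best_answer(question: str, faq: dict[str, str],
                min_score: float = 0.2) -> Optional[str]:
    """Return the answer whose FAQ question best overlaps the query.

    Similarity is the Jaccard index of the two word sets. If no entry
    reaches `min_score`, return None so the query can be routed to a
    human agent instead of receiving a poor automated answer.
    """
    query = tokens(question)
    best, best_score = None, 0.0
    for faq_question, answer in faq.items():
        candidate = tokens(faq_question)
        union = query | candidate
        score = len(query & candidate) / len(union) if union else 0.0
        if score > best_score:
            best, best_score = answer, score
    return best if best_score >= min_score else None


print(best_answer("What is the deadline for my tax return?", FAQ))
print(best_answer("Parking permit for visitors", FAQ))  # None: hand off
```

The explicit handoff threshold matters as much as the matching method: it keeps the assistant from confidently answering questions it does not cover.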

Fraud Detection and Risk Assessment

Government programs related to taxation, healthcare, and welfare are particularly vulnerable to fraud, abuse, and error due to their size and complexity. AI provides a powerful toolset for detecting anomalies, uncovering hidden patterns, and flagging high-risk transactions. Machine learning models trained on historical data can identify suspicious claims, detect underreporting of income, or flag fictitious accounts with greater speed and accuracy than manual reviews.
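A common building block for flagging suspicious claims is a robust outlier score. The sketch below uses the modified z-score (median and median absolute deviation), which, unlike the mean and standard deviation, is not distorted by the very outliers it is trying to find. The function name, the 3.5 cutoff (a conventional choice for this statistic), and the claim amounts are illustrative assumptions; real fraud models combine many features.

```python
from statistics import median


def robust_outliers(amounts: list[float], cutoff: float = 3.5) -> list[int]:
    """Indices of claims whose modified z-score exceeds `cutoff`.

    The constant 0.6745 rescales the median absolute deviation (MAD) so
    the score is comparable to a standard z-score for normal data.
    """
    if not amounts:
        return []
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        # More than half the values are identical; flag anything different.
        return [i for i, a in enumerate(amounts) if a != med]
    return [i for i, a in enumerate(amounts)
            if abs(0.6745 * (a - med) / mad) > cutoff]


# Hypothetical claim amounts; index 5 stands out.
claims = [120, 135, 110, 128, 140, 4000, 125, 118]
print(robust_outliers(claims))  # [5]
```

Flagged items are best treated as leads for human review rather than grounds for automatic denial, which keeps a caseworker accountable for the final decision.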

For instance, the U.S. Internal Revenue Service (IRS) utilizes AI models to detect tax fraud by analyzing millions of filings for inconsistencies. Similarly, social security administrations in several European countries use AI to prevent overpayments and identify non-eligible recipients. These applications significantly reduce losses, protect public funds, and maintain the integrity of government programs.

Healthcare Resource Allocation and Pandemic Response

The COVID-19 pandemic highlighted the critical role AI can play in public health and emergency response. Governments used AI to forecast infection trends, identify high-risk regions, allocate medical supplies, and monitor compliance with public health measures. For example, South Korea combined mass testing with data-driven contact tracing that drew on mobile-phone location and card-transaction records, which helped control the spread of the virus while minimizing lockdowns.

In non-emergency scenarios, public health agencies use AI to predict hospital admissions, optimize ambulance deployment, and prioritize patients for preventive care. AI algorithms also assist in drug approval processes, patient triage systems, and early disease detection in national screening programs. The integration of AI into health departments enables evidence-based policy decisions and targeted interventions that improve population health outcomes.
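Forecasting hospital admissions can start from something as simple as exponential smoothing, where each new observation nudges a running estimate. This is a minimal sketch under stated assumptions: the function name, the smoothing factor, and the weekly admission counts are hypothetical, and operational forecasts would add seasonality, trends, and uncertainty bands.

```python
def exp_smoothing_forecast(history: list[float], alpha: float = 0.5) -> float:
    """One-step-ahead forecast by simple exponential smoothing.

    Each step blends the newest observation (weight `alpha`) with the
    previous smoothed level (weight 1 - alpha).
    """
    if not history:
        raise ValueError("need at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for x in history[1:]:
        level = alpha * x + (1 - alpha) * level
    return level


# Hypothetical weekly admissions.
print(exp_smoothing_forecast([100, 110, 120, 130]))  # 121.25
```

The forecast of 121.25 trails a series that is climbing by 10 per week, which shows the method's main limitation: it lags sustained trends. Holt's linear method, which adds a separate trend term, is the usual next step.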

Environmental Monitoring and Climate Action

Climate change and environmental sustainability have become top priorities for governments worldwide. AI enhances environmental governance by enabling real-time monitoring of pollution levels, water quality, deforestation, and wildlife movement. AI-powered satellite imagery analysis helps track illegal land use, monitor forest degradation, and assess the impact of climate events such as floods and wildfires.

The European Union’s Copernicus program, implemented in partnership with the European Space Agency, uses AI to process vast volumes of Earth observation data, providing insights for policymakers on climate risks and biodiversity loss. In the United States, the Environmental Protection Agency (EPA) employs AI models to analyze air quality data and predict pollution spikes, enabling quicker public alerts and regulatory responses.

This wide-ranging applicability of AI in government operations demonstrates not only the maturity of the technology but also its alignment with public sector mandates. However, as implementation deepens, it becomes increasingly necessary to pair innovation with robust policy frameworks to address the ethical, legal, and operational implications.

Policy, Ethics, and Transparency in Government AI

As artificial intelligence becomes increasingly embedded in government operations, the imperative for robust policy frameworks, ethical safeguards, and transparent governance has never been more urgent. While AI presents transformative potential for enhancing public services, it also introduces profound questions around accountability, fairness, and democratic legitimacy. Governments must balance the drive for innovation with the responsibility to uphold citizens’ rights, values, and trust. This section examines the evolving landscape of public sector AI governance, focusing on regulatory developments, ethical considerations, and mechanisms to ensure transparency and public oversight.

The Need for AI Governance in the Public Sector

AI in government does not operate in a vacuum. When public institutions deploy algorithmic systems for decision-making—whether in policing, welfare distribution, or immigration—there are significant implications for due process, equity, and social justice. Unlike in the private sector, where market forces and competition exert some measure of accountability, governments must adhere to constitutional norms, legal standards, and democratic accountability.

Unregulated or poorly governed AI systems risk perpetuating systemic biases, making opaque decisions that affect citizens’ lives, and eroding trust in public institutions. These risks are exacerbated by the so-called "black box" nature of many AI models, especially deep learning systems that lack interpretability. As a result, governments have a distinct obligation to ensure AI systems are not only technically effective but also procedurally fair and socially acceptable.

Ethical AI Principles: From Declarations to Implementation

Over the past few years, numerous international bodies and national governments have published high-level principles for ethical AI. Common themes include fairness, transparency, accountability, privacy, and human oversight. The OECD’s AI Principles, the EU’s Ethics Guidelines for Trustworthy AI, and UNESCO’s Recommendation on the Ethics of Artificial Intelligence have set a global foundation for normative guidance.

However, translating these principles into actionable policies remains a complex challenge. Governments must operationalize ethical standards into procurement practices, risk assessments, and development protocols. For example, Canada has introduced an Algorithmic Impact Assessment (AIA) tool: a questionnaire that scores a proposed automated decision system on factors such as its effects on individuals’ rights and interests, the sensitivity of its data, and its degree of automation. The resulting impact level determines which safeguards apply, such as peer review, notice to affected people, and human involvement in final decisions, and completed assessments are published.

Similarly, New Zealand has adopted the Algorithm Charter for Aotearoa New Zealand, a voluntary commitment by government agencies to the explainable, inclusive, and fair use of algorithms. These frameworks show that ethical AI need not remain a conceptual goal: it can be translated into concrete commitments against which public agencies can be held to account.

Transparency and Explainability in Government Algorithms

Transparency is a cornerstone of democratic governance. In the context of AI, this means that citizens should be able to understand how algorithmic decisions are made, what data is used, and what safeguards are in place. This is particularly vital when AI systems impact civil liberties, such as determining eligibility for benefits, surveillance, or policing.

Explainable AI (XAI) techniques are being developed to address these needs, allowing models to produce human-understandable justifications for their outputs. Governments are beginning to adopt these technologies, but challenges remain—especially in ensuring explainability at scale and in systems based on complex neural architectures.
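For the simplest class of models, a human-understandable justification can be computed exactly. In a linear scoring model, each feature’s contribution relative to a reference case is its weight times the difference from that reference, and the contributions add up to the full change in score. The sketch below uses hypothetical feature names, weights, and a hypothetical benefit-priority score; for complex models, methods such as SHAP approximate this kind of additive attribution.

```python
def explain_linear(weights: dict[str, float], baseline: dict[str, float],
                   case: dict[str, float]) -> list[tuple[str, float]]:
    """Per-feature contributions for a linear score, largest magnitude first.

    For score = bias + sum(w_i * x_i), feature i contributes
    w_i * (x_i - baseline_i) relative to the baseline case. Positive
    values push the score up; the contributions sum exactly to the
    difference between the two scores.
    """
    contributions = [(name, w * (case[name] - baseline[name]))
                     for name, w in weights.items()]
    return sorted(contributions, key=lambda kv: abs(kv[1]), reverse=True)


# Hypothetical priority-scoring model and cases.
WEIGHTS = {"months_unemployed": 0.5, "dependents": 1.0, "monthly_income_k": -1.0}
BASELINE = {"months_unemployed": 2, "dependents": 1, "monthly_income_k": 3}
APPLICANT = {"months_unemployed": 8, "dependents": 2, "monthly_income_k": 1.5}

for feature, delta in explain_linear(WEIGHTS, BASELINE, APPLICANT):
    print(f"{feature}: {delta:+.1f}")
```

Ranked output like this ("months unemployed raised your score most") is the kind of explanation a caseworker or applicant can check against the underlying facts.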

Some jurisdictions have implemented “algorithmic registers” that disclose the use of automated decision systems by public agencies. The cities of Amsterdam and Helsinki, for instance, maintain public portals where citizens can review the AI systems in use, the data they rely on, and the purposes they serve. Such initiatives not only foster transparency but also invite civic participation and oversight.
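An algorithmic register is, at bottom, a structured, publishable record per system. The sketch below shows one way to model such an entry and serialize it for a public portal. The field names and example values are hypothetical and do not reproduce the schema of the Amsterdam or Helsinki registers.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class RegisterEntry:
    """One public entry in a hypothetical algorithmic register."""
    name: str
    agency: str
    purpose: str
    data_sources: list[str] = field(default_factory=list)
    human_oversight: str = ""
    contact: str = ""


entry = RegisterEntry(
    name="Parking permit triage",
    agency="Hypothetical City Parking Department",
    purpose="Prioritise permit applications for manual review",
    data_sources=["permit applications", "vehicle registrations"],
    human_oversight="All refusals are reviewed by a case officer",
    contact="register@example.org",
)

# Machine-readable JSON lets the same record feed a web page and open data.
print(json.dumps(asdict(entry), indent=2))
```

Keeping entries machine-readable lets journalists, researchers, and oversight bodies compare systems across agencies rather than reading each disclosure by hand.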

Legal Frameworks and Regulatory Oversight

On the legislative front, governments are developing comprehensive legal frameworks to regulate AI deployment. The European Union’s AI Act represents the most ambitious attempt to date. It classifies AI systems based on risk tiers and imposes stringent requirements on “high-risk” applications, particularly those used in law enforcement, border control, and social welfare. Obligations include data governance standards, transparency, human oversight, and conformity assessments.

The United States, while less centralized in its regulatory approach, has seen progress at both federal and state levels. President Biden’s October 2023 Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence directed federal agencies to establish safeguards and assess the impacts of their AI tools, although it was revoked in January 2025. Meanwhile, the Federal Trade Commission (FTC) has signaled that existing consumer-protection law applies to AI, and the National Institute of Standards and Technology (NIST) has published voluntary guidance for trustworthy AI, notably its AI Risk Management Framework.

Importantly, legal frameworks must not only regulate AI systems post-deployment but also guide their development lifecycle through design controls, audit trails, and contingency plans. Public agencies must build internal compliance capacities and create dedicated units for AI governance.

Public Trust, Civic Engagement, and Social Legitimacy

Technology alone cannot resolve governance challenges. Public trust is a critical factor in the successful adoption of AI in government. Citizens must believe that AI systems are designed and operated in their best interests, particularly those from vulnerable or marginalized communities who may be disproportionately impacted by algorithmic decisions.

To foster legitimacy, governments must invest in public engagement, participatory design, and open dialogue. Citizen assemblies, community consultations, and stakeholder mapping exercises are increasingly being used to co-create AI policies and ensure diverse voices are heard. For instance, the UK’s Centre for Data Ethics and Innovation has organized national forums to discuss AI’s societal impact and gather citizen feedback on specific policy proposals.

Trust is also reinforced through independent oversight. Countries like the Netherlands and Australia have established AI ethics committees and data protection authorities with investigatory powers. These bodies can intervene in cases of misuse, recommend corrective actions, and promote best practices across departments.

Procurement and Vendor Accountability

Finally, government AI systems are often built by third-party vendors. This necessitates clear procurement guidelines that enforce ethical standards and technical requirements. Governments must ensure that vendors disclose training data sources, model limitations, and bias mitigation techniques.

Framework contracts can embed fairness audits, explainability clauses, and data-sharing protocols. The World Bank and GovAI initiatives advocate for responsible procurement strategies, ensuring that ethical principles are upheld throughout the public-private partnership lifecycle.

In summary, as governments embrace the power of AI, they must simultaneously strengthen the guardrails that ensure this power is exercised responsibly. Policy, ethics, and transparency are not supplementary concerns—they are foundational pillars of any credible AI strategy in the public sector. By embedding accountability into system design, ensuring openness in algorithmic processes, and engaging citizens in meaningful dialogue, governments can unlock AI’s benefits while safeguarding democratic values.

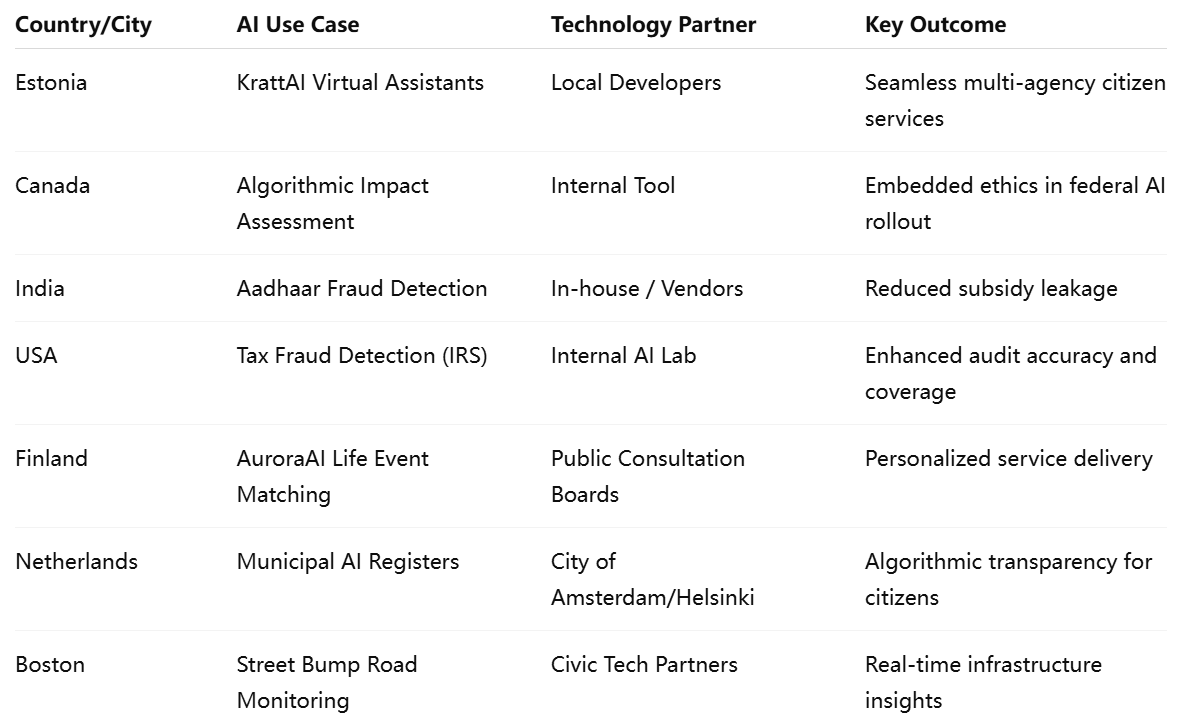

Real-World Case Studies: Global and Local Successes

While theoretical frameworks and ethical guidelines provide the foundation for AI integration in government, real-world implementations offer the clearest view of what truly works. Across diverse geopolitical and institutional contexts, several governments have successfully harnessed AI to modernize public administration, optimize resource allocation, and improve citizen services. These case studies—ranging from national digital ecosystems to localized pilot programs—highlight both the practical benefits and the nuanced challenges of AI deployment in the public sector.

Estonia: The Blueprint of a Digital Nation

Estonia stands out as one of the most advanced examples of a digitally native government. Since the early 2000s, Estonia has developed an extensive e-governance framework, and in recent years, it has integrated AI across numerous public services. The cornerstone of Estonia’s approach is its X-Road infrastructure, which enables seamless and secure data exchange between public and private sector entities.

One of Estonia’s most prominent AI projects is KrattAI (now being implemented as Bürokratt), an interoperable network of public-sector virtual assistants. Named after a creature from Estonian folklore that performs tasks for its master, it is designed to let citizens interact with multiple government services through a single AI-powered interface. These virtual assistants are intended to handle tasks ranging from renewing prescriptions to filing tax documents, while adhering to stringent data privacy and transparency standards.

Moreover, Estonia has explored AI-driven decision support in judicial and administrative domains, such as automating routine steps in simple civil procedures to improve consistency and reduce case backlog. Widely circulated reports of an AI “robot judge” deciding small claims, however, were denied by the Estonian Ministry of Justice. The government emphasizes “human-in-the-loop” oversight, ensuring that final decisions are reviewed and approved by civil servants.

Canada: Institutionalizing Impact Assessments

Canada has taken a pioneering role in creating institutional mechanisms for responsible AI governance. Its Directive on Automated Decision-Making makes the Algorithmic Impact Assessment (AIA) mandatory for federal institutions that use automated systems to make or support administrative decisions affecting individuals.

This tool requires agencies to self-assess the potential risks of a system along multiple dimensions, including impacts on human rights, fairness, and transparency, before deployment. Based on the assigned impact level (Level I to Level IV), specific requirements are triggered, such as peer review, plain-language notice, human intervention in decisions, and explanations for affected individuals.
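The tiered logic, where a risk score maps to a level and each level adds safeguards, can be sketched as follows. This is a hypothetical simplification: the equal 25-point bands, the `impact_level` and `required_safeguards` names, and the safeguard lists are illustrative assumptions, not the Directive’s actual scoring rules or requirements table.

```python
def impact_level(score_pct: float) -> int:
    """Map an impact score (percent of the maximum) to Level 1-4.

    Illustrative assumption: equal quartile bands.
    """
    if not 0 <= score_pct <= 100:
        raise ValueError("score must be between 0 and 100")
    if score_pct <= 25:
        return 1
    if score_pct <= 50:
        return 2
    if score_pct <= 75:
        return 3
    return 4


# Hypothetical safeguards added at each level, for illustration only.
SAFEGUARDS = {
    1: ["plain-language notice"],
    2: ["peer review"],
    3: ["human makes final decision"],
    4: ["independent audit"],
}


def required_safeguards(level: int) -> list[str]:
    """Safeguards accumulate: a higher level inherits all lower-level ones."""
    return [s for lvl in range(1, level + 1) for s in SAFEGUARDS[lvl]]


print(required_safeguards(impact_level(60)))
```

The cumulative design mirrors the idea behind tiered regimes: obligations scale with risk, and a high-impact system never escapes a safeguard required of a lower-impact one.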

The Canada Revenue Agency (CRA) has used AI for fraud detection and tax compliance within this governance regime, where AIA documentation and internal oversight structures are meant to monitor fairness and reduce algorithmic bias. Cases like this suggest that robust oversight can coexist with technological innovation rather than stifling it.

India: Scaling AI in Public Service Delivery

India, with its vast population and complex bureaucracy, presents both immense potential and considerable challenges for AI in governance. Nevertheless, the country has launched several high-impact AI initiatives, particularly in identity management and transportation.

The Aadhaar system, the world’s largest biometric ID program, has integrated AI to verify identity and detect fraud in welfare distribution. Machine learning algorithms analyze transaction patterns and biometric mismatches to identify anomalies, enabling more accurate targeting of subsidies and reducing leakages.

Another notable example is DigiYatra, a facial recognition system introduced at major airports to streamline passenger processing. By linking a traveler’s verified identity to boarding-pass data, DigiYatra allows paperless entry and boarding, reducing queues at checkpoints. Importantly, participation is voluntary, and passengers can continue to use conventional document checks instead.

India has also launched the National AI Portal and AI for All initiatives to support digital literacy, ethical guidelines, and data governance frameworks. These programs emphasize capacity building and inclusivity, ensuring that AI deployment does not exacerbate the digital divide.

United States: Departmental Innovation Amidst Decentralization

In the United States, AI implementation has largely been decentralized, with federal agencies independently pursuing AI-driven innovations. The Department of Homeland Security (DHS) has piloted machine learning models for visa fraud detection and border risk assessment, while the Department of Health and Human Services (HHS) uses AI to predict disease outbreaks and allocate resources.

The Internal Revenue Service (IRS) employs AI to detect tax evasion by analyzing patterns across large datasets of tax returns, audits, and financial transactions. By surfacing inconsistencies and anomalies, these tools help the IRS target its enforcement resources more precisely.

Additionally, U.S. Citizenship and Immigration Services (USCIS) uses natural language processing (NLP) in tools such as its virtual assistant, Emma, to answer routine immigration questions and reduce the load on human agents. However, AI use in immigration and border systems has also faced scrutiny over transparency and bias, prompting internal reviews and public consultations aimed at enhancing accountability.

A notable initiative is the AI.gov platform, which consolidates federal AI projects, offers educational resources, and provides access to guidelines such as the AI Risk Management Framework published by the National Institute of Standards and Technology (NIST). While the U.S. lacks a centralized AI regulatory body, efforts are underway to harmonize AI policy through executive actions and interagency collaborations.

Finland and the Netherlands: Participatory Approaches to AI Ethics

Both Finland and the Netherlands have earned recognition for their citizen-centric approach to AI governance. In Finland, the AuroraAI program links citizens to public services based on life events using predictive algorithms. For example, if a citizen has a child, the system might proactively recommend child care services, healthcare appointments, and parental leave benefits.

Rather than simply rolling out this technology, the Finnish government involved thousands of citizens in co-designing the platform. Public consultations, citizen panels, and cross-sector working groups shaped the ethical foundation and usability of the system.

Similarly, the Dutch National AI Strategy emphasizes human rights, openness, and accountability. Amsterdam and Helsinki jointly launched AI Registers—public databases where citizens can see what AI systems are used by municipal services, including information on data sources and risk ratings. These tools set a global benchmark for algorithmic transparency and civic empowerment.

Local Innovation: Boston’s AI for Infrastructure

At the municipal level, cities are increasingly experimenting with AI and data-driven tools to address localized challenges. A compelling example comes from Boston, where the Mayor’s Office of New Urban Mechanics launched a smartphone application called Street Bump to help locate road defects. The app uses the phone’s accelerometer and GPS to detect bumps in real time as residents drive over road anomalies. The city aggregates these reports to identify and prioritize road repairs, supplementing manual inspections with crowd-sourced monitoring.

The project demonstrated the potential of civic tech and public-private partnerships, and it also became a widely cited lesson in data bias: early reports skewed toward neighborhoods with higher smartphone ownership. Street Bump shows how even low-cost, algorithm-driven tools can make tangible improvements in everyday public services when implemented with strategic vision and community engagement.
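The crowd-sourcing step described above can be sketched in two parts: detect a jolt on the phone, then confirm a location only when several independent devices report it. This is a minimal sketch under stated assumptions: the 0.5 g threshold, the assumption that the phone’s z-axis is vertical, the 4-decimal grid (cells roughly 11 m tall), the three-device rule, and all function names are hypothetical, not Street Bump’s actual algorithm.

```python
from collections import defaultdict


def is_jolt(vertical_accel_g: float, threshold_g: float = 0.5) -> bool:
    """True when vertical acceleration departs sharply from 1 g (gravity).

    Assumes the phone's z-axis is roughly vertical, which real apps
    must correct for.
    """
    return abs(vertical_accel_g - 1.0) > threshold_g


def confirmed_bumps(reports: list[tuple[str, float, float]],
                    min_devices: int = 3,
                    precision: int = 4) -> list[tuple[float, float]]:
    """Grid cells reported by at least `min_devices` distinct phones.

    Each report is (device_id, latitude, longitude) for a detected jolt.
    Counting distinct devices filters out one-off events such as a phone
    being dropped or a single driver hitting the same spot repeatedly.
    """
    devices_by_cell: dict[tuple[float, float], set[str]] = defaultdict(set)
    for device, lat, lon in reports:
        cell = (round(lat, precision), round(lon, precision))
        devices_by_cell[cell].add(device)
    return sorted(cell for cell, devs in devices_by_cell.items()
                  if len(devs) >= min_devices)


# Hypothetical reports: three phones agree on one spot; one phone alone elsewhere.
reports = [
    ("a", 42.35811, -71.06031), ("b", 42.35809, -71.06029),
    ("c", 42.35812, -71.06030), ("a", 42.36000, -71.05000),
    ("a", 42.36001, -71.05001),
]
print(confirmed_bumps(reports))
```

A fixed grid is the simplest aggregation, but nearby reports can straddle a cell boundary; distance-based clustering avoids that at the cost of more computation. The distinct-device rule also connects to the bias lesson above: areas with fewer app users need more time, or other data sources, to reach the confirmation threshold.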

These global and local case studies illustrate that AI can generate substantial public value when implemented strategically, ethically, and inclusively. They reveal that success is not simply a function of technological maturity, but of institutional readiness, regulatory foresight, and civic trust.

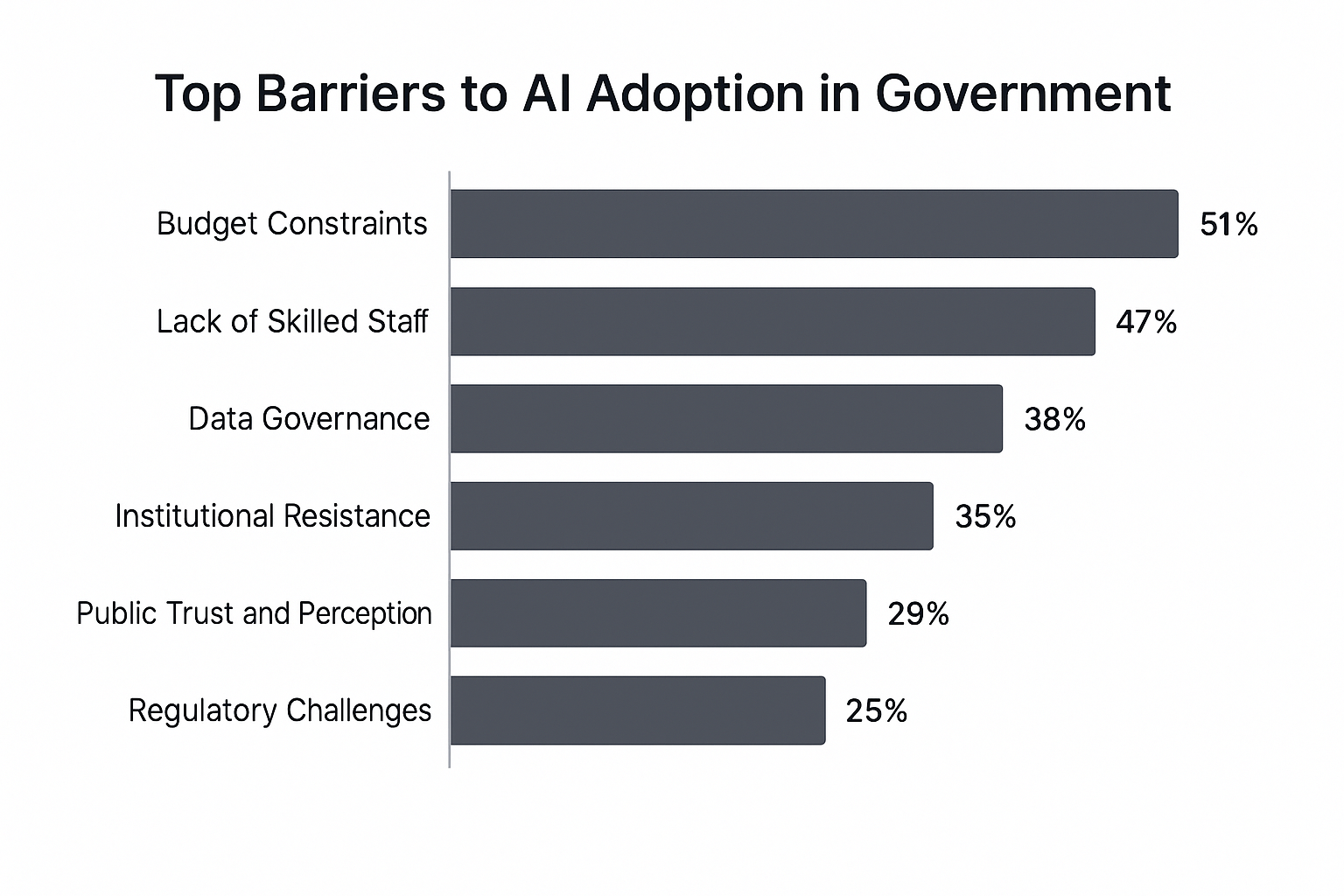

Barriers to Adoption and How to Overcome Them

While the promise of artificial intelligence in government is well-documented through early adoption case studies, the path to widespread and sustainable implementation is riddled with challenges. The complexity of public institutions, combined with the sensitivity of government functions, creates a unique set of barriers to AI adoption that differ significantly from those in the private sector. These barriers span financial constraints, skills shortages, institutional inertia, data governance complexities, and public skepticism. Addressing them requires a multidimensional approach that blends policy innovation, capacity building, and stakeholder collaboration.

Budget Constraints and Procurement Complexity

Government agencies often operate under strict budgetary allocations and face rigid procurement procedures that are ill-suited to fast-evolving technologies like AI. Unlike commercial organizations that can make agile investment decisions, public sector entities are bound by fiscal cycles, competitive bidding mandates, and lengthy approval processes. This inhibits the ability to rapidly prototype, test, and scale AI applications.

Furthermore, AI development and deployment require sustained investment—not only in software but also in infrastructure, cybersecurity, and workforce training. Many governments, especially in developing economies, struggle to justify such investments when faced with more immediate social service demands.

Strategic Response:

To overcome financial hurdles, governments can adopt modular AI architectures that allow incremental implementation, beginning with small-scale pilots. Public-private partnerships (PPPs) are also a viable model, enabling governments to share both costs and risks with external vendors. In addition, innovation funds and digital transformation grants—such as the EU’s Digital Europe Programme or the UK’s AI and Data Economy Fund—can be used to underwrite AI experimentation within ministries and municipalities.

Talent Shortage and Capacity Gaps

A critical barrier to AI adoption is the scarcity of AI expertise within the public sector. Governments often compete with tech firms and academia for highly skilled data scientists, machine learning engineers, and AI ethicists. Civil service salary structures and rigid career paths further exacerbate the difficulty in attracting and retaining top talent.

The challenge extends beyond technical staff. Policymakers, legal advisors, procurement officers, and program managers must also develop a functional understanding of AI to make informed decisions and provide effective oversight. Without this institutional competence, AI projects risk being poorly scoped, mismanaged, or ethically flawed.

Strategic Response:

Governments must prioritize AI literacy and workforce upskilling. National academies and public sector training institutions should integrate AI modules into their curricula. For example, Singapore’s Civil Service College offers AI fluency programs for public officers, while Canada and the UK provide digital competency frameworks for civil servants. Additionally, governments can create rotational fellowships and interchange programs with academia and the private sector to import expertise and foster knowledge transfer.

Data Governance and Interoperability Challenges

AI systems thrive on high-quality, well-structured data. However, many government departments operate in silos, with fragmented data architectures and incompatible formats. Legacy systems, which are still widely used across public institutions, pose integration challenges that limit the utility of AI models. Moreover, data sharing across departments or between national and local agencies is often restricted by bureaucratic, legal, or political constraints.

Beyond infrastructure issues, there are also significant concerns around data privacy, consent, and usage rights, particularly when dealing with sensitive domains such as health, taxation, and criminal justice. Failure to address these concerns can lead to public backlash and legal complications.

Strategic Response:

Governments must invest in data modernization, including cloud migration, standardized APIs, and data catalogs. Establishing centralized data governance bodies can ensure interoperability, enforce quality standards, and oversee ethical data use. Initiatives such as the European Interoperability Framework and India's draft National Data Governance Framework Policy serve as models for harmonized and secure data ecosystems. Furthermore, privacy-preserving techniques such as differential privacy and federated learning, together with anonymization, can help mitigate concerns about data misuse.
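To make "differential privacy" concrete, the sketch below shows the classic Laplace mechanism applied to a counting query, the kind of aggregate statistic an agency might publish from sensitive records. It is a minimal illustration, not a production library: the record format, the `flagged` field, and the function names are hypothetical, and real deployments also have to track the total privacy budget (epsilon) spent across every release.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential samples with mean `scale`
    is Laplace-distributed with that same scale.
    """
    rate = 1.0 / scale  # expovariate takes a rate, i.e. 1 / mean
    return rng.expovariate(rate) - rng.expovariate(rate)


def dp_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    Adding or removing one person's record changes a count by at most 1,
    so the query's sensitivity is 1 and the noise scale is 1 / epsilon.
    Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Usage with hypothetical welfare-claim records:
claims = [{"district": "north", "flagged": i % 7 == 0} for i in range(1000)]
rng = random.Random(42)
noisy_flagged = dp_count(claims, lambda r: r["flagged"], epsilon=0.5, rng=rng)
```

The published figure is close to the true count on average, but no single individual's presence or absence can be reliably inferred from it; choosing epsilon is a policy decision as much as a technical one.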

Institutional Resistance and Risk Aversion

Public institutions tend to be conservative, shaped by risk aversion and compliance obligations. This culture can slow innovation, particularly when a technology such as AI carries reputational and legal risks. Fear of failure, negative media coverage, or political backlash often discourages experimentation.

In addition, bureaucratic inertia and hierarchical decision-making compound the problem. Public sector AI initiatives frequently suffer from unclear ownership, fragmented leadership, and a lack of cross-departmental coordination. Without a clear mandate and strategic alignment, AI projects may be abandoned midstream or fail to scale beyond pilot phases.

Strategic Response:

Institutional resistance can be mitigated by embedding AI initiatives within clear governance frameworks that define roles, responsibilities, and escalation mechanisms. Governments should establish AI steering committees or appoint chief AI officers to provide strategic direction and ensure continuity. Regulatory sandboxes, controlled environments that allow safe experimentation, can enable innovation while managing risks. Additionally, documenting and publicly sharing lessons from pilot projects, whether successful or not, helps normalize experimentation and build institutional confidence.

Public Skepticism and Trust Deficit

AI systems used by governments directly impact citizens’ lives, from determining eligibility for services to monitoring public behavior. As such, any perception of bias, surveillance, or lack of transparency can erode public trust. Controversial deployments, such as facial recognition systems or predictive policing algorithms, have triggered protests and legal challenges in multiple countries.

The lack of explainability in AI decisions further complicates the situation. When citizens do not understand why a decision was made—especially if it appears unjust or opaque—they are more likely to view AI with suspicion. This trust deficit can significantly hinder the political feasibility of scaling AI across public institutions.

Strategic Response:

Building trust requires transparency, accountability, and civic engagement. Governments should publish algorithmic registers, conduct impact assessments, and provide accessible explanations of how AI systems work. Mechanisms for redress and human appeal must be embedded into any automated decision-making process. Furthermore, public consultations, citizen assemblies, and stakeholder workshops should be standard practice before launching AI initiatives with wide societal implications. The public AI registers that Helsinki and Amsterdam launched in 2020 illustrate how transparency combined with participatory governance can strengthen legitimacy and trust.
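One way to make registers and redress requirements enforceable rather than aspirational is to treat each register entry as structured data and check it before a system goes live. The sketch below assumes a hypothetical entry schema; its field names are illustrative and are not the schema used by Helsinki, Amsterdam, or any other real register.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class RegisterEntry:
    """One entry in a hypothetical public algorithmic register.

    Field names are illustrative, not the schema of any real register.
    """
    system_name: str
    purpose: str                   # plain-language description for citizens
    data_sources: List[str]
    automated_decision: bool       # does the system decide without a human?
    human_review_available: bool   # can a citizen request human review?
    appeal_contact: str = ""       # where citizens send a request for redress
    impact_assessment_done: bool = False


def publication_issues(entry: RegisterEntry) -> List[str]:
    """Return the gaps that should block publishing or deploying this system."""
    issues = []
    if not entry.purpose.strip():
        issues.append("missing plain-language purpose")
    if not entry.data_sources:
        issues.append("data sources not disclosed")
    if not entry.impact_assessment_done:
        issues.append("no impact assessment on record")
    # Automated decisions need both a human appeal route and a contact point.
    if entry.automated_decision and not entry.human_review_available:
        issues.append("automated decision without human appeal")
    if entry.automated_decision and not entry.appeal_contact.strip():
        issues.append("no contact point for redress")
    return issues
```

An empty list means the entry meets these (assumed) minimum requirements; anything else is a concrete to-do list for the responsible team, which keeps transparency obligations auditable.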

Fragmented Regulatory Environment

In many countries, the regulatory landscape surrounding AI remains underdeveloped or inconsistently applied. Different departments or regions may interpret ethical guidelines and data laws differently, leading to a lack of coherence and potential conflicts. This fragmentation complicates procurement, compliance, and enforcement.

Strategic Response:

To streamline AI governance, governments must establish unified policy frameworks that cut across agencies and levels of government. This includes central AI oversight bodies, national AI strategies, and common risk management protocols. Global coordination through platforms such as the OECD, the World Economic Forum, and the UN's AI for Good platform, organized by the ITU, can also help harmonize standards and encourage the sharing of best practices.

In conclusion, while the barriers to AI adoption in government are substantial, they are not insurmountable. Success requires more than technological solutions—it demands visionary leadership, organizational reform, and a steadfast commitment to ethical governance. By recognizing and proactively addressing these challenges, governments can pave the way for AI systems that are not only efficient but also trustworthy, inclusive, and accountable.

Building AI-Ready Governments

As artificial intelligence continues to evolve from a niche technological domain into a foundational element of public governance, the question is no longer whether governments should adopt AI, but how they can do so effectively, ethically, and at scale. The path forward demands a systemic transformation in how governments operate, design policy, manage talent, and interact with citizens. Building AI-ready governments requires not only the integration of digital infrastructure but also the cultivation of trust, the institutionalization of accountability, and more agile administrative processes.

Institutionalizing AI Governance and Leadership

One of the most urgent steps in preparing governments for AI is the establishment of clear institutional governance. This includes the creation of centralized AI oversight bodies, the appointment of Chief AI Officers (CAIOs), and the development of interagency coordination councils. These entities should be empowered to set standards, monitor compliance, and provide strategic direction across ministries and departments.

Furthermore, national AI strategies must evolve from high-level vision documents into actionable roadmaps with measurable outcomes. Countries such as France, the United Arab Emirates, and South Korea have already moved in this direction, combining central leadership with performance monitoring and adaptive policy cycles. The integration of AI ethics committees and regulatory sandboxes within governance frameworks can provide additional safeguards while encouraging responsible experimentation.

Cultivating a Digitally Fluent Public Workforce

Governments must invest in equipping civil servants with the knowledge and skills needed to engage with AI systems critically and competently. AI-readiness is not confined to data scientists or developers; it must extend to policy analysts, procurement officers, frontline workers, and elected officials. Without widespread AI literacy, governments risk being overly reliant on external vendors, leading to knowledge asymmetries and weakened internal control.

Public administration academies should modernize their curricula to include foundational AI concepts, algorithmic accountability, and risk management practices. Micro-credentialing, on-the-job training, and cross-sector fellowships are also effective mechanisms to foster a more agile and informed workforce. Moreover, recruiting from diverse professional backgrounds—including law, philosophy, and social sciences—will help ensure AI development reflects multidisciplinary and inclusive perspectives.

Fostering Collaborative Ecosystems

Building AI-ready governments also necessitates strong collaboration with academia, civil society, and the private sector. No government can master the complexities of AI in isolation. Strategic partnerships with research institutions can help governments stay at the frontier of technological innovation and benefit from open-source solutions and collective intelligence.

Equally important is the inclusion of citizens and advocacy groups in AI policymaking. Participatory governance—through public consultations, citizen panels, and digital democracy platforms—strengthens legitimacy and aligns AI deployment with public values. The co-design of services with end users not only improves usability but also increases transparency and trust.

Open innovation platforms and civic tech accelerators can serve as testing grounds for pilot initiatives while creating feedback loops between government and the public. The work of Singapore's Government Technology Agency (GovTech) shows how inclusive ecosystems can accelerate scalable AI deployment while maintaining ethical integrity.

Ensuring Robust Legal and Ethical Infrastructure

As AI systems become more embedded in decision-making, the legal and ethical frameworks that govern them must be continuously updated. Legislative bodies should prioritize the development of comprehensive AI laws that address risk classification, redress mechanisms, and algorithmic transparency. These frameworks should be interoperable with international standards to avoid regulatory fragmentation and enable cross-border collaboration.

At the same time, governments must avoid overregulation that could stifle innovation or delay critical implementations. A tiered, risk-based approach—as exemplified by the European Union’s AI Act—offers a balanced model by calibrating regulatory burdens according to the potential harm of specific applications.
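The logic of a tiered, risk-based approach can be sketched as a mapping from use cases to obligation levels. The AI Act distinguishes prohibited practices, high-risk systems, systems subject to transparency obligations, and minimal-risk systems. The mapping below is a simplified, hypothetical illustration: the use-case labels are invented, and actual classification depends on the legal text (for example, the Article 5 prohibitions and the Annex III high-risk areas), not on a lookup table.

```python
from enum import Enum


class RiskTier(Enum):
    """Simplified risk tiers; the values summarize the kind of obligation."""
    UNACCEPTABLE = "prohibited"
    HIGH = "risk management, documentation, human oversight"
    LIMITED = "transparency obligations"
    MINIMAL = "no additional obligations"


# Hypothetical use-case labels mapped to tiers for illustration only.
USE_CASE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "benefit_eligibility": RiskTier.HIGH,
    "public_service_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def classify(use_case: str) -> RiskTier:
    # Unknown use cases default to HIGH so they are reviewed rather than
    # waved through; this conservative default is a design assumption.
    return USE_CASE_TIERS.get(use_case, RiskTier.HIGH)


for case in ["benefit_eligibility", "spam_filter", "new_pilot_project"]:
    tier = classify(case)
    print(f"{case}: {tier.name} ({tier.value})")
```

The point of the design is proportionality: regulatory effort scales with potential harm, so a spam filter and a benefit-eligibility model do not carry the same compliance burden.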

Embedding ethics-by-design into AI development processes is equally critical. This means integrating ethical principles from the outset of project design, ensuring that fairness, accountability, and non-discrimination are not afterthoughts but foundational components. Regular audits, impact assessments, and third-party reviews can further strengthen public oversight.
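As one example of what a regular audit might compute, the sketch below compares approval rates across groups and flags any group whose rate falls below 80% of the best-off group's rate. This "four-fifths" heuristic comes from US employment-selection guidance and is used here only as an illustrative screening test, not as a legal standard for public services; the group labels and data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Share of positive outcomes (e.g. approvals) for each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def adverse_impact_flags(decisions, threshold: float = 0.8) -> Dict[str, float]:
    """Groups whose selection rate is below `threshold` times the highest rate.

    Returns each flagged group's ratio to the best-off group's rate.
    """
    rates = selection_rates(decisions)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {}  # nobody was approved, so ratios are undefined
    return {g: r / best for g, r in rates.items() if r / best < threshold}


# Usage with hypothetical audit data: (group, approved?) pairs.
audit_log = [("A", True)] * 60 + [("A", False)] * 40 + [("B", True)] * 40 + [("B", False)] * 60
flags = adverse_impact_flags(audit_log)
```

A flag is a prompt for investigation rather than proof of discrimination; auditors would still need to examine the data, the features used, and the context of each decision.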

Future-Proofing Through Strategic Foresight

Finally, AI-readiness requires a forward-looking mindset. Governments must develop foresight capabilities to anticipate emerging challenges—such as AI in warfare, quantum computing impacts, or deepfake disinformation—and formulate proactive responses. Scenario planning, horizon scanning, and simulation exercises should be institutionalized within policymaking processes.

Investing in resilient infrastructure, adaptive legislation, and continuous public engagement will help governments remain flexible in the face of technological volatility. AI is not a static tool but a dynamic ecosystem; government systems must be equally dynamic to accommodate its evolution.

The road ahead for AI in government is one of promise and responsibility. AI can enhance public value, improve decision-making, and make institutions more responsive and equitable. But realizing this potential requires intentional design, inclusive governance, and unwavering commitment to democratic principles.

Governments that succeed will be those that view AI not merely as a technological upgrade but as a transformative opportunity to reimagine public service. By embedding AI-readiness into their core institutional DNA, these governments will not only meet today’s challenges but also shape a more intelligent, accountable, and resilient future.

References

- OECD AI Principles – OECD: https://oecd.ai/en/ai-principles
- European Commission AI Act Overview: https://digital-strategy.ec.europa.eu/en/policies/european-approach-artificial-intelligence
- GovTech Singapore Initiatives: https://www.tech.gov.sg/
- KrattAI Project – Estonia: https://kratid.ee/en/
- Canada's Algorithmic Impact Assessment Tool: https://www.canada.ca/en/government/system/digital-government/digital-government-innovations/responsible-use-ai/algorithmic-impact-assessment.html
- AuroraAI Program – Finland: https://www.auroraai.fi/en/
- AI Register – City of Helsinki: https://ai.hel.fi/
- AI.gov – U.S. Federal AI Initiatives: https://www.ai.gov/
- Digital India and DigiYatra Programs: https://www.digitalindia.gov.in/
- World Economic Forum – AI Governance Toolkit: https://www.weforum.org/projects/global-ai-action-alliance