AI Hallucinations and Supply Chain Attacks: A Confluence of Emerging Risks

Artificial intelligence (AI) has made remarkable strides in recent years, especially with large language models (LLMs) capable of generating text and even code. However, these models sometimes “hallucinate” – producing information that sounds plausible but is entirely fabricated or incorrect. An AI hallucination occurs when an AI system (often an LLM) generates false or misleading content with a confident tone, potentially leading humans astray. For example, in early 2023, Google’s Bard chatbot famously claimed the James Webb Space Telescope took the first picture of an exoplanet – a false statement that went viral and embarrassed its makers. Such hallucinations can range from minor factual errors to intricate fabrications (like fake legal cases or nonexistent code libraries), posing risks in any domain where AI is applied.

Simultaneously, the world of cybersecurity has seen a sharp rise in software supply chain attacks – breaches where attackers target the dependencies and software supply processes of organizations rather than attacking them directly. In a supply chain attack, a threat actor compromises a trusted software component or update, thereby poisoning the downstream users of that software. High-profile incidents like the SolarWinds Orion compromise in 2020 (where a malicious update was installed by about 18,000 organizations) have illustrated how far-reaching these attacks can be. In 2022 alone, over 10 million people were impacted by supply chain attacks spanning 1,743 breached entities. Experts have observed an alarming growth trend – supply chain cyberattacks increased 78% in 2018 and soared by 742% between 2019 and 2022. This means what was once a niche attack technique has exploded into one of the most significant cybersecurity threats today.

In this blog post, we will explore AI hallucinations and software supply chain attacks in depth. We’ll introduce each concept and then examine their intersection – specifically how AI hallucinations in coding and software development could open the door to new supply chain vulnerabilities. Through case studies and examples, we will illustrate the dangers of these phenomena both independently and combined. Finally, we will discuss recommendations and best practices to mitigate the risks, with a focus on cybersecurity-sensitive applications where trust and accuracy are paramount. The goal is to provide a comprehensive understanding of these emerging risks in a formal yet accessible way for general readers and cybersecurity professionals alike.

Understanding AI Hallucinations

AI hallucinations refer to instances where an AI system generates output that looks valid but is actually baseless or incorrect. In essence, the AI is “seeing” or creating something that isn’t real – much like a hallucination in a human mind. Technically, this is often discussed in the context of generative AI models such as LLMs. “An AI hallucination is when a large language model generates false information or misleading results”, as defined by TechTarget. The key issue is that the AI’s response is syntactically correct and confident-sounding, making it hard for users to immediately tell it’s fabricated.

Why do hallucinations occur? There are several causes:

- Imperfect Training Data: LLMs learn from vast datasets scraped from the internet and other sources. If that data contains errors, inconsistencies, or biases, the model can internalize them. For example, if the training data has an incorrect fact repeated often, the model may present it as truth. Lack of information on a topic can also lead the AI to “fill in the blanks” with its best guess – often a hallucination.

- Prediction Limitations: These models don’t have a true “understanding” of facts; they predict likely sequences of words. Sometimes the prediction process (the transformer decoding) leads to fluent but incorrect outputs. The model might stitch together plausible-sounding phrases it has seen, but those phrases may not be grounded in reality.

- Ambiguous or Complex Prompts: If a user’s prompt or question is unclear, contradictory, or asks for unknown information, the AI may produce a distorted answer. LLMs are inclined to always give an answer – they don’t know how to say “I don’t know” unless trained explicitly to do so. As a result, they may confabulate – essentially inventing an answer that fits the question form, even if it’s false.

It’s important to note that hallucination is a well-known weakness of generative AI. Even advanced models designed to minimize errors can still confidently assert falsehoods. This has real-world consequences. A striking example occurred in the legal field in 2023: an attorney submitted a brief that cited six nonexistent court cases which an AI (ChatGPT) had completely made up. The issue went unnoticed until a judge questioned the sources, leading to sanctions against the lawyer for filing a brief full of “fake opinions” generated by the AI. This case highlighted that professionals relying on AI must verify its outputs – a hallucinating AI can seriously damage credibility and cause legal or financial harm.

In everyday use, AI hallucinations can produce anything from trivial misinformation (e.g. incorrectly stating a historical date) to dangerous advice. In healthcare, for instance, an AI assistant might hallucinate a medical recommendation that has no clinical basis, potentially risking patient safety. In software development (which we will focus on later), an AI coding assistant might fabricate a function or library that doesn’t exist. The risk is amplified when users trust AI output without verification. Misinformation can propagate quickly, and in high-stakes settings, a hallucination can lead to faulty decisions or security vulnerabilities.

It’s worth noting that researchers and developers are actively working on reducing hallucinations. Approaches include improving training data quality, grounding the model’s responses in factual databases, and tuning the model’s sampling temperature (a parameter that controls randomness in output; lower temperatures generally reduce creative fabrication). Nonetheless, as of 2025, hallucinations remain a significant challenge for AI. Users of tools like ChatGPT, Bard, or GitHub Copilot are advised to treat AI outputs as draft suggestions that need human review, rather than absolute truth.
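To make the temperature idea concrete, here is a minimal sketch of temperature-scaled softmax, the standard way raw model scores are turned into sampling probabilities. The scores below are hypothetical and do not come from any real model; the point is only that a lower temperature concentrates probability on the top candidate.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores to probabilities, scaled by a sampling temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
cool = softmax_with_temperature(logits, 0.5)  # sharper distribution
warm = softmax_with_temperature(logits, 1.5)  # flatter distribution

print("T=0.5:", [round(p, 3) for p in cool])
print("T=1.5:", [round(p, 3) for p in warm])
```

At the lower temperature the most likely token takes a larger share of the probability mass, so unlikely continuations are sampled less often. That reduces randomness, but it does not make the top candidate true.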

Understanding Software Supply Chain Attacks

A software supply chain attack is a type of cyberattack where adversaries target the links in the chain of software development, distribution, or update mechanisms, rather than attacking an organization’s defenses head-on. In simpler terms, instead of breaking into a company’s servers directly, an attacker might compromise a software component or service that the company (and many others) rely on. By doing so, the attacker can infiltrate many targets at once through a trusted channel.

To appreciate the concept, consider how modern software is built: developers often use third-party libraries, frameworks, package managers (like npm, PyPI, Maven), container images, and so on. Organizations also receive software updates from vendors or open-source projects. Each of these is part of the supply chain of software. If a bad actor manages to slip malicious code into one of those third-party components or into a software update, anyone who installs or updates that software will inadvertently run the malicious code. This makes supply chain attacks extremely potent – a single compromise can potentially distribute malware to thousands of systems.

Common forms of supply chain attacks include:

- Dependency Hijacking (Typosquatting): Attackers upload malicious packages to public repositories with names that are mistyped versions of popular libraries (e.g., using “requests2” to target those who meant to install the well-known “requests” library). Developers who accidentally install the wrong name get the attacker’s code. Typosquatting has been a known issue for years.

- Dependency Confusion: A variant where attackers publish packages with names that exist in an organization’s private repositories, tricking the build system into pulling the malicious public version instead. This method was demonstrated in 2021 on major companies and proved quite effective.

- Compromising Legitimate Packages: Instead of relying on typos, attackers sometimes directly compromise a legitimate open-source project. This can happen by hacking the maintainer’s account or convincing them to introduce a malicious change. A notorious example is the event-stream incident (2018), where an attacker took over an npm package and added code that stole cryptocurrency wallet keys from applications that used it. Since event-stream was a trusted library downloaded millions of times, the malicious update propagated widely before detection.

- Malicious Software Updates: In the case of commercial software, attackers may breach a software vendor’s build or update servers. The SolarWinds Orion attack is a dramatic example: in 2020, attackers (suspected to be state-sponsored) infiltrated SolarWinds’ build system and injected a backdoor into a routine software update of the Orion network management product. When SolarWinds delivered that update, approximately 18,000 organizations (including Fortune 500 companies and government agencies) unknowingly installed a backdoor. The attackers then used that access to spy on or attack high-value targets downstream. SolarWinds was one of multiple major supply chain attacks around 2020–2021 that sounded the alarm about this threat.

- Hardware/Firmware Supply Chain (outside the scope of software packages): In some cases, malware or backdoors are inserted into hardware components or device firmware during manufacturing or distribution (for example, rogue code on a network appliance or a USB device). This is more specialized but worth mentioning as part of the broader supply chain risk landscape.
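The typosquatting case in the list above lends itself to a simple defensive check: compare a requested package name against a list of well-known names and flag near-misses. The sketch below uses Python's standard difflib for the similarity test. The package list and the 0.8 cutoff are assumptions chosen for illustration, not values from any registry or tool.

```python
import difflib

# Hypothetical list of well-known package names; a real check would use
# a much larger, curated list.
POPULAR_PACKAGES = ["requests", "numpy", "pandas", "flask", "django"]

def possible_typosquat(name, known=POPULAR_PACKAGES, cutoff=0.8):
    """Return the well-known package that `name` closely resembles, or None."""
    candidate = name.lower()
    if candidate in known:
        return None  # exact match: this is the real package name
    matches = difflib.get_close_matches(candidate, known, n=1, cutoff=cutoff)
    return matches[0] if matches else None

for requested in ["requests", "requests2", "totally-unrelated-name"]:
    print(requested, "->", possible_typosquat(requested))
```

A hit is a prompt for a human to look closer, not proof of malice: plenty of legitimate packages have names similar to popular ones.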

The impact of supply chain attacks is often widespread because one breach cascades into many victims. The 2017 NotPetya malware attack, for instance, spread through a poisoned update from a Ukrainian accounting software vendor and caused billions in damages globally – a supply chain compromise used to launch what became a devastating malware outbreak. More recently, the 2021 Log4j vulnerability (Log4Shell) in a ubiquitous open-source logging library wasn’t a deliberate attack but a discovered flaw; however, it demonstrated supply chain vulnerability: countless applications were built on Log4j, so a single flaw meant thousands of products were exploitable. Organizations worldwide scrambled to patch or mitigate Log4j in their software supply chains.

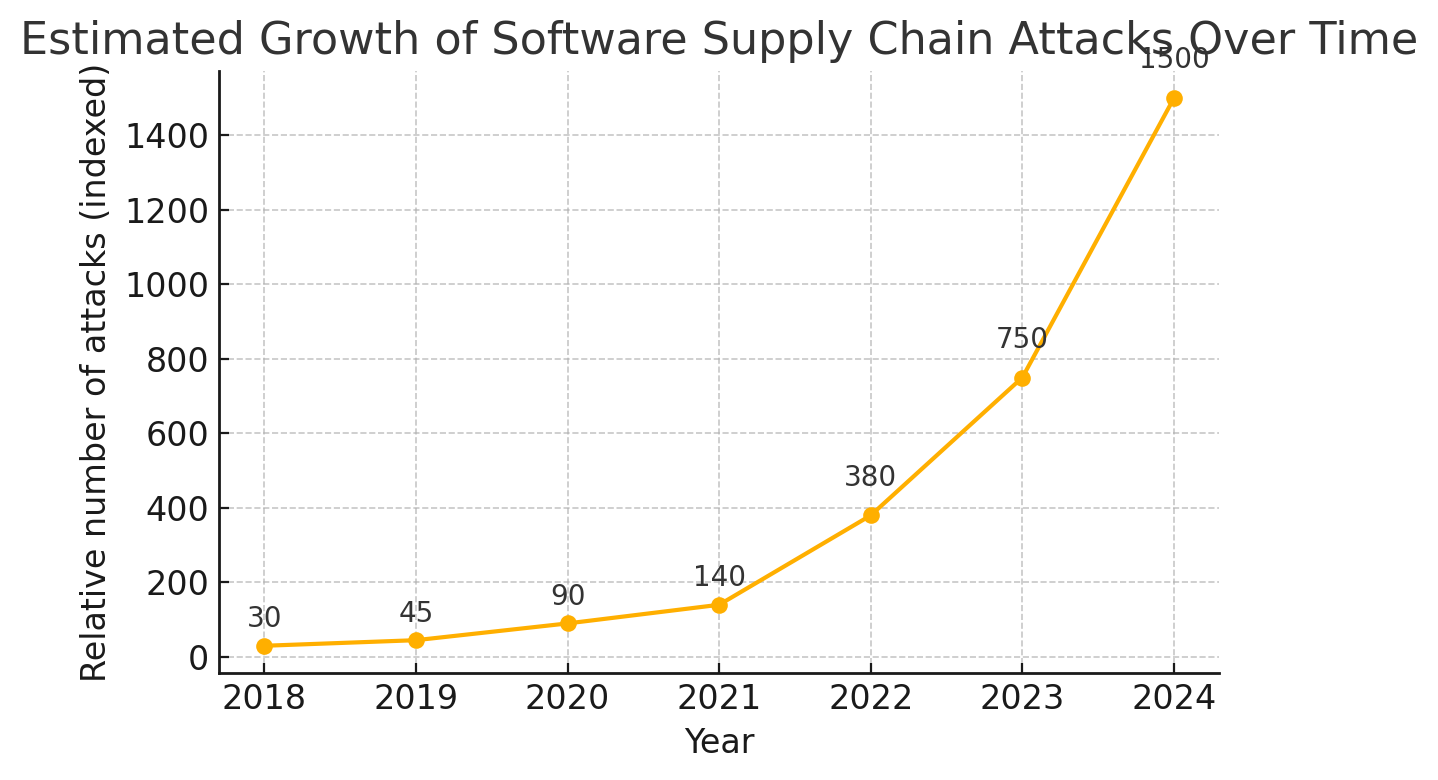

Supply chain attacks have moved to the forefront of cybersecurity concerns. Statistics underline this shift. In 2018, Symantec reported a 78% increase in supply chain attacks compared to the prior year. From 2019 to 2022, the number of such attacks ballooned by 742% – an astonishing growth rate. Similarly, one report noted a 431% surge between 2021 and 2023. These percentages reflect the fact that software supply chain compromises have evolved from rare occurrences to near-daily incidents. Industry analysts predict that by 2025, almost half of organizations will have been targeted by a supply chain attack.
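Percentage increases are easy to misread, so a quick conversion helps: an increase of N% means the final count is (1 + N/100) times the starting count. The short sketch below applies that arithmetic to the figures quoted above. It assumes nothing beyond those percentages, and because the figures come from different reports they are not directly comparable with one another.

```python
def growth_multiplier(percent_increase):
    """Translate 'increased by N%' into a multiple of the starting count."""
    return 1 + percent_increase / 100

quoted_figures = [
    ("2018 vs. prior year", 78),
    ("2019 to 2022", 742),
    ("2021 to 2023", 431),
]

for label, pct in quoted_figures:
    print(f"{label}: +{pct}% is {growth_multiplier(pct):.2f}x the starting count")
```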

Why this dramatic rise? Several factors are at play:

- Increased Reliance on Third-Party Code: Modern applications might contain transitive dependencies (dependencies of dependencies) numbering in the hundreds. Each is a potential ingress point for malicious code if not carefully vetted. Attackers leverage this complexity.

- Economic and Strategic Appeal: It’s more bang-for-the-buck for attackers. Why pick a lock on one door when you can obtain a master key that opens thousands? Nation-state attackers use supply chain compromises for espionage (as seen in SolarWinds). Cybercriminals use them to mass-distribute malware (for example, inserting cryptomining or data-stealing code in popular libraries).

- Insufficient Controls in Software Ecosystems: Historically, package repositories (npm, PyPI, etc.) allowed anyone to publish packages with few security checks. Only recently have they started implementing measures like 2FA for maintainers and malware scanning. Similarly, many organizations didn’t rigorously verify software updates or the integrity of open-source components, creating an environment ripe for abuse.
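One basic form of the integrity verification mentioned in the last point is comparing a downloaded artifact's SHA-256 digest against a value published through a trusted channel. The following is a minimal sketch using only the standard library. The function names are illustrative, and it assumes the expected digest was obtained from a source the attacker cannot also modify.

```python
import hashlib
import hmac

def sha256_of_file(path, chunk_size=65536):
    """Compute the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()

def artifact_matches(path, expected_sha256):
    """Return True only if the file's digest equals the expected digest."""
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_sha256.strip().lower())
```

Package managers offer built-in versions of this idea; pip, for example, has a hash-checking mode enabled with --require-hashes. A matching hash only shows that the file is the one the publisher released, so it does not help if the publisher's own build was compromised, as in the SolarWinds case.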

The number of software supply chain attacks has skyrocketed in recent years, as illustrated by the exponential growth in incidents from 2018 through 2024 (indicative trend based on industry reports). Real-world data shows huge year-over-year jumps – for instance, 2023 saw more than 5× the supply chain attacks of 2021. Such trends underscore why supply chain security has become a top priority for organizations.

Organizations impacted by supply chain attacks face multifaceted fallout. There are direct consequences like malware infections, data breaches, or service outages. But there are also indirect effects: loss of customer trust, reputational damage, legal liabilities (if due diligence in securing the supply chain wasn’t performed), and steep financial costs. The average cost of a data breach is already high (estimated $4.45M in 2023 across industries), and supply chain breaches can multiply that impact by hitting many companies at once. In the next sections, we will see how the emerging issue of AI hallucinations might introduce new twists to this already challenging landscape.

Where AI Hallucinations and Supply Chain Attacks Intersect

At first glance, AI hallucinations and software supply chain attacks seem like unrelated problems – one is about AI making things up, and the other is about malicious code sneaking into our software. However, a critical intersection of the two has begun to emerge in the realm of AI-assisted software development and DevOps. Generative AI is increasingly used to help write code, configure systems, and suggest software components. If these AI tools hallucinate, they might inadvertently introduce vulnerabilities or even directly facilitate supply chain compromises. Let’s explore how this can happen.

Hallucinated Dependencies: The “Slopsquatting” Threat

One of the most concerning intersections is the case of hallucinated software dependencies. When developers use AI coding assistants (like OpenAI’s Codex/ChatGPT, GitHub Copilot, Amazon CodeWhisperer, etc.), they often ask for example code to accomplish tasks. The AI might respond with a code snippet that imports or requires a library – for instance, import foobarbaz – to achieve some functionality. Ideally, that library exists and is a known, safe package. But AIs have been observed to invent package names that sound plausible but do not actually exist. This is an AI hallucination in code form.

Why would an AI invent a package name? If the prompt is asking for something obscure or if the AI’s training data suggests a certain functionality but no single library name stands out, the model might generate a name by combining terms (e.g., “requests-html-parser” or “excel-magictool”) – the AI knows what such libraries should be named, but not that they don’t exist. The result is code referencing a phantom dependency.

While a non-existent import might seem like a harmless error (the code will throw an import error, alerting the developer something is wrong), it actually opens the door to a novel supply chain attack. Security researcher Seth Larson coined the term “slopsquatting” for this scenario – a play on “typosquatting” but involving AI “sloppy” outputs rather than human typos. In a slopsquatting attack, a malicious actor monitors AI-generated code recommendations (for example, by analyzing common answers on forums or directly querying AI for certain tasks) to collect the names of these hallucinated packages. The attacker then goes to a package repository (like Python Package Index or npm) and registers those exact names but fills them with malicious code.

The next time a developer receives that AI suggestion and sees an import error for, say, foobarbaz, they might think: “Oh, perhaps this is a real library I need to install.” They then search the package index, find that foobarbaz exists (because the attacker uploaded it), and install it – thereby pulling in the attacker’s payload. In effect, the AI hallucination provided a target, and the attacker turned it into a trap. This is a form of supply chain attack because the compromise occurs via a third-party package that the developer brings into their project under false pretenses.
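One way to interrupt this sequence is to inspect AI-generated code before installing anything it asks for. The sketch below parses a snippet with Python's ast module and lists imported top-level modules that are neither in the standard library nor on a project allowlist. The allowlist and the sample snippet are hypothetical. Note two limits: sys.stdlib_module_names requires Python 3.10 or later, and an import name can differ from the name of the package that provides it.

```python
import ast
import sys

# Hypothetical set of third-party modules this project has already vetted.
APPROVED_MODULES = {"requests", "numpy"}

def unvetted_imports(source, approved=APPROVED_MODULES):
    """Return imported top-level modules that are neither stdlib nor approved."""
    # On Python < 3.10 this falls back to an empty set, so stdlib modules
    # would be reported too.
    stdlib = getattr(sys, "stdlib_module_names", frozenset())
    found = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            found.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            found.add(node.module.split(".")[0])
    return sorted(found - stdlib - set(approved))

# Hypothetical AI-generated snippet containing two unknown dependencies.
snippet = (
    "import os\n"
    "import requests\n"
    "import foobarbaz\n"
    "from excel_magictool import load\n"
)
print(unvetted_imports(snippet))
```

Anything this reports deserves a manual look at the project's history, maintainers, and source before installation. Simply checking that the name exists on a registry is not enough, since in a slopsquatting attack the attacker has already made sure that it does.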

This is not just a hypothetical thought experiment – research has demonstrated the feasibility. In mid-2023, Vulcan Cyber researchers analyzed ChatGPT’s coding answers and found it would indeed recommend non-existent packages fairly often. Out of 400 coding questions asked, about 25% of ChatGPT’s responses included at least one package name that did not exist on npm or PyPI. In total, the AI suggested over 150 unique fake packages in that experiment. The researchers then proved the danger by registering one such package on npm (one that ChatGPT had hallucinated) and uploading code that would steal data. They showed that an unsuspecting developer following the AI’s advice could easily fetch this malicious package.

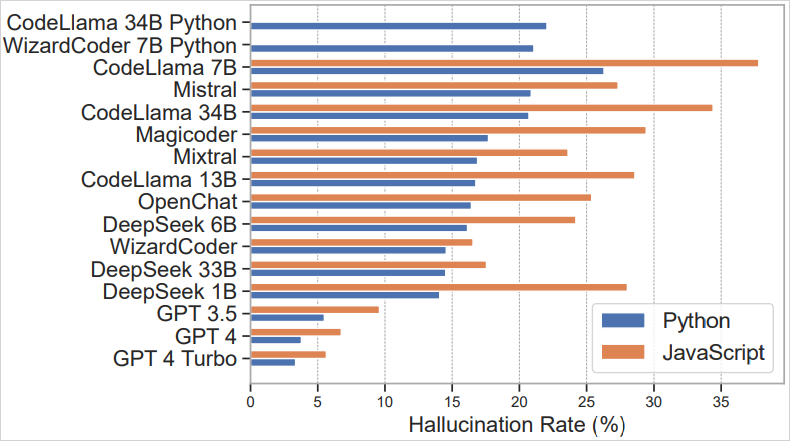

Later studies have dug even deeper. A March 2025 academic study titled “We Have a Package for You! A Comprehensive Analysis of Package Hallucinations by Code-Generating LLMs” examined 576,000 code samples generated by various AI models. The findings were eye-opening: roughly 20% of those AI-generated code snippets included imports of packages that didn’t exist. Importantly, this problem was observed across different AI coding systems. Open-source code models (like Code Llama, WizardCoder, etc.) were especially prone to hallucinating libraries, sometimes in 20–30% of cases. But even commercial models were not immune – the then-state-of-the-art GPT-4 model hallucinated a fake package in about 5% of its outputs. Five percent might sound small, but consider the scale of usage: if millions of developers are using these tools daily, a 5% chance of suggesting a bad dependency can translate to a wide attack surface.

This chart (based on a 2025 study) shows the percentage of code outputs that included a nonexistent package recommendation, for Python (blue) and JavaScript (orange) contexts. Notably, several open-source LLMs had very high hallucination rates (20–30% range), while advanced proprietary models like GPT-4 were lower (~5%) but still non-zero. Each hallucinated dependency is a potential target for a slopsquatting supply chain attack.

The same study also found that these hallucinated names are often predictable and repeatable. Over 200,000 unique fake package names were observed, but many followed patterns or were generated repeatedly by the models (58% of hallucinated names recurred across multiple runs and prompts). About 38% of the fake names resembled real packages (likely AI trying to adapt known names), 13% were simple typos of real ones, and about half were completely fabricated originals. The repetition means an attacker doesn’t have to guess randomly; they could prompt the AI a few times and compile a list of the most common hallucinated package names, then focus on creating malicious packages for those. Researchers from the open-source security firm Socket have warned that this creates a predictable attack surface – attackers can systematically weaponize these common AI mistakes.
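The same repeatability can work for defenders. As a rough sketch, a security team could log the nonexistent package names seen across many generation runs and count how often each recurs, producing a watchlist of names to block or monitor. The package names below are invented placeholders, and the two-run threshold is an arbitrary assumption rather than anything taken from the study.

```python
from collections import Counter

def recurring_names(runs, min_runs=2):
    """Map each name seen in at least `min_runs` separate runs to its run count."""
    counts = Counter()
    for names in runs:
        counts.update(set(names))  # count each name at most once per run
    return {name: n for name, n in counts.items() if n >= min_runs}

# Invented placeholder names, one list per generation run.
runs = [
    ["fastjson-utils", "excel-magictool"],
    ["excel-magictool", "pdf-easyparse"],
    ["excel-magictool", "fastjson-utils"],
]

watchlist = recurring_names(runs)
print(sorted(watchlist.items(), key=lambda item: -item[1]))
```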

In essence, AI hallucinations have introduced a new kind of software supply chain risk. Whereas traditional typosquatting relies on human error (a developer making a spelling mistake), slopsquatting relies on AI error. The outcome is similar: the developer installs the “wrong” package and gets compromised. But the AI’s authoritative suggestions may lull developers into a false sense of security. A piece of code in an official-looking answer or generated by a trusted AI assistant might not be second-guessed the way a random suggestion on a forum would be. This is why the intersection of AI hallucination and supply chain attack is so concerning – it combines the deceptive confidence of AI with the stealth and impact of supply chain compromises.

AI-Assisted Coding and Insecure Code Suggestions

Beyond outright fake libraries, AI coding assistants can introduce vulnerabilities in more subtle ways as well. Not every hallucination is a nonexistent package; sometimes an AI might hallucinate a code snippet – an algorithm, a configuration, a command – that is flawed or insecure. If developers blindly implement those suggestions, they might be baking vulnerabilities into software.

Some potential scenarios include:

- Use of Outdated/Vulnerable Components: An AI trained on public code might suggest using a specific version of a library or API that it “saw” frequently. But that version could be outdated and have known security flaws. For instance, an AI might frequently output npm install [email protected] because that version appeared in training data, even though that version is old and newer versions fixed important security issues. If the developer doesn’t catch it and just uses what the AI provided, they’ve introduced a known vulnerability into their project. This isn’t exactly a hallucination (the package exists), but it’s a misguided recommendation that can compromise security – another form of AI error with supply chain implications (bringing in a vulnerable component).

- Insecure Configurations: AI may generate configuration files (for Docker, Kubernetes, CI/CD pipelines, etc.). If it “hallucinates” a config option or setting, that setting might disable a security feature or open an unintended access. For example, an AI might suggest a Dockerfile that inadvertently exposes a secret or uses a less secure base image. In one hypothetical case, an AI writing a Kubernetes manifest could hallucinate a field that sets overly broad permissions for a container, creating a privilege escalation risk.

- Code Vulnerabilities as a Side-Effect: If an AI doesn’t fully “understand” the security context, it might produce code that technically works but isn’t secure. A classic concern is that an AI might reintroduce a vulnerability that was present in its training examples. For instance, if many code samples in training had a certain input sanitization mistake (leading to SQL injection), an AI could easily include that same mistake when generating new code. This isn’t a deliberate hallucination; it’s more of an omission or subtle error. But from the standpoint of the final software, it’s as dangerous as any bug. In 2021–2022 when GitHub Copilot was first released, researchers showed it would sometimes produce code with known flaws (like buffer overflows or weak cryptography) if the prompt was ambiguous about security requirements. Developers must therefore treat AI outputs with the same scrutiny as they would a human junior developer’s code – useful, but needs review and testing.

- Ambiguous Documentation and AI Guesses: AI might hallucinate details when documentation is missing. Imagine a developer asks, “How do I use the secure mode of Library X?” If the library’s secure mode isn’t documented in the training data, the AI might guess or mix it up with another library’s settings, possibly advising something incorrect. If that advice is taken, the software might appear to be in a secure configuration when it is not – a dangerous scenario for things like encryption libraries or authentication systems.
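The SQL injection scenario from the list above is easy to demonstrate. The sketch below uses Python's built-in sqlite3 module with an in-memory database to contrast the string-building pattern an assistant might reproduce from its training data with a parameterized query. The table, names, and payload are made up for the demonstration.

```python
import sqlite3

def find_user_unsafe(conn, name):
    # Vulnerable pattern: user input is pasted directly into the SQL text.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, name):
    # Parameterized query: the driver passes `name` as data, not as SQL.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

# Classic injection payload that turns the WHERE clause into a tautology.
payload = "nobody' OR '1'='1"
print("unsafe:", find_user_unsafe(conn, payload))
print("safe:  ", find_user_safe(conn, payload))
```

Both functions behave identically on ordinary input, which is exactly why the unsafe version can pass a casual review of AI-generated code.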

One emerging risk in AI-assisted development is over-reliance – trusting the AI’s output without verification. OWASP (a respected security community) has even catalogued this as a concern (“LLM09: Overreliance” in its Top 10 for LLM Applications), warning that it can introduce vulnerabilities due to insecure defaults or recommendations. The onus is on developers to remain vigilant. Just because an AI is helping write the code does not mean the code is secure.

Automated Package Selection and DevOps Integrations

AI is not only being used to write application code; it’s also being eyed for automating parts of software maintenance and DevOps, such as selecting or updating dependencies, or even autonomously writing small modules. Here too, hallucinations or errors can have supply chain security implications.

Consider a future (or emerging) scenario where a dependency management bot powered by AI tries to auto-resolve software needs. For example, a build tool of the future might understand natural language: “I need a PDF parsing library.” The AI might then search and pick a library for you. If the AI’s knowledge is faulty or if it gets tricked by manipulated data, it could choose a compromised library. Attackers could create a malicious library with a description that looks extremely appealing for “PDF parsing” and even fabricate GitHub stars or reviews. If the AI isn’t discerning enough, it might recommend that malicious library as the top choice. This is akin to SEO poisoning but for AI – feeding the AI ecosystem misleading signals so it prefers a bad component.

Even without a malicious adversary, an AI might mis-rank the trustworthiness of projects. It could hallucinate that a certain library is more popular or secure than it really is. Dependency selection requires nuance – a secure choice is based not just on functionality but on things like maintenance status, known vulnerabilities, and community trust. AI might not account for all these properly unless explicitly designed to, and a hallucination or omission could cause it to pick a risky dependency.

Another angle is using AI in CI/CD (Continuous Integration/Continuous Deployment) pipelines. Imagine an AI system that monitors your project and automatically suggests dependency upgrades or code fixes. If that AI makes a mistake (hallucinates a patch or an upgrade path), it might, for example, switch to the wrong package source. This is the “dependency confusion” technique that security researchers demonstrated in 2021: if internal project dependencies aren’t tightly controlled, external packages with the same names can sneak in. An AI that isn’t aware of which packages are internal to an organization and which are external could inadvertently introduce such confusion by pulling in the wrong one.
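A guard against this kind of mix-up can be quite small. The sketch below assumes a hypothetical setup in which internal packages share a naming prefix and must always come from a single internal index; it then flags any internal-looking package that was resolved from somewhere else. The prefix, the index URL, and the shape of the input mapping are all assumptions for illustration, not the output format of any particular package manager.

```python
# Hypothetical naming convention and index URL for internal packages.
INTERNAL_PREFIX = "acme-"
INTERNAL_INDEX = "https://pypi.internal.example/simple"

def confused_dependencies(resolved):
    """Return internal-looking packages that were resolved from another index.

    `resolved` maps package name -> URL of the index it was fetched from.
    """
    return sorted(
        name
        for name, index_url in resolved.items()
        if name.startswith(INTERNAL_PREFIX) and index_url != INTERNAL_INDEX
    )

# Hypothetical resolution result, e.g. after an automated dependency change.
resolved = {
    "acme-auth": INTERNAL_INDEX,
    "acme-billing": "https://pypi.org/simple",  # internal name, public source
    "requests": "https://pypi.org/simple",
}
print(confused_dependencies(resolved))
```

Running a check like this as a required pipeline step means an AI-proposed change cannot silently swap an internal package for a public one with the same name.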

Additionally, prompt-based attacks on AI could factor in. If an AI agent in a build pipeline processes external data (say, release notes or changelogs from the web), an attacker could plant malicious instructions or misleading info in those sources (a form of data poisoning). The AI might then hallucinate or take an action that benefits the attacker (for instance, deciding to replace a safe component with a trojanized one because the release notes were tampered with to say “this new library is recommended by security experts”).

While these scenarios are forward-looking, they highlight that any use of AI in automated decision-making for software content brings a need for caution. Hallucinations are basically unforced errors by the AI. If those errors occur in a context where decisions are made about what code to run or include, the result can be a security incident.

Attacking the AI Supply Chain

When discussing intersections, it’s also worth noting the flip side: just as AI hallucinations can lead to supply chain attacks, supply chain attacks can target AI systems. AI models themselves have a “supply chain” – they are trained on data, integrated into applications, and receive model updates.

For instance, a malicious actor could attempt to poison the training data of an AI coding assistant. If an attacker can introduce enough tainted examples into the public code repositories that an AI learns from, they might cause the AI to preferentially suggest a specific insecure function or a backdoored snippet. This concept has been explored in research as data poisoning or model poisoning. An attacker could intentionally publish code that uses a fake package name repeatedly (with the hope an AI training on that data will learn to suggest it). Then they register that package for a supply chain attack. This is a complex, long-game attack, but not impossible especially for open-source LLMs where training data might not be filtered expertly.

Another aspect is attacking the prompts or input given to AI systems that manage infrastructure. If an AI agent is used in, say, cloud management (hypothetically, “AI Ops” tools that remediate issues), an attacker who gains partial access might feed it a malicious instruction that the AI will carry out in the environment. This strays into adversarial examples territory, but it shows that an AI integrated into systems becomes part of the supply chain trust chain – and if it hallucinates or is manipulated, it can cause harm.

In summary, AI and supply chain security are becoming intertwined. Generative AI’s mistakes open new avenues for attackers (like slopsquatting), and attackers in turn may attempt to exploit or influence AI behavior as part of their toolkit. Next, we will examine some case studies and incidents that highlight these issues in practice, both individually and combined.

Case Studies and Examples

To ground the discussion, let’s look at a few notable incidents and examples that illustrate the risks of AI hallucinations and supply chain attacks.

Case 1: Hallucinated Package Leads to Malware (Demo by Researchers)

One concrete example of the AI-to-supply-chain pipeline in action was demonstrated by Vulcan Cyber in 2023. As mentioned earlier, their team identified that ChatGPT was outputting code with fake package imports. To show the security implications, they selected one such fake package name that ChatGPT had hallucinated in multiple answers. It was a package that ostensibly didn’t exist – until they decided to create it. The researchers wrote a malicious payload (a script to steal system information) and published it to the npm registry under that hallucinated name. Now, if any developer came across one of ChatGPT’s answers suggesting to use that package, they would find it available online. Had they installed it, their system would quietly execute the info-stealing code. This controlled experiment highlighted the very real danger: AI hallucinations can be exploited. While this was a proof-of-concept, it is easy to imagine real attackers doing the same with more sinister intent (e.g., planting backdoors, ransomware, or exploit kits in such packages).

Notably, as of early 2025, security analysts had not yet seen widespread in-the-wild abuse of slopsquatting – but they caution that it’s likely only a matter of time. The necessary ingredients (AI hallucinating names, attackers able to upload to package repositories, developers looking to AI for code) are all present. The lack of reported incidents could be because this trend is very new, or because successful supply chain compromises are often stealthy and not immediately attributed to a cause like “AI suggested it.”

Case 2: SolarWinds – A Traditional Supply Chain Attack

To appreciate the scale of damage a supply chain attack can cause, consider the SolarWinds Orion breach (2020) as a classic case study (albeit without AI involvement). SolarWinds is a company that provides IT management software, and one of their popular products is Orion, used widely for network monitoring. Attackers (later attributed to a nation-state actor) managed to break into SolarWinds’ build system and inject malicious code into an Orion software update. This update was signed and distributed as if it were legitimate. Over the spring and summer of 2020, about 18,000 customers installed the tainted update. The malicious code created a backdoor on those systems, which the attackers then selectively used to penetrate deeper into networks of interest (including U.S. government agencies, tech companies, and more).

The full scope of the SolarWinds attack is beyond this post, but what matters here is that it exemplified how trust can be undermined at scale. Organizations trusted SolarWinds and its updates – that trust was weaponized against them. It took months to detect (FireEye, a security firm, ultimately discovered it while investigating a breach of its own network). The incident led to government hearings and a reassessment of software supply chain security, including initiatives to adopt SBOMs (Software Bills of Materials) and stricter development pipeline controls. SolarWinds did not involve AI, but consider a similar impact delivered through an AI vector: imagine that an AI widely used by developers started recommending a particular compromised library (due to a hallucination or data poisoning). The effect could parallel SolarWinds in scale, with many applications quietly integrating a backdoor via that library until it is finally noticed.

Case 3: AI Hallucination in Legal Domain

We touched on this earlier: the case of the hallucinating legal assistant. In a widely reported incident in mid-2023, lawyers in New York submitted a brief that cited fake cases which an AI had invented. While this did not involve a supply chain attack, it is illustrative of hallucination risk. The legal profession relies heavily on accurate citations and precedents. The AI (ChatGPT) responded to a query with what looked like solid case references – except they were complete fabrications. The lawyers, failing to double-check, included them in a court filing. The judge was not amused; ultimately, the attorneys were fined $5,000 and reprimanded. The judge’s message was clear: using AI is not an excuse for submitting false information. This incident sent a ripple through professional communities, reinforcing that AI’s outputs must be verified.

Why include this example in a discussion with cybersecurity professionals? It serves as a cautionary tale that AI hallucinations can fool even educated professionals when they occur in domains outside their immediate expertise or when users are overconfident. Today it’s a legal case; tomorrow it could be a misconfiguration suggestion for a firewall or a hallucinated command in a penetration test report. The consequences vary, but the underlying issue is the same – AI requires human oversight.

Case 4: Kaspersky’s Discovery – Malicious AI Tool Impersonators

In late 2024, Kaspersky researchers uncovered a year-long supply chain attack on the Python Package Index (PyPI) that had an interesting twist: the malicious packages were disguised as AI assistant tools. Attackers uploaded packages with names suggesting they were integrations or wrappers for popular chatbots (like OpenAI’s ChatGPT or Anthropic’s Claude). These packages did provide some chatbot functionality, but behind the scenes they also installed the JarkaStealer malware to steal data from users’ machines. The packages were downloaded more than 1,700 times before the scheme was caught.

This case did not involve AI hallucinating those package names – instead, the attackers relied on people searching PyPI for AI-related tools. However, it shows a couple of things relevant to our discussion: (1) how attackers are capitalizing on the AI hype to distribute malware (knowing developers are eager to try AI integrations), and (2) how supply chain attacks often piggyback on trends. If and when AI coding assistants become a major vector (hallucinating names), we can expect attackers to be creative in leveraging that. The PyPI incident also reinforces that even if a package does what it claims (e.g., provides an AI feature), it can still be malicious in parallel – a reminder that functionality alone doesn’t guarantee safety.

Case 5: Open-Source Maintainer Sabotage (Colors/Faker Incident)

Another relevant example from 2022: the developer of two widely used JavaScript libraries, colors and faker, intentionally pushed an update that made the libraries unusable (an act of protest). While not a malware attack, this caused thousands of projects to break. It was a wake-up call that even trusted supply chain components can suddenly behave unpredictably if maintainers go rogue or their accounts are compromised. Now imagine if an AI recommended using an alternative library which turned out to be a malicious clone set up after that incident – a developer seeking a quick fix might follow the AI’s suggestion and unknowingly introduce a backdoor.

This scenario underscores the importance of not just trusting a “brand name” or a suggestion, but verifying the source and integrity of open-source components. AI might not have context to know a maintainer has changed or a package was recently hijacked. Humans in the loop need to catch those nuances.

Mitigations and Best Practices

Given the twin challenges of AI hallucinations and supply chain attacks, what can individuals and organizations do to mitigate these risks? Below, we outline recommendations and best practices to address each area, with special attention to the intersection (AI-assisted development in a supply chain context). Adopting a proactive strategy on both fronts is crucial, especially for applications in sensitive or high-security environments.

Stay Skeptical of AI-Generated Content

The first line of defense is a mindset: never fully trust AI output without verification. For developers using AI coding assistants, this means reviewing every line of AI-written code as you would review a human colleague’s code. If the AI suggests using a library or API you’re not familiar with, double-check its existence and reputation. A quick manual search on official package repositories or documentation can confirm if a package is real. As researchers succinctly put it, “never assume a package mentioned in an AI-generated code snippet is real or safe.” If it’s not clearly a well-known library, treat it with caution.

Similarly, for text content (like reports, analyses, or configuration advice) generated by AI, cross-verify key facts. In a security context, if ChatGPT writes “Library X has no known vulnerabilities and is the industry standard,” don’t accept that at face value – consult a vulnerability database or official sources. Human oversight and a healthy dose of skepticism go a long way in catching AI mistakes before they cause damage.

Validate Dependencies and Monitor Package Repositories

To counter the slopsquatting risk and supply chain attacks in general, developers and DevOps teams should implement strict dependency validation practices:

- Manual Verification of New Packages: When adding a new open-source package to a project (especially one suggested by AI or found in some online snippet), check a few things manually: Does it have a legitimate website or documentation? Is the source code repository (GitHub, etc.) active and does it look authentic? How many downloads or users does it have (a brand-new package with almost no downloads is suspect if it claims to solve a common problem)? A simple search for “[package name] malware” might even reveal if others have flagged it.

- Use Trusted Sources and Lockfiles: Wherever possible, use official sources or mirrors for packages, and pin exact versions. Package managers such as npm (package-lock.json) and pip (requirements files with pinned versions) support this, ensuring that the exact version you developed with is the one that gets installed in production. This prevents scenarios where, for example, a malicious actor later uploads a new patch version of a library you use – your build will stick to the known good version unless you explicitly update. Also consider checksum verification for critical packages (for example, pip’s hash-checking mode lets you specify the expected hashes of dependencies). This way, even if a malicious artifact is later published under the same name, it won’t match the hash of the intended legitimate package.

- Leverage Security Scanners: Incorporate software composition analysis (SCA) tools in your pipeline. These tools can automatically flag known vulnerable packages and sometimes even detect suspicious or anomalous package characteristics. For instance, they might warn if a dependency is pulling in an unexpected sub-dependency, or if an update includes unusual changes. Some modern package registries are beginning to scan for malware – for example, npm has malware detection for published packages. Keep an eye on those warnings. Services such as Snyk, OSS Index, and Socket.dev maintain databases of vulnerable, malicious, or dubious packages; integrating those databases into your auditing process can catch an attacker’s package before it catches you.

- Monitor Repository Events: If you maintain an internal package repository or mirror, watch for any package that is fetched that wasn’t previously approved. In response to dependency confusion attacks, some companies set up monitors to detect whether their systems ever tried to reach public repositories for internal package names, which would indicate a misconfiguration. Similarly, when using AI assistance, you can proactively check “did my project try to download any package that is not in our allow-list?” as a safety net.
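
Two of the checks above, the allow-list and the pinned checksum, can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not a replacement for your package manager's own features (pip's hash-checking mode, enabled with --require-hashes, performs the hash comparison natively). The function names, the sample requirements, and the package "fastjsonparse-pro" are hypothetical, and the parser only handles plain "name==version" style lines.

```python
import hashlib
import re


def requirement_names(requirements_text: str) -> list[str]:
    """Extract normalized package names from simple requirements lines.

    Pip options (-r, -e), URLs, and environment markers are out of scope
    for this sketch.
    """
    names = []
    for line in requirements_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line or line.startswith("-"):
            continue
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match:
            # Normalize the way PyPI compares names: lowercase, with runs
            # of ".", "-" and "_" collapsed into a single "-".
            names.append(re.sub(r"[-_.]+", "-", match.group(0)).lower())
    return names


def unapproved(requirements_text: str, allow_list: set[str]) -> list[str]:
    """Return the required packages that are missing from the allow-list."""
    return [n for n in requirement_names(requirements_text) if n not in allow_list]


def matches_pinned_hash(artifact: bytes, expected_sha256: str) -> bool:
    """Compare an artifact's SHA-256 digest with the hash pinned earlier."""
    return hashlib.sha256(artifact).hexdigest() == expected_sha256.lower()


# Hypothetical inputs for illustration only.
requirements = """
requests==2.31.0
Flask_Cors==4.0.0
fastjsonparse-pro==0.0.1  # suggested by an AI assistant
"""
flagged = unapproved(requirements, allow_list={"requests", "flask-cors"})
print(flagged)  # ['fastjsonparse-pro']

artifact = b"example wheel bytes"
pinned = hashlib.sha256(artifact).hexdigest()  # recorded when first vetted
print(matches_pinned_hash(artifact, pinned))           # True
print(matches_pinned_hash(b"tampered bytes", pinned))  # False
```

Running a check like this in CI means an unvetted dependency fails the build instead of slipping through unnoticed.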

Implement AI-Specific Guardrails

For organizations integrating AI into development workflows, it’s wise to add some guardrails around the AI tools:

- Temperature and Mode Settings: If the AI assistant allows configuration, use a low-creativity mode for coding. As noted by researchers, lowering the model’s temperature can reduce hallucinations. Deterministic or focused modes might make the AI more likely to stick to known facts or code. Some coding AIs might have an explicit “strict mode” – use it if available.

- Provide Context to AI: Often, hallucinations can be curtailed by grounding the AI with context. For example, if you have a knowledge base of approved libraries, feed that into the prompt (“Here is a list of libraries we use for X, Y, Z. Write code to do Z.”). The AI is then less likely to go off-script. This is a simple form of retrieval-augmented generation – giving the AI factual context to lean on rather than relying purely on its parametric memory.

- AI Output Sandboxing: Treat AI suggestions as untrusted until proven otherwise. For code, that means testing in an isolated environment. The BleepingComputer report on hallucinated packages advises always testing AI-generated code in a safe, isolated sandbox before using it in production. This way, if the code tries to do something unexpected (like contacting an unknown server or reading files it shouldn’t), you can catch it without harm. In a sense, run static analysis or even dynamic analysis on AI-generated code as if it were code from an unknown contributor – because it essentially is.

- Feedback Loops: If the AI is internal or customizable, establish a feedback loop. When you catch a hallucination or a bad suggestion, feed that back to developers or the model maintainers. Over time, this can improve the AI’s outputs or at least help build a list of “do not trust” patterns.
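
To illustrate the grounding and low-temperature ideas above, here is a small sketch that builds a prompt from a list of approved libraries. It does not call any real AI service. The function name, the prompt wording, and the settings dictionary are assumptions made for this example, and the actual parameter names for temperature differ between providers.

```python
def build_grounded_prompt(task: str, approved_libraries: list[str]) -> str:
    """Build a prompt that restricts the assistant to approved packages."""
    library_lines = "\n".join(f"- {name}" for name in sorted(approved_libraries))
    return (
        "You are assisting with code for our project.\n"
        "Use only the Python standard library and these approved packages:\n"
        f"{library_lines}\n"
        "If the task cannot be done with them, say so instead of "
        "suggesting another package.\n\n"
        f"Task: {task}"
    )


# Hypothetical request settings; check your provider's documentation for
# the real parameter names and allowed ranges.
generation_settings = {"temperature": 0.0}

prompt = build_grounded_prompt(
    "Parse a CSV file and POST each row as JSON.",
    ["requests", "pydantic"],
)
print(prompt)
```

Grounding lowers the chance of an invented dependency but does not remove it, so the generated code still deserves the same review and sandboxing as any other untrusted contribution.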

Secure the Software Supply Chain

General best practices for supply chain security are critical and complement the AI-specific steps:

- Adopt SBOMs: A Software Bill of Materials is like an ingredients list for your software – it lists all components and their versions. Maintaining SBOMs for your projects and demanding them from vendors can greatly improve transparency. In the context of AI code suggestions, an SBOM can help you quickly identify if a “mystery” component got in. It also forces you to track dependencies formally. Should a vulnerability or malicious package be discovered, SBOMs let you rapidly assess where you’re affected. Regulatory trends (e.g., U.S. executive orders) are increasingly pushing for SBOMs because of incidents like SolarWinds. Embracing them early is wise.

- Enforce Code Reviews and 4-Eyes Principle: No code – whether written by a junior developer or an AI assistant – should enter critical codebases without review by another experienced developer. Code review not only catches logical errors but can also catch suspicious imports or odd code segments. If an AI slipped in a weird dependency, a human reviewer can question it: “What is this package? Do we really need it?” This process provides an opportunity to catch hallucination-induced issues before they merge into the main branch.

- Continuous Monitoring and Threat Intelligence: Keep abreast of threat intelligence on supply chain attacks. Subscribe to alerts or feeds for new malicious packages (many security firms and repo maintainers announce these). If a package you use gets compromised or a new form of attack is trending (like a surge in typosquatting for a popular library), you can act quickly – maybe temporarily freeze updates or run additional scans. In high-security environments, some even mirror all dependencies internally and do their own code vetting.

- Credential Security for Maintainers: If you run open-source projects or internal packages, secure those channels. Use 2-factor authentication on package manager accounts, sign your releases (code signing can assure consumers that an update is genuinely from you and not an attacker), and consider adopting repository security features like GitHub’s Dependabot alerts and branch protection. These reduce the chance that your project becomes part of someone else’s supply chain attack (e.g., if an attacker hijacks your library to attack its users).
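
As a sketch of how an SBOM can expose a "mystery" component, the example below compares two SBOMs and reports what is new. It assumes a CycloneDX-style JSON document with a top-level "components" array whose entries carry "name" and "version" fields. The function names and sample data are hypothetical, and a real SBOM holds far more detail than shown here.

```python
import json


def component_set(sbom_json: str) -> set[tuple[str, str]]:
    """Return (name, version) pairs from a CycloneDX-style JSON SBOM."""
    sbom = json.loads(sbom_json)
    return {
        (component["name"], component.get("version", ""))
        for component in sbom.get("components", [])
    }


def new_components(previous: str, current: str) -> list[tuple[str, str]]:
    """List components present in `current` but absent from `previous`."""
    return sorted(component_set(current) - component_set(previous))


# Hypothetical SBOMs for two consecutive builds of the same application.
previous_sbom = json.dumps({
    "bomFormat": "CycloneDX",
    "specVersion": "1.5",
    "components": [
        {"type": "library", "name": "requests", "version": "2.31.0"},
    ],
})
current_sbom = json.dumps({
    "bomFormat": "CycloneDX",
    "specVersion": "1.5",
    "components": [
        {"type": "library", "name": "requests", "version": "2.31.0"},
        {"type": "library", "name": "fastjsonparse-pro", "version": "0.0.1"},
    ],
})

added = new_components(previous_sbom, current_sbom)
print(added)  # [('fastjsonparse-pro', '0.0.1')]
```

A reviewer who sees an unfamiliar name in that list has a concrete prompt to ask where the dependency came from before the build ships.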

Education and Policy

People are often the weakest link, but also the best defense if properly educated. Ensure that your team is aware of both AI’s limitations and supply chain attack methods:

- Conduct training sessions or share guidelines on safe use of AI for coding. For example, create a checklist: “Ask these questions when AI suggests a new dependency or unusual code – (a) Does it exist? (b) Is it the best practice? (c) Could it pose a risk? (d) Did I test it?”. Awareness can significantly reduce blind trust.

- Establish policies for introducing new dependencies. Some organizations have an approval process or at least a review step for adding any new third-party library. This might feel like red tape, but it can save you from a disastrous inclusion. Policies could range from informal rules (“we only use libraries above X stars on GitHub” or “security team must review any package with no clear maintenance history”) to more formal allow-listing.

- For supply chain security, promote a culture of “trust but verify”. Developers should understand that pulling a package is not risk-free. Encourage them to read maintainers’ notes, check how active the community is, etc. A simple rule of thumb: if a library is crucial, maybe have more than one set of eyes inspect its source (if open-source) for suspicious code. This isn’t always feasible, but at least inspect the high-impact ones (anything that deals with parsing untrusted input, for instance).

Use AI to Defend Against AI (and Supply Chain Attacks)

Interestingly, AI can also be part of the solution. Just as AI can introduce errors or be exploited by attackers, defenders can leverage it for security:

- Some tools use machine learning to analyze package behavior. For instance, an ML system might flag as suspicious an npm package whose new version suddenly starts deleting files or making network calls. These tools can augment traditional signature or rule-based detection of malware in dependencies.

- AI can assist in code review and threat modeling. For example, you might use an LLM to scan through a list of dependencies and summarize which ones have known vulnerabilities or what their security posture is. Or use AI to analyze your SBOM and highlight components that might be risky.

- In prompts: If you ask an AI coding assistant for code, also ask it to double-check its answer. For instance, “Is the above code using any functions or libraries that could be insecure or not real?” Sometimes the AI, upon reflection, will correct its earlier suggestion or add caveats. While you shouldn’t solely rely on it to self-verify (it can hallucinate an affirmation too!), this technique can sometimes catch obvious issues.
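
The behavior-change idea in the first bullet can be illustrated without any machine learning. The sketch below is a much simpler rule-based stand-in, not an ML system: it parses two versions of a module and reports risky-looking calls that appear only in the newer one. The list of calls, the function names, and both sample versions are invented for this example, and it needs Python 3.9 or later for ast.unparse.

```python
import ast

# Illustrative and deliberately short; real tools track far more signals.
RISKY_CALLS = {
    "eval", "exec", "os.remove", "os.system", "shutil.rmtree",
    "socket.socket", "subprocess.run", "urllib.request.urlopen",
}


def risky_calls(source: str) -> set[str]:
    """Return the risky call names that appear in `source`."""
    calls = set()
    for node in ast.walk(ast.parse(source)):  # parsed only, never executed
        if isinstance(node, ast.Call):
            calls.add(ast.unparse(node.func))  # e.g. "os.remove"
    return calls & RISKY_CALLS


def newly_introduced(old_source: str, new_source: str) -> list[str]:
    """List risky calls present in the new version but not the old one."""
    return sorted(risky_calls(new_source) - risky_calls(old_source))


# Hypothetical consecutive releases of the same module.
old_version = """
import json

def load(text):
    return json.loads(text)
"""
new_version = """
import json, os, urllib.request

def load(text):
    urllib.request.urlopen("https://example.invalid/collect")
    os.remove("cache.db")
    return json.loads(text)
"""

print(newly_introduced(old_version, new_version))
# ['os.remove', 'urllib.request.urlopen']
```

Name matching like this is easy to evade with aliases or obfuscation, which is one reason learned or behavioral detection is used alongside simple rules.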

Incident Response Plan for Supply Chain and AI Issues

Finally, accept that despite precautions, things can go wrong. Have an incident response playbook that covers scenarios like “malicious dependency detected in our application” or “AI suggested code that introduced a bug – now what?”. This means:

- Scanning and Patching: If a bad package is found post hoc, how quickly can you find all instances of it in your environment? Using tools that scan code and artifact repositories for certain strings can help (for example, search your code for the package name across all projects).

- Containment: If a compromised library is running in production, treat it like a breach. Investigate what it could have done (check its code behavior, any outbound traffic from those systems, etc.). Rotate secrets if needed (e.g., if the library could have exfiltrated credentials).

- Root Cause Analysis: Determine how it got in. If it was due to an AI recommendation that wasn’t vetted, feed that back into improving the process (maybe require stronger validation for AI-sourced contributions).

- Communication: For broader supply chain issues (like a public breach such as SolarWinds or Log4j), ensure your stakeholders know you’re on top of it. Clients and regulators may need assurance that you weren’t affected or have remediated the issue. Having SBOMs and a clear action plan will make those communications smoother.
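
For the scanning step, a first pass can be as simple as searching every project for the offending name. The sketch below walks a directory tree and lists files that mention a package as a whole token. The function name, the set of file extensions, the demo directory layout, and the package "fastjsonparse-pro" are all assumptions made for illustration.

```python
import re
import tempfile
from pathlib import Path

# File types worth scanning in this sketch; adjust for your own stack.
SCANNED_SUFFIXES = {".py", ".txt", ".toml", ".json", ".lock", ".cfg"}


def find_references(root: Path, package: str) -> list[str]:
    """Return paths (relative to `root`) of files mentioning `package`."""
    # The lookarounds stop "foo" from matching inside "foo-extra" or "xfoo".
    pattern = re.compile(rf"(?<![\w.-]){re.escape(package)}(?![\w-])")
    hits = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix in SCANNED_SUFFIXES:
            text = path.read_text(encoding="utf-8", errors="ignore")
            if pattern.search(text):
                hits.append(path.relative_to(root).as_posix())
    return hits


# Build a small throwaway tree to demonstrate the scan.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "service_a").mkdir()
    (root / "service_a" / "requirements.txt").write_text(
        "requests==2.31.0\nfastjsonparse-pro==0.0.1\n"
    )
    (root / "service_b").mkdir()
    (root / "service_b" / "requirements.txt").write_text("requests==2.31.0\n")
    affected = find_references(root, "fastjsonparse-pro")

print(affected)  # ['service_a/requirements.txt']
```

In Python projects the import name often uses underscores where the package name uses hyphens, so it is worth searching for both spellings.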

In conclusion, mitigating AI hallucination and supply chain risks requires a combination of technology, process, and mindset. There is no single silver bullet; instead, multiple layers of defense must work together. For AI, that means grounding it in facts, monitoring its output, and educating users. For supply chain security, it means knowing your components, verifying integrity, and preparing for the worst-case scenario. The intersection of these areas – such as AI hallucinating a dependency – calls for particularly close attention. The encouraging news is that awareness of these issues is growing, and tools and best practices are evolving rapidly to address them.

Conclusion

AI hallucinations and software supply chain attacks each pose significant challenges on their own. When combined, they create a convergent threat landscape where the mistakes of intelligent machines can be leveraged by malicious actors to compromise vast numbers of systems. In this post, we introduced AI hallucinations, explaining how and why AI sometimes “makes things up,” and we saw the potential risks ranging from misinformation to security vulnerabilities. We also explored software supply chain attacks, understanding how attackers infiltrate the very software building blocks that organizations trust, with historically devastating examples like SolarWinds and an alarming upward trend in recent years.

The intersection of these domains is where future security incidents may increasingly occur. AI-assisted coding is a boon to developer productivity, but as we’ve learned, it can inadvertently suggest dangerous things – like installing a completely fake package that an attacker has booby-trapped. This new vector (aptly named slopsquatting) turns an AI’s hallucination into an opportunity for supply chain compromise. We’ve also noted that AI can introduce more subtle flaws or steer us toward poor decisions if we’re not careful, which in a supply chain context could mean using untrustworthy dependencies or configurations.

Through case studies, we saw that these concerns are not just theoretical. Real incidents (and clever research demos) have shown the plausibility and impact: from ChatGPT inventing code libraries, to nation-state hackers breaching software pipelines, to malware hiding in packages named after AI tools. Each serves as a lesson that as our technology ecosystem grows more complex and interdependent, attacks often strike at the weakest link – which might be an unaware human trusting an AI or an overlooked library deep in the dependency tree.

For both general readers and cybersecurity professionals, the key takeaway is one of vigilance and adaptation. We must approach AI outputs with cautious optimism – leveraging their benefits while guarding against their failures. Likewise, we must treat our software supply chain as the critical attack surface that it is, putting in place the practices and tools to secure it.

The recommendations outlined – from verifying AI-suggested code, to implementing SBOMs, to scanning for malicious packages – all boil down to increasing our resilience against these threats. In a world where AI systems will only become more ingrained in development workflows, fostering a culture of security-aware AI usage is crucial. And as supply chain attacks continue to proliferate, collaborative efforts between industry, open-source communities, and policymakers to harden the software ecosystem (for example, initiatives like secure package registries, vulnerability disclosure programs, and stronger identity verification for code publishers) will be essential.

In summary, AI hallucinations and supply chain attacks are each challenging, but manageable with the right strategies. Together, they require us to be even more proactive and informed. By staying educated on these topics, sharing knowledge of threats, and employing best practices rigorously, we can reap the rewards of AI and open source software while minimizing the risks. The landscape of cybersecurity is ever-evolving – today’s novel concern (malware delivered through AI-hallucinated package names) might become tomorrow’s common attack vector. Preparing for it now is not just prudent; it’s necessary to protect our software and systems in the AI-driven future.

References

- BleepingComputer – AI-hallucinated code dependencies become new supply chain risk (bleepingcomputer.com)

- SecurityWeek – ChatGPT Hallucinations Can Be Exploited to Distribute Malicious Code Packages (securityweek.com)

- TechTarget – What are AI Hallucinations and Why Are They a Problem? (techtarget.com)

- Guardian – Two US lawyers fined for submitting fake court citations from ChatGPT (theguardian.com)

- Hadrian Security Blog – 2023 is the year for software supply chain attacks (hadrian.io)

- Embroker – Cyber attack statistics 2025 (supply chain attacks increase) (embroker.com)

- Global Supply Chain Law Blog – Supply Chains Are The Next Subject of Cyberattacks (globalsupplychainlawblog.com)

- Fortinet – SolarWinds Supply Chain Attack (Impact) (fortinet.com)

- Kaspersky – Year-long PyPI supply chain attack using AI chatbot tools as lure (kaspersky.com)

- OWASP – LLM Security (Overreliance on AI) (genai.owasp.org)