AI-Driven Software Engineering: How Artificial Intelligence Is Transforming the Way We Build Software

Artificial intelligence (AI) is transforming the way software is built. AI-driven software development refers to the integration of intelligent systems and machine learning into the software engineering process – from coding and testing to deployment and maintenance. This approach matters because it promises dramatic improvements in productivity, code quality, and development speed. Traditionally, writing and maintaining software is labor-intensive and error-prone. AI assistance offers innovative solutions to these long-standing challenges, automating routine tasks and providing smart suggestions. In recent years, advances in large language models (LLMs) and deep learning have made AI-driven development especially relevant. Modern code-focused AI systems (like OpenAI’s Codex and DeepMind’s AlphaCode) can understand natural language and generate code, which was not practical just a decade ago. The result is that developers are no longer coding entirely by hand – they increasingly collaborate with AI tools that accelerate development cycles. Businesses are taking notice: according to industry research, AI-enhanced coding can save up to 50% of developers’ time on repetitive tasks. In short, software engineering is evolving into a new era where human creativity is augmented by AI’s speed and precision, making this a critical development for engineers, tech executives, and even the general public who rely on software in everyday life.

What has changed to enable AI-driven development now? The convergence of big code data, powerful computing, and advanced algorithms. Companies like OpenAI, Google, and Microsoft have trained LLMs on billions of lines of code, giving these models an almost encyclopedic knowledge of programming. These AI models can autocomplete code, generate entire functions from a prompt, and even find bugs by analyzing patterns across vast codebases. The transformative power of such AI-assisted programming is already evident in tools like GitHub Copilot and Amazon CodeWhisperer, which can suggest the next lines of code as a developer types. This represents a shift from developers being solely manual coders to becoming “orchestrators” of an AI-augmented coding process. Organizations that leverage AI-driven tools have reported faster development times and fewer errors, indicating that embracing AI in software engineering is becoming essential. The following sections explore how we arrived at this point, the current landscape of AI tools in software development, changes in workflows, real-world impacts, technical foundations, challenges, and future trends in this rapidly evolving field.

Historical Evolution of Automation in Software Engineering

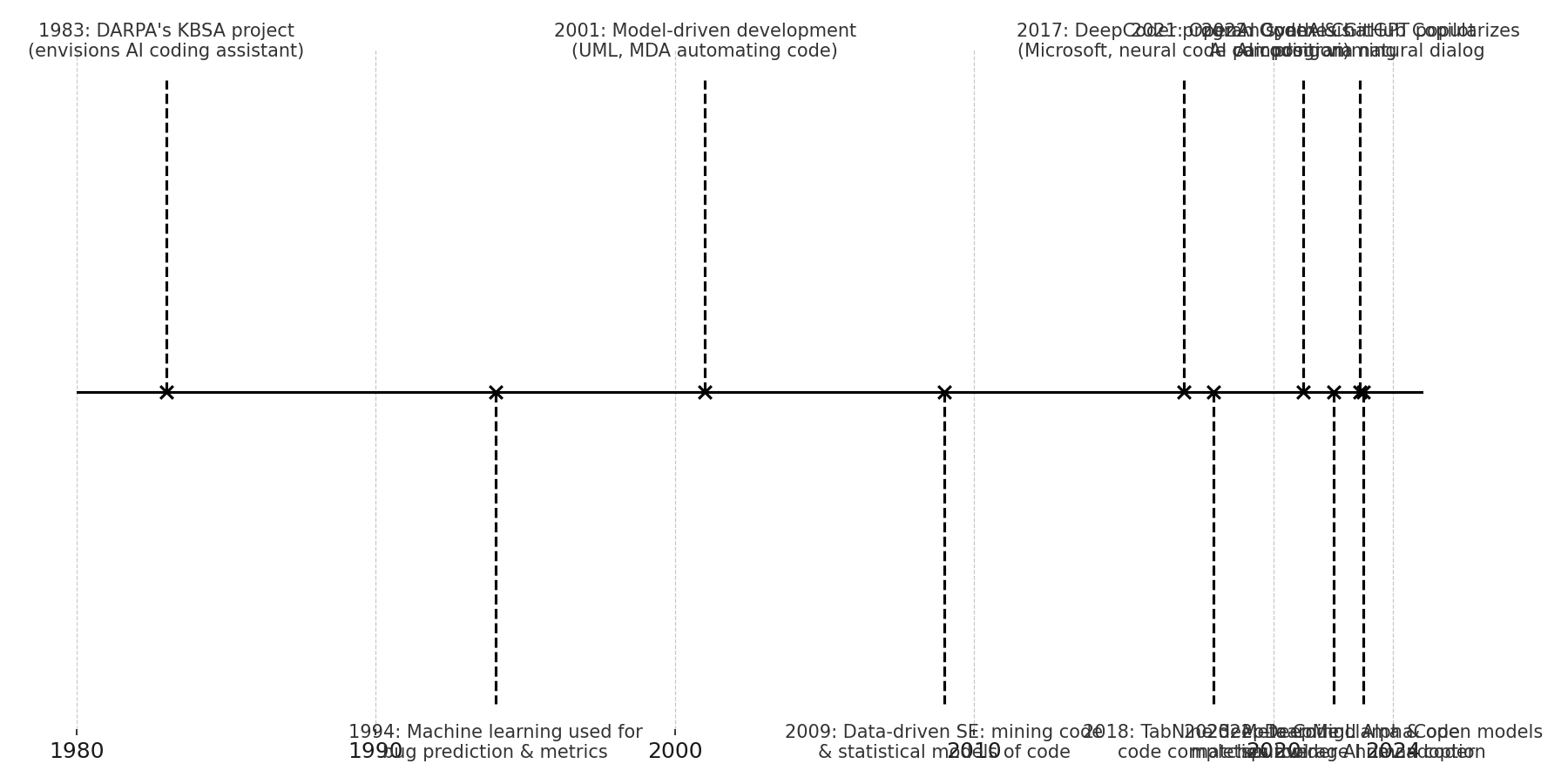

Software engineering has always sought greater automation. In fact, the dream of “automatic programming” – getting computers to write code themselves – dates back to the 1950s. Early ideas were overly optimistic, but they set a vision that researchers would chase for decades. By the 1980s, significant efforts were underway to apply AI to coding. For example, in 1983 the U.S. Air Force launched the Knowledge-Based Software Assistant (KBSA) project, which envisioned using AI and formal specifications to automatically generate efficient code. This knowledge-based approach was ahead of its time and encountered technical limitations, but it laid groundwork for future research. In the 1990s, progress continued modestly – expert systems and rule-based tools were used to aid software design, and researchers began applying machine learning algorithms to problems like bug prediction and effort estimation. By 1994, there were experiments using neural networks and statistical models to predict software defects and reliability, signaling one of the first uses of ML in software engineering processes.

The 2000s saw a different kind of automation push: model-driven development. Tools were created to generate code from high-level models (e.g. UML diagrams) – not quite “AI” in the modern sense, but a step toward automating coding tasks. In 2001, the Object Management Group (OMG) promoted Model-Driven Architecture (MDA), aiming to produce working code from abstract models. While model-driven approaches had success in niche areas, they did not fully deliver on replacing human coders. However, around the same time, data mining of open source repositories began to yield insight. In 2009, researchers showed that code, like natural language, has repetitive patterns – opening the door to applying statistical language modeling to source code. This finding of code naturalness meant that an AI could be trained to predict probable code snippets, much as predictive text works for sentences.
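
The "predictive text for code" idea can be illustrated with a deliberately tiny statistical model. The sketch below is not the method from the 2009 research; it is a minimal bigram counter, assuming a toy three-line corpus and a simplistic regex tokenizer (both hypothetical), that predicts the most likely next token from what it has seen before.

```python
import re
from collections import Counter, defaultdict


def tokenize(source: str) -> list[str]:
    """Split source text into identifiers, integers, and single symbols."""
    return re.findall(r"[A-Za-z_]\w*|\d+|\S", source)


def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every token, which tokens follow it in the corpus."""
    following: dict[str, Counter] = defaultdict(Counter)
    for snippet in corpus:
        tokens = tokenize(snippet)
        for current, nxt in zip(tokens, tokens[1:]):
            following[current][nxt] += 1
    return following


def predict_next(model: dict[str, Counter], token: str, k: int = 3) -> list[str]:
    """Return up to k of the most frequent successors of `token`."""
    return [tok for tok, _ in model.get(token, Counter()).most_common(k)]


# Toy corpus (an assumption for illustration only).
corpus = [
    "for i in range(n): total += i",
    "for x in range(10): print(x)",
    "for item in items: print(item)",
]
model = train_bigrams(corpus)
print(predict_next(model, "in"))  # ['range', 'items']
```

Because `range` follows `in` twice and `items` only once, the model ranks `range` first. Real systems replace the bigram counts with far richer models, but the principle of exploiting repetition in code is the same.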

Since 2009, the synergy between software engineering and machine learning has intensified. Academic and industry efforts started focusing on how to make AI practical for coding. By the mid-2010s, deep learning brought major breakthroughs in related AI fields (speech recognition, computer vision) and researchers turned these techniques to programming. A notable milestone was Microsoft’s DeepCoder (2017), which learned to solve simple programming tasks by stitching together code pieces using neural networks. Around the same time, deep neural nets for code completion emerged – for instance, in 2018 the startup TabNine launched a developer tool that used a trained AI model to suggest code completions, significantly improving upon earlier rule-based autocomplete. The true inflection point came in the early 2020s with large-scale neural models: OpenAI’s Codex (2021) was a refined GPT-3 model specifically trained on code, enabling it to generate entire functions or programs from natural language prompts. Its public debut via GitHub Copilot in 2021 gave thousands of developers their first taste of AI pair programming, where the AI writes code alongside the human. From that moment, AI-assisted development went from research to mainstream. In 2022, DeepMind’s AlphaCode system achieved approximately median human performance in coding competitions, solving about 30% of presented problems and outperforming ~50% of human participants – a remarkable feat demonstrating how far AI code generation had come. Late 2022 then saw the arrival of OpenAI’s ChatGPT, which, while a general AI chatbot, proved extremely adept at answering programming questions and producing code snippets on demand. Unlike previous tools, ChatGPT made AI assistance accessible to the general public through simple dialogue, accelerating awareness and adoption of AI in software tasks. 
By 2023, companies like Meta released open-source code LLMs (Code Llama), and organizations worldwide began integrating AI into their development pipelines on a wide scale.

Timeline of key developments in AI-driven software engineering, from early concepts in the 1980s to widespread adoption by the mid-2020s. Advancements include the introduction of knowledge-based coding assistants, the use of machine learning for code analysis, and the recent breakthrough of large language models and AI pair programming tools.

This historical evolution shows a clear trend: as computing power and algorithms improved, automation in software engineering progressed from simple code generators and static analysis tools to sophisticated AI systems that can understand and write code. Each era built on past lessons – for example, the failures of 1980s expert systems taught us that flexibility was needed, which statistical learning in the 2000s began to provide. The last few years, often dubbed the “GitHub Copilot era,” represent the culmination of these advancements, where practical AI assistants are finally available to aid developers day-to-day. It’s a transformation in progress, built on decades of innovation.

Current AI Tools and Technologies in Software Development

Today, a rich ecosystem of AI-powered tools spans nearly every phase of the software development lifecycle. These tools leverage techniques like machine learning, natural language processing, and knowledge representation to assist (or even automate) tasks that once required painstaking human effort. Broadly, current AI in software engineering can be grouped into a few categories:

- Intelligent Code Generation and Completion: Modern IDE plug-ins and cloud-based services can generate code snippets or entire functions based on a description. They act as AI pair programmers. For example, generative AI assistants like GitHub Copilot, Amazon CodeWhisperer, and TabNine use large language models to suggest the next lines of code as you type. These systems have been trained on millions of code examples and can often produce correct boilerplate or routine code instantly. Developers thus spend less time writing repetitive code and more time on logic and design. Generative models can also translate natural language into code – you can ask for “a function that computes the Fibonacci sequence,” and the AI will draft it. Such tools significantly speed up implementation and help developers stay “in the flow” while coding. They are most effective for automating standard patterns (e.g., API calls, data structure boilerplate) and providing quick prototypes of solutions.

- Automated Code Review and Refactoring: AI can also act as an intelligent reviewer, scanning code for bugs, style issues, or inefficiencies. Machine learning-based static analysis tools (such as Snyk Code, which grew out of DeepCode) go beyond traditional linters by learning from many codebases which patterns tend to lead to problems. They can catch tricky issues or suggest refactorings to improve readability and maintainability. For instance, Amazon’s CodeGuru Reviewer uses ML to flag resource leaks or concurrency issues in code, sometimes even suggesting a fix (e.g., recommending use of more efficient APIs) – effectively providing an AI second pair of eyes on pull requests. Similarly, AI-driven code formatters and refactoring tools can learn best practices from the entire developer community. One example is IntelliCode in Microsoft Visual Studio, which uses AI to offer context-aware code style suggestions (like how to name variables or which overload of a function is most likely needed) based on learned conventions. The benefit is higher code quality and consistency with less manual effort. Some AI systems are even capable of generating code documentation and comments by summarizing what a piece of code does, which blurs into the documentation domain.

- Bug Detection, Testing, and QA: Ensuring software correctness is traditionally very labor-intensive – writing test cases, running them, debugging failures. AI is making inroads here by automating testing and bug-finding. Tools now exist that employ AI to generate test cases automatically. For example, Diffblue Cover uses reinforcement learning to write JUnit tests for Java code, achieving high coverage without human input. AI can analyze code to identify edge cases or likely error conditions and create tests accordingly. In addition, techniques like fuzz testing (feeding random inputs to find crashes) have been enhanced by AI to better explore input space intelligently. AI-based testing can greatly expand the breadth of testing, catching issues that developers might miss. Furthermore, AI debugging assistants are emerging: given a failing test or an error log, an AI might suggest where in the code the problem lies and propose a patch. A pioneering example of this is Meta’s SapFix, which can automatically generate fixes for certain bugs and propose them to engineers. SapFix uses a combination of ML and program analysis to craft patches for crashes in the Facebook Android app, some of which have been deployed to production with minimal human edits. This kind of AI-driven debugging dramatically speeds up the bug-fix cycle. Similarly, predictive bug detection uses historical code data to predict which modules are likely to have defects (so teams can allocate testing effort accordingly). AI can prioritize testing on code that “looks suspicious” based on learned patterns (e.g., a function that is very complex or similar to past buggy code).

- DevOps Automation and Deployment: AI’s influence doesn’t stop at coding – it also extends to how software is built, integrated, and deployed. AIOps (AI for IT Operations) is an emerging field where AI techniques are applied to streamline continuous integration/continuous deployment (CI/CD) pipelines, monitor systems, and manage infrastructure. For example, AI can optimize build processes by caching or parallelizing tasks in smart ways, predict failures in deployment (and roll back automatically), or recommend the best infrastructure configuration for a given application. Cloud providers are incorporating AI to manage resources: autoscaling, load balancing, and failure recovery can be driven by ML models that learn usage patterns. There are tools that analyze past deployment logs and alert if a new deployment likely introduced a bug (a form of anomaly detection). Intelligent monitoring systems use AI to detect performance issues or errors in production by learning the normal patterns of application metrics. When something deviates, the AI can pinpoint which microservice or server is the probable culprit faster than a human operator scanning logs. Overall, AI in DevOps aims for more reliable releases and quicker mitigation of incidents. Another example is project planning and estimation – AI can analyze historical project data to predict timelines or suggest how to allocate team members, thus assisting engineering managers in decision-making.

- Requirements and Design Assistance: Although less mature than coding assistance, there are AI tools starting to help at the earlier stages of development. Natural language processing (NLP) techniques can parse requirements documents or user stories to highlight ambiguities or even convert them into initial models. For instance, an AI might read a set of user requirements and suggest a list of features or clarify vague statements (“When you say the system should be ‘fast’, do you mean under 1 second response time?”). Some experimental tools generate UML diagrams from textual descriptions, assisting in software design. Moreover, AI-based prototyping tools can turn hand-drawn interface sketches into working UI code, expediting the design to implementation transition. These applications are still emerging, but they foreshadow a future where AI helps translate human intentions into software specifications, bridging the gap between non-technical stakeholders and developers.
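
To make the monitoring idea from the DevOps item concrete, here is a minimal sketch of metric-based anomaly detection. It assumes a plain z-score rule over a baseline window, which is a simple statistical stand-in for the learned models production AIOps tools use; the function name, the threshold, and the latency figures are all hypothetical.

```python
from statistics import mean, stdev


def find_anomalies(baseline, recent, threshold=3.0):
    """Return (index, value) pairs from `recent` that lie more than
    `threshold` standard deviations away from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any different value is a deviation.
        return [(i, v) for i, v in enumerate(recent) if v != mu]
    return [
        (i, v)
        for i, v in enumerate(recent)
        if abs(v - mu) / sigma > threshold
    ]


# Hypothetical request latencies in milliseconds.
baseline_latency_ms = [102, 98, 101, 99, 100, 103, 97, 100]
recent_latency_ms = [101, 99, 250, 100]

print(find_anomalies(baseline_latency_ms, recent_latency_ms))  # [(2, 250)]
```

The baseline here has a mean of 100 ms and a sample standard deviation of 2 ms, so the 250 ms reading stands out while ordinary jitter does not. A real system would also need to handle seasonality and drifting baselines, which this sketch ignores.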

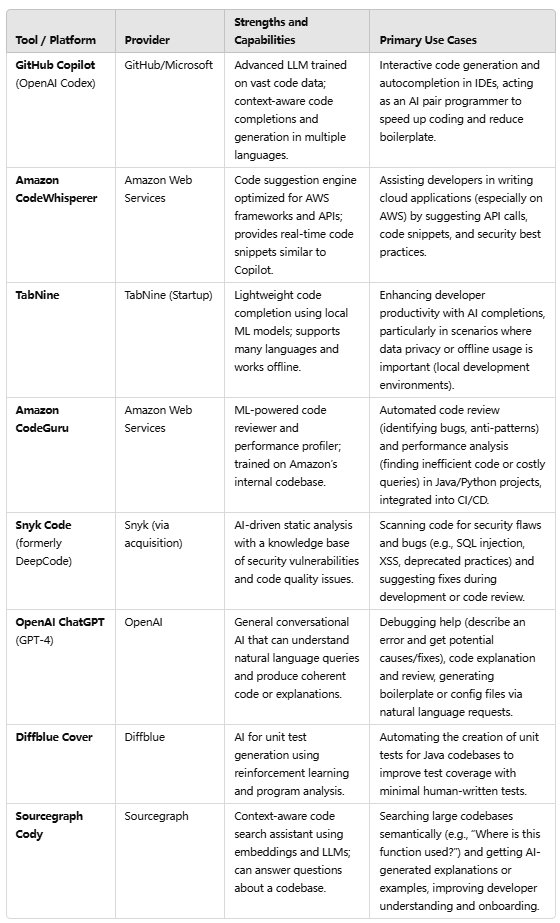

The current landscape thus includes a broad spectrum of AI interventions throughout the development lifecycle. Table 1 below summarizes some notable AI tools and platforms widely used in software engineering today, along with their key strengths and typical use cases:

Table 1: Notable AI Tools and Platforms in Software Engineering

Note: The above list is not exhaustive, but it highlights the diversity of AI tools available. Some are focused on assisting developers at coding time (Copilot, CodeWhisperer, TabNine), others on code quality (CodeGuru, Snyk Code), and yet others on broader tasks like conversational problem solving (ChatGPT) or test generation (Diffblue). Importantly, these tools are complementary – a development team might use several in tandem. For instance, a team could use Copilot to write code, Snyk to scan it for security issues, and Diffblue to generate tests, thereby injecting AI benefits at multiple points in their workflow.

How AI is Reshaping Workflows and Team Roles

The infusion of AI into software engineering is not just a technical upgrade; it’s fundamentally reshaping how development teams work. Workflows are being transformed as certain tasks become automated or AI-assisted, and consequently the roles and responsibilities of team members are evolving.

Perhaps the most significant change is the shift in the developer’s role from being the sole producer of code to being a supervisor and curator of AI-generated code. Developers increasingly function like an editor or architect, guiding AI tools that handle routine coding. Instead of manually writing every line, a programmer might now write a function stub and let the AI complete it, then review and adjust the output. This requires a new skill set – developers must be good at prompting or instructing AI, and at critically evaluating AI contributions. As one survey noted, developers’ roles are gradually shifting from traditional coding to “supervising and assessing AI-generated suggestions”. In practice, this means code reviews now often include reviewing AI-written sections, and developers need to ensure the AI’s code aligns with requirements and has no hidden bugs. The human remains ultimately responsible, but the nature of the work tilts more toward oversight, integration, and fine-tuning of AI outputs.

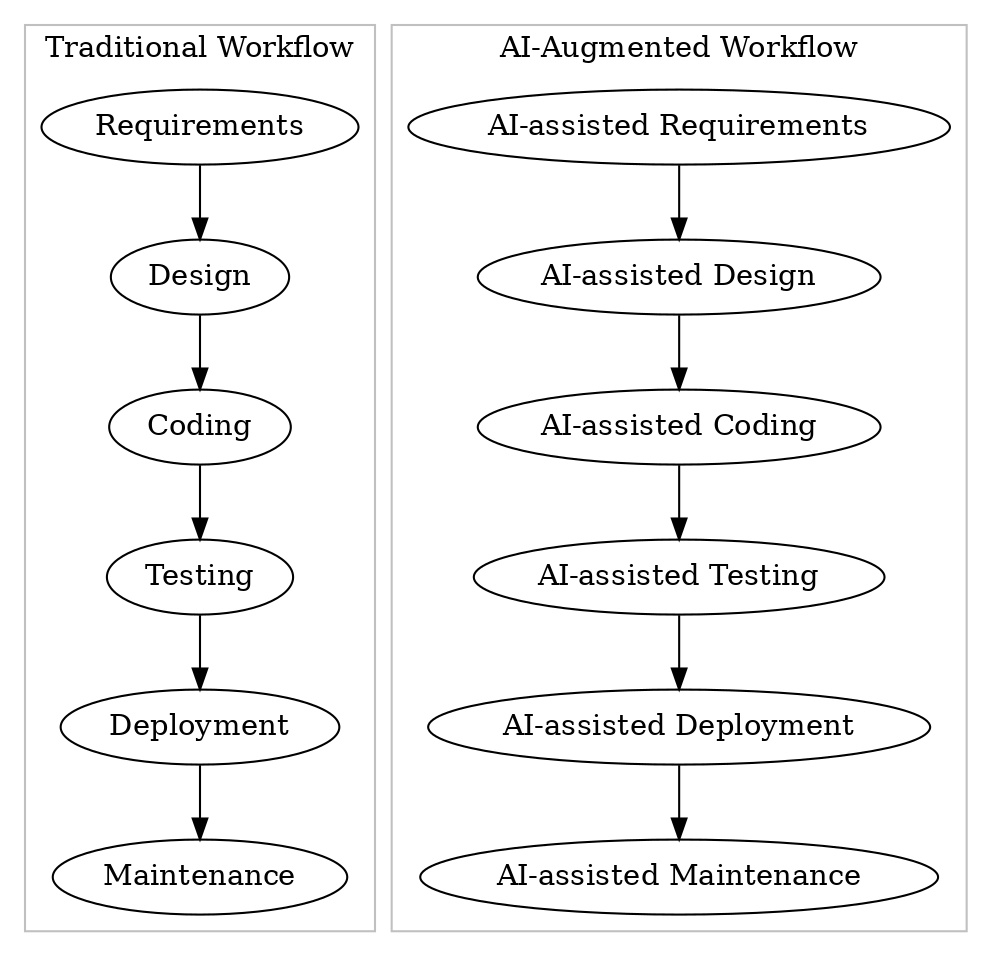

The traditional software development workflow is therefore being augmented at almost every stage. Consider the classic stages of Requirements → Design → Coding → Testing → Deployment → Maintenance. In a non-AI world, each of these is performed predominantly by humans (with some script automation). In an AI-augmented workflow, many of these stages have AI “assistants” working in tandem with humans. The diagram below illustrates this transformation, comparing a traditional workflow to an AI-augmented workflow:

Comparison of a traditional software development workflow (left) with an AI-augmented workflow (right). In the AI-driven process, each phase – from requirements and design to coding, testing, deployment, and maintenance – is supported by AI assistants or automation. Developers shift from performing every task manually to orchestrating and validating the contributions of AI tools.

In the AI-augmented workflow, requirements might be partially analyzed by NLP tools; design might be assisted by model generation; coding is done with AI pair programmers; testing leverages AI-generated cases; deployment and maintenance use AI for monitoring and optimization. The developer’s day-to-day experience changes accordingly. They spend less time on grunt work (like writing boilerplate code or simple test cases) and more time on higher-level decision making. Team roles are shifting:

- Developers as “AI Orchestrators”: Developers now often serve as the point of coordination between various AI tools. A term gaining popularity is “prompt engineer,” reflecting how devs craft prompts or instructions for AI to get the desired output. While writing code remains central, they also need to interpret natural language requirements for the AI, or select which suggestion from an AI to accept. As Deloitte observers put it, we’re moving toward a future where developers act as “orchestrators of AI-driven development ecosystems”. They ensure all the AI inputs (code suggestions, bug fixes, test cases) fit together correctly in the final software.

- Quality Assurance (QA) and Test Engineers: These roles are also evolving. With AI able to generate tests or detect bugs, QA engineers spend less time writing extensive test scripts. Instead, they might focus on training and validating AI testing tools – for example, seeding the AI with example test scenarios or reviewing AI-flagged defects for validity. The QA role shifts toward managing the AI test generation process and devising clever test ideas that AI might miss. On the flip side, because AI can produce code that may be superficially correct but subtly wrong, QA is as important as ever to catch issues. So testers become the safety net for AI-generated code, tuning test coverage criteria and ensuring the AI’s contributions don’t introduce hidden regressions. In some cases, QA engineers might pair with AI tools like property-based testing frameworks or fuzzers that use ML to maximize coverage.

- Tech Leads / Architects: Technical leaders find that AI tools give them new leverage but also require new oversight. They can delegate routine design-level decisions to AI (like suggesting a standard architecture or library for a task), but they must guide the AI with the project’s big-picture in mind. Architects may use AI to evaluate multiple design alternatives quickly. The role becomes more about judgment and guidance: setting up the guardrails for AI (what it should or shouldn’t do in the codebase), and focusing on complex design problems that AI cannot handle. They also need to consider integration of AI tools – choosing which tools to adopt, and ensuring these tools have access to the right project context (for example, feeding domain-specific documentation into an AI assistant to make its suggestions more accurate for the team’s use case).

- DevOps and Operations Teams: For operations, AI automation means fewer manual interventions for scaling or monitoring issues. Ops engineers increasingly work alongside AI that handles many alerts and can even execute runbook actions automatically. Their role shifts to overseeing AI operations – verifying that the AI’s decisions (like restarting a service or redistributing load) were appropriate and fine-tuning the logic or model when the environment changes. Additionally, Ops teams might collaborate with developers more closely since continuous deployment pipelines now involve AI (for code checks, etc.), merging responsibilities in a DevOps fashion. Trust becomes key: operations must trust the AI to handle midnight pages correctly, which means thorough testing of AI ops tools and possibly new fail-safes.

- Product Managers / Analysts: While not engineers, these roles also feel an impact. AI-assisted development can drastically shorten development timelines for certain features (e.g., a prototype can be built in days instead of weeks with AI help). Product managers need to adjust planning and be ready for faster iteration. Moreover, AI can generate multiple options for a feature implementation – product managers might be involved in picking which AI-proposed solution better aligns with user needs. Requirements gathering might involve working with AI tools to parse customer feedback at scale (using sentiment analysis, etc.), thus PMs become adept at using AI for analysis. They also have to be vigilant about the ethical use of AI – for example, ensuring AI-generated code does not inadvertently introduce bias or licensing issues, which might not be obvious to developers focused on technical aspects.

Crucially, human expertise remains indispensable in this AI-driven workflow. AI tools, while powerful, do make mistakes – they can produce syntactically correct but logically flawed code, overlook context that a human finds obvious, or suggest insecure solutions. Therefore, teams incorporate a human-in-the-loop at critical junctures. Code suggestions from AI are reviewed by humans (just as one would review a junior developer’s code). AI-generated test cases are checked to ensure they make sense. Deployment decisions recommended by AI might go through a change control board. In essence, the workflow changes to “many quick AI drafts, followed by human review and final decision.” This dynamic can actually improve team collaboration: junior developers empowered with AI can contribute more, while senior developers focus on mentoring and oversight. Some companies even pair programmers with AI in a manner similar to pair programming – one “drives” (writes prompts and code) while the other “navigates” (reviews AI outputs and maintains architectural vision).

One outcome of AI adoption is the need for new training and upskilling. Organizations are realizing that developers must learn how to effectively use AI tools – a skill that is now being called “AI literacy” in software engineering. This includes knowing how to phrase prompts for optimal results, how to interpret probabilistic or uncertain answers from AI, and understanding the underlying limitations of these tools (for example, knowing that an LLM might not know about a library updated after its training cutoff). As such, some companies are conducting internal workshops for developers on using Copilot or similar tools effectively. Universities and coding bootcamps are also starting to incorporate AI coding assistants into their curriculum so that new graduates are prepared for AI-augmented workflows.

Additionally, team processes are being adjusted. Agile methodologies, for instance, are adapting to AI assistance – sprint planning might account for AI potentially doubling the velocity on certain tasks, and “spikes” (exploratory tasks) might be done by asking an AI for prototypes. Code review processes may include an extra step where an AI tool runs a static analysis or even a formal verification before human review. Some teams implement policy checks: e.g., if AI suggests code, ensure it is annotated or flagged, so it gets more scrutiny or testing. There are also emerging best practices on deciding when not to use AI – for critical code (security-critical algorithms, for example), teams might intentionally avoid AI-generated code to maintain rigorous control and transparency.
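
A policy check of the kind described above can be quite small. The sketch below assumes a hypothetical team convention in which AI-suggested code carries a `# ai-generated` comment, and it reports changed files that carry the marker but have no recorded human sign-off. The marker text, function name, and inputs are illustrative assumptions, not an established standard.

```python
# Hypothetical team convention: AI-suggested code is tagged with this comment.
AI_MARKER = "# ai-generated"


def files_needing_review(changed_files: dict[str, str], signed_off: set[str]) -> list[str]:
    """Return paths whose content carries the AI marker but which
    are not yet in the set of human-approved files."""
    flagged = []
    for path, content in changed_files.items():
        has_marker = any(
            line.strip().lower().startswith(AI_MARKER)
            for line in content.splitlines()
        )
        if has_marker and path not in signed_off:
            flagged.append(path)
    return sorted(flagged)


changed = {
    "billing.py": "# AI-generated\ndef total(xs):\n    return sum(xs)\n",
    "utils.py": "def helper():\n    return 1\n",
    "report.py": "# ai-generated: reviewed draft\nprint('ok')\n",
}
print(files_needing_review(changed, signed_off={"report.py"}))  # ['billing.py']
```

A check like this could run as a pre-merge step so that flagged files block the merge until a reviewer signs off, though how the sign-off set is populated depends on the team's tooling.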

In summary, AI-driven development is fostering a more collaborative and fluid workflow. The boundaries between roles get blurrier (developers do some testing with AI help; testers read some code, etc.), and the overall pace from idea to deployment accelerates. While AI does not replace human developers (indeed, developers are more important than ever in guiding the AI), it does elevate their work. They can focus on creative problem solving, complex debugging, and innovative feature development, while delegating mundane tasks to machines. This human-AI partnership is fast becoming the norm. As IBM describes, software engineers are moving from being code implementers to “orchestrators of technology,” leveraging AI to boost productivity and focusing on higher-level problem-solving. Far from rendering engineers obsolete, AI is augmenting their capabilities – but it requires developers to adapt their workflows and mindset to this new reality.

Real-World Examples and Case Studies

The impact of AI-driven development is not just theoretical; many organizations are already reaping tangible benefits. Below are several real-world examples and case studies illustrating how AI has improved productivity, code quality, and delivery timelines in software projects:

- GitHub Copilot boosting developer productivity: Since its launch, GitHub Copilot (powered by OpenAI’s Codex) has been the subject of multiple studies. One controlled experiment by GitHub found striking results: developers using Copilot were able to complete a coding task 55% faster than those without Copilot. In this study, two groups were asked to implement an HTTP server; the Copilot-assisted group had a 78% completion rate versus 70% for the control group, and they finished in almost half the time. Another field study involving over 4,000 developers across Microsoft and other companies showed a 26% increase in the number of pull requests completed per week when developers had access to Copilot. These are remarkable boosts in throughput. Beyond speed, surveys indicate developers feel more satisfied and less frustrated when using AI assistance – about 73% reported Copilot helps them stay in the flow and 87% said it reduces mental effort on repetitive tasks. This evidence suggests that AI coding assistants are having a positive effect on both productivity and developer experience.

- Accelerating development at Amazon with AI: Amazon has invested heavily in AI tools to improve its own software engineering. An example shared by Amazon’s CEO, Andy Jassy, revealed that the company’s generative AI assistant, Amazon Q, drastically sped up code maintenance for its large codebases. By automating code upgrades and migrations, this tool saved an estimated 4,500 developer-years of effort, translating to about $260 million in annual efficiency gains. These staggering numbers come from allowing AI to handle mundane tasks like updating code to new APIs or fixing common errors, freeing human developers to focus on more strategic initiatives. Another Amazon tool, CodeGuru, was used on AWS services where it identified performance issues (like inefficient database queries) that, once fixed, saved millions of dollars in compute costs. This shows AI doesn’t just save time – it can significantly impact the bottom line.

- AI-powered bug fixing and reliability at Meta (Facebook): As mentioned, Facebook’s engineering team deployed SapFix in 2018. In production, SapFix automatically generated fixes for certain crash bugs in the Facebook Android app and submitted them for review. In its proof-of-concept use, SapFix was able to propose patches for bugs discovered by Facebook’s automated testing tool Sapienz, in many cases before the problematic code ever hit production. Engineers would then review the AI’s patch and approve it for deployment if it was satisfactory. This reduced the debugging and patching time from potentially days or weeks (for an engineer to identify and fix the bug) to just hours. It was the first known use of AI-driven bug fixing at such a scale (millions of devices). As a direct result, the stability of the app improved and engineers spent less time on tedious debugging of null pointer exceptions or similar issues. Meta’s example shows that AI can increase software reliability by responding to problems faster than human cycles normally allow.

- AlphaCode and problem-solving efficiency: While DeepMind’s AlphaCode was a research system (not deployed in a software company), its performance provides a case study in AI capability. AlphaCode was evaluated on Codeforces programming competitions and achieved roughly median competitor performance, writing correct solutions for about 30% of the problems in a test set. This is noteworthy because these competition problems are complex algorithmic challenges, often requiring creative insights. AlphaCode’s approach was to generate a large number of candidate programs for each problem and then smartly filter and test them to identify correct ones. In essence, it simulated a thousand virtual programmers attempting solutions and picked the best one. For businesses, this hints at future AI that could handle complex tasks like optimizing algorithms or resolving computational bottlenecks. In one instance, DeepMind reported that an AlphaCode-like approach was able to discover a new algorithmic solution that was more efficient than known human solutions. Similarly, DeepMind’s later system AlphaDev applied reinforcement learning to low-level code optimization and uncovered a sorting algorithm that was up to 70% faster for certain small data sets than the decades-old human-crafted algorithms in the standard C++ library. This discovery has since been integrated into the libc++ library, improving real-world software performance. These cases demonstrate AI’s potential not only to speed up what humans can do, but to occasionally surpass human performance and innovate in areas like algorithm design, which can greatly improve software efficiency.

- Widespread adoption in industry and startups: Beyond the tech giants, many companies of all sizes are now adopting AI tools. For instance, TabNine’s AI assistant (one of the early products in this space) reports serving over 1 million developers worldwide. This wide usage, including at companies that handle sensitive code (TabNine can run locally without sending code to the cloud), indicates that trust in and reliance on AI coding tools is growing. Startups have also sprung up to tackle niche problems – such as Kite (for Python autocompletion), DeepSource (AI-assisted code review and optimization), and MutableAI (code transformation suggestions). Investment is pouring in: in 2024, venture funding for AI coding tools surged, with Paris-based startup Poolside securing $500 million in funding even before launching its product, and another startup, Magic, raising $320 million to build an “AI software developer”. Such large investments are a bet that AI-driven development will become ubiquitous. Early adopters in enterprise report significant improvements – for example, a case study from a logistics firm (as cited by Groove Technology) noted that integrating AI into their development pipeline reduced their software delivery timeline from 6 months to 4 months, thanks to faster coding and testing cycles. Another real-world data point: software consultancy Brainhub reported that AI-based code analysis tools helped one of their client projects cut down code review times by nearly 50%, as the AI pre-flagged issues for human reviewers to focus on.
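
The generate-then-filter strategy described in the AlphaCode item can be sketched in a few lines. This is not AlphaCode's implementation: the candidate programs below are hand-written stand-ins for model samples, and the task ("return the largest element") and its example tests are assumptions made for illustration. Only the filtering step is shown.

```python
from typing import Callable, Iterable

# An example is (arguments, expected_result).
Example = tuple[tuple, object]


def passes_examples(candidate: Callable, examples: Iterable[Example]) -> bool:
    """True if the candidate returns the expected value on every example."""
    for args, expected in examples:
        try:
            if candidate(*args) != expected:
                return False
        except Exception:
            # A crashing candidate is simply discarded.
            return False
    return True


def filter_candidates(candidates: list[Callable], examples: Iterable[Example]) -> list[Callable]:
    """Keep only the candidates that pass all example tests."""
    examples = list(examples)
    return [c for c in candidates if passes_examples(c, examples)]


# Hand-written stand-ins for programs a model might sample.
def cand_sum(xs): return sum(xs)
def cand_max(xs): return max(xs)
def cand_first(xs): return xs[0]
def cand_sorted_last(xs): return sorted(xs)[-1]


examples = [(([3, 1, 2],), 3), (([5],), 5), (([1, 9, 4],), 9)]
survivors = filter_candidates(
    [cand_sum, cand_max, cand_first, cand_sorted_last], examples
)
print([c.__name__ for c in survivors])  # ['cand_max', 'cand_sorted_last']
```

Two of the four candidates survive. A fuller pipeline would still need to choose among survivors, and it would run untrusted candidates in a sandbox instead of calling them directly as this sketch does.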

Collectively, these examples paint a picture of substantial gains in speed and efficiency. Projects finish faster, teams tackle more ambitious problems, and software can be more robust from the outset. However, it’s also evident that human oversight remains a factor – for each success story, the AI’s output was guided or checked by humans. The takeaway is that the best results come from a collaboration: AI accelerates the work and humans ensure the quality and direction. Companies that have embraced this collaboration are reporting not just quantitative improvements (like percent faster completion), but qualitative ones: developers can attempt solutions they wouldn’t have had time for before, and teams feel a greater sense of confidence that AI is watching out for certain classes of errors.

One notable qualitative impact is democratization of coding. Less experienced developers, with AI help, can produce code closer to the level of senior developers for certain tasks. This flattens the learning curve and can reduce the skill gap on a team. It’s common to hear junior programmers say that an AI assistant helps them learn – it not only suggests code, but often explains it in comments or can be asked for clarification (especially with conversational tools like ChatGPT). In effect, it’s like having a mentor available 24/7. This was observed in internal studies as well: Microsoft noted that less experienced developers benefited even more from AI assistance than veteran developers, because the AI provided on-the-spot guidance and examples.

Of course, not every experience is uniformly positive. Some teams initially reported that AI suggestions sometimes needed significant rework, or that integrating AI tools required changes to tooling and editor setups. But as the technology matures and developers learn its best use, the trend in case after case is improved outcomes. For instance, an engineering team at fintech company Intuit trialed AI code review and found that while it didn’t replace human reviewers, it caught 20% additional issues that humans missed, particularly security-related mistakes (like using a weak cryptographic function). They treated the AI review as an extra automated check in their pipeline and saw a measurable drop in post-release defects.

In summary, real-world evidence from tech giants and smaller companies alike shows that AI-driven development can significantly boost productivity (often 20–50% improvement), enhance code quality, and speed up delivery. Projects that integrate AI in coding and testing are hitting their milestones faster and with fewer bugs in production. Importantly, these improvements compound: faster development means faster feedback and iteration, which can lead to better end products and more innovation. Companies are not only completing backlogs quicker but also tackling tasks that were once shelved due to limited capacity. While AI is not a magic bullet and must be used wisely, these case studies demonstrate that when properly integrated, AI is a powerful amplifier of human software engineering capabilities.

Technical Underpinnings of AI Applications in Coding

Under the hood of these AI-driven development tools are a variety of sophisticated technical approaches. Understanding the technical underpinnings helps explain what these tools can (and cannot) do, and how they achieve their capabilities. Here we explore the key technologies enabling AI in software engineering: large language models, reinforcement learning, code analysis techniques, and more.

- Large Language Models (LLMs) for Code: The core of many modern code generation tools (Copilot, CodeWhisperer, ChatGPT, etc.) is a large language model. These are deep neural networks (often based on the Transformer architecture) trained on massive datasets of source code in many programming languages, as well as natural language documentation. Models like OpenAI’s Codex or Meta’s Code Llama essentially learn to predict the next token in a sequence, whether that token is a piece of code or text. By training on billions of code tokens, the models learn syntax, coding idioms, and even some semantics (like which library call is appropriate in context). Technically, these are the same kind of models that have been used for text (like GPT-3/4), but fine-tuned on code repositories (e.g., public GitHub). They capture statistical patterns of “what code usually looks like” for a given context. When you invoke Copilot, the underlying model takes the current file (and perhaps related context) as input and continues it. For example, given a function signature and a comment describing the function, the model can emit a plausible implementation. The strength of LLMs is in producing syntactically correct and contextually relevant code quickly. They excel at boilerplate and common tasks – essentially, they’ve “seen” thousands of similar code snippets during training and can generalize from that experience. However, they don’t truly understand the code as a human would; they don’t execute it in their mind. They rely on patterns. That’s why they can sometimes produce errors if the prompt situation is rare or tricky (the model might interpolate from vaguely related examples, sometimes incorrectly). Technically, LLMs use techniques like attention mechanisms to consider different parts of the input context (e.g., earlier code, comments) when generating each token, which helps maintain consistency and adhere to given specifications (like variable names or described requirements). 
State-of-the-art code LLMs (such as GPT-4 or Google’s PaLM-Coder) are also enormous in size – tens to hundreds of billions of parameters – which gives them the capacity to store a vast amount of “knowledge” about programming. Many models support few-shot prompting, meaning they can take not only the immediate code context but also some examples of usage or style as part of the prompt to tailor their output. This allows customization without retraining. There’s also a trend of enabling LLMs to call external tools (like compilers or documentation lookup) to verify their outputs, leading to more reliable code generation. In summary, LLMs bring a powerful statistical model of code, enabling generation and completion tasks that feel like a predictive autopilot for coding.

- Reinforcement Learning (RL) for Code: Reinforcement learning is another AI technique, applied in scenarios where an agent must learn by trial and error to achieve a goal. In software engineering, RL has been used in a few innovative ways. One is in gamifying code generation or optimization tasks. DeepMind’s AlphaDev, for example, treats the task of improving an algorithm as a single-player game where each move adds an instruction to the algorithm and a reward is given for correct, faster code. Through a very large number of simulated games (thanks to heavy compute), the RL agent gradually discovered sequences of CPU instructions that led to faster sorting logic than known human algorithms. This is essentially an application of RL to low-level code optimization – something compilers typically do with heuristics. Google’s research on MLGO similarly used RL to train a neural network to make compiler optimization decisions (such as whether to inline a function) that outperformed hand-tuned compiler heuristics. Another use of RL is in program synthesis from examples. Microsoft’s DeepCoder (and related academic projects) can be seen through an RL lens: an agent constructs a program piece by piece and gets a reward if the program passes given input-output examples. The search space is huge (all possible programs), but search combined with neural guidance helps prioritize likely useful code pieces. Moreover, some AI test generation tools use RL: they treat executing tests on software as an environment where the reward is higher coverage or finding a crash, and they learn to generate API calls or inputs that maximize that reward over time. RL is particularly useful when there’s a clear objective (like “minimize execution time” or “maximize code coverage”) and a way to simulate outcomes quickly. It adds a degree of goal-directed intelligence beyond the pattern recognition of LLMs. However, RL models often require a lot of iterations and careful reward design.
In practice, RL has been successful in niche but critical tasks – e.g., discovering a better algorithm or tuning a performance parameter – which can then be directly applied to real software for big gains. AlphaDev’s case, finding a 70% speed improvement in sorting short sequences, is a prime example of how RL can achieve something novel in code that purely pattern-based AI might not. We can expect to see more RL in areas like autonomous debugging agents (that try sequences of fixes to see which resolves a bug, learning from each attempt) or in deployment optimization (agents learning optimal schedules for rolling out updates with minimal user impact, for instance).

- Static and Dynamic Analysis with AI (ML-assisted compilers and analysis tools): Long before the deep learning boom, software engineering had a rich set of static analysis tools (for checking code without running it) and dynamic analysis tools (for monitoring code during execution). Modern AI is augmenting these traditional techniques. For static analysis, AI/ML can prioritize warnings by learning from past data which patterns are true problems versus false positives. For example, a static analysis tool might flag 100 potential null-pointer dereferences in a codebase; an ML model could be trained on historical triage data to predict which 10 are most likely real issues, focusing developer attention. Machine learning in compilers is another area: a compiler like LLVM has many heuristics (e.g., how to order optimization passes, how to allocate registers) – researchers have applied genetic algorithms and neural networks to learn better strategies than default, given a specific workload. Google’s MLGO project replaced some LLVM optimization heuristics with an RL-trained policy, resulting in slightly more optimized binaries automatically. Over thousands of compilations, the model learns, for example, how to better decide inlining (to inline or not to inline a function) depending on code patterns, yielding performance improvements that generalize. On the dynamic side, AI-assisted profiling might learn what typical performance profiles look like and highlight anomalies (e.g., “this request is consuming 2x more CPU than similar ones, likely due to a regression here”). AI can also help in memory leak detection – by learning what normal memory allocation patterns are for an application and flagging functions that allocate in suspicious growing patterns, even if they don’t strictly violate a rule. Graphical models and Bayesian networks have been used to predict software quality metrics or hot spots in code that will generate bugs. 
For instance, code properties like complexity, churn, dependency metrics can feed a model predicting modules with a high chance of defects. These predictions help target code inspections or refactoring efforts proactively. Additionally, graph neural networks (GNNs) are increasingly applied to code, since code has natural graph structures (ASTs, control flow graphs, program dependency graphs). A GNN can learn to propagate information along these structures – e.g., to detect if a certain variable misuse is possible by analyzing data flow. This has led to ML-based vulnerability scanners that outperform purely syntactic checkers by understanding code semantics better. In summary, AI is enhancing program analysis by dealing with uncertainty and learning from data, rather than relying solely on rule-based logic. This means more intelligent tools that can adapt to the codebase they are applied to and improve over time as they see more code.

- Neural-Symbolic and Constraint Solving Techniques: Some advanced research and tools combine neural learning with traditional symbolic reasoning. For example, an AI might use an SMT solver (which can formally prove properties about programs) in tandem with neural heuristics that guide what properties to check. This is relevant in formal verification: proving correctness properties of code is very hard, but neural nets can guess loop invariants or likely assertions that help the solver. Microsoft’s research on neural program synthesis often adopts this approach: use neural networks to generate candidate programs or invariants, then use a formal method to verify correctness, and iteratively refine. Another burgeoning area is using AI to translate between programming languages or from pseudocode to code (leveraging sequence-to-sequence learning, akin to translation). Code-focused models such as OpenAI’s Codex have reportedly been used to translate legacy code (like COBOL) into modern languages, although such output still needs to be verified against the original behavior. This kind of automation can modernize old systems faster.

- Large-Scale Code Search and Embeddings: AI techniques are also used to create vector representations (embeddings) of code that capture semantic similarity. This underpins tools like Sourcegraph Cody or Amazon’s CodeGuru Reviewer when it suggests improvements by finding similar code snippets. By encoding code into a numeric vector space, an AI can quickly retrieve “code that looks like this,” which is useful for finding duplicates, detecting plagiarism, or locating where a certain bug fix might need to be applied in multiple places. These embeddings can be learned by neural networks trained to represent code fragments such that those with similar functionality end up close in vector space.
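The embedding-based retrieval described in the last item can be sketched in a few lines. This is a minimal illustration, not how any named product works: the `embed` function below is a deliberately crude stand-in (a bag of identifier tokens) for a learned neural embedding, and the corpus, query, and function names are all hypothetical.

```python
import math
import re
from collections import Counter


def embed(code: str) -> Counter:
    """Toy 'embedding': counts of identifier/keyword tokens.

    A real system would use a dense vector from a trained neural network;
    this sparse count vector is only an assumption for illustration.
    """
    return Counter(re.findall(r"[A-Za-z_]\w*", code))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def most_similar(query: str, corpus: dict[str, str]) -> list[tuple[str, float]]:
    """Rank corpus snippets by similarity to the query, best match first."""
    q = embed(query)
    scored = [(name, cosine(q, embed(src))) for name, src in corpus.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical mini "codebase" to search.
CORPUS = {
    "sum_list": "def sum_list(items):\n    total = 0\n    for x in items:\n        total += x\n    return total",
    "read_file": "def read_file(path):\n    with open(path) as f:\n        return f.read()",
}

QUERY = "def add_all(values):\n    total = 0\n    for x in values:\n        total += x\n    return total"

ranked = most_similar(QUERY, CORPUS)
for name, score in ranked:
    print(f"{name}: {score:.2f}")
```

Here the query shares most of its tokens with `sum_list`, so that snippet ranks first even though the function and parameter names differ. A learned embedding aims for the same effect on functional similarity rather than mere token overlap.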

Technically, many of these AI models require big data and significant compute. Training a code model like Codex involves feeding in essentially all public open-source code – on the order of many terabytes of data – and training on supercomputers with specialized hardware (GPUs/TPUs) over weeks. Reinforcement learning on code can involve millions of executions of code in simulation. This means these tools often come from well-resourced research labs or companies. However, once trained, they can be packaged and run in the cloud or even locally (with some optimizations) to serve many users.

It’s also worth noting the limitations from a technical perspective. LLMs, for instance, have a context window limit – they might only consider, say, the last 4,000 tokens (the exact limit varies by model). If a codebase is huge, they might not “remember” earlier parts unless a mechanism like retrieval (searching relevant files and including them in the prompt) is used. They also do not inherently know if a piece of generated code will compile or run correctly – they have to infer it. That’s why integrating compilers or runtime feedback (like unit tests in the loop) is an active area. Some systems now do “execution-in-the-loop”: generate code, run it (in a sandbox), see if it does what’s expected, and use that feedback to adjust (loosely analogous to reinforcement learning, with real execution results as the feedback signal). A related idea was used in AlphaCode’s filtering step (it ran generated code against example tests and discarded candidates that failed), and it is being integrated into coding assistants to self-correct obvious mistakes.
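A minimal sketch of the generate-then-filter idea follows. It assumes the candidates have already been produced by some model; here they are hand-written, hypothetical functions standing in for model outputs for the task “return the square of n”. A production system would run untrusted candidates in a sandbox rather than calling them directly as this sketch does.

```python
from typing import Callable, Iterable

Candidate = Callable[[int], int]
Example = tuple[int, int]  # (input, expected output)


def passes_examples(candidate: Candidate, examples: Iterable[Example]) -> bool:
    """Return True only if the candidate gives the expected output on every example."""
    for arg, expected in examples:
        try:
            if candidate(arg) != expected:
                return False
        except Exception:
            # A crashing candidate is treated the same as a wrong one.
            return False
    return True


def filter_candidates(candidates: list[Candidate], examples: list[Example]) -> list[Candidate]:
    """Keep the candidates that survive execution against the examples."""
    return [c for c in candidates if passes_examples(c, examples)]


# Hypothetical "generated" candidates (stand-ins for model samples).
def cand_double(n: int) -> int:
    return n * 2          # wrong, but happens to pass the example (2, 4)


def cand_square(n: int) -> int:
    return n * n          # correct


def cand_crash(n: int) -> int:
    return n // 0         # always raises ZeroDivisionError


def cand_pow(n: int) -> int:
    return n ** 2         # correct, written differently


EXAMPLES = [(2, 4), (3, 9), (0, 0)]

survivors = filter_candidates([cand_double, cand_square, cand_crash, cand_pow], EXAMPLES)
print([c.__name__ for c in survivors])
```

Note that `cand_double` passes the single example `(2, 4)` and is only rejected by `(3, 9)`. Filtering is only as strong as the examples it runs, which is one reason human review remains necessary.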

Another technical underpinning is natural language understanding for connecting code with human intent. Models like GPT-4 can parse a natural language bug report and locate in the code where the issue might be, by connecting linguistic descriptions to code behavior (learned from documentation and Q&A sites). This is how ChatGPT can often accept a description of a problem and directly output a code fix.

Lastly, an important component is the concept of human feedback in training. ChatGPT, for example, was fine-tuned not just on text and code but on examples of human interactions and preferences (via Reinforcement Learning from Human Feedback, RLHF). That means the model was trained to not just write code, but write it in a way that a user finds helpful (e.g., including comments, not using overly obscure tricks, etc.). This human-in-the-loop training aligns the AI’s behavior more with user expectations, making the tools more practically useful.

In sum, the technical foundation of AI-driven software engineering tools is a blend of cutting-edge machine learning and classic programming analysis. Large language models provide the fluent generation and completion abilities by learning from big data. Reinforcement learning and search provide problem-solving power to explore solutions beyond what’s in the data. Graph and static analysis techniques integrate domain knowledge of code structure, enhanced with ML to handle complexity. And around all this, systems engineering glue integrates these AI components into developer workflows (editors, version control, CI pipelines). The result feels to the user like a helpful colleague inside the computer – but under the covers it’s a synergy of algorithms relentlessly optimizing different aspects of the software development process.
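To make the “predict the next token from patterns in the data” idea concrete, here is a deliberately tiny sketch. It is not a neural network: it uses simple bigram counts as a stand-in for a Transformer, and the three training “programs” are hypothetical. The point is only to show the shape of the loop: learn statistics from code, then repeatedly emit the most likely next token.

```python
from collections import Counter, defaultdict


def train_bigram(token_sequences):
    """Count, for each token, which tokens followed it in the training data."""
    counts = defaultdict(Counter)
    for tokens in token_sequences:
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def complete(counts, prompt, max_new_tokens=6):
    """Greedily extend a non-empty prompt with the most frequent next token."""
    out = list(prompt)
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # token never seen with a successor: nothing to predict
        out.append(followers.most_common(1)[0][0])
    return out


# Hypothetical, pre-tokenized training data.
TRAINING = [
    ["for", "i", "in", "range", "(", "n", ")", ":"],
    ["for", "j", "in", "range", "(", "n", ")", ":"],
    ["for", "x", "in", "items", ":"],
]

model = train_bigram(TRAINING)
completion = complete(model, ["for", "i"])
print(" ".join(completion))
```

Because `range` followed `in` more often than `items` did in the training data, the completion favors `range ( n ) :`. This also shows the weakness noted earlier: the model reproduces what is statistically common, whether or not it is what the developer intended.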

Ethical, Security, and Trust Challenges in AI-Driven Development

While the benefits of AI in software development are clear, this paradigm shift also introduces a host of ethical, security, and trust challenges that organizations and practitioners must address. Adopting AI-driven tools is not without risk. Below, we outline some of the major concerns and challenges:

- Code Quality and Reliability of AI-Generated Code: One primary concern is that AI-generated code may look correct at a glance but harbor subtle bugs or inefficiencies. AI models do not truly understand the intent behind code – they might generate something that passes basic tests but fails in edge cases. In practice, it has been observed that AI-generated code can introduce errors and bugs, requiring developers to perform thorough reviews to ensure quality. For example, an AI might use an outdated method or a deprecated API because it was prevalent in its training data. Or it might produce logically incorrect code if the problem is tricky, even though the syntax is fine. This inconsistency means developers must maintain critical oversight. Blindly trusting AI output is dangerous – the AI might be 90% correct, but the remaining 10% could be critical. Moreover, AI often produces code that is overly verbose or complex (since it may include all sorts of error-checks or generic patterns to be “safe”), which can complicate maintenance. Thus, teams need to set guidelines on reviewing AI contributions: for critical modules, AI-suggested code may undergo extra scrutiny or testing. The onus is on developers to not become over-reliant or complacent, assuming the AI’s first answer is always right.

- Security Vulnerabilities and AI: Security is a significant challenge. AI models trained on public code might not be up-to-date on the latest secure coding practices or vulnerabilities. If much training data precedes, say, 2018, the model may not “know” that a certain function is now considered unsafe or that a new API provides better security. AI-generated code may omit crucial security steps or use vulnerable idioms, inadvertently introducing weaknesses. For instance, an AI might suggest constructing an SQL query via string concatenation (leading to SQL injection risk) because it saw similar code in training, unless it has context to know about prepared statements. OpenAI’s early Codex experiments did show it could produce insecure code if not carefully prompted. Additionally, because the AI doesn’t truly grasp the implications of data handling, it might not sanitize inputs or enforce access controls unless explicitly instructed. There’s also concern that attackers could use the same AI tools to find exploits or write malware (though that’s a separate but related security worry). To mitigate these issues, security review cannot be skipped – AI suggestions should be run through static analysis or security scanners just like human code, and developers should be trained to spot common vulnerabilities even in AI-produced code. On the plus side, AI tools themselves are being developed to check for security issues (like GitHub’s code scanning with ML). But at least in the early adoption phase, security professionals advise caution: assume AI-written code is as error-prone as novice developer code and treat it with the same diligence.

- Intellectual Property (IP) and Licensing Concerns: AI models trained on open source code potentially raise legal and ethical issues about code use and attribution. There have been debates and even legal inquiries into whether tools like Copilot may occasionally regurgitate sections of licensed code verbatim without attribution. If an AI suggests a snippet that happens to come directly from, say, a GPL-licensed repository, and a developer unknowingly includes it in a proprietary codebase, this could violate licensing terms. The AI doesn’t have a built-in notion of software licenses. It’s trained on “open” code, but not all open code is free of obligations. There is a risk of code plagiarism or license infringement through AI. Companies are addressing this: GitHub Copilot, for example, introduced filters to detect and avoid output that matches longer sequences from training data. Still, organizations using AI code generators often establish policies like: “All AI-generated code must be treated as third-party code until reviewed.” They may require developers to double-check any non-trivial AI output against known open-source code to ensure they’re not copying something wholesale. Another aspect is ownership of AI-created code. If an AI generates a substantial piece of code, who owns it? The developer who prompted it? The tool provider? IP law is still catching up: the answer varies by jurisdiction, and it is not settled whether purely AI-generated output is protected by copyright at all. Companies might also have clauses in their terms of service about code generated by their AI. Caution and clarity are needed so that adopting AI doesn’t inadvertently entangle a project in legal uncertainty.

- Bias and Fairness: AI systems can inherit biases present in their training data. In the context of code, this might mean perpetuating certain stylistic biases or more concerning issues. For instance, if the training data contains non-inclusive patterns (say, examples that consistently use names from one demographic, or only male pronouns in documentation comments), an AI assistant could propagate them in the code and comments it generates. There’s also the concept of algorithmic bias in requirements or user data analysis – if an AI is used to predict user needs or analyze behavior, it might reflect societal biases (this is more on the product side but still a concern in AI-driven software outcomes). Another fairness concern is that if AI assists some developers and not others (due to access or cost), it could create disparities in team performance – though this is more of an adoption issue.

- Transparency and Explainability: AI decisions in code suggestions are often a “black box.” When an AI recommends a particular algorithm or approach, it’s not easy to ask it why it chose that approach (unless using an interactive system like ChatGPT which might provide some reasoning on request). This lack of explainability can be problematic. In critical domains (healthcare software, aerospace), one needs to justify design decisions for safety and compliance. If an AI suggested a piece of code, how do we trace the rationale? Lack of transparency can affect trust – developers might be wary of integrating code they don’t fully understand. It also complicates debugging: if an issue arises in AI-written code, one might not immediately grasp the logic as one would with code they wrote themselves. Addressing this may involve tools that accompany code suggestions with explanations or references (e.g., “I used this approach because it’s a known pattern for X, see reference”). Some AI coding assistants are adding features to cite the sources of their suggestions (like pointing to a Stack Overflow answer or documentation that inspired the code). Nevertheless, explainability remains an open challenge.

- Over-Reliance and Skill Degradation: A more human-factors issue is the risk that developers become overly reliant on AI and let their own skills atrophy. If one constantly uses AI to do the heavy lifting, one might not develop the deep understanding that comes from implementing things oneself. New developers might lean on AI for every answer and thus not learn how to solve problems from first principles. Seasoned developers might skip staying up-to-date, trusting AI to know what’s current (which might not always be true, depending on the model’s knowledge cutoff). This challenge suggests that developers should still practice coding without AI assistance regularly to keep their skills sharp, and treat AI as a tool, not a crutch. There is also the scenario of automation complacency – similar to pilots relying on autopilot – developers might become less vigilant, potentially allowing errors to slip by. To counter this, teams sometimes enforce practices like code reading sessions or require developers to reimplement critical sections without AI to verify correctness.

- Data Privacy: Many AI coding assistants operate as cloud services – meaning the code you’re writing (which might be proprietary or sensitive) is sent to the AI provider’s servers for processing. This raises confidentiality and privacy issues. Some companies (especially in finance, defense, etc.) have been hesitant to adopt such tools, or have banned them outright, for fear of leaking code to external servers. While reputable providers claim they don’t store or use your prompted code beyond providing the service, it remains a trust issue. In response, solutions like on-premises AI models or local deployment of AI assistants (as offered by some vendors, or via open-source models one can run internally) are emerging for those who require it. Privacy of any user data that the AI sees is also a concern – for example, if AI is used to analyze logs or user feedback that contain personal data, regulations like GDPR require careful handling. Thus companies must ensure that using an AI service doesn’t inadvertently violate data protection policies. Vendor contracts and technical safeguards (like encryption in transit, not retaining data, etc.) need to be scrutinized.

- Ethical Use and Responsibility: There are broader ethical questions: If an AI is used to make decisions (like prioritizing features or allocating resources), is it fair, and who is accountable? In the software development context, one ethical aspect is job impact – the fear that AI could displace jobs. While so far AI seems to augment rather than replace developers, organizations have an ethical responsibility to retrain and redeploy staff rather than treat AI purely as a way to cut headcount. There’s also the issue of responsibility for errors: if AI introduces a bug that causes a serious failure, who is accountable? Legally it will be the company and likely the engineers, not the AI tool vendor (most have disclaimers). So engineers must understand that using AI doesn’t absolve them of responsibility for the code – they should treat AI suggestions as if they were written by a junior colleague or found on Stack Overflow: useful, but to be double-checked.

- Organizational and Cultural Challenges: Integrating AI tools can face human resistance. Developers might worry about their role or feel uneasy about an AI reviewing their code or suggesting better solutions. There can be a cultural barrier to trusting AI output (sometimes for good reason). Change management is needed – organizations may need to educate teams on the value of AI tools, address concerns, and set a culture where using AI is seen as collaborative rather than cheating or threatening. Another challenge is ensuring that AI tools align with company-specific standards or style. AI might suggest something that doesn’t mesh with the project’s architectural vision. Hence, some companies work on customizing models with their internal codebase so the AI aligns with their conventions (which is possible with fine-tuning or providing extensive project context to the model).
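The SQL injection risk mentioned under security vulnerabilities above is easy to demonstrate. The sketch below uses Python’s standard `sqlite3` module with an in-memory database; the table, rows, and function names are hypothetical. It contrasts the string-concatenation pattern an assistant might reproduce from its training data with the parameterized form a reviewer should insist on.

```python
import sqlite3

# Hypothetical in-memory database with two users.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)


def find_user_unsafe(name: str):
    """Vulnerable: user input is spliced directly into the SQL text."""
    query = "SELECT name, secret FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str):
    """Parameterized: the driver passes the input as data, never as SQL."""
    return conn.execute(
        "SELECT name, secret FROM users WHERE name = ?", (name,)
    ).fetchall()


# Classic injection payload: turns the WHERE clause into a tautology.
malicious = "nobody' OR '1'='1"

leaked = find_user_unsafe(malicious)   # matches every row in the table
blocked = find_user_safe(malicious)    # matches no row: no user has that name

print(len(leaked), len(blocked))
```

Both functions look equally plausible at a glance and behave identically on ordinary input such as `"alice"`, which is exactly why this class of mistake slips through when AI suggestions are accepted without a security review.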

To address these challenges, several best practices are emerging:

- Human-in-the-Loop Processes: Always include a human review stage for AI outputs, especially for critical code. For example, treat AI-written code as one would treat code written by a junior developer – mandatory code review and testing before acceptance.

- AI Coding Guidelines: Companies are drafting guidelines for when and how to use AI. For instance, “Do not use AI suggestions for cryptography or security-critical code without security team approval” or “Prefer AI for boilerplate, but not for complex domain logic unless reviewed by domain expert.”

- Tooling to Catch AI Mistakes: Use linters, static analyzers, and tests liberally on AI-generated code. If possible, use multiple AIs – for example, have one AI generate code and another audit it (some have suggested this “AI pair programming” concept where two different models cross-verify each other).

- Education and Training: Train developers in secure coding and debugging with AI in the loop. Make sure they know the pitfalls (like the training cutoff issue, or that the AI might lack context). Also, train them on IP issues – e.g., if an AI output looks too specific or complex beyond the developer’s knowledge, treat it with suspicion and investigate its origin.

- Policy on Data Usage: If using cloud-based AI, ensure compliance by anonymizing code where feasible (which is difficult for source code) or by choosing providers that offer private instances. Alternatively, opt for local/offline AI models for sensitive projects, even if they are slightly less powerful, to guarantee no code leakage.

- Continuous Monitoring of AI suggestions: Gather metrics on AI usage – which suggestions were accepted, which led to bugs later. This can identify patterns of AI failures, which can be fed back to either the vendor or used to inform the team about what not to trust. For instance, if data shows AI-suggested multi-threading code often has race conditions, one might disable AI help for concurrency-related code.
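The monitoring practice in the last item can be sketched as a small aggregation over logged suggestion events. Everything here is an assumption for illustration: the event fields, the category labels, and the 25% bug-rate threshold are hypothetical, and a real team would pull such data from its editor telemetry and issue tracker.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SuggestionEvent:
    category: str      # e.g. "concurrency", "boilerplate" (hypothetical labels)
    accepted: bool     # did the developer accept the suggestion?
    caused_bug: bool   # was a later defect traced to it? (only counted if accepted)


def summarize(events):
    """Compute acceptance rate and bug rate per suggestion category."""
    stats = defaultdict(lambda: {"shown": 0, "accepted": 0, "bugs": 0})
    for e in events:
        s = stats[e.category]
        s["shown"] += 1
        if e.accepted:
            s["accepted"] += 1
            if e.caused_bug:
                s["bugs"] += 1
    return {
        cat: {
            "acceptance_rate": s["accepted"] / s["shown"],
            # Bug rate is measured over accepted suggestions only.
            "bug_rate": s["bugs"] / s["accepted"] if s["accepted"] else 0.0,
        }
        for cat, s in stats.items()
    }


def categories_to_restrict(report, max_bug_rate=0.25):
    """List categories whose bug rate exceeds the (hypothetical) threshold."""
    return sorted(c for c, r in report.items() if r["bug_rate"] > max_bug_rate)


# Hypothetical log of eight suggestion events.
EVENTS = [
    SuggestionEvent("concurrency", True, True),
    SuggestionEvent("concurrency", True, False),
    SuggestionEvent("concurrency", False, False),
    SuggestionEvent("concurrency", False, False),
    SuggestionEvent("boilerplate", True, False),
    SuggestionEvent("boilerplate", True, False),
    SuggestionEvent("boilerplate", True, False),
    SuggestionEvent("boilerplate", True, False),
]

report = summarize(EVENTS)
flagged = categories_to_restrict(report)
print(report)
print(flagged)
```

With this sample data, concurrency suggestions are accepted half the time and half of the accepted ones lead to a bug, so that category is flagged, while boilerplate suggestions are accepted every time with no defects. A team could use such a report to decide where AI help should be disabled or subjected to extra review.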

On the compliance side, regulatory bodies are beginning to pay attention. The EU’s proposed AI Act, for example, might categorize AI coding tools in certain risk categories if they significantly influence software behavior in areas like safety or human rights. Organizations might soon need to document their use of AI in software similar to how they audit open-source usage.

In conclusion, “trust but verify” is the watchword. AI-driven software development offers great rewards but must be approached with a clear-eyed understanding of the risks. By instituting proper checks and balances – much like any engineering safety protocol – teams can enjoy the productivity gains without falling prey to the pitfalls. Embracing AI in development is as much about responsible use as it is about cutting-edge technology. Those who navigate these challenges successfully will set the standards for ethical and safe AI-enhanced engineering practices.

Future Trends and the Potential Future of Autonomous Software Engineering

Looking ahead, the trajectory of AI in software engineering points towards even deeper integration and more advanced capabilities. While it’s difficult to predict exactly how things will unfold, we can identify several trends and research directions that are likely to shape the future of autonomous or semi-autonomous software development:

- More Autonomous Coding Agents: Today’s AI assistants are mostly reactive – they respond to prompts or specific tasks. A future trend is the development of autonomous coding agents that can take high-level objectives and iteratively work towards them with minimal human intervention. Early experiments, such as the open-source AutoGPT project (built on OpenAI’s models) or Meta’s “Developer” persona in their research, hint at AI that could potentially create a simple app from scratch by breaking down tasks, writing code, testing it, and adjusting. While current results are rudimentary, the idea is that an AI could act as a project developer: given a description like “Build a to-do list web app with user login,” it could scaffold the project, set up frameworks, generate code for components, run and test the application, and even deploy a working version – asking for help only when needed. Achieving this reliably will require advances in multi-step planning (a known challenge for AI), improved error handling, and the ability to learn and adapt during the development process (not just generate static code). We might see specialized agents for specific domains, like an “AI Mobile Developer” that knows Android/iOS patterns in depth and can assemble a basic mobile app itself. Such agents would essentially automate the role of a junior developer or integrator. However, human developers would still guide them: setting the requirements, handling the creative design decisions, and reviewing the final output. Over time, as trust builds, these agents might handle more grunt work autonomously.

- End-to-End AI Integration in the SDLC: We will likely see AI support not just in coding and testing, but at every phase of the software development lifecycle in a cohesive way. Requirements engineering could benefit from AI that translates stakeholder meetings or user stories into formal requirements or diagrams. For example, imagine feeding a set of user interviews to an AI that then produces a draft requirements document or user story map. During design, AI could suggest architectures based on non-functional requirements (scalability, security), perhaps by referencing known architectures used by successful systems with similar needs. In implementation, as we have seen, AI aids coding. During continuous integration, AI might intelligently decide which tests to run (based on an analysis of the code changes), or even generate additional tests on the fly if it suspects a certain area is affected. For deployments, AI could optimize rollout strategies (for example, using predictive analytics to schedule deployments automatically during low-traffic periods). In maintenance, AI will likely help with legacy code comprehension – an AI could read an old codebase and produce summary documentation, or even refactor it to modernize it (some experimental tools already offer automated refactoring suggestions). Predictive maintenance is another area: by analyzing code commit history and issue trackers, AI might predict components of the system that are “hot spots” likely to fail or need refactoring, prompting proactive action. The future might bring a fully AI-augmented SDLC where the AI isn’t a separate tool at each stage, but a continuous presence – like a project guardian angel that knows the project’s context from inception to production and offers pertinent help throughout.

- Domain-Specific AI Dev Tools: We can expect AI tools to become more domain-aware. Currently, general models might not know, for instance, the intricacies of financial compliance rules or medical device software standards. Future AI will be specialized or fine-tuned for domains, incorporating domain knowledge and terminology. For example, in game development, an AI could be tuned with knowledge of game engines, physics, and design patterns common in game code; it could then not only write code but also propose improvements to game logic or performance optimizations specific to game loops. In web development, an AI might deeply know React or Angular frameworks and be able to enforce best practices of those automatically. There’s active work on AI-assisted database query optimization – essentially an AI DBA that can redesign queries or schema for efficiency, which is very domain-specific. Domain specialization will make AI suggestions more accurate and contextually relevant, reducing the “silly mistakes” an out-of-the-box AI might make due to lack of context.

- Collaboration Among AI Agents and Humans: Future development might involve a mixed team of humans and AI agents. We might see scenarios where multiple AI agents with different roles collaborate: e.g., one agent generates code, another reviews it for errors (like a built-in pair programmer), another focuses on security aspects, and a human oversees the final merge. This multi-agent approach, if orchestrated well, could parallelize and double-check work efficiently. Research projects are exploring how different AI agents might pass tasks among themselves (an analogy is an AI “scrum team” where each agent specializes in part of the task backlog). The human developers in such scenarios act more like project managers or lead engineers, guiding the AI team, resolving any impasses, and handling the complex tasks the AI cannot. This vision is speculative but not implausible as AI becomes more capable.

- Natural Language and High-Level Intent Programming: We may move closer to the ideal of telling the computer what we want, and having it figure out how to do it. Today you can say “Write me a function that does X” and get a piece of code. Tomorrow, you might say “Build me a system that does X” and get a first-pass implementation. As AI’s ability to reason improves, the gap between intent and implementation shrinks. This is akin to the long-standing dream of very high-level programming languages. AI might become the ultimate compiler for human intentions – you describe features or behaviors in near-natural language, and the AI synthesizes the code to match. We see glimmers of this in tools like ChatGPT plugins, where you can ask for a multi-step operation (like “take the data from this API, filter it, and plot a graph”) and the AI writes the glue code to do so. One can imagine future IDEs where non-programmer domain experts could effectively “program” by conversing with the AI in their own domain language, and the AI translates that into working software. This would broaden who can create software (a democratization often associated with the “no-code/low-code” movement, turbocharged with AI). It raises questions of quality and maintainability, but for certain classes of applications (simpler or standard ones), it could work quite well.

- Improved AI Reasoning and Error Understanding: Future AI models are likely to incorporate more logical reasoning, possibly hybridizing with symbolic AI. This means they won’t just generate code by pattern, but also internally check constraints. We already see early versions of this: some experimental models can detect that their output is likely to throw a compilation error and correct it before showing it to the user. DeepMind’s AlphaCode, for instance, ran generated code against tests internally. Future coding AIs might routinely run a quick static analysis or simulate execution in a sandbox to ensure their suggestion actually works. The result would be far more reliable outputs. Also, AI might become better at understanding error messages and debugging. If a compiler error arises or a test fails, the AI could diagnose and fix it, essentially learning from its mistakes on the fly (something humans do in debugging). This ties into reinforcement learning and self-feedback loops.

- Ethical AI Development Practices and Standards: We can anticipate the emergence of standards and certifications for AI-developed software. For example, regulatory bodies might require that safety-critical code (like in medical devices or automotive software) that was AI-assisted must pass certain verification steps, or that the AI tool used is certified for that domain. There may also be industry standards for documenting the involvement of AI in a codebase (for traceability). We might even see AI coding licenses – analogous to open source licenses – that dictate how AI-generated code can be used or attributed. The developer community might also develop norms, such as including comments when AI is used.

- Continuous Learning AI integrated with DevOps (MLOps meets DevOps): Future AI coding systems might continuously improve by learning from the specific codebase they’re deployed in. Imagine an AI assistant that observes all the code commits and feedback in a project and gradually adapts to the team’s style and preferences. This is a bit tricky due to potential overfitting or leaking sensitive code outside, but technically feasible with on-premise models. Such an AI would become an increasingly personalized assistant, knowing the code history (“the last time we tried to implement something like this, it caused a bug, so I’ll warn the developer now”). This merges the idea of DevOps (which is about continuous integration and delivery) with machine learning (continuous learning from new data).

- AI in Software Maintenance and Evolution: A significant portion of software engineering is maintenance of legacy systems. We will see AI taking a stronger role here – from automated refactoring to migrating code to new languages. For instance, COBOL or older Java applications that need to be modernized could be largely converted by AI, with humans doing final adjustments. AI could also monitor running systems and autonomously suggest improvements in performance or updates of dependencies when new versions come out (maybe even automatically testing and upgrading a dependency if it’s safe).

- Quantum Computing and AI in Software Engineering: Looking further out, if quantum computing becomes practical for certain computations, AI might help in developing quantum software as well. Quantum programming is notoriously difficult; an AI assistant might help translate classical algorithms into quantum counterparts or optimize quantum circuits – a niche but important area for the future of computing. Additionally, as quantum computers might solve certain problems much faster, they could be used to train or run AI models that aid development, though that’s speculative at this point.
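
To make the self-checking idea from the reasoning trend above more concrete, here is a minimal Python sketch of the filter step: run each candidate against a few example tests and keep the first one that passes. It is an illustration under stated assumptions, not a description of how AlphaCode or any product is implemented. The names `passes_all` and `first_passing`, the test-case shape, and the hand-written candidates are all hypothetical, and `exec()` is used only for brevity; it is not a real sandbox.

```python
from typing import Iterable, Optional

# Hypothetical test-case shape: (positional arguments, expected return value).
TestCase = tuple[tuple, object]


def passes_all(source: str, func_name: str, tests: list[TestCase]) -> bool:
    """Return True if `source` defines `func_name` and it passes every test."""
    namespace: dict = {}
    try:
        # NOTE: exec() is NOT a security sandbox. A real tool would run
        # untrusted code in an isolated process or container with limits.
        exec(source, namespace)
        func = namespace[func_name]
        return all(func(*args) == expected for args, expected in tests)
    except Exception:
        # Syntax errors, missing names, and runtime errors all count as failure.
        return False


def first_passing(candidates: Iterable[str], func_name: str,
                  tests: list[TestCase]) -> Optional[str]:
    """Filter step: return the first candidate that passes all tests, or None."""
    for source in candidates:
        if passes_all(source, func_name, tests):
            return source
    return None


# Stand-ins for model outputs (hand-written here, not produced by any model).
candidates = [
    "def add(a, b): return a - b",   # wrong logic
    "def add(a, b) return a + b",    # syntax error
    "def add(a, b): return a + b",   # correct
]
tests = [((1, 2), 3), ((0, 0), 0), ((-1, 1), 0)]
chosen = first_passing(candidates, "add", tests)
```

In this sketch, the wrong and malformed candidates are discarded silently and only the third is surfaced. A repair loop of the kind described above would go one step further and feed the failure information back to the model instead of simply moving on to the next candidate.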

Throughout all these trends, one thing is clear: human engineers will remain crucial, but their focus will shift more and more to high-level supervision, creative design, complex problem solving, and ensuring ethical and safe use of technology. Routine programming might eventually be almost fully automated, just as assembly coding gave way to high-level programming, which is now giving way to AI-assisted programming. This doesn’t reduce the importance of understanding computing – in fact, it arguably raises it: future developers need to have excellent abstraction skills, domain knowledge, and oversight capabilities. They’ll be like conductors of an orchestra of AI tools.

As a forward-looking analysis, it’s also worth mentioning the unknown unknowns: AI research is advancing quickly, and there may be breakthroughs (for example, AI systems that can truly understand software requirements or prove complex program properties) that radically change software engineering again. We might reach a point where for certain well-defined tasks, no human coding is needed at all – the AI handles it end-to-end. On the other hand, we might also hit limits of what AI can do (for instance, truly creative software architecture might remain a human forte for a long time, as it requires intuition, experience, and sometimes thinking outside the patterns present in training data).

In terms of the future of autonomous software engineering, a compelling vision is one of collaboration and co-evolution of human and AI capabilities. As AI gets better at coding, humans can push to higher levels of thinking – focusing on product vision, user experience, and solving new problems – which in turn gives AI new targets to reach. The endgame is not AI replacing developers, but raising the level of abstraction at which developers operate. In the earliest days of computing, programming meant flipping switches and writing machine code; then we invented higher-level languages, and then libraries and frameworks – each step allowing us to build more complex systems more easily. AI might be the next step in that evolution: the tool that handles the boilerplate and the known, enabling developers to focus on the novel and the conceptual.

Already, the collaboration between humans and AI is producing results neither could achieve alone – such as AlphaDev’s algorithm discovery or Copilot’s significant productivity boosts. This collaborative trend will likely intensify. The most successful teams of the future might be those that figure out the optimal division of labor between creative human intelligence and tireless machine intelligence.

To conclude, the future of AI-driven software development holds tremendous promise. We can expect more automation, smarter tools, and possibly a redefinition of what “coding” means. Yet, the essence of software engineering – solving problems for people, with constraints and creativity – will remain. AI will simply change how we solve those problems. It’s an exciting time: we stand at a point analogous to the early days of high-level programming languages or the birth of the internet – a revolutionary technology is reshaping our discipline. As we move forward, it will be crucial to continue emphasizing ethical considerations, education, and human-centric design of these AI tools. By doing so, we ensure that the future of autonomous software engineering is one where human and AI work hand-in-hand to achieve feats of development previously unattainable, unlocking new levels of innovation in software that will benefit society at large.

In the words of one industry observer: “The future of programming lies in the evolving collaboration between humans and AI, working together to design and engineer complex, dynamic systems.” That future is now coming into view, and it will be our job as software engineers to guide it responsibly and creatively to its full potential.

References

- OpenAI, “Introducing GitHub Copilot.” https://openai.com/blog/github-copilot

- Microsoft Research, “Evaluating GitHub Copilot’s Impact on Developer Productivity.” https://www.microsoft.com/en-us/research/publication/evaluating-copilot

- GitHub, “The State of AI in Software Development.” https://github.blog/2023-06-22-the-state-of-ai-in-software-development

- DeepMind, “AlphaCode: Competitive Programming with AI.” https://www.deepmind.com/blog/competitive-programming-with-alphacode

- DeepMind, “AlphaDev Discovers Faster Sorting Algorithms Using AI.” https://www.deepmind.com/blog/discovering-faster-algorithms-with-alphadev

- Amazon Web Services, “CodeWhisperer: AI Coding Companion.”