AI-Driven 3D Immersive Experience Networks: Powering the Next Digital Frontier

In the coming years, advances in artificial intelligence (AI), networking, and 3D graphics will combine to transform how we work, learn, and play. We are on the cusp of an era of AI-driven immersive networks, where virtual and augmented reality (VR/AR) environments are intelligent, adaptive, and hyper-connected. (Here, “XR” refers broadly to Extended Reality technologies, including fully immersive VR and real-time AR.) AI algorithms can analyze user behavior, sensor inputs, and context data in real time to dynamically shape the experience. At the same time, next-generation networks like 5G and beyond provide ultrafast, low-latency links that allow rich 3D content to stream anywhere. This synergy means our digital and physical worlds are merging in unprecedented ways.

These trends are already reflected in market forecasts. For example, the global virtual reality market (covering VR headsets, software, and services) was about $16.3 billion in 2024 and is projected to exceed $123 billion by 2032, which implies compound growth of roughly 29% a year. Likewise, ABI Research projects AR headset shipments surging from about 1.4 million units in 2022 to nearly 47 million by 2027. Growth at this pace underlines how quickly immersive tech is entering the mainstream. Businesses are taking note: gaming and media firms, retailers, educators, and manufacturers all see AI-driven XR as a strategic frontier. If these forecasts hold, immersive networks could become as ubiquitous as smartphones; the open question is how quickly companies will adapt.

AI-Powered Immersive Experiences

At the heart of these future worlds is AI – the “brain” that makes immersive experiences come alive. In modern games and simulations, AI-driven content generation and behavior allow environments to evolve in real time. For example, non-player characters (NPCs) in games can carry on unscripted conversations and adapt their tactics based on your style of play. As one industry report notes, AI-powered NPCs “are capable of engaging in real-time conversation” using natural language generation, and even generate dynamic responses as the game unfolds. Procedural content generation means the game world itself can change from session to session. In practice, an AI can create new levels, quests, and even music on the fly, so that “no two playthroughs are exactly the same,” significantly boosting replay value. In effect, generative AI turns static 3D scenes into living, responsive worlds.
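The session-to-session variation described above can be illustrated with a minimal sketch. It assumes plain seeded randomness rather than a learned generative model, and `generate_level` and its parameters are hypothetical names chosen for illustration only.

```python
import random


def generate_level(seed, width=8, height=5, wall_chance=0.25):
    """Return a small grid of '#' (wall) and '.' (floor) cells derived from a seed."""
    rng = random.Random(seed)  # private generator, so the seed fully determines the layout
    grid = [
        ["#" if rng.random() < wall_chance else "." for _ in range(width)]
        for _ in range(height)
    ]
    grid[0][0] = "."    # keep the start cell open
    grid[-1][-1] = "."  # keep the exit cell open
    return ["".join(row) for row in grid]


if __name__ == "__main__":
    # A new seed per session gives a new layout; reusing a seed replays the same one.
    for row in generate_level(seed=2024):
        print(row)
```

The same seed always reproduces the same level, which is useful for sharing or debugging a world, while a fresh seed per session yields a different layout. An AI-driven generator would replace the random draw with a model's output, but the seeding pattern is a common starting point.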

These AI techniques extend into all dimensions of an immersive experience. Computer vision and machine learning running on a headset or phone can recognize real objects and overlay contextual information in real time. For instance, an AR app pointed at a factory machine might immediately highlight the overheating component that needs service, while a home-decorator app could allow you to “place” virtual furniture on the floor. AI models can also translate languages instantly: imagine wearing AR glasses that translate foreign signs into your own language as you walk down a street. In fact, SAPizon notes that modern AI now enables real-time object recognition, text translation, and even the creation of highly realistic 3D models on the fly. In essence, AI brings intelligence and understanding to 3D scenes, letting the virtual content react to the real world and the user’s needs.

In the healthcare domain, immersive AI is already yielding tangible benefits. Virtual reality therapy is used today for pain management and rehabilitation by immersing patients in calming or controlled environments. For example, Cedars-Sinai Medical Center treated burn patients with VR headsets, transporting them into snowy mountains or tranquil beaches during wound care; patients reported dramatically reduced pain and anxiety. AI enhances these therapies by tailoring each scenario: the system can adjust difficulty or intensity based on the patient’s biofeedback. If sensors detect stress, the VR environment might become even more soothing; if a stroke patient successfully moves a virtual limb, the next task can adapt to be slightly more challenging. This adaptive feedback loop – powered by machine learning – makes each session highly personalized and effective.
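As a rough illustration of this feedback loop, the sketch below uses a simple hand-written rule in place of a trained model. The names (`SessionState`, `update_session`), the 0-to-1 scales, the stress threshold, and the step size are all assumptions made for the example, not details from any real therapy system.

```python
from dataclasses import dataclass


@dataclass
class SessionState:
    difficulty: float = 0.5  # 0.0 = easiest task, 1.0 = hardest
    calmness: float = 0.5    # 0.0 = neutral scene, 1.0 = most soothing


def clamp(value, low=0.0, high=1.0):
    """Keep a setting inside its allowed range."""
    return max(low, min(high, value))


def update_session(state, stress_level, task_succeeded,
                   stress_threshold=0.7, step=0.1):
    """Return the next session settings from one round of biofeedback.

    stress_level is assumed to be normalized to 0..1 by the sensor pipeline.
    """
    if stress_level > stress_threshold:
        # Patient is stressed: soothe the scene and ease the task.
        return SessionState(clamp(state.difficulty - step),
                            clamp(state.calmness + step))
    if task_succeeded:
        # Patient is coping and succeeded: make the next task slightly harder.
        return SessionState(clamp(state.difficulty + step), state.calmness)
    return state  # otherwise keep the current settings


if __name__ == "__main__":
    state = SessionState()
    state = update_session(state, stress_level=0.2, task_succeeded=True)
    print(state)
```

A learned policy could replace the two `if` rules, but the structure stays the same: read the sensors, compare against the patient's current state, and nudge the environment by a small step.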

Education and training are similarly being redefined by AI-powered XR. Immersive learning experiences – from virtual science labs to flight simulators – let people practice complex tasks in a safe, controlled setting. AI can personalize these lessons on the fly. For example, a virtual reality anatomy class can use AI to track whether a medical student has mastered the circulatory system; if not, the software might branch to extra explanations or quizzes on that topic in real time. Companies and schools report that AI-enhanced VR training boosts retention: trainees in high-risk jobs (like first responders or machinery operators) learn skills more quickly and with fewer mistakes. In fact, one study found that VR training reduced workplace injury risk by about 43%, and dramatically increased knowledge retention (VR-trained workers retained 75% of content vs. 10% for traditional training). By mixing realistic 3D scenarios with AI-driven feedback, organizations prepare learners more effectively at lower cost and risk.
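The branching behavior in the anatomy example can be sketched as follows. The running-average scheme, the class name `MasteryTracker`, the threshold, and the smoothing factor are illustrative assumptions; real tutoring systems may use quite different learner models.

```python
class MasteryTracker:
    """Keeps a running mastery estimate per topic (hypothetical scheme)."""

    def __init__(self, threshold=0.8, smoothing=0.3):
        self.threshold = threshold  # estimate needed before moving on
        self.smoothing = smoothing  # weight given to the newest answer
        self.scores = {}

    def record(self, topic, correct):
        """Blend the newest quiz result into the topic's running estimate."""
        previous = self.scores.get(topic, 0.5)  # unknown topics start undecided
        observed = 1.0 if correct else 0.0
        updated = (1 - self.smoothing) * previous + self.smoothing * observed
        self.scores[topic] = updated
        return updated

    def next_step(self, topic):
        """Branch to review material until the estimate clears the threshold."""
        score = self.scores.get(topic, 0.5)
        return "advance" if score >= self.threshold else "review"


if __name__ == "__main__":
    tracker = MasteryTracker()
    for answer in (True, False, True, True):
        tracker.record("circulatory system", answer)
    print(tracker.next_step("circulatory system"))
```

Weighting recent answers more heavily lets the lesson react quickly when a student starts to struggle, without discarding earlier evidence entirely.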

More broadly, AI enriches every aspect of an immersive environment. In live events and entertainment, AI can adjust visuals and narratives in response to the audience. A virtual concert, for instance, might use AI to analyze crowd reactions (via voice or motion data) and dynamically shift the light show or background content. AI-driven avatars can serve as personal guides or hosts. In retail and marketing, AI-powered AR shopping apps are emerging: these apps recommend outfits or products based on your style profile, and instantly visualize virtual try-ons. As described in a case study of AI-augmented shopping, an AR headset can identify a customer’s preferences and past purchases, then AI curates a list of recommended items; edge servers process this data locally to minimize lag, while high-speed networks stream rich 3D overlays of products in real time. The result is an “immersive shopping experience” that feels intuitive and futuristic.

In summary, AI in immersive networks plays three key roles: Perception (computer vision and sensing), Intelligence (NLP, recommendation, decision-making), and Generation (creating or adapting 3D content). Whenever the virtual environment needs to “know” something or create something — whether recognizing a gesture, simulating an NPC, or generating new scenery — AI is at work behind the scenes. For business decision-makers, this means that the line between AI investments and XR investments is blurring: the two go hand-in-hand.

The Underlying Technology Ecosystem

Delivering AI-driven 3D experiences requires a powerful technology backbone. On the hardware side, graphics processors (GPUs) and AI accelerators are essential. XR devices must render high-resolution, stereoscopic graphics at high, steady frame rates (VR headsets typically target 72 to 90 frames per second or more). Standalone VR headsets (like the Meta Quest Pro) and AR glasses (Microsoft HoloLens, Magic Leap, or emerging lightweight wearables) pack dedicated mobile chips with integrated GPUs and display engines, while PC-tethered headsets (like the Valve Index) rely on the GPU of the attached computer. These processors often include AI hardware as well: NVIDIA’s Tensor Cores on PC GPUs, or dedicated neural processing units on mobile chips, handle real-time vision tasks and language processing. In short, the latest GPUs are the engines that drive both the imagery and the AI inside XR.

3D graphics software is also advancing. Game engines like Unity and Unreal now integrate AI tools for animating characters and optimizing scenes. Path-tracing and ray-tracing allow virtual light to behave more naturally, yielding photorealism in VR. Procedural generation algorithms (often AI-enhanced) can automatically build terrain, architecture, and even crowds, reducing the human cost of content creation. As these tools mature, developers can craft vast immersive worlds with fewer resources.

Networks and connectivity are equally critical. Immersive AR/VR is extremely sensitive to lag and bandwidth. Jittery graphics or delays in sensor data can break the illusion (and even cause motion sickness). This is why 5G and edge computing have become game-changers for XR. 5G cellular networks are designed for multi-gigabit peak throughput and radio-link latencies of a few milliseconds under favorable conditions, making it feasible to stream high-fidelity VR content to a mobile headset. At the same time, Multi-access Edge Computing (MEC) places small servers close to users (for example, at cell sites or in office buildings). In practice, an application might offload heavy tasks like AI inference or 3D rendering to the edge server, then send the results back over 5G. For example, in an AR shopping scenario, the device can capture images of a product, the nearby edge server identifies it and renders enhancements, and then high-definition overlays appear on the user’s headset with minimal delay. This 5G+MEC combination “supercharges” immersive apps by keeping them responsive in real time. Industry analysts note that as 5G, edge, and AI converge, they “create a robust ecosystem” for seamless, personalized AR/VR experiences.
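The offloading decision described above comes down to a latency budget. The sketch below is a simplified illustration: the function name `choose_executor`, the cost breakdown, and the default 20 ms budget (a commonly cited comfort target, used here as an assumption) are not taken from any particular platform.

```python
def choose_executor(local_ms, edge_rtt_ms, edge_inference_ms, codec_ms,
                    budget_ms=20.0):
    """Pick where to run a frame's heavy work: 'device', 'edge', or 'degrade'.

    local_ms          -- estimated time to do the work on the headset itself
    edge_rtt_ms       -- network round trip to the nearby edge server
    edge_inference_ms -- time the edge server needs for inference/rendering
    codec_ms          -- time to encode, transmit-ready, and decode the result
    budget_ms         -- assumed end-to-end latency budget for a comfortable frame
    """
    edge_total_ms = edge_rtt_ms + edge_inference_ms + codec_ms
    candidates = {"device": local_ms, "edge": edge_total_ms}
    best = min(candidates, key=candidates.get)  # fastest option wins
    if candidates[best] > budget_ms:
        # Neither path fits the budget: fall back to a lighter model or lower detail.
        return "degrade"
    return best


if __name__ == "__main__":
    # Slow on-device model, fast nearby edge node: offloading wins.
    print(choose_executor(local_ms=35, edge_rtt_ms=5,
                          edge_inference_ms=6, codec_ms=4))
```

The point of the example is that offloading only helps when the network round trip plus remote processing is shorter than doing the work locally, which is why edge proximity matters as much as raw bandwidth.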

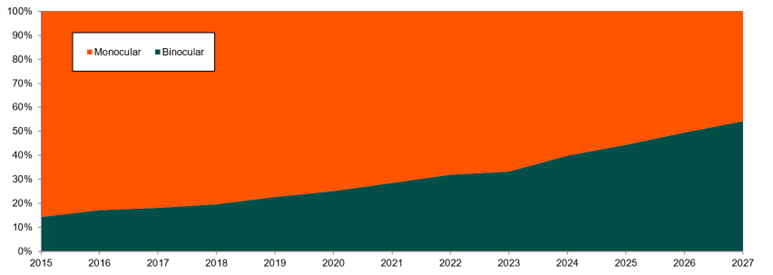

The following charts illustrate how rapidly the infrastructure is scaling.

Figure 1: AR smart glasses shipments by device type (monocular vs binocular), projected 2015–2027 (ABI Research).

The data show that AR hardware is entering the mainstream at breakneck speed. Shipments of AR glasses are forecast to leap from just 1.4 million units in 2022 to about 46.9 million in 2027, a roughly 33-fold increase that works out to shipments doubling every year (about 100% compound annual growth). Notably, the mix of hardware is changing: early AR glasses were mostly monocular devices (a display in front of one eye), but by the mid-2020s binocular models (a display for each eye, which allows stereoscopic depth and richer graphics) will dominate. This transition implies that consumers will soon be using very capable AR devices everywhere, from smartphones to lightweight headsets.
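The annual growth rate quoted above can be checked with a short calculation. The helper name `cagr` is just an illustrative choice; the inputs are the forecast figures cited in the text.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values, as a fraction."""
    return (end_value / start_value) ** (1 / years) - 1


if __name__ == "__main__":
    # 1.4 million units in 2022 growing to 46.9 million units in 2027.
    rate = cagr(1.4, 46.9, years=2027 - 2022)
    print(f"{rate:.0%}")  # prints 102%
```

A rate just above 100% means shipments roughly double each year over the five-year window.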

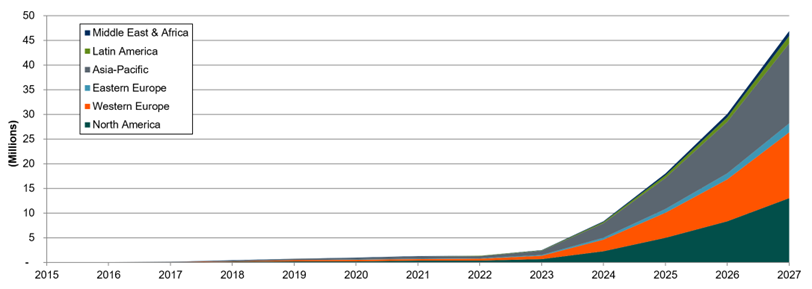

Likewise, the demand for immersive devices is truly global.

Figure 2: Regional breakdown of AR smart glasses shipments (projected 2015–2027).

The chart breaks out shipments by region (North America in dark green, Western Europe orange, Asia-Pacific gray/blue, etc.). Asia-Pacific leads in volume due to strong electronics manufacturing and large consumer markets. North America and Europe also show steep growth curves, reflecting enterprise and consumer adoption. In total, these projections point to tens of millions of new AR/VR devices per year by the late 2020s. Such scale is critical to justify the AI and network investments.

Putting it all together, the tech ecosystem for AI-driven 3D networks includes:

- AR/VR Devices: Headsets, glasses, motion trackers, hand controllers, gloves, and haptic wearables. These are the interface to the user, capturing head and hand movement and displaying rich 3D graphics. Next-gen devices increasingly add eye-tracking and neural-input sensors.

- AI Compute: High-performance GPUs and specialized neural chips that run AI models. These processors handle computer vision (scene understanding), NLP (voice/chat), and generative algorithms in real time. Many tasks can run on-device or on nearby edge servers.

- 3D Engines and Middleware: Software platforms (Unreal, Unity, proprietary engines) that render scenes and simulate physics. Increasingly, they include AI-driven features: for example, an engine might use machine learning to animate virtual crowds or simulate realistic cloth and hair.

- Cloud & Edge Services: Data centers and edge nodes that host content, synchronize multi-user experiences, and perform heavy computation. Edge computing, in particular, keeps latency low. For instance, an edge server could run an AI model to identify a product in your view, then quickly return recommendations.

- Networks: High-bandwidth links (5G, fiber optics, Wi-Fi 6/7) that connect everything. Ultra-low latency is key: a round-trip delay of just 10 ms can be perceptible in VR. As Netscribes emphasizes, combining 5G’s speed, edge proximity, and AI intelligence unlocks smooth and personalized XR experiences.

- Sensors & IoT: Complementary devices that feed data into the experience. Environment cameras, GPS, LIDAR maps, health monitors, and Internet-of-Things sensors can all enrich XR. For example, a smart home could stream its sensor readings into your VR interface to automate tasks. AI then fuses these inputs into the immersive application.

- Software Platforms: Operating environments and standards for XR. These include avatar frameworks, spatial audio systems, cross-platform libraries, and security layers. For example, OpenXR, an open standard from the Khronos Group, aims to make XR software portable across devices. Interoperability will be crucial as multiple vendors deploy compatible virtual content.

In essence, building AI-driven immersive networks means integrating cutting-edge components across hardware and software domains. The result will be a highly responsive, personalized, and spatially rich Internet. As one analysis puts it, combining all of these technologies “addresses core challenges” of AR/VR and unlocks new possibilities; as 5G and AI mature together, their impact on immersive computing will grow exponentially.

Applications Across Industries

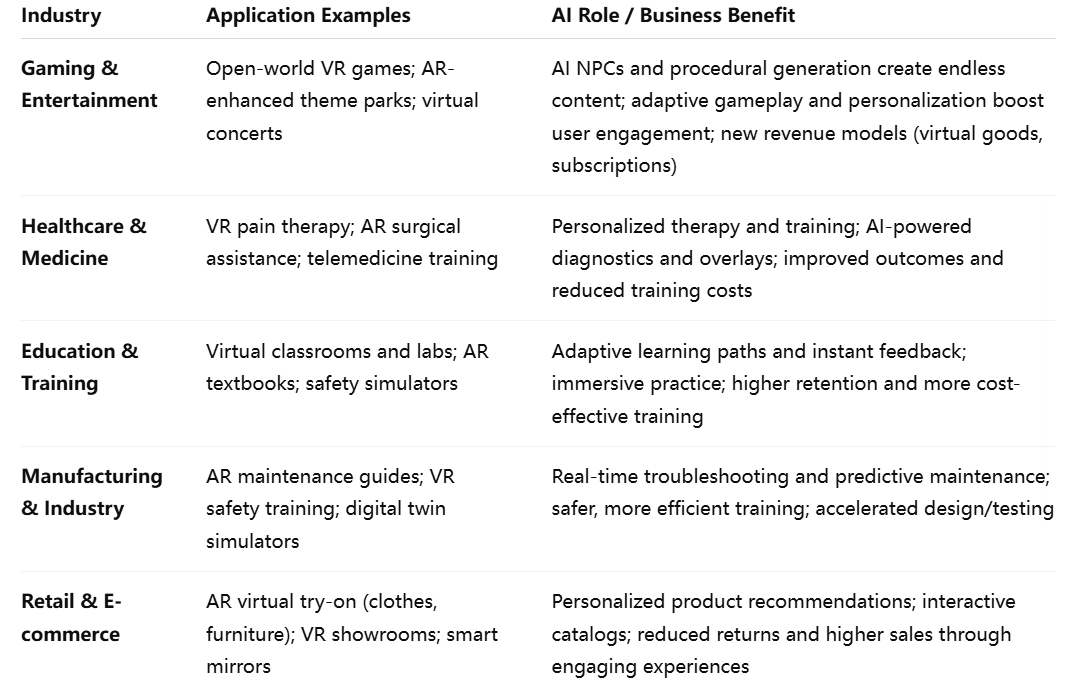

AI-driven 3D experiences are not a niche novelty but a strategic platform used in many sectors. The table below compares key use cases and benefits across industries:

These examples illustrate the versatile value of AI-driven immersion. In each case, the business benefit comes from either engaging users in new ways or increasing operational efficiency (often both). Below are a few elaborations:

- Gaming & Entertainment: This is the most mature market for XR. Games and theme parks use VR/AR to offer compelling experiences. AI adds depth: for example, an action game might use reinforcement learning to tailor enemy behavior to each player’s skill. Media companies are developing virtual concerts and movies where audiences can interact. The result is more personalized entertainment and strong user loyalty. As players spend more time and money in these environments, companies gain new revenue streams (e.g. digital merchandise, ad placements). In short, AI makes entertainment more immersive and monetizable.

- Healthcare: Beyond therapy, hospitals use AR/VR to improve patient care and training. Surgeons can wear AR headsets that overlay critical data (like MRI images) on the patient during operations. AI-driven image recognition highlights anatomy or pathology (for example, outlining a tumor’s boundary on the surgeon’s view). Nurses and doctors also train in VR: the system simulates emergencies with AI-controlled patients, allowing staff to practice procedures without risk. These innovations lead to better outcomes and lower costs. For instance, VR training has been shown to dramatically reduce training-related errors and injuries. The global market for VR in healthcare is rapidly growing; analysts expect it to reach ~$6.9 billion by 2026, reflecting its increasing importance.

- Education: Schools and companies use immersive XR as a teaching tool. In a VR chemistry lab, students can perform experiments safely; an AI tutor in the app might point out mistakes as they happen. In remote education, a virtual classroom can bring together students from anywhere in the world in 3D space, with AI adjusting language or difficulty in real time. Corporate training is another big area: simulations of factory floors or emergency situations let employees gain hands-on practice. AI then tracks progress and optimizes the lesson plan for each learner (for example, re-teaching a concept if needed). Early results show these methods improve learning outcomes and retention compared to traditional lectures.

- Manufacturing & Industry: Factories are deploying AR to aid assembly and maintenance. A technician wearing AR glasses might see step-by-step instructions overlaid on the actual machinery. AI can diagnose issues by analyzing sensor data and then highlight the problem area in AR. One case study in an automotive plant found that using AR-guided assembly cut error rates by about 30%. On the design side, engineers use VR digital twins: a 3D virtual model of a factory line or product. They can simulate changes in VR (with physics and data-driven models) before touching any real hardware. Bernard Marr describes how industries are harnessing “the power of collaborative virtual and digital environments” via digital twins. By merging AI, IoT, and XR, companies accelerate product development and reduce costly trial-and-error.

- Retail & E-commerce: Shopping is becoming an immersive, personalized experience. Customers can use AR on their phone or smart glasses to “try on” clothes, place virtual furniture in their home, or preview a car in their driveway. Retailers also create VR stores where shoppers browse as if in a mall. AI drives personalization here: recommendation engines suggest items based on your style, and computer vision apps can identify what you’re looking at and bring up reviews or discounts. In a futuristic vision of AR shopping, researchers describe a store where AI continuously learns from a customer’s actions – combining edge computing, 5G, and AI – to instantly update product overlays and suggestions in real time. The payoff is significant: studies show retailers that use AR see higher conversion rates and lower return rates, since customers make more informed purchases.

In all these industries, we see a common pattern: AI-driven XR provides either deeper engagement (capturing customer attention, enhancing experience) or greater efficiency (speeding training, reducing errors). Often it does both – for instance, VR therapy (healthcare) engages patients emotionally and yields better clinical outcomes. For business leaders, the takeaway is that immersive AI is not just a tech novelty but a platform for innovation across the board.

Future Trends and Business Implications

Looking ahead, several mega-trends will shape the next wave of AI-immersive networks:

- Persistent Metaverse Worlds: We are gradually moving toward continuous virtual environments (the “metaverse”) where people live, work, and play. In these worlds, users have persistent identities and assets. For businesses, this means entirely new engagement and marketing channels. As one analysis points out, metaverse platforms can give companies “a 360-degree view of their customers” and enable hyper-personalized, interactive marketing. For example, a car manufacturer could host a virtual test-drive event, collecting data on user preferences and behaviors. Those insights (powered by AI analytics) translate into better products and targeted offers. In short, companies that build virtual customer experiences now can gain a competitive edge in brand loyalty and data intelligence.

- Industrial Metaverse & Digital Twins: The metaverse is not just for consumers. An “industrial metaverse” is emerging, where engineers and managers collaborate in shared virtual spaces. A key component is the digital twin: a live 3D model of a physical asset or process. By linking real-time sensor data and AI into a VR simulation, organizations can test changes in the virtual twin before implementing them on the factory floor. For example, architects using AR might stand on a construction site and see a full-scale 3D model of the planned building all around them, adjusting designs in real time. Companies like BMW already use VR twins to accelerate vehicle prototyping. In this future, engineers will rely on AI-augmented virtual worlds to innovate faster and more safely.

- Connectivity Upgrades: Immersive networks will rely on even more powerful connectivity. Telecom companies are developing 6G, promising terabit-per-second speeds and built-in AI orchestration of the network. This could enable devices like ultralight AR glasses that beam content from the cloud with imperceptible lag. Wi-Fi 7 will similarly raise home/office wireless bandwidth. For businesses, this means reviewing network infrastructure: upgrading to 5G (and, once it is available, 6G) and implementing edge data centers (for example, on-premise MEC nodes) will be crucial to support high-fidelity XR applications at scale. Those who plan now can avoid bottlenecks later.

- Advances in XR Hardware: We can also expect hardware leaps. Research into eye-tracking and foveated rendering (rendering full detail only where the eye is looking and less detail in the periphery) will reduce the processing power needed for VR. Haptic technology is improving, allowing users to “feel” virtual objects. Perhaps most intriguingly, brain-computer interfaces (BCI) are being explored as an input method. While still experimental, companies like Neuralink envision VR controllers operated by thought. If realized, this would blur the line between imagination and virtual action. Businesses should monitor these developments, as they could open entirely new interaction modes (and raise new ethical questions).

- Generative AI Content: Just as we have seen AI create text and images, we will soon have AI that generates 3D worlds, characters, and animations. Imagine an AI system that, given a simple sketch or a textual prompt, builds a complete VR environment. Companies like Microsoft and NVIDIA are already exploring tools where users can spawn avatars or scenes with a few words. This democratizes content creation: a small team could generate thousands of virtual product models or training scenarios without manual 3D design. Strategically, businesses should consider how generative AI might lower content costs but also think about intellectual property and quality control in AI-created content.

- Standards and Interoperability: For the metaverse to flourish, standards will be key. The OpenXR API lets one application run across different headsets and runtimes, and groups like the Metaverse Standards Forum are working toward virtual assets and avatars that can move between platforms. For example, a piece of virtual clothing you “buy” in one world might be usable in another. Companies should stay engaged with these ecosystems: investing in interoperability today can prevent having assets locked into a single vendor’s silo tomorrow. This matters for long-term strategy, especially as partnerships and acquisitions in the XR space accelerate.

- Privacy, Security, and Ethics: More data flows means more privacy and security concerns. XR devices collect vast information (biometrics, gaze patterns, spatial location). AI algorithms can infer even sensitive traits. Companies must build trust by securing this data and being transparent. For example, businesses may need to anonymize virtual meeting data or enforce age restrictions in virtual games. Additionally, AI in XR raises fairness issues: personalized experiences should not reinforce biases. Emerging regulations (such as AI governance rules) will eventually apply to immersive AI systems. Forward-thinking firms will establish ethical guidelines now, treating them as integral to their technology strategy.
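Of the trends listed above, foveated rendering is the easiest to make concrete. The sketch below picks a resolution scale for each screen tile from its distance to the tracked gaze point. The function name `shading_rate`, the normalized 0-to-1 screen coordinates, and the two radii are illustrative assumptions rather than values from any shipping headset.

```python
import math


def shading_rate(tile_center, gaze_point, inner_radius=0.15, outer_radius=0.35):
    """Return the fraction of full resolution to use for one screen tile.

    Both points are (x, y) pairs in normalized screen coordinates (0..1).
    """
    distance = math.dist(tile_center, gaze_point)
    if distance <= inner_radius:
        return 1.0   # foveal region: full detail where the eye is looking
    if distance <= outer_radius:
        return 0.5   # near periphery: half resolution
    return 0.25      # far periphery: quarter resolution


if __name__ == "__main__":
    gaze = (0.5, 0.5)  # user is looking at the center of the display
    for tile in [(0.5, 0.5), (0.8, 0.5), (0.0, 0.0)]:
        print(tile, shading_rate(tile, gaze))
```

Because only a small region around the gaze point is rendered at full detail, most tiles in a frame cost a fraction of the usual work, which is where the processing savings come from.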

For business leaders, the rise of AI-driven immersive networks carries both opportunity and responsibility. On one hand, early adopters can reap significant rewards: new revenue models (virtual goods, immersive advertising, subscription worlds) and deeper customer engagement. Pilot programs often reveal surprising gains – for instance, companies replacing some in-person training with VR have cut training time and costs dramatically while improving outcomes. Retailers using AR try-on experiences report higher conversion and lower returns. Manufacturers using digital twins uncover costly issues before they occur. These benefits translate directly to the bottom line.

On the other hand, success requires deliberate planning. Business strategies should include:

- Pilot Projects: Start with focused experiments (e.g. an AR app for field technicians, or a VR showroom prototype). Measure user response and ROI carefully. Early pilots help build internal expertise and create “XR champions” within the organization.

- Ecosystem Partnerships: The XR and AI landscape is vast. Partnering with specialists (cloud providers, AI vendors, hardware makers) can accelerate development. For instance, a hospital might team with a medtech startup for an AR surgery app, rather than build it entirely in-house. Strategic alliances (with universities, startups, or industry consortia) will also help share risk and learn best practices.

- Skill Development: Immersive content creation is a new discipline. Upskill or hire designers, 3D artists, and developers familiar with XR and AI tools. Platforms like Unity and Unreal offer training for developers, and some firms create “XR labs” for employees to experiment. Equally important is training the end-users (e.g. nurses, factory workers) to ensure comfortable adoption.

- Infrastructure Investment: Ensure networks and IT infrastructure are ready. This may involve upgrading Wi-Fi on campus for AR use, deploying local edge servers for low-latency computing, or securing enough 5G bandwidth. A phased rollout (e.g. start with one department or region) can manage costs while scaling up infrastructure gradually.

- Data and Metrics: Define clear success metrics (e.g. reduction in training time, increase in sales, user engagement levels). Collect data from XR apps – which might include novel metrics like time spent in VR or virtual item interactions. Use AI analytics on this data to refine your strategy. Documenting concrete ROI from initial projects will build support for expansion.

In conclusion, AI-driven 3D immersive networks are poised to become a core part of the digital economy. By fusing AI’s intelligence with high-speed networks and rich 3D interfaces, we can create experiences far beyond today’s screen-based apps. The industries that learn to leverage these technologies will gain powerful new capabilities – from ultra-personalized customer engagement to radically more effective training and design. The imperative is clear: start exploring now, build the necessary infrastructure and partnerships, and adapt your business models for a future where the digital and physical increasingly intertwine. Those companies that move first will set the standards for the next era of human-computer interaction and will reap the rewards of a truly immersive world.

References

- NVIDIA Omniverse: A Platform for Connecting and Building Custom 3D Pipelines. https://www.nvidia.com/en-us/omniverse/

- Unity: Real-Time Development Platform for XR Experiences. https://unity.com/

- Unreal Engine: XR Development Tools and AI Integration. https://www.unrealengine.com/en-US/xr

- Meta Reality Labs: Research in AI and Mixed Reality. https://about.fb.com/realitylabs/

- Microsoft HoloLens and Mixed Reality Technology. https://www.microsoft.com/en-us/hololens

- Snap AR: Developer Platform for Augmented Reality. https://ar.snap.com/

- Qualcomm Snapdragon XR Platforms. https://www.qualcomm.com/products/application/xr

- Magic Leap: Enterprise-Focused AR Solutions. https://www.magicleap.com/

- 5G and Edge Computing for XR (Ericsson Industry Insights). https://www.ericsson.com/en/blog/2023/5g-and-edge-computing-are-transforming-extended-reality

- PwC Report: Seeing is Believing – The Value of AR and VR for Business. https://www.pwc.com/gx/en/industries/technology/publications/seeing-is-believing.html