AI Breakthroughs Accelerate ‘Airlock’ Testing for Safe and Trusted AI Healthcare Innovation

Artificial intelligence (AI) is rapidly transforming the landscape of modern healthcare. From advanced diagnostic tools that detect diseases earlier and more accurately to sophisticated decision-support systems that aid clinicians in treatment planning, AI has begun to redefine the possibilities of medical science and patient care. As the capabilities of AI technologies grow, so too does the need for rigorous evaluation mechanisms that can ensure their safe and effective integration into clinical practice.

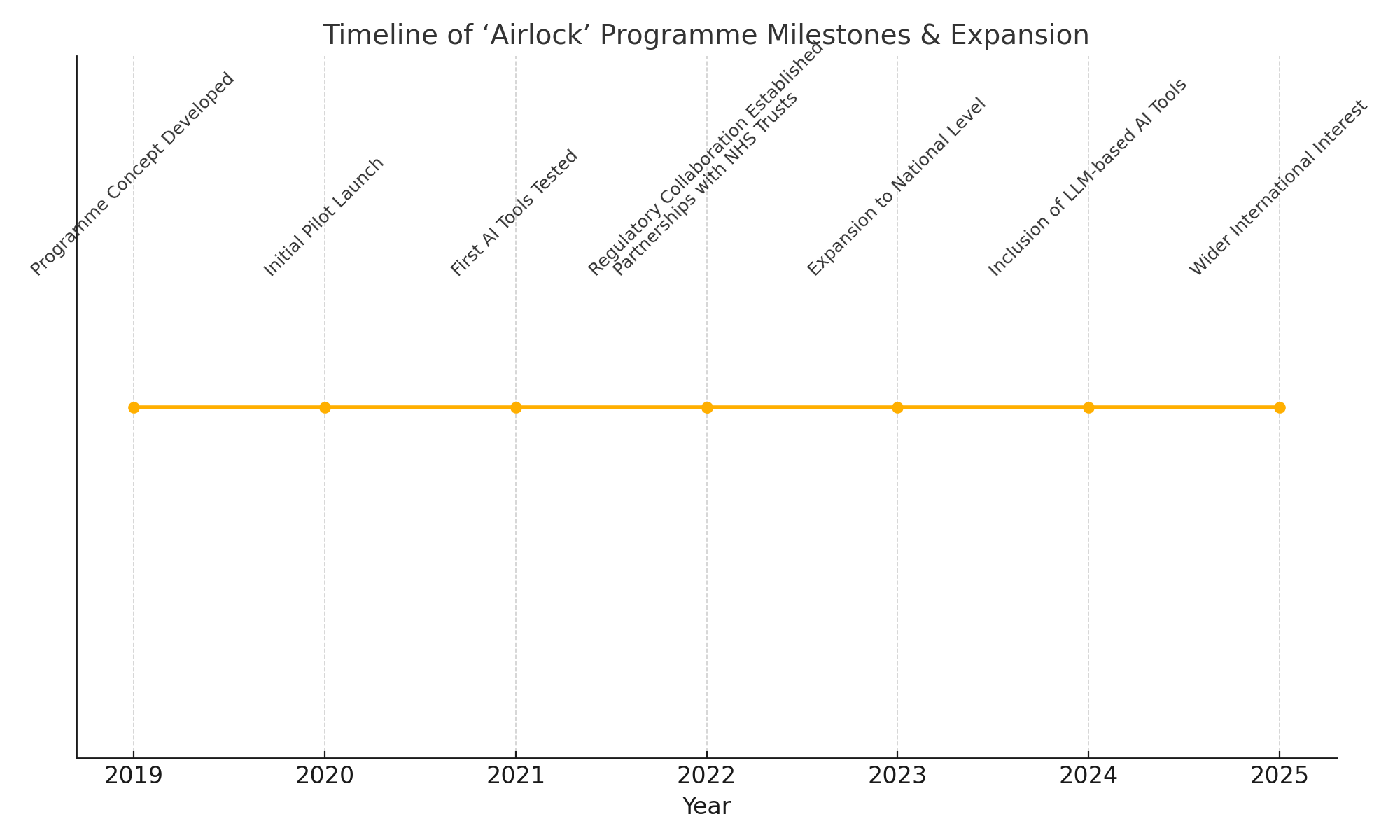

In this context, the 'Airlock' testing programme has emerged as a critical initiative aimed at facilitating the responsible adoption of AI-powered healthcare innovations. Originally launched to provide a structured and controlled environment for testing new AI tools within healthcare settings, the 'Airlock' programme plays a pivotal role in bridging the gap between AI developers, healthcare providers, regulators, and patients. By offering a clear pathway from innovation to implementation, it helps mitigate risks, validate clinical efficacy, and promote transparency.

Recent breakthroughs in AI have accelerated the pace of innovation across the healthcare sector. With advances in large language models (LLMs), multimodal AI systems capable of processing diverse data streams, and generative AI techniques, a new generation of sophisticated tools is poised to enter clinical environments. These developments have sharply increased demand for robust testing frameworks such as ‘Airlock’. In response, the programme itself is undergoing a substantial expansion to accommodate a broader range of AI applications, increase testing capacity, and align with evolving regulatory requirements.

This post explores in depth how AI breakthroughs are driving the expansion of the ‘Airlock’ testing programme, and why this development is crucial for the future of AI-powered healthcare. It begins by examining the origins and significance of the ‘Airlock’ initiative, followed by a review of the latest AI innovations fueling its growth. It then looks at the structure and processes of the expanded programme, highlights early outcomes and real-world impact, and concludes with a discussion of ongoing challenges and future prospects.

By the end of this analysis, readers will gain a comprehensive understanding of how structured testing frameworks like 'Airlock' are helping to foster trust, ensure safety, and unlock the transformative potential of AI in healthcare.

What is the ‘Airlock’ Programme and Why It Matters

As artificial intelligence (AI) technologies continue to advance at a rapid pace, their application within healthcare systems is becoming increasingly sophisticated and consequential. However, the introduction of AI-driven tools into clinical practice carries inherent risks — not only in terms of patient safety and data privacy, but also in the reliability, transparency, and interpretability of the technologies themselves. Against this backdrop, the 'Airlock' testing programme has emerged as an essential mechanism designed to ensure that AI innovations are introduced into healthcare settings in a responsible, evidence-based, and patient-centric manner.

At its core, the ‘Airlock’ programme provides a structured, controlled environment in which new AI technologies can be tested in collaboration with healthcare providers under carefully monitored conditions. The name is intentionally evocative: just as an airlock chamber allows a gradual, safe transition between two environments, the programme serves as a gateway for AI tools moving from research and development into real-world clinical use. This controlled transition ensures that AI systems do not bypass critical stages of validation and scrutiny, safeguarding patient care and maintaining public trust in healthcare institutions.

Origins and Objectives of the Programme

The ‘Airlock’ programme was initially conceived by a coalition of healthcare leaders, AI researchers, and regulatory experts in response to the growing influx of AI-driven technologies seeking entry into clinical environments. Recognising that traditional pathways for validating new medical technologies, such as randomised controlled trials (RCTs), were not always well suited to the iterative and rapidly evolving nature of AI systems, the architects of ‘Airlock’ sought to develop an alternative framework.

From the outset, the programme aimed to achieve several key objectives:

- Facilitate Safe Testing of AI Tools: Provide healthcare organisations with a secure environment to evaluate the performance, usability, and clinical impact of AI solutions before widespread adoption.

- Accelerate Evidence Generation: Support developers in generating high-quality, real-world evidence needed to meet regulatory requirements and achieve clinical endorsement.

- Promote Transparency and Accountability: Foster openness around how AI tools are tested, what data they are trained on, and how they perform across diverse patient populations.

- Support Regulatory Readiness: Assist AI developers in preparing for evolving regulatory frameworks, such as the UK MHRA’s AI assurance processes, the European Union’s AI Act, and other emerging global standards.

- Build Trust in AI-Powered Healthcare: Engage clinicians, patients, and the wider public to cultivate confidence in the safe and effective use of AI technologies.

Through these aims, the 'Airlock' initiative not only addresses the technical and clinical aspects of AI validation but also acknowledges the ethical, legal, and societal dimensions of AI deployment in healthcare.

Stakeholders Involved

One of the defining strengths of the 'Airlock' programme lies in its collaborative, multi-stakeholder approach. The initiative brings together a diverse ecosystem of participants, each playing a critical role in the successful testing and adoption of AI tools:

- Healthcare Providers: NHS trusts, hospital networks, primary care organisations, and specialist clinics participate as test sites, offering clinical expertise and frontline perspectives.

- AI Developers: Technology firms — ranging from large, established players to agile startups — work in partnership with healthcare providers to refine and validate their tools.

- Regulators: National regulatory bodies (such as the MHRA in the UK) and international counterparts provide guidance on compliance, safety, and efficacy requirements.

- Academic Institutions: Universities and research centres contribute to study design, data analysis, and the development of evaluation methodologies.

- Patients and Public Representatives: Patient advocacy groups and lay representatives are engaged to ensure that testing processes remain transparent, ethical, and aligned with public expectations.

This collaborative model helps ensure that the testing of AI innovations is not conducted in isolation but is informed by the perspectives and needs of all relevant stakeholders.

How the Programme Operates

The operational structure of the 'Airlock' programme is deliberately flexible, designed to accommodate a wide range of AI technologies — from diagnostic algorithms and decision-support tools to AI-driven imaging systems and workflow automation platforms. Typically, the process involves the following stages:

- Application and Selection: AI developers submit applications detailing their tool, intended use, training data, and preliminary evidence of performance.

- Screening and Prioritisation: Applications are screened against criteria such as clinical relevance, readiness for testing, alignment with healthcare priorities, and potential impact.

- Testing in Controlled Environments: Selected AI tools are tested within designated healthcare settings under controlled conditions. This phase often involves:

  - Shadow testing (running AI tools alongside human decision-making without influencing care)

  - Usability studies with clinicians

  - Retrospective or prospective data analysis

  - Ongoing safety monitoring and audit

- Evidence Generation: Data from the testing phase is rigorously analysed to assess clinical performance, safety, usability, and impact on patient outcomes.

- Pathway to Deployment: Tools that demonstrate robust evidence may progress to wider deployment, subject to regulatory approval and integration with healthcare systems.

This structured approach enables healthcare providers to thoroughly evaluate AI innovations before they are used to influence patient care, while also helping developers refine their tools to meet real-world clinical needs.

Why the ‘Airlock’ Programme Matters

The ‘Airlock’ programme is especially important in today’s healthcare innovation landscape. AI technologies are developing faster than traditional evaluation frameworks can keep up with. Without mechanisms like ‘Airlock’, there is a real risk that poorly validated AI tools could enter clinical practice, leading to potential harms such as misdiagnosis, biased decision-making, and erosion of patient trust.

Moreover, the programme addresses key challenges associated with the deployment of AI in healthcare:

- Bridging the Evidence Gap: By supporting real-world testing, 'Airlock' helps close the gap between promising lab-based results and practical clinical effectiveness.

- Promoting Responsible Innovation: Developers gain a clearer understanding of clinical requirements, data governance standards, and user needs, promoting more responsible and patient-centred AI design.

- Fostering Regulatory Alignment: By engaging with regulators throughout the testing process, the programme helps ensure that AI innovations are prepared to meet evolving legal and compliance frameworks.

- Building Public Trust: Through transparent and ethical testing processes, the programme supports public confidence in the use of AI technologies in healthcare.

Ultimately, the 'Airlock' initiative represents a vital step toward creating a more agile, effective, and trustworthy pathway for the adoption of AI-driven healthcare innovations. By enabling careful, evidence-based integration of these powerful new tools, the programme helps ensure that AI technologies can truly deliver on their promise to improve patient care, enhance clinical outcomes, and strengthen healthcare systems worldwide.

The Latest AI Breakthroughs Fueling Programme Growth

The healthcare sector stands on the brink of an artificial intelligence (AI) revolution. Over the past several years, AI technologies have demonstrated remarkable progress in various facets of healthcare, ranging from disease diagnosis and treatment planning to clinical workflow optimisation and personalised medicine. These advancements are not merely incremental improvements; rather, they represent profound shifts in what is technologically possible and clinically feasible.

Against this backdrop, demand for robust testing and validation frameworks such as the ‘Airlock’ programme has grown sharply. The programme’s expansion has been driven largely by an influx of new, increasingly complex AI innovations that require structured pathways for safe and effective clinical integration. This section examines the latest AI breakthroughs fueling the growth of the ‘Airlock’ programme and explains why these developments call for greater testing capacity and regulatory readiness.

Advancements in Diagnostic and Imaging AI

One of the most prominent areas of AI innovation in healthcare is diagnostic support and medical imaging. Deep learning techniques, particularly convolutional neural networks (CNNs), have dramatically improved the ability of AI systems to interpret complex visual data from X-rays, MRIs, CT scans, and pathology slides. In published studies, state-of-the-art models have detected subtle anomalies that even experienced radiologists and pathologists can miss.

For example, AI models trained on large, diverse datasets have achieved expert-level performance in identifying early-stage lung cancer from CT scans, grading diabetic retinopathy from retinal images, and detecting breast cancer in mammography studies. In addition, multimodal AI systems are increasingly capable of combining imaging data with electronic health records (EHRs), genomics, and other sources of patient information to generate more comprehensive diagnostic insights.

These breakthroughs have enormous potential to enhance diagnostic accuracy, reduce time to diagnosis, and improve patient outcomes. However, they also present significant challenges in terms of validation. The performance of AI models can vary substantially across different patient populations, clinical environments, and imaging modalities. The 'Airlock' programme offers a critical mechanism for evaluating these technologies in real-world healthcare settings, ensuring that their adoption is based on rigorous evidence of safety and effectiveness.

Rise of Large Language Models in Clinical Practice

Another major driver of the ‘Airlock’ programme’s growth is the emergence of large language models (LLMs) as a key component of AI-powered healthcare. LLMs such as OpenAI’s GPT series, Google’s Med-PaLM, and several healthcare-specific variants have demonstrated impressive capabilities in medical question answering, summarisation of clinical notes, generation of patient-facing materials, and even clinical decision support.

These models leverage massive training corpora and sophisticated natural language processing (NLP) techniques to produce human-like text responses that can assist in a wide range of healthcare tasks. For instance, LLMs can summarise complex clinical records, suggest potential diagnoses based on patient symptoms, draft referral letters, and generate tailored educational materials for patients.

Yet, despite their promise, LLMs also pose unique risks that must be carefully managed. Issues such as hallucination (the generation of inaccurate or misleading information), bias, data privacy concerns, and lack of explainability can undermine the reliability and safety of LLM-based tools. The 'Airlock' programme has therefore expanded to include specific testing pathways for LLM-driven applications, providing structured environments where these technologies can be rigorously assessed in collaboration with clinicians and patients.

Emergence of Multimodal AI Systems

Recent AI advancements have also given rise to multimodal systems capable of processing and integrating diverse data types — including text, images, genomics, time-series data, and more. These multimodal models offer the ability to generate holistic insights that transcend the limitations of single-modality AI applications.

For example, an advanced multimodal AI system might integrate a patient’s medical imaging, laboratory results, genomic data, EHR notes, and even wearable sensor data to support complex diagnostic and prognostic decisions. In oncology, such systems are being developed and evaluated to predict treatment responses and personalise therapy regimens based on a comprehensive view of individual patient profiles.

The validation of multimodal AI poses new challenges, as these systems are inherently complex, opaque, and highly dependent on the quality and consistency of heterogeneous data sources. By expanding its scope, the 'Airlock' programme is helping healthcare organisations navigate the complexities of multimodal AI evaluation and adoption, ensuring that these powerful tools are deployed in a manner that upholds patient safety and clinical efficacy.

Generative AI in Medical Content Creation

Generative AI models — including those based on generative adversarial networks (GANs) and diffusion models — are also beginning to make an impact in healthcare. These models can synthesise realistic medical images for training purposes, generate personalised patient education materials, and even simulate rare disease presentations to augment clinical learning.

In medical imaging, synthetic data generated by GANs can help address data scarcity issues and support the development of more robust AI models. Meanwhile, text-based generative AI tools are being used to automate the creation of high-quality patient communications, reducing administrative burdens on clinicians.

However, the use of generative AI in healthcare raises important questions regarding data provenance, authenticity, and potential misuse. The 'Airlock' programme provides a structured framework for testing generative AI applications, evaluating their utility while mitigating risks related to misinformation and unintended consequences.

Breakthroughs in Model Interpretability and Explainability

One of the longstanding concerns about AI in healthcare has been the “black box” nature of many models — their inability to provide human-understandable explanations for their outputs. Recent research has made significant strides in addressing this issue, with the development of more interpretable AI models and post hoc explanation techniques.

Innovations such as attention mechanisms, saliency maps, counterfactual explanations, and concept-based models now allow clinicians to gain greater insight into how AI systems arrive at their conclusions. This transparency is essential for fostering clinician trust and ensuring that AI tools complement rather than replace human expertise.

As AI developers increasingly incorporate interpretability features into their tools, the 'Airlock' programme plays a vital role in testing whether these explanations are genuinely meaningful to clinicians and whether they improve clinical decision-making without introducing new biases or misunderstandings.

Expanding the Scope of AI-Powered Healthcare Solutions

Collectively, these AI breakthroughs are driving an unprecedented expansion in the scope and diversity of AI-powered healthcare innovations. Tools that once focused narrowly on diagnostic imaging are now being joined by a wide array of applications, including:

- Predictive analytics for early disease detection and prevention

- Personalised treatment recommendations based on genomics and patient history

- AI-driven triage and referral management in primary care settings

- Optimisation of hospital resource utilisation and care pathways

- Virtual health assistants and chatbots for patient engagement

- AI-powered remote monitoring for chronic disease management

This broadening landscape requires testing programmes like 'Airlock' to scale accordingly, both in terms of capacity and methodological sophistication. The ability to accommodate a wide variety of AI applications — across different clinical domains, healthcare settings, and patient populations — is now a key priority for the programme.

Conclusion

In summary, the rapid pace of AI innovation in healthcare is fundamentally reshaping the demands placed on testing and validation frameworks. Breakthroughs in diagnostic AI, LLMs, multimodal systems, generative models, and interpretability are expanding both the possibilities and the complexities of AI-powered healthcare solutions.

The 'Airlock' programme’s ongoing expansion is a direct response to these developments. By providing a robust, adaptable, and collaborative platform for testing cutting-edge AI technologies, it is helping ensure that innovations are introduced into clinical practice in a manner that is safe, effective, and aligned with the needs of patients and clinicians alike.

How the Expanded Programme Works — A Detailed Look

As AI technologies rapidly advance in complexity and capability, so too must the frameworks designed to test and validate them. The expansion of the ‘Airlock’ programme is a timely response to the surging demand for structured, transparent, and collaborative evaluation of AI-powered healthcare innovations. The expanded programme offers a flexible, multi-stage process that balances scientific rigour with the practical realities of clinical environments. This section provides a detailed examination of how the expanded ‘Airlock’ programme operates, outlining its structure, selection criteria, testing phases, governance principles, and alignment with evolving regulatory frameworks.

Programme Structure and Governance

The ‘Airlock’ programme is structured as a collaborative partnership between healthcare providers, AI developers, regulators, academic institutions, and patient representatives. The central coordinating body — typically a national health innovation agency or designated NHS digital innovation arm — manages the programme’s operations, oversees participant selection, and ensures compliance with governance protocols.

A steering committee composed of representatives from all key stakeholder groups provides strategic oversight. This multi-stakeholder governance model ensures that the programme remains patient-centred, clinically relevant, and aligned with ethical and legal standards.

The expanded programme is designed to support a wide range of AI technologies across various stages of maturity, from early prototypes to pre-market-ready solutions. It is also adaptable to different healthcare contexts, enabling testing in both primary and secondary care settings, specialist clinics, and even community health environments.

Integration with Healthcare System Infrastructure

One of the distinguishing features of the expanded ‘Airlock’ programme is its deep integration with healthcare system infrastructure. In the UK, for example, the programme works in close alignment with NHS digital platforms, electronic health record (EHR) systems, imaging networks, and clinical audit databases.

This integration allows AI tools to be tested using real-world clinical data while ensuring that patient privacy is rigorously protected. It also facilitates seamless feedback loops between clinicians and developers, enabling iterative improvements based on practical insights from frontline healthcare delivery.

Moreover, the programme leverages cloud-based sandbox environments — secure, virtualised platforms where AI applications can be deployed and tested without interfering with live clinical systems. These sandboxes allow for robust testing under controlled conditions, providing developers with valuable performance data while maintaining clinical safety.

Selection Criteria for AI Tools

Given the growing number of AI innovations vying for testing, the ‘Airlock’ programme employs a transparent and rigorous selection process. Applications are assessed against a comprehensive set of criteria, including:

- Clinical Relevance: Does the AI tool address an unmet clinical need or offer significant improvements over current practice?

- Technological Maturity: Is the tool sufficiently developed and validated for safe testing in clinical environments?

- Data Integrity: Are the training and validation datasets diverse, representative, and ethically sourced?

- Potential Impact: What are the expected benefits for patient outcomes, clinical efficiency, and healthcare system sustainability?

- Regulatory Readiness: Does the developer demonstrate a clear understanding of regulatory requirements and a commitment to compliance?

- Alignment with Strategic Priorities: Does the AI innovation align with national or regional healthcare priorities, such as addressing health inequalities or improving access to care?

Successful applicants are prioritised based on these criteria, with an emphasis on innovations that offer the greatest potential to improve patient care and system performance.
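As an illustration only, the prioritisation step could be expressed as a weighted score over the six criteria listed above, with technological maturity acting as a hard gate. The weights, the 1 to 5 scoring scale, the maturity threshold, and the names `Application`, `WEIGHTS`, and `rank` are all hypothetical; the programme’s actual scoring method is not described here.

```python
from dataclasses import dataclass

# Hypothetical weights for the six criteria listed above (they sum to 1.0).
WEIGHTS = {
    "clinical_relevance": 0.25,
    "technological_maturity": 0.20,
    "data_integrity": 0.20,
    "potential_impact": 0.15,
    "regulatory_readiness": 0.10,
    "strategic_alignment": 0.10,
}

# Hypothetical gate: tools scoring below this on maturity are not yet ready to test.
MIN_MATURITY = 3


@dataclass
class Application:
    name: str
    scores: dict[str, int]  # criterion name -> panel score on a 1-5 scale


def priority_score(app: Application) -> float:
    """Weighted average of the criterion scores, on the same 1-5 scale."""
    return sum(weight * app.scores[criterion] for criterion, weight in WEIGHTS.items())


def rank(apps: list[Application]) -> list[tuple[str, float]]:
    """Exclude applications below the maturity gate, then sort by score, highest first."""
    eligible = [a for a in apps if a.scores["technological_maturity"] >= MIN_MATURITY]
    eligible.sort(key=priority_score, reverse=True)
    return [(a.name, round(priority_score(a), 2)) for a in eligible]
```

Treating maturity as a gate rather than as just another weighted term follows the wording of that criterion: a tool that is not ready for clinical environments should not be tested there, however relevant it is.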

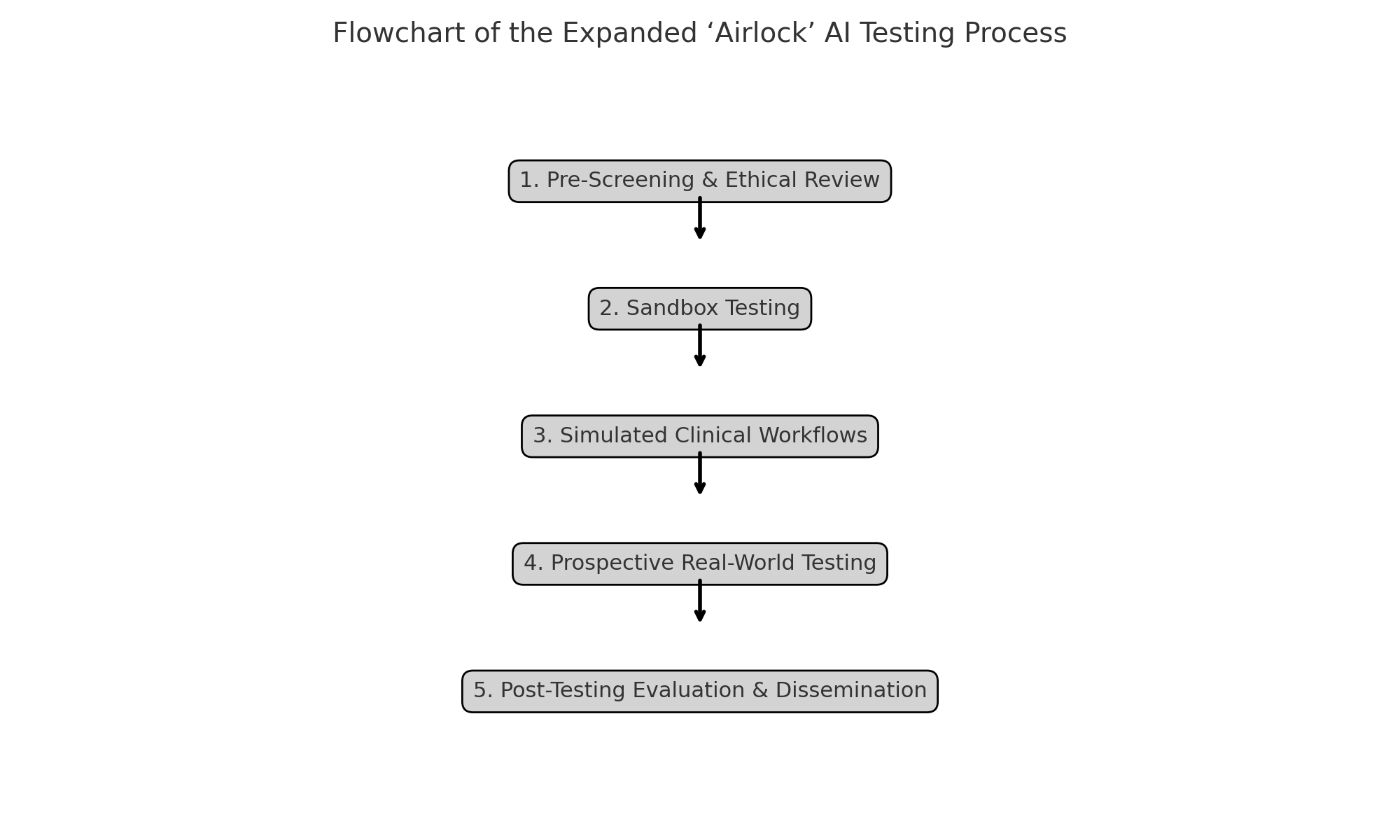

Phases of Testing

The expanded ‘Airlock’ programme adopts a phased approach to testing, designed to progressively evaluate AI tools from technical performance through to clinical impact. The key phases are as follows:

1. Pre-Screening and Ethical Review

All proposed AI applications undergo a pre-screening process to ensure alignment with ethical principles and regulatory standards. This includes:

- Data protection impact assessments

- Review of informed consent mechanisms (where applicable)

- Assessment of bias mitigation strategies

- Ethical approval by relevant research ethics committees

2. Sandbox Testing

In this phase, AI tools are deployed in secure sandbox environments using retrospective or anonymised clinical data. Key activities include:

- Technical validation of model performance (accuracy, sensitivity, specificity)

- Robustness testing across diverse patient subgroups

- Evaluation of interpretability and transparency features

- Security and data governance checks

Sandbox testing provides an essential foundation for identifying potential risks and refining the AI tool prior to real-world testing.
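The technical-validation and subgroup checks in this phase rest on standard formulas: sensitivity is TP / (TP + FN), specificity is TN / (TN + FP), and accuracy is (TP + TN) divided by all cases. The sketch below computes them overall and per subgroup on retrospective data. It assumes binary labels (1 = condition present) and records tagged with a hypothetical subgroup value such as an age band or imaging site; the function names are illustrative.

```python
from collections import defaultdict


def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels where 1 = condition present."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 0 and p == 0:
            tn += 1
        elif t == 1 and p == 0:
            fn += 1
        else:
            raise ValueError(f"labels must be 0 or 1, got {t!r}, {p!r}")
    return tp, fp, tn, fn


def metrics(y_true, y_pred):
    """Accuracy, sensitivity and specificity; None where a denominator is zero."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total if total else None,
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        "specificity": tn / (tn + fp) if (tn + fp) else None,
    }


def metrics_by_subgroup(records):
    """records: iterable of (subgroup, y_true, y_pred) tuples; returns metrics per subgroup."""
    grouped = defaultdict(lambda: ([], []))
    for group, t, p in records:
        grouped[group][0].append(t)
        grouped[group][1].append(p)
    return {group: metrics(ts, ps) for group, (ts, ps) in grouped.items()}
```

A large gap between one subgroup’s sensitivity and the overall figure is a simple signal of the population-dependent performance discussed earlier; deciding what gap is acceptable remains a clinical judgement rather than something the code decides.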

3. Simulated Clinical Workflows

The next phase involves integrating AI tools into simulated clinical workflows. This may take the form of:

- Shadow mode testing (AI outputs generated and recorded alongside clinicians’ decisions but not used to guide patient care)

- Parallel use with traditional decision-support tools

- Controlled usability studies with clinician feedback

These activities assess how AI tools interact with human users, clinical processes, and existing health IT systems.
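Conceptually, shadow mode means logging the AI output next to the clinician’s decision for each case while care follows the clinician alone, then analysing agreement afterwards. The `ShadowLog` class below is a hypothetical, minimal sketch of that idea; a real deployment would run inside the trust’s own integration and audit infrastructure.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ShadowLog:
    """Records AI and clinician decisions side by side; only the clinician's is acted on."""
    entries: list[tuple[str, str, str]] = field(default_factory=list)

    def record(self, case_id: str, clinician_decision: str, ai_output: str) -> str:
        self.entries.append((case_id, clinician_decision, ai_output))
        # The decision returned to the care pathway is always the clinician's.
        return clinician_decision

    def agreement_rate(self) -> Optional[float]:
        """Fraction of logged cases where AI and clinician agreed; None if nothing logged."""
        if not self.entries:
            return None
        agreed = sum(1 for _, clinician, ai in self.entries if clinician == ai)
        return agreed / len(self.entries)

    def disagreements(self) -> list[str]:
        """Case IDs where AI and clinician differed, queued for later clinical review."""
        return [case for case, clinician, ai in self.entries if clinician != ai]
```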

4. Prospective Real-World Testing

For AI tools that demonstrate promising results in earlier phases, prospective testing in live clinical environments may be authorised. This involves:

- Real-time use of the AI tool by clinicians, with patient care outcomes monitored closely

- Comprehensive evaluation of safety, efficacy, usability, and impact on clinical decision-making

- Monitoring for unintended consequences, such as workflow disruptions or cognitive overload

Throughout this phase, continuous safety monitoring and clinician training are prioritised to mitigate risks.
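Continuous safety monitoring can take many forms; one simple version is a rolling check on recent performance once ground truth (for example, a confirmed diagnosis) becomes available for each case. In this hypothetical sketch, `RollingMonitor` raises a flag when accuracy over the most recent `window` confirmed cases drops below `threshold`; the defaults of 50 cases and 0.85 are placeholders, not programme values.

```python
from collections import deque


class RollingMonitor:
    """Flags when accuracy over the last `window` confirmed cases falls below `threshold`."""

    def __init__(self, window: int = 50, threshold: float = 0.85):
        self.window = window
        self.threshold = threshold
        # True where the AI prediction matched the confirmed outcome; oldest cases drop off.
        self.results = deque(maxlen=window)

    def update(self, prediction, ground_truth) -> bool:
        """Add one confirmed case and return True if a safety alert should be raised."""
        self.results.append(prediction == ground_truth)
        if len(self.results) < self.window:
            return False  # too few confirmed cases to judge yet
        accuracy = sum(self.results) / len(self.results)
        return accuracy < self.threshold
```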

5. Post-Testing Evaluation and Dissemination

At the conclusion of the testing process, comprehensive evaluation reports are produced, detailing:

- Clinical performance outcomes

- Impact on patient safety and care quality

- Feedback from clinicians and patients

- Recommendations for further development or deployment

These findings are shared with regulators, healthcare providers, and the wider public to promote transparency and inform best practices.
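The five phases behave like a gated pipeline: a tool moves forward only when the current phase’s exit criteria are met. A minimal sketch follows, in which the `Phase` enum mirrors the phases listed above and the boolean `exit_criteria_met` is a hypothetical stand-in for what would in practice be a documented review decision.

```python
from enum import IntEnum
from typing import Optional


class Phase(IntEnum):
    PRE_SCREENING = 1
    SANDBOX = 2
    SIMULATED_WORKFLOW = 3
    PROSPECTIVE = 4
    POST_EVALUATION = 5


def next_phase(current: Phase, exit_criteria_met: bool) -> Optional[Phase]:
    """Return the phase a tool should be in after review of its current phase."""
    if not exit_criteria_met:
        return current  # stays put for refinement (reviewers may also withdraw it)
    if current is Phase.POST_EVALUATION:
        return None  # testing complete; findings are reported and shared
    return Phase(current + 1)
```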

Data Governance and Patient Privacy

The expanded ‘Airlock’ programme places a strong emphasis on data governance and patient privacy. All testing activities are conducted in compliance with data protection laws (such as the UK GDPR), and robust safeguards are implemented to prevent unauthorised access or misuse of clinical data.

Patients whose data may be used in AI testing are provided with clear information about how their data will be handled, and mechanisms are in place to respect patient preferences regarding data usage. Transparency is a core value of the programme, and ongoing dialogue with patient advocacy groups helps ensure that testing practices remain ethically sound.
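One common way to apply safeguards like these before data reaches a sandbox is to exclude patients who have opted out and replace direct identifiers with a keyed pseudonym. The sketch below uses Python’s standard `hmac` and `hashlib` modules; the record fields (`patient_id`, `opted_out`), the function name, and the assumption that the key is held by the data controller rather than the AI developer are illustrative.

```python
import hashlib
import hmac


def pseudonymise(records, secret_key: bytes):
    """Drop opted-out patients and replace patient_id with an HMAC-SHA256 pseudonym."""
    prepared = []
    for rec in records:
        if rec.get("opted_out"):
            continue  # respect the patient's stated data-use preference
        pseudo_id = hmac.new(
            secret_key, rec["patient_id"].encode("utf-8"), hashlib.sha256
        ).hexdigest()
        # Copy every other field, removing the direct identifier and the opt-out flag.
        clean = {k: v for k, v in rec.items() if k not in ("patient_id", "opted_out")}
        clean["pseudo_id"] = pseudo_id
        prepared.append(clean)
    return prepared
```

The same patient always maps to the same pseudonym under one key, so records can still be linked for analysis. Keyed pseudonymisation reduces risk but does not by itself make data anonymous: under UK GDPR, pseudonymised data is still personal data.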

Alignment with Evolving Regulatory Frameworks

As global regulatory standards for AI in healthcare continue to evolve, the 'Airlock' programme is designed to maintain alignment with emerging best practices. The programme collaborates closely with regulatory bodies such as the UK Medicines and Healthcare products Regulatory Agency (MHRA), the European Medicines Agency (EMA), and other international counterparts.

Testing protocols are regularly updated to reflect new regulatory guidance, such as the European Union’s AI Act and the forthcoming UK AI assurance framework. This dynamic approach ensures that AI developers participating in ‘Airlock’ are well-prepared to meet regulatory requirements and achieve market authorisation.

Conclusion

The expanded ‘Airlock’ programme offers a strong model for testing and validating AI-powered healthcare innovations. By combining technical validation, clinical testing, ethical oversight, and regulatory alignment in a structured, multi-phase process, the programme provides a robust pathway for the safe and effective adoption of AI technologies.

Early Outcomes and Real-World Impact

The expansion of the ‘Airlock’ testing programme has already begun to yield measurable outcomes across the healthcare sector. As artificial intelligence (AI) technologies have matured and diversified, the structured evaluation provided by ‘Airlock’ has become an indispensable part of the innovation pipeline. This section explores the early results of the expanded programme — from the clinical impact of validated AI tools to shifts in healthcare workflows, improvements in regulatory pathways, and the evolving perceptions of AI among clinicians and patients. These outcomes demonstrate how rigorous testing frameworks can help unlock the transformative potential of AI while safeguarding public trust.

Case Studies of Successfully Validated AI Tools

Several notable AI-powered healthcare tools have successfully progressed through the ‘Airlock’ programme, providing compelling evidence of the framework’s value. These case studies offer insights into the kinds of innovations being tested and their real-world benefits:

AI in Medical Imaging

An AI-based diagnostic tool for early lung cancer detection, developed by a leading medtech firm, underwent extensive testing through the ‘Airlock’ programme. Initially deployed in sandbox environments using retrospective imaging data, the tool progressed to prospective clinical testing across several NHS trusts.

Key outcomes included:

- A 15% increase in early-stage cancer detection rates compared to traditional methods

- Improved diagnostic confidence among radiologists

- Reduced time to treatment initiation by an average of two weeks

Following successful validation, the tool received regulatory approval and is now being rolled out in lung cancer screening programmes across multiple regions.

Large Language Models for Clinical Documentation

A healthcare-specific large language model (LLM) designed to streamline clinical documentation was also evaluated through ‘Airlock’. The model assisted clinicians by summarising patient consultations and generating draft clinical notes for review.

Testing revealed:

- A 40% reduction in the time clinicians spent on documentation

- High clinician satisfaction with the quality and accuracy of generated notes

- No adverse impact on patient safety or care quality

The success of this LLM-driven tool has encouraged wider adoption and inspired further development of similar AI applications.

Predictive Analytics for Sepsis Management

Another standout example involves an AI-driven early warning system for sepsis. Using real-time patient data from electronic health records (EHRs), the system predicts the likelihood of sepsis onset and alerts clinical teams.

Outcomes from prospective testing included:

- A 25% reduction in sepsis-related mortality rates

- Decreased length of hospital stays for affected patients

- Enhanced situational awareness among care teams

These early results underscore the potential of predictive analytics to improve clinical outcomes and operational efficiency.
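The alerting step described for the sepsis system, turning a predicted likelihood into a notification for the care team, can be sketched as a threshold plus a per-patient cool-down that limits repeated alerts. The risk scores are assumed to come from an upstream model not shown here, and the class name, the 0.6 threshold, and the four-hour cool-down are hypothetical values chosen for illustration.

```python
from datetime import datetime, timedelta


class SepsisAlerter:
    """Turns per-patient risk scores into alerts, suppressing repeats within a cool-down."""

    def __init__(self, threshold: float = 0.6, cooldown: timedelta = timedelta(hours=4)):
        self.threshold = threshold
        self.cooldown = cooldown
        self.last_alert: dict[str, datetime] = {}  # patient_id -> time of last alert

    def check(self, patient_id: str, risk: float, at: datetime) -> bool:
        """Return True if this risk score should trigger an alert to the care team."""
        if risk < self.threshold:
            return False
        previous = self.last_alert.get(patient_id)
        if previous is not None and at - previous < self.cooldown:
            return False  # alerted recently; avoid adding to alert fatigue
        self.last_alert[patient_id] = at
        return True
```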

Improvements in Clinical Workflows

Beyond individual tool validation, the ‘Airlock’ programme has contributed to broader improvements in clinical workflows. By providing a framework for testing and refining AI applications, the programme helps ensure that new tools integrate smoothly into healthcare environments.

Clinicians participating in ‘Airlock’-supported testing have reported:

- Enhanced efficiency in diagnostic and administrative tasks

- Increased capacity to focus on direct patient care

- Greater trust in AI outputs due to transparency and rigorous testing

In many cases, AI tools validated through ‘Airlock’ have led to measurable reductions in clinician workload and burnout — critical factors in sustaining healthcare system performance.

Impact on Regulatory Pathways

The ‘Airlock’ programme has also influenced the evolution of regulatory pathways for AI in healthcare. By generating high-quality, real-world evidence of AI tool performance, the programme provides regulators with valuable data to inform approval decisions.

Several AI tools validated through ‘Airlock’ have achieved faster regulatory approval timelines due to the robustness of the evidence generated. Moreover, the collaborative nature of the programme — involving ongoing dialogue between developers, clinicians, and regulators — has helped shape emerging regulatory frameworks to better accommodate the unique characteristics of AI innovations.

This dynamic feedback loop between testing and regulation is a critical enabler of safe, timely AI adoption.

Influence on Industry Standards

As a leading example of best practice, the expanded ‘Airlock’ programme is helping to establish new industry standards for the testing and validation of AI-powered healthcare solutions. Key elements of these emerging standards include:

- Transparent reporting of model training data and validation results

- Rigorous testing across diverse patient populations and care settings

- Continuous monitoring of AI performance post-deployment

- Ethical review and patient engagement throughout the testing process
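Two of these elements, testing across diverse patient populations and transparent reporting of validation results, can be made concrete by breaking a performance metric down by subgroup rather than reporting a single overall figure. A minimal sketch, assuming a binary classifier evaluated on labelled cases. Sensitivity is used here only as an example metric, and the subgroup labels (`site_A`, `site_B`) and function names are hypothetical placeholders, not ‘Airlock’ criteria.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Each record is (subgroup label, model flagged the case, case was truly positive).
# Subgroup labels are hypothetical placeholders (e.g. care setting or age band).
Record = Tuple[str, bool, bool]

def sensitivity_by_subgroup(records: Iterable[Record]) -> Dict[str, float]:
    """Sensitivity (true-positive rate) for every subgroup with at least one positive case."""
    positives: Dict[str, int] = defaultdict(int)
    true_positives: Dict[str, int] = defaultdict(int)
    for group, predicted, actual in records:
        if actual:
            positives[group] += 1
            if predicted:
                true_positives[group] += 1
    return {g: true_positives[g] / positives[g] for g in positives}

def largest_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best- and worst-performing subgroups."""
    if not per_group:
        return 0.0
    return max(per_group.values()) - min(per_group.values())

if __name__ == "__main__":
    records = [
        ("site_A", True, True), ("site_A", True, True), ("site_A", False, True),
        ("site_B", True, True), ("site_B", False, True), ("site_B", False, True),
        ("site_B", False, False),  # true negative: does not affect sensitivity
    ]
    per_group = sensitivity_by_subgroup(records)
    print(round(largest_gap(per_group), 3))  # 0.333
```

A large gap between subgroups is the kind of finding that a single aggregate score would hide, which is why per-group reporting supports both fairness review and transparency.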

Developers who participate in ‘Airlock’ gain not only regulatory readiness but also a competitive edge by demonstrating their commitment to responsible AI development.

Building Trust Among Clinicians and Patients

Perhaps one of the most significant impacts of the ‘Airlock’ programme is its role in fostering trust in AI-powered healthcare. For clinicians, participation in structured testing processes demystifies AI tools and builds confidence in their reliability and utility. Many clinicians involved in ‘Airlock’ testing report greater willingness to adopt AI innovations following direct engagement with the testing process.

For patients, transparency about how AI tools are evaluated, together with the involvement of patient representatives in the programme, helps to build public confidence. Surveys of patients whose care settings took part in ‘Airlock’ testing revealed:

- High levels of support for AI use when robust testing frameworks are in place

- Positive perceptions of the role of AI in enhancing care quality

- Strong expectations for continued clinician oversight of AI-supported decisions

These findings underscore the importance of frameworks like ‘Airlock’ in addressing ethical and societal concerns about AI adoption in healthcare.

Catalysing Innovation and Collaboration

By providing a clear pathway for testing and validation, the ‘Airlock’ programme has also catalysed greater innovation and collaboration across the AI healthcare ecosystem. Developers are incentivised to design AI tools that meet rigorous testing criteria, while healthcare providers gain early access to cutting-edge innovations.

Moreover, the collaborative model of ‘Airlock’ — involving joint efforts by clinicians, technologists, regulators, and patients — fosters a culture of shared learning and continuous improvement. This ecosystem approach helps accelerate the responsible development and adoption of AI, positioning participating healthcare systems as leaders in the field.

Summary of Early Outcomes

The early outcomes of the expanded ‘Airlock’ programme provide compelling evidence of its value in enabling the safe and effective adoption of AI-powered healthcare innovations. Through successful validation of diverse AI tools, improvements in clinical workflows, positive impacts on regulatory pathways, and strengthened trust among clinicians and patients, the programme is delivering tangible benefits across the healthcare sector.

Challenges, Broader Implications, and Future Outlook

As the ‘Airlock’ programme continues to expand and mature, it represents a significant step forward in the structured testing and validation of AI-powered healthcare innovations. However, while the programme’s early outcomes are promising, important challenges remain — both in terms of the programme’s operational capacity and the broader healthcare ecosystem in which it operates. This section explores these ongoing challenges, the wider implications of structured AI testing, and the future trajectory of the ‘Airlock’ initiative and similar frameworks globally.

Current Challenges and Limitations

1. Scalability and Capacity Constraints

One of the primary challenges facing the expanded ‘Airlock’ programme is scalability. The pace of AI innovation in healthcare is accelerating, with a growing number of developers eager to validate their tools through structured pathways. This surge in demand places strain on the programme’s capacity — from the availability of clinical sites and sandbox environments to the resources required for ethical review, data management, and regulatory coordination.

To maintain its high standards of rigour and transparency, the programme must balance expansion with quality control. Ensuring that each AI tool undergoes thorough testing without overwhelming the system requires careful planning, additional investment, and the development of scalable operational models.

2. Evolving Complexity of AI Technologies

As AI tools become more complex — particularly with the advent of large multimodal models and generative AI systems — the methodologies for testing and validating these tools must also evolve. Current testing frameworks are well-suited to relatively well-defined applications (such as diagnostic imaging support or clinical note generation), but may struggle to accommodate tools with more dynamic, adaptive, or opaque behaviours.

Developing new evaluation methodologies that can address the unique challenges of complex AI systems — including explainability, generalisability, and continuous learning — is an urgent priority for the ‘Airlock’ programme.

3. Integration with Clinical Workflows

Even when AI tools perform well in controlled testing environments, integrating them seamlessly into everyday clinical workflows can be difficult. Many healthcare systems remain burdened by fragmented IT infrastructure, legacy systems, and administrative complexity.

Ensuring that AI tools validated through ‘Airlock’ can be successfully scaled and integrated into live clinical environments — without disrupting care delivery or adding to clinician workload — remains an ongoing challenge. Greater collaboration between AI developers, health IT providers, and clinical leaders will be required to address this issue.

4. Addressing Ethical and Societal Concerns

While the ‘Airlock’ programme has made significant strides in engaging patients and the public, broader societal concerns about the use of AI in healthcare persist. These include:

- Fears of AI replacing human clinicians

- Concerns about bias and fairness in AI-driven decision-making

- Worries about data privacy and surveillance

- Ethical questions surrounding the use of generative AI and synthetic data

Ongoing public engagement, transparency, and ethical oversight will be essential to ensuring that the adoption of AI in healthcare proceeds in a manner that aligns with societal values and expectations.

Broader Implications for Healthcare Systems

The success of the ‘Airlock’ programme has implications that extend well beyond individual AI tools or clinical use cases. It signals a broader shift in how healthcare systems approach innovation, regulation, and public trust.

1. Setting a Global Benchmark

By demonstrating how rigorous, transparent AI testing can be achieved within a complex healthcare system, ‘Airlock’ provides a valuable model for other countries and regions. Already, similar frameworks are being explored in Europe, North America, and Asia — informed by lessons learned from the UK experience.

This international diffusion of best practices helps raise the global standard for AI adoption in healthcare, reducing the risk of poorly validated tools entering clinical use and fostering greater public confidence in AI technologies.

2. Driving Cultural Change in Healthcare Innovation

The collaborative, multi-stakeholder ethos of the ‘Airlock’ programme represents a shift from the traditional top-down model of healthcare innovation. By involving clinicians, patients, regulators, and developers as equal partners in the testing process, the programme fosters a culture of shared responsibility for AI adoption.

This cultural shift is critical to the long-term success of AI in healthcare. Without clinician buy-in and public trust, even the most advanced AI tools will struggle to achieve meaningful impact.

3. Informing the Evolution of Regulation

As regulators around the world grapple with the complexities of AI oversight, the evidence generated through programmes like ‘Airlock’ is proving invaluable. The insights gained from structured testing inform the development of new regulatory frameworks that are better suited to the dynamic nature of AI technologies.

This interplay between testing and regulation helps ensure that legal and compliance standards evolve in tandem with technological capabilities, supporting safe and timely innovation.

Future Outlook and Strategic Priorities

Looking ahead, several key priorities will shape the future trajectory of the ‘Airlock’ programme and similar initiatives:

1. Expanding Testing Capacity

Investments in digital infrastructure, cloud-based sandboxes, and clinician engagement will be essential to scaling the programme’s capacity. Public-private partnerships and international collaborations may also help expand access to testing pathways for a broader range of developers.

2. Advancing Methodologies for Complex AI

Ongoing research into evaluation methodologies for complex AI systems, including multimodal models, adaptive tools, and generative AI, will be a central focus. Developing robust metrics for transparency, interpretability, and human-AI interaction will be critical.

3. Supporting Post-Market Surveillance

As AI tools move from testing to widespread clinical deployment, continuous monitoring of real-world performance will become increasingly important. Enhancing post-market surveillance capabilities and integrating feedback loops into the ‘Airlock’ ecosystem will help ensure that AI tools remain safe and effective over time.
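One simple form of the continuous monitoring described here is a rolling check of a deployed model's performance against the level established during testing. The sketch below assumes a binary classifier whose confirmed outcomes become available after deployment. The `SensitivityMonitor` class, the choice of sensitivity as the tracked metric, and the baseline, tolerance, window and minimum-case values are all hypothetical illustrations rather than ‘Airlock’ requirements.

```python
from collections import deque
from typing import Deque, Optional

class SensitivityMonitor:
    """Hypothetical rolling-window check on a deployed binary classifier.

    Keeps whether the model flagged each of the most recent `window`
    confirmed-positive cases, and reports degradation when the rolling
    sensitivity falls below `baseline - tolerance`.
    """

    def __init__(self, baseline: float, tolerance: float = 0.05,
                 window: int = 200, min_cases: int = 30) -> None:
        self.baseline = baseline    # e.g. sensitivity measured during pre-deployment testing
        self.tolerance = tolerance  # allowed drop before flagging (assumed value)
        self.min_cases = min_cases  # avoid flagging on too few cases
        # deque with maxlen discards the oldest entry once the window is full.
        self._hits: Deque[bool] = deque(maxlen=window)

    def record(self, predicted_positive: bool, actually_positive: bool) -> None:
        # Sensitivity only depends on cases that turned out to be positive.
        if actually_positive:
            self._hits.append(predicted_positive)

    def sensitivity(self) -> Optional[float]:
        if not self._hits:
            return None
        return sum(self._hits) / len(self._hits)

    def degraded(self) -> bool:
        if len(self._hits) < self.min_cases:
            return False
        return self.sensitivity() < self.baseline - self.tolerance
```

Because the window only holds recent cases, a gradual decline (for example from changes in the patient population or in upstream data) shows up as the older, better-performing cases roll out of the window. A flag from such a monitor would feed back into review rather than act as a verdict on its own.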

4. Fostering International Collaboration

Given the global nature of AI development, greater international collaboration on testing standards, regulatory alignment, and evidence sharing will be needed. The ‘Airlock’ programme is well-positioned to contribute to this global dialogue.

Conclusion

The expansion of the ‘Airlock’ programme marks a critical milestone in the journey toward safe, effective, and trusted AI adoption in healthcare. While challenges remain, the programme’s early outcomes demonstrate the power of structured, transparent testing to unlock the benefits of AI while safeguarding public trust.

As AI technologies continue to evolve, the role of frameworks like ‘Airlock’ will only grow in importance. By fostering collaboration, advancing best practices, and driving cultural change, these initiatives will help ensure that AI serves as a force for good — improving patient care, enhancing clinical decision-making, and strengthening healthcare systems worldwide.
